1. Introduction

The prediction of corporate failure has been a longstanding concern in accounting, finance and risk management. It was initially explored in early empirical work by Fitzpatrick (1932) and gained widespread academic and practical attention through the influential studies of Beaver (1966, 1968) and Altman (1968). These studies shifted the focus from descriptive ratio analysis to replicable predictive models, defined core variables, and established evaluation practices that became benchmarks for subsequent research, underpinned by both strong predictive performance and methodological rigor. In recent decades, interest in this area has intensified, with the majority of related studies published after 2008 (Shi & Li, 2019), particularly following major systemic disruptions such as the 2008 global financial crisis or the COVID-19 pandemic. While business failures were once predominantly associated with small or new firms, large, established corporations have increasingly faced collapse (Jones, 2023), raising public awareness of the broader consequences (Altman & Hotchkiss, 1993; Cole et al., 2021). These failures can destabilize global financial systems and impose substantial economic costs on a broad spectrum of market participants, including investors, creditors, auditors, regulators, suppliers, customers, and employees. The sustained scholarly attention has led to a proliferation of predictive modeling approaches, ranging from classical statistical methods such as discriminant analysis (DA) and logistic regression (LR) to more complex machine learning (ML) techniques. Much of the literature has centered on pushing the frontiers of predictive accuracy for these models.

Despite the breadth of research and models developed, comparatively little emphasis has been placed on the practical adoption and usability of failure prediction models in real-world corporate settings. Recent work highlights a persistent gap between accuracy-driven studies and decision-oriented practice (Veganzones & Severin, 2021; Radovanovic & Haas, 2023). Qualitative insights into how organizations select, integrate, and govern such models remain scarce, yet they hold significant potential to complement and validate quantitative findings. This scarcity likely stems from the sensitivity and confidentiality surrounding failure-related data, which pose substantial barriers to data access and applied research. Current research in corporate failure prediction predominantly emphasizes the development of new quantitative models aimed at marginally enhancing accuracy rates (Bellovary et al., 2007; Sun et al., 2014). This trend is further illustrated by Mallinguh & Zeman (2020) and is consistently reflected in contemporary review studies, which primarily concentrate on methodological considerations. These reviews typically assess variations in model design and modeling techniques in relation to predictive performance (Tay & Shen, 2002; Alaka et al., 2018; Jayasekera, 2018; Qu et al., 2019; Kim et al., 2020; Ciampi et al., 2021; Ratajczak et al., 2022), leaving a notable gap in understanding the practical limitations in operationalizing these tools that are essential for driving adoption within firms. In essence, even the most accurate prediction model holds limited value if it is not embraced and effectively used by organizations and decision-makers.

In response to this gap, the present study conducts a literature review to evaluate the practicality of corporate failure prediction models from an innovation adoption perspective. We apply a technology adoption framework that combines the Technology–Organization–Environment (TOE) framework with the Technology Acceptance Model (TAM) to evaluate two distinct model archetypes: (i) the long-established, widely accepted Altman Z-score model and (ii) modern ML-based models, which have become the primary focus of recent failure prediction research. The TOE framework examines how a firm’s technological capabilities, organizational factors, and environmental conditions influence its readiness to adopt new technologies or analytical tools, whereas TAM focuses on individual users’ acceptance of a technology based on its perceived usefulness and ease of use. By integrating these organizational-level and user-level perspectives into a unified TOE–TAM framework, our analysis offers a comprehensive evaluation of each model type’s practical applicability. This hybrid approach has already proven its value in several domains (Gangwar et al., 2014; Qin et al., 2020; Bryan & Zuva, 2021) and allows us to systematically investigate both the contextual readiness of organizations (e.g., data availability, resource capacity, regulatory environment) and the attitudes of end-users or decision-makers (e.g., trust in the model, interpretability, ease of implementation) that collectively determine the likelihood of a model’s successful adoption. In the specific context of corporate failure prediction, integrating the two frameworks is particularly valuable as it captures the influence of both internal and external factors on adoption decisions. For artificial intelligence (AI)-enabled applications, in particular, individuals’ behavior is likely shaped by a combination of organizational and institutional pressures, such as internal policies and incentives, regulatory demands for transparency, data protection requirements, and prevailing industry norms.

Overall, this literature review provides both an assessment of the current state of corporate failure prediction models and insights into their real-world usability. It offers a balanced critique of traditional and ML-based approaches, identifying key strengths and limitations from the standpoint of technology adoption. In doing so, the study contributes to the literature by bridging the gap between predictive performance and practical implementation considerations. The findings not only lay a foundation for future academic research with practical relevance—by highlighting underexplored dimensions such as organizational readiness and user acceptance—but also furnish practical guidance for managers and policymakers. By understanding the conditions under which each type of failure prediction model is most effective, stakeholders can make more informed decisions in selecting and deploying these tools in practice.

2. Practical Applications of Failure Prediction Models in Corporations

Failure prediction models have numerous practical applications, including portfolio risk assessment, early warning systems, and support for corporate restructuring initiatives. These models are utilized by a wide range of stakeholders, such as lending specialists, equity and bond investors, security analysts, rating agencies, regulators, government authorities, auditors, risk management consultants, accounts receivable managers, finance and purchasing managers, corporate development teams, and executives in distressed firms (Altman & Hotchkiss, 2010).

Table 1 presents selected use cases, some of which are primarily relevant to internal corporate operations, others to external stakeholders such as lenders and investors, and some that hold significance for both groups.

We argue that the feasibility of applying failure prediction models to specific use cases is primarily determined by four key attributes: accuracy rate, forecasting horizon, interpretability, and manageability. While some applications emphasize high predictive power, others require greater transparency and usability. Current research predominantly prioritizes enhancing predictive performance (Bellovary et al., 2007; Sun et al., 2014; Veganzones & Severin, 2021), particularly over short-term horizons, which primarily benefits external stakeholders such as financial institutions, analysts, investors, and lenders. However, internal stakeholders often prioritize models with extended forecasting horizons and higher interpretability to support strategic decision-making.

Within corporate environments, failure prediction models can serve multiple use cases. For example, firms may seek to assess risk exposure within their operational ecosystems. Companies that rely on just-in-time inventory systems, single sourcing, or face high switching costs must carefully evaluate the financial stability of potential partners. A distressed partner could lead to substantial losses or necessitate financial intervention to maintain supply chain continuity. For practical deployment in supply chain management, a predictive model must satisfy three key requirements. First, it should forecast disruption risk far enough in advance to enable timely intervention. Second, it should achieve high accuracy rates while relying on a compact set of non-sensitive variables, minimizing the datapoints partners need to share. Third, it must be transparent and interpretable, clearly revealing the drivers of failure risk so procurement teams can run scenario analyses and simulate risk under different conditions and alternative sourcing strategies. Similarly, accounts receivable departments may require different timeframes and insights into customer solvency to adjust payment terms in response to changing credit risks.

In the context of mergers and acquisitions (M&A), failure prediction models can support negotiations by informing price discovery and evaluating how the risk structure changes in a post-merger scenario. This potentially enables more precise capital planning to achieve desired risk thresholds. Moreover, corporate managers should continuously monitor their firm’s financial health, leveraging predictive models to simulate various market and operational scenarios. As an integrated management tool, failure prediction models could facilitate long-term value creation and enable early intervention when signs of distress emerge.

Altman and La Fleur (1981) demonstrate the managerial utility of such models, recounting how the Z-score model supported GTI Corporation in overcoming a financial crisis by identifying key performance metrics that could be optimized to enhance the firm’s performance. For effective crisis management, firms may require models that offer long-term predictions, highlight relevant operational drivers, and support scenario analysis with minimal complexity.

Despite the practical value of failure prediction models, there is a notable lack of research that systematically addresses the distinct needs of internal corporate stakeholders or examines how failure prediction models should be tailored to support specific managerial use cases. Consequently, our understanding of the practical deployment of failure prediction models within organizations, their alignment with requirements for certain use cases, and the resulting implications remains limited.

3. Evolution of Z-Score and ML-Based Failure Prediction Models

The Z-score model developed by Altman (1968) is among the most widely recognized models in the domain of corporate failure prediction. Altman (1968) introduced the model to assess the default probability of publicly traded manufacturing companies in the United States within a two-year period. The original function combines four accounting ratios and one market-based metric using a linear discriminant analysis approach and is as follows:

Z = 1.2X1 + 1.4X2 + 3.3X3 + 0.6X4 + 1.0X5

where

X1 = Working capital/total assets (measure of liquidity);

X2 = Retained earnings/total assets (indicator of cumulative profitability over time);

X3 = Earnings before interest and taxes/total assets (reflection of true productivity of the firm’s assets);

X4 = Market value of equity/book value of total liabilities (measure of leverage and market confidence);

X5 = Sales/total assets (measure of asset turnover indicating management’s effectiveness in utilizing the firm’s assets to generate sales);

Z = Overall index.

The resulting Z-score classifies companies into three distinct zones, each reflecting a different level of financial stability and associated risk of corporate failure. Corporations with a Z-score greater than 2.99 fall within the “safe zone”, indicating a low likelihood of bankruptcy. These firms generally exhibit strong financial fundamentals, including adequate liquidity and high operational productivity and financial profitability. Companies with a Z-score ranging between 1.81 and 2.99 are assigned to the “grey zone”, which denotes an ambiguous financial position. Firms in this range may face potential financial distress, though not immediately or inevitably. This classification signals the need for closer scrutiny, as the firm’s trajectory may improve or deteriorate depending on internal dynamics or external economic conditions. A Z-score below 1.81 signals that a company is in the “distress zone”. Based on his observations, Altman (1968) concluded that firms with scores below this threshold were all bankrupt, indicating a high probability of imminent financial failure. Firms in this category typically exhibit inadequate liquidity, poor profitability, low efficiency of deployed capital and elevated leverage, factors that collectively increase their vulnerability to financial distress and ultimately corporate insolvency. According to Altman (2018a), the Z-score model has demonstrated sustained high Type I accuracy in predicting bankruptcy, even in subsequent studies conducted nearly 50 years later, and remains a dominant reference in bankruptcy prediction research.
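The scoring and zone assignment described above can be illustrated with a minimal Python sketch. It assumes the commonly cited form of Altman's (1968) function with coefficients 1.2, 1.4, 3.3, 0.6 and 1.0 and all five ratios entered as decimals; the `Financials` container, the function names and the example figures are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass

# Assumption: commonly cited coefficients of the 1968 function,
# applied to ratios X1..X5 expressed as decimals.
COEFFICIENTS = (1.2, 1.4, 3.3, 0.6, 1.0)

# Zone cut-offs as stated in the text.
DISTRESS_CUTOFF = 1.81
SAFE_CUTOFF = 2.99


@dataclass(frozen=True)
class Financials:
    """Hypothetical container for the inputs of the five ratios."""
    working_capital: float
    retained_earnings: float
    ebit: float
    market_value_equity: float
    sales: float
    total_assets: float
    total_liabilities: float


def z_score(f: Financials) -> float:
    """Weighted sum of the five ratios X1..X5."""
    ratios = (
        f.working_capital / f.total_assets,          # X1: liquidity
        f.retained_earnings / f.total_assets,        # X2: cumulative profitability
        f.ebit / f.total_assets,                     # X3: asset productivity
        f.market_value_equity / f.total_liabilities, # X4: leverage / market confidence
        f.sales / f.total_assets,                    # X5: asset turnover
    )
    return sum(c * x for c, x in zip(COEFFICIENTS, ratios))


def zone(z: float) -> str:
    """Map a Z-score to the safe, grey or distress zone."""
    if z > SAFE_CUTOFF:
        return "safe"
    if z < DISTRESS_CUTOFF:
        return "distress"
    return "grey"


if __name__ == "__main__":
    # Purely illustrative figures, not taken from any real firm.
    firm = Financials(
        working_capital=20.0,
        retained_earnings=30.0,
        ebit=15.0,
        market_value_equity=120.0,
        sales=150.0,
        total_assets=100.0,
        total_liabilities=60.0,
    )
    z = z_score(firm)
    print(round(z, 3), zone(z))
```

Because the score is a fixed weighted sum, the contribution of each ratio can be read off directly, which is one reason the model lends itself to simple scenario analysis.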

Recognizing the need for broader industrial applicability, Altman et al. (1977) developed the ZETA model, refining the original Z-score by incorporating additional variables to enhance the predictive power and extend its usability across various industries. Later, Altman (1983) introduced the Z’-score model, which was specifically adapted to assess both private and publicly listed firms, covering manufacturing as well as non-manufacturing sectors.

To accommodate various financial and market conditions, the Z-score model has undergone multiple revisions, with parameters and coefficients recalibrated to improve its applicability. To address the assessment of non-publicly traded firms, Altman and Hotchkiss (1993) modified the Z-score model by replacing the market value of equity with the book value of equity. Subsequently, Altman et al. (1998) developed the Z’’-score model by extending the Z’-score model for emerging markets and non-manufacturing firms, notably removing the sales-to-total-assets ratio to enhance its applicability in diverse economic environments.

The Z-score model provides a continuous measure for evaluating corporate financial health. To facilitate a direct and precise calculation of default probability from the Z-score classification zones, the model was enhanced with the bond rating equivalent method, which maps the Z-score onto traditional credit ratings assigned by Standard & Poor’s (Altman & Hotchkiss, 2010).

Further refining corporate default prediction methodologies, Altman et al. (2010) introduced the Z-Metrics model, marking a significant evolution in credit risk assessment. Designed for application across various industries and geographic regions, the Z-Metrics model offers enhanced flexibility in evaluating financial distress. It integrates traditional financial ratios with market-based indicators, such as equity volatility, credit spreads, and macroeconomic variables, to improve the accuracy of corporate default predictions.

While the original Z-score model, along with its enhancements—the Z’-score and Z’’-score models—utilizes multiple discriminant analysis (MDA) to classify firms into safe, grey, or distress zones, the Z-Metrics model transitions to LR and hazard models, providing a probability-based risk assessment rather than a categorical classification.

Although the subsequent analysis focuses on the Z-score, both LR and hazard models are well-established alternatives for failure prediction. LR, first introduced to bankruptcy prediction by Ohlson (1980), is widely regarded as the dominant statistical approach in this field (Shi & Li, 2019). The logit specification is appropriate for failure prediction as it models a binary outcome without assuming normality or equal covariance among predictors (Jones, 2017, 2023; Sun et al., 2014; Jabeur, 2017). However, LR requires an absence of multicollinearity among independent variables (Sun et al., 2014). Empirical evidence suggests that LR typically outperforms MDA (Wu et al., 2010; Barboza et al., 2017; Zizi et al., 2021), though some studies indicate similar predictive power (Huo et al., 2024). LR even performs comparably to simpler single-learner ML models (Barboza et al., 2017; Zizi et al., 2021), with Altman et al. (2020) confirming its strong performance relative to ML benchmarks. Hazard models have gained prominence in failure prediction for their ability to model time-to-event outcomes using panel datasets and survival functions (Jones, 2023), mitigating the selection bias common in single-period approaches like LR and MDA (Shumway, 2001). Shumway (2001), who introduced the discrete-time hazard model to bankruptcy prediction, showed it outperforms static models such as MDA and LR. When combining accounting- and market-based variables, hazard models are particularly effective (Hillegeist et al., 2004; Charalambakis, 2015) and can exceed the predictive accuracy of the Z-score (Bauer & Agarwal, 2014). However, as the number of predictors increases, hazard models may overfit, undermining out-of-sample reliability and predictive power (Alam et al., 2021). For broader overviews of LR and hazard models in failure prediction, see Jones (2023), Barboza et al. (2017), Alam et al. (2021), Jones et al. (2017), and Wu et al. (2010).
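As a hedged illustration of the difference between the two specifications discussed above, a single-period logit model and a discrete-time hazard model with a logit link can be written as follows. The notation is introduced here for illustration only and is not taken from the cited studies: $Y_i = 1$ denotes failure of firm $i$, $\mathbf{x}_i$ is a vector of predictors, $\boldsymbol{\beta}$ are coefficients, and $\alpha_t$ is an assumed period-specific baseline term.

```latex
% Single-period logit: one observation per firm
P(Y_i = 1 \mid \mathbf{x}_i)
  = \frac{1}{1 + \exp\!\left(-(\beta_0 + \boldsymbol{\beta}^{\top}\mathbf{x}_i)\right)}

% Discrete-time hazard with a logit link: one observation per firm and period,
% conditional on the firm having survived up to period t-1
h_i(t) = P(Y_{i,t} = 1 \mid Y_{i,t-1} = 0,\ \mathbf{x}_{i,t})
  = \frac{1}{1 + \exp\!\left(-(\alpha_t + \boldsymbol{\beta}^{\top}\mathbf{x}_{i,t})\right)}
```

Under this parameterization, the hazard model uses every firm-period in the panel and time-varying predictors $\mathbf{x}_{i,t}$, whereas the single-period logit uses one snapshot per firm.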

The application of ML models in corporate failure prediction has evolved significantly over time, progressing from the early neural networks (Odom & Sharda, 1990) to more advanced techniques such as gradient boosting, AdaBoost, random forests, and deep learning (Jones, 2023). Over the past decade, academic research has increasingly focused on transferring various ML architectures to the context of corporate failure prediction. These models range from standalone ML techniques, such as support vector machine (SVM), neural network (NN), decision tree (DT), case-based reasoning (CBR), classification and regression tree (CART), k-nearest neighbor (KNN), multiple criteria decision aid, rough sets, self-organizing map (SOM), naive Bayes (NB), and Kohonen map and trajectories, to ensemble methods that combine multiple ML models, including gradient boosting, bagging, AdaBoost, majority voting, and random forests. Among them, NN, DT, CBR, and SVM are the most widely applied methodologies (Veganzones & Severin, 2021). More recently, deep learning models, such as deep neural networks, convolutional neural networks (CNN), recurrent neural networks, and long short-term memory networks, have also been applied to bankruptcy prediction tasks.

The contemporary models have demonstrated superior predictive performance compared to traditional classifiers like linear discriminant analysis (LDA), LR, and standard ML models such as CART (Jones, 2023). While ML models like gradient boosting machines, along with ensemble techniques such as random forests and AdaBoost, have gained significant traction in the literature over the past decade, deep learning techniques have gained prominence due to their ability to handle complex environments and their self-correction capabilities, which offer advantages over conventional ML models (Alam et al., 2021). Several studies have explored deep learning-based failure prediction models. For instance, Hosaka (2019) developed a failure prediction model based on CNN. Alam et al. (2021) introduced a deep learning model utilizing the Grassmannian network, originally proposed by Huang et al. (2018), to enhance predictive accuracy.

Despite the growing adoption of ML techniques in corporate failure prediction, the application of natural language processing (NLP) and text mining remains relatively underexplored, although both offer potential benefits (Shirata et al., 2011; Gupta et al., 2020). Jones (2023) highlights that NLP can be effectively leveraged to extract predictive signals embedded within unstructured text data, including annual reports, emails, social media posts, and multimedia content such as video and audio files, presenting a valuable yet underutilized avenue for corporate failure prediction research.

In the subsequent analysis, we focus on the original Z-score model and its slightly modified variations, ensuring that the foundational principles of the original model are preserved. The Z-score model is compared to a range of ML approaches applied in corporate bankruptcy prediction which, despite their methodological diversity, share several core characteristics. Our primary focus is on the most prevalent ML models identified in the literature, specifically NN, SVM, DT, genetic algorithms (GA), and rough sets, highlighted by Shi and Li (2019) as the most widely applied ML methods in this field. We also discuss a set of more advanced ML techniques that have recently gained traction. These include gradient boosting algorithms, AdaBoost, random forests, and deep learning architectures (Shi & Li, 2019; Jones, 2023).

While these ML models differ in terms of interpretability, computational complexity, data requirements, and model transparency, they are uniformly data-driven, relying on algorithmic learning from historical patterns rather than predefined statistical assumptions. Moreover, they are generally well-suited to capturing non-linear relationships, handling high-dimensional input data, and modeling complex interaction effects. As such, ML-based models represent a significant departure from traditional statistical approaches such as the Z-score model.

4. Failure Prediction Models in the Light of the TOE–TAM Framework

In this literature review, we analyze the current state of corporate failure prediction research through the lens of technology adoption theory. Over the past five decades, scholars have proposed a wide range of statistical and ML-based models, yet their practical appeal varies widely. To move beyond a narrow comparison of predictive accuracy by model setup and apply a holistic approach instead, we evaluate these models with an integrated technology adoption framework that combines the TOE model (Tornatzky et al., 1990) and the TAM by Davis (1989). The TOE model explains adoption at the firm level by linking technological attributes, organizational readiness and environmental pressure, whereas TAM captures the individual user’s perspective by relating perceived usefulness and perceived ease of use to behavioral intentions.

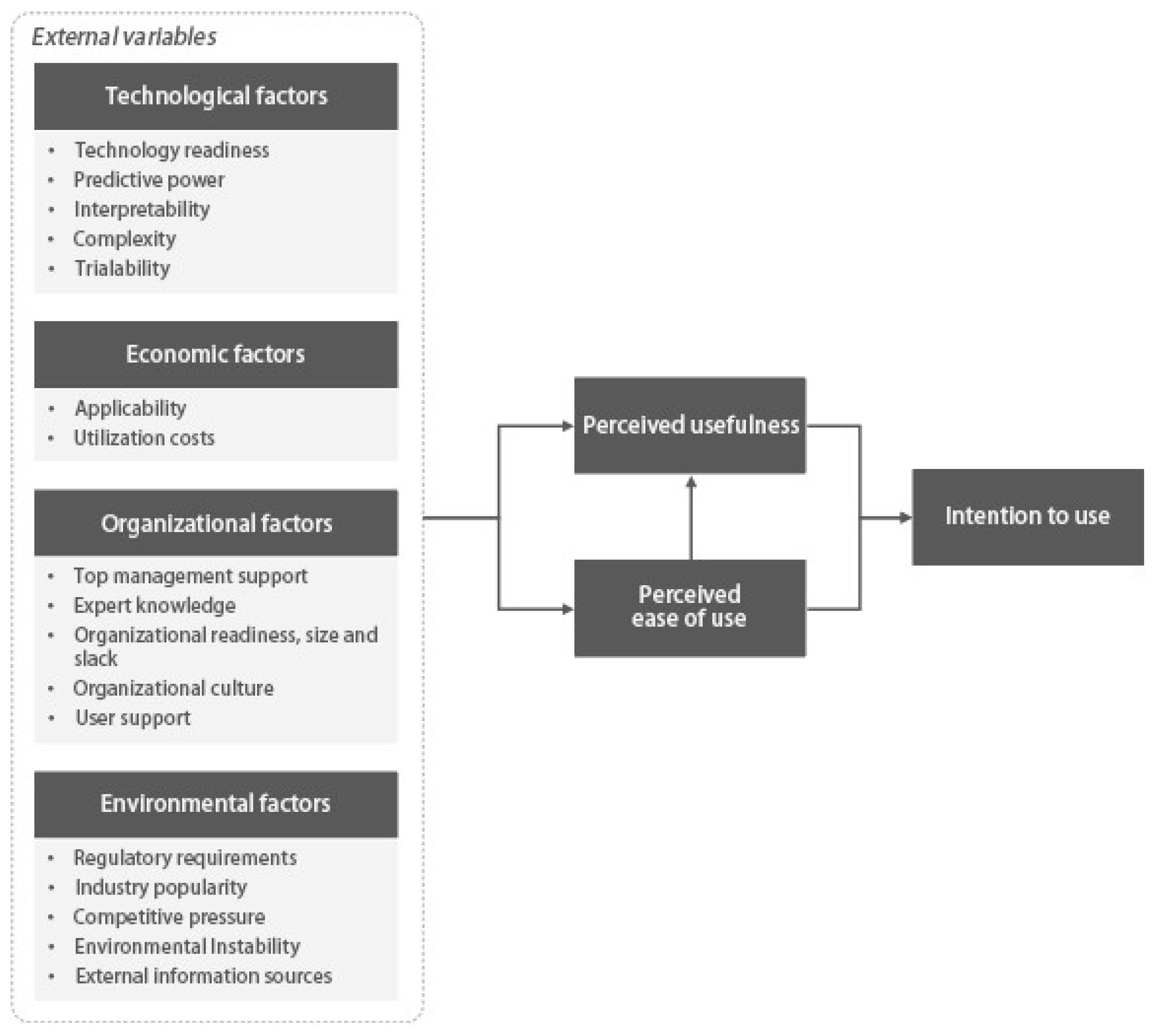

Integrating these perspectives yields the hybrid TOE–TAM framework, which rests on an established body of technology adoption research and whose dual focus is well-suited for the structured evaluation of failure prediction models. Figure 1 illustrates the hybrid TOE–TAM framework used to systematically evaluate the Altman Z-score model and ML-based corporate failure prediction models, with a focus on their practical applicability and limitations within corporate contexts. It builds on foundational work in the field of technology adoption (Davis, 1989; Rogers, 1995; Jeyaraj et al., 2006; Ramdani et al., 2009; Wang et al., 2010). The framework integrates recent insights from research employing a combined TOE–TAM approach (Gangwar et al., 2014; Qin et al., 2020; Bryan & Zuva, 2021), which has been validated in both developed and developing countries (Bryan & Zuva, 2021). Drawing on findings across a range of regions and industries, it offers a comprehensive structure that includes variables consistently identified across studies. While acknowledging that contextual factors, such as technology type, national setting, and company size, affect the relative importance of these variables (Salwani et al., 2009; Gangwar et al., 2014), we adopt the position of Gangwar et al. (2014) that both significant and insignificant variables should be retained when constructing an integrated TOE–TAM model. This inclusive approach underpins the development of a unified, generalizable framework capable of accommodating diverse conditions, thereby providing robust guidance for future research.

Whereas prior technology adoption studies have examined enterprise resource planning (ERP), electronic data interchange (EDI), e-commerce and radio-frequency identification (RFID) (Gangwar et al., 2014), corporate failure prediction models have not yet been analyzed through an adoption lens. Addressing this gap, our review maps each model’s technical merits (e.g., data demands, interpretability and scalability) onto organizational capabilities and constraints, considers regulatory or competitive forces that may accelerate or hinder uptake, and incorporates user-level factors such as trust, transparency and usability.

Following Gangwar et al. (2014), we integrate the TOE framework and TAM to capitalize on their respective strengths while addressing their inherent limitations. Although TAM has proven to be a valuable theoretical model, Legris et al. (2003) highlight notable shortcomings. Specifically, TAM explains about 40% of a system’s use, and its findings are often inconsistent and ambiguous, indicating that significant factors may be missing. Legris et al. (2003) therefore advocate for embedding TAM within a broader framework that incorporates human and social change processes, influencing individual adoption behavior. Conversely, the TOE framework has been criticized for its conceptual generality and limited operational guidance (Gangwar et al., 2014). The TOE framework categorizes influencing factors into three mutually interacting contexts: technological, organizational, and environmental. These dimensions are not isolated but function as interdependent forces shaping adoption decisions (Depietro et al., 1990). The organizational dimension is inward-looking, explaining the dynamics and characteristics of an organization, including governance structure, culture, organizational size, knowledge and slack. The environmental dimension reflects external pressures that are typically beyond the organization’s control, such as government regulations, competitive dynamics, and supplier influence. The technological context encompasses characteristics of the technology itself, including market maturity, functionality, complexity, and compatibility with the existing IT infrastructure. In contrast, Davis (1989) proposed that IT adoption is driven by individuals’ behavioral intentions, which are primarily influenced by perceived usefulness (PU) and perceived ease of use (PEOU). PU refers to the degree to which an individual believes that a technology enhances job performance, whereas PEOU denotes the belief that using the technology will require minimal effort (Davis, 1989). These two constructs are shaped by external variables, and PEOU also exerts a direct influence on PU. Together, they account for variances in users’ behavioral intentions and are strong predictors of IT acceptance and use at the individual level (Gangwar et al., 2014).

The remainder of Section 4 applies the integrated TOE–TAM framework. We first analyze technological factors, then examine economic, organizational and environmental dimensions, and conclude with the user-level drivers of acceptance.

4.1. Technological Context

Within the technological context, characteristics such as relative advantage, complexity, compatibility, trialability, observability, trustworthiness, and technology readiness have been extensively researched in the field of information technology adoption (Jeyaraj et al., 2006; Gangwar et al., 2014). However, the significance of these attributes varies across studies and is influenced by the specific technology and organizational structure under examination. For instance, Ramdani et al. (2009) found that compatibility, complexity, and observability were insignificant factors in the adoption of enterprise systems (e.g., ERP, CRM) among small and medium enterprises (SMEs). In contrast, Wang et al. (2010) identified complexity as a significant barrier to RFID adoption, highlighting the context-dependent nature of these attributes.

In this context, we critically assess the model archetypes in terms of technology readiness, predictive power and interpretability, drawing on the diffusion of innovation (DOI) theory by Rogers (1995). The DOI explains how technologies spread within organizations through five technological attributes: relative advantage, compatibility, complexity, trialability, and observability. It aligns with the TOE framework (Salwani et al., 2009; Wang et al., 2010) and remains widely accepted for analyzing technology adoption at the organizational level. In line with Qin et al. (2020), the attribute of relative advantage is examined within the economic dimension. We exclude observability, consistent with prior studies, due to its limited significance in IT adoption (Wang et al., 2010; Gangwar et al., 2014). We also exclude compatibility, as both model archetypes exhibit similar characteristics with respect to integrating with the organization’s existing IT landscape.

4.1.1. Technology Readiness

According to Qin et al. (2020), the maturity and standardization level of a technology significantly influence companies’ perceptions of new technological innovations. The likelihood of organizations adopting a technological innovation increases when the technology is well-developed, broadly tested and the market ecosystem is mature.

The technology readiness level (TRL) framework, originally introduced by NASA in 1974 and later refined by Mankins (1995), is a structured methodology for assessing the development stage of a technology, progressing from the initial concept to full deployment and operational use. Three criteria guide the assignment of TRL scores: (i) the maturity and standardization of the underlying methodology, (ii) the breadth and consistency of empirical validation, and (iii) the presence of a supportive market and regulatory ecosystem. In this section, we focus on the standardization and empirical validation of the two model archetypes. Observations that relate specifically to predictive performance and model interpretability are treated in Section 4.1.2 and Section 4.1.3, while organizational and regulatory implications are analyzed in Section 4.3 and Section 4.4.

The coefficients of the Z-score model have remained largely unchanged for more than five decades, and national recalibrations have reinforced the model’s status as an industry standard (Altman, 2018a). Radovanovic and Haas (2023) reaffirm the enduring predictive strength of the five original Altman variables, suggesting that the inclusion of additional growth and market variables offers only marginal improvements in predictive accuracy. Meta-reviews covering over thirty countries show average one-year accuracy rates of approximately 75%, which rise above 90% when the coefficients are tuned to local datasets (Altman et al., 2017). The Z-score model’s adaptability has enabled its effective application across various financial domains beyond its original purpose (Altman, 2018a). Over time, it has been refined and adjusted to align with industry standards and broaden its applicability (Altman et al., 1977, 1998; Altman & Hotchkiss, 1993; Altman & Hotchkiss, 2010; Almamy et al., 2016; Altman, 2018a). The model has been extensively tested across diverse industries and firm sizes, in both developed and emerging markets, and under varying economic conditions, ranging from financial crises to periods of economic prosperity (Hillegeist et al., 2004; Agarwal & Taffler, 2008; Gerantonis et al., 2009; Altman et al., 2013; Tinoco & Wilson, 2013; Almamy et al., 2016; Gavurova et al., 2017; Ashraf et al., 2019; Panigrahi, 2019). However, its predictive performance declines over longer time horizons compared to more advanced models (see Section 4.1.2). Additionally, the Z-score model has limitations in addressing modern financial complexities and shifts in economic policies. Moreover, the model lacks empirical evidence supporting its effectiveness in predicting the failure of start-ups and technology-driven companies, which often prioritize growth over profitability or do not capitalize self-developed intangible assets, making traditional financial ratios less reflective of their financial health.

By contrast, the absence of a prevailing ML-based reference model limits the synthesis of results across empirical tests and the understanding of the broader applicability of ML techniques. Unlike the Z-score model, most ML techniques inherently depend on extensive datasets and derive both the selection and weighting of the most influential predictors from the underlying training data. Because training data, dataset composition, and predictor selection and weighting are highly heterogeneous, synthesizing the results of ML-based models across studies remains challenging. Although these models have received considerable attention in research, no consensus has been reached regarding which ML technique is best suited for certain failure prediction use cases, as results vary significantly across different techniques and studies.

While traditional statistical models largely concentrate on LR and DA, ML approaches are more dispersed across a wide spectrum of algorithms.

Shi and Li (

2019) found that NNs are the second most applied technique, well behind LR, which dominates with over 38% of the reviewed sample. Other review studies likewise highlight the predominance of NN over other ML techniques, reporting on the basis of systematic literature reviews that NN is the most prevalent approach, followed by SVM and DT (

Alaka et al., 2018;

Dasilas & Rigani, 2024).

Notably, while NN appears more frequently in empirical studies than the Altman Z-score model (

Shi & Li, 2019), synthesizing NN-based findings remains difficult due to high variability in model architectures and configurations (

Du Jardin, 2009;

Hosaka, 2019;

Alam et al., 2021), in contrast to the more standardized nature of the Z-score model, which typically follows a fixed structure. Advanced ML techniques are emerging but remain far less applied than traditional statistical models and more established ML methods such as NN and SVM (

Shi & Li, 2019).

In summary, the Altman Z-score model continues to be one of the most frequently referenced and applied models, either independently or as a benchmark across various applications (

Altman, 2018a). Although research on new machine learning and deep learning techniques has expanded significantly over time due to the predictive advantages these methods offer (

Jones, 2023), the Z-score family’s simplicity and high degree of standardization make it easy to synthesize results of the various empirical tests and understand the model’s performance across different fields of application. In practice, only 14% of firms feel fully prepared for enterprise AI and over 80% of projects fail, despite 58% already piloting models (

Ryseff et al., 2024), highlighting the gap between experimentation and enterprise-wide adoption.

4.1.2. Predictive Power

Trust in technology is a prerequisite for the adoption of failure prediction systems.

Huang et al. (

2008) show that trust rises when users perceive a tool to be both accurate and comprehensible. In corporate failure research these two criteria translate into predictive power and interpretability, and they are often at odds with each other because highly accurate algorithms tend to be opaque whereas transparent models may sacrifice accuracy (

Kim et al., 2020;

Veganzones & Severin, 2021).

Ensuring high and consistent predictive power remains the primary objective in the failure prediction research field. The bulk of empirical work has concentrated on refining modeling techniques and benchmarking them against one another, with ML algorithms usually outperforming classical statistical approaches, even if the margin of improvement is sometimes modest (

Li & Sun, 2011,

2012;

Du Jardin & Severin, 2012;

Ciampi & Gordini, 2013;

Korol, 2013;

Du Jardin, 2016;

Mselmi et al., 2017;

Zhao et al., 2017).

Alaka et al. (

2018), in a systematic review of 49 studies published between 2010 and 2015, reported that artificial neural networks (ANNs) achieved the highest accuracy, while MDA was the least effective.

Jones (

2023) corroborates this result, stressing that the superiority of gradient boosting machines, AdaBoost, random forests and deep learning widens as the prediction task becomes more complex. Nevertheless, LR can still match or even surpass sophisticated models in small-sample or SME settings (

Altman et al., 2020), indicating that model selection must consider data constraints as well as conceptual clarity.

Studies that altered the original Z-score variables by either re-weighting them or replacing them with alternative predictors invariably found ML techniques to be superior (

Li & Sun, 2011,

2013;

Rafiei et al., 2011;

S. Lee & Choi, 2013).

Ciampi and Gordini (

2013) demonstrated that, for Italian SMEs, ANNs outperformed MDA, partly because financial statement data are less reliable for smaller firms.

Li and Sun (

2012) confirmed the usefulness of Altman’s variables in China’s tourism sector but also found that ML models improved two-year predictions marginally and three-year forecasts by roughly 10%. Ensemble models demonstrated only marginal improvements over simpler, single-learner ML methods across both prediction horizons.

Direct head-to-head comparisons reinforce this picture.

Barboza et al. (

2017) document gains of up to 36% in accuracy when boosting algorithms are applied to the same variables that feed Altman’s model, and

Heo and Yang (

2014) show that AdaBoost performs especially well for large Korean construction firms, where the Z-score deteriorates.

Hosaka (

2019) arrives at analogous results for Japan: the CNN he developed raises the AUC from 71.5% (Z’’-score) to above 90%. From a risk management standpoint, the reduction in Type I errors—misclassifying failing firms as healthy—is often more consequential than average accuracy and gives advanced ML approaches a further edge. The prevailing evidence indicates that ensemble methods yield only modest accuracy improvements of a few percentage points over single-learner ML models (

Li & Sun, 2012;

Heo & Yang, 2014;

Jones et al., 2017;

Hosaka, 2019), with occasional exceptions, such as

Barboza et al. (

2017), who reported more substantial gains, with boosting, bagging, and random forest models outperforming simpler SVM and NN models by approximately 10% to 15%.
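
The distinction between average accuracy and Type I error drawn above can be illustrated with a minimal sketch. The function and class names below are hypothetical and the labels are invented for illustration; they do not reproduce any of the cited studies. Following the definition used in this section, a Type I error is a failing firm classified as healthy.

```python
from dataclasses import dataclass


@dataclass
class ErrorRates:
    accuracy: float
    type_i: float   # share of failing firms classified as healthy
    type_ii: float  # share of healthy firms classified as failing


def error_rates(actual_failed, predicted_failed):
    """Compute accuracy and Type I/II error rates from boolean labels."""
    pairs = list(zip(actual_failed, predicted_failed))
    if not pairs:
        raise ValueError("at least one observation is required")
    missed = sum(1 for a, p in pairs if a and not p)
    false_alarms = sum(1 for a, p in pairs if not a and p)
    n_failed = sum(1 for a, _ in pairs if a)
    n_healthy = len(pairs) - n_failed
    correct = len(pairs) - missed - false_alarms
    return ErrorRates(
        accuracy=correct / len(pairs),
        type_i=missed / n_failed if n_failed else 0.0,
        type_ii=false_alarms / n_healthy if n_healthy else 0.0,
    )


# Illustrative sample: 2 failing and 8 healthy firms, and a naive model
# that labels every firm as healthy.
actual = [True, True] + [False] * 8
naive = [False] * 10
rates = error_rates(actual, naive)
```

In this invented sample the naive model is correct for eight of ten firms yet misses every failing firm, which is why accuracy alone can overstate a model's usefulness when failures are rare.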

Accuracy inevitably declines when the forecast horizon extends beyond one year (

Altman et al., 2020). Reported accuracy rates for one- to three-year horizons vary widely but tend to remain higher for ML-based models than for statistical methods; beyond three years the literature becomes inconsistent, with accuracy rates ranging from 18% to 91% (

Lin et al., 2011,

2014;

Charalambakis, 2015;

Geng et al., 2015;

Manzaneque et al., 2015;

Tian et al., 2015;

Jabeur, 2017;

Jones, 2017). These discrepancies underline that long-term prediction is constrained not so much by algorithmic sophistication as by data relevance and the dynamic nature of corporate behavior.

Three research avenues appear particularly promising for reaching more consistent long-term predictions. First, industry-specific operational and market indicators should complement financial ratios because the latter lose explanatory power as the horizon lengthens (

Lin et al., 2011). Leading indicators, such as customer satisfaction scores, repeat-purchase rates, cross-sell activity, product return ratios or capacity-utilization metrics, can reveal strategic decline well before it surfaces in accounting numbers, and several studies demonstrate that inserting sector-tailored variables—for instance, energy-efficiency metrics in utilities (

Doumpos et al., 2017) or market concentration in hospitality (

C. M. Chen & Yeh, 2012)—materially lifts model discrimination. Product life cycle staging, refined through big data and AI techniques, can flag whether a firm’s key offerings are shifting from growth to maturity or decline—an early warning signal that financial ratios often miss (

Chong et al., 2016;

Calder et al., 2016;

Iveson et al., 2022), while ESG performance may capture emerging regulatory and reputational pressures that conventional ratios overlook (

Alshehhi et al., 2018;

Cabaleiro-Cervino & Mendi, 2024).

Second, turnaround measures, such as cost efficiency, asset retrenchment, focus on core business, leadership change, cultural renewal, and long-term capability-building should be embedded in modeling frameworks, given their potential to alter trajectories (

Slatter, 2011;

Schoenberg et al., 2013). Substantial evidence shows that such measures materially influence turnaround outcomes, yet most prediction models still treat firms as static. Among these measures, only leadership change has been studied, and only to a limited extent, in the context of failure prediction:

Wilson and Altanlar (

2014) see only a slight uptick in failure risk after CEO turnover, while

Liang et al. (

2016) detect no predictive gain. This contrasts with turnaround theory’s general endorsement of leadership renewal (

Schweizer & Nienhaus, 2017;

Bouncken et al., 2022), which is highly context-dependent (

G. Chen & Hambrick, 2012;

O’Kane & Cunningham, 2012). Integrating event flags for restructuring announcements or C-suite turnover—and quantifying change management capabilities through proxies like change management capacity, internal communication, and employee sentiment indices (

Nyagiloh & Kilika, 2020)—would allow models to refine risk assessments in near real time.

Third, unstructured information, such as customer reviews, analyst calls, maintenance logs or supply chain sensor data, remains largely untapped even though it comprises more than 80% of enterprise data (

Castellanos et al., 2017;

Harbert, 2021) and can provide firms with a competitive advantage (

Ravichandran et al., 2024). These sources can reveal patterns related to customer sentiment, innovation cycles, brand perception, and operational stress points, factors that structured datasets often miss. AI-driven techniques, such as sentiment analysis, NLP, and pattern recognition, allow researchers to extract meaningful insights from large volumes of qualitative data (

Adnan & Akbar, 2019;

Cetera et al., 2022;

Mahadevkar et al., 2024). However, processing unstructured data requires significant effort in data collection, cleansing, and model training.

De Haan et al. (

2024) propose a structured, three-step approach to enhance data usability. Research collaborations with firms could provide access to proprietary data, while synthetic data generation, as suggested by

Sideras et al. (

2024), may help mitigate data scarcity. As ML architectures mature and AI infrastructure becomes more accessible, the integration of unstructured data into failure models will likely become more feasible.

In summary, ML-based models exhibit superior predictive performance compared to the Z-score, but their advantage erodes for shorter prediction horizons and when the cost of model opacity increases. Future work that combines their algorithmic strength with richer, forward-looking data and transparent explanations has the greatest potential to make failure prediction a genuinely actionable management tool.

4.1.3. Interpretability

In terms of interpretability, Z-score models offer a significant advantage over ML-based models. As a simple linear equation, the Z-score model assumes that the relationships between financial ratios and the probability of bankruptcy are additive and proportional. This structure enables users to determine how changes in individual financial ratios affect the overall score, facilitating transparency and ease of interpretation. However,

Qiu et al. (

2020) critique the Z-score model’s assumption of linearity, arguing that failing firms do not always cluster neatly within the model’s designated “distress” zone. This misclassification suggests that financial ratios interact in a more complex, non-linear fashion, limiting the model’s effectiveness in capturing intricate, less obvious bankruptcy patterns. Although MDA is frequently regarded as an interpretable method due to its linear combination of predictors,

Alaka et al. (

2018) highlighted several studies questioning the validity of this assumption. The coefficients in the discriminant function do not reliably reflect the relative importance of the input variables, due to the influence of multicollinearity, variable scaling, and the underlying assumptions of homoscedasticity and normal distribution. As a result, despite its apparent transparency, MDA can be challenging to interpret in practice, especially when used for decision-making or explanatory purposes. The assumption of independent and proportional relationships between variables may oversimplify the underlying financial dynamics and, in some cases, produce coefficients with counterintuitive signs (

Balcaen & Ooghe, 2006).
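
The additive structure described above can be made concrete with a short sketch. It assumes the commonly cited form of the original 1968 function for publicly traded manufacturing firms (weights 1.2, 1.4, 3.3, 0.6 and 1.0, with cut-offs at 1.81 and 2.99), which is not restated in this section. The ratio names and the sample firm are hypothetical.

```python
# Commonly cited weights of the original Altman (1968) Z-score; the
# dictionary keys are illustrative names chosen for this sketch.
ALTMAN_1968_WEIGHTS = {
    "working_capital_to_assets": 1.2,
    "retained_earnings_to_assets": 1.4,
    "ebit_to_assets": 3.3,
    "market_equity_to_liabilities": 0.6,
    "sales_to_assets": 1.0,
}


def z_score_contributions(ratios):
    """Return each ratio's additive contribution to the score."""
    return {name: w * ratios[name] for name, w in ALTMAN_1968_WEIGHTS.items()}


def z_score(ratios):
    """Sum the weighted ratios into a single score."""
    return sum(z_score_contributions(ratios).values())


def zone(z):
    """Classify a score using the commonly cited 1.81 and 2.99 cut-offs."""
    if z < 1.81:
        return "distress"
    if z > 2.99:
        return "safe"
    return "grey"


# Hypothetical firm, with ratios expressed as decimals.
firm = {
    "working_capital_to_assets": 0.10,
    "retained_earnings_to_assets": 0.20,
    "ebit_to_assets": 0.10,
    "market_equity_to_liabilities": 1.00,
    "sales_to_assets": 1.50,
}
score = z_score(firm)
parts = z_score_contributions(firm)
```

Because each contribution is simply a weight times a ratio, a user can read off how much a change in any single ratio moves the score. As the critiques cited above note, however, the size of a coefficient should not be read as a reliable measure of the variable's relative importance.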

Conversely, ML-based models, particularly deep learning and ensemble methods, demonstrate superior predictive accuracy and the ability to model complex, non-linear relationships. However, this comes at the cost of interpretability. While certain rule-based classification techniques, such as rough sets, CBR, GA, and DT, produce explanatory outputs that are relatively easy to interpret,

Alaka et al. (

2018) observed a general trade-off between accuracy and transparency in ML models: The more accurate the model, the less interpretable its results tend to be. This observation aligns with findings by

Jones (

2023) who highlights that the deep learning model developed by

Alam et al. (

2021), specifically designed for panel-structured datasets, outperforms all other models in predictive power but provides minimal transparency regarding the influence of individual predictor variables. This limitation arises because deep learning methodologies do not readily allow for the extraction of variable importance scores, making it difficult to interpret the role and behavior of financial indicators in the model’s decision-making process.

In conclusion, while the Altman Z-score model is less accurate than ML-based models in predictive performance, it remains a viable option for use cases where simplicity, transparency and interpretability are prioritized over prediction accuracy. In scenarios where transparency in decision-making is crucial, the Z-score model provides a practical alternative due to its straightforward structure and ease of interpretation. When employing ML models, users may benefit from focusing on rule-based classification techniques such as rough sets, CBR, GA, and DT (

Alaka et al., 2018). While these techniques generally achieve lower accuracy rates than more advanced ML methods, they usually surpass traditional statistical models (

Li & Sun, 2011,

2013;

Rafiei et al., 2011;

Heo & Yang, 2014;

Hosaka, 2019).

Future research should focus on refining the Z-score model by incorporating evolving market dynamics while preserving its interpretability and simplicity. Notably, the model remains insufficiently explored in certain contexts where it demonstrates limited predictive efficacy, such as start-ups, technology companies, and longer prediction horizons. Addressing these gaps could enhance the model’s applicability across diverse financial environments.

Conversely, research on ML-based models should aim to improve their interpretability and mitigate their inherent “black box” nature. A promising direction for future studies involves integrating topological data analysis (TDA) with ML techniques.

Qiu et al. (

2020) demonstrate that TDA can offer deeper insights into financial distress while maintaining a level of interpretability that traditional ML models often lack. In particular, TDA ball mapper has proven effective in identifying clusters of firms at risk, providing a more nuanced understanding of corporate failure than conventional linear models.

A further strand of research suggested by

Jones (

2023) entails the use of ML in processing text data. Specifically, the integration of text mining and NLP techniques might further enhance the explanatory power of corporate failure prediction models.

Another promising avenue for enhancing transparency in ML-based models is the advancement of “explainable AI” techniques. Feature importance methods, such as local interpretable model-agnostic explanations (LIME) and Shapley additive explanations (SHAP), have shown potential in approximating the significance of individual variables (

Schalck & Yankol-Schalck, 2021;

Nguyen et al., 2025;

Fasano et al., 2025).

Fasano et al. (

2025) developed a deep recurrent neural network architecture and applied SHAP to quantify the contribution of each financial index to the prediction process. Their approach successfully increased the interpretability of ML models by identifying the most influential financial indicators in predicting corporate failure. Future research should continue exploring these interpretability-enhancing methodologies, ensuring that ML-based models not only improve predictive accuracy but also provide meaningful insights for decision-makers in financial risk assessment.
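
To illustrate the idea behind Shapley-based attributions, the following sketch computes exact Shapley values for a small model by enumerating all feature coalitions. It is not the SHAP library and not the procedure of the cited studies. It makes the simplifying assumption that features outside a coalition are set to a fixed baseline value, and the toy model is hypothetical. Exact enumeration is feasible only for a handful of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(instance)

    def value(coalition):
        # Evaluate the model with only the coalition's features "switched on".
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(x):
    """Hypothetical score with one linear term and one interaction term."""
    return 2 * x[0] + x[1] * x[2]


phi = shapley_values(toy_model, instance=[1, 2, 3], baseline=[0, 0, 0])
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction effect is split evenly between the two interacting features. This additive decomposition is what makes such methods attractive for explaining non-linear models.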

4.1.4. Complexity

According to

Rogers (

1995), complexity—defined as the “degree to which an innovation is perceived as relatively difficult to understand and use”—is negatively associated with the adoption rate of innovations. This insight is particularly relevant in the context of corporate failure prediction models, which exhibit considerable variation in complexity, ranging from intuitive statistical formulas to highly sophisticated ML-based approaches.

The Z-score model developed by

Altman (

1968)—with its relatively simple structure based on a linear combination of a few financial ratios—has gained widespread popularity among practitioners, including auditors, lenders, security analysts, regulators, and advisors (

Altman et al., 2017;

Altman, 2018a,

2018b). The model’s simplicity and ease of application make it especially suitable for a variety of use cases (

Altman, 2018b).

In response to implied limitations of simple statistical approaches, failure prediction models have evolved toward more complex configurations, incorporating ML techniques and drawing on large-scale datasets for training and testing (

Jones, 2023). Although rule-based classification techniques are generally easier to interpret and audit than the more advanced ML models, they are not as straightforward as the Z-score, and their performance advantage over the Z-score model is often limited. In contrast, the more advanced ML-based models typically exhibit superior predictive accuracy and are more adaptable to heterogeneous and dynamic economic conditions (

Jones, 2023). However, their complexity might pose a considerable barrier to adoption. Developing and deploying ML-based models typically requires advanced technical expertise, access to high-quality data, and ongoing model validation—resources that may not be readily available within organizations. Thus, the increased complexity of ML-based models introduces a trade-off between predictive performance and usability. As a result, their adoption might be constrained due to limited, specialized resources in many, particularly smaller, organizational settings.

Nevertheless, ongoing advancements in AI research may help bridge this gap. For instance, future research could explore integrating deep learning models with “Interactive AI” systems, designed to facilitate naturalistic, bidirectional communication between users and algorithms. Such integration has the potential to reduce perceived complexity by translating technical outputs into intuitive, actionable insights (

Sarker, 2022). These developments could significantly enhance the usability of sophisticated models, thereby enabling broader adoption across a range of organizational contexts.

4.1.5. Trialability

The trialability of an innovation—the degree to which it can be experimented with on a limited basis—positively correlates with its adoption, particularly in early stages when precedents are limited. According to

Rogers (

1995), perceived trialability within a social system is decisive for the willingness to adopt innovation, especially among early adopters who often lack observable benchmarks for guidance.

In the context of corporate failure prediction models, practical trialability in operational settings is inherently constrained. These models aim to predict rare and inherently complex events whose real-world occurrence cannot easily be simulated or observed in a test environment. Consequently, proof-of-concept efforts typically rely on retrospective validation using historical financial or non-financial data. While this may offer some indication of technical feasibility, it introduces considerable uncertainty as financial structures and market dynamics can vary significantly across firms and time periods, thereby limiting the generalizability of the results. Moreover, there is a pronounced time lag in realizing a potential return on the investment in failure prediction models, which makes near-term economic validation nearly impossible. Combined with upfront implementation and data integration efforts, particularly for more sophisticated ML-based models, these uncertainties may reduce organizational willingness to adopt such technologies.

One way to reduce uncertainty is through real-world case studies that demonstrate the successful application and impact of failure prediction models. In the case of the Z-score model, there is an established body of literature demonstrating its predictive utility in specific corporate collapses. For example,

MacCarthy (

2017) showed that both the Altman Z-score and Beneish M-score could have provided early warnings about Enron’s financial distress and manipulation years before its collapse.

Altman and La Fleur (

1981) highlighted how the Z-score was actively used by executives at GTI Corporation as a strategic management tool to guide recovery efforts and restore GTI’s financial stability. This case study exemplifies how failure prediction models, like the Altman Z-score, could be pragmatically integrated into the strategic planning of companies to achieve financial recovery.

Altman (

2018b) outlines numerous applications of the Z-score in practice, from credit risk assessment and audit support to investment decision-making, noting its broad and continued use across sectors even five decades after its introduction. These documented applications might enhance the perceived trialability of the Z-score model and contribute to its sustained credibility in both academic and practitioner communities. Nevertheless, we expect that additional contemporary case studies, covering a broader range of real-world scenarios, would further facilitate the adoption of failure prediction models by illustrating their practical value in diverse operational contexts.

By contrast, ML-based failure prediction models currently lack equivalent real-world case studies published in the academic literature. While research has shown the predictive superiority of ML models, their deployment in actual decision-making processes remains largely opaque. As a result, firms lack examples that would help de-risk investments in ML approaches.

We therefore posit that increased transparency and documentation of ML model implementations in real-world corporate environments would improve their perceived trialability. Case studies that detail not only predictive performance but also integration challenges, cost structures, and managerial decision-making outcomes would provide much-needed reference points for potential adopters. Without this, organizations may continue to favor more established models like the Z-score, not necessarily due to superior predictive power, but because of greater practical visibility and demonstrable utility. Although uncertainty can never be fully eliminated, given that model performance may vary across different organizational and market contexts, well-documented examples can reduce perceived risk and help justify upfront investments.

4.2. Economic Context

In line with

Qin et al. (

2020), we treat the economic dimension as a distinct component within the technology adoption framework. This perspective incorporates the dimension of relative advantage, as defined by

Rogers (

1995), which captures the perceived economic benefits associated with adopting a new technology.

Rogers (

1995) defines relative advantage as “the degree to which an innovation is perceived as being better than the idea it supersedes,” encompassing benefits such as economic profitability, social prestige, or other gains. A strong relative advantage enables organizations to outperform competitors through faster market development, cost-efficient production, and superior product offerings. It is one of the most influential factors driving innovation adoption, with studies consistently confirming a positive relationship between relative advantage and adoption rates (

Rogers, 1995;

Jeyaraj et al., 2006;

Al Hadwer et al., 2021;

Bryan & Zuva, 2021;

Emon, 2023). Technologies can enhance core value chain activities, such as improving speed, quality, or cost-efficiency, as well as support functions. For example, RFID systems optimize supply chains, while data technologies can indirectly enhance organizational performance by generating knowledge for better decision-making.

Although failure prediction models do not directly transform core operations, they can enrich the business intelligence landscape by offering predictive insights that inform strategic decisions. Nevertheless, these models are typically viewed as risk mitigation tools rather than strategic assets.

Rogers (

1995) notes that preventive innovations tend to face slower adoption because their relative advantage is less tangible, particularly compared to technologies offering immediate returns on the investment.

In the absence of regulatory requirements, two key factors are economically decisive when evaluating whether to implement a new technology: the expected financial impact—whether through revenue generation, cost reduction, or risk mitigation—and the costs of implementation and ongoing operation. The interaction between these two elements provides a clear indication of the return on investment for the respective technology. Technologies that offer a higher return are therefore more likely to be prioritized within corporate decision-making.

With regard to failure prediction models, we examine both the breadth of their applicability and the costs associated with their development and deployment. A broader applicability spectrum indicates that the model can support a wider range of use cases within the organization, thereby delivering value to multiple stakeholders. We expect that increased versatility in application will contribute to a higher overall corporate benefit.

4.2.1. Applicability

Four attributes—predictive accuracy, forecasting horizon, interpretability and manageability—determine a failure prediction model’s practical value. While corporate managers might favor interpretability, as it links risk signals to actionable steps, lenders emphasize accuracy and model generalizability for managing portfolio risks. Longer prediction horizons are particularly critical for turnaround planning, where reliable multi-year forecasts are needed to judge corrective measures. Manageability, in turn, matters most to resource-constrained SMEs; enhancing model generalizability would reduce the number of models required to address diverse use cases, thereby improving overall manageability.

The classical Z-score remains attractive: its original weights still perform well across countries decades later (

Altman, 2018a), and it offers unmatched simplicity and transparency. One-year-ahead failure predictions generally perform well for both Z-score and ML-based models, but ML approaches deliver higher accuracy over longer timeframes. Short-term models serve credit and supply chain screening, yet they are weak early-warning tools for managerial turnaround, a process that unfolds over several years (

Merendino & Sarens, 2020;

Bouncken et al., 2022). Since corporate crises do not follow a uniform trajectory, detecting early signs of a crisis remains challenging, particularly when the crisis unfolds rapidly (

Lukason & Laitinen, 2019). Effective turnaround demands timely detection, as managerial latitude shrinks as distress deepens (

Liou & Smith, 2007), and countermeasures such as leadership change, marketing spend and R&D investments need lead time before benefits emerge (

Schweizer & Nienhaus, 2017;

Bhattacharya et al., 2024). Managers therefore need tools that track the direction of risk, not merely static default probabilities.

Das and LeClere (

2003) further note that both the likelihood and speed of a turnaround evolve over time, shaped by shifting managerial responses. Larger firms tend to recover more quickly, potentially due to greater strategic flexibility and organizational resilience.

The Z-score’s ability to observe trends fits the purpose of managing a turnaround. However, its long-horizon accuracy is modest. In contrast, ML-based models offer greater potential for capturing the complex, non-linear trajectories of corporate decline. Their ability to integrate diverse data sources and detect subtle patterns positions them as promising tools for early crisis detection far ahead of time. Nevertheless, their limited interpretability and manageability remain barriers to adoption in managerial practice. Enhancing the explainability of these models could bridge this gap and substantially increase their value as decision-support tools across multiple use cases.

In terms of SME failure prediction, research has expanded for both the Z-score model (

Altman et al., 2020) and ML-based models (

Camacho-Minano et al., 2015;

Tobback et al., 2017). However, findings remain partially contradictory, highlighting further research potential. For instance,

Altman et al. (

2020), using a dataset primarily composed of SMEs, found that LR and NN achieved the highest overall rankings. This contrasts with the findings of

Jones et al. (

2017) and

Barboza et al. (

2017), who reported superior performance for more advanced ML models, such as gradient boosting, bagging, and random forest, over statistical approaches. Moreover, studies specifically investigating start-ups and technology-driven companies remain limited. This gap underscores the need for further research to assess the applicability and effectiveness of failure prediction models in these dynamic and high-growth sectors.

4.2.2. Utilization Costs

Regarding utilization costs, we consider both the initial implementation efforts and the ongoing maintenance costs required to operate failure prediction models. Our evaluation of ML-based models focuses on the more sophisticated techniques, since these are the ones that surpass the Z-score in terms of predictive accuracy.

Due to its simplicity, the deployment of the Z-score model in a corporate setting does not require advanced infrastructure, integration efforts, specialized expertise, or significant resources. In contrast, ML-based models typically demand sophisticated data infrastructure, substantial ML and engineering capabilities, and high-performance computing resources for model training.
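To illustrate how little machinery the Z-score requires, the following minimal sketch computes the score from seven balance-sheet and income-statement figures. It assumes the coefficients and cut-offs of the original Altman (1968) model for publicly traded manufacturing firms; revised variants for private or non-manufacturing firms use different weights. The class and field names are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    """Inputs for the original Z-score (hypothetical field names)."""
    working_capital: float
    retained_earnings: float
    ebit: float
    market_value_equity: float
    total_liabilities: float
    sales: float
    total_assets: float


def altman_z(f: Financials) -> float:
    """Original Altman (1968) Z-score for public manufacturing firms."""
    x1 = f.working_capital / f.total_assets
    x2 = f.retained_earnings / f.total_assets
    x3 = f.ebit / f.total_assets
    x4 = f.market_value_equity / f.total_liabilities
    x5 = f.sales / f.total_assets
    return 1.2 * x1 + 1.4 * x2 + 3.3 * x3 + 0.6 * x4 + 1.0 * x5


def zone(z: float) -> str:
    """Classify a score using the original 1.81 and 2.99 cut-offs."""
    if z > 2.99:
        return "safe"
    if z < 1.81:
        return "distress"
    return "grey"


healthy = Financials(
    working_capital=20, retained_earnings=30, ebit=10,
    market_value_equity=80, total_liabilities=50,
    sales=150, total_assets=100,
)
print(round(altman_z(healthy), 2), zone(altman_z(healthy)))
```

The whole calculation fits in a spreadsheet or a few lines of code, with no training data, retraining schedule, or dedicated infrastructure.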

Alaka et al. (

2018) note that ML models typically require more time to develop than statistical tools, primarily because they rely on extensive training data and iterative training cycles (

Kumar & Ravi, 2007). In particular, deep learning models require significantly larger volumes of training data than traditional ML techniques to attain high predictive accuracy (

Alam et al., 2021). However, they offer several advantages, including superior ability to handle complex real-world scenarios, enhanced capacity for self-correction, and reduced reliance on developer guidance (

Alam et al., 2021). When only a small sample is available, users are advised to prioritize SVM, CBR, and rough set approaches, as these methods require comparatively less data for effective training (

Alaka et al., 2018).

In terms of maintenance, ML models entail significantly greater effort than the static Z-score model. Sustaining model accuracy necessitates continuous monitoring, retraining, and upkeep of data pipelines. Among ML approaches, CBR and GA are considered the most adaptable for failure prediction, with CBR being especially easy to update by simply adding new cases to the case library (

Alaka et al., 2018). Although ANNs are robust to sample variation, they require retraining when confronted with novel cases that differ substantially from those used in the original training set (

Alaka et al., 2018).
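The ease of updating a CBR model can be made concrete with a small sketch. It assumes a simple nearest-neighbour retrieval over numeric ratio vectors with majority voting, which is only one of several possible CBR designs; the class `CaseLibrary` and its methods are hypothetical names, not taken from the cited studies.

```python
import math


class CaseLibrary:
    """Toy case-based reasoner: stores past firms and votes among neighbours."""

    def __init__(self):
        self.cases = []  # list of (feature tuple, failed flag)

    def add_case(self, features, failed):
        # Updating the model is just appending a case; nothing is retrained.
        self.cases.append((tuple(features), bool(failed)))

    def predict(self, features, k=3):
        if not self.cases:
            raise ValueError("case library is empty")
        # Retrieve the k most similar past cases by Euclidean distance.
        nearest = sorted(
            self.cases, key=lambda c: math.dist(c[0], features)
        )[:k]
        votes = sum(failed for _, failed in nearest)
        # Predict failure if a strict majority of retrieved cases failed.
        return votes * 2 > len(nearest)


library = CaseLibrary()
library.add_case((0.1, 0.2), failed=False)
library.add_case((0.2, 0.1), failed=False)
library.add_case((0.9, 0.8), failed=True)

before = library.predict((0.4, 0.4), k=1)
library.add_case((0.4, 0.45), failed=True)  # a newly observed failure
after = library.predict((0.4, 0.4), k=1)
print(before, after)
```

In this sketch, a single newly observed case changes the prediction for similar firms immediately, whereas a trained network would need another training cycle to absorb the same information.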

Overall, ML-based models incur higher utilization costs than the Z-score model if they are to realize their accuracy advantage. For short-term prediction horizons, the marginal improvement in predictive performance may not justify these costs (

Veganzones & Severin, 2021). Furthermore, there is no widely accepted standardized, fully transparent ML-based model currently available that discloses training data and algorithms for organizational adoption. As a result, companies must develop such models in-house. While financial institutions may possess the necessary scale, resources, expertise, and data access to do so, most businesses, especially SMEs, lack these capabilities. This presents a significant barrier, as organizations must allocate substantial time, data, and resources to independently develop, test, implement, and maintain ML-based failure prediction models.

4.3. Organizational Context

The organizational context encompasses descriptive characteristics of firms, including their scope, size, and managerial beliefs (

Salwani et al., 2009).

Gangwar et al. (

2014), in a review of organizational IT adoption, identified several significant variables, including financial resources, firm structure, organizational slack, innovation capacity, knowledge capability, operational capability, strategic use of technology, trust, technological resources, top management support, support for innovation, quality of human capital, expertise, infrastructure, and organizational readiness. However, the relevance of these variables varies by context. For instance, firm size significantly influenced the adoption of RFID, e-commerce, and ERP, but not EDI, due to differences in strategic perception. Across studies, top management support consistently emerged as a key predictor of IT adoption (

Jeyaraj et al., 2006).

We assess the prediction model types based on the criteria of expert knowledge capability, organizational readiness, size and slack, top management support, and user support—factors identified as significant across a range of studies (

Jeyaraj et al., 2006;

Gangwar et al., 2014;

Qin et al., 2020). Key differences between the Z-score and ML-based models become apparent along these determinants.

ML-based models, in general, demand greater organizational readiness and require higher upfront investment in infrastructure, data governance, and ML and data science capabilities. A lack of these capabilities has been cited as a key reason for AI project failure (

Ryseff et al., 2024). The Z-score, in comparison, remains low-cost, requires minimal infrastructure, and is broadly accessible to firms with limited technical resources. Expert knowledge capability, in combination with organizational size and slack, also shapes adoption capacity. Larger firms are more likely to possess surplus (financial, human, and managerial) resources that support innovation and the acquisition of critical ML and data science expertise. They benefit from economies of scale when deploying models across departments. Smaller firms, however, may lack access to ML engineers and data scientists, or find these resources tied to core operational tasks. As a result, while ML-based models are more feasible for larger firms, the Z-score remains viable for firms of all sizes due to its simplicity and minimal resource requirements. Top management support tends to be more critical for ML models, which require strategic commitment due to their complexity, implementation timelines, and resource demands. This complexity increases the burden on management to ensure alignment between strategic intent and technical execution. Misalignment of this kind is widely reported as a root cause of failure in AI initiatives, with leadership misalignment found to be the most frequent source of project failure (

Ryseff et al., 2024). The Z-score, by contrast, can easily be integrated into existing workflows with minimal executive involvement and upfront investment. Top management support for ML-based failure prediction models may, however, increase in the future, as market sentiment continues to emphasize the positive impact of AI adoption on shareholder value (

Eisfeldt et al., 2023;

Falcioni, 2024).

Finally, user support is a critical factor. ML models typically require structured training, technical assistance, and active user engagement throughout the implementation life cycle. The lack of user buy-in and resistance from domain experts—who may perceive AI as a threat to their roles—has been documented as a contributor to AI project failure (

Ryseff et al., 2024). In contrast, the Z-score requires minimal user support and training, contributing to a wider acceptance and ease of implementation.

4.4. Environmental Context

The environmental context refers to the broader business ecosystem—comprising the interconnected governmental, regulatory, and social aspects; market and industry life cycle dynamics; technological advancements; and interorganizational relationships—that collectively shape how companies operate. It comprises external forces that exert a significant influence on both the overall industry and the individual firms within it. These include government incentives, regulatory requirements, and broader institutional conditions such as industry norms, pressure intensity, network intensity and environmental stability (

Jeyaraj et al., 2006;

Salwani et al., 2009;

Gangwar et al., 2014). It also encompasses competitive dynamics such as rivalry, relationships with buyers and suppliers, and elements associated with the industry life cycle (

Bryan & Zuva, 2021). Synthesizing empirical findings on environmental factors,

Gangwar et al. (

2014) identified several key variables that shape organizational technology adoption, including customer demand, competitive and trading partner pressure, vendor support, commercial dependence, environmental uncertainty as well as information and network intensity. These results align with the earlier work of

Jeyaraj et al. (

2006), who identified environmental instability and external pressure as significant predictors of IT innovation adoption. Specifically, competitive pressure, supplier expectations, and conformity to industry standards emerged as critical drivers of organizational IT adoption (

Iacovou et al., 1995;

Premkumar & Roberts, 1999;

Nam et al., 2019).

Findings regarding the influence of government regulation have been more nuanced. While there is general recognition of the government’s potential role in shaping innovation adoption, empirical evidence remains mixed. For example,

Kuan and Chau (

2001) reported that adopters of innovation perceived higher government pressure than non-adopters. Similarly,

Qin et al. (

2020) identified national policy requirements as the most influential driver in the adoption of building information modeling technology, underscoring the importance of top-down regulatory influence. Conversely,

Pan and Jang (

2008) found that regulatory policies were statistically insignificant predictors, a result potentially attributable to the high standard deviation and limited sample size in their study.

Salwani et al. (

2009) did not find compelling evidence to support the role of regulatory support in influencing IT adoption. Similarly,

Scupola (

2009) observed mixed evidence concerning the regulatory environment, further illustrating the variability of regulatory impact across different contexts. Evidence on the role of external support for information systems has likewise been inconclusive. While

DeLone (

1981) identified it as a critical variable for adoption, subsequent research did not consistently replicate this result (

Premkumar & Roberts, 1999).

The Z-score model and ML-based models exhibit contrasting characteristics in relation to key environmental determinants such as regulatory requirements, industry popularity, competitive pressure, environmental instability, and the ability to leverage external information sources (e.g., data, insights or competitive benchmarks relevant to the adoption of the technology).

Under the Basel Accords, specifically Basel II and Basel III, financial institutions using the internal ratings-based approach are required to maintain models that estimate the probability of default (PD) for their credit exposures. According to the Basel II framework (

Basel Committee on Banking Supervision [BCBS], 2006) and its refinements in Basel III (

Basel Committee on Banking Supervision [BCBS], 2017), PD must be estimated using historical default data and should reflect a long-run average of one-year default rates. Banks must ensure that these models are statistically sound, appropriately conservative, and regularly validated. Importantly, regulators must formally approve these internal models before they can be used for regulatory capital calculations.
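As a rough illustration of estimating PD from historical default data, the sketch below averages one-year default rates across annual cohorts of a single rating grade. It assumes a simple unweighted average over years and deliberately omits the conservatism adjustments and validation steps that the frameworks also require; the function name and the cohort figures are hypothetical.

```python
def long_run_pd(cohorts):
    """Average one-year default rate across annual cohorts.

    cohorts: iterable of (obligors_at_start_of_year, defaults_within_year)
    for one rating grade. Illustrative only, not a regulatory method.
    """
    rates = [defaults / obligors for obligors, defaults in cohorts if obligors > 0]
    if not rates:
        raise ValueError("no usable cohorts")
    return sum(rates) / len(rates)


# Hypothetical history for one grade: three yearly cohorts.
history = [(1000, 20), (1200, 12), (800, 24)]
pd_estimate = long_run_pd(history)
print(f"{pd_estimate:.2%}")
```

Averaging over several years, rather than relying on the most recent cohort, smooths out the effect of any single benign or stressed year on the estimate.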

While regulatory frameworks in the banking sector mandate the use of robust default prediction models—subject to supervision, validation, and stress testing—no comparable regulatory obligation exists for non-financial corporations. As a result, companies are not legally compelled to implement failure prediction models, although doing so may enhance internal risk management. Furthermore, reliance on ML-based models could present regulatory challenges in certain jurisdictions. For example, the EU AI Act (

Regulation (EU), 2024) introduces governance obligations that could constrain the use of ML-based models. Although corporate failure prediction models that assess corporate entities are not directly classified as high-risk under Annex III of the AI Act, their regulatory classification depends on how they are used and who is affected by the outcomes. If such models involve profiling individuals within firms to derive failure predictions, rely on general-purpose AI systems, or influence downstream decisions that affect individuals (such as employees, executives, or small business owners), then indirect obligations under the AI Act may still apply. These include requirements related to human oversight, fairness, transparency, quality management, and data governance. Few jurisdictions have issued AI-specific regulations for financial institutions. As a result, many institutions remain cautious about adopting AI in consumer-facing applications, citing uncertainty over regulatory expectations regarding accountability, ethics, data privacy, fairness, transparency, and explainability (

Crisanto et al., 2024).

Consequently, ML-based models, despite their superior predictive capabilities, may face regulatory barriers due to their often opaque, “black box” nature. Many such models—particularly those employing deep learning or complex ensemble techniques—are difficult to interpret and explain, complicating regulatory compliance.

Rudin (