Abstract

A new comprehensive approach to nonlinear time series analysis and modeling is developed in the present paper. We introduce novel data-specific mid-distribution-based Legendre Polynomial (LP)-like nonlinear transformations of the original time series that enable us to adapt all the existing stationary linear Gaussian time series modeling strategies and make them applicable to non-Gaussian and nonlinear processes in a robust fashion. The emphasis of the present paper is on empirical time series modeling via the algorithm LPTime. We demonstrate the effectiveness of our theoretical framework using daily S&P 500 return data between 2 January 1963 and 31 December 2009. Our proposed LPTime algorithm systematically discovers all the ‘stylized facts’ of the financial time series automatically, all at once, which were previously noted by many researchers one at a time.

1. Introduction

When one observes a sample $Y(t)$, $t = 1, \ldots, T$, of a (discrete parameter) time series $Y(t)$, one seeks to nonparametrically learn from the data a stochastic model with two purposes: (a1) scientific understanding; (a2) forecasting (predicting future values of the time series under the assumption that the future obeys the same laws as the past). Our prime focus in this paper is on developing a nonparametric empirical modeling technique for nonlinear (stationary) time series that can be used by data scientists as a practical tool for obtaining insights into (i) the temporal dynamic patterns and (ii) the internal data generating mechanism, a crucial step for achieving (a1) and (a2).

Under the assumption that the time series is stationary (which can be extended to asymptotically stationary), the distribution of $Y(t)$ is identical for all $t$, and the joint distribution of $Y(t)$ and $Y(t+h)$ depends only on the lag $h$. Typical estimation goals are as follows:

- (1) Marginal modeling: The identification of the marginal probability law (in particular, the heavy-tailed marginal densities) of a time series plays a vital role in financial econometrics. Notations: the common quantile function $Q$ and the distribution function $F$ (of which $Q$ is the inverse) are denoted $Q(u;Y)$, $0<u<1$, and $F(y;Y)$, respectively. The mid-distribution is defined as $F^{\rm mid}(y;Y) = F(y;Y) - 0.5\,\Pr[Y(t) = y]$.

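- (2) Correlation modeling: Covariance function (defined for positive and negative lag $h$) $R(h;Y) = \mathrm{Cov}[Y(t), Y(t+h)]$, with $R(0;Y) = \mathrm{Var}[Y(t)]$. The mean $\mu = E[Y(t)]$ is assumed zero in our prediction theory. Correlation function $\rho(h) = \mathrm{Cor}[Y(t), Y(t+h)] = R(h;Y)/R(0;Y)$.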

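- (3) Frequency-domain modeling: When the covariance function is absolutely summable, define the spectral density function $f(\omega;Y) = \sum_{h=-\infty}^{\infty} \mathrm{Cov}[Y(t), Y(t+h)]\, e^{-2\pi i \omega h}$, $-1/2 < \omega < 1/2$.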

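- (4) Time-domain modeling: The time-domain model is a linear filter relating $Y(t)$ to white noise $\epsilon(t)$, $N(0,1)$ independent random variables. The autoregressive scheme of order $m$, a predominant linear time series technique for modeling the conditional mean, is defined as (assuming $E[Y(t)] = 0$):

  $$Y(t) - a(1;m)\,Y(t-1) - \cdots - a(m;m)\,Y(t-m) = \sigma_m\, \epsilon(t), \qquad (1)$$

  with the spectral density function given by:

  $$f(\omega;Y) = \frac{\sigma_m^2}{\left| 1 - \sum_{k=1}^{m} a(k;m)\, e^{2\pi i \omega k} \right|^2}. \qquad (2)$$

  To fit an AR model, compute the linear predictor of $Y(t)$ given $Y(t-j)$, $j = 1, \ldots, m$, by:

  $$Y^{\mu,m}(t) = E[Y(t) \mid Y(t-1), \ldots, Y(t-m)] = a(1;m)\,Y(t-1) + \cdots + a(m;m)\,Y(t-m). \qquad (3)$$

  Verify that the prediction error $Y(t) - Y^{\mu,m}(t)$ is white noise. The best fitting AR order is identified by the Akaike criterion (AIC) (or Schwarz’s criterion, BIC) as the value of $m$ that minimizes:

  $$\mathrm{AIC}(m) = \log \sigma_m^2 + \frac{2m}{T}.$$

  In what follows, we aim to develop a parallel modeling framework for nonlinear time series.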
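The AR fitting recipe above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: it assumes that the AR coefficients are obtained from the Yule–Walker equations via the Levinson–Durbin recursion and that the AIC is taken in the common form $\log \sigma_m^2 + 2m/T$. All function names are hypothetical, and the simulated AR(2) series is toy data.

```python
import math
import random


def autocovariances(y, max_lag):
    """Sample autocovariances R(0), ..., R(max_lag) of the mean-corrected series."""
    n = len(y)
    mean = sum(y) / n
    c = [v - mean for v in y]
    return [sum(c[t] * c[t + h] for t in range(n - h)) / n
            for h in range(max_lag + 1)]


def levinson_durbin(r, max_order):
    """Solve the Yule-Walker equations recursively for orders 0..max_order.

    Entry m of the result is (coefficients a(1;m), ..., a(m;m), sigma_m^2).
    """
    fits = [([], r[0])]
    a, sigma2 = [], r[0]
    for m in range(1, max_order + 1):
        # Reflection coefficient (partial autocorrelation at lag m).
        kappa = (r[m] - sum(a[j] * r[m - 1 - j] for j in range(m - 1))) / sigma2
        a = [a[j] - kappa * a[m - 2 - j] for j in range(m - 1)] + [kappa]
        sigma2 *= 1.0 - kappa * kappa
        fits.append((a, sigma2))
    return fits


def select_order_aic(y, max_order):
    """Return the AIC-minimizing order and all fitted models (assumed AIC form)."""
    n = len(y)
    fits = levinson_durbin(autocovariances(y, max_order), max_order)
    aic = [math.log(s2) + 2.0 * m / n for m, (_, s2) in enumerate(fits)]
    best = min(range(len(aic)), key=aic.__getitem__)
    return best, fits


# Toy data: a stationary AR(2) process with coefficients 0.5 and -0.3.
random.seed(0)
y = [0.0, 0.0]
for _ in range(4000):
    y.append(0.5 * y[-1] - 0.3 * y[-2] + random.gauss(0.0, 1.0))

best_m, fits = select_order_aic(y, max_order=8)
a2, s2 = fits[2]
print(best_m, [round(c, 2) for c in a2], round(s2, 2))
```

Replacing `2.0 * m / n` by `m * math.log(n) / n` gives the BIC variant mentioned in the text.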

2. From Linear to Nonlinear Modeling

Our approach to nonlinear modeling, called LPTime¹, is via approximate calculation of the conditional expectation $E[Y(t) \mid Y(t-1), \ldots, Y(t-m)]$. Because with probability one $Q(F(Y);Y) = Y$, one can prove that the conditional expectation of $Y(t)$ given past values $Y(t-j)$ is equal (with probability one) to the conditional expectation of $Y(t)$ given past values $F(Y(t-j);Y)$, which can be approximated by a linear orthogonal series expansion in score functions $T_j(y;Y)$ constructed by Gram–Schmidt orthonormalization of powers of:

$$T_1(y;Y) = \frac{F^{\rm mid}(y;Y) - 0.5}{\sigma[F^{\rm mid}(Y;Y)]}, \qquad (4)$$

where $\sigma[F^{\rm mid}(Y;Y)]$ is the standard deviation of the mid-distribution transform random variable, given by $\sqrt{\big(1 - \sum_y p^3(y;Y)\big)/12}$, and $p(y;Y)$ denotes the probability mass function of $Y$. This score polynomial allows us to simultaneously tackle discrete (say count-valued) and continuous time series. Note that for $Y$ continuous, $T_1$ reduces to:

$$T_1(y;Y) = \sqrt{12}\,[F(y;Y) - 0.5], \qquad (5)$$

and all the higher order polynomials $T_j$ can be compactly expressed as $\mathrm{Leg}_j[F(y;Y)]$, where $\mathrm{Leg}_j(u)$, $0<u<1$, denotes orthonormal Legendre polynomials. It is worthwhile to note that the $T_j$ are orthonormal polynomials of the mid-rank (instead of polynomials of the original y’s), which injects robustness into our analysis while allowing us to capture nonlinear patterns. Having constructed score functions of $y$ denoted by $T_j(y;Y)$, we transform them to the unit interval by letting $y = Q(u;Y)$ and defining:

$$S_j(u;Y) = T_j(Q(u;Y);Y), \qquad 0<u<1. \qquad (6)$$

In general, our score functions are custom constructed for each distribution function F, which can be discrete or continuous.
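The construction of the score functions can be illustrated with a short sketch. It assumes the empirical inner product (sample average of products) for the Gram–Schmidt step and uses the sample mid-distribution transform in place of the unknown $F$. The function names `mid_distribution` and `lp_scores` are hypothetical, and the data are a toy count-valued sample with ties.

```python
import math
from collections import Counter


def mid_distribution(y):
    """Sample mid-distribution transform F_mid(y) = F(y) - 0.5 * p(y)."""
    n = len(y)
    counts = Counter(y)
    fmid, cum = {}, 0.0
    for value in sorted(counts):
        p = counts[value] / n
        fmid[value] = cum + 0.5 * p
        cum += p
    return [fmid[v] for v in y]


def lp_scores(y, k):
    """Return [T_1, ..., T_k], each a list aligned with y.

    Powers of (F_mid - 0.5) are orthonormalized by (modified) Gram-Schmidt
    under the empirical inner product <a, b> = mean(a * b).
    Requires more than k distinct values in y.
    """
    n = len(y)
    base = [u - 0.5 for u in mid_distribution(y)]
    basis = [[1.0] * n]  # constant function; dropped from the output
    for j in range(1, k + 1):
        v = [b ** j for b in base]
        for q in basis:
            proj = sum(vi * qi for vi, qi in zip(v, q)) / n
            v = [vi - proj * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(sum(vi * vi for vi in v) / n)
        basis.append([vi / norm for vi in v])
    return basis[1:]


# Toy count-valued sample with ties.
y = [0, 0, 1, 1, 1, 2, 3, 3, 5, 8]
T = lp_scores(y, k=3)
print([round(t, 3) for t in T[0]])
```

Because the basis is built from the data's own mid-ranks, the same code applies unchanged to continuous samples, where the first score is proportional to the centered rank.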

3. Nonparametric LPTime Analysis

Our LPTime empirical time series modeling strategy for nonlinear modeling of a univariate time series $Y(t)$ is based on linear modeling of the multivariate time series:

$$\mathrm{Vec}(YS)(t) = [YS_1(t), \ldots, YS_k(t)]^T, \qquad (7)$$

where $YS_j(t) = T_j(Y(t);Y)$ is our tailor-made orthonormal mid-rank-based nonlinear transformed series. We summarize below the main steps of the algorithm LPTime. To better understand the functionality and applicability of LPTime, we break it into several inter-connected steps, each of which highlights:

- (a) The algorithmic modeling aspect (how it works).

- (b) The required theoretical ideas and notions (why it works).

- (c) The application to daily S&P 500 return data between 2 January 1963 and 31 December 2009 (empirical proof-of-work).

3.1. The Data and LP-Transformation

The data used in this paper are daily S&P 500 return data between 2 January 1963 and 31 December 2009 (defined as , where is the closing price on trading day t). We begin our modeling process by transforming the given univariate time series into multiple (robust) time series by means of special data-analytic construction rules described in Equations (4)–(6) and (7). We display the original “normalized” time series and the transformed time series on a single plot.

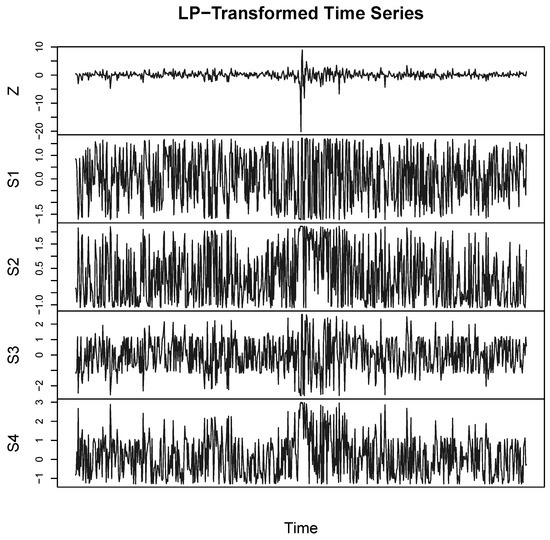

Figure 1 shows the first look at the transformed S&P 500 return data between October 1986 and October 1988. These newly-constructed time series work as a universal preprocessor for any time series modeling in contrast with other ad hoc power transformations. In the next sections, we will describe how the temporal patterns of these multivariate LP-transformed series generate various insights for the time series in an organized fashion.

Figure 1.

LP-transformed S&P 500 daily stock returns between October 1986 and October 1988. This is just a small part of the full time series from 2 January 1963–31 December 2009 (cf. Section 3.1).

3.2. Marginal Modeling

Our time series modeling starts with the nonparametric identification of probability distributions.

Non-Normality Diagnosis

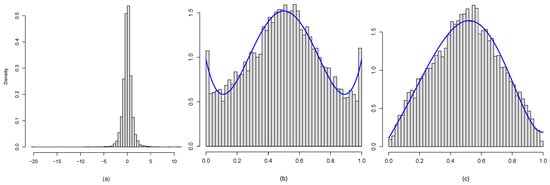

Does the normal probability distribution provide a good fit to the S&P 500 return data? Figure 2a clearly indicates that the distribution of daily returns is non-normal. At this point, the natural question is: how does the distribution differ from the assumed normal one? A quick insight into this question can be gained by looking at the distribution of the random variable $U = G(Y)$, called the comparison density (Mukhopadhyay 2017; Parzen 1997), given by:

$$d(u;G,F) = \frac{f(Q(u;G))}{g(Q(u;G))}, \qquad 0<u<1, \qquad (8)$$

where $Q(u;G)$ is the quantile function of $G$. A flat, uniform shape of the estimated comparison density indicates a good fit, so it provides a quick graphical diagnostic to test the fit of the parametric $G$ to the true unknown distribution $F$. The Legendre polynomial-based orthogonal series comparison density estimator is given by:

$$\hat{d}(u;G,F) = 1 + \sum_{j} \mathrm{LP}[j;G,F]\, \mathrm{Leg}_j(u), \qquad (9)$$

where the Fourier coefficients are $\mathrm{LP}[j;G,F] = E[\mathrm{Leg}_j(G(Y))]$.

Figure 2.

(a) The marginal distribution of daily returns; (b) the histogram of $U = G(Y)$ with $G$ as the normal distribution, together with the LP-estimated comparison density curve; and (c) the associated comparison density estimate with $G$ as the t-distribution with 2 degrees of freedom.

For $G = \Phi$ (the normal distribution), Figure 2b displays the histogram of $U_i = G(Y(t_i))$ for $i = 1, \ldots, n$. The corresponding comparison density estimate is shown with the blue curve, which reflects the fact that the distribution of daily returns (i) has a sharp peak (inverted “U” shape) and (ii) is negatively skewed with (iii) fatter tails than the Gaussian distribution. We can carry out a similar analysis by asking whether the t-distribution with two degrees of freedom provides a better fit. Figure 2c demonstrates the full analysis, where the estimated comparison density indicates that (iv) the t-distribution fits the data better than the normal, especially in the tails, although it is not a fully adequate model.

The shape of the comparison density (along with the histogram of $U_i = G(Y(t_i))$, $i = 1, \ldots, n$) captures and exposes the adequacy of the assumed model $G$ for the true unknown $F$, thus acting as an exploratory, as well as confirmatory, tool.
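A minimal sketch of this diagnostic follows, assuming the Fourier coefficients are estimated by sample averages of $\mathrm{Leg}_j(G(y_i))$ and using the first four orthonormal shifted Legendre polynomials. The function names are hypothetical, and the two data sets are synthetic: one matches the standard normal $G$ exactly, the other has twice its scale.

```python
import math
from statistics import NormalDist


def legendre(j, u):
    """Orthonormal shifted Legendre polynomials on [0, 1], j = 1..4."""
    if j == 1:
        return math.sqrt(12.0) * (u - 0.5)
    if j == 2:
        return math.sqrt(5.0) * (6 * u**2 - 6 * u + 1)
    if j == 3:
        return math.sqrt(7.0) * (20 * u**3 - 30 * u**2 + 12 * u - 1)
    if j == 4:
        return 3.0 * (70 * u**4 - 140 * u**3 + 90 * u**2 - 20 * u + 1)
    raise ValueError("only j = 1..4 are implemented in this sketch")


def lp_coefficients(y, cdf, k=4):
    """Sample estimates of LP[j; G, F] = E[Leg_j(G(Y))], j = 1..k."""
    n = len(y)
    return [sum(legendre(j, cdf(v)) for v in y) / n for j in range(1, k + 1)]


def comparison_density(u, coeffs):
    """Orthogonal series estimate d(u) = 1 + sum_j LP[j] * Leg_j(u)."""
    return 1.0 + sum(c * legendre(j, u) for j, c in enumerate(coeffs, start=1))


G = NormalDist(0.0, 1.0)
n = 1000
# Synthetic sample that follows G exactly: the comparison density is flat.
y_normal = [G.inv_cdf((i + 0.5) / n) for i in range(n)]
coef_normal = lp_coefficients(y_normal, G.cdf)
# Same sample with doubled scale: mass moves to the tails of U = G(Y).
y_wide = [2.0 * v for v in y_normal]
coef_wide = lp_coefficients(y_wide, G.cdf)
print([round(c, 3) for c in coef_normal], [round(c, 3) for c in coef_wide])
```

A clearly positive second coefficient, as for the wide sample, produces a U-shaped comparison density, which is how tails heavier than those of $G$ show up in this diagnostic.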

3.3. Copula Dependence Modeling

Distinguishing uncorrelatedness and independence by properly quantifying association is an essential task in empirical nonlinear time series modeling.

3.3.1. Nonparametric Serial Copula

We display the nonparametrically-estimated smooth serial copula density to get a much finer understanding of the lagged interdependence structure of a stationary time series. For a continuous distribution, define the copula density for the pair $(Y(t), Y(t+h))$ as the joint density of $U = F(Y(t))$ and $V = F(Y(t+h))$, which is estimated by the sample mid-distribution transforms $\tilde{U} = \tilde{F}^{\rm mid}(Y(t))$, $\tilde{V} = \tilde{F}^{\rm mid}(Y(t+h))$. Following Mukhopadhyay and Parzen (2014) and Parzen and Mukhopadhyay (2012), we expand the copula density (square integrable) in an orthogonal series of product LP-basis functions as:

$$\mathrm{cop}(u,v;Y(t),Y(t+h)) - 1 = \sum_{j,k} \mathrm{LP}[j,k;Y(t),Y(t+h)]\, S_j(u;Y(t))\, S_k(v;Y(t+h)), \qquad (10)$$

where $\mathrm{LP}[j,k;Y(t),Y(t+h)] = E[S_j(U;Y(t))\, S_k(V;Y(t+h))]$. Equation (10) allows us to pictorially represent the information present in the LP-comoment matrix via the copula density. The various “shapes” of the copula density give insight into the structure and dynamics of the time series.

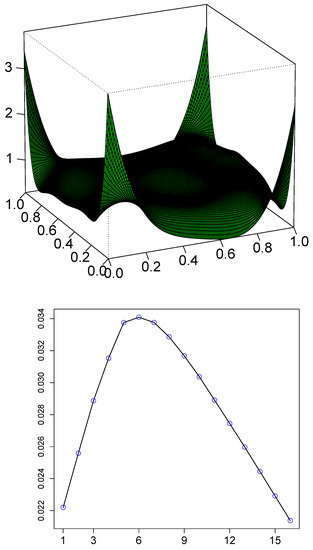

Now, we apply this nonparametric copula estimation theory to model the temporal dependence structure of S&P return data. The copula density estimate based on the smooth LP-comoments is displayed in Figure 3. The shape of the copula density shows strong evidence of asymmetric tail dependence. Note that the dependence is only present in the extreme quantiles, another well-known stylized fact of economic and financial time series.

Figure 3.

Top: Nonparametric smooth serial copula density (lag one) estimate of S&P return data. Bottom: BIC plot to select the significant LP-comoments computed in Equation (11).

3.3.2. LP-Comoment of Lag h

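Here, we introduce the concept of the LP-comoment to get a complete understanding of the nature of the serial dependence present in the data. The LP-comoment of lag $h$ is defined as the joint covariance of the LP-transformed series at times $t$ and $t+h$, with entries $\mathrm{LP}[j,k;Y(t),Y(t+h)] = E[T_j(Y(t);Y)\, T_k(Y(t+h);Y)]$.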

The lag one LP-comoment matrix for S&P 500 return data is displayed below:

To identify the significant elements, we first rank order the squared LP-comoments. Then, we take the penalized cumulative sum of the top $m$ squared comoments using the BIC criterion $\sum_{\text{top } m} \mathrm{LP}^2[j,k] - m \log(n)/n$, where $n$ is the sample size, and choose the $m$ for which the BIC is maximum. The complete BIC path for S&P 500 data is shown in Figure 3, which selects the top six comoments, also marked in the LP-comoment matrix display (Equation (11)). By setting all the remaining uninteresting “small” comoments equal to zero, we get the “smooth” LP-comoment matrix. The linear auto-correlation is captured by the $\mathrm{LP}[1,1]$ term. The presence of higher order significant terms in the LP-comoment matrix indicates possible nonlinearity. Another interesting point is that the auto-correlation of the raw series is considerably smaller than the auto-correlation between the mid-rank transformed data, which is picked by the BIC criterion. This indicates that the rank-transformed time series $F^{\rm mid}(Y(t))$ is much more predictable than the original raw time series $Y(t)$.
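The lag-$h$ LP-comoment matrix and its BIC-based smoothing can be sketched as follows. This is an illustration under stated assumptions, not the LPTime package code: the series is taken to be continuous (no ties), so the score functions are Legendre polynomials of the mid-ranks, and the BIC path is the penalized cumulative sum of ranked squared comoments with penalty $\log(n)/n$ per term. All names and the small example matrix are hypothetical.

```python
import math


def legendre(j, u):
    """Orthonormal shifted Legendre polynomials on [0, 1], j = 1..4."""
    polys = {
        1: math.sqrt(12.0) * (u - 0.5),
        2: math.sqrt(5.0) * (6 * u**2 - 6 * u + 1),
        3: math.sqrt(7.0) * (20 * u**3 - 30 * u**2 + 12 * u - 1),
        4: 3.0 * (70 * u**4 - 140 * u**3 + 90 * u**2 - 20 * u + 1),
    }
    return polys[j]


def mid_ranks(y):
    """Mid-rank transform (rank - 0.5) / n, assuming no ties in y."""
    n = len(y)
    u = [0.0] * n
    for rank, idx in enumerate(sorted(range(n), key=y.__getitem__)):
        u[idx] = (rank + 0.5) / n
    return u


def lp_comoments(y, lag, k=4):
    """Matrix of sample averages of T_j(Y(t)) * T_l(Y(t + lag))."""
    u = mid_ranks(y)
    n = len(y) - lag
    return [[sum(legendre(j, u[t]) * legendre(l, u[t + lag]) for t in range(n)) / n
             for l in range(1, k + 1)] for j in range(1, k + 1)]


def bic_smooth(lp, n):
    """Keep the top-m squared comoments, with m maximizing the BIC path."""
    cells = sorted(((v * v, j, l) for j, row in enumerate(lp)
                    for l, v in enumerate(row)), reverse=True)
    penalty = math.log(n) / n
    best_m, best_bic, total = 0, 0.0, 0.0
    for m, (sq, _, _) in enumerate(cells, start=1):
        total += sq
        if total - m * penalty > best_bic:
            best_m, best_bic = m, total - m * penalty
    smooth = [[0.0] * len(row) for row in lp]
    for _, j, l in cells[:best_m]:
        smooth[j][l] = lp[j][l]
    return smooth, best_m


# Hypothetical 2 x 2 comoment matrix: two large and two negligible entries.
example = [[0.3, 0.01], [0.02, 0.2]]
smooth, m = bic_smooth(example, n=1000)
print(m, smooth)
```

The sum of squares of the retained entries is exactly the quantity that the AutoLPinfor measure of the later subsection aggregates, so the smoothing step and the dependence measure share one computation.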

3.3.3. LP-Correlogram, Evidence and Source of Nonlinearity

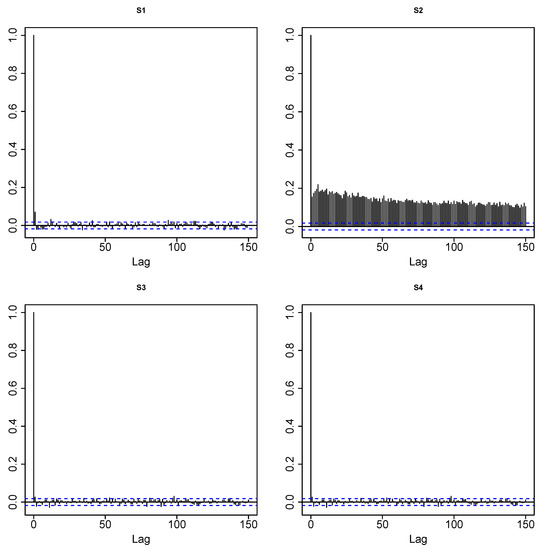

We provide a nonparametric exploratory test for (non)linearity (the spectral domain test is given in Section 3.6). Plot the correlogram of each LP-transformed series $YS_j(t)$ to (a) diagnose possible nonlinearity and (b) identify its possible sources. This constitutes an important building block for methods of model identification. The LP-correlogram generalizes the classical sample Autocorrelation Function (ACF). Applying the acf() R function to each LP-transformed series generates the graphical display of our proposed LP-correlogram plot.

Figure 4 shows the LP-correlogram of S&P stock return data. Panel A shows the absence of linear autocorrelation, which is consistent with the efficient market hypothesis in the finance literature. A prominent auto-correlation pattern for the second LP-transformed series $YS_2(t)$ (top right panel of Figure 4) is the source of nonlinearity. This fact is known as “volatility clustering”, which says that a large price fluctuation is more likely to be followed by large price fluctuations. Furthermore, the slow decay of the autocorrelation of this series can be interpreted as an indication of the long-memory volatility structure.

Figure 4.

LP-correlogram: Sample autocorrelations of LP-transformed time series. The decay rate of the sample autocorrelations of the second LP-transformed series $YS_2(t)$ appears to be much slower than the exponential decay of an ARMA process, implying possible long-memory behavior.

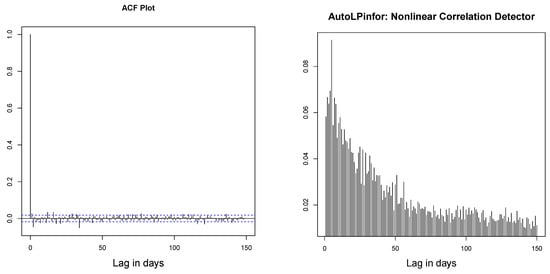

3.3.4. AutoLPinfor: Nonlinear Correlation Measure

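We display the sample AutoLPinfor plot, a diagnostic tool for nonlinear autocorrelation. We define the lag $h$ AutoLPinfor as the squared Frobenius norm of the smooth LP-comoment matrix of lag $h$,

$$\mathrm{AutoLPinfor}(h) = \sum_{j,k} \big| \mathrm{LP}[j,k;Y(t),Y(t+h)] \big|^2,$$

where the sum is over the BIC-selected indices $(j,k)$ for which the LP-comoments are significantly non-zero.

Our robust nonparametric measure can be viewed as capturing the deviation of the copula density from uniformity:

$$\mathrm{AutoLPinfor}(h) = \iint \mathrm{cop}^2(u,v;Y(t),Y(t+h))\, du\, dv - 1,$$

which is closely related to the entropy measure of association proposed in Granger and Lin (1994):

$$\text{Granger–Lin}(h) = \iint \mathrm{cop}(u,v;Y(t),Y(t+h))\, \log \mathrm{cop}(u,v;Y(t),Y(t+h))\, du\, dv.$$

It can be shown using Taylor series expansion that asymptotically:

$$\text{Granger–Lin}(h) \approx \tfrac{1}{2}\, \mathrm{AutoLPinfor}(h).$$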

An excellent discussion of the role of information theory methods for unified time series analysis is given in Parzen (1992) and Brillinger (2004). For an extensive survey of tests of independence for nonlinear processes, see Chapter 7.7 of Terasvirta et al. (2010). AutoLPinfor is a new information theoretic nonlinear autocorrelation measure, which detects generic association and serial dependence present in a time series. Contrast the AutoLPinfor plot for S&P 500 return data shown in Figure 5 with the ACF plot (left panel). This underscores the need for building a nonlinear time series model, which we discuss next.
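The asymptotic link between the Granger–Lin entropy measure and AutoLPinfor mentioned above can be sketched as follows. The shorthand $\delta(u,v) = \mathrm{cop}(u,v) - 1$ and the abbreviation $\mathrm{LP}[j,k]$ for the lag-$h$ LP-comoments are introduced here only for the derivation; the argument assumes that $\delta$ is small, so that third-order terms can be neglected.

```latex
% Expand x log x around x = 1 with x = 1 + \delta:
(1+\delta)\log(1+\delta) = \delta + \tfrac{1}{2}\delta^{2} - \tfrac{1}{6}\delta^{3} + \cdots
% A copula density integrates to one, so the first-order term vanishes:
\iint \delta(u,v)\,du\,dv = 0 .
% Hence, to second order,
\iint \mathrm{cop}\,\log \mathrm{cop}\;du\,dv
  \;\approx\; \tfrac{1}{2}\iint \delta^{2}(u,v)\,du\,dv
  \;=\; \tfrac{1}{2}\Big(\iint \mathrm{cop}^{2}(u,v)\,du\,dv - 1\Big).
% By Parseval's identity for the orthonormal product LP-basis,
\iint \delta^{2}(u,v)\,du\,dv = \sum_{j,k}\big|\mathrm{LP}[j,k]\big|^{2}
  = \mathrm{AutoLPinfor}(h).
```

The factor of one half is therefore the second-order Taylor coefficient of $x \log x$ at $x = 1$.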

Figure 5.

Left: ACF plot of S&P 500 data. Right: AutoLPinfor plot up to lag 150.

3.3.5. Nonparametric Estimation of Blomqvist’s Beta

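Estimate Blomqvist’s $\beta$ (also known as the medial correlation coefficient) of lag $h$ by using the LP-copula estimate in the following equation,

$$\hat{\beta}_{\rm LP}(h) = -1 + 4 \int_0^{1/2}\!\!\int_0^{1/2} \widehat{\mathrm{cop}}(u,v;Y(t),Y(t+h))\, du\, dv.$$

The $\beta$ values $-1$, $0$ and $1$ are interpreted as reverse correlation, independence and perfect correlation, respectively. Note that,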

For S&P 500 return data, we compute the following dependence numbers,

3.3.6. Nonstationarity Diagnosis, LP-Comoment Approach

Viewing the time index $T = 1, \ldots, n$ as the covariate, we propose a nonstationarity diagnosis based on LP-comoments of $Y(t)$ and the time index variable $T$. Our treatment has the ability to detect the time-varying nature of mean, variance, skewness, and so on, represented by various custom-made LP-transformed time series.

For S&P data, we computed the following LP-comoment matrix to investigate the nonstationarity:

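This indicates the presence of slight non-stationarity in the variance or volatility (the second LP-transformed series $YS_2(t)$) and in the kurtosis or tail-thickness (the fourth LP-transformed series $YS_4(t)$). Similar to AutoLPinfor, we propose the following statistic for detecting nonstationarity:

$$\mathrm{LPinfor}[Y(t), T] = \sum_{j,k} \big| \mathrm{LP}[j,k;T,Y(t)] \big|^2.$$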

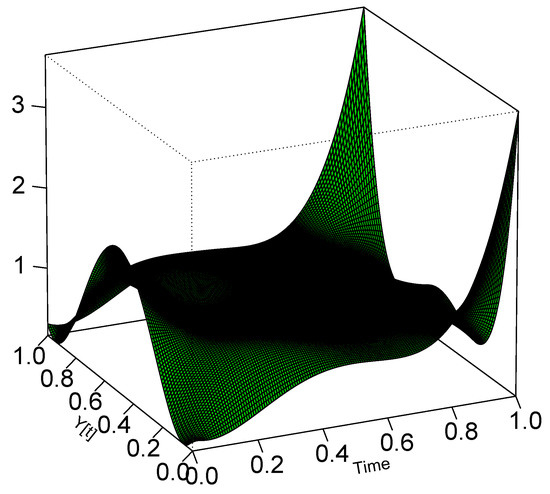

We can also generate the corresponding smooth copula density of $(T, Y(t))$ based on the smooth LP-comoment matrix to visualize the time-varying information as in Figure 6.

Figure 6.

LP copula diagnostic for detecting non-stationarity in S&P 500 return data.

3.4. Local Dependence Modeling

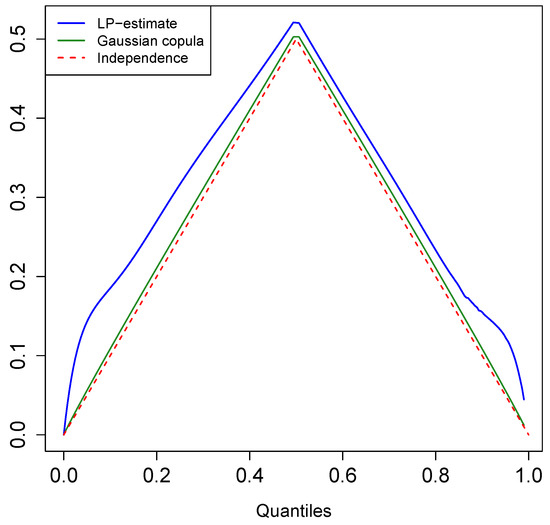

3.4.1. Quantile Correlation Plot and Test for Asymmetry

We display the quantile correlation plot, a copula distribution-based graphical diagnostic to visually examine the asymmetry of dependence. The goal is to get more insight into the nature of tail-correlation.

Motivated by the concept of the lower and upper tail dependence coefficients, we define the quantile correlation function (QCF) in terms of the copula distribution function of $(Y(t), Y(t+h))$, denoted by $\mathrm{Cop}(u,v)$, as:

$$\lambda[u;Y(t),Y(t+h)] = \frac{\mathrm{Cop}(u,u)}{u}\, I(u \le 0.5) + \frac{1 - 2u + \mathrm{Cop}(u,u)}{1-u}\, I(u > 0.5).$$

Our nonparametric estimate of the quantile correlation function is based on the LP-copula density, which we denote as $\hat{\lambda}_{\rm LP}[u;Y(t),Y(t+h)]$. Figure 7 shows the corresponding quantile correlation plot for S&P 500 data. The dotted line represents the QCF under the independence assumption. Deviation from this line helps us to better understand the nature of asymmetry. We compute the QCF of the fitted Gaussian copula:

$$\mathrm{Cop}_G(u,v;S) = \Phi_2\big(\Phi^{-1}(u), \Phi^{-1}(v); S\big),$$

where $\Phi_2(\cdot,\cdot;S)$ is the bivariate normal distribution function and $S$ is the sample covariance matrix. The dark green line in Figure 7 shows the corresponding curve, which is almost identical to the “no dependence” curve and is therefore misleading. The reason is that the Gaussian copula is characterized by linear correlation, while S&P data are highly nonlinear in nature. As the linear auto-correlation of a stock return is almost zero, we have approximately $\mathrm{Cop}_G(u,v;S) \approx uv$. Similar to the Gaussian copula, there are several other parametric copula families, which can give similarly misleading conclusions. This simple illustration reminds us of the pernicious effect of not “looking into the data”.
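To make the quantile correlation idea concrete, here is a small sketch that evaluates a QCF from the empirical copula of lagged mid-ranks. It is not the LP-copula-based estimator of the paper: it assumes the piecewise tail-dependence-style ratio ($\mathrm{Cop}(u,u)/u$ for $u \le 0.5$, and $(1 - 2u + \mathrm{Cop}(u,u))/(1-u)$ otherwise) and a raw empirical copula. The helper names are hypothetical.

```python
def mid_ranks(y):
    """Mid-rank transform (rank - 0.5) / n, assuming no ties in y."""
    n = len(y)
    u = [0.0] * n
    for rank, idx in enumerate(sorted(range(n), key=y.__getitem__)):
        u[idx] = (rank + 0.5) / n
    return u


def lagged_uniform_pairs(y, lag):
    """Pairs (U_t, U_{t+lag}) on the uniform scale."""
    u = mid_ranks(y)
    return u[:len(u) - lag], u[lag:]


def quantile_correlation(u_vals, v_vals, u):
    """Empirical QCF at level u (assumed piecewise definition, see lead-in)."""
    n = len(u_vals)
    cop_uu = sum(1 for a, b in zip(u_vals, v_vals) if a <= u and b <= u) / n
    if u <= 0.5:
        return cop_uu / u
    return (1.0 - 2.0 * u + cop_uu) / (1.0 - u)


def qcf_independence(u):
    """QCF when Cop(u, u) = u * u: equals u below 0.5 and 1 - u above."""
    return u if u <= 0.5 else 1.0 - u


# Reference shapes: perfect positive dependence versus independence.
grid = [(i + 0.5) / 1000 for i in range(1000)]
for level in (0.05, 0.25, 0.75, 0.95):
    print(level,
          round(quantile_correlation(grid, grid, level), 3),
          round(qcf_independence(level), 3))
```

For a real series, `quantile_correlation(*lagged_uniform_pairs(y, 1), u)` traced over a grid of `u` values gives a curve that can be compared with the independence reference; a gap between the two ends of the curve indicates asymmetric tail dependence.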

Figure 7.

Estimated Quantile Correlation Function (QCF). It detects asymmetry in the tail dependence between the lower-left quadrant and upper-right quadrant for S&P 500 return data. The red dotted line denotes the quantile correlation function under independence. The dark green line shows the quantile correlation curve for the fitted Gaussian copula.

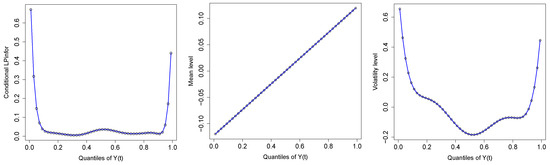

3.4.2. Conditional LPinfor Dependence Measure

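For more transparent and clear insight into the asymmetric nature of the tail dependence, we need to introduce the concept of conditional dependence. In what follows, we propose a conditional LPinfor function $\mathrm{LPinfor}(Y(t+h) \mid Y(t) = Q(u;Y(t)))$, a quantile-based diagnostic for tracking how the dependence of $Y(t+h)$ on $Y(t)$ changes at various quantiles.

To quantify the conditional dependence, we seek to estimate the ratio $f(y;Y(t+h) \mid Y(t)) / f(y;Y(t+h))$. A brute force approach estimates separately the conditional distribution and the unconditional distribution and takes the ratio to estimate this arbitrary function. An alternative elegant way is to recognize that by “going to the quantile domain” (i.e., $Y(t+h) = Q(v;Y(t+h))$ and $Y(t) = Q(u;Y(t))$), we can interpret the ratio as “slices” of the copula density, which we call the conditional comparison density:

$$d(v;h,u) = \mathrm{cop}(u,v;Y(t),Y(t+h)) = 1 + \sum_{k} \mathrm{LP}[k;h,u]\, S_k(v;Y(t+h)),$$

where the LP-Fourier orthogonal coefficients are given by:

$$\mathrm{LP}[k;h,u] = \sum_{j} \mathrm{LP}[j,k;Y(t),Y(t+h)]\, S_j(u;Y(t)).$$

Define the conditional LPinfor as:

$$\mathrm{LPinfor}(Y(t+h) \mid Y(t) = Q(u;Y(t))) = \sum_{k} \big| \mathrm{LP}[k;h,u] \big|^2.$$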

We use this theory to investigate the conditional dependency structure of S&P 500 return data. Figure 8a traces out the complete path of the estimated function, which indicates the high asymmetric tail correlation. These conditional correlation curves can be viewed as a “local” dependence measure. An excellent discussion on this topic is given in Section 3.3.8 of Terasvirta et al. (2010).
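A sketch of how a conditional LPinfor curve could be computed from a smooth LP-comoment matrix is given below. It assumes continuous marginals, so that the score functions on the unit interval are the orthonormal Legendre polynomials, and it assumes that the conditional coefficients are obtained by evaluating the row index of the comoment matrix at the conditioning quantile $u$. The comoment values in the example are hypothetical and are not the S&P 500 estimates.

```python
import math


def legendre(j, u):
    """Orthonormal shifted Legendre polynomials on [0, 1], j = 1..4."""
    polys = {
        1: math.sqrt(12.0) * (u - 0.5),
        2: math.sqrt(5.0) * (6 * u**2 - 6 * u + 1),
        3: math.sqrt(7.0) * (20 * u**3 - 30 * u**2 + 12 * u - 1),
        4: 3.0 * (70 * u**4 - 140 * u**3 + 90 * u**2 - 20 * u + 1),
    }
    return polys[j]


def conditional_lp(lp, u):
    """Coefficients LP[k; u] = sum_j LP[j, k] * Leg_j(u), for k = 1..K."""
    rows, cols = len(lp), len(lp[0])
    return [sum(lp[j][k] * legendre(j + 1, u) for j in range(rows))
            for k in range(cols)]


def conditional_lpinfor(lp, u):
    """Sum of squared conditional LP coefficients at conditioning quantile u."""
    return sum(c * c for c in conditional_lp(lp, u))


# Hypothetical smooth comoment matrix: LP[2,2] = 0.3 and LP[1,2] = -0.1.
lp = [[0.0] * 4 for _ in range(4)]
lp[1][1] = 0.3
lp[0][1] = -0.1
for u in (0.01, 0.25, 0.5, 0.75, 0.99):
    print(u, round(conditional_lpinfor(lp, u), 4))
```

In this toy matrix the $[2,2]$ entry alone would give a symmetric U-shaped curve (dependence concentrated in both tails), while the negative $[1,2]$ entry tilts it so that the lower tail carries more dependence than the upper tail.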

Figure 8.

(a) The conditional LPinfor curve is shown for the pair $(Y(t), Y(t+1))$. The asymmetric dependence in the tails is clearly shown, and almost nothing is going on in between. (b,c) Display of how the mean and volatility levels of the conditional distribution change with respect to the unconditional marginal distribution at different quantiles.

At this point, we can legitimately ask: What aspects of the conditional distributions are changing most? Figure 8b,c displays only the first two conditional LP coefficients, $\mathrm{LP}[1;h,u]$ and $\mathrm{LP}[2;h,u]$, for the S&P 500 return data for the pair $(Y(t), Y(t+1))$. These two coefficients represent how the mean and the volatility levels of the conditional density change with respect to the unconditional reference distribution. The typical asymmetric shape of conditional volatility shown in the right panel of Figure 8b,c indicates what is known as the “leverage effect”: future stock volatility is negatively correlated with past stock return, i.e., stock volatility tends to increase when stock prices drop.

3.5. Non-Crossing Conditional Quantile Modeling

We display the nonparametrically-estimated conditional quantile curves of $Y(t+h)$ given $Y(t)$. Our new modeling approach uses the estimated conditional comparison density to simulate from the conditional distribution of $Y(t+h)$ given $Y(t)$ by utilizing the given sample $Y(1), \ldots, Y(T)$ via an accept-reject rule to arrive at the “smooth” nonparametric model for the conditional distribution. See Parzen and Mukhopadhyay (2013b) for details about the method. Our proposed algorithm generates “large” additional simulated samples from the conditional distribution, which allows us to accurately estimate the conditional quantiles (especially the extreme quantiles). By construction, our method is guaranteed to produce non-crossing quantile curves, thus tackling a challenging practical problem.
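The accept-reject step can be sketched generically. The code below is an illustration under simplifying assumptions, not the algorithm of Parzen and Mukhopadhyay (2013b): it resamples the observed values with acceptance probability proportional to a given conditional comparison density evaluated at their mid-ranks, and then reads quantiles off the simulated sample. The linear density used in the example is hypothetical.

```python
import math
import random


def accept_reject(sample, u_sample, density, d_max, size, rng):
    """Draw `size` values from `sample`, reweighted by density(u).

    sample   : observed values (draws from the unconditional distribution)
    u_sample : their mid-distribution transforms in (0, 1)
    density  : conditional comparison density d(v), bounded above by d_max
    """
    n = len(sample)
    out = []
    while len(out) < size:
        i = rng.randrange(n)
        if rng.random() * d_max <= density(u_sample[i]):
            out.append(sample[i])
    return out


def empirical_quantile(values, p):
    """Simple order-statistic quantile of a simulated sample."""
    s = sorted(values)
    return s[min(len(s) - 1, int(p * len(s)))]


def tilted_density(v):
    """Hypothetical comparison density 1 + 0.5 * Leg_1(v); positive on [0, 1]."""
    return 1.0 + 0.5 * math.sqrt(12.0) * (v - 0.5)


rng = random.Random(1)
n = 2000
u_sample = [(i + 0.5) / n for i in range(n)]
sample = list(u_sample)  # toy marginal: uniform on (0, 1)
draws = accept_reject(sample, u_sample, tilted_density,
                      d_max=1.0 + 0.5 * math.sqrt(3.0), size=10000, rng=rng)
quantiles = [empirical_quantile(draws, p) for p in (0.01, 0.5, 0.99)]
print(round(sum(draws) / len(draws), 3), [round(q, 3) for q in quantiles])
```

Since all quantile levels are read from one simulated sample per conditioning value, the estimated quantiles are automatically ordered in the level, which is the sense in which curves obtained this way cannot cross.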

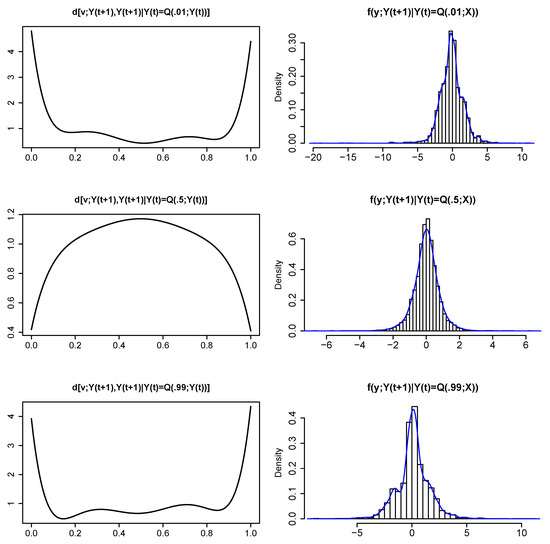

For S&P 500 data, we first nonparametrically estimate the conditional comparison densities shown in the left panel of Figure 9 for and , which can be thought of as a “weighting function” for an unconditional marginal distribution to produce the conditional distributions:

Figure 9.

Each row displays the estimated conditional comparison density and the corresponding conditional distribution for .

This density estimation technique belongs to the skew-G modeling class (Mukhopadhyay 2016). We simulate n = 10,000 samples from by accept-reject sampling from , . The histograms and the smooth conditional densities are shown in the right panel of Figure 9. It shows some typical shapes in terms of long-tailedness.

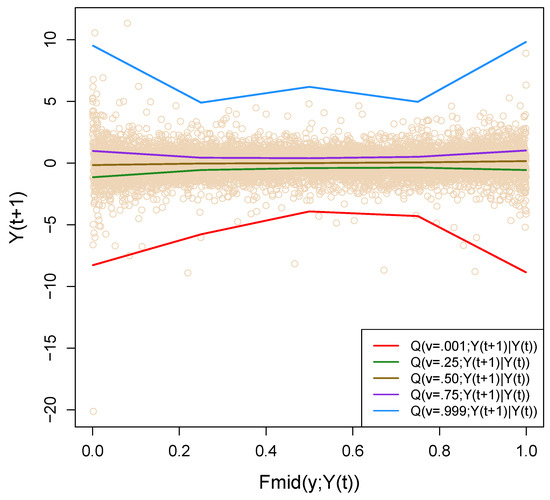

Next, we proceed to estimate the nonparametric conditional quantiles , for , from the simulated data. Figure 10 shows the estimated conditional quantiles. The extreme conditional quantiles have a special significance in the context of financial time series. They are sometimes popularly known as Conditional Value at Risk (CoVaR), currently the most popular quantitative risk management tool (see Adrian and Brunnermeier (2011); Engle and Manganelli (2004)). The red solid line in Figure 10 is , which is known as the 0.1% CoVaR function for a one-day holding period for S&P 500 daily return data. Although the upper conditional quantile curve (blue solid line) shows symmetric behavior around , the lower quantile has a prominent asymmetric shape. These conditional quantiles give the ultimate description of the auto-regressive dependence of S&P 500 return movement in the tail region.

Figure 10.

The figure shows estimated non-parametric conditional quantile curves for S&P 500 return data. The red solid line, which represents the estimated 0.001 conditional quantile, is popularly known as the one-day 0.1% Conditional Value at Risk measure (CoVaR).

3.6. Nonlinear Spectrum Analysis

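Here, we extend the concept of spectral density to nonlinear processes. We display the LPSpectrum: autoregressive (AR) spectral density estimates of the LP-transformed series $YS_j(t)$. The spectral density for each LP-transformed series is defined as:

$$f(\omega;YS_j) = \sum_{h=-\infty}^{\infty} \mathrm{Cov}[YS_j(t), YS_j(t+h)]\, e^{-2\pi i \omega h}, \qquad -1/2 < \omega < 1/2.$$

We separately fit a univariate AR model to each component of the LP-transformed multivariate series and use the BIC order selection criterion to select the “best” parsimonious parametrization using the Burg method.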

Finally, we use the estimated model coefficients to produce the “smooth” estimate of the spectral density function (see Equation (2)). The copula spectral density is defined as:

To estimate the copula spectral density, we use the LP-comoment-based nonparametric copula density estimate. Note that both the serial copula (3.12) and the corresponding spectral density (3.25) capture the same amount of information for the serial dependence of . For that reason, we recommend computing AutoLPinfor as a general dependence measure for non-Gaussian nonlinear processes.
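The “smooth” AR spectral estimate referred to above (Equation (2)) is straightforward to evaluate once the AR coefficients and innovation variance are available. The sketch below assumes the standard AR spectral density formula with frequency $\omega$ in cycles per observation; the function name and the AR(1) example are hypothetical, and the coefficient estimation itself (e.g., by the Burg method) is not shown.

```python
import cmath
import math


def ar_spectral_density(coeffs, sigma2, omega):
    """AR spectral density sigma^2 / |1 - sum_k a_k exp(2 pi i omega k)|^2.

    coeffs : AR coefficients a_1, ..., a_m
    sigma2 : innovation variance
    omega  : frequency in cycles per observation, -1/2 < omega < 1/2
    """
    transfer = 1.0 - sum(a * cmath.exp(2j * math.pi * omega * (k + 1))
                         for k, a in enumerate(coeffs))
    return sigma2 / abs(transfer) ** 2


# White noise has a flat spectrum; a positive AR(1) coefficient
# concentrates power near frequency zero.
frequencies = [i / 20 for i in range(11)]  # 0.0, 0.05, ..., 0.5
flat = [ar_spectral_density([], 1.0, w) for w in frequencies]
ar1 = [ar_spectral_density([0.5], 1.0, w) for w in frequencies]
print([round(v, 3) for v in ar1])
```

Applying the same function to the coefficients fitted for each LP-transformed series gives one spectral curve per series, which is how a display such as Figure 11 could be assembled.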

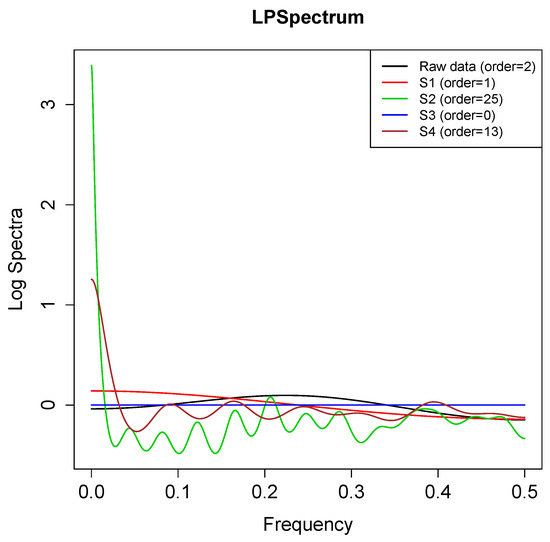

The application of our LPSpectral tool on S&P 500 return data is shown in Figure 11. A few interesting observations are: (i) the conventional spectral density (black solid line) provides no insight into the (complex) serial dependency present in the data; (ii) the nonlinearity in the series is captured by the interesting shapes of the spectra of our specially-designed time series $YS_2(t)$ and $YS_4(t)$, which classical (linear) correlogram-based spectra cannot account for; (iii) the shapes of the spectra of $Y(t)$ and the rank-transformed time series $YS_1(t)$ look very similar; and (iv) a pronounced singularity near zero of the spectrum of $YS_2(t)$ hints at some kind of “long-memory” behavior. This phenomenon is also known as regular variation representation at frequency $\omega = 0$ (Granger and Joyeux 1980).

Figure 11.

LPSpectrum: AR spectral density estimate for S&P 500 return data. Order selected by the BIC method. This diagnostic tool provides evidence of hidden periodicities in non-Gaussian nonlinear time series.

A quick diagnostic measure for screening significant spectrums can be computed via the information number . The LPSpectrum methodology is highly robust and, thus, can tackle the heavy-tailed S&P data quite successfully.

3.7. Nonparametric Model Specification

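The ultimate goal of empirical time series analysis is nonparametric model identification. To model the univariate stationary nonlinear process, we specify the multiple autoregressive model based on $\mathrm{Vec}(YS)(t) = [YS_1(t), \ldots, YS_k(t)]^T$ of the form:

$$\mathrm{Vec}(YS)(t) = \sum_{j=1}^{m} A(j;m)\, \mathrm{Vec}(YS)(t-j) + \epsilon(t),$$

where $\epsilon(t)$ is multivariate mean zero Gaussian white noise with covariance $\Sigma_m$. This system of equations jointly describes the dynamics of the nonlinear process and how it evolves over time. We use the BIC criterion to select the model order $m$, which minimizes:

$$\mathrm{BIC}(m) = \log |\hat{\Sigma}_m| + \frac{m k^2 \log T}{T}.$$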

We carry out this step for our S&P 500 return data. We estimate our multiple AR model based on $\mathrm{Vec}(YS)(t) = [YS_1(t), YS_2(t), YS_4(t)]^T$. We discard $YS_3(t)$ due to its flat spectrum (see Figure 11). BIC selects order eight as the “best” order. Although the complete description of the estimated model is clearly cumbersome, we provide below the approximate structure by selecting a few large coefficients from the actual matrix equation. The goal is to interpret the coefficients (statistical parameters) of the estimated model and relate them to economic theory (scientific parameters/theory). This multiple AR LP-model (LPVAR) is given by:

and the residual covariance matrix is:

The autoregressive model of $YS_2(t)$ can be considered as a robust stock return volatility model (LPVolatility modeling), which is less affected by unusually large extreme events. The model for $YS_2(t)$ automatically discovers many known facts: (a) the sign of the coefficient linking volatility and return is negative, confirming the “leverage effect”; (b) $YS_2(t)$ is positively autocorrelated, which is known as volatility clustering; (c) the positive interaction with lagged $YS_4(t)$ accounts for the “excess kurtosis”.

4. Conclusions

This article provides a pragmatic and comprehensive framework for nonlinear time series modeling that is easier to use, more versatile and has a strong theoretical foundation based on the recently-developed theory of unified algorithms of data science via LP modeling (Mukhopadhyay 2016, 2017; Mukhopadhyay and Fletcher 2018; Mukhopadhyay and Parzen 2014; Parzen and Mukhopadhyay 2012, 2013a, 2013b). The summary and broader implications of the proposed research are:

- From the theoretical standpoint, the unique aspect of our proposal lies in its ability to simultaneously embrace and employ the spectral domain, time domain, quantile domain and information domain analyses for enhanced insights, which to the best of our knowledge has not appeared in the nonlinear time series literature before.

- From a practical angle, the novelty of our technique is that it permits us to use the techniques from linear Gaussian time series to create non-Gaussian nonlinear time series models with highly interpretable parameters. This aspect makes LPTime computationally extremely attractive for data scientists, as they can now borrow all the standard time series analysis machinery from R libraries for implementation purposes.

- From the pedagogical side, we believe that these concepts and methods can easily be integrated into the standard time series analysis course to modernize the current curriculum so that students can handle complex time series modeling problems (McNeil et al. 2010) using the tools with which they are already familiar.

The main thrust of this article is to describe and interpret the steps of LPTime technology to create a realistic general-purpose algorithm for empirical time series modeling. In addition, many new theoretical results and diagnostic measures were presented, which laid the foundation for the algorithmic implementation of LPTime. We showed how LPTime can systematically explore the data to discover empirical facts hidden in time series. For example, LPTime empirical modeling of S&P 500 return data reproduces the ‘stylized facts’—(a) heavy tails; (b) non-Gaussian; (c) nonlinear serial dependence; (d) tail correlation; (e) asymmetric dependence; (f) volatility clustering; (g) long-memory volatility structure; (h) efficient market hypothesis; (i) leverage effect; (j) excess kurtosis—in a coherent manner under a single general unified framework. We have emphasized how the statistical parameters of our model can be interpreted in light of established economic theory.

We have recently applied this theory to a large-scale eye-movement pattern discovery problem; the resulting algorithm was the winner (among 82 competing algorithms) of the 2014 IEEE International Biometric Eye Movements Verification and Identification Competition (Mukhopadhyay and Nandi 2017). The proposed algorithm is implemented in the R package LPTime (Mukhopadhyay and Nandi 2015), which is available on CRAN.

We conclude with some general references: a few popular articles: Brillinger (1977, 2004); Engle (1982); Granger and Lin (1994); Granger (1993, 2003); Parzen (1967, 1979); Salmon (2012); Tukey (1980); books: Guo et al. (2017); Terasvirta et al. (2010); Tsay (2010); Woodward et al. (2011); and review articles: Granger (1998); Hendry (2011).

Author Contributions

Conceptualization, S.M. and E.P.; Methodology, S.M. and E.P.; Formal Analysis, S.M.; Computation, S.M.; Writing-Review & Editing, S.M.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Adrian, Tobias, and Markus K. Brunnermeier. 2011. CoVaR. Technical Report. Cambridge: National Bureau of Economic Research.

- Brillinger, David R. 1977. The identification of a particular nonlinear time series system. Biometrika 64: 509–15.

- Brillinger, David R. 2004. Some data analyses using mutual information. Brazilian Journal of Probability and Statistics 18: 163–83.

- Engle, Robert F. 1982. Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica: Journal of the Econometric Society 50: 987–1007.

- Engle, Robert F., and Simone Manganelli. 2004. CAViaR: Conditional autoregressive value at risk by regression quantiles. Journal of Business & Economic Statistics 22: 367–81.

- Granger, Clive, and Jin-Lung Lin. 1994. Using the mutual information coefficient to identify lags in nonlinear models. Journal of Time Series Analysis 15: 371–84.

- Granger, Clive W. J. 1993. Strategies for modelling nonlinear time-series relationships. Economic Record 69: 233–38.

- Granger, Clive W. J. 1998. Overview of nonlinear time series specification in economics. Paper presented at the NSF Symposium on Nonlinear Time Series Models, University of California, Berkeley, CA, USA, 22 May 1998.

- Granger, Clive W. J. 2003. Time series concepts for conditional distributions. Oxford Bulletin of Economics and Statistics 65: 689–701.

- Granger, Clive W. J., and Roselyne Joyeux. 1980. An introduction to long-memory time series models and fractional differencing. Journal of Time Series Analysis 1: 15–29.

- Guo, Xin, Howard Shek, Tze Leung Lai, and Samuel Po-Shing Wong. 2017. Quantitative Trading: Algorithms, Analytics, Data, Models, Optimization. New York: Chapman and Hall/CRC.

- Hendry, David F. 2011. Empirical economic model discovery and theory evaluation. Rationality, Markets and Morals 2: 115–45.

- McNeil, Alexander J., Rüdiger Frey, and Paul Embrechts. 2010. Quantitative Risk Management: Concepts, Techniques, and Tools. Princeton: Princeton University Press.

- Mukhopadhyay, Subhadeep. 2016. Large scale signal detection: A unifying view. Biometrics 72: 325–34.

- Mukhopadhyay, Subhadeep. 2017. Large-scale mode identification and data-driven sciences. Electronic Journal of Statistics 11: 215–40.

- Mukhopadhyay, Subhadeep, and Douglas Fletcher. 2018. Generalized Empirical Bayes Modeling via Frequentist Goodness-of-Fit. Nature Scientific Reports 8: 1–15.

- Mukhopadhyay, Subhadeep, and Shinjini Nandi. 2015. LPTime: LP Nonparametric Approach to Non-Gaussian Non-Linear Time Series Modelling. CRAN, R Package Version 1.0-2. Ithaca: Cornell University Library.

- Mukhopadhyay, Subhadeep, and Shinjini Nandi. 2017. LPiTrack: Eye movement pattern recognition algorithm and application to biometric identification. Machine Learning.

- Mukhopadhyay, Subhadeep, and Emanuel Parzen. 2014. LP approach to statistical modeling. arXiv, arXiv:1405.2601.

- Parzen, Emanuel. 1967. On empirical multiple time series analysis. In Statistics, Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability. Berkeley: University of California Press, vol. 1, pp. 305–40.

- Parzen, Emanuel. 1979. Nonparametric statistical data modeling (with discussion). Journal of the American Statistical Association 74: 105–31.

- Parzen, Emanuel. 1992. Time series, statistics, and information. In New Directions in Time Series Analysis. Edited by Emanuel Parzen, Murad Taqqu, David R. Brillinger, Peter Caines, John Geweke and Murray Rosenblatt. New York: Springer Verlag, pp. 265–86.

- Parzen, Emanuel. 1997. Comparison distributions and quantile limit theorems. Paper presented at the International Conference on Asymptotic Methods in Probability and Statistics, Carleton University, Ottawa, ON, Canada, July 8–13.

- Parzen, Emanuel, and Subhadeep Mukhopadhyay. 2012. Modeling, Dependence, Classification, United Statistical Science, Many Cultures. arXiv, arXiv:1204.4699.

- Parzen, Emanuel, and Subhadeep Mukhopadhyay. 2013a. United Statistical Algorithms, LP comoment, Copula Density, Nonparametric Modeling. Paper presented at the 59th ISI World Statistics Congress (WSC) of the International Statistical Institute, Hong Kong, China, August 25–30.

- Parzen, Emanuel, and Subhadeep Mukhopadhyay. 2013b. United Statistical Algorithms, Small and Big Data, Future of Statisticians. arXiv, arXiv:1308.0641.

- Salmon, Felix. 2012. The formula that killed Wall Street. Significance 9: 16–20.

- Terasvirta, Timo, Dag Tjøstheim, and Clive W. J. Granger. 2010. Modelling Nonlinear Economic Time Series. Kettering: OUP Catalogue.

- Tsay, Ruey S. 2010. Analysis of Financial Time Series. Hoboken: Wiley.

- Tukey, John. 1980. Can we predict where “time series” should go next. In Directions in Time Series. Hayward: IMS, pp. 1–31.

- Woodward, Wayne A., Henry L. Gray, and Alan C. Elliott. 2011. Applied Time Series Analysis. Boca Raton: CRC Press.

¹ The LP nomenclature: In nonparametric statistics, the letter L plays a special role to denote robust methods based on ranks and order statistics such as quantile-domain methods. With the same motivation, we use the letter L. On the other hand, P simply stands for Polynomials. Our custom-constructed basis functions are orthonormal polynomials of the mid-rank transform instead of raw y-values; for more details see Mukhopadhyay and Parzen (2014).

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).