Abstract

We address the problem of how COVID-19 deaths observed in an oncology clinical trial can be consistently taken into account in typical survival estimates. We refer to oncological patients since there is empirical evidence of strong correlation between COVID-19 and cancer deaths, which implies that COVID-19 deaths cannot be treated simply as non-informative censoring, a property usually required by the classical survival estimators. We consider the problem in the framework of the widely used Kaplan–Meier (KM) estimator. Through a counterfactual approach, an algorithmic method is developed that allows COVID-19 deaths to be included in the observed data by mean-imputation. The procedure can be seen as belonging to the class of Expectation-Maximization (EM) algorithms and will be referred to as the Covid-Death Mean-Imputation (CoDMI) algorithm. We discuss the assumptions underlying CoDMI and the convergence issue. The algorithm provides a completed lifetime data set, where each Covid-death time is replaced by a point estimate of the corresponding virtual lifetime. This completed data set is naturally equipped with the corresponding KM survival function estimate, and all available statistical tools can be applied to these data. However, mean-imputation requires an increased variance of the estimates. We therefore propose a natural extension of the classical Greenwood’s formula, thus obtaining expanded confidence intervals for the survival function estimate. To illustrate how the algorithm works, CoDMI is applied to real medical data extended by the addition of artificial Covid-death observations. The results are compared with the estimates provided by the two naïve approaches which count COVID-19 deaths as censoring or as deaths by the disease under study. In order to evaluate the predictive performance of CoDMI, an extensive simulation study is carried out. The results indicate that in the simulated scenarios CoDMI is roughly unbiased and outperforms the estimates obtained by the naïve approaches. A user-friendly version of CoDMI programmed in R is freely available.

1. Introduction

The problem of defining a common and appropriate method in survival analysis for handling the dropouts due to coronavirus disease 2019 (COVID-19) deaths of patients participating in oncology clinical trials has recently been stressed [1,2]. In oncology trials, all-cause deaths are often counted as events for death-related endpoints, e.g., overall survival. However, as has been pointed out [2], counting a COVID-19 fatality as a death-related endpoint requires a complex redefinition of the estimand, considering a composite strategy for addressing the so-called intercurrent events [3], such as “discontinuation from treatment due to COVID-19” or “delay of scheduled intervention”. The problem is also exacerbated by the difficulty of homogeneously determining whether a death is entirely attributable to COVID-19. In this paper, we address a simplified version of this problem, assuming that COVID-19-related deaths are homogeneously identified and are the only intercurrent events to be considered. In this framework, we tackle the problem of how data in an oncology trial having overall survival as the endpoint can be dealt with when deaths due to COVID-19 are present in the sample.

COVID-19 deaths should not be treated as standard censored data, because usual censoring should be considered (at least in principle) non-informative. Informative censoring, instead, occurs when participants are lost to follow-up for reasons related to the study, as seems to be the case with COVID-19 deaths of oncological patients. Direct data on how COVID-19 affects survival outcomes in patients with active malignancy or a history of malignancy are immature. However, early evidence identified increased risks of COVID-19 mortality in patients with cancer, especially in those who have progressive disease [4]. Patients with cancer and COVID-19 had an increased death rate compared with the unselected COVID-19 patient population (13% versus 1.4%) [4,5]. Based on these findings, in survival analysis dropouts due to COVID-19 deaths should be considered as cases of informative censoring. Another way used in the survival analysis literature to represent this dependence is to view cancer deaths and COVID-19 deaths as competing events, see, e.g., [6] Ch. 8. In this paper, we propose an algorithmic method to include COVID-19 deaths of oncological patients in typical survival data, focusing on the classical Kaplan–Meier (KM) product-limit survival estimator. Our method is in the spirit of the Expectation-Maximization (EM) algorithms [7] used for handling missing or fake data in statistical analysis. In this sense, the method could also be used in applications different from clinical trials, e.g., reliability analysis. Correction of actuarial life tables is another possible application.

An overview of methods for dealing with missing data in clinical trials is provided by DeSouza, Legedza and Sankoh [8]. See also Shih [9]. In Shen and Chen [10] the problem of doubly censored data is considered and a maximum likelihood estimator is obtained via EM algorithms that treat the survival times of left-censored observations as missing. Concerning situations with informative censoring, where there is stochastic dependence between the time to event and the time to censoring (which is our case if “censoring” is a COVID-19 death), a distinction is proposed by Willems, Schat and van Noorden [11] between cases where the stochastic dependence is direct and cases where it acts through covariates. In that paper [11], the latter case is considered and an “inverse probability censoring weighting” approach is proposed for handling this kind of censoring. Since at this stage it is difficult to model cancer deaths and COVID-19 deaths through common covariates, in this paper we consider the case of direct dependence. We do not consider a survival regression model based on specified covariates, and limit the analysis, as has been said, to the basic Kaplan–Meier survival model, which is assumed to be applied, as usual, to a sufficiently homogeneous cohort of oncological patients. In this framework, we propose a so-called mean-imputation method for COVID-19 deaths using a purpose-built algorithm, referred to as the Covid-Death Mean-Imputation (CoDMI) algorithm. A user-friendly version of this algorithm programmed in R is freely available. The corresponding source code can be downloaded from the website: https://github.com/alef-innovation/codmi (accessed on 6 July 2021).

An alternative approach to survival analysis when COVID-19 deaths are present in an oncology clinical trial in addition to cancer deaths could be based on the cumulative incidence functions, which estimate the marginal probability for each competing risk. This would lead to dealing with subdistributions and would require appropriate statistical tests to be used, see, e.g., [12]. Our algorithmic approach, instead, acts directly on the data, producing an adjustment that virtually eliminates the presence of the competing risk, thus allowing the use of standard statistical tools. This comes at the price of accepting some simplifications and specific assumptions.

The basic idea of the CoDMI algorithm is of a counterfactual nature. The KM model provides an estimate of the probability distribution of surviving until a chosen point on the time axis for any patient in the sample. For each patient who is observed to die of COVID-19 at a given time, we derive from this distribution the expected lifetime beyond that time, thus obtaining the “no-Covid” expected lifetime for each of these patients. Each observed Covid-death time is then replaced by the corresponding virtual lifetime (this is the mean-imputation) and the KM estimation is repeated on the original data completed in this way, providing a new estimate of the survival distribution. This procedure is iterated until the change between two successive estimates is considered immaterial (according to a specified criterion).

It is pointed out by Shih [9] that “The attraction of imputation is that once the missing data are filled-in (imputed), all the statistical tools available for the complete data may be applied”. Although in our case we are not dealing with missing data but with partially observed data, this attractive property of mean-imputation still holds true. It should be noticed, however, that in general, treating an estimated value—even an unbiased one—as an observed value should require some increase in variance. In particular, the confidence limits of KM estimates on data including imputations should be appropriately enlarged. We propose an extension of the classical Greenwood’s formula providing this correction.

The paper is organized as follows. In Section 2, the notations and the basic structure of the KM survival estimator are provided and the related problem of computing expected lifetimes is illustrated. The representation of Covid-death cases in the sample is described. In Section 3, the CoDMI algorithm is introduced and the details of the iteration procedure are provided. The convergence issue is discussed and the underlying assumptions of the algorithm are considered, taking into account some subtleties required by the non-parametric nature of the KM estimator. A possible adjustment for censoring of the algorithm is presented and a correction of Greenwood’s formula is derived for taking into account the estimation error in the imputed point estimates. Applications of CoDMI to real medical data are provided in Section 4. Two oncological survival data sets which are well referenced in the literature are completed by artificial Covid-death observations and the survival curves estimated by CoDMI are compared with the no-Covid KM estimates and with the two naïve KM estimates obtained by considering COVID-19 deaths as censorings or as deaths of disease. The effect of the final adjustment for censoring is also illustrated. In Section 5, an extensive simulation study is presented to evaluate the predictive performance of CoDMI. We discuss the details of the simulation procedure and provide tables illustrating the results. Some conclusions and comments are given in Section 6. In Appendix A a derivation of the extended Greenwood’s formula is provided.

2. Notation and Assumptions on Covid Deaths in the Sample

2.1. Typical Clinical Trial Data and the Kaplan–Meier Estimator

We consider a study group of n oncological patients who received a specified treatment and are followed up for a fixed calendar time interval. The response for each patient is the survival time $T_0$, which is computed starting from the date of enrollment in the study, date 0.

Remark 1.

This is in line with the standard actuarial notation, where $T_x$ is used to denote the survival time of a subject of age x. Our patients actually have “age 0” (in the study) at the time they are enrolled.

Typically, the observations include censored data, that is, survival times known only to exceed the reported value. Formally, for a given patient there is a censoring at time point $t$ if we only know that $T_0 > t$ for this patient. If $t_{\max}$ denotes the last observed time point in the study, i.e., $t_{\max}$ corresponds to the current date or the end of the study, the case of a censored time $t < t_{\max}$ corresponds to a patient lost to follow-up. To take into account censoring, the observations can be represented in the form:

$$z = \{(t_i, d_i),\ i = 1, \dots, n\},$$

where $t_i$ is the observed survival time of patient $i$ and $d_i$ is a “status” indicator at $t_i$, which is equal to 1 if death of disease under study (DoD) is observed and is equal to 0 if there is a censoring (Cen) at that time. We assume that the group of patients provides a homogeneous sample, that is, all the observations come from the same probability distribution for $T_0$, and our aim is to estimate the cumulative probability function $F(t) := P(T_0 \le t)$, or the related survival function:

$$S(t) := 1 - F(t) = P(T_0 > t).$$

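The estimation of $S(t)$ can be realized non-parametrically by the well-known Kaplan–Meier product-limit estimator [13]. If we denote by:

$$(t_{(1)}, d_{(1)}),\ (t_{(2)}, d_{(2)}),\ \dots,\ (t_{(n)}, d_{(n)})$$

the observations ordered by increasing value of $t$ (with $t_{(1)} \le t_{(2)} \le \dots \le t_{(n)}$), the KM estimator is written as:

$$\hat S(t) = \prod_{i:\, t_{(i)} \le t} \left(1 - \frac{d_{(i)}}{n_i}\right), \qquad (1)$$

where $n_i$ is the number of subjects at risk at (immediately before) time $t_{(i)}$, and the ratio $d_{(i)}/n_i$ is the hazard rate at time $t_{(i)}$. Therefore $\hat S(t)$ is a (right-continuous) step function with steps at each time a DoD event occurs.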
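The product-limit computation can be illustrated by a short Python sketch. This is only a minimal illustration, not the R implementation mentioned in the Introduction: the function name `kaplan_meier` and the toy data are hypothetical, and the usual convention that deaths precede censorings at tied time points is assumed.

```python
from collections import Counter


def kaplan_meier(times, status):
    """Return [(t, S(t))] at each distinct time with at least one DoD event.

    times  : observed survival times
    status : 1 = death of disease (DoD), 0 = censoring (Cen)
    """
    deaths = Counter(t for t, d in zip(times, status) if d == 1)
    leaving = Counter(times)      # subjects leaving the risk set at each time
    at_risk = len(times)          # subjects at risk just before the first time
    surv = 1.0
    curve = []
    for t in sorted(leaving):
        if deaths[t] > 0:
            # product-limit update: multiply by (1 - hazard rate at t)
            surv *= 1.0 - deaths[t] / at_risk
            curve.append((t, surv))
        # everyone observed at t (DoD or Cen) leaves the risk set afterwards
        at_risk -= leaving[t]
    return curve


if __name__ == "__main__":
    # toy data: a censoring at time 2 only shrinks the risk set
    print(kaplan_meier([1, 2, 3, 4], [1, 0, 1, 1]))
    # [(1, 0.75), (3, 0.375), (4, 0.0)]
```

In the toy data the censored observation at time 2 modifies only the denominator of the next hazard rate, so the estimate drops from 0.75 to 0.375 at time 3 rather than to 0.5.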

Remark 2.

(i) If there are ties in the sample, the ordering can always be unambiguously defined by adopting the appropriate conventions. We refrain here from describing these conventions, already considered in the original paper [13] and extensively discussed in the subsequent literature.

(ii) In general, the event of interest (in our case DoD) acts on the ratio $d_{(i)}/n_i$ in the estimator (1) by modifying both the numerator and the denominator. The event not of interest (Cen) only acts on the denominator. This follows from the assumption that a Cen corresponds to a non-informative censoring.

It is assumed that the censored observations do not contribute additional information to the estimation, which is the case if censoring is independent of the survival process. If the time points $t_{(i)}$ are given, it was already shown in the original paper [13] that (1) is a maximum likelihood estimator. Obviously $t_{\max} = t_{(n)}$, the last time point in the observed sequence. For our purposes, it is important to distinguish two cases, depending on whether at $t_{\max}$ there is a DoD or a Cen.

2.2. The Case of Complete Death-Observations

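If $d_{(n)} = 1$, i.e., $t_{\max}$ relates to a DoD event, and if $t \ge t_{\max}$, then one has $\hat S(t) = 0$, which means that the data allow us to estimate the entire probability distribution of $T_0$. Let us refer to this case as the complete death-observations case or, briefly, the complete case. In this situation, we can compute the estimated expected future lifetime for a patient who is alive at time $\tau < t_{\max}$. Let us denote the conditional lifetime, given $T_0 > \tau$, as:

$$T_\tau := (T_0 - \tau \mid T_0 > \tau).$$

Then the expected future lifetime (the life expectancy) beyond $\tau$ is:

$$e_\tau := E(T_\tau) = \frac{1}{\hat S(\tau)} \int_\tau^{t_{\max}} \hat S(t)\, dt. \qquad (2)$$

Since $\hat S(t)$ is a step function and the jump $p_i := \hat S(t_{(i)}^-) - \hat S(t_{(i)})$ at time $t_{(i)}$ with $d_{(i)} = 1$ equals the probability to die of disease at this time point, (2) is equivalent to the average taken on the truncated distribution of $T_0$:

$$e_\tau = \frac{\sum_{i:\, t_{(i)} > \tau} (t_{(i)} - \tau)\, p_i}{\sum_{i:\, t_{(i)} > \tau} p_i}, \qquad (3)$$

where $p_i = 0$ if $d_{(i)} = 0$.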
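The average over the truncated distribution can be sketched in Python as follows. This is only an illustrative sketch for the complete case; the function names `km_jumps` and `expected_future_lifetime` and the toy data are hypothetical, and deaths are assumed to precede censorings at tied time points.

```python
def km_jumps(times, status):
    """Probability mass the KM estimate places on each DoD time.

    Returns the list of (time, mass) pairs and the mass left beyond the
    last observation (zero in the complete death-observations case).
    """
    # process observations one at a time, deaths before censorings at ties
    order = sorted(range(len(times)), key=lambda i: (times[i], -status[i]))
    surv, at_risk, jumps = 1.0, len(times), []
    for i in order:
        if status[i] == 1:
            new_surv = surv * (1.0 - 1.0 / at_risk)
            jumps.append((times[i], surv - new_surv))
            surv = new_surv
        at_risk -= 1
    return jumps, surv


def expected_future_lifetime(times, status, tau):
    """Life expectancy beyond tau as the mean of the truncated KM distribution."""
    jumps, tail = km_jumps(times, status)
    if tail > 0.0:
        raise ValueError("incomplete case: the last observation is censored")
    beyond = [(t, p) for t, p in jumps if t > tau]
    total = sum(p for _, p in beyond)
    if total == 0.0:
        raise ValueError("no probability mass beyond tau")
    return sum((t - tau) * p for t, p in beyond) / total


if __name__ == "__main__":
    # masses 0.25, 0.375, 0.375 at times 1, 3, 4
    print(expected_future_lifetime([1, 2, 3, 4], [1, 0, 1, 1], tau=2))  # 1.5
```

In the toy data a patient alive at time 2 faces equal masses at times 3 and 4, so the expected future lifetime is the midpoint of the two residual lifetimes 1 and 2.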

2.3. The Incomplete Case

If the condition $d_{(n)} = 1$ is not fulfilled, we are in an incomplete (death-observations) case: one has $\hat S(t_{\max}) > 0$, meaning that the data are not sufficient to estimate the entire survival distribution. The expected future lifetime then cannot be derived without some ad hoc choices or suitable additional assumptions.

Let us denote by $t^*$ the last observed time point of a DoD event (i.e., $t^* := \max\{t_i : d_i = 1\}$). If $t^* < t_{\max}$, the KM estimate only provides the final survival probability $\hat S(t^*) > 0$. We then choose to complete the distribution by setting $\hat S(t_{\max}) = 0$, which is equivalent to placing the entire residual probability mass on the last time point $t_{\max}$. In terms of the data, this is also equivalent to changing to 1 the status indicator $d_{(n)}$. The effect of this choice depends on the actual meaning we attribute to the random variable $T_0$. If $T_0$ represents the entire future lifetime of the patients since they entered the study, then setting $\hat S(t_{\max}) = 0$ provides an underestimation of the life expectancy, since we have $P(T_0 > t_{\max}) = 0$ while we know that at least one patient was alive at the end of the study. In many cases, however, it is convenient to assume that the variable of interest is the patient’s lifetime in the study. Formally, we would consider the random variable $\min(T_0, t_{\max})$, where $t_{\max}$ is the duration of the study. The completed survival function refers to this random variable and no underestimation would be produced in this case. This issue is strictly related to the special nature of the final time point in this kind of survival problem. For example, self-consistency, an important property of the KM estimator, only holds if $d_{(n)} = 1$.

Remark 3.

This was pointed out by Efron [14] p. 843, where it is observed that the iterative construction underlying the KM estimator “sheds some light on the special nature of the largest observation, which the self-consistent estimator always treats as uncensored, irrespective of” .
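In terms of the data, the completion of the distribution described above amounts to a one-line transformation of the status indicators. The following Python sketch is only illustrative; the function name `complete_last_observation` is hypothetical, and observations tied at the largest time point are all treated as uncensored so that the completed estimate reaches zero.

```python
def complete_last_observation(times, status):
    """Return the status indicators with the largest observation(s) set to DoD."""
    t_max = max(times)
    # every observation at the final time point is treated as uncensored
    return [1 if t == t_max else d for t, d in zip(times, status)]


if __name__ == "__main__":
    # the censoring at the final time point 3 becomes a DoD
    print(complete_last_observation([1, 2, 3], [1, 0, 0]))  # [1, 0, 1]
```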

2.4. Including Covid-Death Events in the Data

Assume that, in addition to the n patients who left the study by a DoD or a Cen event, m patients were also present in the oncological trial for whom death of COVID-19 (DoC) was observed at the time points $c_1, \dots, c_m$. The corresponding observed data set can be represented as follows:

$$x = \{(t_i, d_i),\ i = 1, \dots, n;\ (c_j,\ \cdot\ ),\ j = 1, \dots, m\}, \qquad (4)$$

where the status indicator of each DoC event is missing. It is clearly inappropriate to set these indicators equal to 1, but it is also not appropriate to set them equal to 0, since the DoC event provides an informative censoring, given that we know this event does carry prognostic information about the survival experience of the oncological patients. More precisely, we know that there is a positive correlation between DoD and DoC events. However, ignoring DoC data would cause an unwelcome loss of information and we would like to adjust these data in some way, so that they can be included in the study. Formally, we are interested in replacing each of the observed $c_j$ by a different appropriate time point $v_j$, a virtual lifetime conditional on $T_0 > c_j$, possibly with an appropriate value of the corresponding status indicator, which we will denote by $\delta_j$. We are confident that this replacement of the DoC time points can be properly completed precisely because we assume that, due to the dependence between DoC and DoD events, the “standard” data z contain information on the COVID-19 data (and vice versa). The determination of the status indicators $\delta_j$ is more challenging. However, with the appropriate adjustment we can consider the whole data set:

$$\tilde x = \{(t_i, d_i),\ i = 1, \dots, n;\ (v_j, \delta_j),\ j = 1, \dots, m\}, \qquad (5)$$

and we can safely apply the KM estimator to these data, thus also using the information contribution carried by COVID-19 deaths. In the following section, we will propose an iterative procedure to suitably realize this adjustment.

3. The EM Mean-Imputation Procedure

3.1. The CoDMI Algorithm

Obviously, the input data to the algorithm are given by the observation set x in (4). We will assume, however, that all patients who died of COVID-19 would have died of disease if COVID-19 had not intervened, thus setting the virtual status indicators $\delta_j = 1$ for $j = 1, \dots, m$ (i.e., assuming that all the virtual lifetimes would have been terminated by a DoD event). We will see in Section 3.4 how one can try to get around this limitation in this counterfactual problem. Under the assumption $\delta_j \equiv 1$, the basic idea of our COVID-19 adjustment is to estimate the virtual lifetime $v_j$ of each patient who died of COVID-19 at time $c_j$ as the expectation $c_j + e_{c_j}$, with $e_{c_j}$ computed as in (3) by the KM estimator itself. This is realized by a procedure consisting of the following steps.

- Initialization step. One starts by setting $v_j = v_j^{(0)}$ for $j = 1, \dots, m$, where $v_j^{(0)}$ are arbitrarily chosen initial values. Then one obtains an artificial complete data set $\tilde x^{(0)}$, as defined in (5). Examples of initialization are the observed DoC time points themselves, or values based on the life expectancy computed by applying the KM estimator to the standard data z.

- Estimation step. The KM estimator is applied to $\tilde x^{(0)}$ to produce the survival function estimate $\hat S^{(0)}(t)$. In case of incomplete death-observations, the distribution is completed by setting $\hat S^{(0)}(t_{\max}) = 0$.

- Expectation step. Using $\hat S^{(0)}(t)$, the m future life expectancies $e_{c_j}^{(1)}$ are computed as in (3). The corresponding time points $v_j^{(0)}$ are then replaced by $v_j^{(1)} = c_j + e_{c_j}^{(1)}$. One then obtains the new artificial complete data set:

$$\tilde x^{(1)} = \{(t_i, d_i),\ i = 1, \dots, n;\ (v_j^{(1)}, 1),\ j = 1, \dots, m\}.$$

- The estimation and the expectation steps are repeated, producing at the k-th stage a new complete data set $\tilde x^{(k)}$, provided by the expectations $e_{c_j}^{(k)}$. The iterations stop when a specified convergence criterion is fulfilled. A natural criterion (6) requires that the difference between the estimates obtained at two successive stages be smaller than a suitably specified tolerance level $\varepsilon$ (this choice will be left as an option for the user). If condition (6) is not satisfied after a fixed maximum number of iterations (which will also be chosen as a user option), the convergence is considered failed.

If the convergence criterion is met, the final values of the m life expectancies provide estimates which we will denote by $\hat e_{c_j}$. The corresponding estimated lifetimes are $\hat v_j = c_j + \hat e_{c_j}$ and the estimated whole data set is:

$$\hat x = \{(t_i, d_i),\ i = 1, \dots, n;\ (\hat v_j, 1),\ j = 1, \dots, m\}. \qquad (7)$$

This iterative procedure can be seen as belonging to the class of the well-known Expectation-Maximization (EM) algorithms, since the estimation step can be interpreted as a maximization, given that the KM approach provides a maximum likelihood estimator. In this class of algorithms the expectation step is often referred to as mean-imputation, hence we will call our iterative procedure the Covid-Death Mean-Imputation (CoDMI) algorithm.
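The iteration described in Section 3.1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the R implementation referred to in the Introduction. The function names are hypothetical, the virtual lifetimes are initialized at the observed DoC time points, all virtual status indicators are set to 1, and the convergence criterion is assumed to be the maximum absolute change of the life expectancies between two successive stages.

```python
def km_masses(times, status):
    """Probability mass placed by the KM estimate on each DoD time.

    The distribution is completed by treating every observation at the
    largest time point as a DoD, so that the masses sum to 1.
    """
    t_max = max(times)
    status = [1 if t == t_max else d for t, d in zip(times, status)]
    # deaths are ordered before censorings at tied time points
    order = sorted(range(len(times)), key=lambda i: (times[i], -status[i]))
    surv, at_risk, masses = 1.0, len(times), []
    for i in order:
        if status[i] == 1:
            new_surv = surv * (1.0 - 1.0 / at_risk)
            masses.append((times[i], surv - new_surv))
            surv = new_surv
        at_risk -= 1
    return masses


def expected_future_lifetime(masses, tau):
    """Mean residual lifetime beyond tau on the truncated KM distribution."""
    beyond = [(t, p) for t, p in masses if t > tau]
    total = sum(p for _, p in beyond)
    if total == 0.0:
        return 0.0  # no mass beyond tau: nothing to add to the DoC time
    return sum((t - tau) * p for t, p in beyond) / total


def codmi(z_times, z_status, doc_times, tol=0.1, max_iter=100):
    """Return the imputed virtual lifetimes and the number of iterations."""
    m = len(doc_times)
    if m == 0:
        return [], 0
    virtual = list(doc_times)        # initialization step (assumed choice)
    expect = [0.0] * m
    for k in range(1, max_iter + 1):
        # estimation step: KM on standard data plus current virtual lifetimes
        masses = km_masses(list(z_times) + virtual, list(z_status) + [1] * m)
        # expectation step: life expectancy beyond each observed DoC time
        new_expect = [expected_future_lifetime(masses, c) for c in doc_times]
        change = max(abs(a - b) for a, b in zip(new_expect, expect))
        expect = new_expect
        virtual = [c + e for c, e in zip(doc_times, expect)]
        if change < tol:             # assumed form of the stopping rule
            return virtual, k
    raise RuntimeError("no convergence within max_iter iterations")


if __name__ == "__main__":
    # toy data: three DoD events and one DoC event at time 1
    print(codmi([2, 4, 6], [1, 1, 1], [1]))
```

In the toy example the single DoC time 1 is replaced after two iterations by a virtual lifetime of about 4, the fixed point at which the imputed value equals the mean of the completed sample.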

Remark 4.

(i) Usually EM algorithms, and the concept of imputation, refer to procedures aimed at filling in missing data. What we are dealing with here is data observed to a limited extent, rather than completely missing. Therefore, in this application the imputation corresponds rather to a replacement (of the observed DoC time points by the estimated virtual lifetimes). Our method is, however, in the spirit of the fake-data principle, as illustrated by Efron and Hastie [15], pp. 148–149.

(ii) It should be noted that the idea of estimating the virtual lifetimes as conditional expectations implies a further, more subtle assumption. Let DoCj be the event: “Patient j died of COVID-19 at time $c_j$” and RoCj: “Patient j became ill with COVID-19 but recovered at time $c_j$”. Using notation introduced by Pearl in causal analysis (e.g., [16]), we are assuming for this patient that:

where $\mathrm{do}(A)$ is the intervention operator on event A. This means that we are assuming that the event RoCj, which is not excluded by DoCj = 0, does not change the probability distribution of the patient’s lifetime. This is clearly a simplifying assumption that makes our counterfactual problem easy to solve. In a more rigorous analysis, the effect of events such as RoCj should also be taken into account [1]. We refrain from doing this here, since such an analysis would take us out of the KM survival framework.

3.2. The Convergence Issue

In general, CoDMI is not guaranteed to converge. If we make the classical binomial assumptions, we can derive the KM likelihood as a function of the hazard rates. Running the algorithm, we find it is possible that different parameter sets, hence different sets of estimates, correspond to the same likelihood value. This should indicate an issue in parameter identifiability. However, the classical KM likelihood is defined for fixed time points, while the estimated virtual lifetimes change at each step in our algorithm. Thus, the identifiability problem should be more properly studied referring to a likelihood function which includes the event times in the parameters as well.

Remark 5.

A similar problem of iterated estimates for the KM product-limit estimator, but with fixed time points hence without parameter identifiability issues, was studied by Efron [14]. He proved in this case that, provided that the probability distribution is complete, the solution of the convergence problem exists and is unique. The previously mentioned self-consistency refers precisely to this property.

However, in order to manage the convergence problem, and also on the basis of the results of the simulation exercise presented in Section 5, it is worth considering the following three types of situation.

- (1) Finite time convergence. The difference between two successive estimates becomes zero after a finite number of iterations.

- (2) Asymptotic convergence. The difference between two successive estimates tends to zero asymptotically.

- (3) Cyclicity. After a certain number of iterations, cycles of the estimated values are established which tend to repeat themselves indefinitely, so that the minimum difference between two successive estimates remains greater than zero. In this case, if this minimal difference is less than the tolerance $\varepsilon$, the corresponding estimate can be accepted (this is actually what the term “tolerance” refers to). It often happens that small changes in some of the observed DoC time points are sufficient to get out of cyclicity cases. Therefore, some fudging of these data could be used to obtain acceptable solutions when the minimum difference is out of tolerance.

As shown in the simulation study in Section 5, cases of non-convergence are not very frequent, and many of these can be circumvented by relaxing the convergence criterion (6) and fudging the COVID-19 data a little, if necessary. In general, the results are found to be sensitive to the initial values of the virtual lifetimes. In cases of convergence this is not a problem since different solutions, when within the chosen tolerance criterion, are equivalent from a practical point of view. In some cases of non-convergence, on the other hand, it is possible to switch to a convergence case by changing the initial values.
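The three situations listed above can be told apart from the sequence of estimates produced by the iterations. The following Python sketch is only illustrative: the function name `classify_run`, the labels it returns, the use of the maximum absolute change as the difference between successive estimates, and the rounding to 12 decimals used to recognize a repeated state are all assumptions.

```python
def classify_run(history, tol):
    """Classify a sequence of estimate vectors, one vector per iteration.

    Returns ("finite", k) when the change is exactly zero at stage k,
    ("within tolerance", k) when the change first drops below tol,
    ("cycle", length) when an earlier state is visited again, and
    ("undecided", n) when none of these occurs within the n stages given.
    """
    seen = {}
    for k, estimate in enumerate(history):
        if k > 0:
            change = max(abs(a - b) for a, b in zip(estimate, history[k - 1]))
            if change == 0.0:
                return ("finite", k)
            if change < tol:
                return ("within tolerance", k)
        # rounding makes the comparison robust to floating-point noise
        key = tuple(round(e, 12) for e in estimate)
        if key in seen:
            return ("cycle", k - seen[key])
        seen[key] = k
    return ("undecided", len(history))


if __name__ == "__main__":
    print(classify_run([[1.0], [3.0], [1.0], [3.0]], tol=0.1))  # ('cycle', 2)
```

A detected cycle whose successive differences stay above the tolerance corresponds to the case where changing the initial values, or slightly perturbing the DoC data, may be tried.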

3.3. Assumptions Underlying CoDMI

The iterative procedure described in Section 3.1 can probably be easily justified by intuitive reasoning. However, also to give internal consistency to the simulation procedure presented in Section 5, it is convenient to better specify the assumptions underlying the CoDMI algorithm. An important preliminary remark is in order. In our framework, the “true” probability distribution of the survival time is the best-fitting distribution in the KM sense, i.e., the distribution identified by applying the maximum likelihood product-limit estimator to existing data. Without appropriate additional assumptions (e.g., specifying an analytic form of the hazard function) this distribution is completely non-parametric and there is no other way to identify it than by specifying the data as well as the estimator used (the product-limit estimator, in fact). One could say that data provide information to the estimator, and the estimator provides probabilistic structure to data. Having remarked upon this, the basic assumption underlying the CoDMI algorithm is as follows. When COVID-19 deaths are present in the study sample, there is an extended underlying data structure composed of the n observed lifetimes (ending with a DoD or a Cen) and of the m partially observed lifetimes (virtually ending with a DoD, if we assume that all the virtual status indicators are equal to 1). The corresponding probability distribution is the best-fitting distribution specified by these extended data, i.e., by applying the KM estimator to the completed data set. We will keep this property in mind when we generate the simulated scenarios on which to measure the algorithm’s predictive performance.

3.4. Adjusting for the Assumption

Relaxing the assumption that patients eliminated by a DoC event would have died of disease without this event is not an easy task. The prediction of the status indicators increases the forecasting problem by one dimension and requires a reliable predictive model, which is currently not available to us. We are therefore content to propose an adjustment for censoring of the response of the CoDMI algorithm, which should mitigate the possible bias produced by this assumption. If the algorithm met the convergence criterion, the final data set is given by (7). We then consider the modified data set:

where both the observed and the estimated virtual lifetimes are kept the same, while all the status indicators are reversed. Running the KM estimator on the set , one obtains the so-called reverse Kaplan–Meier survival curve , which refers to Cen instead of DoD endpoints, and provides the new conditional expectations , given , of the virtual lifetimes. We then choose to derive the adjusted estimates , for , as:

where is the probability that an event observed at time t is a DoD (as opposed to a Cen). In order to estimate these non-censoring probabilities, the standard observations are represented on a time grid spanning the time interval with cells , and a parametric hazard rate function is fitted on this grid. The same procedure is then applied to the “reverse observations” and the corresponding hazard rate function is then derived. The probability estimates are then computed as , where is the cell containing the time point t. Examples of estimated functions are provided in the next section.

The above procedure is fairly ad hoc and the indications provided do not necessarily have to be accepted. It may be the case that the user of the procedure has a personal opinion, based on external information, on the value of (some of) the virtual status indicators. In this situation the coefficients in (9) could be assigned or modified by the user on the basis of this expert judgment.
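The reverse Kaplan–Meier curve used in this adjustment is obtained simply by reversing all the status indicators before applying the product-limit estimator. A minimal Python sketch follows. The function names and the toy data are hypothetical, and the sketch covers only the reversal step, not the weighting by the non-censoring probabilities.

```python
from collections import Counter


def kaplan_meier(times, status):
    """Return [(t, S(t))] at each distinct time with at least one event."""
    events = Counter(t for t, d in zip(times, status) if d == 1)
    leaving = Counter(times)
    at_risk, surv, curve = len(times), 1.0, []
    for t in sorted(leaving):
        if events[t] > 0:
            surv *= 1.0 - events[t] / at_risk
            curve.append((t, surv))
        at_risk -= leaving[t]
    return curve


def reverse_kaplan_meier(times, status):
    """KM curve referring to Cen endpoints: all status indicators are reversed."""
    return kaplan_meier(times, [1 - d for d in status])


if __name__ == "__main__":
    # the only censoring, at time 2, becomes the only event of interest
    print(reverse_kaplan_meier([1, 2, 3, 4], [1, 0, 1, 1]))
```

In the toy data the reversed curve has a single step at time 2, where 3 subjects are still at risk.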

3.5. An Extended Greenwood’s Formula

The virtual lifetime expectations provided by CoDMI and included in the mean-imputed data are point estimates which allow any statistical tool available for survival analysis to be applied to these data. However, replacing an observed value with a point estimate, even an unbiased one, increases the variance of the survival estimates, since the mean-imputed data convey their own estimation error. Usually the standard deviation of the KM survival function estimate is computed using Greenwood’s formula. On the standard data, using the same notation as in (1), this can be written as:

$$\hat\sigma(t) = \hat S(t) \sqrt{\sum_{i:\, t_{(i)} \le t} \frac{d_{(i)}}{n_i\,(n_i - d_{(i)})}},$$

where the summand is set to 0 if $n_i = d_{(i)}$. We provide an extension of this formula in order to include the variance component due to the estimated time points.
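For reference, the classical Greenwood’s formula on standard data can be sketched in Python as follows. This sketch covers only the classical formula, not the extension proposed in this section. The function names are hypothetical, and the symmetric normal-approximation interval clipped to [0, 1] is an assumption about how the confidence limits are formed.

```python
import math
from collections import Counter


def km_greenwood(times, status):
    """Return [(t, S(t), sd(t))] at each distinct time with a DoD event."""
    deaths = Counter(t for t, d in zip(times, status) if d == 1)
    leaving = Counter(times)
    at_risk, surv, acc, out = len(times), 1.0, 0.0, []
    for t in sorted(leaving):
        d = deaths[t]
        if d > 0:
            surv *= 1.0 - d / at_risk
            if at_risk > d:  # the summand is set to 0 when n_i = d_i
                acc += d / (at_risk * (at_risk - d))
            out.append((t, surv, surv * math.sqrt(acc)))
        at_risk -= leaving[t]
    return out


def confidence_interval(surv, sd, z=1.96):
    """Approximate 95% limits, clipped to the interval [0, 1]."""
    return max(0.0, surv - z * sd), min(1.0, surv + z * sd)


if __name__ == "__main__":
    for t, s, sd in km_greenwood([1, 2, 3, 4], [1, 0, 1, 1]):
        print(t, s, sd, confidence_interval(s, sd))
```

Before any censoring occurs the formula reduces to the binomial standard deviation: at the first time point of the toy data it equals the square root of 0.75 × 0.25 / 4.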

We start from the CoDMI output, possibly with the adjustment for censoring:

where the are derived by (9) and the indicators can be equal to 0 or 1. We represent the data set in the alternative form:

where:

- or are the observed or estimated survival times ordered by increasing value (the usual conventions on tied values apply);

- if corresponds to a Cen and 1 otherwise;

- if corresponds to a DoC and 0 otherwise.

Since the time points are assumed to be ordered, we simplify the exposition in this section by using the subscript i instead of (and instead of ). We then consider both the “direct” probability distribution and the reverse one , both taken from the CoDMI output, and from these we derive the m direct and the m reverse truncated distributions:

These distributions are defined, with null values, also for . Finally, we compute the total probabilities:

and define . Observe that .

With these definitions, we propose the following correction of Greenwood’s formula:

where the hazard rates are specified as:

and the number of subjects at risk is computed as:

The basic idea underlying this formula is that the m COVID-19 deaths are distributed as “fractional deaths” over all the uncensored time points (both DoD and DoC), and the hazard rate at time has a random component with mean and variance . The details of the derivation of Formula (12) are provided in Appendix A. Using (12), the approximate 95% confidence intervals can be computed by:

4. Examples of Application to Real Survival Data

4.1. Application to COVID-19 Extended NCOG Data

To illustrate the effects of our mean-imputation adjustments, we start by considering some real survival data well referenced in the literature and apply the CoDMI algorithm to these data after the addition of some artificial COVID-19 deaths. This is carried out because, currently, sufficiently rich real datasets containing both cancer-death and Covid-death events are hardly available. To this aim, we chose, as the real reference data, the head/neck cancer data of the NCOG (Northern California Oncology Group) study, which was used to illustrate the KM approach in the book by Efron and Hastie, Section 9.2 [15]. We considered data from the two arms, A and B, separately.

4.1.1. Arm A of NCOG Data

Survival times (in days) from Arm A in the first panel of Table 9.2 [15] are reported in Table 1.

Table 1.

Censored survival times from Arm A (Chemotherapy) of the NCOG study.

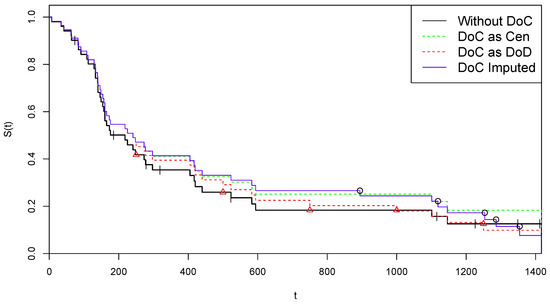

To save space, the data are presented, as in the book, in compact form, with the + sign representing censoring. The conversion of these data into the form of a two-component vector is immediate. There are $n = 51$ patients, with 43 DoD events and 8 Cen events. The final time point is 1417 days after the beginning of the study, and a DoD is observed on that date. Therefore we are in a complete death-observations case. The corresponding KM estimate of the survival function is illustrated by the black line in Figure 1.
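The conversion from the compact form to the two-component representation can be sketched in Python as follows. The function name `parse_compact` is hypothetical and the input string contains made-up values used only to show the format, not the NCOG data.

```python
def parse_compact(text):
    """Split compact survival data into (times, status) lists.

    A trailing '+' marks a censored time (status 0); otherwise a DoD
    is assumed (status 1).
    """
    times, status = [], []
    for token in text.split():
        censored = token.endswith("+")
        times.append(int(token.rstrip("+")))
        status.append(0 if censored else 1)
    return times, status


if __name__ == "__main__":
    # hypothetical values, for illustration of the format only
    print(parse_compact("5 12+ 30 41+"))  # ([5, 12, 30, 41], [1, 0, 1, 0])
```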

Figure 1.

Kaplan–Meier curves for alternative treatments of COVID-19 deaths—Arm A.

To illustrate the application of the CoDMI algorithm, we add to these data an artificial group of m Covid-death observations, i.e., m DoC events assumed to be observed at the time points $c_1, \dots, c_m$. We chose $m = 5$ (roughly 10% of n) DoC events, at 5 time points roughly equally spaced over the observation period:

Since the observation set has been specified, we have to choose the virtual lifetimes in the data set which is used to initialize the CoDMI algorithm. If, for example, we choose the option to set , then we have , with:

We ran the CoDMI algorithm with this initialization and . The procedure converged after 10 iterations, providing the following estimates for the lifetimes :

The corresponding COVID-19 data:

are then used as mean-imputed data to obtain the final complete data set in (7). As one can observe, the expectation Formula (3) provides non-integer values, which is not a problem since the survival function provided by the KM estimator is defined on the real axis.
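The iteration just described can be sketched in Python as follows. This is a simplified reading of the procedure, not the authors' R code: the names `km_masses`, `cond_mean` and `mean_impute` are hypothetical, the update rule (each virtual lifetime is replaced by the conditional mean of the KM distribution beyond the corresponding DoC time) and the stopping rule (largest change below a tolerance) are assumptions, and the data are toy values.

```python
def km_masses(times, status):
    """KM death-probability masses {t: p} on the uncensored time points.

    Any probability mass left after the last death is placed on the largest
    time point (relevant when the final observation is censored).
    """
    masses, surv = {}, 1.0
    for t in sorted({u for u, s in zip(times, status) if s == 1}):
        at_risk = sum(1 for u in times if u >= t)
        d = sum(1 for u, s in zip(times, status) if u == t and s == 1)
        new_surv = surv * (1.0 - d / at_risk)
        masses[t] = surv - new_surv
        surv = new_surv
    if surv > 1e-12:
        t_last = max(times)
        masses[t_last] = masses.get(t_last, 0.0) + surv
    return masses

def cond_mean(masses, theta):
    """E[T | T > theta] under the discrete distribution `masses`."""
    tail = [(t, p) for t, p in masses.items() if t > theta]
    total = sum(p for _, p in tail)
    if total <= 0.0:
        return theta  # no mass beyond theta: keep the DoC time itself
    return sum(t * p for t, p in tail) / total

def mean_impute(z_times, z_status, theta, tol=0.1, max_iter=200):
    """Iterate mean-imputation of the virtual lifetimes until they stabilize."""
    tau = list(theta)  # ASSUMED initialization: virtual lifetime = DoC time
    for it in range(1, max_iter + 1):
        times = list(z_times) + tau
        status = list(z_status) + [1] * len(tau)  # imputed points count as deaths
        masses = km_masses(times, status)
        new_tau = [cond_mean(masses, th) for th in theta]
        if max(abs(a - b) for a, b in zip(new_tau, tau)) < tol:
            return new_tau, it
        tau = new_tau
    raise RuntimeError("no convergence within max_iter iterations")

# Toy data: five observed deaths and two DoC time points.
z_times, z_status, theta = [2, 4, 6, 8, 10], [1, 1, 1, 1, 1], [3, 7]
tau_hat, n_iter = mean_impute(z_times, z_status, theta)
print(tau_hat, n_iter)
```

In this sketch the imputed values are generally non-integer, as noted above, and a tighter tolerance simply requires more iterations.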

Remark 6.

A tolerance of 0.1 already provides overabundant precision for our applications. However, in order to stress the algorithm, we also tried with and , obtaining convergence after 33 and 51 iterations, respectively. This seems to be a case of asymptotic convergence.

The survival curve provided by the KM estimator applied to the completed data (“DoC Imputed”) is shown in blue in Figure 1, where it can be compared with the original survival estimate based on the z data (“Without DoC”, black). For further comparison, we also present the KM survival curves estimated by the two naïve strategies, which classify all DoC events as Cen, i.e., and (“DoC as Cen”, green), or all DoC events as DoD, i.e., and (“DoC as DoD”, red). In the figure, the “critical” time points are reported by indicating the 14 Cen points by ticks and the 5 points by red triangles on the black curve, while the 5 points are indicated by circles on the blue line (where, obviously, each circle corresponds to a jump).
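The two naïve strategies amount to a simple recoding of the status of the DoC events before the KM estimator is applied. The following Python sketch illustrates this on toy values; the helper `km_survival` and all the numbers are hypothetical and serve only as an illustration.

```python
def km_survival(pairs, t):
    """KM estimate S(t) from (time, status) pairs; status 1 = death, 0 = censored."""
    surv = 1.0
    for u in sorted({x for x, s in pairs if s == 1 and x <= t}):
        at_risk = sum(1 for x, _ in pairs if x >= u)
        deaths = sum(1 for x, s in pairs if x == u and s == 1)
        surv *= 1.0 - deaths / at_risk
    return surv

z = [(2, 1), (4, 1), (6, 0), (8, 1), (10, 1)]  # toy standard observations
doc_times = [3, 7]                              # toy DoC time points

doc_as_cen = z + [(th, 0) for th in doc_times]  # every DoC treated as censoring
doc_as_dod = z + [(th, 1) for th in doc_times]  # every DoC treated as a disease death

s_cen = km_survival(doc_as_cen, 8)
s_dod = km_survival(doc_as_dod, 8)
print(f"S(8): DoC as Cen = {s_cen:.4f}, DoC as DoD = {s_dod:.4f}")
```

In this toy example the “DoC as DoD” recoding gives the lower survival estimate, since each DoC event produces an additional jump of the curve at the DoC time itself.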

We finally illustrate the application of the adjustment for censoring presented in Section 3.4. After deriving from the modified data set in (8), we apply the KM estimator to these data, obtaining the following alternative lifetimes :

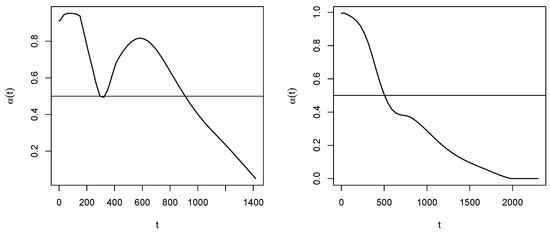

The left panel of Figure 2 shows the probability curve estimated as specified in Section 3.4. By this function, one obtains:

Figure 2.

Non-censoring probability curves for Arm A (left) and Arm B (right).

Therefore, the procedure suggests considering the last two time points as (potentially) censored, then estimated as in (17). The data set in (16) is then modified as:

These suggestions, however, are purely indicative and can be rejected or changed based on expert opinion.

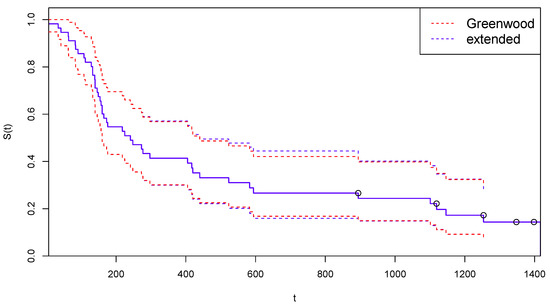

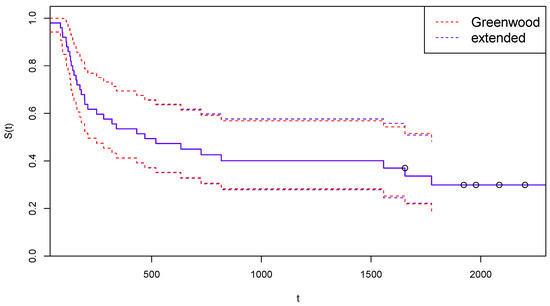

In Figure 3, the survival function estimated after the suggested adjustment for censoring is reported, together with the confidence limits computed with the traditional Greenwood’s formula (red dotted lines) and with the extended Formula (12) (blue dashed lines).

Figure 3.

Kaplan–Meier curves estimated by CoDMI with adjustment for censoring and related confidence intervals—Arm A.
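For reference, the traditional Greenwood limits can be computed as in the following Python sketch. It covers only the classical formula, not the extended Formula (12); the function name `km_greenwood`, the linear (untransformed) form of the interval, the truncation to [0, 1] and the toy data are assumptions made for illustration.

```python
import math

def km_greenwood(pairs, z=1.96):
    """Return [(t, S, lower, upper)] with Greenwood's variance estimate.

    pairs: (time, status) with status 1 = death, 0 = censored.
    The interval is S +/- z * se, truncated to [0, 1] (an assumed convention).
    """
    rows, surv, acc = [], 1.0, 0.0
    for t in sorted({x for x, s in pairs if s == 1}):
        n = sum(1 for x, _ in pairs if x >= t)                   # at risk at t
        d = sum(1 for x, s in pairs if x == t and s == 1)        # deaths at t
        surv *= 1.0 - d / n
        if n > d:
            acc += d / (n * (n - d))     # Greenwood sum of d_i / (n_i (n_i - d_i))
        se = surv * math.sqrt(acc)       # se is zero once the curve reaches zero
        rows.append((t, surv, max(0.0, surv - z * se), min(1.0, surv + z * se)))
    return rows

toy = [(2, 1), (3, 0), (5, 1), (5, 1), (8, 0), (9, 1)]  # toy values only
rows = km_greenwood(toy)
for t, s, lo, hi in rows:
    print(f"t={t:g}  S={s:.4f}  CI=({lo:.4f}, {hi:.4f})")
```

Mean-imputed points enter this computation as ordinary deaths, which is why the classical limits do not reflect the additional uncertainty that the extended formula is meant to capture.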

4.1.2. Arm B of NCOG Data

In Table 2, we report censored survival times (in days) from Arm B in the second panel of Table 9.2 [15].

Table 2.

Censored survival times from Arm B (Chemotherapy+Radiation) of the NCOG study.

In this case too, for reasons of space, we refrain from presenting the data converted into z form. Data are heavily censored in this arm, having patients, with 14 Cen events, which are mainly distributed among the largest time points. Moreover, we are in a case of incomplete death-observations, since the final time point is a Cen point. The last time point with a DoD event observed is and 4 Cen events are observed thereafter. The final level of the survival curve provided by the KM estimator is and we choose to allocate this probability mass entirely on the final Cen point 2297. For the artificial data on COVID-19 deaths, also in this case we choose m roughly and assume equally spaced DoC events in the interval . That is, we assume with values in the set:

The last time point in is after the last observed DoD time point (1776). As in the previous case, the initial data set is derived by setting , and the complete data set is used to initialize the CoDMI algorithm. The algorithm, run again with , converged after 12 iterations (convergence was met after 49 iterations for and 78 iterations for ), providing the following estimates for the adjusted lifetimes :

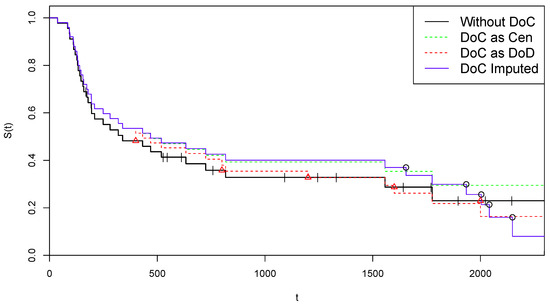

In Figure 4, we replicate the illustrations of Figure 1 on these data. As for the adjustment for censoring, from the estimated probability curve reported in the right panel of Figure 2 we obtain:

Figure 4.

Kaplan–Meier curves for alternative treatments of COVID-19 deaths—Arm B.

Therefore, in this case, the procedure suggests considering the last four time points as censored. Using the criterion in (17), the final data set is obtained:

Figure 5 is the Arm B analogue of Figure 3.

Figure 5.

Kaplan–Meier curves estimated by CoDMI with adjustment for censoring and related confidence intervals—Arm B.

5. A Simulation Study

In order to test the ability of CoDMI to correctly estimate the expected life-shortening (or the corresponding virtual lifetime) due to DoC events in a study population, we generate many scenarios each containing simulated data. These pseudo-data include a data set of standard observations and a data set of (preliminary) virtual lifetimes. By randomly censoring the time variables in a corresponding set of DoC time points is derived. In order to equip these pseudo-data with a probabilistic structure consistent with CoDMI assumptions, a KM best-fitting distribution is derived by applying the product-limit estimator to . The “true” virtual lifetimes are then derived by conditional sampling, given , from this distribution. Running CoDMI algorithm on the pseudo-observations , the estimated virtual lifetimes are obtained and the quality of the estimator is measured by computing the average, over all scenarios, of the prediction errors .
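The conditional sampling step mentioned above, i.e., drawing a lifetime from a discrete distribution given that it exceeds the DoC time, can be sketched in Python as follows. The function name `sample_truncated` and the toy probability masses are hypothetical; in the simulation the masses would come from the KM best-fitting distribution.

```python
import random

def sample_truncated(masses, theta, rng):
    """Draw T from the discrete distribution `masses`, conditional on T > theta."""
    tail = [(t, p) for t, p in sorted(masses.items()) if t > theta and p > 0]
    if not tail:
        raise ValueError("no probability mass beyond theta")
    times, weights = zip(*tail)
    # random.choices uses relative weights, so the truncated masses
    # do not need to be renormalized explicitly.
    return rng.choices(times, weights=weights, k=1)[0]

rng = random.Random(0)                     # fixed seed for reproducibility
masses = {2.0: 0.2, 5.0: 0.3, 9.0: 0.5}   # toy death-probability masses
draws = [sample_truncated(masses, 4.0, rng) for _ in range(1000)]
print(sorted(set(draws)))
```

Only time points strictly larger than the conditioning value can be drawn, so every simulated virtual lifetime exceeds the corresponding DoC time.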

5.1. Details of the Simulation Process

The details of each scenario simulation are as follows:

- 1. Simulation of standard survival data . The simulated standard (i.e., non-Covid) survival data is generated in each scenario starting from the same set of real data , spanning the time interval . The set is generated by drawing with replacement pairs from the n real-life pairs , maintaining the proportion between DoD and Cen events in z. Let us denote by the largest uncensored time point in .

  Remark. It should be noted that many tied values can be generated in this step, especially if . Moreover, could turn out to be censored (a case of incomplete death observations) even if the death observations are complete in the original data. Generating many scenarios in this way can easily produce a number of “extreme” pseudo-data . This is useful, however, for testing the algorithm even in unrealistic situations. Most cases of failed convergence correspond to extreme situations.

- 2. Simulation of DoC time points . In order to simulate a number of COVID-19 deaths, the time points are generated by drawings with replacement from the points in real data z, satisfying the conditions and . These time points are interpreted as temporary virtual lifetimes and are first used to generate the DoC time points . A number of independent drawings from a uniform (0, 1) distribution are performed, and the corresponding DoC time points are obtained as . Therefore, for all j one has , with taking equally probable values in .

  Remark. The use of a uniform distribution is obviously questionable, and a more “informative” distribution could be suggested. For example, a beta distribution with first parameter greater than 1 and second parameter lower than 1 may be preferable, as it makes values of closer to more probable. However, the form of this distribution is irrelevant to our purposes: we are interested in observing how well CoDMI captures the simulated virtual lifetimes, independently of how they are generated.

- 3. Simulation of virtual lifetimes . The temporary lifetimes (and the data set ) cannot be directly used to test the CoDMI algorithm, since their probabilistic structure is indeterminate and, in any case, we have too few (pseudo-)observations. In order to introduce a probabilistic structure consistent with CoDMI assumptions, we first run the KM estimator on the data set , thus obtaining the corresponding death probability distribution . The virtual lifetimes are then obtained by computing the conditional expectations by this distribution. However, this is not yet fully consistent with CoDMI assumptions, since, as discussed in Section 3.3, the appropriate distribution is the KM best-fitting distribution specified on the extended data, i.e., data including the virtual lifetimes themselves. To obtain this result we should repeat the previous step, i.e., run the product-limit estimator on the new data set , thus producing the new distribution , and then simulate new time points by taking the conditional expectation on this distribution. In principle, this step should be iterated similarly to what is done in the CoDMI algorithm. To avoid convergence problems, however, we prefer to limit the number of iterations to a fixed (low) value , thereby implicitly accepting a certain level of bias in the estimations. After these iterations have been made, the final data set is obtained. Running the KM estimator on these data again, the final distribution is obtained and the definitive time points , with the corresponding , are computed by conditional sampling, given , i.e., simulating from the truncated distribution (after normalization). These sampled values are taken as the true values of virtual lifetimes and life expectancy, respectively, which should be estimated by CoDMI using only the information .

- 4. Application of CoDMI and naïve estimators. The CoDMI algorithm is applied to the simulated data: with obtained in step 1 and in step 2. Provided that the algorithm converges, we obtain the estimated virtual lifetimes and the estimated life expectancy .

  To allow comparison, we also derive in this step the predictions of the two naïve “estimators”, which are obtained by applying the KM estimator to the simulated data , modified by setting, for all j, and (“DoC as DoD”) or (“DoC as Cen”).
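Steps 1 and 2 of the scenario generation can be sketched in Python as follows. This is a simplified illustration on toy data, not the simulation code used for the results below: the function names `resample_standard` and `simulate_doc` are hypothetical, the DoC time is taken to be the product of the uniform draw and the temporary virtual lifetime (an assumption consistent with the remark in step 2), and the additional conditions on the admissible time points are not reproduced.

```python
import random

def resample_standard(z, n_star, rng):
    """Step 1 sketch: draw n_star pairs with replacement from z,
    keeping the DoD/Cen proportion of the original data."""
    dod = [p for p in z if p[1] == 1]
    cen = [p for p in z if p[1] == 0]
    n_dod = round(n_star * len(dod) / len(z))
    sample = rng.choices(dod, k=n_dod) if n_dod > 0 else []
    if n_star - n_dod > 0:
        sample += rng.choices(cen, k=n_star - n_dod)
    return sample

def simulate_doc(z, m, rng):
    """Step 2 sketch: temporary virtual lifetimes and DoC time points."""
    dod_times = [t for t, s in z if s == 1]
    tau_tmp = rng.choices(dod_times, k=m)         # drawn with replacement
    # ASSUMPTION: DoC time = uniform(0, 1) draw times the temporary lifetime.
    theta = [rng.random() * t for t in tau_tmp]
    return tau_tmp, theta

rng = random.Random(1)  # fixed seed for reproducibility
z = [(2, 1), (4, 1), (5, 0), (6, 1), (8, 1), (9, 0), (10, 1), (12, 1)]  # toy data
z_star = resample_standard(z, 16, rng)
tau_tmp, theta = simulate_doc(z, 3, rng)
print(len(z_star), tau_tmp, [round(th, 2) for th in theta])
```

Sampling with replacement naturally produces tied values, as noted in the remark to step 1, and the largest resampled time point may happen to be censored.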

5.2. Valuation of the Predictive Performances

In the simulation exercise, a large number N of scenarios are generated. This provides, for and , the CoDMI estimates (from step 4) and the true realizations (from step 3). Then we can compute the prediction errors:

and the average errors:

Positive (negative) values of correspond to under(over)-estimates provided by CoDMI. As usual, we can associate to these average errors the corresponding standard error, i.e., the standard error of the mean (s.e.m.). Given the independence assumption, the central limit theorem guarantees, as usual, that the sample means are asymptotically normal. Therefore, the corresponding s.e.m. is inversely proportional to .
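The summary statistics can be computed as in the following Python sketch. The function name `error_summary` and the input values are hypothetical, and the sign convention (true value minus estimate) is inferred from the statement that positive errors correspond to under-estimates.

```python
import math

def error_summary(true_vals, est_vals):
    """Mean prediction error and its standard error (s.e.m.).

    Errors are computed as true - estimate (ASSUMED sign convention),
    so positive values indicate under-estimation.
    """
    errors = [t - e for t, e in zip(true_vals, est_vals)]
    n = len(errors)
    mean = sum(errors) / n
    var = sum((x - mean) ** 2 for x in errors) / (n - 1)  # sample variance
    return mean, math.sqrt(var / n)

# Toy values only: three scenarios for one DoC event.
mean_err, sem = error_summary([10.0, 12.0, 14.0], [9.0, 12.0, 13.0])
print(f"mean error = {mean_err:.4f}, s.e.m. = {sem:.4f}")
```

Since the s.e.m. is the sample standard deviation divided by the square root of the number of scenarios, increasing the number of converged scenarios tightens the estimate of the average error.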

The same summary statistics are computed for the prediction errors relative to the two naïve estimators.

5.3. Results from Simulation Exercises

Two separate simulation exercises were performed, one using Arm A, the other using Arm B as real-life data. In both exercises, scenarios were generated, with standard observations (roughly double the real ones) and COVID-19 deaths. A tolerance was chosen for the CoDMI algorithm, with a maximum number of allowed iterations . The number of iterations for generating the true values was and for all the initializations the option was chosen. Since in some scenarios CoDMI failed to converge (with the chosen values for and ), the sample means and the corresponding s.e.m. were computed only on the convergence cases.

In Table 3, which refers to Arm A data, the simulation results are reported for each of the 10 DoC cases. We obtained convergence cases out of the 10,000 simulated. In each row, the sample mean of the DoC time points , the true life expectancy and the CoDMI estimated life expectancy are reported in columns 2–4. In columns 5–9, we provide summary statistics of the corresponding prediction errors: the mean error , the related s.e.m., the relative mean error and the minimum and maximum value of .

Table 3.

Results by DoC event from simulations () generated by Arm A data.

The same results for 10,000 scenarios generated by Arm B data are reported in Table 4.

Table 4.

Results by DoC event from simulations () generated by Arm B data.

Table 5 provides the results in Table 3 and Table 4 aggregated over all the DoC events. These overall results are summarized in the block “DoC imputed”. In the blocks “DoC as DoD” and “DoC as Cen”, the average prediction errors are reported for the two corresponding naïve estimators. The main finding from the simulations is that the CoDMI estimates seem to be essentially unbiased, with a relative prediction error of around for both original data sets considered. Some more extensive (and time-consuming) tests, with or , have shown a further reduction of the error (as well as, obviously, of the corresponding s.e.m.).

Table 5.

Overall results from simulations.

As a final exercise, we used a modified version of the simulation procedure to assess the quality of the adjustment for censoring described in Section 3.4. In the modified simulation, all the true virtual lifetimes were generated assuming a censoring, instead of a DoC, as the endpoint. Then we set and in step 3 of Section 5 we generated in all iterations the virtual lifetimes using the truncated reverse probability distribution, i.e., the distribution obtained by applying the Kaplan–Meier estimator to the reversed data (see (8)). Consistently with this change in assumption, the estimated values in each simulation were obtained by applying the CoDMI algorithm with the final adjustment for censoring, setting at 0 all the probabilities in (9). The overall results from these simulations are summarized in Table 6, which has the same structure as Table 5 and where the results without adjustment are also provided for comparison.

Table 6.

Effect of CoDMI adjustment for censoring when all COVID-19 endpoints are simulated as censored (). Overall results from simulations.

As we can see, the changed assumption on the status of the DoC endpoints produces a large increase in the true life expectancy , but the adjustment for censoring seems to capture this change quite well. Of course, in real life we do not know what the true value of the is, and we will have to try to choose the suitable in (9) based on the probabilities and/or using expert judgment.

6. Conclusions and Directions for Future Research

In the simulated scenarios, where all the virtual endpoints of COVID-19 cases are assumed to be DoD, the results indicate that the CoDMI estimator is roughly unbiased and outperforms the alternative estimates obtained by the naïve approaches. In the opposite extreme situation, where all the virtual endpoints of COVID-19 cases are assumed to be censored, the final adjustment for censoring of CoDMI also guarantees unbiasedness, provided that the information on the status of DoC events is assumed to be known. The non-convergence cases can often be circumvented by relaxing the convergence criterion and/or slightly perturbing the COVID-19 data. Furthermore, changing the initialization of the algorithm can be useful in some cases.

By a natural extension of the binomial assumptions underlying the KM estimator, a version of the classical Greenwood formula can be derived for computing the variance of CoDMI estimates. Equipped with this formula, the CoDMI algorithm is proposed as a complete statistical estimation tool.

As we pointed out in the Introduction, the CoDMI algorithm, compared with the cumulative incidence functions method often used to study competing risks, is a pragmatic approach that allows all standard statistical tools to be applied directly to “augmented” data. However, it remains important to compare the predictive performance of the two approaches. In our applications, where the competing events are DoD and DoC, we do not yet have sufficiently rich data to test the effectiveness (and possibly the necessity) of an approach based on the cumulative incidence functions, or even to test the possibility of using the two methods in conjunction. Therefore, this topic is left for future research.

Another interesting issue is the convergence of the CoDMI algorithm, which is discussed in Section 3.2. A natural way to approach this problem is to study the behavior of the log-likelihood function. However, as we have pointed out, we are not in a fixed time points situation. It is therefore not a trivial task to write explicitly the updated log-likelihood at each iteration step, because the replacements in each step imply a re-ordering of the time points and consequently a change in the number of items at risk in each death probability estimate. This problem is also left for future work.

Author Contributions

F.D.F. and F.M. conceived the basic structure of the paper. F.M. designed the CoDMI algorithm and derived the extended Greenwood’s formula. L.M. realized the simulation study and implemented the CoDMI algorithm in R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Derivation of the Extended Greenwood’s Formula

We organize data in a life table with K time intervals , spanning the interval , each with length . Let us denote by and the hazard rate and the number of DoD events, respectively, in the interval k and by the number of subjects at risk at the end of the interval . In this setting the survival function is defined as , and an estimate for is obtained by plugging in an estimate for , . We make the binomial assumption:

where the parameters and are the DoD and the DoC hazard rate, respectively. In addition to this usual assumption, we express as the random variable:

where, as usual, the random variable is the conditional lifetime and is the time of the j-th observed DoC event. The probability distribution of , however, is not necessarily specified for the moment. Let and .

In order to derive an approximation of the variance of , in the same spirit of Greenwood’s formula we consider the variance of the logarithm:

As for the second equality, it should be noted that the values are not independent, since depends on the events in the previous periods. However, successive conditional independence, given (essentially, a martingale argument), is a sufficient condition for the equality to hold. Now we use the so-called delta-method approximation , to obtain:

Therefore, for the expectation of we obtain:

and for the variance of we have:

or, with a little algebra:

Plugging in and posing , we obtain:

Using the inverse approximation , we finally have:

Now, in the life table we take small enough to make each time interval contain at most one time point . In this limit, if denotes the interval containing , we assume that:

consistently with the fact that, in this setting, has discrete distribution with probability masses in the points . These probabilities are the provided by CoDMI. Then, by (A2) and (11), we obtain:

For the variance, assuming the independence of the we have:

Thus, we estimate by and with . Putting it all together, for the survival function we arrive at the product-limit estimator:

where:

and is computed recursively as:

Correspondingly, (A7) reduces to:

In summary, the addition of the random component in the binomial assumption (A1) has the effect of distributing each of the m COVID-19 deaths, which has been imputed by CoDMI on the time points , on all the uncensored time points according to its truncated distribution . Summing over j we obtain the total probabilities , for which the property holds . In the variance expression (A9) the estimation error of the is taken into account by the additional term containing the variance estimates .

It should be noted, however, that the survival function estimate given by (A8) is slightly different from the estimate given by (1). Since the COVID-19 deaths are spread out on all the time points, one usually has for small t and for large t. One can accept the approximation:

which gives Formula (12). The more conservative approximation:

could also be considered.

References

- De Felice, F.; Moriconi, F. COVID-19 and Cancer: Implications for Survival Analysis. Ann. Surg. Oncol. 2021, 28, 5446–5447.

- Degtyarev, E.; Rufibach, K.; Shentu, Y.; Yung, G.; Casey, M.; Englert, S.; Liu, F.; Liu, Y.; Sailer, O.; Siegel, J.; et al. Assessing the Impact of COVID-19 on the Clinical Trial Objective and Analysis of Oncology Clinical Trials—Application of the Estimand Framework. Stat. Biopharm. Res. 2020, 12, 427–437.

- European Medicines Agency. ICH E9 (R1) Addendum on Estimands and Sensitivity Analysis in Clinical Trials to the Guideline on Statistical Principles for Clinical Trials. Scientific Guideline. Available online: https://www.ema.europa.eu/en/documents/scientific-guideline (accessed on 17 February 2020).

- Kuderer, N.M.; Choueiri, T.K.; Shah, D.P.; Shyr, Y.; Rubinstein, S.M.; Rivera, D.R.; Shete, S.; Hsu, C.Y.; Desai, A.; de Lima Lopes, G., Jr.; et al. Clinical impact of COVID-19 on patients with cancer (CCC19): A cohort study. Lancet 2020, 395, 1907–1918.

- Guan, W.J.; Ni, Z.Y.; Hu, Y.; Liang, W.H.; Ou, C.Q.; He, J.X.; Liu, L.; Shan, H.; Lei, C.L.; Hui, D.S.; et al. Clinical Characteristics of Coronavirus Disease 2019 in China. N. Engl. J. Med. 2020, 382, 1708–1720.

- Kalbfleisch, J.D.; Prentice, R.L. The Statistical Analysis of Failure Time Data; Wiley: Hoboken, NJ, USA, 2002.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–38.

- DeSouza, C.M.; Legedza, A.T.R.; Sankoh, A.J. An Overview of Practical Approaches for Handling Missing Data in Clinical Trials. J. Biopharm. Stat. 2009, 19, 1055–1073.

- Shih, W.J. Problems in dealing with missing data and informative censoring in clinical trials. Curr. Control. Trials Cardiovasc. Med. 2002, 3, 4.

- Shen, P.S.; Chen, C.M. Aalen’s linear model for doubly censored data. Statistics 2018, 52, 1328–1343.

- Willems, S.J.V.; Schat, A.; van Noorden, M.S.; Fiocco, M. Correcting for dependent censoring in routine outcome monitoring data by applying the inverse probability censoring weighted estimator. Stat. Methods Med. Res. 2018, 27, 323–335.

- Gray, R.J. A class of K-sample tests for comparing the cumulative incidence of a competing risk. Ann. Stat. 1988, 16, 1141–1154.

- Kaplan, E.L.; Meier, P. Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 1958, 53, 457–481.

- Efron, B. The two sample problem with censored data. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability; University of California Press: Berkeley, CA, USA, 1967; Volume 4, pp. 831–853.

- Efron, B.; Hastie, T. Computer Age Statistical Inference. Algorithms, Evidence, and Data Science; Cambridge University Press: Cambridge, UK, 2016.

- Pearl, J.; Glymour, M.; Jewell, N.P. Causal Inference in Statistics. A Primer; Wiley: Hoboken, NJ, USA, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).