Sustainability of AI-Assisted Mental Health Intervention: A Review of the Literature from 2020–2025

Abstract

1. Introduction

2. Materials and Methods

3. Results

3.1. Systematic Literature Review

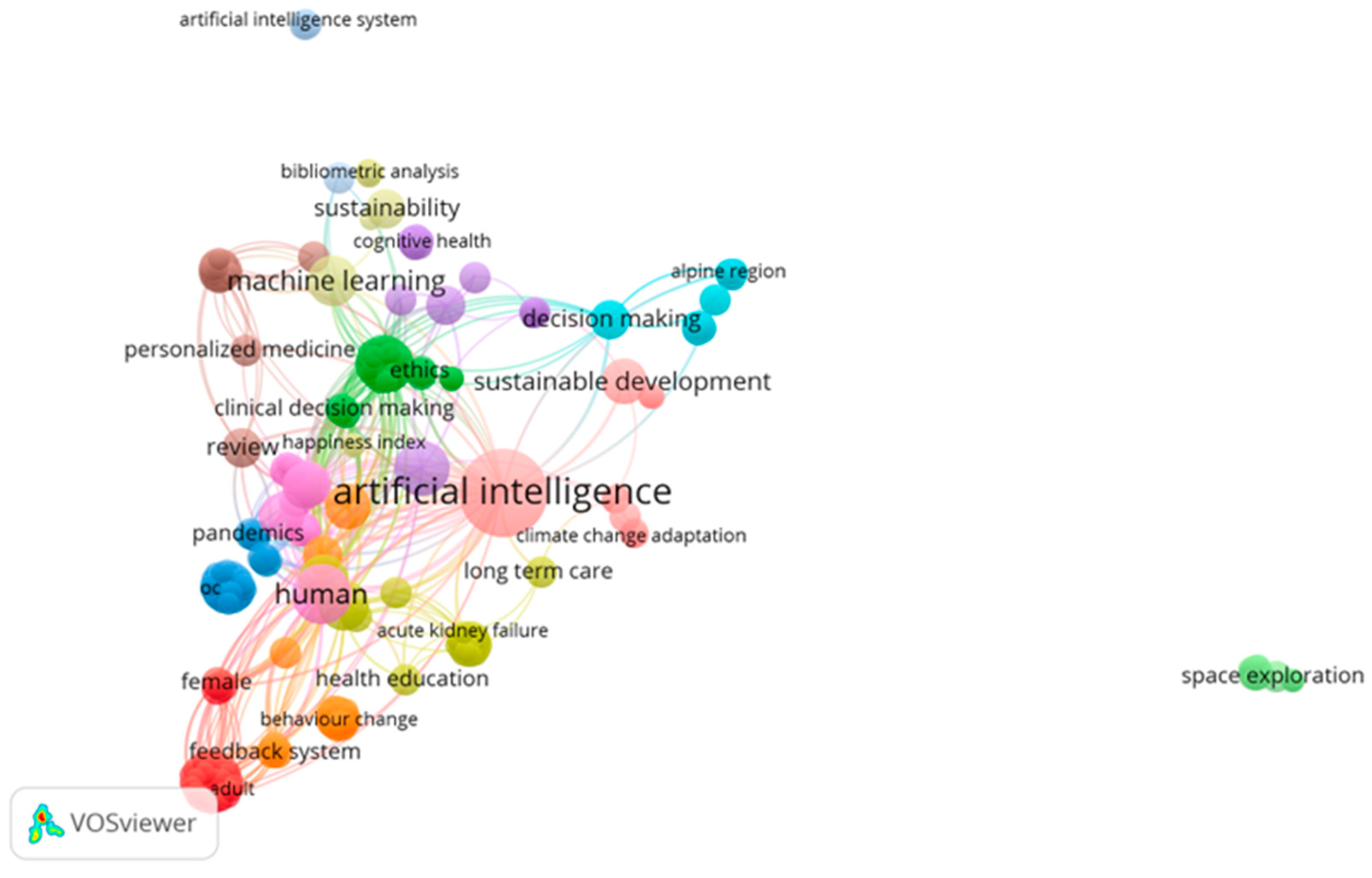

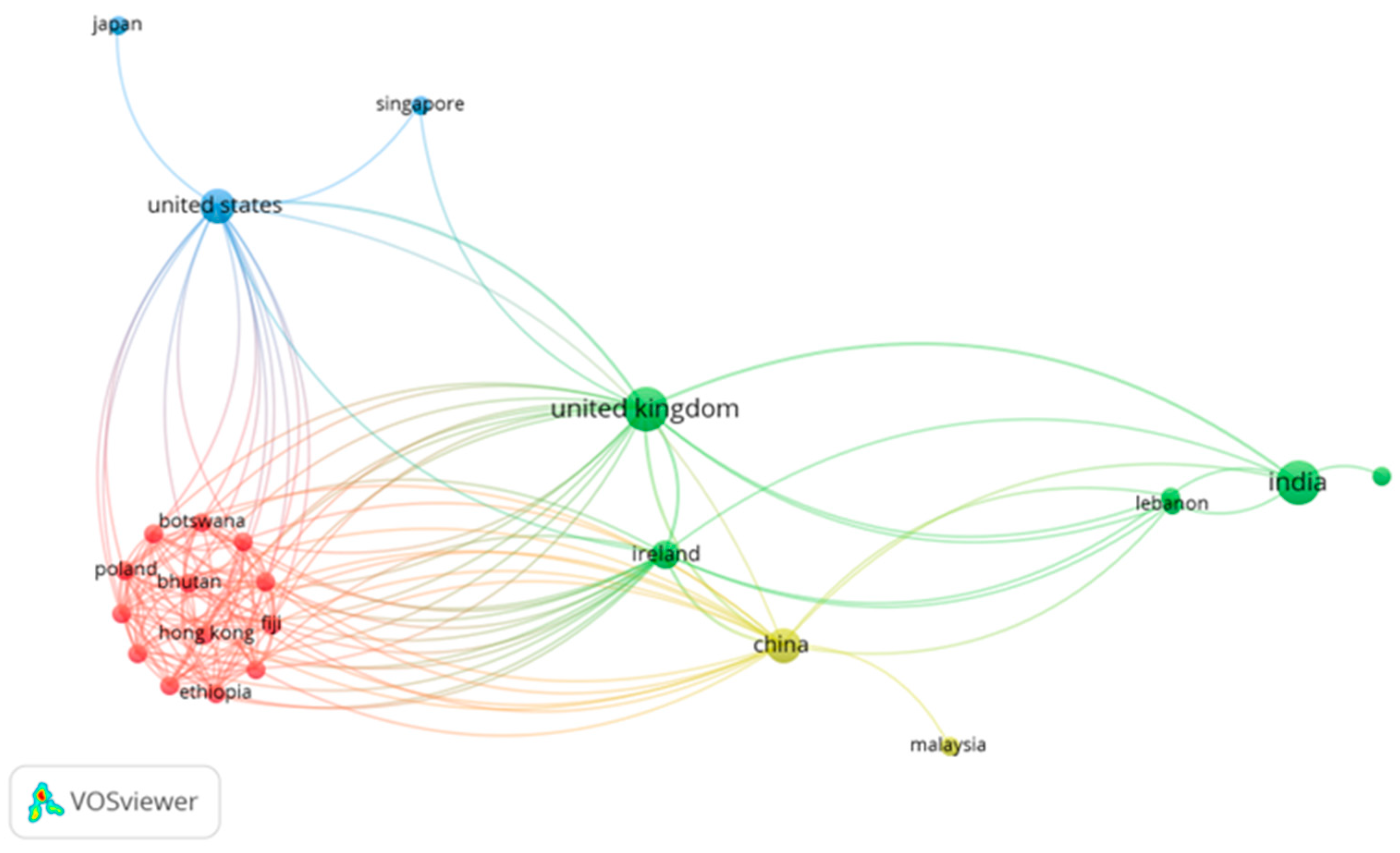

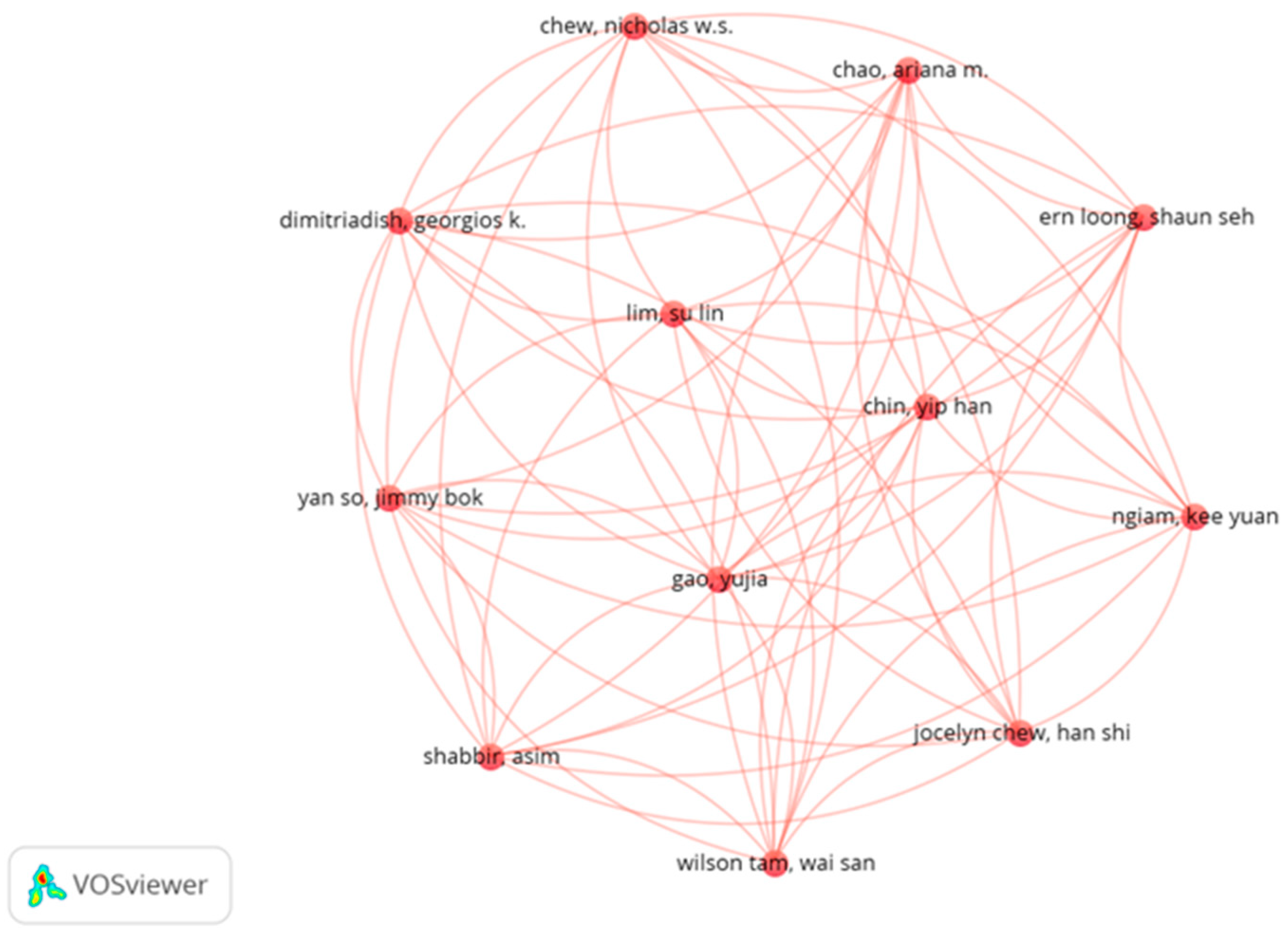

3.2. Bibliometric Analysis of Keywords and Authors

3.3. Descriptive Statistics of Included Studies

4. Discussion

4.1. Ethical Considerations in AI Mental Health Interventions

4.2. Personalization Approaches While Respecting Patient Privacy

4.3. Risk Mitigation Strategies for AI Mental Health Interventions

4.4. Global Implementation Challenges for AI Mental Health Interventions

4.5. Implications for Sustainable Mental Health AI Through Psychological First Aid Integration

4.6. Theoretical and Practical Implications for Global Mental Health Sustainability

4.7. Limitations and Future Research Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Daneshvar, H.; Boursalie, O.; Samavi, R.; Doyle, T.E.; Duncan, L.; Pires, P.; Sassi, R. SOK: Application of machine learning models in child and youth mental health decision-making. In Artificial Intelligence for Medicine: An Applied Reference for Methods and Applications; Elsevier: Amsterdam, The Netherlands, 2024; pp. 113–132.

2. Fiske, A.; Henningsen, P.; Buyx, A. The Implications of Embodied Artificial Intelligence in Mental Healthcare for Digital Wellbeing. In Philosophical Studies; Springer Nature: Cham, Switzerland, 2020; Volume 140, pp. 207–219.

3. Zhang, Y.; Lu, X.; Xiao, J.J. Does financial education help to improve the return on stock investment? Evidence from China. Pac.-Basin Financ. J. 2023, 78, 101940.

4. Alhuwaydi, A.M. Exploring the Role of Artificial Intelligence in Mental Healthcare: Current Trends and Future Directions—A Narrative Review for a Comprehensive Insight. Risk Manag. Healthc. Policy 2024, 17, 1339–1348.

5. Malik, S.; Surbhi, A. Artificial Intelligence in Mental Health landscape: A Qualitative analysis of Ethics and Law. In Proceedings of the AIP Conference Proceedings; Roy, D., Fragulis, G., Eds.; American Institute of Physics: College Park, MD, USA, 2024; Volume 3220.

6. Milne-Ives, M.; Selby, E.; Inkster, B.; Lam, C.; Meinert, E. Artificial intelligence and machine learning in mobile apps for mental health: A scoping review. PLoS Digit. Health 2022, 1, e0000079.

7. Chaudhry, B.M.; Debi, H.R. User perceptions and experiences of an AI-driven conversational agent for mental health support. mHealth 2024, 10, 22.

8. Thakkar, A.; Gupta, A.; De Sousa, A. Artificial intelligence in positive mental health: A narrative review. Front. Digit. Health 2024, 6, 1280235.

9. Campion, J.; Javed, A.; Lund, C.; Sartorius, N.; Saxena, S.; Marmot, M.; Allan, J.; Udomratn, P. Public mental health: Required actions to address implementation failure in the context of COVID-19. Lancet Psychiatry 2022, 9, 169–182.

10. Kagee, A. Designing interventions to ameliorate mental health conditions in resource-constrained contexts: Some considerations. S. Afr. J. Psychol. 2023, 53, 429–437.

11. Cappello, G.; Defeudis, A.; Giannini, V.; Mazzetti, S.; Regge, D. Artificial intelligence in oncologic imaging. In Multimodality Imaging and Intervention in Oncology; Springer International Publishing: Cham, Switzerland, 2023; pp. 585–597.

12. Darda, P.; Pendse, M.K. The impact of artificial intelligence (AI) transformation on the financial sector from the trading to security operations. In Shaping Cutting-Edge Technologies and Applications for Digital Banking and Financial Services; Taylor and Francis: New York, NY, USA, 2025; pp. 322–339.

13. Lexcellent, C. Artificial Intelligence. In SpringerBriefs in Applied Sciences and Technology; Springer Nature: Berlin, Germany, 2019; pp. 5–21.

14. Bastawrous, A.; Cleland, C. Artificial intelligence in eye care: A cautious welcome. Community Eye Health J. 2022, 35, 13.

15. Sloane, E.B.; Silva, R.J. Artificial intelligence in medical devices and clinical decision support systems. In Clinical Engineering Handbook, 2nd ed.; Academic Press: Amsterdam, The Netherlands, 2019; pp. 556–568.

16. Zhang, Y.-B.; Zhang, L.-L.; Kim, H.-K. The effect of UTAUT2 on use intention and use behavior in online learning platform. J. Logist. Inform. Serv. Sci. 2021, 8, 67–81.

17. De Silva, M.J. Making mental health an integral part of sustainable development: The contribution of a social determinants framework. Epidemiol. Psychiatr. Sci. 2015, 24, 100–106.

18. Scorza, P.; Poku, O.; Pike, K.M. Mental health: Taking its place as an essential element of sustainable global development. BJPsych Int. 2018, 15, 72–74.

19. Cabassa, L.J.; Stefancic, A.; Bochicchio, L.; Tuda, D.; Weatherly, C.; Lengnick-Hall, R. Organization leaders’ decisions to sustain a peer-led healthy lifestyle intervention for people with serious mental illness in supportive housing. Transl. Behav. Med. 2021, 11, 1151–1159.

20. Saeidnia, H.R.; Hashemi Fotami, S.G.; Lund, B.; Ghiasi, N. Ethical Considerations in Artificial Intelligence Interventions for Mental Health and Well-Being: Ensuring Responsible Implementation and Impact. Soc. Sci. 2024, 13, 381.

21. Alfano, L.; Malcotti, I.; Ciliberti, R. Psychotherapy, artificial intelligence and adolescents: Ethical aspects. J. Prev. Med. Hyg. 2023, 64, E438–E442.

22. Dhar, S.; Sarkar, U. Safeguarding Data Privacy and Informed Consent: Ethical Imperatives in AI-Driven Mental Healthcare. In Intersections of Law and Computational Intelligence in Health Governance; IGI Global: Hershey, PA, USA, 2024; pp. 197–219.

23. Laacke, S.; Mueller, R.; Schomerus, G.; Salloch, S. Artificial Intelligence, Social Media and Depression. A New Concept of Health-Related Digital Autonomy. Am. J. Bioeth. 2021, 21, 4–20.

24. Fiske, A.; Henningsen, P.; Buyx, A. Your Robot Therapist Will See You Now: Ethical Implications of Embodied Artificial Intelligence in Psychiatry, Psychology, and Psychotherapy. J. Med. Internet Res. 2019, 21, e13216.

25. Timmons, A.C.; Duong, J.B.; Simo Fiallo, N.; Lee, T.; Vo, H.P.Q.; Ahle, M.W.; Comer, J.S.; Brewer, L.C.; Frazier, S.L.; Chaspari, T. A Call to Action on Assessing and Mitigating Bias in Artificial Intelligence Applications for Mental Health. Perspect. Psychol. Sci. 2023, 18, 1062–1096.

26. Pesapane, F.; Summers, P. Ethics and regulations for AI in radiology. In Artificial Intelligence for Medicine: An Applied Reference for Methods and Applications; Elsevier: Amsterdam, The Netherlands, 2024; pp. 179–192.

27. Silveira, P.V.R.; Paravidini, J.L.L. The Ethics of Applying Artificial Intelligence and Chatbots in Mental Health: A Psychoanalytic Perspective. Rev. Pesqui. Qual. 2024, 12, 30.

28. Pardeshi, S.M.; Jain, D.C. AI in mental health federated learning and privacy. In Federated Learning and Privacy-Preserving in Healthcare AI; IGI Global: Hershey, PA, USA, 2024; pp. 274–287.

29. Solaiman, B.; Malik, A.; Ghuloum, S. Monitoring Mental Health: Legal and Ethical Considerations of Using Artificial Intelligence in Psychiatric Wards. Am. J. Law Med. 2023, 49, 250–266.

30. Nashwan, A.J.; Gharib, S.; Alhadidi, M.; El-Ashry, A.M.; Alamgir, A.; Al-Hassan, M.; Khedr, M.A.; Dawood, S.; Abufarsakh, B. Harnessing Artificial Intelligence: Strategies for Mental Health Nurses in Optimizing Psychiatric Patient Care. Issues Ment. Health Nurs. 2023, 44, 1020–1034.

31. Oyebode, O.; Fowles, J.; Steeves, D.; Orji, R. Machine Learning Techniques in Adaptive and Personalized Systems for Health and Wellness. Int. J. Hum.-Comput. Interact. 2023, 39, 1938–1962.

32. Rashid, Z.; Folarin, A.A.; Zhang, Y.; Ranjan, Y.; Conde, P.; Sankesara, H.; Sun, S.; Stewart, C.; Laiou, P.; Dobson, R.J.B. Digital Phenotyping of Mental and Physical Conditions: Remote Monitoring of Patients Through RADAR-Base Platform. JMIR Ment. Health 2024, 11, e51259.

33. Cross, S.; Bell, I.; Nicholas, J.; Valentine, L.; Mangelsdorf, S.; Baker, S.; Titov, N.; Alvarez-Jimenez, M. Use of AI in Mental Health Care: Community and Mental Health Professionals Survey. JMIR Ment. Health 2024, 11, e51259.

34. Mahmud, T.R.; Porntrakoon, P. The Use of AI Chatbots in Mental Healthcare for University Students in Thailand: A Case Study. In Proceedings of the 2023 7th International Conference on Business and Information Management (ICBIM), Bangkok, Thailand, 18–20 August 2023; Institute of Electrical and Electronics Engineers Inc.: Pathum Thani, Thailand, 2023; pp. 48–53.

35. Uygun Ilikhan, S.; Özer, M.; Tanberkan, H.; Bozkurt, V. How to mitigate the risks of deployment of artificial intelligence in medicine? Turk. J. Med. Sci. 2024, 54, 483–492.

36. Solaiman, B. Generative artificial intelligence (GenAI) and decision-making: Legal & ethical hurdles for implementation in mental health. Int. J. Law Psychiatry 2024, 97, 102028.

37. Koutsouleris, N.; Hauser, T.U.; Skvortsova, V.; De Choudhury, M. From promise to practice: Towards the realisation of AI-informed mental health care. Lancet Digit. Health 2022, 4, e829–e840.

38. Auf, H.; Svedberg, P.; Nygren, J.; Nair, M.; Lundgren, L.E. The Use of AI in Mental Health Services to Support Decision-Making: Scoping Review. J. Med. Internet Res. 2025, 27, e63548.

39. Shidhaye, R. Mental health of the Pacific Island Nation communities: What the rest in the world can contribute to and learn from? Australas. Psychiatry 2024, 33, 217–219.

40. Wainberg, M.L.; Scorza, P.; Shultz, J.M.; Helpman, L.; Mootz, J.J.; Johnson, K.A.; Neria, Y.; Bradford, J.-M.E.; Oquendo, M.A.; Arbuckle, M.R. Challenges and Opportunities in Global Mental Health: A Research-to-Practice Perspective. Curr. Psychiatry Rep. 2017, 19, 28.

41. DeSilva, M.; Samele, C.; Saxena, S.; Patel, V.; Darzi, A. Policy actions to achieve integrated community-based mental health services. Health Aff. 2014, 33, 1595–1602.

42. McInnis, M.G.; Merajver, S.D. Global mental health: Global strengths and strategies. Task-shifting in a shifting health economy. Asian J. Psychiatry 2011, 4, 165–171.

43. Zaidan, A.M. The leading global health challenges in the artificial intelligence era. Front. Public Health 2023, 11, 1328918.

44. van der Schyff, E.L.; Ridout, B.; Dip, G.; Amon, K.L.; Forsyth, R.; Cert, G.; Campbell, A.J. Providing Self-Led Mental Health Support Through an Artificial Intelligence–Powered Chat Bot (Leora) to Meet the Demand of Mental Health Care. J. Med. Internet Res. 2023, 25, e46448.

45. Beg, M.J.; Verma, M.; Chanthar, K.M.M.V.; Verma, M.K. Artificial Intelligence for Psychotherapy: A Review of the Current State and Future Directions. Indian J. Psychol. Med. 2024, 47.

46. Rauws, M. The Rise of the Mental Health Chatbot. In Artificial Intelligence in Medicine; Springer International Publishing: Cham, Switzerland, 2022; pp. 1609–1618.

47. Beg, M.J.; Verma, M.K. Exploring the Potential and Challenges of Digital and AI-Driven Psychotherapy for ADHD, OCD, Schizophrenia, and Substance Use Disorders: A Comprehensive Narrative Review. Indian J. Psychol. Med. 2024, 02537176241300569.

48. Almuhanna, H.; Alenezi, M.; Abualhasan, M.; Alajmi, S.; Alfadhli, R.; Karar, A.S. AI Asthma Guard: Predictive Wearable Technology for Asthma Management in Vulnerable Populations. Appl. Syst. Innov. 2024, 7, 78.

49. Bondar, K.M.; Bilozir, O.S.; Shestopalova, O.P.; Hamaniuk, V.A. Bridging minds and machines: AI’s role in enhancing mental health and productivity amidst Ukraine’s challenges. In Proceedings of the CEUR Workshop Proceedings; Semerikov, S.O., Striuk, A.M., Marienko, M.V., Pinchuk, O.P., Eds.; 2025; Volume 3918, pp. 43–59. Available online: https://ceur-ws.org/Vol-3918/paper020.pdf (accessed on 22 February 2025).

50. Bouktif, S.; Khanday, A.M.U.D.; Ouni, A. Explainable Predictive Model for Suicidal Ideation During COVID-19: Social Media Discourse Study. J. Med. Internet Res. 2025, 27, e65434.

51. Farhat, T.; Akram, S.; Rashid, M.; Jaffar, A.; Bhatti, S.M.; Iqbal, M.A. A deep learning-based ensemble for autism spectrum disorder diagnosis using facial images. PLoS ONE 2025, 20, e0321697.

52. Feng, H.-P.; Cheng, Y.; Tzeng, W.-C. Health Equity in Mental Health Care: Challenges for Nurses and Related Preparation. J. Nurs. 2025, 72, 14–20.

53. Freitas, A.; Costa, B.; Martinho, D.; Pais, F.; Duarte, I.; Martins, C.; Marreiros, G.; Almeida, R. A multidisciplinary approach to prevent student anxiety: A Toolkit for educators. In Procedia Computer Science; Martinho, R., Cruz Cunha, M.M., Eds.; Elsevier: Amsterdam, The Netherlands, 2025; Volume 256, pp. 852–860.

54. Gao, Y. The role of artificial intelligence in enhancing sports education and public health in higher education: Innovations in teaching models, evaluation systems, and personalized training. Front. Public Health 2025, 13, 1554911.

55. Huang, C.-K.; Lin, J.-S. Firm Performance on Artificial Intelligence Implementation. Manag. Decis. Econ. 2025, 46, 1856–1870.

56. Irfan, N.; Zafar, S.; Hussain, I. Synergistic Precision: Integrating Artificial Intelligence and Bioactive Natural Products for Advanced Prediction of Maternal Mental Health During Pregnancy. J. Nat. Rem. 2024, 24, 2559–2569.

57. Kant, V.; Govindrao, P.S.; Mahajan, S. Facial Emotion Recognition Using CNNs: Implications for Affective Computing and Surveillance. In Proceedings of the 2024 Second International Conference Computational and Characterization Techniques in Engineering and Sciences (IC3TES), Lucknow, India, 15–16 November 2024.

58. Khare, S.K.; March, S.; Barua, P.D.; Gadre, V.M.; Acharya, U.R. Application of data fusion for automated detection of children with developmental and mental disorders: A systematic review of the last decade. Inf. Fusion 2023, 99, 101898.

59. Khoodoruth, M.A.S.; Khoodoruth, M.A.R.; Khoodoruth, W.N.C.-K. Implementing a collaborative care model for child and adolescent mental health in Qatar: Addressing workforce and access challenges. Asian J. Psychiatry 2025, 103, 104347.

60. Koseoglu, S.C.; Delice, E.K.; Erdebilli, B. Nurse-Task Matching Decision Support System Based on FSPC-HEART Method to Prevent Human Errors for Sustainable Healthcare. Int. J. Comput. Intell. Syst. 2023, 16, 53.

61. Kueper, J.K.; Terry, A.; Bahniwal, R.; Meredith, L.; Beleno, R.; Brown, J.B.; Dang, J.; Leger, D.; McKay, S.; Pinto, A.; et al. Connecting artificial intelligence and primary care challenges: Findings from a multi stakeholder collaborative consultation. BMJ Health Care Inform. 2022, 29, e100493.

62. Lu, G.; Kubli, M.; Moist, R.; Zhang, X.; Li, N.; Gächter, I.; Wozniak, T.; Fleck, M. Tough Times, Extraordinary Care: A Critical Assessment of Chatbot-Based Digital Mental Healthcare Solutions for Older Persons to Fight Against Pandemics Like COVID-19. In Proceedings of the Sixth International Congress on Information and Communication Technology; Lecture Notes in Networks and Systems; Yang, X., Sherratt, S., Dey, N., Joshi, A., Eds.; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2022; Volume 235, pp. 735–743.

63. Lucero-Prisno, D.E.; Shomuyiwa, D.O.; Kouwenhoven, M.B.N.; Dorji, T.; Adebisi, Y.A.; Odey, G.O.; George, N.S.; Ajayi, O.T.; Ekerin, O.; Manirambona, E.; et al. Top 10 Public Health Challenges for 2024: Charting a New Direction for Global Health Security. Public Health Chall. 2025, 4, e70022.

64. Maglogiannis, I.; Trastelis, F.; Kalogeropoulos, M.; Khan, A.; Gallos, P.; Menychtas, A.; Panagopoulos, C.; Papachristou, P.; Islam, N.; Wolff, A.; et al. AI4Work Project: Human-Centric Digital Twin Approaches to Trustworthy AI and Robotics for Improved Working Conditions in Healthcare and Education Sectors. Stud. Health Technol. Inform. 2024, 316, 1013–1017.

65. Maguraushe, K.; Ndayizigamiye, P.; Bokaba, T. A Systematic Review on the Use of AI-Powered Cloud Computing for Healthcare Resilience. In Emerging Technologies for Developing Countries. AFRICATEK 2023; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Masinde, M., Möbs, S., Bagula, A., Eds.; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2024; Volume 520, pp. 126–141.

66. Mari, J.J.; Kapczinski, F.; Brunoni, A.R.; Gadelha, A.; Prates-Baldez, D.; Miguel, E.C.; Scorza, F.A.; Caye, A.; Quagliato, L.A.; De Boni, R.B.; et al. The S20 Brazilian Mental Health Report for building a just world and a sustainable planet: Part II. Braz. J. Psychiatry 2024, 46, e20243707.

67. Njoroge, W.; Maina, R.; Elena, F.; Atwoli, L.; Wu, Z.; Ngugi, A.K.; Sen, S.; Wang, J.L.; Wong, S.; Baker, J.A.; et al. Use of mobile technology to identify behavioral mechanisms linked to mental health outcomes in Kenya: Protocol for development and validation of a predictive model. BMC Res. Notes 2023, 16, 226.

68. Occhipinti, J.-A.; Skinner, A.; Doraiswamy, P.M.; Saxena, S.; Eyre, H.A.; Hynes, W.; Geli, P.; Jeste, D.V.; Graham, C.; Song, C.; et al. The influence of economic policies on social environments and mental health. Bull. World Health Organ. 2024, 102, 323–329.

69. Putri Syarifa, D.F.; Krisna Moeljono, A.A.; Hidayat, W.N.; Shafelbilyunazra, A.; Abednego, V.K.; Prasetya, D.D. Development of a Micro Counselling Educational Platform Based on AI and Face Recognition to Prevent Students Anxiety Disorder. In Proceedings of the 2024 International Conference on Electrical and Information Technology (IEIT), Da Nang, Vietnam, 29 July–2 August 2024; pp. 1–6.

70. Sadasivuni, S.T.; Zhang, Y. A New Method for Discovering Daily Depression from Tweets to Monitor Peoples Depression Status. In Proceedings of the 2020 IEEE International Conference on Humanized Computing and Communication with Artificial Intelligence (HCCAI), Irvine, CA, USA, 21 September 2020; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2020; pp. 47–50.

71. Saltzman, L.Y.; Hansel, T.C. Child and Adolescent Trauma Response Following Climate-Related Events: Leveraging Existing Knowledge With New Technologies. Traumatology 2024.

72. Stitini, O.; Kaloun, S. Towards a Multi-Objective and Contextual Multi-Criteria Recommender System for Enhancing User Well-Being in Sustainable Smart Homes. Electronics 2025, 14, 809.

73. Sujanthi, S.; Abishek Raghavan, V.B.; Poovizhi, M.; Kayathri, T.L.; Balaji, P.; Hariharan, M. Smart Wellness: AI-Driven IoT and Wearable Sensors for Enhanced Workplace Well-Being. In Proceedings of the 2025 International Conference on Multi-Agent Systems for Collaborative Intelligence (ICMSCI), Chicago, IL, USA, 20–22 January 2025; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2025; pp. 502–509.

74. Villegas-Ch, W.; Govea, J.; Revelo-Tapia, S. Improving Student Retention in Institutions of Higher Education through Machine Learning: A Sustainable Approach. Sustainability 2023, 15, 14512.

75. Vyas, S.; Kumari, A. AI Application in Achieving Sustainable Development Goal Targeting Good Health and Well-Being: A New Holistic Paradigm. In Control and Information Sciences. CISCON 2018; Lecture Notes in Electrical Engineering; George, V.I., Santhosh, K.V., Lakshminarayanan, S., Eds.; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2024; pp. 75–96.

76. Xie, C.; Wang, R.; Gong, X. The influence of environmental cognition on green consumption behavior. Front. Psychol. 2022, 13, 988585.

77. Yadav, G.; Bokhari, M.U. Comparative Study of Mental Stress Detection through Machine Learning Techniques. In Proceedings of the 2023 10th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 15–17 March 2023; pp. 1662–1666. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85159561342&partnerID=40&md5=cf4521fa4f42f7e5de110e9313c53f5c (accessed on 22 February 2025).

78. Albertini, M.; Bei, E. The Potential for AI to the Monitoring and Support for Caregivers: An Urgent Tech-Social Challenge. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85184517637&partnerID=40&md5=aa27c6ce302a1f051d77807412624f01 (accessed on 22 February 2025).

79. Barbazzeni, B.; Haider, S.; Friebe, M. Engaging Through Awareness: Purpose-Driven Framework Development to Evaluate and Develop Future Business Strategies With Exponential Technologies Toward Healthcare Democratization. Front. Public Health 2022, 10, 851380.

80. Bauman, M.J.; Salomon, E.; Walsh, J.; Sullivan, R.; Boxer, K.S.; Naveed, H.; Helsby, J.; Schneweis, C.; Lin, T.-Y.; Haynes, L.; et al. Reducing incarceration through prioritized interventions. In Proceedings of the 1st ACM SIGCAS Conference on Computing and Sustainable Societies, San Jose, CA, USA, 20–22 June 2018.

81. Bhawra, J.; Skinner, K.; Favel, D.; Green, B.; Coates, K.; Katapally, T.R. The food equity and environmental data sovereignty (FEEDS) project: Protocol for a quasi-experimental study evaluating a digital platform for climate change preparedness. JMIR Res. Protoc. 2021, 10, e31389.

82. Caddle, X.V.; Razi, A.; Kim, S.; Ali, S.; Popo, T.; Stringhini, G.; De Choudhury, M.; Wisniewski, P.J. MOSafely: Building an Open-Source HCAI Community to Make the Internet a Safer Place for Youth. In Proceedings of the Companion Publication of the 2021 Conference on Computer Supported Cooperative Work and Social Computing, Virtual Event, 23–27 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 315–318.

83. Cahill, J.; Howard, V.; Huang, Y.; Ye, J.; Ralph, S.; Dillon, A. Intelligent Work: Person Centered Operations, Worker Wellness and the Triple Bottom Line. In HCI International 2021—Posters. HCII 2021; Communications in Computer and Information Science; Stephanidis, C., Antona, M., Ntoa, S., Eds.; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2021; Volume 1421, pp. 307–314.

84. Carroll, M.; James, J.A.; Lardiere, M.R.; Proser, M.; Rhee, K.; Sayre, M.H.; Shore, J.H.; Ternullo, J. Innovation networks for improving access and quality across the healthcare ecosystem. Telemed. e-Health 2010, 16, 107–111.

85. Casillo, M.; Cecere, L.; Dembele, S.P.; Lorusso, A.; Santaniello, D.; Valentino, C. The Metaverse and Revolutionary Perspectives for the Smart Cities of the Future. In Proceedings of the Ninth International Congress on Information and Communication Technology. ICICT 2024; Lecture Notes in Networks and Systems; Yang, X.-S., Sherratt, S., Dey, N., Joshi, A., Eds.; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2024; pp. 215–225.

86. Chang, C.-L.; Chang, C.-R.; Chuang, T.-W.; Wang, Y.-H.; Hou, C.-C.; Chen, H.-J.; Chuang, F.-C. An Efficiency Analysis of Artificial Intelligence Medical Equipment for Civil Use. In Proceedings of the 2020 IEEE 2nd Eurasia Conference on Biomedical Engineering, Healthcare and Sustainability (ECBIOS), Tainan, Taiwan, 29–31 May 2020; Meen, T.-H., Ed.; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2020; pp. 116–118.

87. Hazra-Ganju, A.; Dlima, S.D.; Menezes, S.R.; Ganju, A.; Mer, A. An omni-channel, outcomes-focused approach to scale digital health interventions in resource-limited populations: A case study. Front. Digit. Health 2023, 5, 1007687.

88. Hsu, M.-C. The Construction of Critical Factors for Successfully Introducing Chatbots into Mental Health Services in the Army: Using a Hybrid MCDM Approach. Sustainability 2023, 15, 7905.

89. Kurolov, M.O. A systematic mapping study of using digital marketing technologies in health care: The state of the art of digital healthcare marketing. In ICFNDS’22: Proceedings of the 6th International Conference on Future Networks & Distributed Systems, Tashkent, Uzbekistan, 15 December 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 318–323.

90. Molan, G.; Molan, M. Sustainable level of human performance with regard to actual availability in different professions. Work 2020, 65, 205–213.

91. Murtiningsih, S.; Sujito, A. Reimagining the future of education: Inclusive pedagogies, critical intergenerational justice, and technological disruption. Policy Futures Educ. 2025, 14782103251341406.

92. Maria, S.; Rachmawaty, A.; Aini, R.N. Artificial Intelligence and Labour Markets: Analyzing Job Displacement and Creation. Int. J. Eng. Sci. Inf. Technol. 2024, 4, 284–290. Available online: https://ijesty.org/index.php/ijesty/article/view/830/0 (accessed on 25 June 2025).

93. Shabu, K.; Priya, K.M.; Mageswari, S.S.; Prabhash, N.; Hari, A. Shaping the gig economy: Insights into management, technology, and workforce dynamics. Int. J. Account. Econ. Stud. 2025, 12, 92–103.

94. Tariq, A.; Awan, M.J.; Alshudukhi, J.; Alam, T.M.; Alhamazani, K.T.; Meraf, Z. Software Measurement by Using Artificial Intelligence. J. Nanomater. 2022, 2022, 7283171.

95. Wang, L.; Shao, Z.Y.; Pan, C.Y.; Kong, L.K.; Tan, X.; Liu, H.N.; Huang, P.X.; Shen, Y.H.; Wu, J.L.; Wang, Y.F. Research on evaluation indicator system and intelligent monitoring framework for cultural services in community parks: A case study of Guanggang Park in Guangzhou, China. Acta Ecol. Sin. 2025, 45, 4599–4613. Available online: http://dx.doi.org/10.20103/j.stxb.202501080069 (accessed on 25 June 2025).

96. Wang, Y.-H.; Chuang, F.-C.; Chang, C.-R.; Chuang, T.-W.; Yeh, H.-W.; Chang, C.-L. A Study on Public Acceptability of Traditional Chinese Medicine Meridian Instruments. In Proceedings of the 2020 IEEE 2nd Eurasia Conference on Biomedical Engineering, Healthcare and Sustainability (ECBIOS), Tainan, Taiwan, 29–31 May 2020; pp. 105–107.

97. Yang, Y.Q.; Zhao, Y.H.; Guo, J.Q. Visualization and analysis of the integration mechanism of artificial intelligence-enabled sports development and ecological environment protection. Mol. Cell. Biomech. 2024, 21, 697.

98. Yu, Y. The role of psycholinguistics for language learning in teaching based on formulaic sequence use and oral fluency. Front. Psychol. 2022, 13, 1012225.

99. Bhat, T.F.; Bhat, R.A.; Tramboo, I.A.; Antony, S. Surveilled Selves and Silenced Voices: A Linguistic and Gendered Critique of Privacy Invasion in Marie Lu’s Warcross. Forum Linguist. Stud. 2025, 7, 437–448.

100. Anwar, M.A.; Ahmad, S.S. Use of artificial intelligence in medical sciences. In Proceedings of the 28th International Business Information Management Association Conference—Vision 2020: Innovation Management, Development Sustainability, and Competitive Economic Growth, Seville, Spain, 9–10 November 2016; pp. 415–422. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85013963990&partnerID=40&md5=a90276682ce72f0e28a5eb5e4b4b91c0 (accessed on 22 February 2025).

101. Bai, S.; Zhang, J. Management and information disclosure of electric power environmental and social governance issues in the age of artificial intelligence. Comput. Electr. Eng. 2022, 104, 108390.

102. Yang, D. EFL teacher’s integrated key competency cultivation mode in western rural areas from the socio-cognitive perspective. World J. English Lang. 2020, 10, 14–19.

| Database | Complete Search String | Filters Applied | Search Date | Date Range | Documents |
|---|---|---|---|---|---|
| Scopus | TITLE-ABS-KEY ((“artificial intelligence” OR “AI” OR “machine learning” OR “deep learning”) AND (“mental health” OR “psychological health” OR “psychiatric care” OR “behavioral health”) AND (“sustainable” OR “sustainability” OR “intervention” OR “therapy” OR “treatment”)) AND PUBYEAR > 2019 AND PUBYEAR < 2026 | Language: English; Document type: Article, Review; Subject area: Medicine, Psychology, Computer Science | 15 January 2025 | 2020–2025 | 467 |
| Web of Science | TI = ((“artificial intelligence” OR “AI” OR “machine learning” OR “deep learning”) AND (“mental health” OR “psychological health” OR “psychiatric care” OR “behavioral health”)) AND TS = (“sustainable” OR “sustainability” OR “intervention” OR “therapy” OR “treatment”) AND PY = (2020–2025) | Language: English; Document types: Article, Review; Web of Science Categories: Psychology, Computer Science, Health Care Sciences | 16 January 2025 | 2020–2025 | 386 |
| ScienceDirect | TITLE ((“artificial intelligence” OR “AI” OR “machine learning” OR “deep learning”) AND (“mental health” OR “psychological health” OR “behavioral health”)) AND ALL (“sustainable” OR “sustainability” OR “intervention” OR “therapy”) AND YEAR (2020–2025) | Content type: Research articles, Review articles; Subject areas: Psychology, Computer Science, Medicine | 17 January 2025 | 2020–2025 | 561 |
| Dimensions | title: ((“artificial intelligence” OR “AI” OR “machine learning” OR “deep learning”) AND (“mental health” OR “psychological health” OR “psychiatric care”)) AND (title: (“sustainable” OR “intervention” OR “therapy”) OR abstract: (“sustainable” OR “intervention” OR “therapy”)) AND year: [2020 TO 2025] | Publication type: Article; Language: English; Field of Research: Psychology, Computer Science, Clinical Sciences | 18 January 2025 | 2020–2025 | 238 |
| Total documents | | | 15–18 January 2025 | 2020–2025 | 1652 |
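The Scopus string in the search-strategy table follows a common three-block pattern: synonyms within one concept are joined with OR, and the concept blocks are joined with AND. The Python sketch below rebuilds that string from term lists and checks that the per-database counts add up to the reported total. The helper names (`or_block`, `build_scopus_query`) are hypothetical and not part of the authors' protocol, and straight quotes stand in for the typographic quotes shown in the table.

```python
# Concept blocks copied from the Scopus row of the search-strategy table.
AI_TERMS = ["artificial intelligence", "AI", "machine learning", "deep learning"]
MH_TERMS = ["mental health", "psychological health", "psychiatric care", "behavioral health"]
OUTCOME_TERMS = ["sustainable", "sustainability", "intervention", "therapy", "treatment"]

# Documents retrieved per database, as reported in the table.
COUNTS = {"Scopus": 467, "Web of Science": 386, "ScienceDirect": 561, "Dimensions": 238}


def or_block(terms: list[str]) -> str:
    """Quote each synonym, join with OR, and parenthesize (hypothetical helper)."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_scopus_query(first_year: int, last_year: int) -> str:
    """AND the concept blocks inside TITLE-ABS-KEY and add a publication-year window."""
    concepts = " AND ".join(or_block(b) for b in (AI_TERMS, MH_TERMS, OUTCOME_TERMS))
    # Strict inequalities, so the window is widened by one year on each side.
    return (
        f"TITLE-ABS-KEY ({concepts}) "
        f"AND PUBYEAR > {first_year - 1} AND PUBYEAR < {last_year + 1}"
    )


if __name__ == "__main__":
    print(build_scopus_query(2020, 2025))
    print("Total retrieved:", sum(COUNTS.values()))
```

Keeping the term lists separate from the query syntax makes it easier to check that the same concept blocks were used across databases, even though each database wraps them in different field tags.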

| Main Category | Subcategory | No. of Articles | Representative Studies |
|---|---|---|---|
| 1. Ethical Considerations | Privacy and Confidentiality | 9 | [20,21,22] |
| | Informed Consent | 5 | [22,23] |
| | Bias and Fairness | 6 | [24,25] |
| | Transparency and Accountability | 7 | [24,26] |
| | Human Oversight | 3 | [3,27] |
| 2. Personalization Approaches | Federated Learning | 4 | [28,29] |
| | AI-Enhanced Therapeutic Interventions | 8 | [30,31] |
| | Secure Technological Solutions | 6 | [32,33] |
| | Patient-Centered Design | 4 | [21,34] |
| 3. Risks and Mitigation Strategies | Data Security | 7 | [5,35] |
| | Algorithmic Bias | 5 | [20,25] |
| | Clinical Efficacy | 6 | [33,36] |
| | Healthcare Integration | 4 | [3,30] |
| 4. Implementation Challenges | Technical Infrastructure | 5 | [37,38] |
| | Cultural Adaptation | 4 | [39,40] |
| | Resource Allocation | 4 | [41,42] |
| | Evidence and Evaluation | 3 | [43,44] |
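The main-category totals implied by the subcategory counts above can be checked with a short tally. In this minimal sketch the counts are transcribed from the table; because the table does not state whether one article can be assigned to more than one subcategory, the grand total may count subcategory assignments rather than distinct articles.

```python
# Subcategory article counts transcribed from the table above.
subcategory_counts = {
    "Ethical Considerations": {
        "Privacy and Confidentiality": 9,
        "Informed Consent": 5,
        "Bias and Fairness": 6,
        "Transparency and Accountability": 7,
        "Human Oversight": 3,
    },
    "Personalization Approaches": {
        "Federated Learning": 4,
        "AI-Enhanced Therapeutic Interventions": 8,
        "Secure Technological Solutions": 6,
        "Patient-Centered Design": 4,
    },
    "Risks and Mitigation Strategies": {
        "Data Security": 7,
        "Algorithmic Bias": 5,
        "Clinical Efficacy": 6,
        "Healthcare Integration": 4,
    },
    "Implementation Challenges": {
        "Technical Infrastructure": 5,
        "Cultural Adaptation": 4,
        "Resource Allocation": 4,
        "Evidence and Evaluation": 3,
    },
}

# Sum the subcategories within each main category, then across categories.
category_totals = {category: sum(subs.values()) for category, subs in subcategory_counts.items()}
grand_total = sum(category_totals.values())

for category, n in category_totals.items():
    print(f"{category}: {n}")
print(f"All categories: {grand_total}")
```

Running it gives 30, 22, 22, and 16 articles for the four main categories, 90 in total.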

| Main Category | Authors’ Contribution | References |
|---|---|---|
| Ethical Considerations | Privacy and Confidentiality: Patient data security is paramount in AI mental health interventions. Studies emphasize the need for robust data encryption, secure storage protocols, and transparent data handling practices. Because mental health data are especially sensitive, they require stricter protection than general health information. | [20,21,22,24,45] |
| | Informed Consent and Transparency: Genuine informed consent requires clear communication about how AI systems use patient data, what their limitations are, and what risks they carry. Studies highlight the importance of ongoing consent processes and of explanations of AI functionality that are accessible at different literacy levels. | [20,21,22,23] |
| | Bias and Fairness: AI systems can perpetuate existing health disparities if they are not carefully designed. Research emphasizes the need for diverse training datasets, regular bias audits, and fairness metrics specific to mental health applications. Cultural diversity in AI design emerges as particularly important in mental health contexts. | [8,20,24,25] |
| | Human Oversight and Accountability: Maintaining clinician involvement in AI-supported care is essential. Studies recommend clear accountability frameworks, regular ethical reviews, and systematic approaches to detecting and correcting errors. The therapeutic relationship remains central despite technological advances. | [3,24,27,30] |
| Personalization Approaches | Federated Learning: This approach lets AI models learn from distributed data without centralizing sensitive information, thereby enhancing privacy. Studies show that federated learning can improve diagnostic accuracy while maintaining robust data protection, which is especially important for personalized interventions in diverse populations. | [22,28,29] |
| | AI-Enhanced Therapeutic Interventions: AI-powered tools such as chatbots and virtual assistants show effectiveness in providing personalized support for conditions including depression and anxiety. Research highlights the importance of user-friendly interfaces and of adapting content delivery to individual progress and preferences. | [30,31,34,46,47] |
| | Secure Technological Solutions: Research describes platforms such as RADAR-base that support remote data collection with built-in security protocols. Explainable AI techniques increase transparency and trust while preserving personalization capabilities. Privacy-by-design principles emerge as best practice. | [29,31,32,33] |
| | Patient-Centered Design: Studies emphasize involving patients in the design of AI systems to ensure relevance and acceptability. Patient preferences regarding the balance between personalization and privacy protection vary substantially, highlighting the need for flexible approaches. | [21,33,34,44] |
| Risks and Mitigation Strategies | Data Security Risks and Protections: AI systems face potential vulnerabilities, including unauthorized access and data breaches. Research recommends robust encryption, regular security audits, and compliance with evolving data protection regulations. Explicit consent processes also need continuous improvement. | [5,20,33,35] |
| | Algorithmic Bias and Fairness Measures: Studies document how AI algorithms can inherit biases present in training data, potentially leading to disparities in care. Recommended mitigation strategies include diverse datasets, bias detection tools, and regular fairness audits that pay particular attention to cultural factors. | [8,20,24,25] |
| | Clinical Efficacy and Safety Concerns: Research identifies risks of misdiagnosis or inappropriate treatment recommendations from AI systems. Rigorous clinical validation, continuous performance monitoring, and integration with clinical workflows are recommended to ensure safety and effectiveness. | [21,30,33,35,36] |
| | Human-AI Integration: Overreliance on technology may diminish the therapeutic relationship. Studies emphasize using AI to augment rather than replace human clinicians, with clear protocols for determining appropriate levels of automation. | [3,24,30,33] |
| Implementation Challenges | Technical Infrastructure Requirements: Implementing AI systems requires substantial computational resources and technical expertise. Studies highlight the challenges of integrating AI with existing healthcare systems, especially in resource-limited settings, and recommend phased implementation that takes careful account of local capacity. | [37,38,42,43] |
| | Cultural and Contextual Adaptation: AI interventions developed in Western contexts may not be appropriate in other cultural settings. Research emphasizes the need for cultural adaptation of AI systems, including language modifications, cultural relevance assessments, and engagement with local stakeholders to ensure acceptability. | [8,39,40,43] |
| | Resource Allocation and Sustainability: Successful AI implementation requires significant financial and human resources. Studies recommend strategic resource allocation, capacity-building initiatives, and sustainable funding models, particularly for low- and middle-income countries that already face mental health resource constraints. | [39,40,41,42] |
| | Evidence Requirements and Evaluation Frameworks: Robust evidence on the effectiveness of AI interventions in real-world settings is critically needed. Research calls for rigorous evaluation methodologies, including randomized controlled trials, implementation science approaches, and long-term outcome studies of AI mental health tools. | [37,38,43,44] |
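The federated learning entry above can be illustrated with a minimal sketch in the style of federated averaging (FedAvg), one common federated learning scheme; the reviewed studies do not necessarily use this exact algorithm. Each hypothetical site (for example, a clinic) runs a few gradient-descent steps of a one-variable linear model on its own records, and only the resulting parameters are sent to a server, which averages them weighted by each site's sample size. The model, learning rate, number of local epochs, round count, and the synthetic data drawn from y = 2x + 1 are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """One hypothetical data holder (e.g., a clinic); its records never leave it."""
    xs: list[float]
    ys: list[float]


def local_update(w: float, b: float, site: Site, lr: float = 0.05, epochs: int = 20) -> tuple[float, float]:
    """Full-batch gradient descent on the site's mean squared error, starting from the global parameters."""
    n = len(site.xs)
    for _ in range(epochs):
        residuals = [w * x + b - y for x, y in zip(site.xs, site.ys)]
        grad_w = 2 / n * sum(r * x for r, x in zip(residuals, site.xs))
        grad_b = 2 / n * sum(residuals)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_round(w: float, b: float, sites: list[Site]) -> tuple[float, float]:
    """Server step: average the locally updated parameters, weighted by sample count."""
    total = sum(len(s.xs) for s in sites)
    new_w = new_b = 0.0
    for s in sites:
        local_w, local_b = local_update(w, b, s)  # only parameters are returned, never raw data
        new_w += len(s.xs) / total * local_w
        new_b += len(s.xs) / total * local_b
    return new_w, new_b


def train(sites: list[Site], rounds: int = 100) -> tuple[float, float]:
    """Repeat federated rounds starting from zero-initialized global parameters."""
    w, b = 0.0, 0.0
    for _ in range(rounds):
        w, b = federated_round(w, b, sites)
    return w, b


# Synthetic, noise-free data from y = 2x + 1, split unevenly across three sites.
sites = [
    Site([0.0, 0.5, 1.0], [1.0, 2.0, 3.0]),
    Site([1.5, 2.0], [4.0, 5.0]),
    Site([0.2, 0.8, 1.2, 1.8], [1.4, 2.6, 3.4, 4.6]),
]
w, b = train(sites)
```

Because every site's noise-free data share the same optimum, the averaged parameters converge to approximately w = 2 and b = 1. Sharing parameters instead of raw records reduces, but does not eliminate, privacy risk; deployments often add secure aggregation or differential privacy on top.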
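The data security entries above call for encryption, secure storage, and transparent data handling. Encryption itself should rely on a vetted cryptographic library, so as a complementary, standard-library-only illustration the following sketch shows a different technique, keyed pseudonymization: direct identifiers are dropped and the patient ID is replaced by an HMAC-SHA256 pseudonym. The record fields (`patient_id`, `name`, `email`, `phone`, `phq9_score`) and the function names are hypothetical.

```python
import hashlib
import hmac
import secrets

DIRECT_IDENTIFIERS = ("name", "email", "phone")  # hypothetical field names


def pseudonymize(patient_id: str, key: bytes) -> str:
    """HMAC-SHA256 pseudonym: the same ID and key always give the same value,
    but without the key pseudonyms cannot be recomputed from known IDs."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict, key: bytes) -> dict:
    """Drop direct identifiers and replace the patient ID with a keyed pseudonym."""
    cleaned = {
        field: value
        for field, value in record.items()
        if field not in DIRECT_IDENTIFIERS and field != "patient_id"
    }
    cleaned["pseudonym"] = pseudonymize(record["patient_id"], key)
    return cleaned


# In practice the key would be held in a key-management system, stored apart from the data.
key = secrets.token_bytes(32)
record = {"patient_id": "P-0001", "name": "Jane Doe", "email": "jane@example.org", "phq9_score": 14}
safe_record = deidentify(record, key)
```

Pseudonymized data can still be re-identified through linkage with other sources, and regulations such as the GDPR generally continue to treat them as personal data, so this complements rather than replaces encryption and access control.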

Engagement Outcomes

| Categories and Subcategories | Technical | Clinical | Ethical |
|---|---|---|---|
| Ethical Considerations | | | |
| Privacy and Confidentiality | ✓ Security protocols | ✓ Therapeutic relationship | ✓ Data rights |
| Informed Consent | | ✓ Clinical communication | ✓ Autonomy |
| Bias and Fairness | ✓ Algorithm design | | ✓ Health equity |
| Human Oversight | | ✓ Clinical judgment | ✓ Accountability |
| Personalization Approaches | | | |
| Federated Learning | ✓ Distributed computing | | ✓ Privacy preservation |
| AI-Enhanced Therapeutic Interventions | ✓ Adaptive algorithms | ✓ Therapeutic delivery | ✓ Support ethics |
| Secure Technological Solutions | ✓ Data architecture | ✓ Clinical integration | ✓ Confidentiality |
| Patient-Centered Design | | ✓ Therapeutic needs | ✓ Empowerment |
| Risks and Mitigation Strategies | | | |
| Data Security | ✓ Security testing | ✓ Clinical governance | ✓ Data protection |
| Algorithmic Bias | ✓ Bias detection | ✓ Clinical validation | ✓ Fairness |
| Clinical Efficacy | ✓ Performance metrics | ✓ Evidence-based practice | ✓ Safety assurance |
| Healthcare Integration | ✓ Workflow alignment | ✓ Role definition | |
| Implementation Challenges | | | |
| Technical Infrastructure | ✓ System requirements | ✓ Healthcare compatibility | |
| Cultural Adaptation | | ✓ Cultural competence | ✓ Contextual ethics |
| Resource Allocation | ✓ Technical resources | ✓ Human resources | |
| Evidence and Evaluation | ✓ Performance metrics | ✓ Clinical metrics | ✓ Ethical compliance |
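The "Bias detection" and "Fairness" entries above, together with the regular fairness audits recommended in the Authors’ Contribution table, can be made concrete with a minimal group-fairness check. This sketch computes, for each demographic group, the selection rate (share of cases a model flags) and the true positive rate (share of actual cases it flags), then reports the largest between-group gap, which corresponds to the demographic parity difference and the equal opportunity difference. These two metrics are common choices picked for illustration; the reviewed studies may use others. The toy labels, group names, and the 0.1 alert threshold are hypothetical.

```python
import math
from collections import defaultdict


def group_rates(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, dict[str, float]]:
    """Per-group selection rate P(pred=1) and true positive rate P(pred=1 | true=1)."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "actual_pos": 0, "true_pos": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += p
        c["actual_pos"] += t
        c["true_pos"] += t * p  # 1 only when the case is positive and flagged
    return {
        g: {
            "selection_rate": c["pred_pos"] / c["n"],
            "tpr": c["true_pos"] / c["actual_pos"] if c["actual_pos"] else math.nan,
        }
        for g, c in counts.items()
    }


def max_gap(rates: dict[str, dict[str, float]], metric: str) -> float:
    """Largest between-group difference for one metric, ignoring undefined (NaN) values."""
    values = [r[metric] for r in rates.values() if not math.isnan(r[metric])]
    return max(values) - min(values)


# Hypothetical screening results: 1 = condition present / case flagged by the model.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
parity_gap = max_gap(rates, "selection_rate")  # demographic parity difference
opportunity_gap = max_gap(rates, "tpr")        # equal opportunity difference
THRESHOLD = 0.1  # hypothetical audit tolerance
needs_review = parity_gap > THRESHOLD or opportunity_gap > THRESHOLD
```

On this toy data, group A's selection rate is 0.75 and group B's is 0.25, and their true positive rates are 1.0 and 0.5, so both gaps are 0.5 and the audit flags the model for review.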

| Ref. | Year | Title | AI Technology | Intervention Type | Mental Health Focus | Quality Score | Category | Subcategory |
|---|---|---|---|---|---|---|---|---|
| [48] | 2024 | AI Asthma Guard: Predictive Wearable Technology for Asthma Management in Vulnerable Populations | Machine Learning | Predictive Monitoring | Comorbid Mental Health | 9 | Personalization Approaches | Secure Technological Solutions |
| [49] | 2025 | Bridging minds and machines: AI’s role in enhancing mental health and productivity amidst Ukraine’s challenges | Machine Learning | Mental Health Enhancement | General Mental Health | 10 | Ethical Considerations | Human Oversight |
| [50] | 2025 | Explainable Predictive Model for Suicidal Ideation During COVID-19: Social Media Discourse Study | Explainable AI | Predictive Analytics | Suicide Prevention | 11 | Risks and Mitigation Strategies | Clinical Efficacy |
| [51] | 2025 | A deep learning-based ensemble for autism spectrum disorder diagnosis using facial images | Deep Learning | Diagnostic Tool | Autism Spectrum Disorder | 10 | Risks and Mitigation Strategies | Clinical Efficacy |
| [52] | 2025 | Health Equity in Mental Health Care: Challenges for Nurses and Related Preparation | Health Informatics | Educational Framework | Mental Health Equity | 8 | Implementation Challenges | Cultural Adaptation |
| [53] | 2025 | A multidisciplinary approach to prevent student anxiety: A Toolkit for educators | Machine Learning | Prevention Toolkit | Student Anxiety | 9 | Personalization Approaches | Patient-Centered Design |
| [54] | 2025 | The role of artificial intelligence in enhancing sports education and public health in higher education | Machine Learning | Educational Platform | Mental Wellness | 8 | Implementation Challenges | Technical Infrastructure |
| [55] | 2025 | Firm Performance on Artificial Intelligence Implementation | Deep Learning | Emotion Recognition | Personalized Therapy | 11 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [56] | 2024 | Synergistic Precision: Integrating Artificial Intelligence and Bioactive Natural Products for Advanced Prediction of Maternal Mental Health During Pregnancy | Machine Learning | Predictive Modeling | Maternal Mental Health | 10 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [57] | 2024 | Facial Emotion Recognition Using CNNs: Implications for Affective Computing and Surveillance | Deep Learning | Emotion Recognition | Affective Computing | 9 | Ethical Considerations | Privacy and Confidentiality |
| [58] | 2023 | Application of data fusion for automated detection of children with developmental and mental disorders: A systematic review of the last decade | Machine Learning | Data Fusion | Pediatric Mental Health | 11 | Risks and Mitigation Strategies | Clinical Efficacy |
| [59] | 2025 | Implementing a collaborative care model for child and adolescent mental health in Qatar: Addressing workforce and access challenges | Health Informatics | Care Model | Child Mental Health | 8 | Implementation Challenges | Resource Allocation |
| [60] | 2023 | Nurse-Task Matching Decision Support System Based on FSPC-HEART Method to Prevent Human Errors for Sustainable Healthcare | Machine Learning | Decision Support | Healthcare Quality | 9 | Risks and Mitigation Strategies | Healthcare Integration |
| [61] | 2022 | Connecting artificial intelligence and primary care challenges: Findings from a multi stakeholder collaborative consultation | Health Informatics | Stakeholder Analysis | Primary Care Integration | 8 | Implementation Challenges | Technical Infrastructure |
| [62] | 2022 | Tough Times, Extraordinary Care: A Critical Assessment of Chatbot-Based Digital Mental Healthcare Solutions for Older Persons to Fight Against Pandemics Like COVID-19 | Natural Language Processing | Chatbot Therapy | Elderly Mental Health | 10 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [63] | 2025 | Top 10 Public Health Challenges for 2024: Charting a New Direction for Global Health Security | Health Policy | Policy Framework | Global Mental Health | 7 | Implementation Challenges | Resource Allocation |
| [64] | 2024 | AI4Work Project: Human-Centric Digital Twin Approaches to Trustworthy AI and Robotics for Improved Working Conditions in Healthcare and Education Sectors | Digital Twin Technology | Workplace Wellness | Occupational Health | 9 | Ethical Considerations | Human Oversight |
| [65] | 2024 | A Systematic Review on the Use of AI-Powered Cloud Computing for Healthcare Resilience | Cloud Computing | Infrastructure Analysis | Healthcare Resilience | 8 | Implementation Challenges | Technical Infrastructure |
| [66] | 2024 | The S20 Brazilian Mental Health Report for building a just world and a sustainable planet: Part II | Health Policy | Policy Framework | Population Mental Health | 9 | Implementation Challenges | Cultural Adaptation |
| [67] | 2023 | Use of mobile technology to identify behavioral mechanisms linked to mental health outcomes in Kenya: Protocol for development and validation of a predictive model | Mobile Technology | Predictive Modeling | Behavioral Health | 10 | Personalization Approaches | Secure Technological Solutions |
| [68] | 2024 | The influence of economic policies on social environments and mental health | Policy Analytics | Economic Analysis | Social Mental Health | 8 | Implementation Challenges | Resource Allocation |
| [69] | 2024 | Development of a Micro Counselling Educational Platform Based on AI and Face Recognition to Prevent Students Anxiety Disorder | Face Recognition | Educational Platform | Student Anxiety | 9 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [70] | 2020 | A New Method for Discovering Daily Depression from Tweets to Monitor Peoples Depression Status | Natural Language Processing | Social Media Monitoring | Depression Detection | 10 | Risks and Mitigation Strategies | Data Security |
| [71] | 2024 | Child and Adolescent Trauma Response Following Climate-Related Events: Leveraging Existing Knowledge With New Technologies | Machine Learning | Trauma Response | Climate-Related Trauma | 9 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [72] | 2025 | Towards a Multi-Objective and Contextual Multi-Criteria Recommender System for Enhancing User Well-Being in Sustainable Smart Homes | Recommender Systems | Wellness Platform | Environmental Wellness | 8 | Personalization Approaches | Patient-Centered Design |
| [73] | 2025 | Smart Wellness: AI-Driven IoT and Wearable Sensors for Enhanced Workplace Well-Being | IoT Analytics | Workplace Monitoring | Occupational Wellness | 9 | Personalization Approaches | Secure Technological Solutions |
| [74] | 2023 | Improving Student Retention in Institutions of Higher Education through Machine Learning: A Sustainable Approach | Machine Learning | Retention Analysis | Student Mental Health | 8 | Implementation Challenges | Evidence and Evaluation |
| [75] | 2024 | AI Application in Achieving Sustainable Development Goal Targeting Good Health and Well-Being: A New Holistic Paradigm | Machine Learning | SDG Framework | Global Health | 7 | Implementation Challenges | Resource Allocation |
| [76] | 2022 | The influence of environmental cognition on green consumption behavior | Computer Vision | Image Therapy | Mood Enhancement | 9 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [77] | 2020 | Comparative Study of Mental Stress Detection through Machine Learning Techniques | Machine Learning | Clinical Application | Mental Disorders | 8 | Ethical Considerations | Transparency and Accountability |
| [3] | 2023 | Does financial education help to improve the return on stock investment? | Qualitative Analysis | Adoption Study | Professional Perspectives | 11 | Ethical Considerations | Human Oversight |
| [78] | 2023 | The potential for AI to the monitoring and support for caregivers: An urgent tech-social challenge | Machine Learning | Caregiver Support | Caregiver Wellness | 9 | Ethical Considerations | Privacy and Confidentiality |
| [79] | 2022 | Engaging Through Awareness: Purpose-Driven Framework Development to Evaluate and Develop Future Business Strategies With Exponential Technologies Toward Healthcare Democratization | Strategic AI | Framework Development | Healthcare Access | 8 | Implementation Challenges | Resource Allocation |
| [80] | 2018 | Reducing incarceration through prioritized interventions | Predictive Analytics | Intervention Prioritization | Criminal Justice Mental Health | 9 | Risks and Mitigation Strategies | Algorithmic Bias |
| [81] | 2021 | The food equity and environmental data sovereignty (feeds) project: Protocol for a quasi-experimental study evaluating a digital platform for climate change preparedness | Data Sovereignty | Digital Platform | Environmental Health | 8 | Ethical Considerations | Data Protection |
| [82] | 2021 | MOSafely: Building an Open-Source HCAI Community to Make the Internet a Safer Place for Youth | Human-Centered AI | Safety Platform | Youth Mental Health | 10 | Ethical Considerations | Human Oversight |
| [83] | 2021 | Intelligent Work: Person Centered Operations, Worker Wellness and the Triple Bottom Line | Intelligent Systems | Workplace Design | Occupational Mental Health | 9 | Personalization Approaches | Patient-Centered Design |
| [84] | 2010 | Innovation networks for improving access and quality across the healthcare ecosystem | Network Analysis | Healthcare Innovation | Healthcare Access | 7 | Implementation Challenges | Technical Infrastructure |
| [85] | 2024 | The Metaverse and Revolutionary Perspectives for the Smart Cities of the Future | Metaverse Technology | Virtual Environment | Digital Wellness | 8 | Personalization Approaches | Secure Technological Solutions |
| [86] | 2020 | An Efficiency Analysis of Artificial Intelligence Medical Equipment for Civil Use | Efficiency Analysis | Medical Equipment | Healthcare Technology | 7 | Implementation Challenges | Technical Infrastructure |
| [87] | 2023 | An omni-channel, outcomes-focused approach to scale digital health interventions in resource-limited populations: A case study | Digital Health | Scaling Framework | Resource-Limited Settings | 9 | Implementation Challenges | Resource Allocation |
| [88] | 2023 | The Construction of Critical Factors for Successfully Introducing Chatbots into Mental Health Services in the Army: Using a Hybrid MCDM Approach | Chatbot Technology | Military Mental Health | Military Personnel | 10 | Personalization Approaches | AI-Enhanced Therapeutic Interventions |
| [89] | 2022 | A systematic mapping study of using digital marketing technologies in health care: The state of the art of digital healthcare marketing | Digital Marketing | Marketing Analysis | Healthcare Communication | 7 | Implementation Challenges | Evidence and Evaluation |
| [90] | 2020 | Sustainable level of human performance with regard to actual availability in different professions | Performance Analytics | Human Performance | Occupational Health | 8 | Ethical Considerations | Human Oversight |
| [91] | 2025 | Reimagining the future of education: Inclusive pedagogies, critical intergenerational justice, and technological disruption | Educational Technology | Pedagogical Framework | Educational Mental Health | 8 | Implementation Challenges | Cultural Adaptation |
| [92] | 2024 | Artificial Intelligence and Labour Markets: Analyzing Job Displacement and Creation | Labor Analytics | Economic Impact | Work-Related Stress | 7 | Implementation Challenges | Resource Allocation |
| [93] | 2025 | Shaping the gig economy: Insights into management, technology, and workforce dynamics | Workforce Analytics | Economic Analysis | Gig Worker Mental Health | 8 | Implementation Challenges | Resource Allocation |
| [94] | 2022 | Software Measurement by Using Artificial Intelligence | Software Metrics | Quality Assessment | Technology Stress | 7 | Risks and Mitigation Strategies | Clinical Efficacy |
| [95] | 2025 | Research on evaluation indicator system and intelligent monitoring framework for cultural services in community parks: A case study of Guanggang Park in Guangzhou, China | Monitoring Systems | Community Services | Community Mental Health | 8 | Personalization Approaches | Patient-Centered Design |
| [96] | 2020 | A Study on Public Acceptability of Traditional Chinese Medicine Meridian Instruments | Acceptability Study | Traditional Medicine | Cultural Health Practices | 7 | Implementation Challenges | Cultural Adaptation |
| [97] | 2024 | Visualization and analysis of the integration mechanism of artificial intelligence-enabled sports development and ecological environment protection | Data Visualization | Sports Technology | Physical Mental Health | 8 | Personalization Approaches | Secure Technological Solutions |
| [98] | 2022 | The role of psycholinguistics for language learning in teaching based on formulaic sequence use and oral fluency | Psycholinguistics | Language Learning | Educational Psychology | 7 | Implementation Challenges | Evidence and Evaluation |
| [99] | 2025 | Surveilled Selves and Silenced Voices: A Linguistic and Gendered Critique of Privacy Invasion in Marie Lu’s Warcross | Privacy Analysis | Literary Critique | Digital Privacy | 9 | Ethical Considerations | Privacy and Confidentiality |
| [100] | 2016 | Use of artificial intelligence in medical sciences | Medical AI | Medical Applications | Healthcare Technology | 7 | Implementation Challenges | Technical Infrastructure |
| [101] | 2022 | Management and information disclosure of electric power environmental and social governance issues in the age of artificial intelligence | Environmental AI | ESG Management | Environmental Psychology | 8 | Ethical Considerations | Transparency and Accountability |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite This Article

MDPI and ACS Style: Espino Carrasco, D.K.; Palomino Alcántara, M.d.R.; Arbulú Pérez Vargas, C.G.; Santa Cruz Espino, B.M.; Dávila Valdera, L.J.; Vargas Cabrera, C.; Espino Carrasco, M.; Dávila Valdera, A.; Agurto Córdova, L.M. Sustainability of AI-Assisted Mental Health Intervention: A Review of the Literature from 2020–2025. Int. J. Environ. Res. Public Health 2025, 22, 1382. https://doi.org/10.3390/ijerph22091382

AMA Style: Espino Carrasco DK, Palomino Alcántara MdR, Arbulú Pérez Vargas CG, Santa Cruz Espino BM, Dávila Valdera LJ, Vargas Cabrera C, Espino Carrasco M, Dávila Valdera A, Agurto Córdova LM. Sustainability of AI-Assisted Mental Health Intervention: A Review of the Literature from 2020–2025. International Journal of Environmental Research and Public Health. 2025; 22(9):1382. https://doi.org/10.3390/ijerph22091382

Chicago/Turabian Style: Espino Carrasco, Danicsa Karina, María del Rosario Palomino Alcántara, Carmen Graciela Arbulú Pérez Vargas, Briseidy Massiel Santa Cruz Espino, Luis Jhonny Dávila Valdera, Cindy Vargas Cabrera, Madeleine Espino Carrasco, Anny Dávila Valdera, and Luz Mirella Agurto Córdova. 2025. "Sustainability of AI-Assisted Mental Health Intervention: A Review of the Literature from 2020–2025" International Journal of Environmental Research and Public Health 22, no. 9: 1382. https://doi.org/10.3390/ijerph22091382

APA Style: Espino Carrasco, D. K., Palomino Alcántara, M. d. R., Arbulú Pérez Vargas, C. G., Santa Cruz Espino, B. M., Dávila Valdera, L. J., Vargas Cabrera, C., Espino Carrasco, M., Dávila Valdera, A., & Agurto Córdova, L. M. (2025). Sustainability of AI-Assisted Mental Health Intervention: A Review of the Literature from 2020–2025. International Journal of Environmental Research and Public Health, 22(9), 1382. https://doi.org/10.3390/ijerph22091382