Scientific Evidence in Public Health Decision-Making: A Systematic Literature Review of the Past 50 Years

Abstract

1. Introduction

- (i) To synthesise knowledge on the use of scientific evidence in public health decisions;

- (ii) To identify the determinants, barriers, and facilitators;

- (iii) To contribute to the improvement of evidence-based public health decisions;

- (iv) To evaluate the implementation of evidence;

- (v) To define perspectives for future research on this topic.

- What is the current state of knowledge on scientific production concerning the use of evidence in public health decision making? Specifically, what are the main characteristics of this output in terms of volume, research themes, main geographic centres, leading contributors, publication channels, types of study design, etc., over the period under review?

- What are the obstacles, barriers, and/or facilitators to such use, or, more precisely, what are the organisational, structural, or contextual factors that influence, in one way or another, the use of evidence?

- Finally, what actions can be undertaken by actors and institutions to enhance the use of evidence in the formulation, implementation, and evaluation of public health policies, i.e., in public health practice?

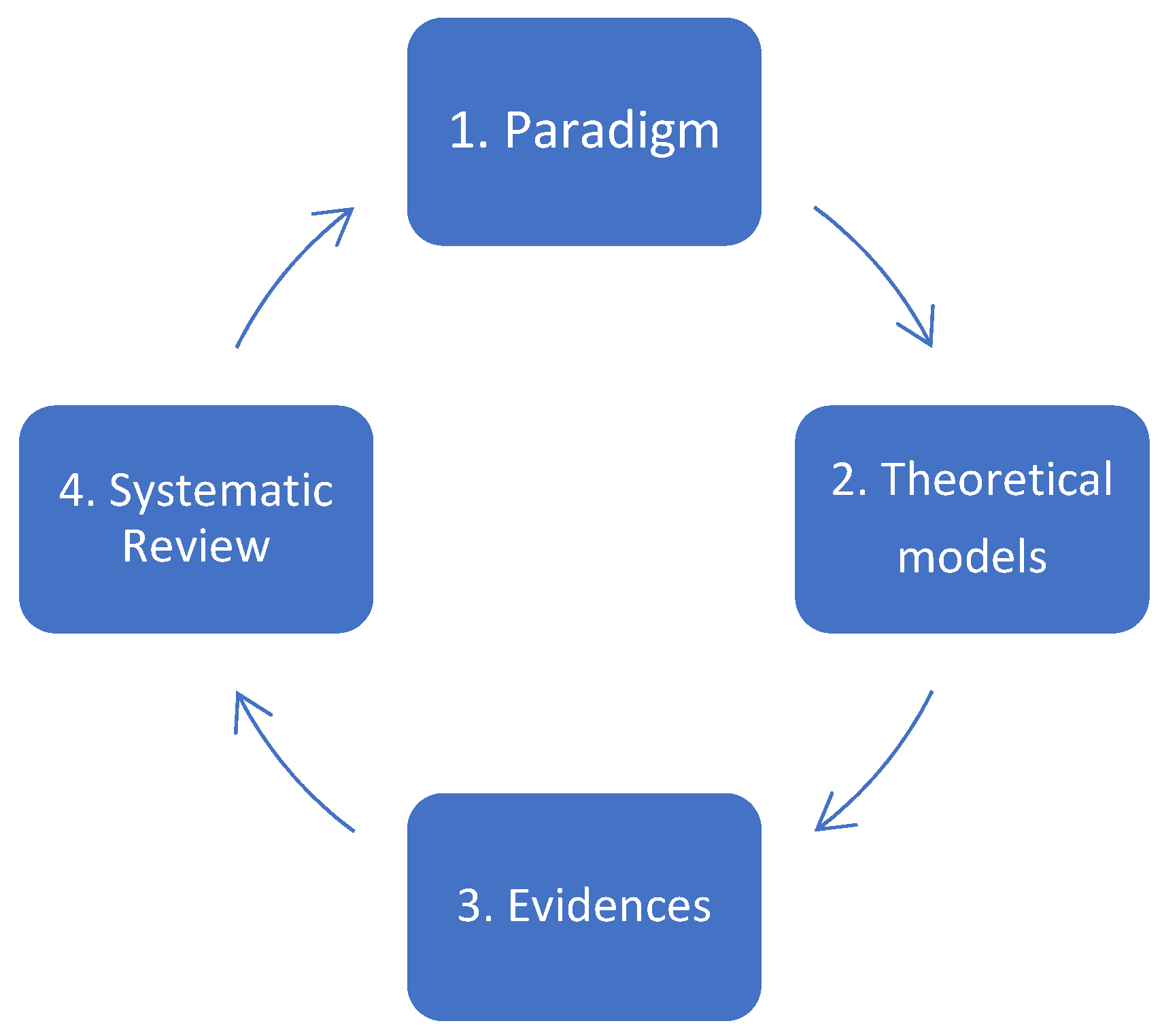

2. Theoretical Framework and Study Structure

- (i)

- (ii) Methodological challenges, linked to the complexity and heterogeneity of data, difficulties in contextualising or generalising results, and the misalignment between the production of evidence and political agendas [35].

- (iii) Finally, the use of scientific evidence can face practical and ethical challenges. Practical barriers include financial constraints, missing or poor-quality data, fragmented data sources, and weak transmission mechanisms between evidence producers and decision-makers. Additionally, certain evidence-based public health decisions may raise risks of human rights violations if not handled ethically [36,37,38].

3. Materials and Methods

3.1. Protocol

3.2. Search Strategy

3.2.1. Data Extraction

3.2.2. Exclusion Criteria

3.2.3. Data Analysis

- “Evidence” does not reside only in the world where science is produced; it emerges in the political world of policy making, where it is interpreted, made sense of, and used, perhaps persuasively, in policy arguments [40].

- “Evidence production” refers to the production of scientific evidence.

- “Use” refers to the utilisation of scientific evidence.

- “Evaluation” is the systematic process to determine merit, worth, value, or significance [43].

- “Translation” is associated with knowledge utilisation and refers to the use of knowledge in practice and decision making by the public, patients, health care professionals, managers, and policy-makers [44].

- “Collaboration” is the sharing of resources and capabilities so that participants work closely together to create mutually beneficial outcomes [45].

- “Resource allocation” is an aggregation of the functions required to track and manage all resources related to production. These resources include labour, machines, tools, fixtures, materials, and other entities, such as documents that must be available in order for work to start at the operation [46].

- “Capacity building” is the development of knowledge, skills, commitment, structures, systems, and leadership to enable effective health policies to build capacity for public health [47].

4. Results

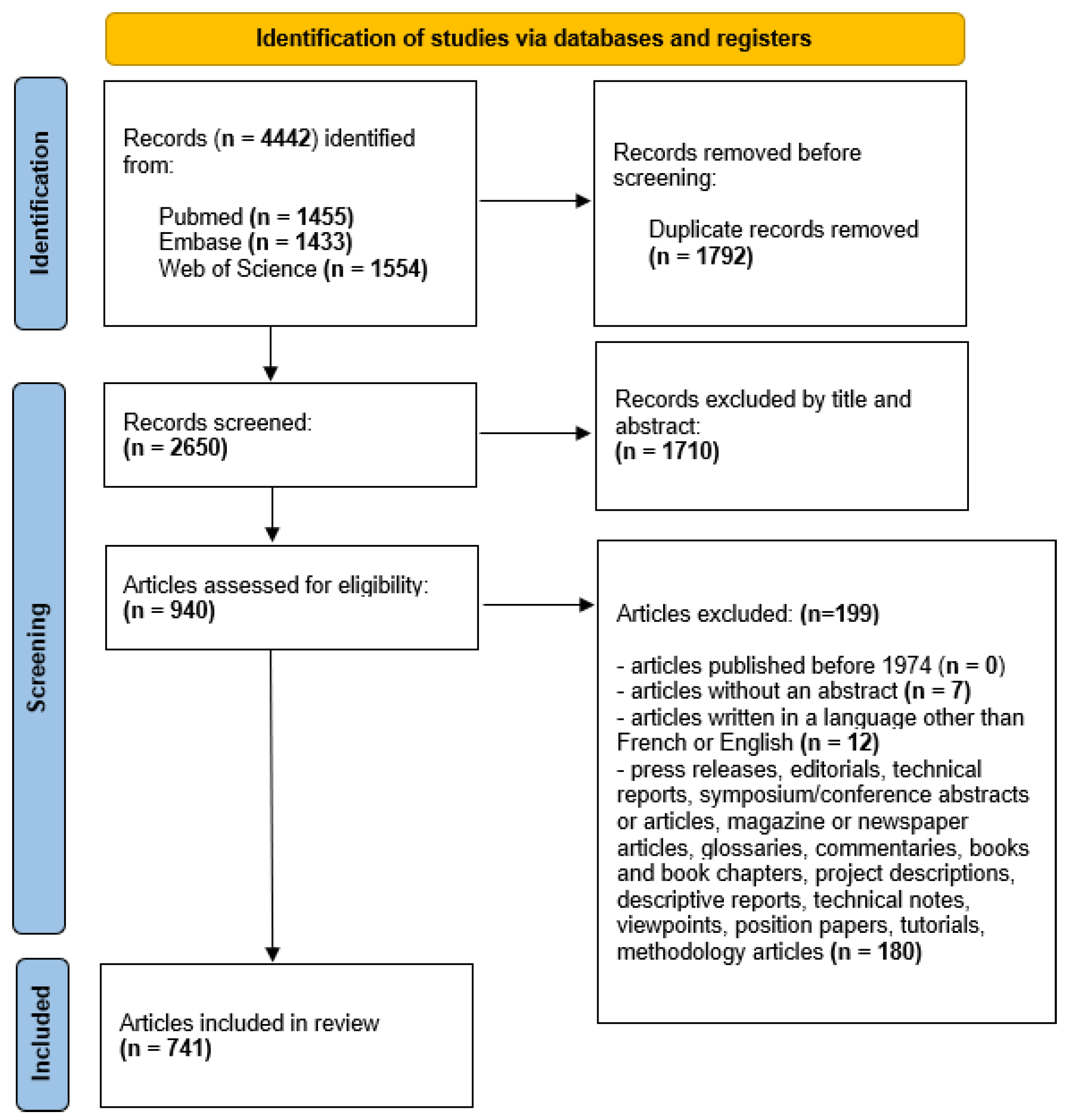

4.1. Application of PRISMA Model (PRISMA 2020)

4.2. Characteristics of Scientific Production

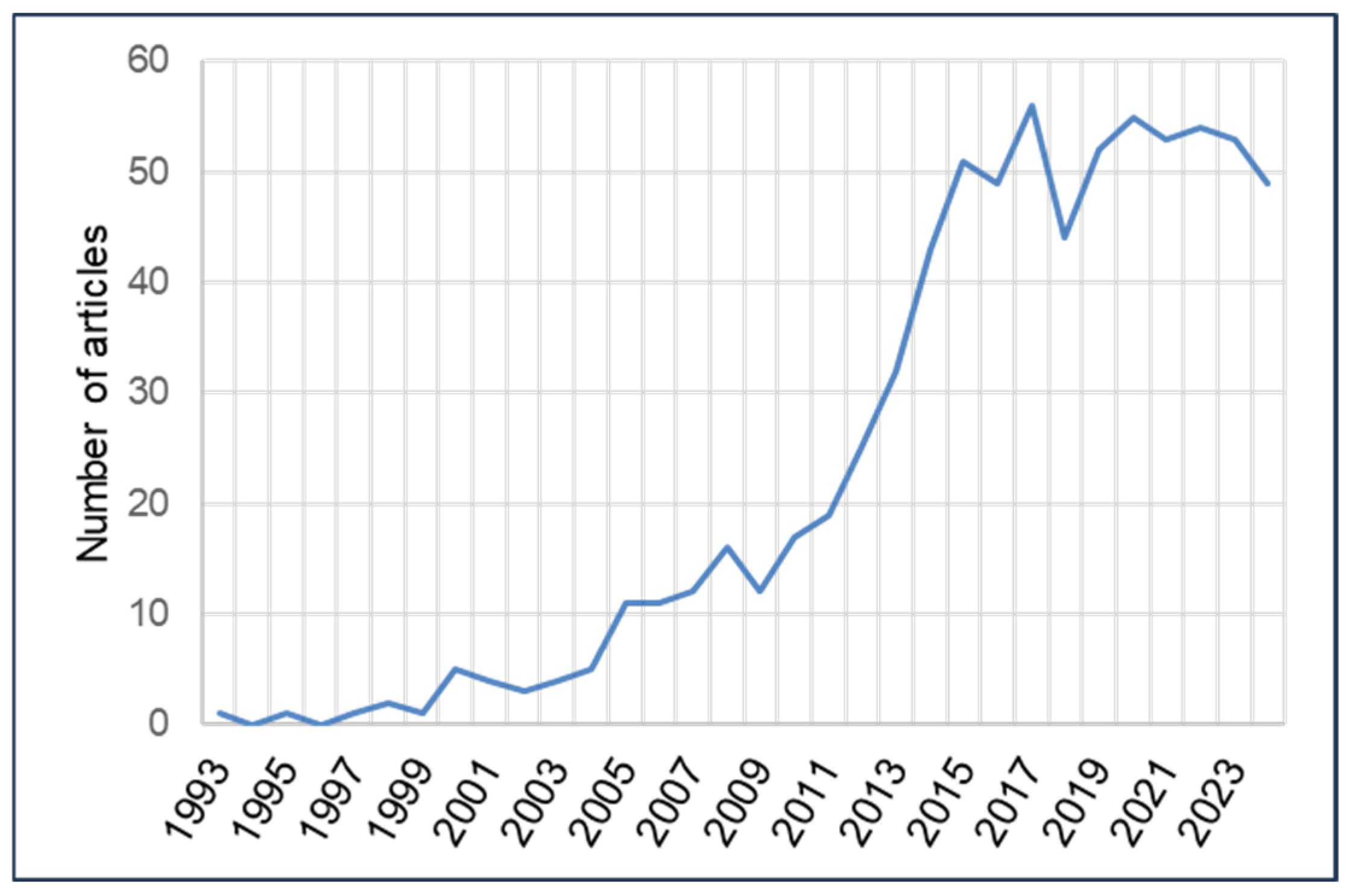

4.2.1. Annual Volume of Scientific Production

4.2.2. Types of Journals and Study Designs, Study Settings and Scopes, and Author Affiliations

| Variable | Frequency | Percentage |
|---|---|---|
| **Type of publication journal** | | |
| Public health | 473 | 63.8% |
| Biomedical | 132 | 17.8% |
| Humanities and social sciences | 78 | 10.5% |
| Mixed | 54 | 7.3% |
| Natural sciences | 3 | 0.4% |
| Care-focused journals | 1 | 0.1% |
| **Study design** | | |
| Qualitative studies | 240 | 32.4% |
| Case studies | 140 | 18.9% |
| Focus groups | 11 | 1.5% |
| Delphi studies | 4 | 0.5% |
| Descriptive quantitative studies | 83 | 11.2% |
| Cohort studies | 1 | 0.1% |
| Case–control studies | 0 | 0.0% |
| Randomised controlled trials | 7 | 0.9% |
| Socio-political analyses | 62 | 8.4% |
| Ethical/moral analyses | 1 | 0.1% |
| Legal, medico-legal, and juridical analyses | 3 | 0.4% |
| Historical analyses | 4 | 0.5% |
| Philosophical analyses | 2 | 0.3% |
| Economic analyses | 1 | 0.1% |
| Psychological analyses | 1 | 0.1% |
| Narrative reviews | 61 | 8.2% |
| Rapid reviews | 5 | 0.7% |
| Scoping reviews | 19 | 2.6% |
| Realist reviews | 3 | 0.4% |
| Systematic reviews | 38 | 5.1% |
| Meta-analyses | 1 | 0.1% |
| Mixed-methods studies | 54 | 7.3% |

| Variable | Frequency | Percentage |
|---|---|---|
| **Continent of the study** | | |
| South America | 18 | 2.4% |
| Asia | 68 | 9.2% |
| Oceania | 79 | 10.7% |
| Africa | 106 | 14.3% |
| North America | 128 | 17.3% |
| Europe | 135 | 18.2% |
| International | 207 | 27.9% |
| **Study scope** | | |
| Local/community | 11 | 1.5% |
| Regional (EU, AU, WHO, Africa, etc.) | 49 | 6.6% |
| Provincial | 62 | 8.4% |
| International | 218 | 29.4% |
| National | 401 | 54.1% |

| Variable | Frequency | Percentage |
|---|---|---|
| **Institutional affiliation of the first author** | | |
| University | 579 | 78.1% |
| Government | 75 | 10.1% |
| Foundation, think tank, or private organisations | 45 | 6.1% |
| International organisation | 26 | 3.5% |
| Other | 16 | 2.2% |
| **Country of the first author’s institutional affiliation (top ten)** | | |
| United Kingdom | 142 | 19.2% |
| USA | 141 | 19.0% |
| Australia | 106 | 14.3% |
| Canada | 88 | 11.9% |
| Nigeria | 28 | 3.8% |
| Switzerland | 24 | 3.2% |
| Iran | 21 | 2.8% |
| South Africa | 12 | 1.6% |
| Lebanon | 11 | 1.5% |
| The Netherlands | 10 | 1.3% |
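The percentages in these tables can be reproduced from the frequencies alone. The short Python sketch below does so for the institutional affiliation rows. It assumes that the denominator is the sum of the category counts (741 for this block), which is an inference from the table rather than a figure stated in the text; the function name `percentages` is illustrative only.

```python
# Counts copied from the "Institutional affiliation of the first author" rows.
affiliation_counts = {
    "University": 579,
    "Government": 75,
    "Foundation, think tank, or private organisations": 45,
    "International organisation": 26,
    "Other": 16,
}


def percentages(counts):
    """Return each category's share of the total, rounded to one decimal.

    Assumption: categories are mutually exclusive, so the total number of
    included articles is the sum of the category counts.
    """
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


total_articles = sum(affiliation_counts.values())
affiliation_shares = percentages(affiliation_counts)

for label, share in affiliation_shares.items():
    print(f"{label}: {share}%")
```

Running the same check on the journal-type, study-design, continent, and study-scope blocks gives the same total of 741, which suggests that each of those variables was coded once per article.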

4.2.3. Main Contributors

| Main Authors | Frequency |
|---|---|
| Uneke, C. J. et al. | 14 |
| El-Jardali, F. et al. | 8 |
| Nabyonga Orem, J. et al. | 6 |
| Lavis, J. N. et al. | 5 |
| Smith, K. E. et al. | 5 |
| Zardo, P. et al. | 5 |
| Armstrong, R. et al. | 4 |
| Khalid, A. F. et al. | 4 |
| Oliver, K. et al. | 4 |
| Onwujekwe, O. et al. | 4 |
| Waqa, G. et al. | 4 |

4.3. Content

4.3.1. Domains and Themes of Study

| Variable | Frequency | Percentage |
|---|---|---|
| **Theme** | | |
| Production | 192 | 25.9% |
| Use | 315 | 42.5% |
| Implementation | 251 | 33.9% |
| Evaluation | 65 | 8.8% |
| Translation | 115 | 15.5% |
| Collaboration | 13 | 1.8% |
| Resource allocation | 37 | 5.0% |
| Capacity building | 32 | 4.3% |
| **Domain** | | |
| Medicine | 35 | 4.7% |
| Public health | 706 | 95.3% |
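Unlike the domain rows, the theme percentages add up to more than 100%. A plausible reading, offered here as an assumption rather than something the table states, is that an article could be coded under more than one theme while every percentage uses the same denominator of 741 articles (35 + 706 from the domain rows). The Python sketch below checks that reading against the reported figures.

```python
# Counts copied from the "Theme" rows of the table above.
theme_counts = {
    "Production": 192,
    "Use": 315,
    "Implementation": 251,
    "Evaluation": 65,
    "Translation": 115,
    "Collaboration": 13,
    "Resource allocation": 37,
    "Capacity building": 32,
}

# Assumption: the denominator is the number of articles, taken from the
# domain rows (Medicine + Public health).
N_ARTICLES = 35 + 706

# Share of articles touching each theme, rounded as in the table.
theme_shares = {t: round(100 * n / N_ARTICLES, 1) for t, n in theme_counts.items()}

# Total theme assignments and the implied average number of themes per article.
total_assignments = sum(theme_counts.values())
mean_themes_per_article = total_assignments / N_ARTICLES

print(theme_shares)
print(f"{total_assignments} assignments over {N_ARTICLES} articles "
      f"(about {mean_themes_per_article:.2f} themes per article)")
```

Under this assumption every rounded share matches the table, and the surplus of assignments over articles corresponds to articles that address several themes at once.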

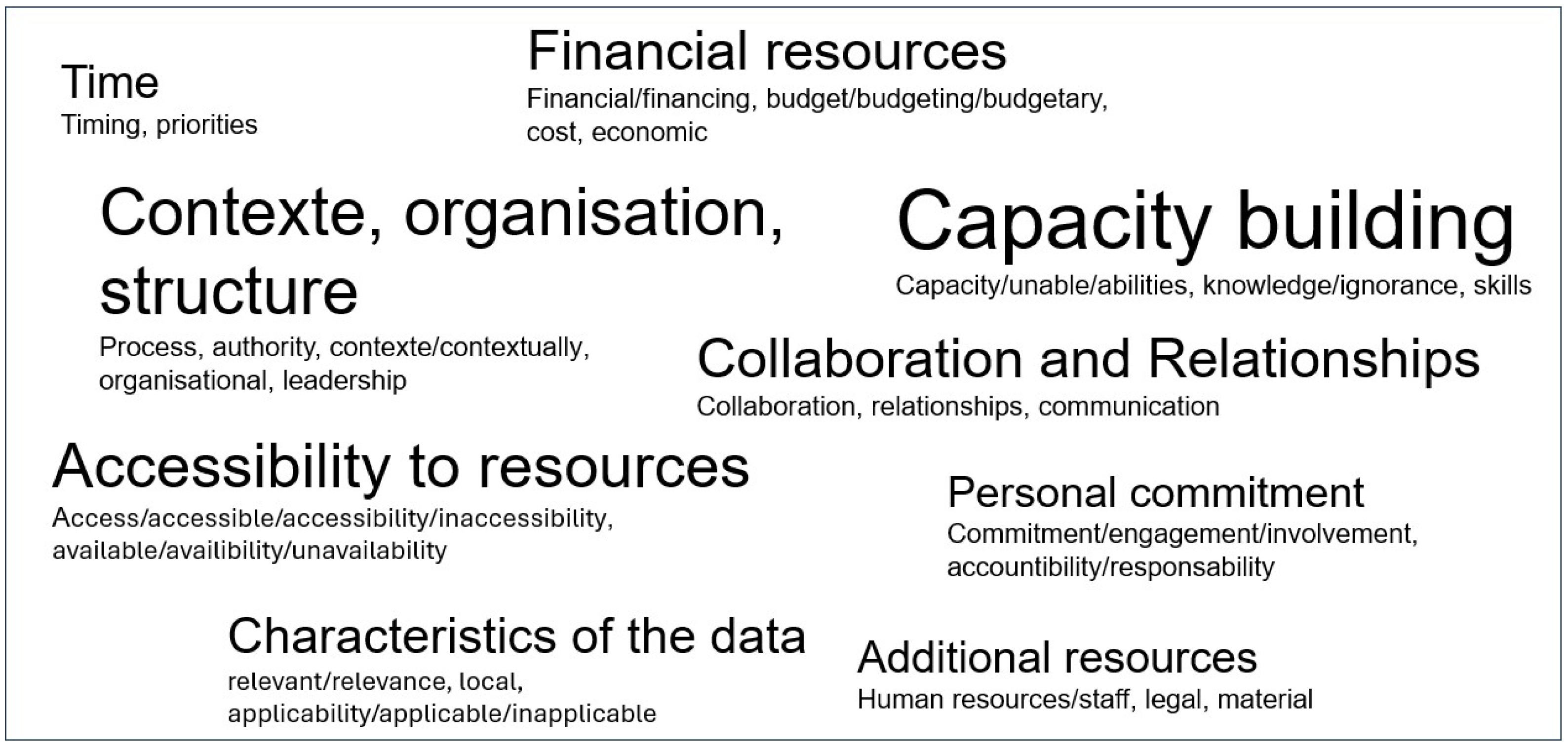

4.3.2. Determinants (Barriers and/or Facilitators)

4.4. Analysis of Trends in Scientific Production over Time

| Variables | 1993–2009 | 2010–2024 |
|---|---|---|
| **Author affiliation** | | |
| Universities | 72 | 507 |
| Government institutions | 7 | 68 |
| Foundations, think tanks, and private companies | 6 | 39 |
| **Study scope** | | |
| Provincial | 13 | 49 |
| Regional | 1 | 48 |
| **Study setting** | | |
| Single site | 54 | 397 |
| Multi-site | 16 | 151 |
| Global | 19 | 104 |
| **Type of journal** | | |
| Biomedical | 29 | 48 |
| Public health | 102 | 425 |
| **Study domain** | | |
| Medicine | 11 | 24 |
| Public health | 78 | 628 |
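Because the two periods differ in length, raw counts overstate or understate the change between them. One way to compare them is to convert each count to articles per year. The Python sketch below illustrates this for a few rows; it assumes both period endpoints are inclusive (17 and 15 years respectively), and the helper names are hypothetical rather than part of the study's method.

```python
def years_inclusive(start, end):
    """Number of calendar years in a period, counting both endpoints."""
    return end - start + 1


EARLY = (1993, 2009)  # 17 years, assuming inclusive endpoints
LATE = (2010, 2024)   # 15 years, assuming inclusive endpoints

# (count in 1993-2009, count in 2010-2024), copied from the table above.
rows = {
    "Universities": (72, 507),
    "Government institutions": (7, 68),
    "Provincial scope": (13, 49),
    "Public health journals": (102, 425),
}


def annual_rates(early_count, late_count):
    """Return articles per year in each period and the late/early ratio."""
    early_rate = early_count / years_inclusive(*EARLY)
    late_rate = late_count / years_inclusive(*LATE)
    return early_rate, late_rate, late_rate / early_rate


for label, (early_count, late_count) in rows.items():
    early_rate, late_rate, ratio = annual_rates(early_count, late_count)
    print(f"{label}: {early_rate:.2f}/yr -> {late_rate:.2f}/yr (x{ratio:.1f})")
```

Ratios computed this way are descriptive only; for rows with very small early counts, such as regional studies, they are unstable and are better reported as raw counts.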

5. Discussion

- (i) Evidence is often instrumentalised or disregarded depending on the political or institutional context. Centralised political systems, for example, are less conducive to research uptake, as power concentration limits pluralistic debate and reduces demand for evidence. In contrast, decentralised or federal systems foster greater use of research to legitimise and defend policy decisions [37,80].

- (ii) Evidence may be used in ways that are more strategic than scientific, with policy-makers tending to rely more heavily on technical reports from international agencies than on scientific data generated at the community or local level [80]. Indeed, the most frequently cited obstacles are limited access to research, a lack of relevant studies, timing constraints or a lack of opportunities to apply results, and insufficient research literacy among policy-makers and other users.

- (iii) Certain forms of scientific evidence are either adopted or dismissed depending on the influence of lobbyists within national decision-making bodies, particularly in policy areas such as drug regulation, tobacco control, and the food and pharmaceutical industries. International organisations can exert direct power through conditionality attached to aid or loans, or indirect power by setting norms and standards that national governments adopt [81,82].

- (iv) The ability, or inability, to adapt and interpret knowledge in relation to local contexts can either facilitate or hinder the use of evidence from a technical standpoint [83].

- (v) Finally, the prevailing culture of evidence hierarchies, particularly the privileging of quantitative over qualitative data, can lead to the marginalisation of qualitative evidence in certain public health decisions. According to some authors, specific collaborative environments between researchers and policy-makers can help facilitate the use of evidence in decision-making processes [5].

Strengths and Limitations

- (i) Conceptual clarification and contextual adaptation of nosologies, that is, the definitions and classifications of diseases that are culturally and epidemiologically relevant to local health realities.

- (ii) Methodologically, while randomised controlled trials (RCTs) are often considered the gold standard, incorporating experiential knowledge, including community narratives, traditional knowledge systems, and local health practices, may usefully complement formal scientific evidence.

- (iii) In contexts where health information systems are weak or non-operational, efforts should be made to leverage routine data generated by community-based structures, and not to rely solely on academic or institutional data sources.

- (iv) In cases where conflicts arise between the research agendas of donors, national or local governments, and community needs, the establishment of mediation and negotiation structures could help broker consensus and lead to politically and socially acceptable decisions, even when conventional scientific evidence is absent or limited.

6. Future Research

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Weir, E.; d’Entremont, N.; Stalker, S.; Kurji, K.; Robinson, V. Applying the balanced scorecard to local public health performance measurement: Deliberations and decisions. BMC Public Health 2009, 9, 127.

- Degeling, C.; Carter, S.M.; Rychetnik, L. Which public and why deliberate?—A scoping review of public deliberation in public health and health policy research. Soc. Sci. Med. 2015, 131, 114–121.

- La Brooy, C.; Kelaher, M. The research–policy–deliberation nexus: A case study approach. Health Res. Policy Syst. 2017, 15, 75.

- Magnus, P.D. Science, values, and the priority of evidence. Logos Epistem. 2018, 9, 413–431.

- Pappaioanou, M.; Malison, M.; Wilkins, K.; Otto, B.; Goodman, R.A.; Churchill, R.E.; White, M.; Thacker, S.B. Strengthening capacity in developing countries for evidence-based public health: The data for decision-making project. Soc. Sci. Med. 2003, 57, 1925–1937.

- Mooney, H. Ignoring evidence has led to ineffective policies to prevent drug misuse, shows research. BMJ Br. Med. J. 2012, 344, e148.

- Dockery, D.W. Health effects of particulate air pollution. Ann. Epidemiol. 2009, 19, 257–263.

- Malta, M.; Vettore, M.V.; da Silva, C.M.F.P.; Silva, A.B.; Strathdee, S.A. Political neglect of COVID-19 and the public health consequences in Brazil: The high costs of science denial. EClinicalMedicine 2021, 35, 100878.

- Idrovo, A.J.; Manrique-Hernández, E.F.; Fernández Niño, J.A. Report from Bolsonaro’s Brazil: The consequences of ignoring science. Int. J. Health Serv. 2021, 51, 31–36.

- Jenicek, M. Epidemiology, evidenced-based medicine, and evidence-based public health. J. Epidemiol. 1997, 7, 187–197.

- U.S. Department of Health, Education, and Welfare. Smoking and Health. Report of the Advisory Committee to the Surgeon General of the Public Health Service; PHS Publication No. 1103; U.S. Department of Health, Education, and Welfare, Public Health Service, Center for Disease Control: Washington, DC, USA, 1964.

- Marmot, M.G.; Smith, G.D.; Stansfeld, S.; Patel, C.; North, F.; Head, J.; White, I.; Brunner, E.; Feeney, A. Health inequalities among British civil servants: The Whitehall II study. Lancet 1991, 337, 1387–1393.

- Cooper, A.; Lewis, R.; Gal, M.; Joseph-Williams, N.; Greenwell, J.; Watkins, A.; Strong, A.; Williams, D.; Doe, E.; Law, R.-J.; et al. Informing evidence-based policy during the COVID-19 pandemic and recovery period: Learning from a national evidence centre. Glob. Health Res. Policy 2024, 9, 18.

- Kneale, D.; Rojas-García, A.; Raine, R.; Thomas, J. The use of evidence in English local public health decision-making: A systematic scoping review. Implement. Sci. 2017, 12, 53.

- Goyet, S.; Touch, S.; Ir, P.; SamAn, S.; Fassier, T.; Frutos, R.; Tarantola, A.; Barennes, H. Gaps between research and public health priorities in low income countries: Evidence from a systematic literature review focused on Cambodia. Implement. Sci. 2015, 10, 32.

- Campbell, D.M.; Moore, G. Increasing the use of research in population health policies and programs: A rapid review. Public Health Res. Pract. 2018, 28, e2831816.

- Innvaer, S.; Vist, G.; Trommald, M.; Oxman, A. Health policy-makers’ perceptions of their use of evidence: A systematic review. J. Health Serv. Res. Policy 2002, 7, 239–244.

- Orton, L.; Lloyd-Williams, F.; Taylor-Robinson, D.; O’Flaherty, M.; Capewell, S. The use of research evidence in public health decision making processes: Systematic review. PLoS ONE 2011, 6, e21704.

- Oliver, K.; Innvar, S.; Lorenc, T.; Woodman, J.; Thomas, J. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv. Res. 2014, 14, 2.

- Masood, S.; Kothari, A.; Regan, S. The use of research in public health policy: A systematic review. Evid. Policy 2020, 16, 7–43.

- Zardo, P.; Collie, A. Predicting research use in a public health policy environment: Results of a logistic regression analysis. Implement. Sci. 2014, 9, 142.

- Milat, A.J.; Li, B. Narrative review of frameworks for translating research evidence into policy and practice. Public Health Res. Pract. 2017, 27, e2711704.

- Brownson, R.C.; Fielding, J.E.; Maylahn, C.M. Evidence-based public health: A fundamental concept for public health practice. Annu. Rev. Public Health 2009, 30, 175–201.

- Hansen, H.F. Organisation of evidence-based knowledge production: Evidence hierarchies and evidence typologies. Scand. J. Public Health 2014, 42 (Suppl. S13), 11–17.

- Kemm, J. The limitations of ‘evidence-based’ public health. J. Eval. Clin. Pract. 2006, 12, 319–324.

- Attena, F. Complexity and indeterminism of evidence-based public health: An analytical framework. Med. Health Care Philos. 2014, 17, 459–465.

- Colombo, S.; Checchi, F. Decision-making in humanitarian crises: Politics, and not only evidence, is the problem. Epidemiol. Prev. 2018, 42, 214–225.

- Jansen, M.W.; Van Oers, H.A.; Kok, G.; De Vries, N.K. Public health: Disconnections between policy, practice and research. Health Res. Policy Syst. 2010, 8, 37.

- Weiss, C.H. The many meanings of research utilization. In Social Science and Social Policy; Routledge: Milton Park, UK, 2021; pp. 31–40.

- Weiss, C.H.; Bucuvalas, M.J. Social Science Research and Decision-Making; Columbia University Press: New York, NY, USA, 1980.

- Pawson, R.; Tilley, N. Realistic Evaluation; Sage Publications: Thousand Oaks, CA, USA, 1997.

- Lavis, J.N.; Lomas, J.; Hamid, M.; Sewankambo, N.K. Assessing country-level efforts to link research to action. Bull. World Health Organ. 2006, 84, 620–628.

- Astbury, B. Some reflections on Pawson’s science of evaluation: A realist manifesto. Evaluation 2013, 19, 383–401.

- Petticrew, M. Public health evaluation: Epistemological challenges to evidence production and use. Evid. Policy 2013, 9, 87–95.

- Anderson, L.M.; Brownson, R.C.; Fullilove, M.T.; Teutsch, S.M.; Novick, L.F.; Fielding, J.; Land, G.H. Evidence-based public health policy and practice: Promises and limits. Am. J. Prev. Med. 2005, 28, 226–230.

- Dobbins, M.; Cockerill, R.; Barnsley, J. Factors affecting the utilization of systematic reviews: A study of public health decision makers. Int. J. Technol. Assess. Health Care 2001, 17, 203–214.

- Dobrow, M.J.; Goel, V.; Lemieux-Charles, L.; Black, N.A. The impact of context on evidence utilization: A framework for expert groups developing health policy recommendations. Soc. Sci. Med. 2006, 63, 1811–1824.

- Opsahl, A.; Nelson, T.; Madeira, J.; Wonder, A.H. Evidence-based, ethical decision-making: Using simulation to teach the application of evidence and ethics in practice. Worldviews Evid. Based Nurs. 2020, 17, 412–417.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Straf, M.L.; Schwandt, T.A.; Prewitt, K. (Eds.) Using Science as Evidence in Public Policy; National Academies Press: Washington, DC, USA, 2012.

- CES Guide to Implementation, What is Implementation Science? Available online: https://implementation.effectiveservices.org/ (accessed on 30 July 2025).

- Bauer, M.S.; Kirchner, J. Implementation science: What is it and why should I care? Psychiatry Res. 2020, 283, 112376.

- Wanzer, D.L. What is evaluation? Perspectives of how evaluation differs (or not) from research. Am. J. Eval. 2021, 42, 28–46.

- Straus, S.E.; Tetroe, J.M.; Graham, I.D. Knowledge translation is the use of knowledge in health care decision making. J. Clin. Epidemiol. 2011, 64, 6–10.

- Lin, C.; Tsai, H.L.; Wu, J.C. Collaboration strategy decision-making using the Miles and Snow typology. J. Bus. Res. 2014, 67, 1979–1990.

- Benatar, S.R.; Ashcroft, R. International perspectives on resource allocation. Health Syst. Policy Financ. Organ. 2010, 78, 180.

- WHO. Health Promotion: Glossary of Terms; World Health Organization: Geneva, Switzerland, 2006.

- Yao, Q.; Chen, K.; Yao, L.; Lyu, P.H.; Yang, T.A.; Luo, F.; Chen, S.-Q.; He, L.-Y.; Liu, Z.-Y. Scientometric trends and knowledge maps of global health systems research. Health Res. Policy Syst. 2014, 12, 26.

- Wang, M.; Liu, P.; Zhang, R.; Li, Z.; Li, X. A Scientometric Analysis of Global Health Research. Int. J. Environ. Res. Public Health 2020, 17, 2963.

- Abimbola, S. The uses of knowledge in global health. BMJ Glob. Health 2021, 6, e005802.

- Bottemanne, H. Conspiracy theories and COVID-19: How do conspiracy beliefs arise? L’encephale 2022, 48, 571–582.

- Carrion-Alvarez, D.; Tijerina-Salina, P.X. Fake news in COVID-19: A perspective. Health Promot. Perspect. 2020, 10, 290.

- Schonhaut, L.; Costa-Roldan, I.; Oppenheimer, I.; Pizarro, V.; Han, D.; Díaz, F. Scientific publication speed and retractions of COVID-19 pandemic original articles. Rev. Panam. Salud Publica 2022, 46, e25.

- Bong, C.L.; Brasher, C.; Chikumba, E.; McDougall, R.; Mellin-Olsen, J.; Enright, A. The COVID-19 Pandemic: Effects on Low- and Middle-Income Countries. Anesth. Analg. 2020, 131, 86–92.

- Neresini, F.; Giardullo, P.; Di Buccio, E.; Morsello, B.; Cammozzo, A.; Sciandra, A.; Boscolo, M. When scientific experts come to be media stars: An evolutionary model tested by analysing coronavirus media coverage across Italian newspapers. PLoS ONE 2023, 18, e0284841.

- Phillips, M.; Reed, J.B.; Zwicky, D.; Van Epps, A.S.; Buhler, A.G.; Rowley, E.M.; Zhang, Q.; Cox, J.M.; Zakharov, W. Systematic Reviews in the Engineering Literature: A Scoping Review. IEEE Access 2024, 12, 62648–62663.

- Monteiro, A.; Cepêda, C. Accounting Information Systems: Scientific Production and Trends in Research. Systems 2021, 9, 67.

- Homolak, J. Opportunities and risks of ChatGPT in medicine, science, and academic publishing: A modern Promethean dilemma. Croat. Med. J. 2023, 64, 1.

- Natow, R.S. Policy actors’ perceptions of qualitative research in policymaking: The case of higher education rulemaking in the United States. Evid. Policy 2022, 18, 109–126.

- Rabab’h, B.S.; Omar, K.M.; Alzyoud, A.A.Y. Literature review of the Impact of the Use of Quantitative techniques in administrative Decision Making: Study (Public and private sector institutions). Int. J. Sci. Res. Publ. (IJSRP) 2019, 9, 515–521.

- Dieckmann, N.F.; Slovic, P.; Peters, E.M. The use of narrative evidence and explicit likelihood by decisionmakers varying in numeracy. Risk Anal. An. Int. J. 2009, 29, 1473–1488.

- Van der Voort, H.G.; Klievink, A.J.; Arnaboldi, M.; Meijer, A.J. Rationality and politics of algorithms. Will the promise of big data survive the dynamics of public decision making? Gov. Inf. Q. 2019, 36, 27–38.

- Moynihan, D.P.; Lavertu, S. Does involvement in performance management routines encourage performance information use? Evaluating GPRA and PART. Public Adm. Rev. 2012, 72, 592–602.

- Egan, M.; Petticrew, M.; Ogilvie, D. Informing healthy transport policies: Systematic reviews and research synthesis. Transp. Res. Rec. 2005, 1908, 214–220.

- Uneke, C.J.; Okedo-Alex, I.N.; Akamike, I.C.; Uneke, B.I.; Eze, I.I.; Chukwu, O.E.; Otubo, K.I.; Urochukwu, H.C. Institutional roles, structures, funding and research partnerships towards evidence-informed policy-making: A multisector survey among policy-makers in Nigeria. Health Res. Policy Syst. 2023, 21, 36.

- Salager-Meyer, F. Scientific publishing in developing countries: Challenges for the future. J. Engl. Acad. Purp. 2008, 7, 121–132.

- Akena, F.A. Critical Analysis of the Production of Western Knowledge and Its Implications for Indigenous Knowledge and Decolonization. J. Black Stud. 2012, 43, 599–619.

- Chanza, N.; De Wit, A. Epistemological and methodological framework for indigenous knowledge in climate science. Indilinga Afr. J. Indig. Knowl. Syst. 2013, 12, 203–216.

- Castro Torres, A.F.; Alburez-Gutierrez, D. North and South: Naming practices and the hidden dimension of global disparities in knowledge production. Proc. Natl. Acad. Sci. USA 2022, 119, e2119373119.

- Nugent, R.; Feigl, A. Where Have All the Donors Gone? Scarce Donor Funding for Non-Communicable Diseases; Working Paper No. 228; Center for Global Development: Washington, DC, USA, 2010.

- Altbach, P.G. Peripheries and centers: Research universities in developing countries. Asia Pac. Educ. Rev. 2009, 10, 15–27.

- González-Dambrauskas, S.; Salluh, J.I.F.; Machado, F.R.; Rotta, A.T. Science over language: A plea to consider language bias in scientific publishing. Crit. Care Sci. 2024, 36, e20240084en.

- Ciocca, D.R.; Delgado, G. The reality of scientific research in Latin America; an insider’s perspective. Cell Stress Chaperones 2017, 22, 847–852.

- Oliver, K.A.; de Vocht, F. Defining ‘evidence’ in public health: A survey of policymakers’ uses and preferences. Eur. J. Public Health 2017, 27 (Suppl. S2), 112–117.

- Oliver, K.; Lorenc, T.; Innvær, S. New directions in evidence-based policy research: A critical analysis of the literature. Health Res. Policy Syst. 2014, 12, 34.

- Head, B.W. Toward more “evidence-informed” policy making? Public Adm. Rev. 2016, 76, 472–484.

- Head, B.W. Three lenses of evidence-based policy. Aust. J. Public Adm. 2008, 67, 1–11.

- Shulha, L.M.; Cousins, J.B. Evaluation use: Theory, research, and practice since 1986. Eval. Pract. 1997, 18, 195–208.

- Humphries, S.; Stafinski, T.; Mumtaz, Z.; Menon, D. Barriers and facilitators to evidence-use in program management: A systematic review of the literature. BMC Health Serv. Res. 2014, 14, 171.

- Liverani, M.; Hawkins, B.; Parkhurst, J.O. Political and institutional influences on the use of evidence in public health policy. A systematic review. PLoS ONE 2013, 8, e77404.

- Fang, S.; Stone, R.W. International organizations as policy advisors. Int. Organ. 2012, 66, 537–569.

- Barnett, M.; Duvall, R. Power in International Politics. Int. Organ. 2005, 59, 39–75.

- Greenhalgh, T.; Howick, J.; Maskrey, N. Evidence Based Medicine: A Movement in Crisis? BMJ 2014, 348, g3725.

- Pawson, R.; Greenhalgh, T.; Harvey, G.; Walshe, K. Realist Review—A New Method of Systematic Review Designed for Complex Policy Interventions. J. Health Serv. Res. Policy 2005, 10 (Suppl. S1), 21–34.

- Head, B.W. Reconsidering evidence-based policy: Key issues and challenges. Policy Soc. 2010, 29, 77–94.

- Erasmus, E.; Orgill, M.; Schneider, H.; Gilson, L. Mapping the existing body of health policy implementation research in lower income settings: What is covered and what are the gaps? Health Policy Plan. 2014, 29 (Suppl. S3), iii35–iii50.

- Naude, C.E.; Zani, B.; Ongolo-Zogo, P.; Wiysonge, C.S.; Dudley, L.; Kredo, T.; Garner, P.; Young, T. Research evidence and policy: Qualitative study in selected provinces in South Africa and Cameroon. Implement. Sci. 2015, 10, 126.

- Uneke, C.J.; Ezeoha, A.E.; Ndukwe, C.D.; Oyibo, P.G.; Onwe, F. Promotion of evidence-informed health policymaking in Nigeria: Bridging the gap between researchers and policymakers. Glob. Public Health 2012, 7, 750–765.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kabengele Mpinga, E.; Chebbaa, S.; Pittet, A.-L.; Kayumbi, G. Scientific Evidence in Public Health Decision-Making: A Systematic Literature Review of the Past 50 Years. Int. J. Environ. Res. Public Health 2025, 22, 1343. https://doi.org/10.3390/ijerph22091343
