Abstract

The gig economy has led to a new management style, using algorithms to automate managerial decisions. Algorithmic management has aroused the interest of researchers, particularly regarding the prevalence of precarious working conditions and the health issues related to gig work. Despite algorithmically driven remuneration mechanisms’ influence on work conditions, few studies have focused on the compensation dimension of algorithmic management. We investigate the effects of algorithmic compensation on gig workers in relation to perceptions of procedural justice and time-based stress, two important predictors of work-related health problems. Also, this study examines the moderating effect of algorithmic transparency in these relationships. Survey data were collected from 962 gig workers via a research panel. The results of hierarchical multiple regression analysis show that the degree of exposure to algorithmic compensation is positively related to time-based stress. However, contrary to our expectations, algorithmic compensation is also positively associated with procedural justice perceptions and our results indicate that this relation is enhanced at higher levels of perceived algorithmic transparency. Furthermore, transparency does not play a role in the relationship between algorithmic compensation and time-based stress. These findings suggest that perceived algorithmic transparency makes algorithmic compensation even fairer but does not appear to make it less stressful.

1. Introduction

In recent years, the labor market has been going through a period of radical change, characterized by the introduction of new technologies in businesses, ushering in a fourth industrial revolution [1,2]. One of the distinctive features of this revolution is the increasing number of organizations that use algorithms in their decision-making processes, automating tasks and responsibilities typically performed by managers. This is known as algorithmic management (AM), a process aimed at automating managerial decisions or assisting managers with algorithms [3,4,5]. While its use is increasing in traditional industries, this new form of management is prevalent in the gig economy, of which Uber is the most well-known platform, and where most of the operational management is performed using algorithms [5,6,7,8]. Platforms using this disruptive technology are found in numerous sectors, such as Upwork for freelance tasks; Task Rabbit for home services; or Amazon Mechanical Turk (MTurk), Microworkers and Clickworker for microtasks [5,9,10]. Moreover, due to the rise of the gig economy, this novel management method is experiencing notable growth, becoming a global phenomenon attracting millions of workers and consumers. In fact, while estimates vary, there are roughly 70 million workers, and this number is estimated to grow at a 26% annual pace [11,12]. Furthermore, it is estimated that 8% of workers in Canada participate in the gig economy, while smaller shares are found in the EU, UK and US, with 3%, 4% and 4.5%, respectively [9,13]. Also, India is estimated to have more than 3 million gig economy workers [14].

This rise has led researchers in different disciplines to study the impacts of this new business model, with a particular focus on the people who use these platforms to earn an income, called gig workers. Currently, the literature is struggling to catch up with real-world applications of AM [6]. Nevertheless, the literature highlights more unfavorable than positive effects for these workers, particularly regarding their working conditions, decreased autonomy, compensation schemes or the inherent safety issues and precariousness of gig work [5,15,16,17]. Furthermore, some authors also suggest that gig work is linked with mental health issues [18,19,20,21].

Despite its impact on working conditions in the gig economy, few researchers have looked specifically at algorithmic compensation, which refers to the near-complete automation of the calculation and subsequent granting of monetary rewards for gig work [5,22,23]. Indeed, the literature on this topic rarely distinguishes AM from its compensation component, thus offering little empirical knowledge on the subject [5]. In fact, this distinction is important to properly analyze the effects of algorithmic compensation and gig work. By examining this distinction in this study, we will be able to identify specific impacts, thereby broadening the analytical framework and understanding of gig work.

In particular, AM, including compensation practices, raises issues related to organizational justice, as the automation of managerial decisions to optimize productivity requires a degree of monitoring, control and task division that leaves little room for worker input [24,25,26,27]. For example, in order to assess work performance and calculate pay, a number of gig economy online labor platforms (OLPs), such as Foodora, Deliveroo and CrowdFlower, collect continuous GPS data and feedback from customers and colleagues, and may monitor facial expressions [5,26,28,29]. Given the scope and the impact of these algorithmic decisions on gig workers and their reduced voice and control over these procedures, procedural justice issues seem significant, because employee “voice” shapes procedural justice perceptions. It has been established that this has numerous consequences, notably in respect of occupational health and safety [30,31,32,33]. For example, Magnavita et al. [34] have shown that decreased procedural justice perceptions were related to mental and physical health issues.

Also, algorithmic compensation enables platforms to reward only the “productive” time of task completion, which seems to worsen working conditions in gig work, because these workers usually perform a number of unpaid related tasks, leading to feelings of stress due to the need to optimize so-called productive moments [24,35,36]. This race for efficiency seems to generate significant stress and work intensification. Indeed, several authors associate gig work and AM with a version of digital Taylorism, with similar practices to those pioneered by the scientific management of the previous century [35,37,38,39]. Despite the proliferation of literature on gig work, few researchers have empirically measured its impact on workers’ health [40,41]. Yet, decades of research on stress have shown across multiple perspectives the detrimental effects of work-related stress and pressure on individuals’ optimal functioning at work and in general life [42,43].

Furthermore, with the growing number of firms using AM there is a need to understand what may mitigate its detrimental effects on work conditions. Current research indicates that the opacity of operational algorithms, particularly those used for compensation, exacerbates deleterious effects on working conditions [44,45]. Hence, some authors suggest increasing transparency in these algorithmic systems in order to make the mechanisms leading to algorithmic decisions more explainable, thereby enabling workers to have greater voice, more power and, ultimately, more autonomy, thus making working conditions less difficult [5,8,22,24]. However, despite these assumptions, there are few empirical studies that evaluate the extent to which algorithmic transparency mitigates the impacts of AM on work conditions.

Given that the literature on this topic is at an early stage, the aim of this quantitative study is to contribute to a deeper understanding of these novel issues. More precisely, we analyze the influence of algorithmic compensation on gig workers’ perceptions of procedural justice and time-based stress. In addition, we will evaluate the effect of algorithmic transparency as a potential remedy for the impacts associated with algorithmic compensation.

Our paper furthers the understanding in this research area in several ways. First, by examining algorithmic compensation separately from AM, we will attempt to reconcile organizational reality with the literature, while proposing a replicable conceptualization. Also, this study contributes to the emerging thinking on the applicability of perceived organizational justice, particularly its procedural tangent, within the gig economy. Finally, this paper fosters nuance and deepens the knowledge related to the influence of transparency in AM but also to its limitations.

On the practical side, given the explosive growth of the gig economy, this study could contribute to ongoing efforts and discussions to improve working conditions. Our research could detail certain impacts of transparency as well as the influence of algorithmic compensation, potentially helping decision makers, gig workers or platforms examining AM.

This paper is organized as follows. The next section reviews the literature on algorithmic compensation and the other variables of our study, namely procedural justice, time-based stress and algorithmic transparency. The ‘Methodology’ section details our research methods, while the ‘Results’ section displays our findings and states the descriptive statistics in our sample. Then, our ‘Discussion’ section highlights the theoretical and practical contributions of this study, while our final section offers a short conclusion.

2. Literature Review

2.1. Algorithmic Compensation and Procedural Justice

Organizational justice is defined as the role that fairness plays in employees’ working conditions and perceptions of them [46,47]. Colquitt et al. [48] have shown that it can be modeled in three branches: distributive justice, which focuses on fairness in the allocation of resources; procedural justice, which refers to workers’ perceptions of fairness in the decision-making process leading to resource allocation; and interactional justice, which focuses on the perception of fairness related to individual treatment in work processes [48].

In order to assess procedural justice perceptions, Leventhal’s [49] justice judgment model seems the most generally accepted theoretical conceptualization. In fact, organizational perceptions of procedural justice are based on the degree to which workers consider that organizational procedures meet the six criteria proposed by Leventhal [49], namely, consistency, bias-suppression, accuracy, correctability, representativeness and ethicality. The current literature suggests that algorithmic compensation as perceived by gig workers likely violates several of these rules.

First, OLPs automate performance management by disciplining or rewarding workers, based on consumer ratings [8]. Indeed, gig workers who do not achieve adequate customer satisfaction scores can be penalized, whereas top performers may receive increased rewards [50,51]. However, customers often fail to provide feedback when tasks are completed and gig workers have virtually no opportunity to give their assessment when these automated systems malfunction [51,52]. Therefore, performance is often evaluated using a curtailed view, as gig workers find themselves without influence on this mechanism. These situations seem to suggest a lack of information accuracy, a criterion in the justice judgment model, which emphasizes the importance of only using complete information in organizational processes. Moreover, several studies report accounts from gig workers highlighting the unfair, subjective and volatile nature of this facet of algorithmic compensation [25,27,36,44].

Second, the weight of customer satisfaction on gig workers’ compensation, which is reflected both in the job evaluation of the service they provided and in tips received, is far from being free of bias. Several authors have raised the sometimes-discriminatory nature of customer evaluations, which are not subject to any verification and thus rely on everyone’s various biases [51,53,54,55,56]. Furthermore, electronic customer reviews seem prone to be biased by emotional contagion when visible to other customers [57]. This evidence suggests that the weight of customer evaluations in algorithmic compensation is likely to violate the bias-suppression criterion.

Third, this automated client-assessment-dependent compensation is often claimed to be more accurate, as it is said to be less error-prone than traditional appraisal [16,58]. However, when these automated systems malfunction, or when a malicious customer gives a review, there is virtually no recourse for gig workers, particularly following a negative evaluation [8,27,59,60]. Many accounts detail that this phenomenon is fraught with consequences: on the one hand, platforms can unilaterally disable collaboration and thus deprive individuals of their income, and on the other hand, gig workers do not have adequate channels to appeal these decisions and restore their employment [27,44,59,60]. These situations seem to run counter to the correctability criterion of Leventhal [49]. In fact, allowing individuals to appeal organizational decisions increases the perceptions of procedural justice.

Fourth, algorithmic compensation mechanisms are subject to unilateral and unannounced changes, as OLPs are private firms with gig workers viewed as contractors, not employees. This situation may lead to significant consequences for these gig workers, who see their wage potential changed from one day to the next, which leads to feelings of apprehension and acts of defiance [27,59,61]. These repeated changes in the retribution mechanisms create unpredictability in algorithmic compensation systems and seem to run counter to the criterion of uniformity and consistency of procedures. Indeed, even if these rules are generally applied uniformly to platform workers, the lack of stability over time can undermine the perceptions of procedural justice.

Fifth, the literature suggests that algorithmic compensation in the gig economy is organized without gig workers’ input, increasing the platforms’ control level and reducing employee “voice” [5,27,52,58,62,63]. Indeed, gig workers have limited means of influencing workflow management, customer reviews or the tipping system. According to Colquitt et al. [64], the criteria of Leventhal [49] and “voice” are the two items most strongly shaping procedural justice perceptions.

By virtue of these arguments, we propose the following hypothesis:

Hypothesis 1:

Algorithmic compensation is negatively related with procedural justice perceptions.

2.2. Algorithmic Compensation and Time-Based Stress

The gig economy offers its workers great flexibility in scheduling, which seems to be the most attractive aspect of gig work, leading to increased job satisfaction [18,65,66,67,68,69]. However, this creates a paradox, as the literature suggests that AM reduces workers’ autonomy due to externally defined goals, rigid time constraints and increased monitoring [5,16,70]. These characteristics of algorithmic compensation appear to accentuate time constraints and intensify workload, which are associated with time-based stress [71,72]. Time-based stress refers to feelings of anxiety related to the increased work demands employees may perceive in having too little time to complete a task [73]. Furthermore, according to several authors, gig work is akin to piece-rate work, an intense form of pay-for-performance [39,58,59,74]. In fact, piece-rate compensation enables OLPs to compensate only “productive” time, which results in gig workers spending long unpaid stretches on OLPs looking for work, leading to lengthier work hours and the incentive to accept as many gigs as possible, even disliked or dangerous tasks [24,36].

Also, while meal delivery platforms offer gig workers a flexible schedule, they also constantly measure, guide and limit trips in order to standardize service and may penalize gig workers for not meeting their targets [26,39,65]. Furthermore, these control mechanisms can fail, sending bikers into car-only lanes or missing GPS data, preventing workers from receiving their delivery, thereby prolonging the trips and undermining customer satisfaction and productivity goals [26,65,69,75]. While gig workers have limited control vis-à-vis these algorithmic compensation issues, they may still be penalized by them. According to Karasek Jr.’s [76] stress model, this kind of reduced worker autonomy leads to feelings of job stress. Studies have shown that restaurants not having orders ready on time, gig workers having their means of transport stolen, customer behaviors and the platform mechanisms themselves can lengthen the time of a task [15,59,69,75,77]. Furthermore, the algorithmically set objectives need to be achieved during bad weather, which increases the risk of accidents [15,77]. Despite these arguments, few researchers seem to have empirically measured algorithmic compensation’s impact on time-based stress and workers’ health [40,41]. Nevertheless, for these reasons, we propose the following hypothesis:

Hypothesis 2:

Algorithmic compensation is positively related to time-based stress.

2.3. The Moderating Role of Algorithmic Transparency

As we noted earlier, the literature regarding transparency in algorithmic compensation appears to be in an early stage and few studies have measured its effects. Nonetheless, the generalized opacity of AM algorithms leads to several issues in the gig economy. Algorithmic transparency refers to the explainability of automated decisions as well as the degree to which individuals understand them [78]. In particular, according to Malhotra [79], this lack of transparency may exacerbate procedural justice problems in gig work. Indeed, opacity in customer appraisals, tipping mechanisms and changes in algorithmic procedures in ride-sharing platforms could cause AM to negatively impact procedural justice perceptions, thus causing adverse health effects [34,79]. For example, some gig economy firms do not inform gig workers which performance criteria are assessed for increased monetary rewards [40]. This opacity seems to hinder the ability to evaluate the criteria of consistency, bias-suppression, accuracy, correctability and representativeness of Leventhal [49]. Furthermore, the lack of transparency in these automated systems seems to exacerbate the loss of “voice” from algorithmic compensation and increases anxiety related to procedures, because gig workers do not have the tools to understand them and thus cannot offer meaningful input [5,24,27,80,81]. Therefore, with greater transparency, gig workers could be informed and able to judge procedures for themselves, seemingly leading to increased procedural justice perceptions.

Also, the opacity of AM systems appears to exacerbate the development of feelings of misunderstanding, uncertainty, frustration, reduced trust and dignity, work overload and stress [5,7,8,52,82,83,84,85]. For example, according to Parent-Rocheleau and Parker [5], a lack of transparency in algorithmic performance management is likely to lead to role ambiguity, as gig workers rarely have explanations about how the algorithms work or how work data are used. A study by Bucher, Schou and Waldkirch [24] points out that gig workers invest considerable time and effort in unpaid tasks, leading to an intensification of workload, because what the algorithms measure, and thus the criteria for compensation, are unknown. Furthermore, a lack of algorithmic transparency in automated decision systems prevents individuals from assessing them, so they may be inclined to accept every decision, which may lead to work overload and thus feelings of time-based stress [22]. For instance, in ride-sharing platforms, gig workers do not have access to the details of the next ride before accepting it and are subject to time constraints to accept a task. Unable to evaluate the following job specifically, they resign themselves to accepting every gig offered by these platforms [8,16]. Similarly, algorithmic transparency has been found to foster fairness perceptions and prevent withdrawal intentions among truck drivers [86]. As a result, more transparency in these algorithmic compensation systems could increase decision-making and control for gig workers. Increasing gig workers’ control over their tasks could denote a resource that can alleviate the effects of time-based stress [5,76,87]. Based on these arguments, we propose the following hypotheses:

Hypothesis 3:

Perceived algorithmic transparency will moderate the relationship between algorithmic compensation and procedural justice, such that this relationship will be weaker when perceived transparency is high.

Hypothesis 4:

Perceived algorithmic transparency will moderate the relationship between algorithmic compensation and time-based stress, such that this relationship will be weaker when perceived transparency is high.

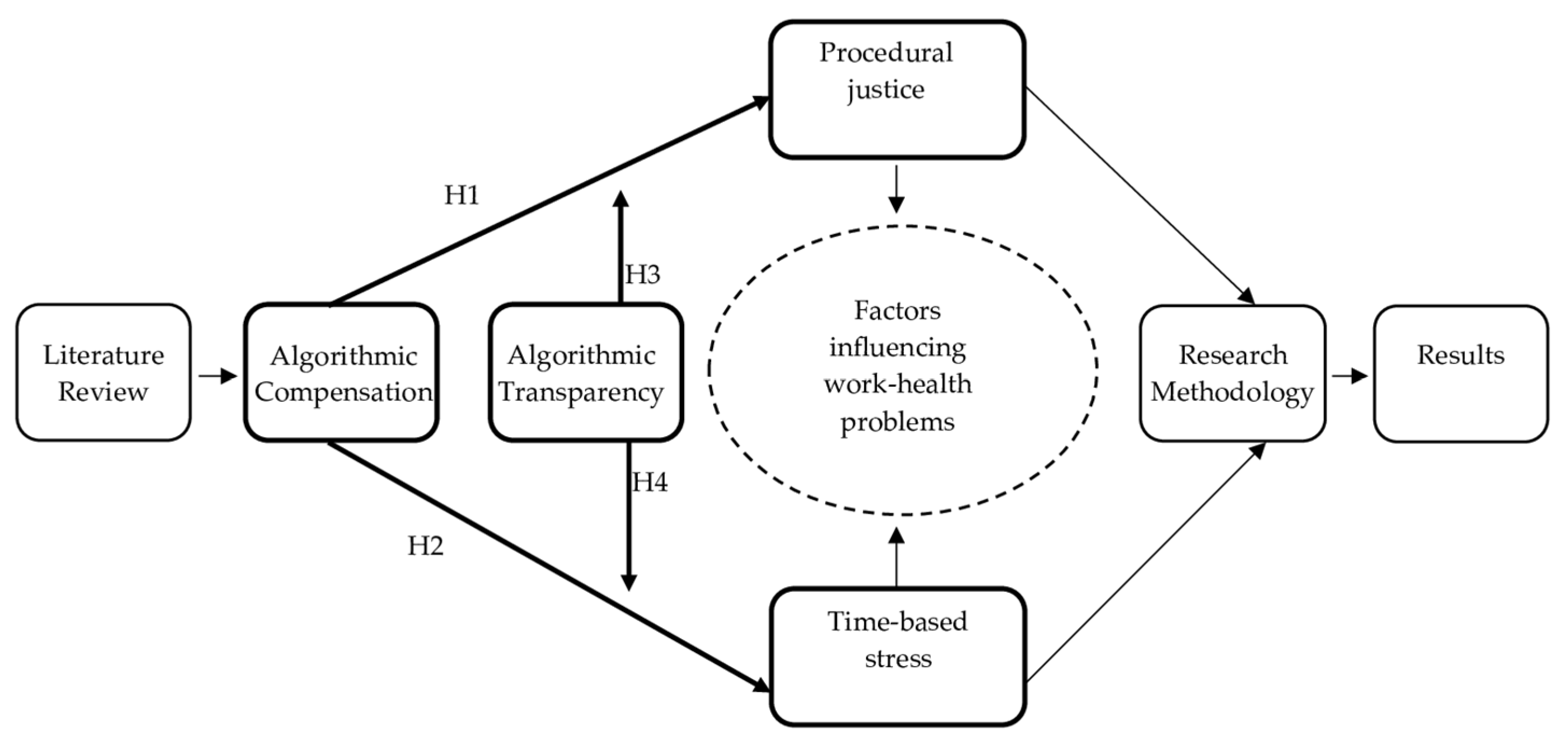

In order to illustrate the novelty of our proposed model and emphasize that numerous factors may influence worker health issues, we offer the following flowchart of this study in Figure 1. Furthermore, our hypotheses and variables, concerning the moderating effect of transparency in the relationship between algorithmic compensation and procedural justice are shown in bold.

Figure 1.

Flowchart of this study showing the process in which algorithmic compensation may influence work-health problems.

3. Methodology

3.1. Participants and Procedure

Our literature review notes a lack of quantitative empirical research on the variables under study and the need to diversify the methodological approaches in this field. With this in mind, we opt for a hypothetico-deductive and quantitative approach in order to test our hypotheses on data collected from gig workers. As part of a larger research project, data collection in our study was conducted using an external research panel. This panel combined social networks, a pre-established bank of respondents and crowd-work platforms, such as Mechanical Turk, in its solicitation for our project. We aimed for a target of 1500 participants.

Participants were paid a fee of USD 5 for participating in the study. A total of 1200 gig workers took part in this study, which led to a final sample of 962, after removing incomplete surveys and participants who failed attention checks [88].

Of the respondents, 59.8% were male, while 38.8% were female, which seems to indicate, similarly with the literature, that there exists a male preponderance in the gig economy [89]. Furthermore, participants were coded in seven age categories, namely under 18 years old (0.1%), between 18 and 24 years old (11.5%), between 25 and 34 years old (36.4%), between 35 and 44 years old (32.2%), between 45 and 54 years old (13.1%), between 55 and 64 years old (5.5%) and between 65 and 74 years old (1.3%). Moreover, 53.6% of respondents worked for crowd-work platforms, like MTurk, whereas 43.2% worked for app-work platforms like Uber. A total of 63.1% of the sample indicated that gig work was not their primary source of income, while this was the case for 36.9% of the respondents. Also, 83.5% of respondents resided in the USA, whereas 13.4% lived in India.

3.2. Measures

3.2.1. Perceived Exposure to Algorithmic Compensation

To measure our independent variable, we used the four items measuring perceived exposure to algorithmic compensation, which are part of the AMQ recently developed and validated by Parent-Rocheleau et al. [90]. Sample items are “A large part of my compensation is determined by an automated system” and “An automated system is responsible for calculating my pay, with no human intervention” [90]. Items were rated using a scale ranging from 1 (strongly disagree) to 7 (strongly agree) [90]. This scale’s Cronbach’s alpha (α) was 0.93.
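For readers less familiar with the reliability coefficient reported for each scale, the following is a minimal sketch of how Cronbach's alpha is conventionally computed from item-level responses. The function name `cronbach_alpha` and the toy responses are hypothetical illustrations; they are not the study's data or the software actually used.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's summed scale score
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical answers of five respondents to a four-item, 7-point scale
toy_responses = [
    [7, 6, 7, 6],
    [5, 5, 4, 5],
    [2, 3, 2, 2],
    [6, 6, 5, 6],
    [3, 2, 3, 3],
]
alpha = cronbach_alpha(toy_responses)
print(f"alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items vary together, which is the sense in which the coefficients reported for the four scales signal internal consistency.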

3.2.2. Procedural Justice

To measure procedural justice perceptions, we used the seven-item scale by Colquitt [91]. Examples of the items are: “I had influence over the outcomes of those procedures” and “Those [compensation] procedures have been based on accurate information”. Answers were rated using a scale ranging from 1 (strongly disagree) to 7 (strongly agree). This scale’s Cronbach’s alpha (α) was 0.89.

3.2.3. Time-Based Stress

To measure our second dependent variable, we used the four-item scale developed by Kinicki and Vecchio [73]. Examples of the items are: “I have to rush in order to complete my job” and “I am constantly working against the pressure of time”. Many researchers examining time constraints leading to feelings of job stress have used this measurement tool [92,93,94,95]. Moreover, these authors examined this variable across a number of different work environments, suggesting this measurement could also be valid in an atypical workplace as found in the gig economy. Answers were rated using a scale ranging from 1 (strongly disagree) to 7 (strongly agree). This scale’s Cronbach’s alpha (α) was 0.91.

3.2.4. Perceived Algorithmic Transparency

Algorithmic transparency in compensation was measured using four items derived from the scale proposed by Bujold, Parent-Rocheleau and Gaudet [86]. Examples of the items are: “I am aware of how the automated system calculates my remuneration” and “It is easy to predict how much I will receive as a compensation for my work”. Answers were rated using a scale ranging from 1 (strongly disagree) to 7 (strongly agree). This scale’s Cronbach’s alpha (α) was 0.87.

3.2.5. Control Variables

We controlled for four socio-demographic variables (age, gender, platform type, primary source of income) and positive orientation towards technological change, a dimension of positive appraisal toward technological change, as many authors argue these characteristics can influence responses to technology [18,96,97,98,99,100,101].

4. Results

4.1. Confirmatory Factor Analyses

In order to evaluate the validity of our measurement model, we performed a confirmatory factor analysis (CFA) using AMOS 26.0 software. This consists of estimating the joint effects of our variables, allowing a comparison between the selected model and the unobservable models [102,103]. We evaluated the fit of our model using the root mean square error of approximation (RMSEA), the Tucker–Lewis Index (TLI) and the comparative fit index (CFI). For TLI and CFI, we regard values over 0.90 as an adequate fit, whereas for RMSEA, values under 0.08 would be acceptable and the best cases would be less than 0.05 [103]. Our theoretical four-factor model (algorithmic compensation, algorithmic transparency, procedural justice and time-based stress) compared favorably with the two alternative models tested: a three-factor model (algorithmic compensation and transparency paired together, procedural justice and time-based stress) and a one-factor model. Only the four-factor model respected the established critical values, namely χ2 = 990.5 (219), CFI = 0.93, TLI = 0.91 and RMSEA = 0.07, suggesting better reliability for our constructs [103,104]. The three-factor model had χ2 = 1328.5 (222), CFI = 0.89, TLI = 0.88 and RMSEA = 0.08, and the one-factor model resulted in χ2 = 3764 (225), CFI = 0.66, TLI = 0.61 and RMSEA = 0.15. In short, the CFA results indicate that our theoretical four-factor model is a statistically preferable choice for the analysis of our constructs.
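The decision rule described above can be made explicit with a short sketch that applies the stated cutoffs (CFI and TLI over 0.90, RMSEA under 0.08) to the three sets of fit indices reported in this section. The class and function names are hypothetical; the analysis itself was run in AMOS, not with this code.

```python
from dataclasses import dataclass


@dataclass
class FitIndices:
    name: str
    cfi: float
    tli: float
    rmsea: float


def meets_cutoffs(fit, min_cfi_tli=0.90, max_rmsea=0.08):
    """True when CFI and TLI exceed 0.90 and RMSEA is below 0.08."""
    return fit.cfi > min_cfi_tli and fit.tli > min_cfi_tli and fit.rmsea < max_rmsea


# Fit indices as reported in the text for the three competing models
models = [
    FitIndices("four-factor", cfi=0.93, tli=0.91, rmsea=0.07),
    FitIndices("three-factor", cfi=0.89, tli=0.88, rmsea=0.08),
    FitIndices("one-factor", cfi=0.66, tli=0.61, rmsea=0.15),
]
results = {m.name: meets_cutoffs(m) for m in models}
for name, ok in results.items():
    print(f"{name}: {'meets' if ok else 'fails'} the cutoffs")
```

Under these thresholds, only the four-factor model passes, which matches the conclusion drawn in the paragraph above.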

4.2. Descriptive Statistics and Correlations

The descriptive statistics and correlations among the variables of this study can be found in Table 1. We can observe that algorithmic compensation is positively correlated with procedural justice (r = 0.42, p < 0.01) and time-based stress (r = 0.29, p < 0.01). This result contrasts a priori with our first hypothesis, which posited that the relationship with procedural justice is negative. Furthermore, we also performed Harman’s Single Factor Test using SPSS, in order to examine common method variance bias in our research variables, using a threshold of 50%. The single factor accounted for 35.25% of the variance, suggesting that our instruments should not significantly bias our results [105].
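As an illustration of the logic behind Harman's single-factor test, the sketch below approximates it as the share of total variance captured by the first unrotated principal component of the item correlation matrix. This is an assumption made for illustration: SPSS offers several extraction methods, and the one used in the study is not specified here. The simulated items and the function name `first_factor_share` are hypothetical.

```python
import numpy as np


def first_factor_share(data):
    """Share of total variance captured by the first unrotated principal component."""
    corr = np.corrcoef(data, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)  # returned in ascending order
    return eigenvalues[-1] / eigenvalues.sum()


# Simulated data: two independent latent constructs, three noisy items each
rng = np.random.default_rng(0)
n = 500
construct_a = rng.normal(size=(n, 1))
construct_b = rng.normal(size=(n, 1))
items = np.hstack([
    construct_a + 0.8 * rng.normal(size=(n, 3)),
    construct_b + 0.8 * rng.normal(size=(n, 3)),
])

share = first_factor_share(items)
print(f"first factor explains {share:.1%} of the variance")
print("below the 50% threshold" if share < 0.50 else "above the 50% threshold")
```

When a single factor captures less than half of the variance, as with the 35.25% reported above, common method bias is usually considered unlikely to dominate the results.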

Table 1.

Descriptive statistics and correlations among research variables.

4.3. Hypothesis Testing

In order to test our hypotheses, we performed hierarchical regressions on each of our dependent variables in three steps. First, we constructed models (1 and 4) with our control variables. Second, we added our independent variable (models 2 and 5). Our last models (3 and 6) consisted of the previous variables plus our moderator and its interaction term with the independent variable. These results are presented in Table 2.
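The three-step procedure can be sketched as follows: fit nested ordinary least squares models and track the change in R² at each step. The data below are simulated with arbitrary coefficients and a single hypothetical control variable, so the numbers bear no relation to Table 2; mean-centering the predictors before forming the product term is a common convention assumed here, not a detail stated in the text.

```python
import numpy as np


def fit_ols(y, predictors):
    """Return (R squared, coefficients) of an OLS model with an intercept."""
    X = np.column_stack([np.ones(len(y))] + predictors)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    return 1.0 - residuals.var() / y.var(), beta


# Simulated, hypothetical data (not the study's sample)
rng = np.random.default_rng(42)
n = 1000
control = rng.normal(size=n)
compensation = rng.normal(size=n)
transparency = rng.normal(size=n)
justice = (0.2 * control + 0.3 * compensation + 0.3 * transparency
           + 0.15 * compensation * transparency + rng.normal(size=n))

# Mean-center the predictor and moderator before building the interaction term
comp_c = compensation - compensation.mean()
transp_c = transparency - transparency.mean()

r2_step1, _ = fit_ols(justice, [control])                      # controls only
r2_step2, _ = fit_ols(justice, [control, comp_c])              # + independent variable
r2_step3, coefs = fit_ols(justice, [control, comp_c, transp_c,
                                    comp_c * transp_c])        # + moderator and interaction

delta_r2_step2 = r2_step2 - r2_step1
delta_r2_step3 = r2_step3 - r2_step2
print(f"R2: {r2_step1:.3f} -> {r2_step2:.3f} -> {r2_step3:.3f}")
print(f"interaction coefficient: {coefs[-1]:.3f}")
```

The ΔR² of the final step indicates how much the moderator and its interaction term add beyond the controls and the independent variable.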

Table 2.

Results of hierarchical regressions.

Hypothesis 1 holds that algorithmic compensation would be negatively related to procedural justice perceptions. Yet, and surprisingly, the results of Model 2 suggest that the relationship between these two variables is positive and significant (β = 0.24, p < 0.001). Thus, Hypothesis 1 is rejected.

With respect to our second dependent variable, we proposed in Hypothesis 2 that algorithmic compensation would be positively related to time-based stress. Table 2 shows that this relationship is also positive and significant (β = 0.23, p < 0.001). As a result, Hypothesis 2 is supported.

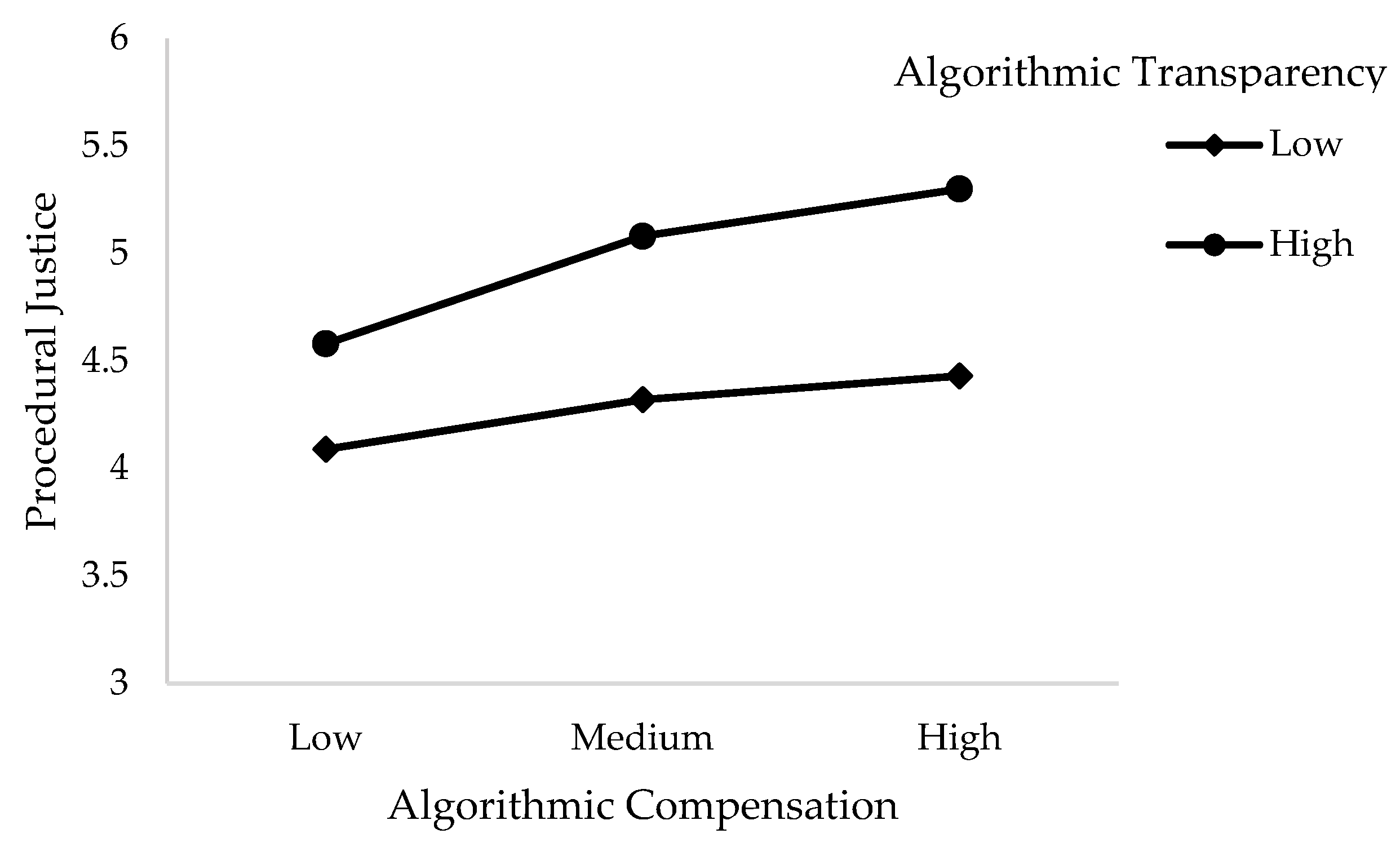

Hypotheses 3 and 4 hold that algorithmic transparency moderates the relationships between the independent variable and the two dependent variables, so that these relationships will be weaker when perceived transparency is high. As can be seen in Table 2, only one of the two interactions tested is significant. Indeed, the moderating effect in the relationship between algorithmic compensation and procedural justice is significant (β = 0.05, p < 0.001) and significantly improves the model’s ability to explain the variance in procedural justice (ΔR2 = 0.09 ***). In Hypothesis 3, we proposed that increasing algorithmic transparency could mitigate the negative effect of algorithmic compensation on procedural justice perceptions. Instead, it appears to increase the positive relationship between these two variables. Therefore, such a result suggests that our reasoning is not essentially flawed, as the moderating effect appears to explain some of this relationship. Thus, Hypothesis 3 is partially supported.

The interaction term in Model 6 of Table 2, predicting time-based stress, is not significant, nor does it help explain the variance in time-based stress across individuals. Indeed, it appears that the positive relationship between algorithmic compensation and time-based stress is independent of perceptions of algorithmic transparency; thus, Hypothesis 4 is rejected.

Figure 2 consists of simple slopes from our regressions for Hypothesis 3, allowing us to visualize the moderating effects under study. Indeed, even if the coefficient of Hypothesis 3 is low, we can observe that the higher the algorithmic transparency, the more pronounced the relationship between algorithmic compensation and procedural justice.

Figure 2.

Moderating effect of transparency in the relationship between algorithmic compensation and procedural justice.
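A simple-slopes plot of this kind rests on a short calculation: in a model with an interaction term, the slope of the predictor at a given moderator value equals the predictor's coefficient plus the interaction coefficient times that moderator value. The sketch below uses hypothetical unstandardized coefficients and a hypothetical standard deviation for a mean-centered moderator; it does not reproduce the estimates behind Figure 2.

```python
def simple_slope(b_predictor, b_interaction, moderator_value):
    """Slope of the predictor on the outcome at a given (centered) moderator value."""
    return b_predictor + b_interaction * moderator_value


# Hypothetical coefficients, chosen only for illustration
b_compensation = 0.20   # effect of compensation when transparency is at its mean
b_interaction = 0.05    # compensation x transparency
sd_transparency = 1.0   # standard deviation of the centered moderator

slope_low = simple_slope(b_compensation, b_interaction, -sd_transparency)
slope_high = simple_slope(b_compensation, b_interaction, +sd_transparency)
print(f"slope at -1 SD transparency: {slope_low:.2f}")
print(f"slope at +1 SD transparency: {slope_high:.2f}")
```

With a positive interaction coefficient, the slope is steeper at high transparency than at low transparency, which is the pattern described for Hypothesis 3.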

5. Discussion

5.1. Theoretical Contributions

This study contributes to the growing literature on the effects of AM, particularly concerning algorithmic compensation. Indeed, few studies have focused on this topic, and they generally examine this concept interchangeably with, or as a function of, AM [52,59,80,84]. To our knowledge, this study is the first to quantitatively measure the effects of algorithmic compensation and its relationship to procedural justice perceptions and time-based stress. Algorithmic transparency has received more attention from researchers, but no study has applied this concept specifically to algorithmic compensation. We were able to conceptualize it and measure its unexpected impacts, thus opening new avenues, based on more generalizable results.

The results regarding our first hypothesis, which expected a negative relationship between algorithmic compensation and procedural justice, are counterintuitive and thus raise some questions. The positive association between algorithmic compensation and perceived justice of procedures can first be interpreted in the light of the specificities of gig work realities. Whereas a vast literature on artificial intelligence (un)fairness highlights the phenomenon of algorithmic aversion and the risks and biases associated with AI tools, another stream of research has started to show that humans may prefer algorithmic decisions to human-made decisions in certain circumstances and for some types of decisions [106,107,108,109]. We believe that gig workers specifically, because they engage in this work knowing that most of the procedures are technology-mediated and free of human intervention, are likely to associate higher levels of exposure to algorithmic compensation with greater levels of procedural justice. In other words, the justice expectations of gig workers might be higher when everything is automated, whereas human intervention could be perceived as a risk of unfairness.

Relatedly, this raises a question regarding the applicability of the Leventhal [49] criteria, the dominant theoretical framework of procedural justice, to the reality of gig work [110]. Although we do not answer it here, highlighting this question sets the stage for a potentially important theoretical development, given that the gig economy's growth will probably continue in the years to come. Thus, the fact that our quantitative data appear to support certain theoretical leads is a useful contribution to the current literature.

However, our results are consistent with the hypothesis that algorithmic compensation is associated with greater time-based stress for workers. This contributes to the literature on working conditions among OLPs, showing that exposure to the algorithmic compensation mode, characterized by piecework pay and algorithmic control over tips and gig allocation, is a risk factor for workers. This is because algorithmic compensation forces them to work faster and longer to make a living.

Finally, algorithmic transparency as a field of research is growing rapidly [78,84,111]. On the one hand, the results of this study support calls for greater explicability in algorithmic decisions by suggesting that transparency fosters greater procedural justice perceptions in algorithmic management. On the other hand, this study also notes that, rather than representing a panacea for all of AM's ills, transparency fails to mitigate its stressful effect. In other words, algorithmic compensation is stressful, even when the platform provides details and information regarding automated decision-making criteria and processes. First, it could be that some forms of transparency are too cognitively demanding to help mitigate stress. Second, and worse, it could be that transparency also uncovers information regarding pay procedures that contributes to (rather than reduces) stress. Third, it could simply be that transparency does not change the stressful nature of piecework pay and pay for performance. Thus, our results could inform future research aimed at clarifying the nuanced effect of algorithmic transparency.

5.2. Practical Contributions

Our results suggest that transparency has a limited range of applications as a remedy for the consequences of algorithmic compensation. Indeed, our results show that, despite a significant moderating effect in the case of procedural justice perceptions, increasing transparency does not seem to mitigate the time-based stress due to gig work. Thus, the contribution for actors in these industries is manifold, especially for platforms aiming to self-regulate, legislators seeking to build a framework around the use of algorithms in the labor market, and platform designers and managers concerned about worker health. Our results indicate that transparency can be beneficial, but that organizational realities must be considered as a whole in order to maintain a balanced view of the contribution of explicability in an automated system. This result nuances the generally accepted view of AM, which presents algorithmic transparency as a solution to most of the deleterious effects caused by these systems [7,112,113,114]. Thus, to reduce time-based stress, a reevaluation of other aspects of algorithmic compensation, such as pay-for-performance, tip management and the preponderance of customer appraisals, could be needed.

Our study also contributes to the reflections and attempts to regulate gig work. Indeed, our results clearly show that compensation practices are neither necessarily nor fundamentally unfair. Thus, on the one hand, this de-demonization allows us to foresee a great possibility of responsible algorithmic compensation, provided that the practices are supervised. On the other hand, our results also argue for such a framework, given the evidence of the time-based stress experienced by gig workers.

Our contributions also extend beyond the gig economy and are informative for traditional workplaces where AM is implemented. Workers in different sectors with traditional employment arrangements (e.g., transportation, logistics, warehouses, retail, healthcare, factories) are increasingly exposed to AM, including algorithmic compensation practices [22]. The literature reveals similar problems of opacity in algorithmic systems in these settings. Hence, our findings indicate to managers that these practices are seen as stressful and unfair. Albeit an incomplete one, greater transparency in algorithmic pay determination represents a solution to reestablish the perceived justice surrounding these practices.

5.3. Limitations and Future Studies

The first limitation of this study relates to the sampling method, since the questionnaire respondents could share common traits, thus reducing the generalizability of the results. In this case, 83.5% of the respondents were American, and this geographical concentration is a limitation of our study, given that the population under study consists mainly of residents of emerging countries [115]. Moreover, these results concerning platform workers seem difficult to generalize to other industries.

A further methodological limitation arises from our cross-sectional design, in which variables were self-reported and measured in a single questionnaire. This type of design leaves room for common method variance bias. Although our validity analyses and low-to-moderate correlations suggest that this risk of bias is moderate, studies aiming to replicate our results would greatly benefit from spreading the measures over time and including constructs assessed by third parties. Another methodological limitation of this study is the number of control variables. Given the sample size and the fact that these are new constructs, more control variables, such as trust and job satisfaction, could have better isolated the measured effects.

Moreover, despite interesting results that are at odds with the current literature, further studies are needed to investigate this research topic and to ensure the validity of our results. First, it could be rewarding to examine in more detail the effect of transparency as a predictor of procedural justice. Indeed, our results suggest that it acts more as an antecedent than a moderator. Second, it would be interesting to analyze the impacts of perceived transparency in relation to algorithmic compensation and AM, especially when transparency reaches high levels. Indeed, a study on the marginal effect of transparency would allow us to examine certain propositions in the literature, indicating that above a certain level transparency produces unfavorable effects [7,111]. Third, in order to broaden the scope of the research, it would be interesting to study the relationship between the primary source of income and time-based stress. Indeed, our results suggest that this relationship is strong, more so than those tested in our hypotheses, and many authors already distinguish these types of gig workers [13,28,100,116]. Thus, future research could investigate the differences between these populations, allowing for an even finer-grained analysis of the effects of algorithmic compensation. Finally, examining other dimensions of organizational justice in relation to algorithmic compensation could be of interest, allowing for a comparison with the surprising results of this research.

6. Conclusions

The objective of this study was to analyze the influence of algorithmic compensation on perceptions of procedural justice and time-based stress and to evaluate the moderating effect of transparency in these relationships. The study allowed us to achieve this objective and produced surprising findings, since our results show that algorithmic compensation is positively related to both procedural justice and time-based stress. Also, algorithmic transparency strengthens the former relationship, but we did not find an effect on the latter. We believe that this study will deepen the knowledge regarding gig work and new management methods such as algorithmic compensation and management. Thus, our empirical analysis contributes to the still early literature on this topic, opens new avenues for research, and contributes to the development of methods in this industry.

Author Contributions

Conceptualization, B.S. and X.P.-R.; formal analysis, B.S.; funding acquisition, X.P.-R.; data collection, X.P.-R.; methodology, B.S.; project administration, X.P.-R.; writing—original draft preparation, B.S.; writing—review and editing, X.P.-R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Social Sciences and Humanities Research Council of Canada (SSHRC; grant number 756-2019-0094).

Institutional Review Board Statement

The study was conducted in accordance with the ethical guidelines and approved by the Ethics Committee of HEC Montréal.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Schwab, K. The Fourth Industrial Revolution; Crown Publishing: New York, NY, USA, 2017. [Google Scholar]

- Pereira Pessôa, M.V.; Jauregui Becker, J.M. Smart design engineering: A literature review of the impact of the 4th industrial revolution on product design and development. Res. Eng. Des. 2020, 31, 175–195. [Google Scholar] [CrossRef]

- Meijerink, J.; Keegan, A. Conceptualizing human resource management in the gig economy. J. Manag. Psychol. 2019, 34, 214–232. [Google Scholar] [CrossRef]

- Meijerink, J.; Boons, M.; Keegan, A.; Marler, J. Algorithmic human resource management: Synthesizing developments and cross-disciplinary insights on digital HRM. Int. J. Hum. Resour. Manag. 2021, 32, 2545–2562. [Google Scholar] [CrossRef]

- Parent-Rocheleau, X.; Parker, S.K. Algorithms as work designers: How algorithmic management influences the design of jobs. Hum. Resour. Manag. Rev. 2022, 32, 100838. [Google Scholar] [CrossRef]

- Cheng, M.M.; Hackett, R.D. A critical review of algorithms in HRM: Definition, theory, and practice. Hum. Resour. Manag. Rev. 2021, 31, 100698. [Google Scholar] [CrossRef]

- Gal, U.; Jensen, T.B.; Stein, M.-K. Breaking the vicious cycle of algorithmic management: A virtue ethics approach to people analytics. Inf. Organ. 2020, 30, 100301. [Google Scholar] [CrossRef]

- Kellogg, K.C.; Valentine, M.A.; Christin, A. Algorithms at work: The new contested terrain of control. Acad. Manag. Ann. 2020, 14, 366–410. [Google Scholar] [CrossRef]

- Rani, U.; Kumar Dhir, R.; Furrer, M.; Gőbel, N.; Moraiti, A.; Cooney, S. World Employment and Social Outlook: The Role of Digital Labour Platforms in Transforming the World of Work; International Labour Organisation: Geneva, Switzerland, 2021. [Google Scholar]

- De Stefano, V. The rise of the just-in-time workforce: On-demand work, crowdwork, and labor protection in the gig-economy. Comp. Lab. L. Pol’y J. 2015, 37, 471. [Google Scholar] [CrossRef]

- Lata, L.N.; Burdon, J.; Reddel, T. New tech, old exploitation: Gig economy, algorithmic control and migrant labour. Sociol. Compass 2023, 17, e13028. [Google Scholar] [CrossRef]

- Fernández-Macías, E.; Urzì Brancati, C.; Wright, S.; Pesole, A. The Platformisation of Work; Joint Research Centre (Seville Site): Sevilla, Spain, 2023. [Google Scholar]

- Hou, F.; Lu, Y.; Schimmele, C. Measuring the Gig Economy in Canada Using Administrative Data; Statistics Canada: Ottawa, ON, Canada, 2019. [Google Scholar]

- Ghosh, A.; Ramachandran, R.; Zaidi, M. Women Workers in the Gig Economy in India: An Exploratory Study; Elsevier: Amsterdam, The Netherlands, 2021. [Google Scholar] [CrossRef]

- Gregory, K. ‘My Life Is More Valuable than This’: Understanding Risk among On-Demand Food Couriers in Edinburgh. Work Employ. Soc. 2021, 35, 316–331. [Google Scholar] [CrossRef]

- Wood, A. Algorithmic Management Consequences for Work Organisation and Working Conditions; JRC Working Papers Series on Labour, Education and Technology; Joint Research Centre (JRC): Sevilla, Spain, 2021. [Google Scholar]

- Taylor, K.; Van Dijk, P.; Newnam, S.; Sheppard, D. Physical and psychological hazards in the gig economy system: A systematic review. Saf. Sci. 2023, 166, 106234. [Google Scholar] [CrossRef]

- Glavin, P.; Schieman, S. Dependency and hardship in the gig economy: The mental health consequences of platform work. Socius 2022, 8, 23780231221082414. [Google Scholar] [CrossRef]

- Keith, M.G.; Harms, P.D.; Long, A.C. Worker health and well-being in the gig economy: A proposed framework and research agenda. In Entrepreneurial and Small Business Stressors, Experienced Stress, and Well-Being; Emerald Publishing Limited: Leeds, UK, 2020. [Google Scholar]

- Khethisa, B.L.; Tsibolane, P.; Van Belle, J.-P. Surviving the Gig Economy in the Global South: How Cape Town Domestic Workers Cope. In Proceedings of the IFIP Joint Working Conference on the Future of Digital Work: The Challenge of Inequality, Hyderabad, India, 10–11 December 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 67–85. [Google Scholar]

- Mousteri, V.; Daly, M.; Delaney, L. The gig economy is taking a toll on UK workers’ mental health. LSE Bus. Rev. 2020. [Google Scholar]

- Jarrahi, M.H.; Newlands, G.; Lee, M.K.; Wolf, C.T.; Kinder, E.; Sutherland, W. Algorithmic management in a work context. Big Data Soc. 2021, 8, 20539517211020332. [Google Scholar] [CrossRef]

- Degryse, C. Du flexible au liquide: Le travail dans l’économie de plateforme. Relat. Ind./Ind. Relat. 2020, 75, 660–683. [Google Scholar] [CrossRef]

- Bucher, E.L.; Schou, P.K.; Waldkirch, M. Pacifying the algorithm–Anticipatory compliance in the face of algorithmic management in the gig economy. Organization 2021, 28, 44–67. [Google Scholar] [CrossRef]

- Hill, K. Algorithmic Insecurity, Schedule Nonstandardness, and Gig Worker Wellbeing. In Population Association of America Poster Presentation; Working Paper; Elsevier: Amsterdam, The Netherlands, 2021. [Google Scholar] [CrossRef]

- Newlands, G. Algorithmic surveillance in the gig economy: The organization of work through Lefebvrian conceived space. Organ. Stud. 2021, 42, 719–737. [Google Scholar] [CrossRef]

- Rosenblat, A. Uberland: How Algorithms Are Rewriting the Rules of Work; University of California Press: Berkeley, CA, USA, 2018. [Google Scholar]

- Dunn, M. Making gigs work: Digital platforms, job quality and worker motivations. New Technol. Work Employ. 2020, 35, 232–249. [Google Scholar] [CrossRef]

- Nguyen, A. The Constant Boss: Work under Digital Surveillance; Data and Society: New York, NY, USA, 2021; Available online: https://datasociety.net/wp-content/uploads/2021/05/The_Constant_Boss.pdf (accessed on 8 January 2024).

- Leineweber, C.; Eib, C.; Peristera, P.; Bernhard-Oettel, C. The influence of and change in procedural justice on self-rated health trajectories: Swedish Longitudinal Occupational Survey of Health results. Scand. J. Work Environ. Health 2016, 42, 320–328. [Google Scholar] [CrossRef]

- Kivimäki, M.; Elovainio, M.; Vahtera, J.; Ferrie, J.E. Organisational justice and health of employees: Prospective cohort study. Occup. Environ. Med. 2003, 60, 27–34. [Google Scholar] [CrossRef]

- Liljegren, M.; Ekberg, K. The associations between perceived distributive, procedural, and interactional organizational justice, self-rated health and burnout. Work 2009, 33, 43–51. [Google Scholar] [CrossRef]

- Greenberg, J. Organizational injustice as an occupational health risk. Acad. Manag. Ann. 2010, 4, 205–243. [Google Scholar] [CrossRef]

- Magnavita, N.; Chiorri, C.; Acquadro Maran, D.; Garbarino, S.; Di Prinzio, R.R.; Gasbarri, M.; Matera, C.; Cerrina, A.; Gabriele, M.; Labella, M. Organizational justice and health: A survey in hospital workers. Int. J. Environ. Res. Public Health 2022, 19, 9739. [Google Scholar] [CrossRef]

- Glavin, P.; Bierman, A.; Schieman, S. Über-Alienated: Powerless and Alone in the Gig Economy. Work Occup. 2020, 48, 399–431. [Google Scholar] [CrossRef]

- Goods, C.; Veen, A.; Barratt, T. “Is your gig any good?” Analysing job quality in the Australian platform-based food-delivery sector. J. Ind. Relat. 2019, 61, 502–527. [Google Scholar] [CrossRef]

- Bieber, F.; Moggia, J. Risk shifts in the gig economy: The normative case for an insurance scheme against the effects of precarious work. J. Political Philos. 2021, 29, 281–304. [Google Scholar] [CrossRef]

- Montgomery, T.; Baglioni, S. Defining the gig economy: Platform capitalism and the reinvention of precarious work. Int. J. Sociol. Soc. Policy, 2020; ahead-of-print. [Google Scholar] [CrossRef]

- Woodcock, J. The algorithmic panopticon at Deliveroo: Measurement, precarity, and the illusion of control. Ephemera 2020, 20, 67–95. [Google Scholar]

- Bérastégui, P. Exposure to Psychosocial Risk Factors in the Gig Economy: A Systematic Review; ETUI Research Paper—Report 2021.01; Elsevier: Amsterdam, The Netherlands, 2021. [Google Scholar] [CrossRef]

- Freni-Sterrantino, A.; Salerno, V. A plea for the need to investigate the health effects of Gig-Economy. Front. Public Health 2021, 9, 638767. [Google Scholar] [CrossRef]

- Van den Bergh, O. Principles and Practice of Stress Management; Guilford Publications: New York, NY, USA, 2021. [Google Scholar]

- Kaye, M.; McIntosh, D.; Horowitz, J. Stress: The Psychology of Managing Pressure; DK Publishing: London, UK, 2017. [Google Scholar]

- Veen, A.; Barratt, T.; Goods, C. Platform-capital’s ‘app-etite’ for control: A labour process analysis of food-delivery work in Australia. Work Employ. Soc. 2020, 34, 388–406. [Google Scholar] [CrossRef]

- Waldkirch, M.; Bucher, E.; Schou, P.K.; Grünwald, E. Controlled by the algorithm, coached by the crowd–how HRM activities take shape on digital work platforms in the gig economy. Int. J. Hum. Resour. Manag. 2021, 32, 2643–2682. [Google Scholar] [CrossRef]

- Folger, R.A.; Cropanzano, R. Organizational Justice and Human Resource Management; Sage: Thousand Oaks, CA, USA, 1998; Volume 7. [Google Scholar]

- Greenberg, J. Organizational justice: Yesterday, today, and tomorrow. J. Manag. 1990, 16, 399–432. [Google Scholar] [CrossRef]

- Colquitt, J.A.; Greenberg, J.; Zapata-Phelan, C.P. What Is Organizational Justice? A historical Overview; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2005. [Google Scholar]

- Leventhal, G.S. What should be done with equity theory? In Social Exchange; Springer: Berlin/Heidelberg, Germany, 1980; pp. 27–55. [Google Scholar]

- Wood, A.J.; Graham, M.; Lehdonvirta, V.; Hjorth, I. Good gig, bad gig: Autonomy and algorithmic control in the global gig economy. Work Employ. Soc. 2019, 33, 56–75. [Google Scholar] [CrossRef] [PubMed]

- Gerber, C.; Krzywdzinski, M. Brave New Digital Work? New Forms of Performance Control in Crowdwork. In Work and Labor in the Digital Age; Emerald: Bingley, UK, 2019; pp. 121–143. [Google Scholar]

- Rani, U.; Furrer, M. Digital labour platforms and new forms of flexible work in developing countries: Algorithmic management of work and workers. Compet. Change 2021, 25, 212–236. [Google Scholar] [CrossRef]

- Hannák, A.; Wagner, C.; Garcia, D.; Mislove, A.; Strohmaier, M.; Wilson, C. Bias in online freelance marketplaces: Evidence from taskrabbit and fiverr. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, Portland, OR, USA, 25 February–1 March 2017; pp. 1914–1933. [Google Scholar]

- Lynn, M.; Sturman, M.; Ganley, C.; Adams, E.; Douglas, M.; McNeil, J. Consumer racial discrimination in tipping: A replication and extension. J. Appl. Soc. Psychol. 2008, 38, 1045–1060. [Google Scholar] [CrossRef]

- Orlikowski, W.J.; Scott, S.V. What happens when evaluation goes online? Exploring apparatuses of valuation in the travel sector. Organ. Sci. 2014, 25, 868–891. [Google Scholar] [CrossRef]

- Rosenblat, A.; Levy, K.E.; Barocas, S.; Hwang, T. Discriminating tastes: Uber’s customer ratings as vehicles for workplace discrimination. Policy Internet 2017, 9, 256–279. [Google Scholar] [CrossRef]

- Herrando, C.; Jiménez-Martínez, J.; Martín-De Hoyos, M.J.; Constantinides, E. Emotional contagion triggered by online consumer reviews: Evidence from a neuroscience study. J. Retail. Consum. Serv. 2022, 67, 102973. [Google Scholar] [CrossRef]

- Duggan, J.; Sherman, U.; Carbery, R.; McDonnell, A. Algorithmic management and app-work in the gig economy: A research agenda for employment relations and HRM. Hum. Resour. Manag. J. 2019, 30, 114–132. [Google Scholar] [CrossRef]

- Griesbach, K.; Reich, A.; Elliott-Negri, L.; Milkman, R. Algorithmic control in platform food delivery work. Socius 2019, 5, 2378023119870041. [Google Scholar] [CrossRef]

- Wood, A.J.; Lehdonvirta, V. Platform Precarity: Surviving Algorithmic Insecurity in the Gig Economy; Elsevier: Amsterdam, The Netherlands, 2021. [Google Scholar] [CrossRef]

- Myhill, K.; Richards, J.; Sang, K. Job quality, fair work and gig work: The lived experience of gig workers. Int. J. Hum. Resour. Manag. 2020, 32, 4110–4135. [Google Scholar] [CrossRef]

- Thibaut, J.; Walker, L. Procedural Justice: A Psychological Analysis; L. Erlbaum Associates: Hillsdale, NJ, USA, 1975. [Google Scholar]

- Wood, A.J.; Lehdonvirta, V. Platform Labour and Structured Antagonism: Understanding the Origins of Protest in the Gig Economy; Oxford Internet Institute Platform Economy Seminar Series; Oxford Internet Institute: Oxford, UK, 2019. [Google Scholar] [CrossRef]

- Colquitt, J.A.; Conlon, D.E.; Wesson, M.J.; Porter, C.O.; Ng, K.Y. Justice at the millennium: A meta-analytic review of 25 years of organizational justice research. J. Appl. Psychol. 2001, 86, 425. [Google Scholar] [CrossRef]

- Apouey, B.; Roulet, A.; Solal, I.; Stabile, M. Gig workers during the COVID-19 crisis in France: Financial precarity and mental well-being. J. Urban Health 2020, 97, 776–795. [Google Scholar] [CrossRef] [PubMed]

- Christie, N.; Ward, H. The health and safety risks for people who drive for work in the gig economy. J. Transp. Health 2019, 13, 115–127. [Google Scholar] [CrossRef]

- Lehdonvirta, V. Flexibility in the gig economy: Managing time on three online piecework platforms. New Technol. Work Employ. 2018, 33, 13–29. [Google Scholar] [CrossRef]

- Riezzo, C. An Exploration of Employee Motivation and Job Satisfaction in the Gig Economy. Elphinstone Rev. 2021, 7, 61–74. [Google Scholar]

- Zheng, Y.; Wu, P.F. Producing speed on demand: Reconfiguration of space and time in food delivery platform work. Inf. Syst. J. 2022, 32, 973–1004. [Google Scholar] [CrossRef]

- Möhlmann, M.; Zalmanson, L. Hands on the wheel: Navigating algorithmic management and Uber drivers’. In Proceedings of the 38th International Conference on Information Systems, Seoul, Republic of Korea, 10–13 December 2017. [Google Scholar]

- Kuhn, K.M.; Meijerink, J.; Keegan, A. Human resource management and the gig economy: Challenges and opportunities at the intersection between organizational HR decision-makers and digital labor platforms. In Research in Personnel and Human Resources Management; Emerald Publishing Limited: Bingley, UK, 2021. [Google Scholar]

- Baktash, M.B.; Heywood, J.S.; Jirjahn, U. Worker Stress and Performance Pay: German Survey Evidence. J. Econ. Behav. Organ. 2022, 201, 276–291. [Google Scholar] [CrossRef]

- Kinicki, A.J.; Vecchio, R.P. Influences on the quality of supervisor–subordinate relations: The role of time-pressure, organizational commitment, and locus of control. J. Organ. Behav. 1994, 15, 75–82. [Google Scholar] [CrossRef]

- Lazear, E.P. Compensation and Incentives in the Workplace. J. Econ. Perspect. 2018, 32, 195–214. [Google Scholar] [CrossRef]

- Krishna, S. Spatiotemporal (In)justice in Digital Platforms: An Analysis of Food-Delivery Platforms in South India. In The Future of Digital Work: The Challenge of Inequality; Springer: Cham, Switzerland, 2020; pp. 132–147. [Google Scholar]

- Karasek, R.A., Jr. Job demands, job decision latitude, and mental strain: Implications for job redesign. Adm. Sci. Q. 1979, 24, 285–308. [Google Scholar] [CrossRef]

- Cant, C. Riding for Deliveroo: Resistance in the New Economy; John Wiley & Sons: Hoboken, NJ, USA, 2019. [Google Scholar]

- Shin, D. The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551. [Google Scholar] [CrossRef]

- Malhotra, A. Making the one-sided gig economy really two-sided: Implications for future of work. In Handbook of Digital Innovation; Edward Elgar Publishing: Cheltenham, UK, 2020. [Google Scholar]

- Möhlmann, M.; Zalmanson, L.; Henfridsson, O.; Gregory, R.W. Algorithmic management of work on online labor platforms: When matching meets control. MIS Q. 2020, 45, 1999–2022. [Google Scholar] [CrossRef]

- Wood, A.J.; Lehdonvirta, V. Antagonism beyond employment: How the ‘subordinated agency’ of labour platforms generates conflict in the remote gig economy. Socio-Econ. Rev. 2021, 19, 1369–1396. [Google Scholar] [CrossRef]

- Duggan, J.; Sherman, U.; Carbery, R.; McDonnell, A. Boundaryless careers and algorithmic constraints in the gig economy. Int. J. Hum. Resour. Manag. 2021, 33, 4468–4498. [Google Scholar] [CrossRef]

- Lamers, L.; Meijerink, J.; Jansen, G.; Boon, M. A Capability Approach to worker dignity under Algorithmic Management. Ethics Inf. Technol. 2022, 24, 10. [Google Scholar] [CrossRef]

- Langer, M.; Landers, R.N. The future of artificial intelligence at work: A review on effects of decision automation and augmentation on workers targeted by algorithms and third-party observers. Comput. Hum. Behav. 2021, 123, 106878. [Google Scholar] [CrossRef]

- Popan, C. Embodied Precariat and Digital Control in the “Gig Economy”: The Mobile Labor of Food Delivery Workers. J. Urban Technol. 2021, 1–20. [Google Scholar] [CrossRef]

- Bujold, A.; Parent-Rocheleau, X.; Gaudet, M.-C. Opacity behind the wheel: The relationship between transparency of algorithmic management, justice perception, and intention to quit among truck drivers. Comput. Hum. Behav. Rep. 2022, 8, 100245. [Google Scholar] [CrossRef]

- Demerouti, E.; Bakker, A.B.; Nachreiner, F.; Schaufeli, W.B. The job demands-resources model of burnout. J. Appl. Psychol. 2001, 86, 499. [Google Scholar] [CrossRef]

- Aguinis, H.; Villamor, I.; Ramani, R.S. MTurk research: Review and recommendations. J. Manag. 2021, 47, 823–837. [Google Scholar] [CrossRef]

- Hunt, A.; Samman, E. Gender and the Gig Economy: Critical Steps for Evidence-Based Policy; Overseas Development Institute: London, UK, 2019. [Google Scholar]

- Parent-Rocheleau, X.; Parker, S.K.; Bujold, A.; Gaudet, M.C. Creation of the algorithmic management questionnaire: A six-phase scale development process. Hum. Resour. Manag. 2023. [Google Scholar] [CrossRef]

- Colquitt, J.A. On the dimensionality of organizational justice: A construct validation of a measure. J. Appl. Psychol. 2001, 86, 386. [Google Scholar] [CrossRef]

- Fritz, C.; Sonnentag, S. Antecedents of day-level proactive behavior: A look at job stressors and positive affect during the workday. J. Manag. 2009, 35, 94–111. [Google Scholar] [CrossRef]

- Prem, R.; Ohly, S.; Kubicek, B.; Korunka, C. Thriving on challenge stressors? Exploring time pressure and learning demands as antecedents of thriving at work. J. Organ. Behav. 2017, 38, 108–123. [Google Scholar] [CrossRef]

- Silla, I.; Gamero, N. Shared time pressure at work and its health-related outcomes: Job satisfaction as a mediator. Eur. J. Work Organ. Psychol. 2014, 23, 405–418. [Google Scholar] [CrossRef]

- Sonnentag, S.; Pundt, A. Leader-member exchange from a job-stress perspective. In The Oxford Handbook of Leader-Member Exchange; Oxford University Press: Oxford, UK, 2016. [Google Scholar]

- Goswami, A.; Dutta, S. Gender differences in technology usage—A literature review. Open J. Bus. Manag. 2015, 4, 51–59. [Google Scholar] [CrossRef]

- Hauk, N.; Göritz, A.S.; Krumm, S. The mediating role of coping behavior on the age-technostress relationship: A longitudinal multilevel mediation model. PLoS ONE 2019, 14, e0213349. [Google Scholar] [CrossRef]

- Huffman, A.H.; Whetten, J.; Huffman, W.H. Using technology in higher education: The influence of gender roles on technology self-efficacy. Comput. Hum. Behav. 2013, 29, 1779–1786. [Google Scholar] [CrossRef]

- Morris, M.G.; Venkatesh, V. Age differences in technology adoption decisions: Implications for a changing work force. Pers. Psychol. 2000, 53, 375–403. [Google Scholar] [CrossRef]

- Keith, M.G.; Harms, P.; Tay, L. Mechanical Turk and the gig economy: Exploring differences between gig workers. J. Manag. Psychol. 2019, 34, 286–306. [Google Scholar] [CrossRef]

- Fugate, M.; Prussia, G.E.; Kinicki, A.J. Managing employee withdrawal during organizational change: The role of threat appraisal. J. Manag. 2012, 38, 890–914. [Google Scholar] [CrossRef]

- Carricano, M.; Poujol, F.; Bertrandias, L. Analyse de Données avec SPSS®; Pearson Education France: Paris, France, 2010. [Google Scholar]

- Roussel, P.; Durrieu, F.; Campoy, E.; El Akremi, A. Analyse des effets linéaires par modèles d’équations structurelles. In Management des Ressources Humaines; De Boeck Supérieur: Louvain-la-Neuve, Belgium, 2005; pp. 297–324. [Google Scholar]

- Schumacker, R.E.; Lomax, R.G. A Beginner’s Guide to Structural Equation Modeling; Psychology Press: London, UK, 2004. [Google Scholar]

- Fuller, C.M.; Simmering, M.J.; Atinc, G.; Atinc, Y.; Babin, B.J. Common methods variance detection in business research. J. Bus. Res. 2016, 69, 3192–3198. [Google Scholar] [CrossRef]

- Burton, J.W.; Stein, M.K.; Jensen, T.B. A systematic review of algorithm aversion in augmented decision making. J. Behav. Decis. Mak. 2020, 33, 220–239. [Google Scholar] [CrossRef]

- John-Mathews, J.-M.; Cardon, D.; Balagué, C. From reality to world. A critical perspective on AI fairness. J. Bus. Ethics 2022, 178, 945–959. [Google Scholar] [CrossRef]

- Logg, J.M.; Minson, J.A.; Moore, D.A. Algorithm appreciation: People prefer algorithmic to human judgment. Organ. Behav. Hum. Decis. Process. 2019, 151, 90–103. [Google Scholar] [CrossRef]

- Narayanan, D.; Nagpal, M.; McGuire, J.; Schweitzer, S.; De Cremer, D. Fairness Perceptions of Artificial Intelligence: A Review and Path Forward. Int. J. Hum.-Comput. Interact. 2023, 40, 4–23. [Google Scholar] [CrossRef]

- Colquitt, J.A.; Hill, E.T.; De Cremer, D. Forever focused on fairness: 75 years of organizational justice in Personnel Psychology. Pers. Psychol. 2023, 76, 413–435. [Google Scholar] [CrossRef]

- Langer, M.; König, C.J. Introducing a multi-stakeholder perspective on opacity, transparency and strategies to reduce opacity in algorithm-based human resource management. Hum. Resour. Manag. Rev. 2021, 33, 100881. [Google Scholar] [CrossRef]

- Felzmann, H.; Fosch-Villaronga, E.; Lutz, C.; Tamò-Larrieux, A. Towards transparency by design for artificial intelligence. Sci. Eng. Ethics 2020, 26, 3333–3361. [Google Scholar] [CrossRef]

- Kemper, J.; Kolkman, D. Transparent to whom? No algorithmic accountability without a critical audience. Inf. Commun. Soc. 2019, 22, 2081–2096. [Google Scholar] [CrossRef]

- Larsson, S.; Heintz, F. Transparency in artificial intelligence. Internet Policy Rev. 2020, 9. [Google Scholar] [CrossRef]

- Galperin, H.; Greppi, C. Geographic Discrimination in the Gig Economy. Digit. Econ. Glob. Margins 2019, 12, 295–318. [Google Scholar]

- Hamann, T.K.; Güldenberg, S. New Forms of Creating Value: Platform-Enabled Gig Economy Today and in 2030. In Managing Work in the Digital Economy; Springer: Cham, Switzerland; Edinburgh, UK, 2021; pp. 81–98. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).