1. Introduction

With the development of computer technology, artificial intelligence has made considerable progress in recent years. Optical character recognition (OCR) [1], which is a field of computer science, has also gradually appeared in various industries. OCR has traditionally involved the processing and analysis of images to recognize specific information. However, with continual technological developments, OCR is often defined as an all-image text detection and recognition technology in a broad sense (graphic recognition technology), which includes both traditional OCR recognition technology and scene text recognition [2].

In traditional OCR, the input images may exhibit distortions, folds, mutilations, and blurring, and in some cases the text itself is distorted. Moreover, because most document images contain regular tables, extensive image preprocessing is required in advance to achieve a high level of text detection and recognition. The preprocessing may include image segmentation, image rotation correction, line detection, image matching, text outline extraction, and local segmentation.

Image segmentation is the process of dividing an image into several specific regions with unique properties in which each region is a continuous set of pixels. It is a key step in image processing and analysis. Commonly used segmentation methods are mainly divided into the following categories [3]: threshold-based, region-based, and edge-based segmentation methods. In recent years, researchers have continuously improved the original image segmentation methods. Several new theories and methods from other disciplines have been used for image segmentation and many new segmentation methods have been proposed, such as those based on specific theories, cluster analysis [4,5], fuzzy set theory [6], wavelet transform, and neural networks [7]. However, as no general theory has been developed thus far, most existing segmentation algorithms are problem-specific and do not provide a general segmentation method that is suitable for all images. In practical applications, multiple segmentation algorithms are generally combined to achieve better image segmentation results.

Image rotation correction is necessary because images that are obtained via printer scanning or cell phone photography may be rotated; for example, a rotated bill image will seriously affect the positioning of the rows and columns in the bill image layout, thereby increasing the difficulty of bill layout analysis. Therefore, the use of an effective rotation correction method [8,9,10] to correct bill images can significantly improve the performance of the bill recognition system.

Line detection is needed in certain recognition scenarios in which the straight lines in an image must be located. The accuracy of straight line detection is decisive for the OCR localization of form-based bills. Commonly used methods [11,12,13] include projection transform, Hough transform, chain code, and tour, among which the Hough transform algorithm and its improvements have exhibited improved practicality [14]. Furthermore, vectorization algorithms [15,16] offer a wide range of applications in the straight line detection process.

Image matching techniques can be applied to character localization and stamp detection. The position of the region of interest can be retrieved to locate the corresponding character position indirectly with the aid of image matching algorithms. Many matching methods have been proposed to improve the matching speed and performance; for example, the mutual correlation detection image matching algorithm [17], similarity measure matching method [18], adaptive mapping matching method [19], scale-invariant feature transform matching algorithm [20], and genetic-algorithm-based image matching method [21].

Character outline extraction and local segmentation techniques are required to segment the characters completely prior to character recognition. Common methods include threshold segmentation [22], morphological segmentation [23], and model-based segmentation algorithms. The general character segmentation algorithm first performs vertical projection, then analyzes the character outline, and finally segments the characters according to a predetermined character pitch or size. This approach is mainly divided into the following two categories: row segmentation [24] and isolated image decomposition [25].
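As a minimal sketch of the vertical projection step described above (not the method adopted in this paper), the following Python function splits a binarized text line into character candidates wherever the column-wise projection profile drops to zero. The function name `segment_columns` and the toy array are illustrative assumptions; a practical system would add the outline analysis and the pitch or size constraints mentioned in the text.

```python
import numpy as np

def segment_columns(binary):
    """Split a binarized text-line image (1 = ink, 0 = background) into
    column ranges [start, end) separated by empty columns."""
    profile = binary.sum(axis=0)      # vertical projection: ink count per column
    has_ink = profile > 0
    segments, start = [], None
    for x, on in enumerate(has_ink):
        if on and start is None:      # entering a character
            start = x
        elif not on and start is not None:  # leaving a character
            segments.append((start, x))
            start = None
    if start is not None:             # character touching the right border
        segments.append((start, len(has_ink)))
    return segments

# Toy example: two "characters" occupying columns 1-2 and 5-6.
line = np.zeros((3, 8), dtype=np.uint8)
line[:, 1:3] = 1
line[:, 5:7] = 1
print(segment_columns(line))
```

Touching or broken characters defeat this simple rule, which is one reason such preprocessing is described in the text as complex.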

With the development of deep learning technology, the complex preprocessing of traditional OCR is gradually being replaced by deep learning methods with simpler steps [26]. The OCR process mainly includes two models: a text detection model and a text recognition model. At the inference stage, the two models are combined to build the entire graphic recognition system. End-to-end graphic detection and recognition networks have emerged in recent years. In this approach, during the training phase, the input to the model contains the training image, the text content in the image, and the coordinates corresponding to the text. In the inference phase, the end-to-end model directly predicts the text content from the original image. Xuebo Liu et al. proposed FOTS, an end-to-end network that saves time and learns more image features than the traditional approach [27]. Christian Bartz et al. proposed an STN-OCR end-to-end network, which improves the accuracy of the recognition phase by embedding a spatial transformation network in the detection process to perform affine transformation on the input image [28]. Siyang Qin et al. proposed an unconstrained end-to-end network to simplify the recognition process by reducing the detection of arbitrarily shaped text to an instance segmentation problem without the need to perform text region correction in advance [29].

This method is simpler to train and more efficient than the traditional OCR recognition approach, and it can enable easier deployment through model optimization.

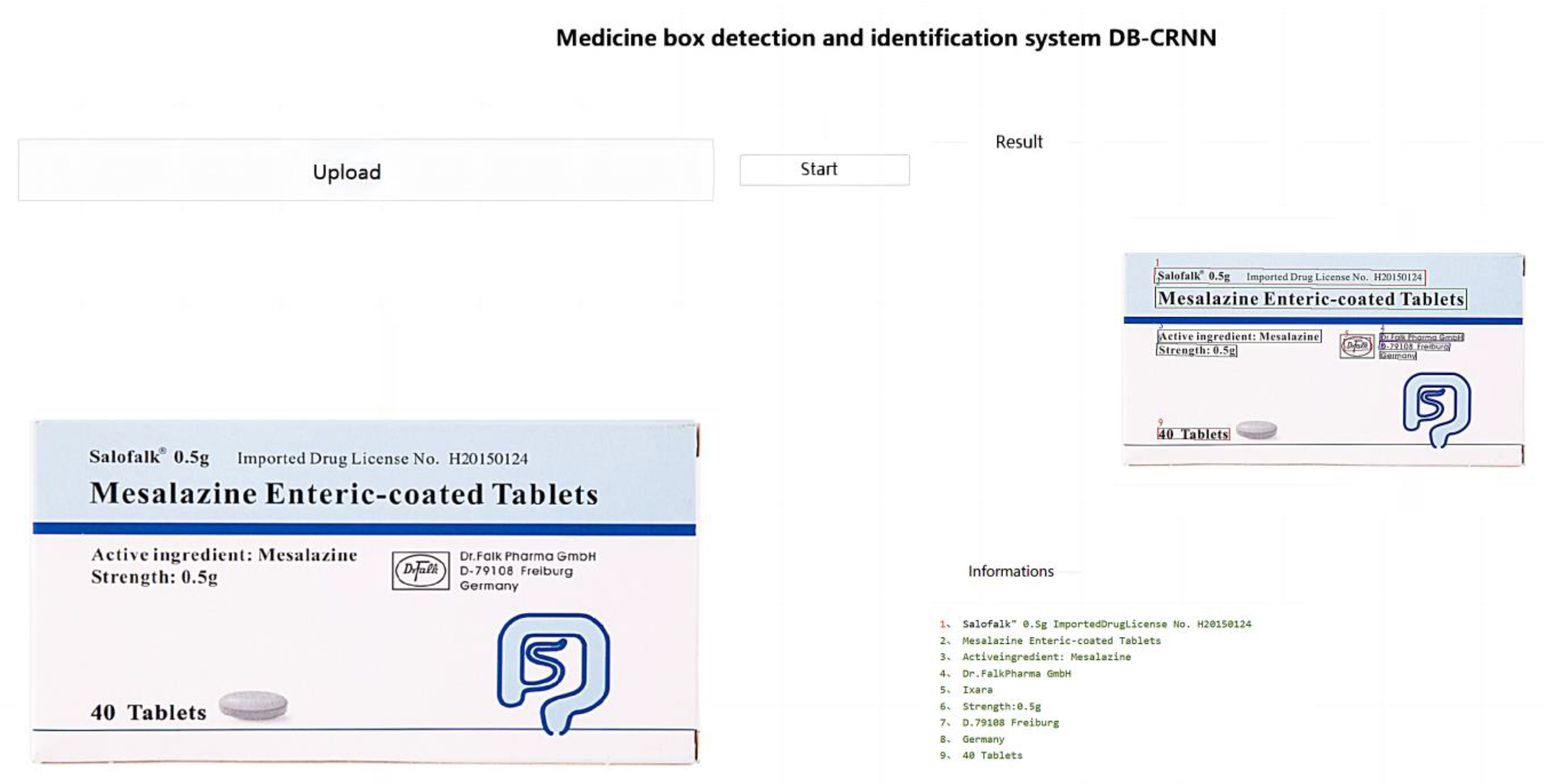

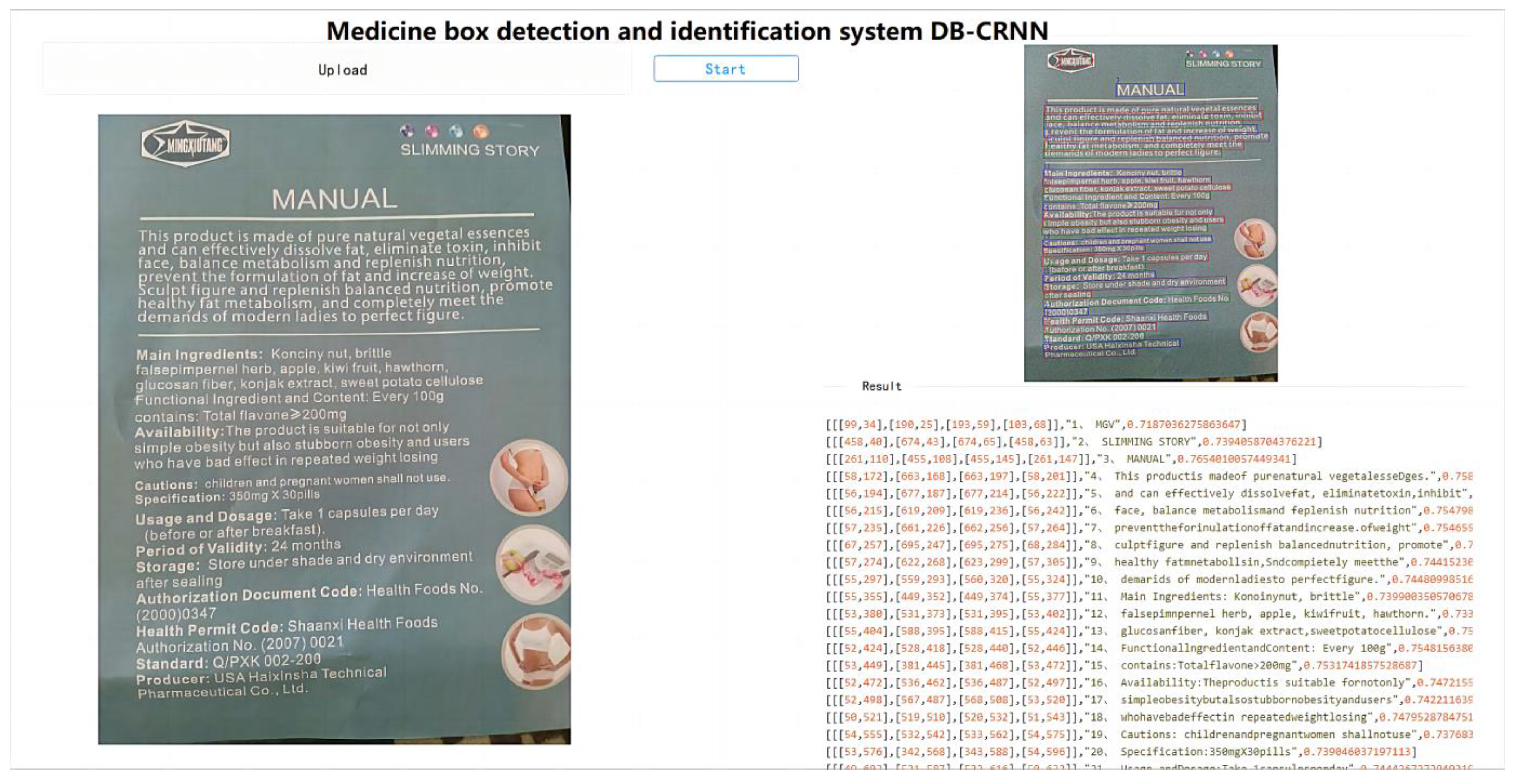

With the implementation of information technology in pharmaceutical systems, an increasing number of complex and redundant tasks can be gradually improved. Among them, the entry of drug box information is a time-consuming and labor-intensive task that is also error-prone. Given their excellent recognition capabilities, end-to-end deep learning networks can be used to reduce the complexity of this task while improving the accuracy of entry. In this paper, we propose and design a deep-learning-based end-to-end scene text recognition algorithm for the task of recognizing pill box text.

The contributions of this study are summarized as follows:

(1) A deep-learning-based scene text recognition algorithm was designed to recognize medicine names from the text of pill boxes. Considering the complexity of pill box images, this algorithm is more convenient for training than traditional OCR algorithms, and it does not require complicated and redundant image preprocessing. Thus, the ease of use of the entire algorithm is improved.

(2) DBNet, which is superior to other models in detecting text regions in scene text recognition, was used as the image text region detection model. Thus, the accuracy of the text analysis was improved.

(3) A B/S-based (browser/server) detection architecture, which is convenient for text content recognition, was used to reduce the learning cost for users, thereby significantly improving the ease of use of the model.

This paper is organized in the following manner:

Section 2 discusses related work, primarily in the area of deep learning and neural networks. This is followed by a description of several deep-learning-driven graphical neural networks that differ from traditional approaches to graphical recognition. We describe the proposed deep learning-based method for recognizing pill boxes in

Section 3. The concept and principle of the method are also discussed in detail. The experimentation process for deep-learning-based pill box recognition is elaborated on in

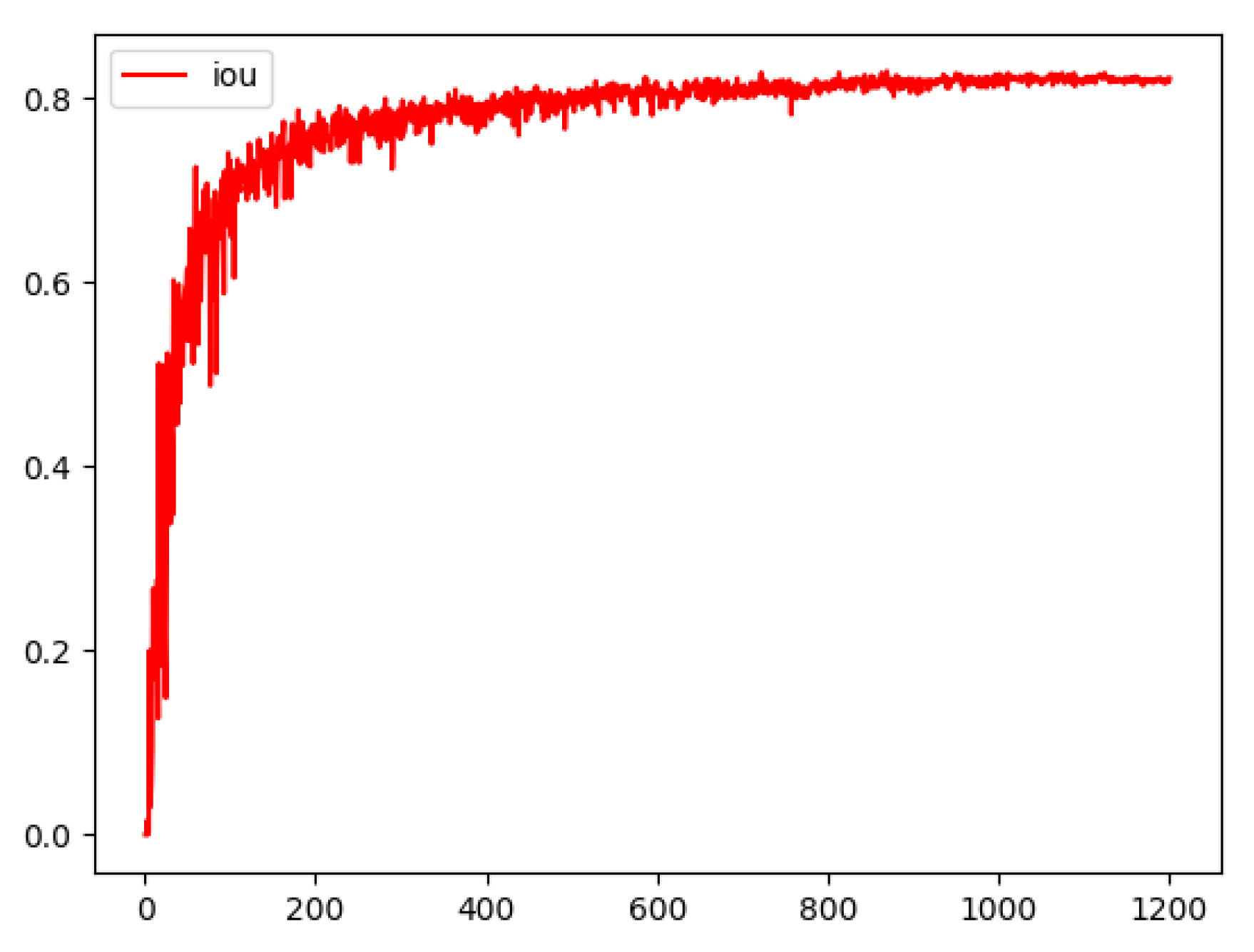

Section 4, along with the specific network training process and the specific training results. In addition to the architecture design of the recognition system, the final experimental results are presented. Lastly, the experimental results presented in

Section 4 are analyzed and the next research direction is determined.

3. Proposed Method

3.1. Pill Box Text Recognition

The recognition of pill box text requires two parts: text region detection and text recognition. Owing to the efficiency and accuracy of neural networks and the growing interest in them over the past few years, deep-learning-based approaches have gradually replaced the traditional methods of detecting natural scene text, and such an approach is used here to recognize pill box text. Comparisons with the traditional method have demonstrated that deep learning provides better recognition results and a faster recognition speed [37].

Two general methods are available for text detection: regression and segmentation methods. The Fast R-CNN and SSD models are the most common regression-based algorithms. In addition to their poor performance and lengthy training process, they cannot handle poorly photographed images in which the text shape is distorted. DBNet uses an image segmentation method and can therefore detect text of arbitrary shape, such as the text on pill boxes, where occlusion, bending, and complex backgrounds may occur.

Once the text region has been determined, the next step is to recognize the images in the region.

Figure 1 depicts the traditional image detection and recognition processes. Traditional image detection and recognition methods are redundant and complex, with low recognition efficiency.

In this study, the DBNet detection framework was used in combination with a CRNN and CTC loss.

Figure 2 presents the use of the DBNet-CRNN as the basis for end-to-end image text detection and recognition.

3.2. Text Region Detection

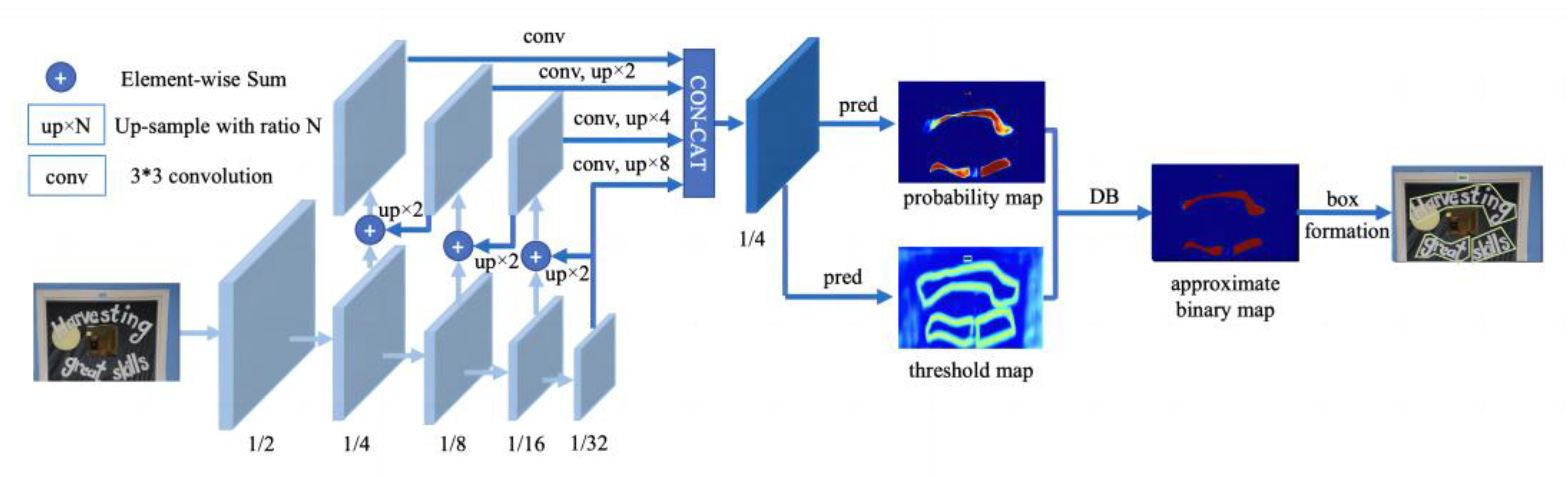

The text region detection module must locate the text region in the captured image. It draws a detection box around the text to provide the input for the subsequent text recognition. In this study, the DBNet algorithm was used to segment the data; compared with the more mainstream CTPN, DBNet can handle not only rotated and tilted text, but also distorted text. For the task of drug box text recognition, this method can greatly improve the accuracy of text detection. The structure of the DBNet model network is illustrated in Figure 3.

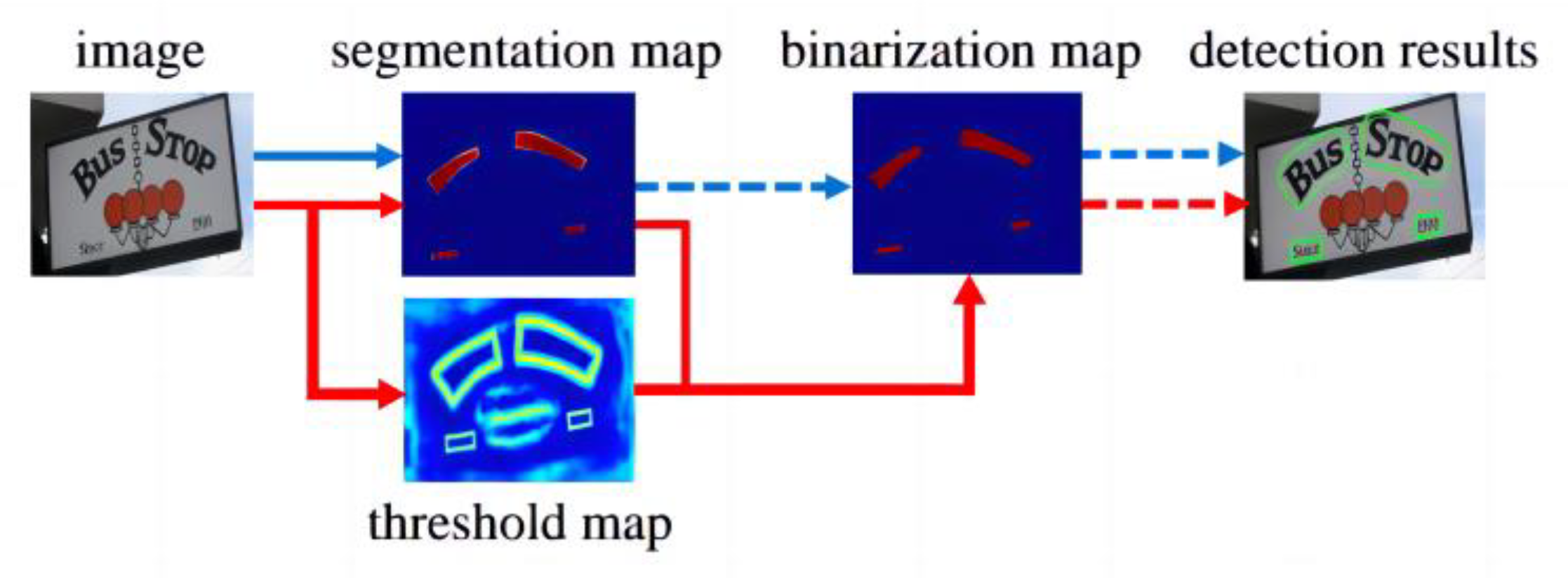

Figure 4 compares the traditional text detection algorithm and the DBNet detection algorithm. The main principle of the DBNet detection algorithm is to transform the probability map generated by the segmentation network into bounding boxes and text regions, a step that includes binarization postprocessing. Binarization is crucial in character recognition, but the traditional binarization operation, which applies a fixed threshold, adapts poorly to complex and variable detection scenarios (the blue process in the figure). In this study, the binarization operation was built into the network and optimized jointly with it, so that the threshold value of each pixel could be predicted adaptively, that is, using DBNet (the red flow in the figure). Its text detector simply inserts a differentiable binarization module into a segmentation network. In this manner, adaptive thresholding of the entire heat map is achieved.

The basic steps of differentiable binarization are as follows: First, the input image is passed through the backbone to extract features. Subsequently, the features are passed to the feature pyramid, where they are up-sampled to the same size and fused, and the fused features are used to predict the probability and threshold maps. Finally, the approximate binarization map is obtained from the predicted probability and threshold maps, and the text bounding boxes are generated. Standard binarization is not differentiable, so the segmentation network cannot be optimized through it during training. Therefore, differentiable binarization is used so that gradients can be computed.

Differentiable binarization differs from standard binarization in that the step function is approximated as follows:

$$\hat{B}_{i,j} = \frac{1}{1 + e^{-k\,(P_{i,j} - T_{i,j})}} \tag{1}$$

where $\hat{B}$ denotes the approximate binary map, $P$ is the predicted probability map, $T$ is the threshold feature map that is learned by the network, and $k$ denotes the magnification, which is set to an empirical value of 50. This enables better distinction between the foreground and background.
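The difference between the two binarization schemes can be illustrated with a short NumPy sketch. It assumes the sigmoid-shaped approximation 1 / (1 + exp(-k (P - T))) from the DBNet formulation, with P the probability map, T the learned threshold map, and k = 50; the function names and the toy arrays are illustrative and are not taken from the authors' implementation.

```python
import numpy as np

def standard_binarization(P, t=0.3):
    """Hard thresholding with one fixed threshold t (not differentiable)."""
    return (P >= t).astype(np.float32)

def differentiable_binarization(P, T, k=50.0):
    """Approximate binary map: a steep sigmoid of (P - T), evaluated per pixel.
    T may differ at every pixel, which gives the adaptive threshold."""
    return 1.0 / (1.0 + np.exp(-k * (P - T)))

# Toy 2x2 probability map and a constant threshold map (assumed values).
P = np.array([[0.9, 0.2],
              [0.5, 0.31]])
T = np.full_like(P, 0.3)

hard = standard_binarization(P, t=0.3)
soft = differentiable_binarization(P, T)
print(hard)
print(np.round(soft, 3))
```

Pixels far from the threshold are pushed to values close to 0 or 1, as in hard thresholding, while pixels near the threshold receive intermediate values. Because the function is smooth, its gradient with respect to both P and T exists everywhere, which is what allows the threshold map to be trained together with the segmentation network.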

The text detection head uses a feature pyramid network structure, which is divided into a bottom-up convolution pathway and a top-down up-sampling pathway to obtain multi-scale features. The bottom-up pathway applies 3 × 3 convolutions, which produce feature maps at 1/2, 1/4, 1/8, 1/16, and 1/32 of the original image size according to the convolution formula. Subsequently, top-down ×2 up-sampling is performed, followed by fusion with the feature map of the same size that is generated by the bottom-up pathway. After fusion, a 3 × 3 convolution is used to reduce the aliasing effect of the up-sampling, and, finally, the output of each layer is rescaled and unified into a feature map of 1/4 size.
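The shape bookkeeping of the top-down pathway can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: only the four levels from 1/4 to 1/32 are fused, nearest-neighbour up-sampling is used, fusion is element-wise addition, and the 3 × 3 smoothing convolutions are omitted. The function names are hypothetical.

```python
import numpy as np

def upsample2x(x):
    """Nearest-neighbour x2 up-sampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def top_down_fuse(features):
    """features: list of (C, H, W) maps ordered fine to coarse (1/4 ... 1/32).
    Each level is fused with the up-sampled, already fused coarser level."""
    fused = [features[-1]]
    for f in reversed(features[:-1]):
        fused.insert(0, f + upsample2x(fused[0]))
    return fused

def unify_to_quarter(fused):
    """Up-sample every level to the 1/4 resolution and concatenate channels."""
    out = []
    for level, f in enumerate(fused):
        for _ in range(level):        # level i is 2**i times smaller than level 0
            f = upsample2x(f)
        out.append(f)
    return np.concatenate(out, axis=0)

# Toy input: a 64 x 64 image gives maps of side 16, 8, 4, 2 at strides 4..32.
features = [np.ones((4, 64 // s, 64 // s)) for s in (4, 8, 16, 32)]
fused = top_down_fuse(features)
unified = unify_to_quarter(fused)
print([f.shape for f in fused], unified.shape)
```

In a trained network each fused level would pass through a learned 3 × 3 convolution before rescaling; the sketch only shows how the resolutions line up.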

Thereafter, in the training phase, the probability map was determined by obtaining the binarized image. The binary map was then derived based on a threshold value and the connected region was determined. Finally, the text box was obtained by expanding and shrinking the sample region through the compensation calculation of Equation (2).
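The expansion step can be illustrated with a small calculation. Equation (2) is not reproduced in this section, so the sketch below assumes the offset used in the original DBNet formulation, D = A × r / L, where A and L are the area and perimeter of the detected (shrunken) polygon and r is an expansion ratio (1.5 in that formulation). The function names are illustrative.

```python
import math

def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    n = len(points)
    s = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0

def polygon_perimeter(points):
    """Sum of the edge lengths of the polygon."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))

def expansion_offset(points, r=1.5):
    """Assumed DBNet-style offset D = A * r / L for dilating a shrunken region."""
    return polygon_area(points) * r / polygon_perimeter(points)

# A 100 x 20 shrunken text region (assumed toy coordinates, in pixels).
region = [(0, 0), (100, 0), (100, 20), (0, 20)]
print(expansion_offset(region))
```

The offset is then used to grow the polygon outward, typically with a polygon clipping library; that step is not shown here.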

3.3. Text Recognition

The CRNN text recognition algorithm integrates feature extraction, sequence modeling, and transcription for natural scene text recognition. Its greatest advantage is that it can recognize text sequences of variable lengths, especially in natural scene text recognition tasks. Furthermore, it achieves high recognition accuracy once trained.

Figure 5 depicts the CRNN recognition process, in which a convolutional layer, recurrent layer, and transcription layer are included. These layers are used for the feature extraction, sequence modeling, and transcription functions, respectively.

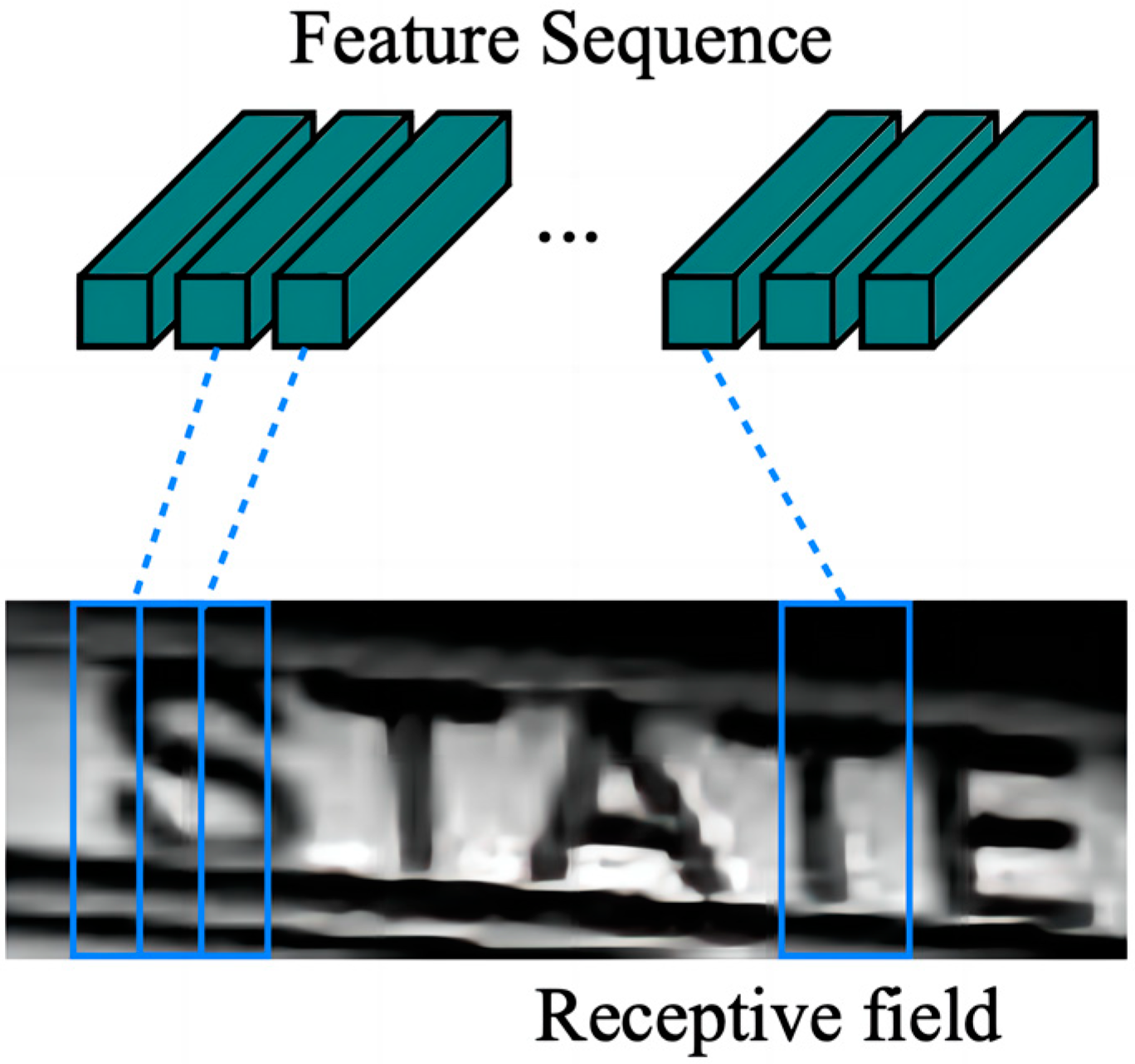

The feature sequence of the input image is first extracted in the convolutional layers, and a label distribution is then predicted for each frame of the sequence in the recurrent layers. The convolutional layers consist of convolution (Conv) and max-pooling (MaxPool) operations, which transform the input image into a set of convolutional feature maps; batch normalization (BN) is then used to normalize these feature maps. Thereafter, the feature vectors are extracted column by column from the feature maps, from left to right. The resulting feature sequence is illustrated in Figure 6.
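The conversion from feature maps to a feature sequence can be sketched with NumPy. The sketch assumes a feature map of shape (channels, height, width) and treats each column as one frame; the function name `map_to_sequence` and the toy values are illustrative.

```python
import numpy as np

def map_to_sequence(feature_map):
    """Turn a (C, H, W) feature map into W frame vectors of length C * H,
    ordered from the leftmost column to the rightmost one."""
    c, h, w = feature_map.shape
    # Move the width axis first, then flatten channels and height per column.
    return feature_map.transpose(2, 0, 1).reshape(w, c * h)

# Toy feature map: 2 channels, height 1, width 3.
fm = np.arange(6).reshape(2, 1, 3)
seq = map_to_sequence(fm)
print(seq.shape)
print(seq)
```

Each row of `seq` corresponds to one vertical strip of the input image, so the recurrent layers read the text in reading order.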

As conventional RNNs suffer from the vanishing gradient problem and therefore cannot capture long-range contextual information, CRNN uses a BiLSTM structure in the recurrent layer. For each frame $x_t$ of the feature sequence $X = x_1, \ldots, x_T$, the recurrent layer predicts a label distribution $y_t$. Because the deep bidirectional recurrent network can memorize long sequence segments, it can exploit the length of the time series through the memory of the LSTM network to obtain more image information, and the forward and backward directions of the BiLSTM network together provide more complete image information, thereby effectively mitigating the vanishing gradient problem.

Figure 7 presents the structure of the deep bidirectional recurrent network.

The per-frame predictions of the recurrent layer are converted into a label sequence by the transcription layer at the top of the CRNN. The transcription layer uses the CTC algorithm to calculate the loss function, which simplifies the training process and allows it to converge rapidly. In the lexicon-free transcription mode, the label of the target text is determined by taking the label with the highest probability among the predicted result labels. In the lexicon-based transcription mode, the target text is first located and its label is then determined using a fuzzy matching algorithm.
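The lexicon-based mode can be illustrated with the fuzzy matcher in the Python standard library. This is only a sketch: the paper does not specify its matching algorithm, so `difflib.get_close_matches` is a swapped-in technique, and the function name `match_lexicon`, the cutoff value, and the small drug-name lexicon are assumptions made for illustration.

```python
from difflib import get_close_matches

def match_lexicon(predicted, lexicon, cutoff=0.6):
    """Return the lexicon entry most similar to the raw prediction,
    or the prediction itself when nothing is similar enough."""
    candidates = get_close_matches(predicted, lexicon, n=1, cutoff=cutoff)
    return candidates[0] if candidates else predicted

lexicon = ["aspirin", "ibuprofen", "amoxicillin"]   # hypothetical entries
print(match_lexicon("asprin", lexicon))   # a prediction with one missing letter
print(match_lexicon("zzzz", lexicon))     # nothing close: prediction is kept
```

Falling back to the raw prediction keeps the lexicon-free behaviour for names that the lexicon does not cover.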

The transcription layer derives the label sequence $l$ from the per-frame predictions $y = y_1, \ldots, y_T$, where each $y_t$ is a probability distribution over the set $L' = L \cup \{\text{blank}\}$, as indicated in Equation (3).

The mapping function β is defined on sequences $\pi \in L'^{\,T}$, where T is the sequence length, and is mainly used to eliminate blank or redundant labels. In this manner, a predicted sequence π is mapped to the label sequence $l$. For example, for the sequence “-hh-e-l-ll-o--” (where “-” is a blank character), the resultant sequence after removing duplicate labels and blank characters using the β mapping function is “hello.” The conditional probability of $l$ is the sum of the probabilities of all sequences π that the β mapping function maps to $l$, as shown in Equation (4):

$$p(l \mid y) = \sum_{\pi:\, \beta(\pi) = l} p(\pi \mid y) \tag{4}$$
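The β mapping described above can be sketched in a few lines of Python: consecutive duplicates are merged first, and blanks are removed afterwards. The function name `ctc_collapse` is illustrative.

```python
from itertools import groupby

def ctc_collapse(path, blank="-"):
    """Apply the CTC mapping: merge runs of repeated labels, then drop blanks."""
    return "".join(label for label, _ in groupby(path) if label != blank)

print(ctc_collapse("-hh-e-l-ll-o--"))
```

The order of the two operations matters: the blank between the two "l" runs is what keeps the double letter in the output, since merging repeats before removing blanks leaves two separate "l" labels.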

The probability of π is defined as follows:

$$p(\pi \mid y) = \prod_{t=1}^{T} y^{t}_{\pi_t}$$

where $y^{t}_{\pi_t}$ is the probability of label $\pi_t$ at timestamp $t$.
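The two probabilities can be checked numerically on a tiny example. The sketch below assumes that the probability of a path is the product of its per-frame label probabilities and that the probability of a label sequence is the sum over all paths that β maps to it; it enumerates every path by brute force, which is only feasible for toy sizes (training uses a dynamic programming algorithm instead). All names and the toy distribution are illustrative.

```python
from itertools import groupby, product
import numpy as np

def collapse(path, blank=0):
    """CTC mapping on integer labels: merge repeats, then drop blanks."""
    return tuple(s for s, _ in groupby(path) if s != blank)

def path_probability(y, path):
    """Product over time of the probability of the path's label at each frame."""
    return float(np.prod([y[t, s] for t, s in enumerate(path)]))

def label_probability(y, label, blank=0):
    """Sum of path probabilities over all paths that collapse to `label`."""
    T, S = y.shape
    return sum(path_probability(y, p)
               for p in product(range(S), repeat=T)
               if collapse(p, blank) == tuple(label))

# Two frames, alphabet {blank (0), 'a' (1)}; each row is one frame's distribution.
y = np.array([[0.4, 0.6],
              [0.3, 0.7]])
print(label_probability(y, (1,)))   # paths "a-", "-a", "aa"
print(label_probability(y, ()))     # path "--"
```

The three paths that collapse to "a" contribute 0.18, 0.28, and 0.42, and the empty label sequence receives the remaining 0.12, so the probabilities of all label sequences sum to one.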

CRNN offers several advantages in the field of natural scene research. The CRNN model can be trained end to end and its training steps are easier than those of other algorithms. The CRNN model is not limited by the existence of dictionaries, and high accuracy and robustness can be obtained both with and without dictionaries. Furthermore, CRNN is not restricted by the length of the sequences and can handle arbitrary lengths. The CRNN model is smaller in overall size and requires fewer parameters, which provides better performance for many natural scene recognition applications.

5. Conclusions

We combined a DBNet detection network with a CRNN text recognition network to develop a pill box recognition method based on a deep learning approach. Unlike in other methods, prior image preprocessing was not required. The image could be input into the network directly, and, subsequently, output directly following automatic processing by the network. According to experiments using 100 pill boxes, the proposed method offers a significant advantage over other methods for recognizing medicine names.

In the experiments, it was difficult to achieve full recognition when locating the text on the pill box. This is because the pill box itself has many complex factors. Furthermore, although recognition of branch text could be achieved, it could not be output as a whole, which resulted in separate results. According to the results of testing on drug instruction manuals, the key factors affecting the accuracy of drug box content detection and recognition were not only the angle of the text, but also its irregularity. The next step of research is to solve these two problems in order to improve the accuracy of drug name positioning.

“Smart medicine” is the future direction of medicine and the frontier of academia. As deep learning technology continues to break through, its performance in various fields is increasingly being discussed by academics. By integrating deep learning with medicine, the redundancy and complexity of the work of public health professionals can be reduced, and more powerful artificial intelligence can be used to assist them in completing related tasks more efficiently. The use of smarter, simpler, and more error-tolerant ways of entering and managing medicines will be an integral part of the future of medicine.