Improving Intensive Care Unit Early Readmission Prediction Using Optimized and Explainable Machine Learning

Abstract

1. Introduction

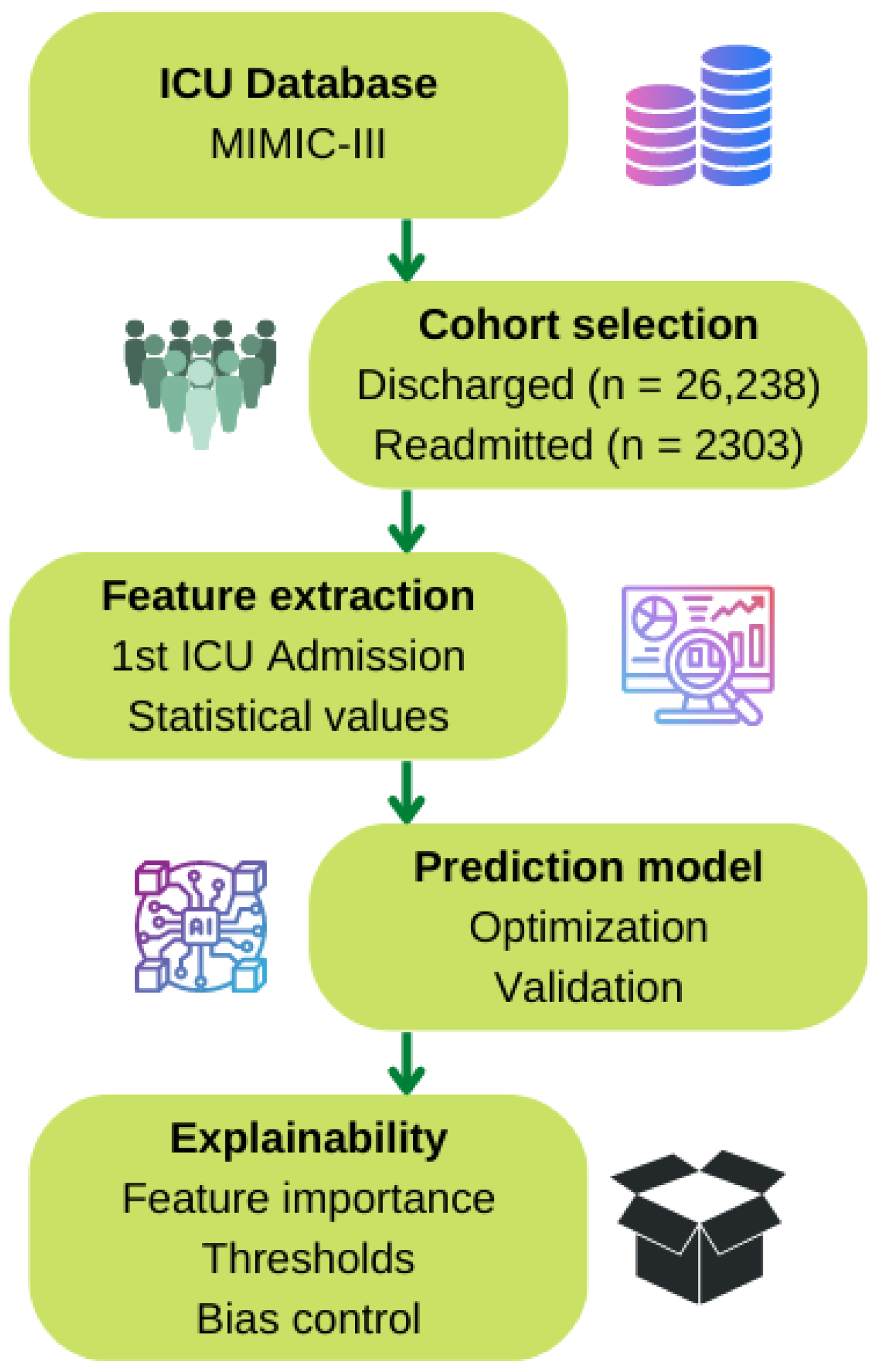

2. Materials and Methods

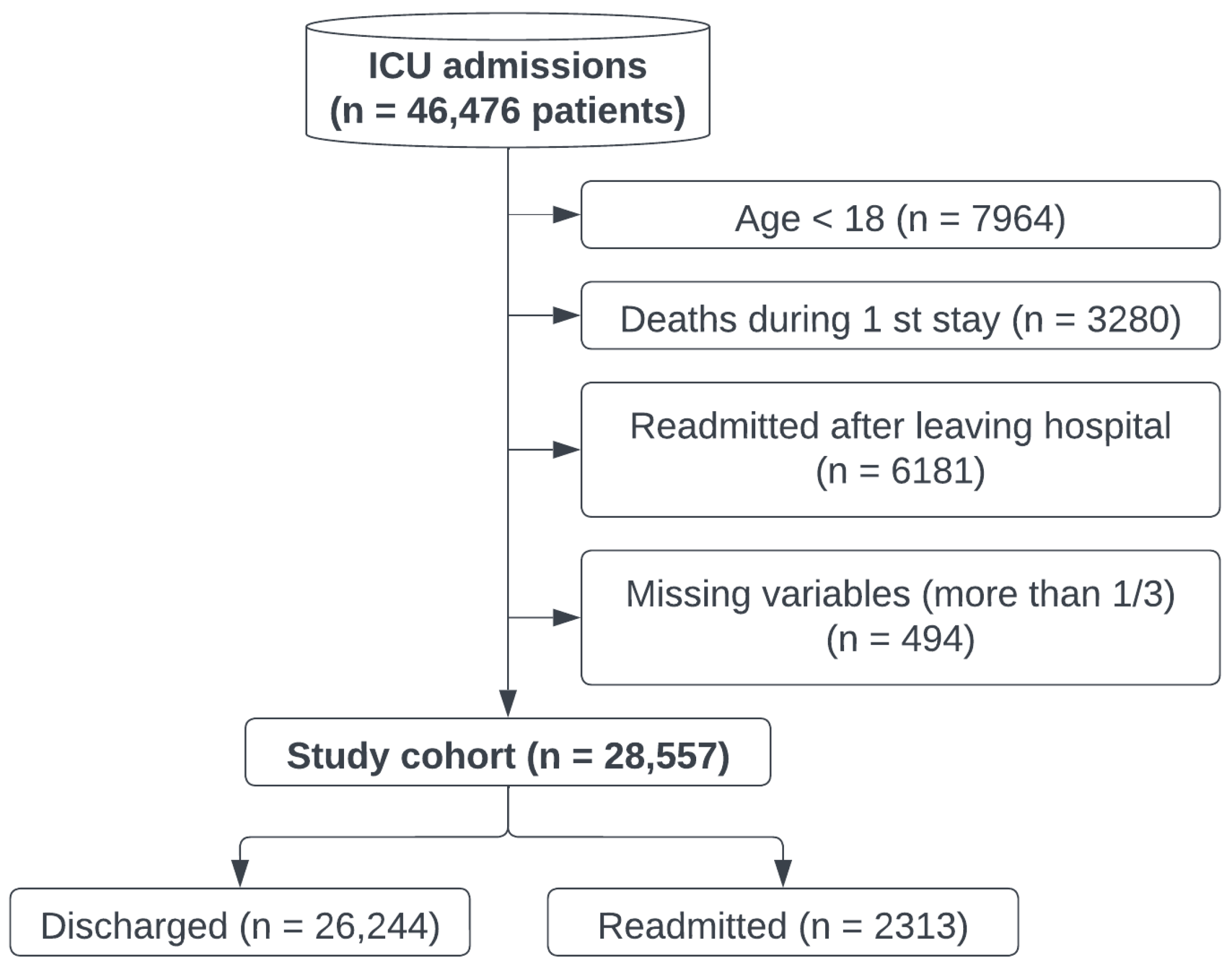

2.1. Cohort Selection

2.2. Feature Extraction

2.3. Early-Readmission Predictor Model

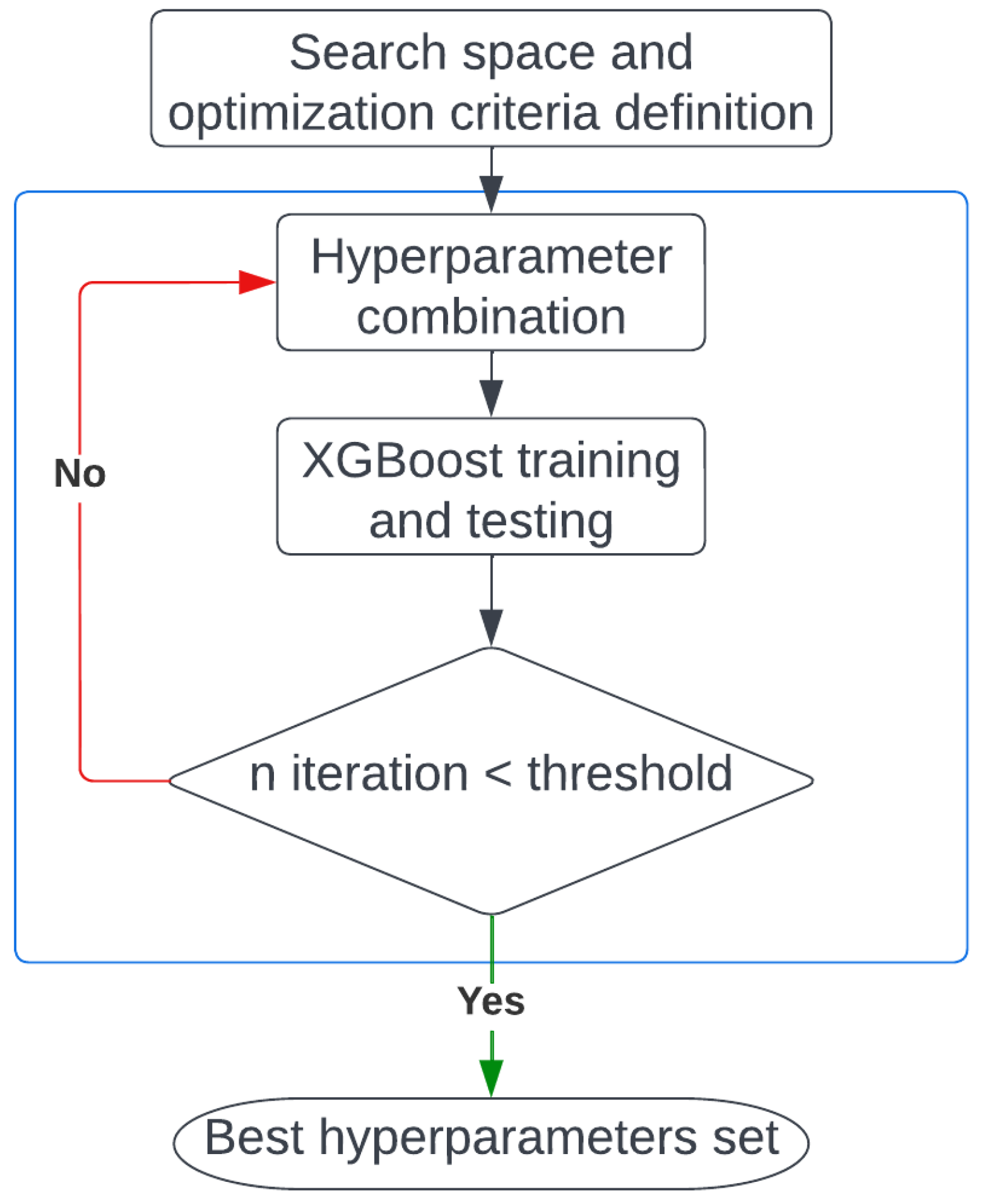

2.3.1. Model Optimization

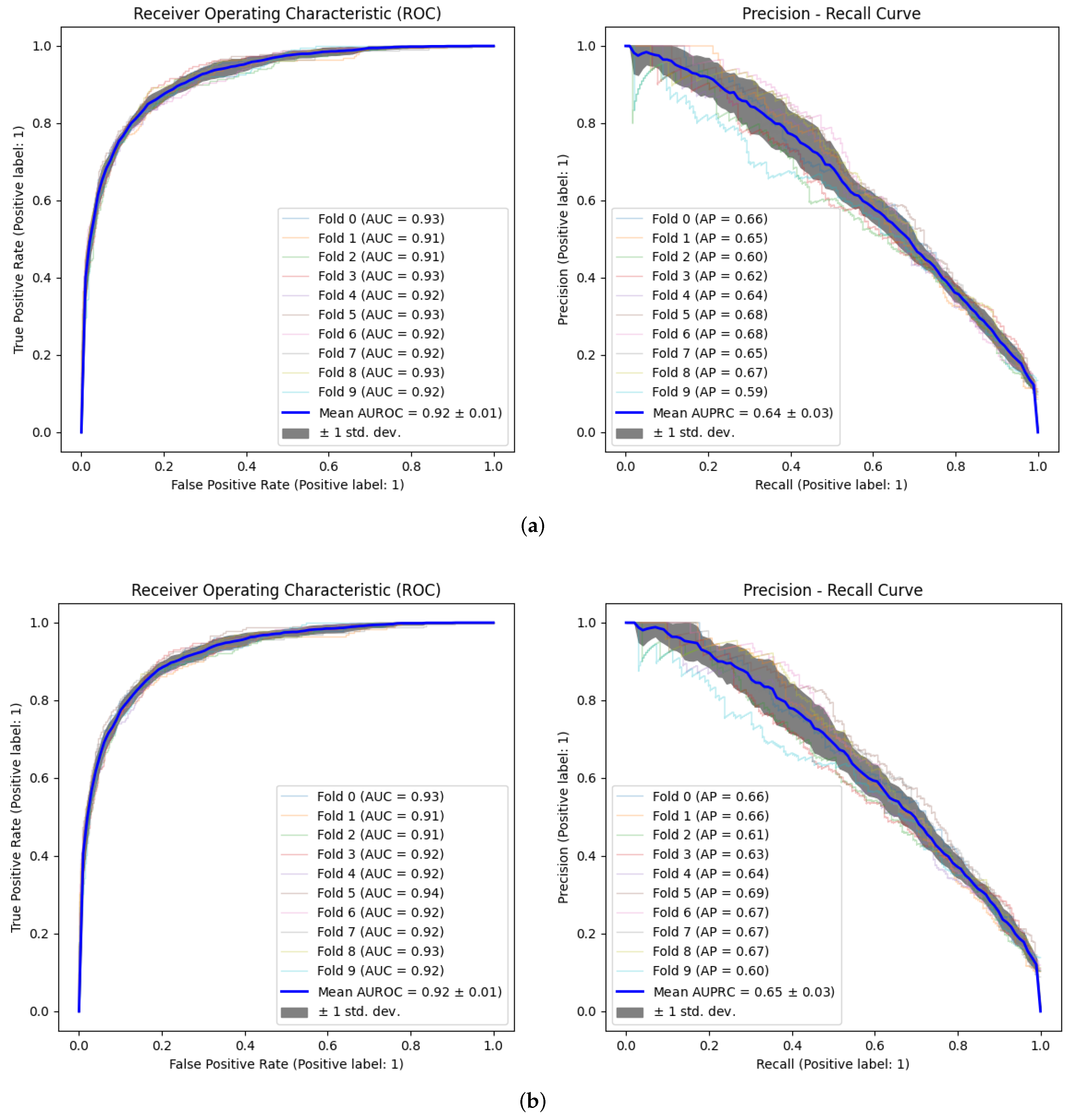

2.3.2. Model Validation

3. Results

3.1. Model Optimization

3.2. Model Validation

3.3. Explainability

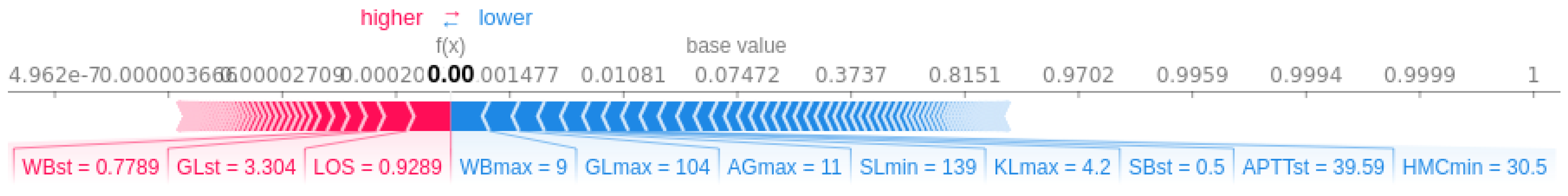

3.3.1. Patient-Specific Information

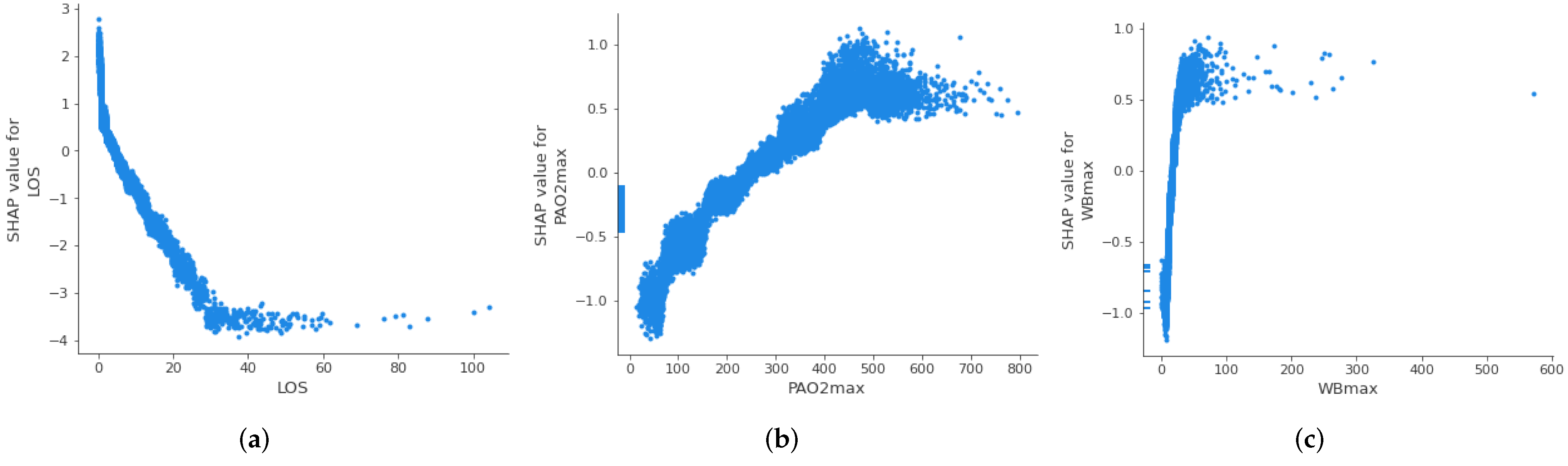

3.3.2. Threshold Identification

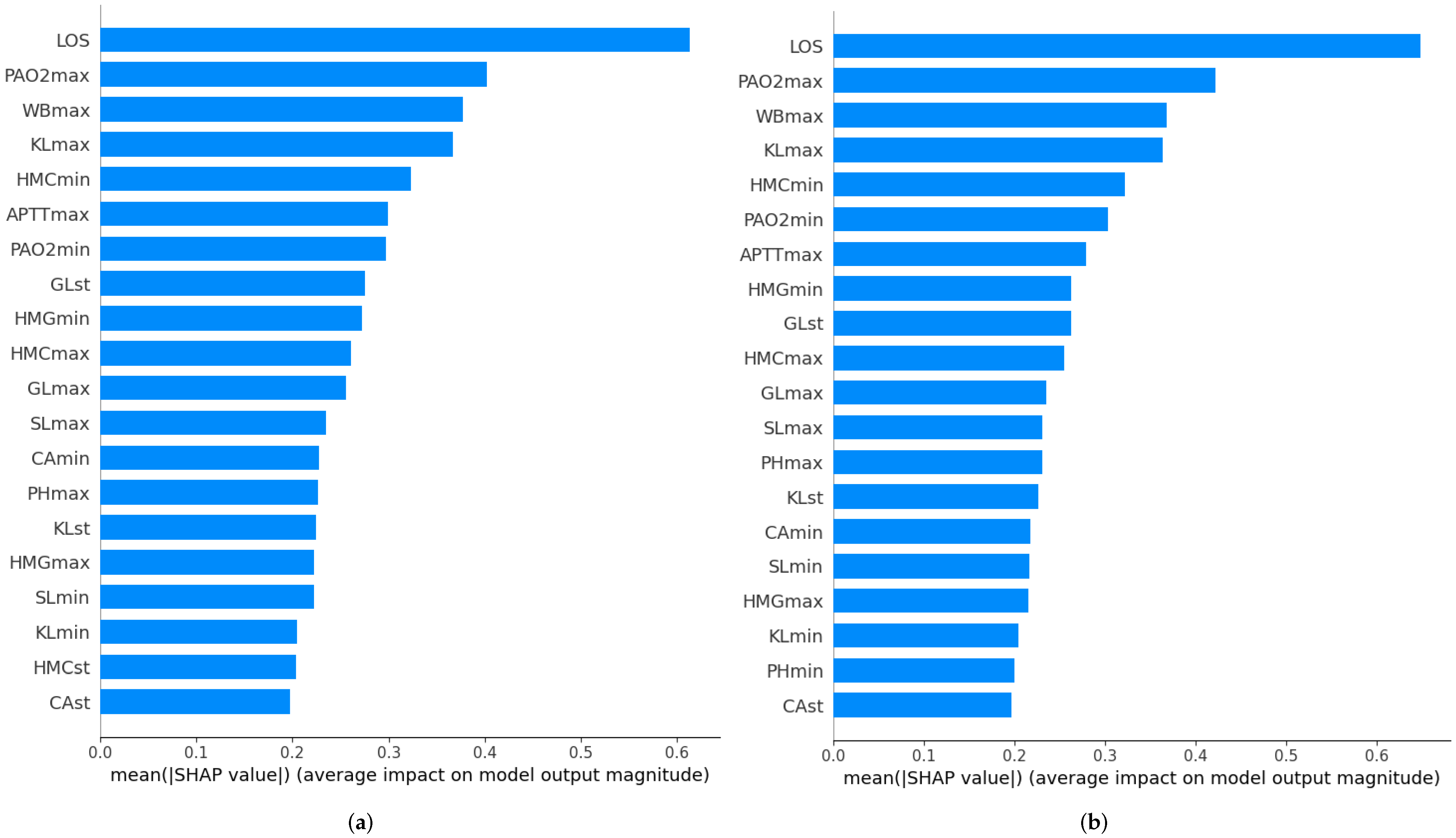

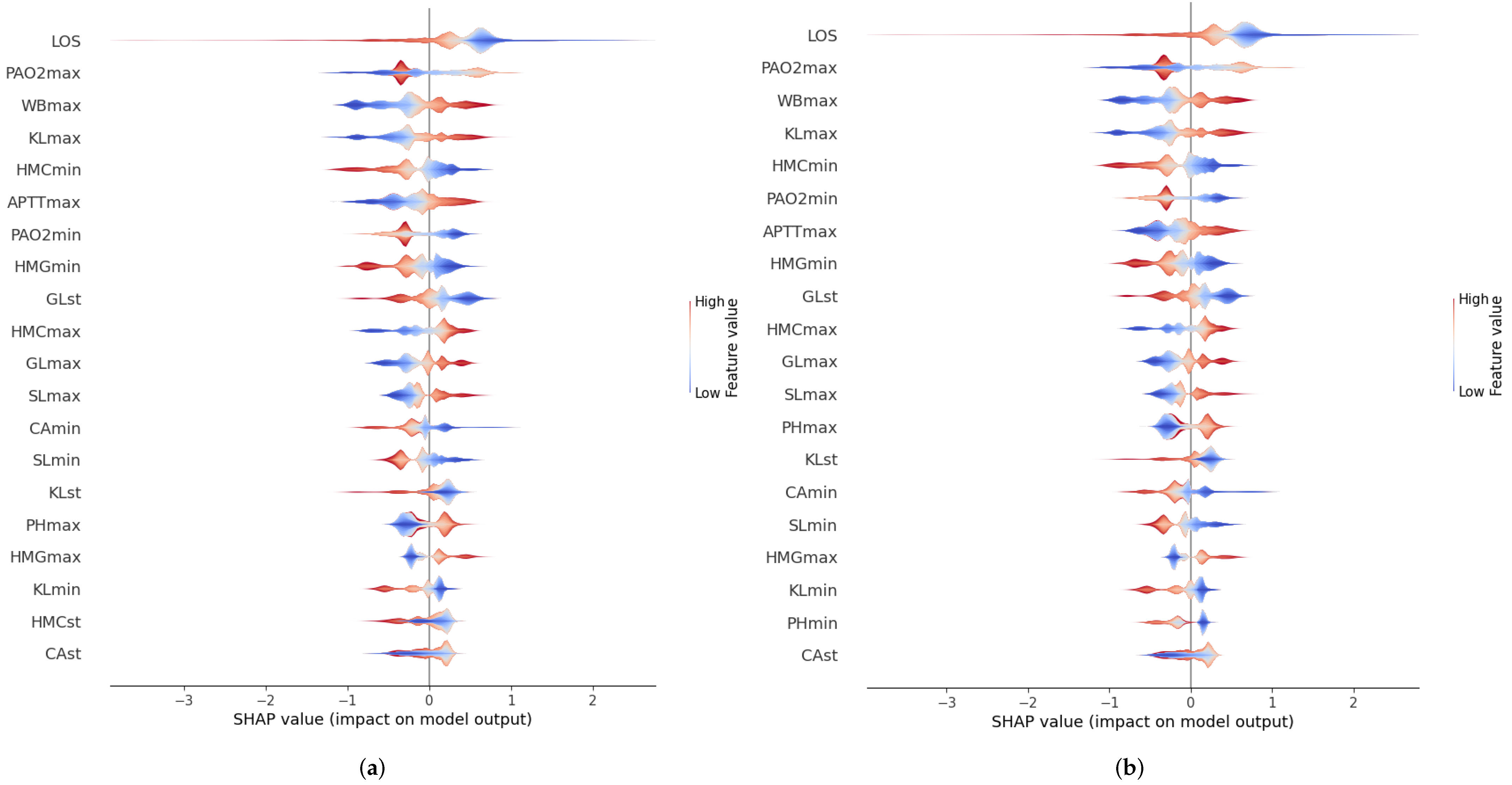

3.3.3. Feature Importance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUPRC | Area Under the Precision-Recall Curve |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| ICU | Intensive Care Unit |
| LOS | Length of Stay |
| MDPI | Multidisciplinary Digital Publishing Institute |
| MIMIC | Medical Information Mart for Intensive Care |
| ROC | Receiver Operating Characteristic |
| SD | Standard Deviation |
| TN | True Negatives |
| TP | True Positives |
| TPE | Tree-structured Parzen Estimator |

References

1. Santamaria, J.D.; Duke, G.J.; Pilcher, D.V.; Cooper, D.J.; Moran, J.; Bellomo, R.; on behalf of Discharge and Readmission Evaluation (DARE) Study Group. Readmissions to Intensive Care: A Prospective Multicenter Study in Australia and New Zealand. Crit. Care Med. 2017, 45, 290–297.
2. Markazi-Moghaddam, N.; Fathi, M.; Ramezankhani, A. Risk prediction models for intensive care unit readmission: A systematic review of methodology and applicability. Aust. Crit. Care 2020, 33, 367–374.
3. Noorbakhsh-Sabet, N.; Zand, R.; Zhang, Y.; Abedi, V. Artificial Intelligence Transforms the Future of Health Care. Am. J. Med. 2019, 132, 795–801.
4. Obermeyer, Z.; Lee, T.H. Lost in Thought — The Limits of the Human Mind and the Future of Medicine. N. Engl. J. Med. 2017, 377, 1209–1211.
5. Aznar-Gimeno, R.; Esteban, L.M.; Labata-Lezaun, G.; Del-Hoyo-Alonso, R.; Abadia-Gallego, D.; Paño-Pardo, J.R.; Esquillor-Rodrigo, M.J.; Lanas, Á.; Serrano, M.T. A Clinical Decision Web to Predict ICU Admission or Death for Patients Hospitalised with COVID-19 Using Machine Learning Algorithms. Int. J. Environ. Res. Public Health 2021, 18, 8677.
6. van de Sande, D.; van Genderen, M.E.; Huiskens, J.; Gommers, D.; van Bommel, J. Moving from bytes to bedside: A systematic review on the use of artificial intelligence in the intensive care unit. Intensive Care Med. 2021, 47, 750–760.
7. Barbieri, S.; Kemp, J.; Perez-Concha, O.; Kotwal, S.; Gallagher, M.; Ritchie, A.; Jorm, L. Benchmarking Deep Learning Architectures for Predicting Readmission to the ICU and Describing Patients-at-Risk. Sci. Rep. 2020, 10, 1111.
8. Rojas, J.C.; Carey, K.A.; Edelson, D.P.; Venable, L.R.; Howell, M.D.; Churpek, M.M. Predicting Intensive Care Unit Readmission with Machine Learning Using Electronic Health Record Data. Ann. Am. Thorac. Soc. 2018, 15, 846–853.
9. Thoral, P.J.; Fornasa, M.; de Bruin, D.P.; Tonutti, M.; Hovenkamp, H.; Driessen, R.H.; Girbes, A.R.J.; Hoogendoorn, M.; Elbers, P.W.G. Explainable Machine Learning on AmsterdamUMCdb for ICU Discharge Decision Support: Uniting Intensivists and Data Scientists. Crit. Care Explor. 2021, 3, e0529.
10. Badawi, O.; Breslow, M.J. Readmissions and Death after ICU Discharge: Development and Validation of Two Predictive Models. PLoS ONE 2012, 7, e48758.
11. Fialho, A.S.; Cismondi, F.; Vieira, S.M.; Reti, S.R.; Sousa, J.M.C.; Finkelstein, S.N. Data mining using clinical physiology at discharge to predict ICU readmissions. Expert Syst. Appl. 2012, 39, 13158–13165.
12. Frost, S.A.; Tam, V.; Alexandrou, E.; Hunt, L.; Salamonson, Y.; Davidson, P.M.; Parr, M.J.A.; Hillman, K.M. Readmission to intensive care: Development of a nomogram for individualising risk. Crit. Care Resusc. 2010, 12, 83–89.
13. Fehr, J.; Jaramillo-Gutierrez, G.; Oala, L.; Gröschel, M.I.; Bierwirth, M.; Balachandran, P.; Werneck-Leite, A.; Lippert, C. Piloting A Survey-Based Assessment of Transparency and Trustworthiness with Three Medical AI Tools. Healthcare 2022, 10, 1923.
14. Guan, H.; Dong, L.; Zhao, A. Ethical Risk Factors and Mechanisms in Artificial Intelligence Decision Making. Behav. Sci. 2022, 12, 343.
15. Alonso-Moral, J.M.; Mencar, C.; Ishibuchi, H. Explainable and Trustworthy Artificial Intelligence [Guest Editorial]. IEEE Comput. Intell. Mag. 2022, 17, 14–15.
16. Tjoa, E.; Guan, C. A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4793–4813.
17. Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy 2020, 23, 18.
18. Thomson, R.b.W. Review on JSTOR. Economica 1991, 58, 123–124.
19. Kaczmarek-Majer, K.; Casalino, G.; Castellano, G.; Dominiak, M.; Hryniewicz, O.; Kamińska, O.; Vessio, G.; Díaz-Rodríguez, N. PLENARY: Explaining black-box models in natural language through fuzzy linguistic summaries. Inform. Sci. 2022, 614, 374–399.
20. Casalino, G.; Castellano, G.; Kaymak, U.; Zaza, G. Balancing Accuracy and Interpretability through Neuro-Fuzzy Models for Cardiovascular Risk Assessment. In Proceedings of the 2021 IEEE Symposium Series on Computational Intelligence (SSCI), Orlando, FL, USA, 5–7 December 2021; pp. 1–8.
21. Molnar, C. Interpretable Machine Learning. 2022. Available online: https://christophm.github.io/interpretable-ml-book (accessed on 22 December 2021).
22. MIMIC-III Clinical Database v1.4. 2022. Available online: https://physionet.org/content/mimiciii/1.4/ (accessed on 4 February 2021).
23. Johnson, A.E.W.; Pollard, T.J.; Shen, L.; Lehman, L.w.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Anthony Celi, L.; Mark, R.G. MIMIC-III, a freely accessible critical care database. Sci. Data 2016, 3, 160035.
24. Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, 215–220.
25. Jo, Y.S.; Lee, Y.J.; Park, J.S.; Yoon, H.I.; Lee, J.H.; Lee, C.T.; Cho, Y.J. Readmission to Medical Intensive Care Units: Risk Factors and Prediction. Yonsei Med. J. 2015, 56, 543–549.
26. Jiang, Z.; Bo, L.; Xu, Z.; Song, Y.; Wang, J.; Wen, P.; Wan, X.; Yang, T.; Deng, X.; Bian, J. An explainable machine learning algorithm for risk factor analysis of in-hospital mortality in sepsis survivors with ICU readmission. Comput. Methods Programs Biomed. 2021, 204, 106040.
27. González-Nóvoa, J.A.; Busto, L.; Rodríguez-Andina, J.J.; Fariña, J.; Segura, M.; Gómez, V.; Vila, D.; Veiga, C. Using Explainable Machine Learning to Improve Intensive Care Unit Alarm Systems. Sensors 2021, 21, 7125.
28. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. arXiv 2016.
29. Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 4765–4774.
30. Kimani, L.; Howitt, S.; Tennyson, C.; Templeton, R.; McCollum, C.; Grant, S.W. Predicting Readmission to Intensive Care after Cardiac Surgery Within Index Hospitalization: A Systematic Review. J. Cardiothorac. Vasc. Anesth. 2021, 35, 2166–2179.
31. Nielsen, D. Tree Boosting with XGBoost—Why Does XGBoost Win “Every” Machine Learning Competition? Ph.D. Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2016.
32. Liashchynskyi, P.; Liashchynskyi, P. Grid Search, Random Search, Genetic Algorithm: A Big Comparison for NAS. arXiv 2019.
33. Hutter, F.; Kotthoff, L.; Vanschoren, J. Automated Machine Learning; Springer: Cham, Switzerland, 2019.
34. González-Nóvoa, J.A.; Busto, L.; Campanioni, S.; Fariña, J.; Rodríguez-Andina, J.J.; Vila, D.; Veiga, C. Two-Step Approach for Occupancy Estimation in Intensive Care Units Based on Bayesian Optimization Techniques. Sensors 2023, 23, 1162.
35. González-Nóvoa, J.A.; Busto, L.; Santana, P.; Fariña, J.; Rodríguez-Andina, J.J.; Juan-Salvadores, P.; Jiménez, V.; Íñiguez, A.; Veiga, C. Using Bayesian Optimization and Wavelet Decomposition in GPU for Arterial Blood Pressure Estimation. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 1012–1015.
36. Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; de Freitas, N. Taking the Human Out of the Loop: A Review of Bayesian Optimization. Proc. IEEE 2015, 104, 148–175.
37. Bergstra, J.; Yamins, D.; Cox, D.D. Making a Science of Model Search. arXiv 2012.
38. Niven, D.J.; Bastos, J.F.; Stelfox, H.T. Critical Care Transition Programs and the Risk of Readmission or Death after Discharge from an ICU: A Systematic Review and Meta-Analysis. Crit. Care Med. 2014, 42, 179–187.
39. Fu, G.H.; Yi, L.Z.; Pan, J. Tuning model parameters in class-imbalanced learning with precision-recall curve. Biom. J. 2019, 61, 652–664.

| | MIMIC-III | Cohort |
|---|---|---|
| Patients | 46,476 | 28,557 |
| Age in years, mean (SD) | 55.8 (27.3) | 63.3 (18.1) |
| Gender | M: 26,087; F: 20,380 | M: 16,390; F: 12,167 |
| Readmission rate | 18.84% | 8.10% |

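| Variable | Units | Features Extracted | Average | Standard Deviation |
|---|---|---|---|---|
| Age | Years | Value at 1st admission | 63.3 | 18.1 |
| Gender | - | - | - | - |
| LOS | Days | - | 3.7 | 5.2 |
| Urine output | mL | Total volume | 138.6 | 3539.4 |
| Glasgow Coma Scale (verbal) | - | Average, standard deviation, maximum, minimum | 3.9 | 1.2 |
| Glasgow Coma Scale (motor) | - | Average, standard deviation, maximum, minimum | 5.6 | 0.6 |
| Glasgow Coma Scale (eyes) | - | Average, standard deviation, maximum, minimum | 3.6 | 0.5 |
| Systolic blood pressure | mmHg | Average, standard deviation, maximum, minimum | 121.6 | 15.4 |
| Heart rate | bpm | Average, standard deviation, maximum, minimum | 84.1 | 13.4 |
| Body temperature | °C | Average, standard deviation, maximum, minimum | 36.8 | 0.75 |
| PaO2 | mmHg | Average, standard deviation, maximum, minimum | 165.7 | 79.7 |
| FiO2 | % | Average, standard deviation, maximum, minimum | 51.2 | 11.43 |
| Serum urea nitrogen level | mg/dL | Average, standard deviation, maximum, minimum | 22.1 | 15.5 |
| White blood cell count | k/uL | Average, standard deviation, maximum, minimum | 10.8 | 5.7 |
| Serum bicarbonate level | mEq/L | Average, standard deviation, maximum, minimum | 25.5 | 3.2 |
| Sodium level | mEq/L | Average, standard deviation, maximum, minimum | 138.7 | 3.3 |
| Potassium level | mEq/L | Average, standard deviation, maximum, minimum | 4.1 | 0.4 |
| Bilirubin level | mg/dL | Average, standard deviation, maximum, minimum | 1.2 | 2.8 |
| Respiratory rate | bpm | Average, standard deviation, maximum, minimum | 19.3 | 102.8 |
| Glucose | mg/dL | Average, standard deviation, maximum, minimum | 132.9 | 42.3 |
| Albumin | g/dL | Average, standard deviation, maximum, minimum | 3.5 | 5.3 |
| Anion gap | mEq/L | Average, standard deviation, maximum, minimum | 13.2 | 2.3 |
| Chloride | mEq/L | Average, standard deviation, maximum, minimum | 105.5 | 5.9 |
| Creatinine | mg/dL | Average, standard deviation, maximum, minimum | 1.2 | 1.1 |
| Lactate | mmol/L | Average, standard deviation, maximum, minimum | 2.0 | 1.1 |
| Calcium | mg/dL | Average, standard deviation, maximum, minimum | 8.5 | 0.6 |
| Hematocrit | % | Average, standard deviation, maximum, minimum | 32.2 | 4.6 |
| Hemoglobin | g/dL | Average, standard deviation, maximum, minimum | 10.97 | 1.7 |
| International Normalized Ratio (INR) | - | Average, standard deviation, maximum, minimum | 1.4 | 0.6 |
| Platelets | - | Average, standard deviation, maximum, minimum | 215.8 | 101.5 |
| Prothrombin time | s | Average, standard deviation, maximum, minimum | 14.7 | 3.7 |
| Activated partial thromboplastin time (APTT) | s | Average, standard deviation, maximum, minimum | 35.8 | 14.1 |
| Base excess | mEq/L | Average, standard deviation, maximum, minimum | 0.1 | 3.6 |
| PaCO2 | mmHg | Average, standard deviation, maximum, minimum | 41.84 | 9.8 |
| pH | - | Average, standard deviation, maximum, minimum | 6.9 | 0.7 |
| Total CO2 | mEq/L | Average, standard deviation, maximum, minimum | 25.74 | 4.3 |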

| Hyperparameter | Search space (min) | Search space (max) | Optimal value (AUROC criterion) | Optimal value (AUPRC criterion) |
|---|---|---|---|---|
| Learning rate | −8 | 0 | 0.024 | 0.009 |
| Maximum delta step | 0 | 10 | 3 | 4 |
| Maximum depth | 1 | 30 | 8 | 23 |
| Maximum number of leaves | 0 | 10 | 6 | 8 |
| Minimum child weight | 0 | 15 | 3 | 2 |
| Number of estimators | 1 | 10,000 | 4319 | 9078 |
| Alpha region | 0.1 | 1 | 0.912 | 0.445 |
| Lambda region | 0.1 | 1.5 | 0.427 | 0.493 |
| Scale weight | 0.1 | 1 | 0.851 | 0.296 |
| Subsample | 0.1 | 1 | 0.479 | 0.595 |
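As a rough illustration of how the search space in the table above might be encoded, the following Python sketch stores the bounds and draws one random candidate. Several things here are assumptions and not statements from the paper: the XGBoost-style key names (for example, mapping "Alpha region" to `reg_alpha` and "Scale weight" to `scale_pos_weight`), the integer/continuous split, and the reading of the learning-rate bounds (−8 to 0) as natural-log exponents. The sketch uses plain uniform random sampling; it does not implement the Tree-structured Parzen Estimator listed in the abbreviations.

```python
import math
import random

# (min, max, kind) per hyperparameter, with bounds copied from the table.
# ASSUMPTIONS: key names, the "kind" of each entry, and the interpretation of
# the learning-rate bounds as natural-log exponents are illustrative guesses.
SEARCH_SPACE = {
    "learning_rate": (-8, 0, "log"),
    "max_delta_step": (0, 10, "int"),
    "max_depth": (1, 30, "int"),
    "max_leaves": (0, 10, "int"),
    "min_child_weight": (0, 15, "int"),
    "n_estimators": (1, 10000, "int"),
    "reg_alpha": (0.1, 1.0, "float"),
    "reg_lambda": (0.1, 1.5, "float"),
    "scale_pos_weight": (0.1, 1.0, "float"),
    "subsample": (0.1, 1.0, "float"),
}


def sample_candidate(space, rng):
    """Draw one hyperparameter configuration uniformly within the bounds."""
    candidate = {}
    for name, (low, high, kind) in space.items():
        if kind == "log":
            # Sample the exponent uniformly, then map back to the original scale.
            candidate[name] = math.exp(rng.uniform(low, high))
        elif kind == "int":
            candidate[name] = rng.randint(low, high)  # inclusive on both ends
        else:
            candidate[name] = rng.uniform(low, high)
    return candidate


candidate = sample_candidate(SEARCH_SPACE, random.Random(0))
print(candidate)
```

Under the log-scale assumption, both reported optimal learning rates (0.024 and 0.009) fall inside the implied range of roughly 0.0003 to 1. A sequential optimizer would replace the uniform draws with proposals informed by the scores of earlier candidates.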

| | Truth (gold standard): True | Truth (gold standard): False |
|---|---|---|
| Predicted value: True | TP (True Positive) | FP (False Positive) |
| Predicted value: False | FN (False Negative) | TN (True Negative) |
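The threshold-dependent metrics reported for validation (accuracy, specificity, precision, recall, F1) can all be derived from the four counts in the confusion matrix above. A minimal Python sketch using the standard definitions follows; the function name and the example counts are hypothetical and are not taken from the study.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0    # positive predictive value
    recall = tp / (tp + fn) if (tp + fn) else 0.0       # sensitivity
    specificity = tn / (tn + fp) if (tn + fp) else 0.0  # true negative rate
    accuracy = (tp + tn) / total if total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0  # harmonic mean
    return {
        "accuracy": accuracy,
        "specificity": specificity,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts for an imbalanced problem (100 positives, 900 negatives).
metrics = confusion_metrics(tp=40, fp=10, fn=60, tn=890)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With a rare positive class, accuracy and specificity can stay high even when recall is modest, which is why precision, recall, and F1 are worth reading alongside them.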

| Metric | Optimization criterion: AUROC | Optimization criterion: AUPRC | Default criterion |
|---|---|---|---|
| AUROC | 0.92 (±0.03) | 0.92 (±0.02) | 0.90 (±0.03) |
| Accuracy | 0.94 (±0.01) | 0.94 (±0.01) | 0.94 (±0.01) |
| Specificity | 0.99 (±0.01) | 0.99 (±0.01) | 0.99 (±0.01) |
| F1 | 0.53 (±0.12) | 0.47 (±0.11) | 0.49 (±0.11) |
| Precision | 0.77 (±0.18) | 0.85 (±0.17) | 0.74 (±0.13) |
| Recall | 0.40 (±0.09) | 0.32 (±0.10) | 0.37 (±0.10) |
| AUPRC | 0.64 (±0.09) | 0.65 (±0.09) | 0.60 (±0.10) |
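As a quick consistency check on the table above, F1 is the harmonic mean of precision and recall, so the reported F1 values should be close to what the reported mean precision and recall imply. The Python sketch below performs that check. It is only approximate: the table appears to report averages with a spread, and the F1 of averaged precision and recall is not exactly the average of per-run F1 scores. The dictionary keys are illustrative labels.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
reported = {
    "AUROC criterion": (0.77, 0.40, 0.53),
    "AUPRC criterion": (0.85, 0.32, 0.47),
    "Default criterion": (0.74, 0.37, 0.49),
}

differences = {}
for label, (precision, recall, f1_reported) in reported.items():
    f1_implied = f1_from(precision, recall)
    differences[label] = abs(f1_implied - f1_reported)
    print(f"{label}: implied F1 = {f1_implied:.3f}, reported F1 = {f1_reported:.2f}")
```

In all three columns the implied and reported F1 values agree to within about 0.01, which is consistent with rounding and with averaging across runs.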

| Author | Dataset | Predictor | AUROC |
|---|---|---|---|
| Badawi et al. [10] | eICU Research Database | Logistic regression | 0.71 |
| Fialho et al. [11] | MIMIC-II | Fuzzy models | 0.72 |
| Frost et al. [12] | Own data | Logistic regression | 0.66 |
| Rojas et al. [8] | MIMIC-III | Gradient boosting machine | 0.76 |
| Thoral et al. [9] | AmsterdamUMCdb | XGBoost | 0.78 |
| Barbieri et al. [7] | MIMIC-III | Neural network (ODE) | 0.71 |
| Our work | MIMIC-III | XGBoost | 0.92 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

González-Nóvoa, J.A.; Campanioni, S.; Busto, L.; Fariña, J.; Rodríguez-Andina, J.J.; Vila, D.; Íñiguez, A.; Veiga, C. Improving Intensive Care Unit Early Readmission Prediction Using Optimized and Explainable Machine Learning. Int. J. Environ. Res. Public Health 2023, 20, 3455. https://doi.org/10.3390/ijerph20043455