Reproducibility and Scientific Integrity of Big Data Research in Urban Public Health and Digital Epidemiology: A Call to Action

Abstract

1. Introduction

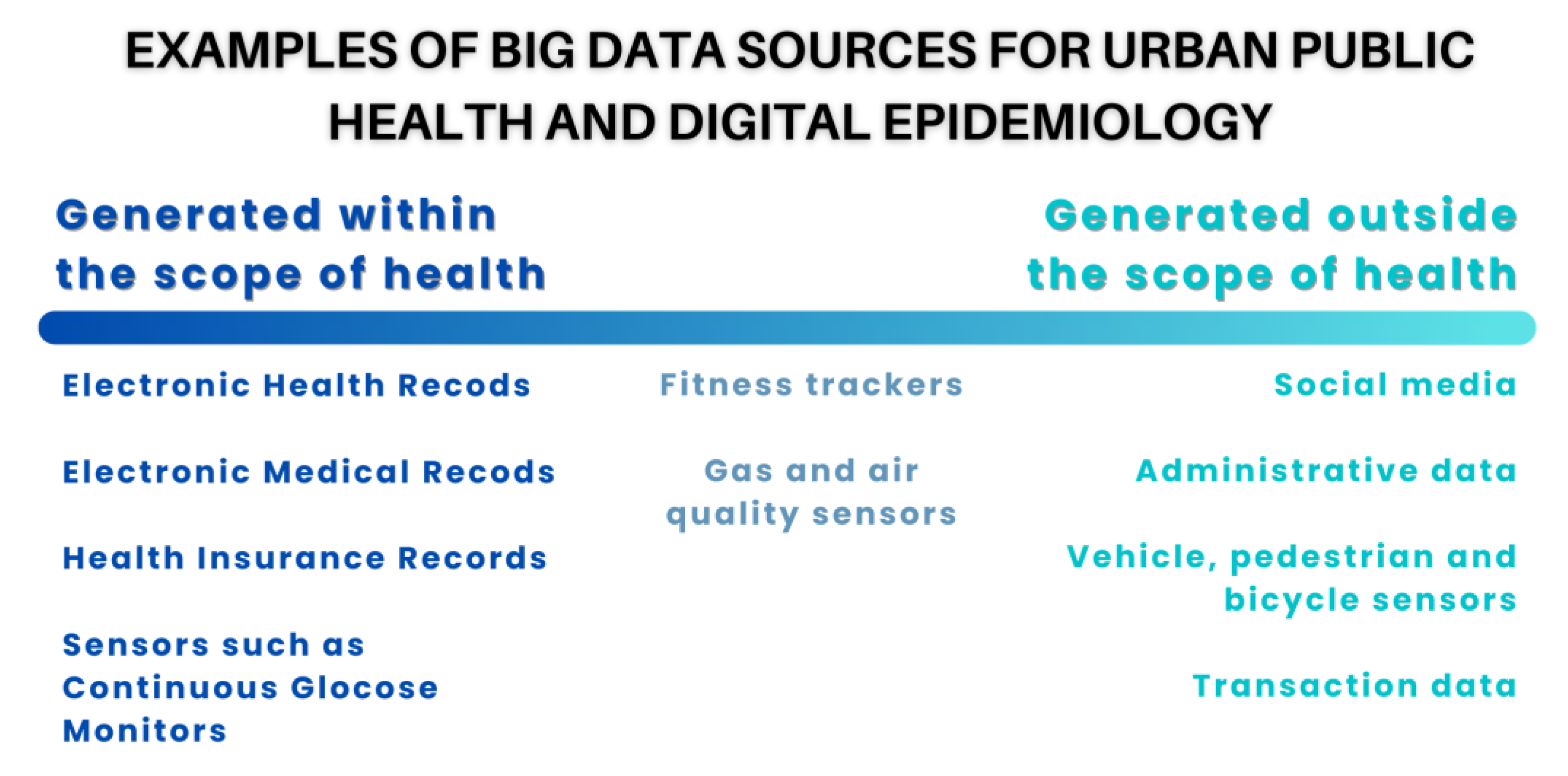

2. Big Data Sources and Uses in Urban Public Health and Digital Epidemiology

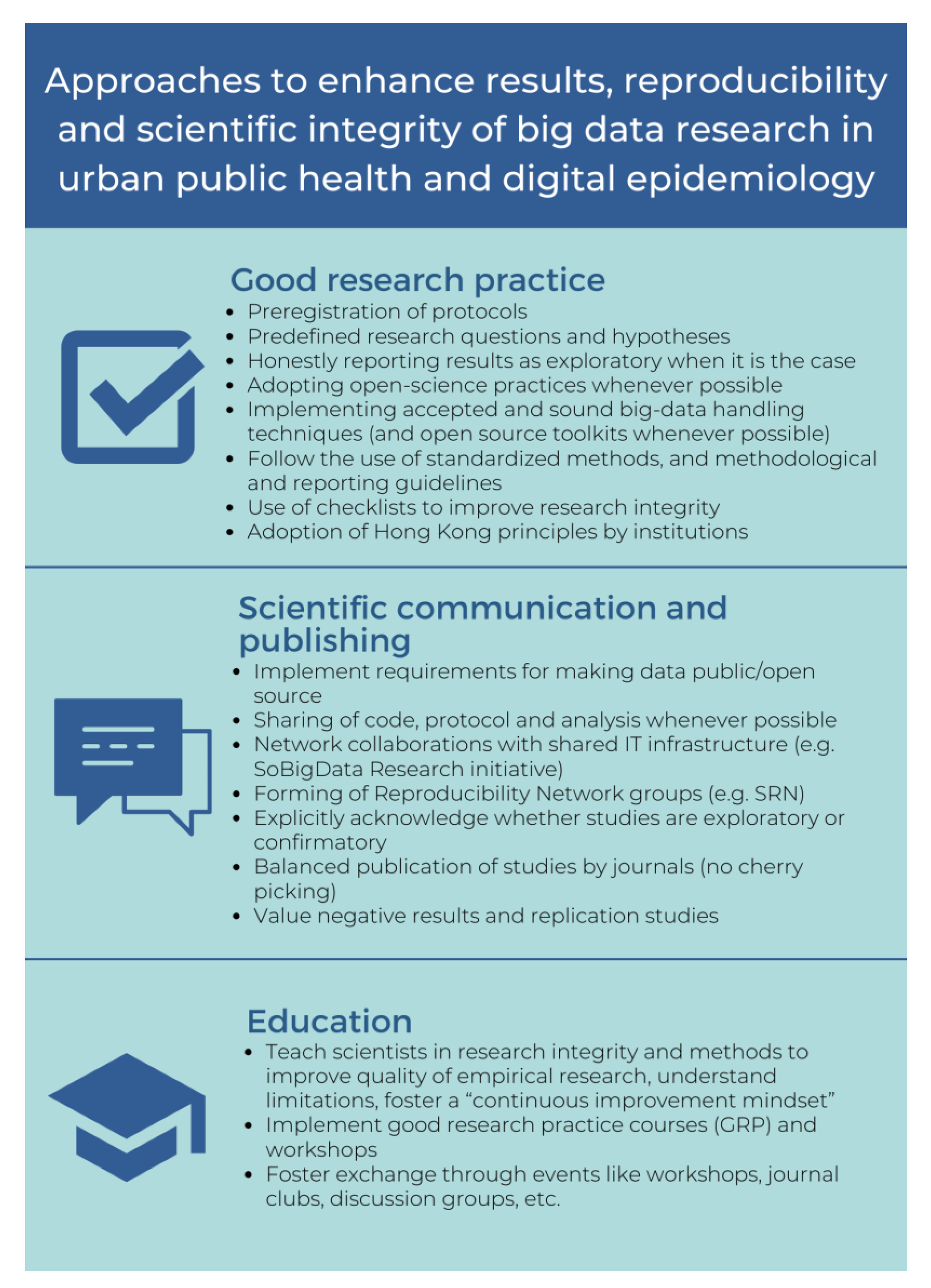

3. Approaches to Improving Reproducibility and Scientific Integrity

3.1. Good Research Practice

3.2. Scientific Communication

3.3. Education

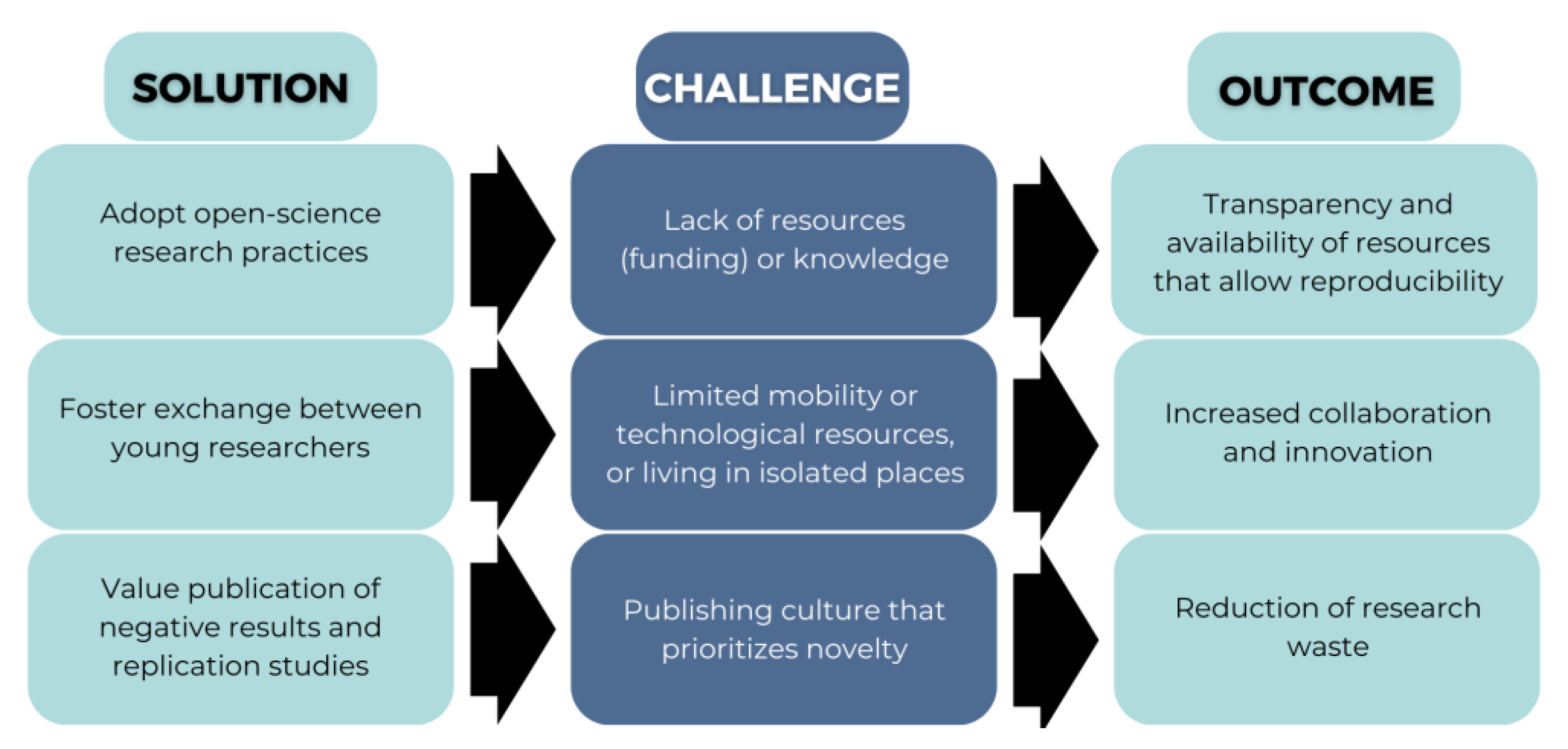

4. Expected Outcomes

5. Anticipated Challenges

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Quiroga Gutierrez, A.C.; Lindegger, D.J.; Taji Heravi, A.; Stojanov, T.; Sykora, M.; Elayan, S.; Mooney, S.J.; Naslund, J.A.; Fadda, M.; Gruebner, O. Reproducibility and Scientific Integrity of Big Data Research in Urban Public Health and Digital Epidemiology: A Call to Action. Int. J. Environ. Res. Public Health 2023, 20, 1473. https://doi.org/10.3390/ijerph20021473
