Assessing the Integrity of Clinical Trials Included in Evidence Syntheses

Abstract

1. Introduction

2. How Can We Trust Evidence Syntheses?

3. Relationship between Primary Research Integrity and Evidence Syntheses

4. Importance of Assessing the Integrity of RCTs Included in Evidence Syntheses

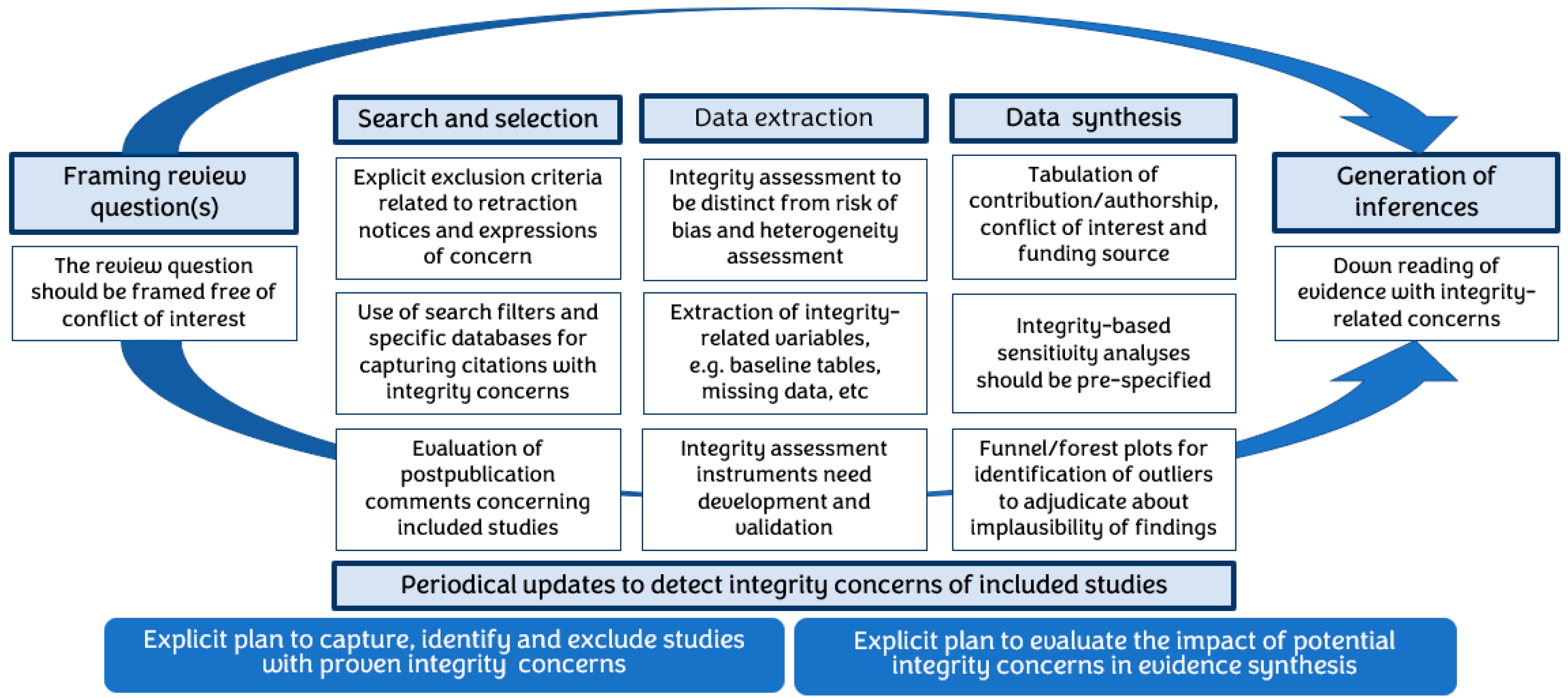

5. How to Incorporate RCT Integrity Assessment in Evidence Syntheses

6. Implications, Issues, Challenges, and Limitations

7. Artificial Intelligence for Integrity Assessment

8. Current Conclusions

9. Future Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Research Integrity Terms | Definition of Terms * |
|---|---|
| Research integrity | Undertaking research in accordance with ethical and professional principles and standards. |
| Integrity principles | A set of values and concepts for guiding researcher behavior. |
| Integrity standards | Specifications of conduct that must be adhered to when participating in or carrying out research. |
| Bias | Systematic error that invalidates the observed effects in trials due to flaws in methodological aspects such as failure to conceal randomization, lack of blinding, etc. Bias is distinct from data-related integrity flaws that arise due to misconduct. |
| Moral Values | The set of principles and standards that differentiate “right” from “wrong”. |
| Bioethics | Making choices in biomedical research around what are “right” and “wrong” values and behaviors. |
| Anti-whistleblower | Individuals who do not report, or who prevent another individual from reporting, known research misconduct. |
| Dishonesty | Behaviors that transgress moral values and bioethical standards. |
| Duplication | A redundant publication that recycles or borrows content from the authors' own previous work without citation (see also self-plagiarism). |
| Ethics | Decision making based on moral and bioethical principles to protect research subjects and wider society. |
| Ethical Misdemeanors | Unacceptable or improper behavior that violates formal regulations. |
| Expression of concern | Note issued by journal editors or publishers to make readers aware that there is a concern about the integrity of a particular published article. |
| Fabrication, Falsification, Plagiarism (FFP) | The unholy trinity of misconduct in education, research or scholarship. Plagiarism is not the focus of this paper. |
| Fabrication | Making up data, experiments, or other significant information in proposing, conducting, or reporting research and using them as if genuine. |
| Falsification | Forging research content, images, data, equipment, or processes in such a way that they are inaccurately represented. |
| Forgery | Forging content, images, data, equipment, or processes in such a way that they are inaccurately represented. |
| Fraud | Any intentional act of deception in research violating research ethics. |
| Infringement | Breach of good practice occurring from questionable, unlawful or unethical behavior. |
| Irresponsible Research Practices | Practices that are regarded as unethical but fall short of being considered research misconduct. |
| Masking | Subset of data falsification consisting of minimizing or omitting data that do not support desired conclusions or results. |
| Misconduct | Unethical or unprofessional behavior in research. |
| Negligence | Failure to follow the required standard that results in harm to a person or organization. |
| Plagiarism | Presenting the work of others as if it were one's own work without proper acknowledgment or citation of the original source. Plagiarism is not the focus of this paper. |
| Self-plagiarism | Auto-plagiarism, i.e., the author adds insignificant additional data or information to previously published work, changing the title, modifying the aim of the study or recalculating results, while omitting citation of their own previous publications. Self-plagiarism is not the focus of this paper. |
| Questionable research practices (QRPs) | Research practices that are unethical but fall short of being considered research misconduct. |
| Recycle | Recycling or borrowing content from the authors' own previous work without citation. |
| Redundant Publication | A published work (or substantial sections from a published work) is published more than once (in the same or another language) without adequate acknowledgment of the source, cross-referencing or justification. It also occurs when the same (or substantially overlapping) data are presented in more than one publication without adequate cross-referencing or justification, particularly when this is done in such a way that reviewers or readers are unlikely to realise that most or all of the findings have been published before. |
| Replication | Repeating a piece of research in order to verify and/or complement the original results. |
| Retraction | Withdrawing or removing a published paper from the research record for a variety of reasons, including a post-publication reassessment showing that the data or results reported are unreliable, or because the paper involves research misconduct. Journals publish retraction notices and identify retracted papers in electronic databases, although the reasons for retraction are not always clearly stated. |
| Transgression | Breach of good practice occurring from questionable, unlawful or unethical behavior. |
| Transparency | Openness about activities and related decisions that affect academia and society and willingness to communicate these in a clear, accurate, timely, honest and complete manner. |
| Violation/Breach | Breach of responsible research practices due to questionable, unlawful or unethical behavior in the conduct, analysis and reporting of research. |
| Conflict of interest | Potential or perceived compromise in judgement or objectivity due to financial or personal relationships or other considerations. |
| Confidentiality Violation | Disclosing to others information received in confidence without prior, explicit authorization of the person to whom the information belongs. |
| Author’s Ethical Rights | The right to vindicate the ownership of work and assure its integrity and genuine status. |
| Authorship Abuse | Any kind of authorship attribution not based on genuine contribution. |
| Authorship Coercion | An authorship that is demanded rather than voluntarily awarded. |
| Ghost Authorship | The practice of using a non-named (merited, but not listed) author to write or prepare a text for publication. |
| Invented Authorship | Naming a fictitious person, a colleague or a stranger as a co-author without permission. |
| Unethical Authorship | Crediting a person who has not contributed to the research in authorship; excluding from authorship a genuine contributor; manipulating the sequence of authors in an unjustified and improper way; removing names of contributors in subsequent publications; using one’s power to insist on being added as an author without any contribution; including an author without their permission. |

| Steps of Evidence Synthesis | Integrity-Related Issues |
|---|---|
| Framing question | The review question should be framed free of conflict of interest and should specify its focus on including studies with integrity |
| Search and selection | Explicit exclusion criteria related to retraction notices and expressions of concern about integrity should be pre-specified |
| | Specific retraction and integrity concern searches should be deployed, e.g., in the Retraction Watch Database |
| | Search filters for capturing citations with integrity concerns should be developed and used |
| | Post-publication comments concerning included studies should be sought and evaluated, e.g., letters to editors, PubPeer comments, etc. |
| Data extraction | Specific data extraction to permit integrity assessment, e.g., baseline tables, missing data, etc. |
| | Integrity assessment needs to be distinct from risk of bias and heterogeneity assessment |
| | Integrity assessment instruments need development and validation |
| | Developed integrity assessment instruments need automation |
| Data synthesis | Tabulation of contribution/authorship, conflict of interest, funding source, etc. related to integrity should be routine |
| | Integrity-based sensitivity analyses should be pre-specified |
| | Use of funnel plots to look for outliers should additionally be pre-specified, with delineation of the threshold for defining implausibly extreme results |
| Inference generation | Downgrading of evidence with integrity concerns should be explicitly deployed in the generation of recommendations |
| Updates | Periodic updates of reviews to detect integrity concerns in included studies and to issue correction notices |
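The data synthesis rows above call for pre-specified integrity-based sensitivity analyses and a pre-specified threshold for implausibly extreme results. A minimal Python sketch of what such an analysis could look like follows. It is an illustration under stated assumptions, not the authors' method: the `Trial` record, the function names, the fixed-effect inverse-variance model, the leave-one-out z-score rule, the default threshold of 3 and the example data are all hypothetical choices made here.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    label: str
    effect: float   # effect estimate on an additive scale, e.g. a log odds ratio
    se: float       # standard error of the effect estimate
    flagged: bool   # True if a retraction notice or expression of concern was found


def pool_fixed_effect(trials):
    """Return the inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / (t.se ** 2) for t in trials]
    total = sum(weights)
    pooled = sum(w * t.effect for w, t in zip(weights, trials)) / total
    return pooled, math.sqrt(1.0 / total)


def integrity_sensitivity(trials):
    """Pool all trials, then pool again after excluding trials with integrity flags."""
    all_estimate = pool_fixed_effect(trials)
    kept = [t for t in trials if not t.flagged]
    kept_estimate = pool_fixed_effect(kept) if kept else None
    return all_estimate, kept_estimate


def extreme_results(trials, threshold=3.0):
    """Return labels of trials lying more than `threshold` standard errors
    from the pooled estimate of the remaining trials (leave-one-out)."""
    if len(trials) < 2:
        return []
    labels = []
    for i, trial in enumerate(trials):
        others = trials[:i] + trials[i + 1:]
        pooled, pooled_se = pool_fixed_effect(others)
        z = (trial.effect - pooled) / math.sqrt(trial.se ** 2 + pooled_se ** 2)
        if abs(z) > threshold:
            labels.append(trial.label)
    return labels


# Hypothetical example data, invented purely for illustration.
example_trials = [
    Trial("A", -0.10, 0.20, False),
    Trial("B", -0.20, 0.25, False),
    Trial("C", -1.50, 0.30, True),
    Trial("D", -0.15, 0.20, False),
]

if __name__ == "__main__":
    all_estimate, kept_estimate = integrity_sensitivity(example_trials)
    print("All trials:", all_estimate)
    print("Excluding flagged trials:", kept_estimate)
    print("Implausibly extreme:", extreme_results(example_trials))
```

Comparing the two pooled estimates shows how far the conclusion depends on trials with integrity concerns. A statistical outlier is not in itself evidence of misconduct, so the extreme-result rule would serve only to prompt closer scrutiny. Both the exclusion rule and the threshold would need to be fixed in the review protocol before the data are seen.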

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Núñez-Núñez, M.; Cano-Ibáñez, N.; Zamora, J.; Bueno-Cavanillas, A.; Khan, K.S. Assessing the Integrity of Clinical Trials Included in Evidence Syntheses. Int. J. Environ. Res. Public Health 2023, 20, 6138. https://doi.org/10.3390/ijerph20126138
