Evaluating Healthcare Performance in Low- and Middle-Income Countries: A Pilot Study on Selected Settings in Ethiopia, Tanzania, and Uganda

Abstract

1. Introduction

Theoretical Background

2. Materials and Methods

2.1. Methodological Approach

2.2. Stages of Development

2.3. Study Setting

2.4. Data Collection and Graphical Representation

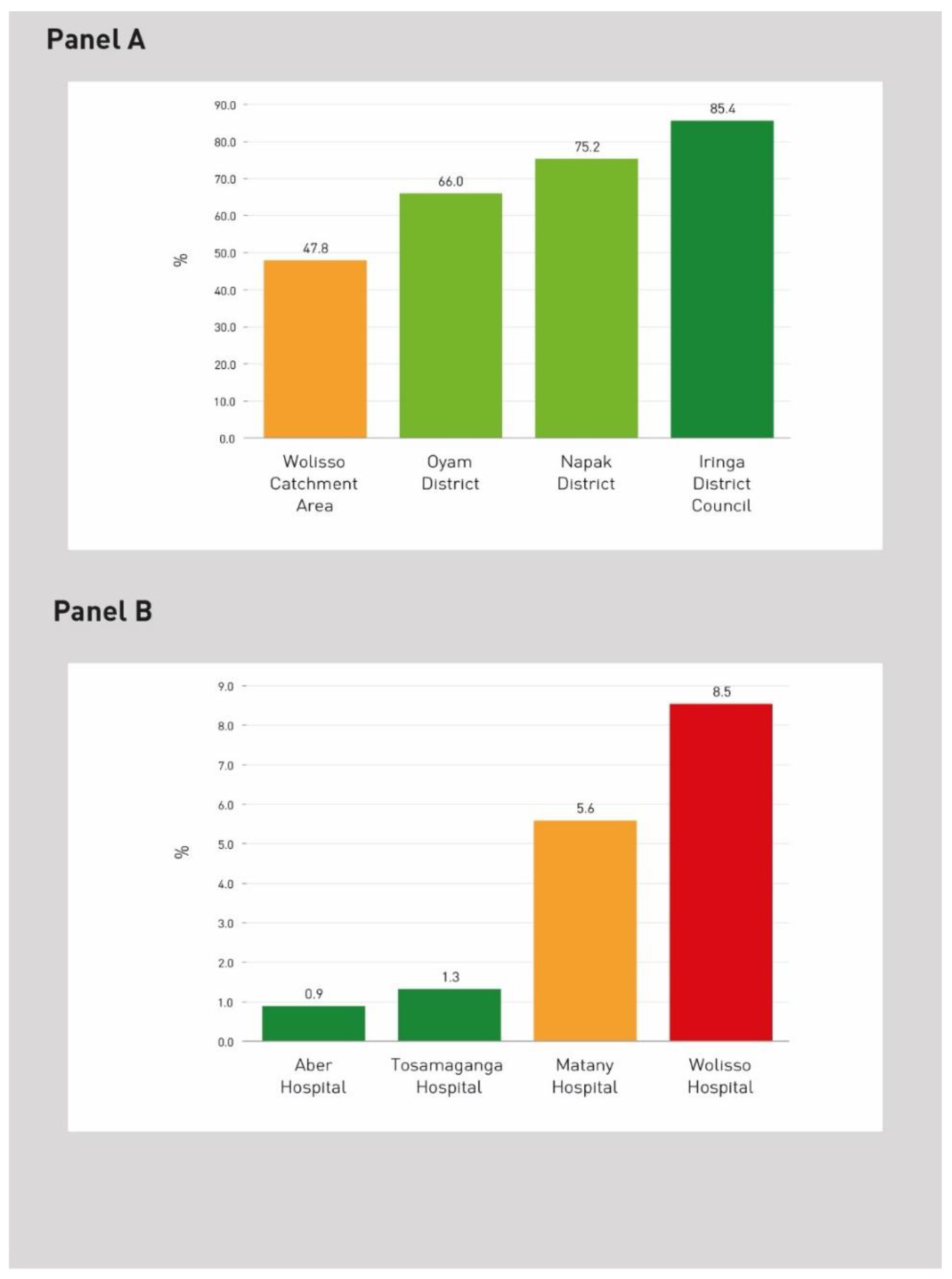

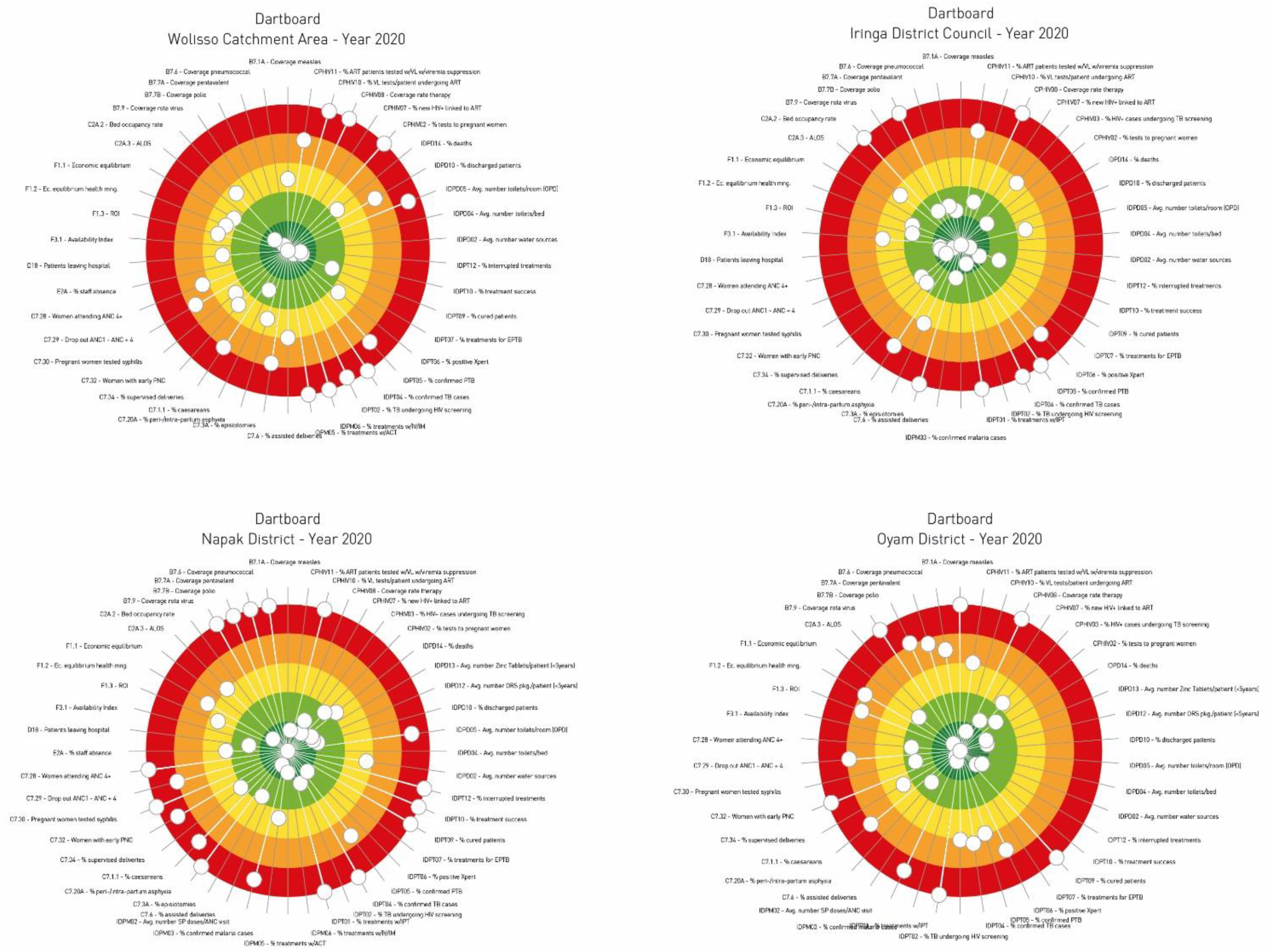

3. Results

4. Discussion

Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PES | Performance Evaluation System |
| HIC | High-Income Country |
| LMIC | Low- and Middle-Income Country |
| OECD | Organisation for Economic Co-operation and Development |
| WHO | World Health Organization |
| DHIS | District Health Information System |
| CUAMM | Doctors with Africa CUAMM |
| MES | Management and Healthcare Laboratory |
| IRPES | Inter-Regional Performance Evaluation System |
| RT | Research Team |
| SAS | Statistical Analysis System |
| ALOS | Average Length of Stay |
| BOR | Bed Occupancy Rate |


| Framework | Unit of Analysis | Dimensions | Benchmarking | Approach | Visualization Tool | Comparison |
|---|---|---|---|---|---|---|
| WHO | Country | | ✓ | Top-down | Ranking | Between Countries |
| OECD | Country | | ✓ | Top-down | - | |
| Tuscany region IRPES Network | Health Districts, Hospitals, Regional Healthcare Systems | | ✓ | Bottom-up | Dartboard, Stave | Within the reference Country |

| Step | Activity | Task | Workshops/Meetings | Role of Professionals Involved |
|---|---|---|---|---|
| 1 | Selection of the indicators | The RT reviewed the existing literature for the most relevant information and selected a first set of indicators to apply in the selected contexts. | Two online meetings and one in-person meeting between June and September 2019. One field mission (21 days) at the management office of St. Luke Hospital, Wolisso (Ethiopia) in September 2019. | The RT carried out the literature review to identify the indicators to include in the PES and drew up a preliminary list of indicators. |
| 2 | Feasibility analysis | To determine which indicators could effectively be included in the PES, the RT weighed the opportunities for professionals against the costs of collecting data from the digital and paper-based information systems already in place. | Five online meetings and 11 in-person meetings. One field mission (21 days) at the management office of St. Luke Hospital, Wolisso (Ethiopia) in September 2019. Three field missions (40 days) at the management offices of Tosamaganga District Designated Hospital (Tanzania), St. Kizito Matany Hospital and Pope John XXIII Aber Hospital (Uganda) from February to March 2020. | This phase involved the RT and four medical doctors from the participating hospitals. Building on the results of the first phase and on the data available at health district and hospital level, these professionals assessed whether each identified indicator was applicable in the PES or how it could be adapted to the selected settings. Four lists of available indicators were defined, one for each setting. |
| 3 | Data collection and data analysis | The RT supported hospital and health district professionals in extracting aggregated data consistently from the various health registers and information systems. The RT calculated the indicators and produced preliminary graphs for their evaluation. | Eight online meetings in March and April 2020. | This phase involved 2 experts in healthcare management, 11 experts in public health (statistical staff and medical doctors), 6 experts in monitoring and evaluation, and 1 expert in accounting and finance. Hospital and health district staff collected the data, computed numerators and denominators, and shared them with the RT, which then calculated the indicators for the three years and produced the preliminary bar charts. |
| 4 | Standards identification | The RT worked closely with a public health expert to identify the standards used to evaluate the collected information and to build the graphical representations. | Seven online meetings from April to June 2020. | This phase involved the RT and an additional public health expert. The professionals reviewed the preliminary graphs produced after the indicators were calculated and, by comparing the results with the main evidence in the international literature, chose a set of standards tailored to the specific settings analysed. |
| 5 | System validation | The RT shared the preliminary results with a group of experts and professionals to gather their opinions and comments before the results were disseminated. | Two online meetings in July 2020. | This phase involved the RT and a group of experts in hospital management, public health, and infectious diseases. In the first meeting, the RT presented the preliminary evaluation results and collected the experts' opinions and suggestions; in the second, the PES was validated. |
| 6 | Results dissemination | The RT organized a series of events to disseminate and return the results to healthcare managers in the selected settings, to illustrate them and to discuss how they could be used. | A workshop in blended form (October 2020) and two online seminars (November 2020). Additionally, eight further online meetings between December 2020 and October 2021 involved the local staff in the presentation of results. | The RT organized a workshop to officially present the definitive results and two further seminars in Italy. The eight online meetings presented the PES results to the local staff and aimed to raise their awareness of the system's relevance as a management tool. |
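In steps 3 and 4 above, local staff report numerators and denominators, the RT computes each indicator for the three years, and the results are then compared with setting-specific standards. The Python sketch below illustrates that arithmetic under stated assumptions: the `IndicatorData` structure, the ×100 scaling, the single-threshold rule in `meets_standard`, and all counts are hypothetical and are not taken from the study, whose actual evaluation scheme may differ.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class IndicatorData:
    """Aggregated counts reported by one setting for one indicator in one year (hypothetical)."""
    numerator: int
    denominator: int


def indicator_value(data: IndicatorData, scale: float = 100.0) -> Optional[float]:
    """Return numerator / denominator * scale, or None when the denominator is zero."""
    if data.denominator == 0:
        return None
    return data.numerator / data.denominator * scale


def meets_standard(value: float, standard: float, higher_is_better: bool = True) -> bool:
    """Hypothetical single-threshold rule comparing a value with a setting-specific standard."""
    return value >= standard if higher_is_better else value <= standard


def evaluate_series(series: Dict[str, IndicatorData], standard: float,
                    higher_is_better: bool = True) -> Dict[str, Optional[bool]]:
    """Compute the indicator for each year; None marks years that cannot be evaluated."""
    results: Dict[str, Optional[bool]] = {}
    for year, data in sorted(series.items()):
        value = indicator_value(data)
        results[year] = None if value is None else meets_standard(value, standard, higher_is_better)
    return results


# Made-up counts for three years (not study data), e.g. a coverage indicator expressed as a percentage.
example = {
    "Y1": IndicatorData(numerator=3200, denominator=8000),
    "Y2": IndicatorData(numerator=4100, denominator=8200),
    "Y3": IndicatorData(numerator=0, denominator=0),  # e.g. no denominator reported
}
print({year: indicator_value(d) for year, d in example.items()})
print(evaluate_series(example, standard=45.0))
```

Returning `None` instead of raising an error keeps gaps in the paper or digital registers visible rather than silently treating them as zero.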

| Country | Governance | Financing | Services Delivery | Domestic General Government Health Expenditure per Capita (Current USD, 2018) * |
|---|---|---|---|---|
| Ethiopia | Federal system of governance based on mutually agreed resource allocation criteria: | Three main sources: | Three main levels of delivery (public and private): | $15.57 |
| Tanzania | Decentralised system: | Three main sources: | Three main levels of delivery (public and private): | $48.30 |
| Uganda | The main administrative levels are: | Three main sources: | Three main levels of delivery (public and private): | $22.06 |

| Country | Region | Health District¹ | Estimated Population (2020) | Reference Hospital | Hospital Beds (2019) | Area (km²) | Population Density (inhabitants per km²) |
|---|---|---|---|---|---|---|---|
| Ethiopia | Oromia region | 5 Woredas in Shoa-west Zone (Wolisso Town, Wolisso Rural, Ameya, Wonchi, Goro) | 633,359 | St. Luke Hospital, Wolisso | 208 | 27,000 | 22.6 |
| Tanzania | Iringa region | Iringa District Council | 308,009 | Tosamaganga District Designated Hospital | 165 | 19,256 | 15.6 |
| Uganda | Northern region | Napak District | 166,549 | St. Kizito Hospital, Matany | 250 | 4,978.4 | 31.5 |
| Uganda | Northern region | Oyam District | 449,700 | Pope John XXIII Hospital, Aber | 217 | 2,190.8 | 197.2 |

| Performance Dimension | Area | Number of Indicators |
|---|---|---|
| Regional Health Strategies | Vaccination Coverage | 6 |
| | Hospital attraction | 2 |
| Efficiency and Sustainability | Economic and financial viability | 3 |
| | Per capita cost for health services | 7 |
| | Assets and liability analyses | 1 |
| | Inpatient efficiency | 2 |
| Users, Staff and Communication | Users, staff, and communication | 4 |
| Emergency Care | Emergency Care | 1 |
| Governance and quality of supply | Hospital-territory integration | 2 |
| | Healthcare demand management capability | 2 |
| | Care appropriateness of chronic diseases | 2 |
| | Diagnostic appropriateness | 3 |
| | Quality of process | 1 |
| | Surgery variation | 1 |
| | Repeated hospital admissions for any cause | 4 |
| | Clinical risk | 3 |
| Maternal and Childcare | Maternal and Childcare at district level | 7 |
| | Maternal and Childcare at hospital level | 13 |
| | Maternal and Childcare: Child Malnutrition | 10 |
| Infectious Diseases | Infectious Diseases: Malaria | 9 |
| | Infectious Diseases: Tuberculosis | 14 |
| | Infectious Diseases: Gastroenteritis | 14 |
| Chronic Diseases | Chronic Diseases: HIV | 14 |
| | Other Chronic Diseases | 3 |
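The table organizes the PES as a two-level hierarchy: each performance dimension groups several areas, and each area contributes a number of indicators. As a minimal sketch, assuming only the counts transcribed from the table (the `PES_AREAS` list and the `indicators_per_dimension` function are illustrative names, not part of the study), the per-dimension totals can be derived as follows:

```python
from typing import Dict, List, Tuple

# (performance dimension, area, number of indicators), transcribed from the table above.
PES_AREAS: List[Tuple[str, str, int]] = [
    ("Regional Health Strategies", "Vaccination coverage", 6),
    ("Regional Health Strategies", "Hospital attraction", 2),
    ("Efficiency and Sustainability", "Economic and financial viability", 3),
    ("Efficiency and Sustainability", "Per capita cost for health services", 7),
    ("Efficiency and Sustainability", "Assets and liability analyses", 1),
    ("Efficiency and Sustainability", "Inpatient efficiency", 2),
    ("Users, Staff and Communication", "Users, staff, and communication", 4),
    ("Emergency Care", "Emergency care", 1),
    ("Governance and quality of supply", "Hospital-territory integration", 2),
    ("Governance and quality of supply", "Healthcare demand management capability", 2),
    ("Governance and quality of supply", "Care appropriateness of chronic diseases", 2),
    ("Governance and quality of supply", "Diagnostic appropriateness", 3),
    ("Governance and quality of supply", "Quality of process", 1),
    ("Governance and quality of supply", "Surgery variation", 1),
    ("Governance and quality of supply", "Repeated hospital admissions for any cause", 4),
    ("Governance and quality of supply", "Clinical risk", 3),
    ("Maternal and Childcare", "Maternal and childcare at district level", 7),
    ("Maternal and Childcare", "Maternal and childcare at hospital level", 13),
    ("Maternal and Childcare", "Child malnutrition", 10),
    ("Infectious Diseases", "Malaria", 9),
    ("Infectious Diseases", "Tuberculosis", 14),
    ("Infectious Diseases", "Gastroenteritis", 14),
    ("Chronic Diseases", "HIV", 14),
    ("Chronic Diseases", "Other chronic diseases", 3),
]


def indicators_per_dimension(rows: List[Tuple[str, str, int]]) -> Dict[str, int]:
    """Sum indicator counts by performance dimension, keeping first-seen order."""
    totals: Dict[str, int] = {}
    for dimension, _area, count in rows:
        totals[dimension] = totals.get(dimension, 0) + count
    return totals


totals = indicators_per_dimension(PES_AREAS)
for dimension, n in totals.items():
    print(f"{dimension}: {n}")
print("Total:", sum(totals.values()))
```

Summed this way, the table accounts for 128 indicators across eight dimensions, with Infectious Diseases (37) and Maternal and Childcare (30) the largest.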

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Belardi, P.; Corazza, I.; Bonciani, M.; Manenti, F.; Vainieri, M. Evaluating Healthcare Performance in Low- and Middle-Income Countries: A Pilot Study on Selected Settings in Ethiopia, Tanzania, and Uganda. Int. J. Environ. Res. Public Health 2023, 20, 41. https://doi.org/10.3390/ijerph20010041
