Public Opinion Manipulation on Social Media: Social Network Analysis of Twitter Bots during the COVID-19 Pandemic

Abstract

1. Introduction

2. Literature Review

2.1. COVID-19 and Social Networks

2.2. Social Bots and Humans on Social Media

2.3. Social Media Data and Social Bots Detection

3. Materials and Methods

3.1. Data

3.2. Social Bot Detection

3.3. LDA Topic Model

3.4. Social Network Analysis

- (1) Network scale, that is, the number of nodes and edges in the network.

- (2) Degree centrality. Nodes with higher degree centrality usually occupy core positions in the network and have a stronger voice in the discussion. In a directed network, degree centrality is split into two distinct metrics: in-degree, the number of edges pointing to a node, and out-degree, the number of edges leaving it. A node's total degree is then the sum of its in-degree and out-degree.

- (3) Betweenness centrality. Betweenness centrality measures how often a node lies on the shortest paths between other pairs of nodes. Nodes with higher betweenness centrality therefore play a more important role as bridges that connect different parts of the network.
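Network scale and degree centrality can both be read off a directed edge list. The sketch below is a minimal illustration under stated assumptions, not the authors' tooling: the function name `network_scale_and_degrees` and the account names are hypothetical, and edges are assumed to be (interacting account, target account) pairs with repeated pairs counted once.

```python
from collections import defaultdict


def network_scale_and_degrees(edges):
    """Compute network scale and degree centrality for a directed graph.

    `edges` is an iterable of (source, target) pairs. Repeated pairs are
    collapsed, so each distinct directed edge is counted once (an assumption;
    a weighted network would count repeats instead).
    """
    unique_edges = set(edges)
    nodes = set()
    in_count = defaultdict(int)
    out_count = defaultdict(int)
    for source, target in unique_edges:
        nodes.update((source, target))
        out_count[source] += 1  # the edge leaves `source`
        in_count[target] += 1   # the edge points to `target`
    in_degree = {n: in_count[n] for n in nodes}
    out_degree = {n: out_count[n] for n in nodes}
    # In a directed network, total degree = in-degree + out-degree.
    degree = {n: in_degree[n] + out_degree[n] for n in nodes}
    return len(nodes), len(unique_edges), in_degree, out_degree, degree


# Hypothetical edges: (account that interacts, account it targets).
edges = [
    ("user_a", "hub"), ("user_b", "hub"), ("user_c", "hub"),
    ("hub", "user_a"), ("user_a", "hub"),  # duplicate edge, counted once
]
n_nodes, n_edges, in_deg, out_deg, deg = network_scale_and_degrees(edges)
print(n_nodes, n_edges)                          # 4 4
print(in_deg["hub"], out_deg["hub"], deg["hub"])  # 3 1 4
```

Here the account `hub` receives edges from three distinct accounts, so it has the highest in-degree, which is the sense in which high-degree nodes sit at the core of the network.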

4. Results

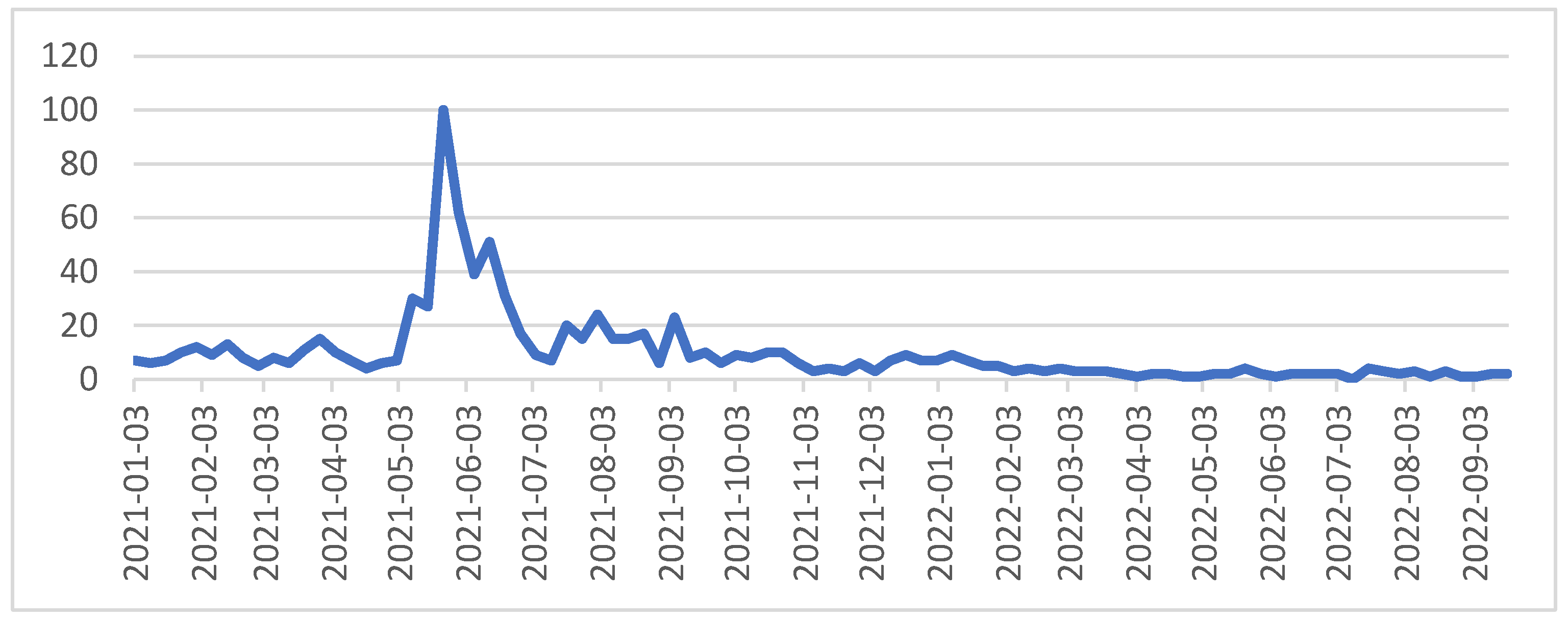

4.1. Corpus Analysis and Text Preprocessing

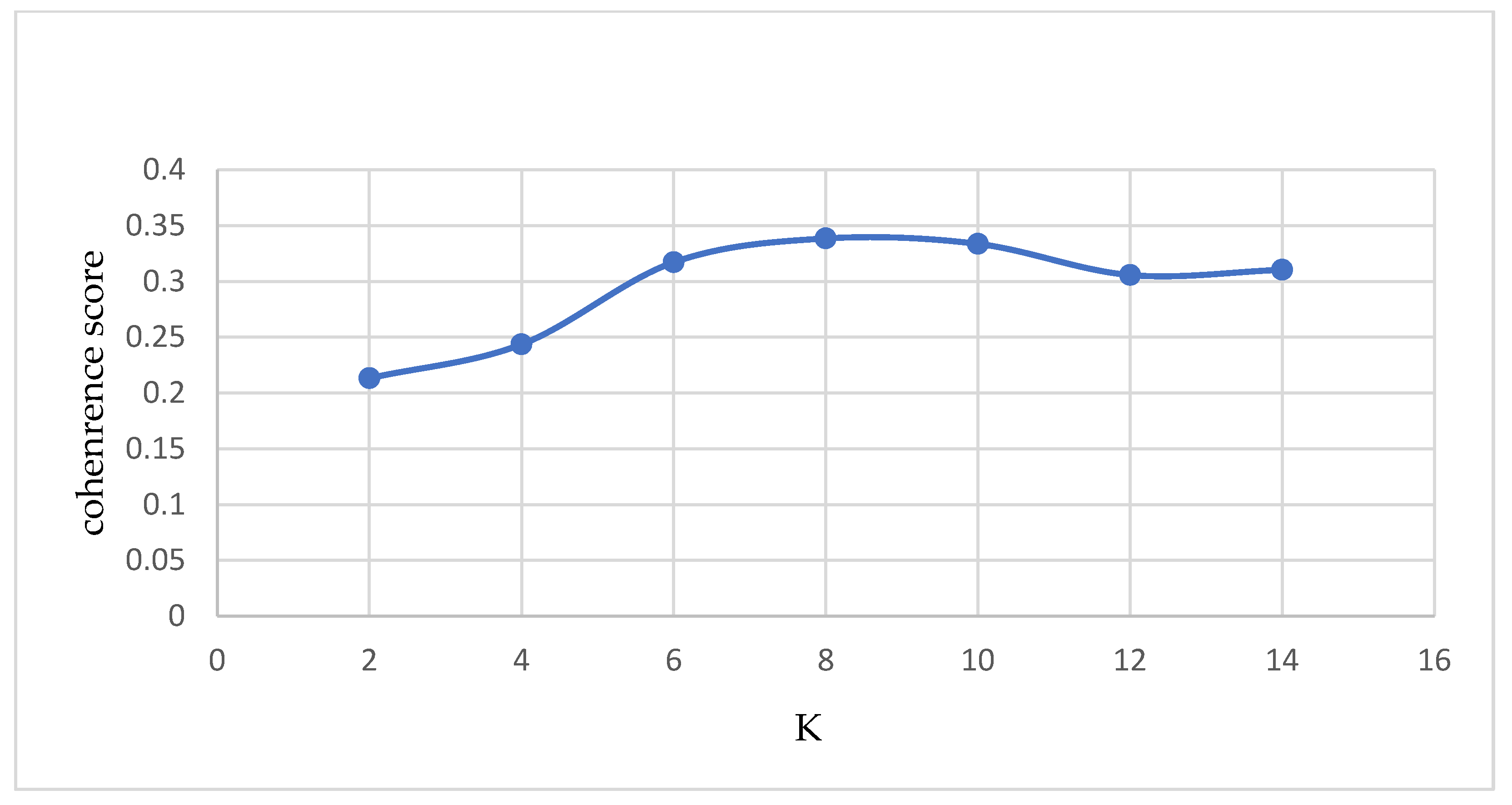

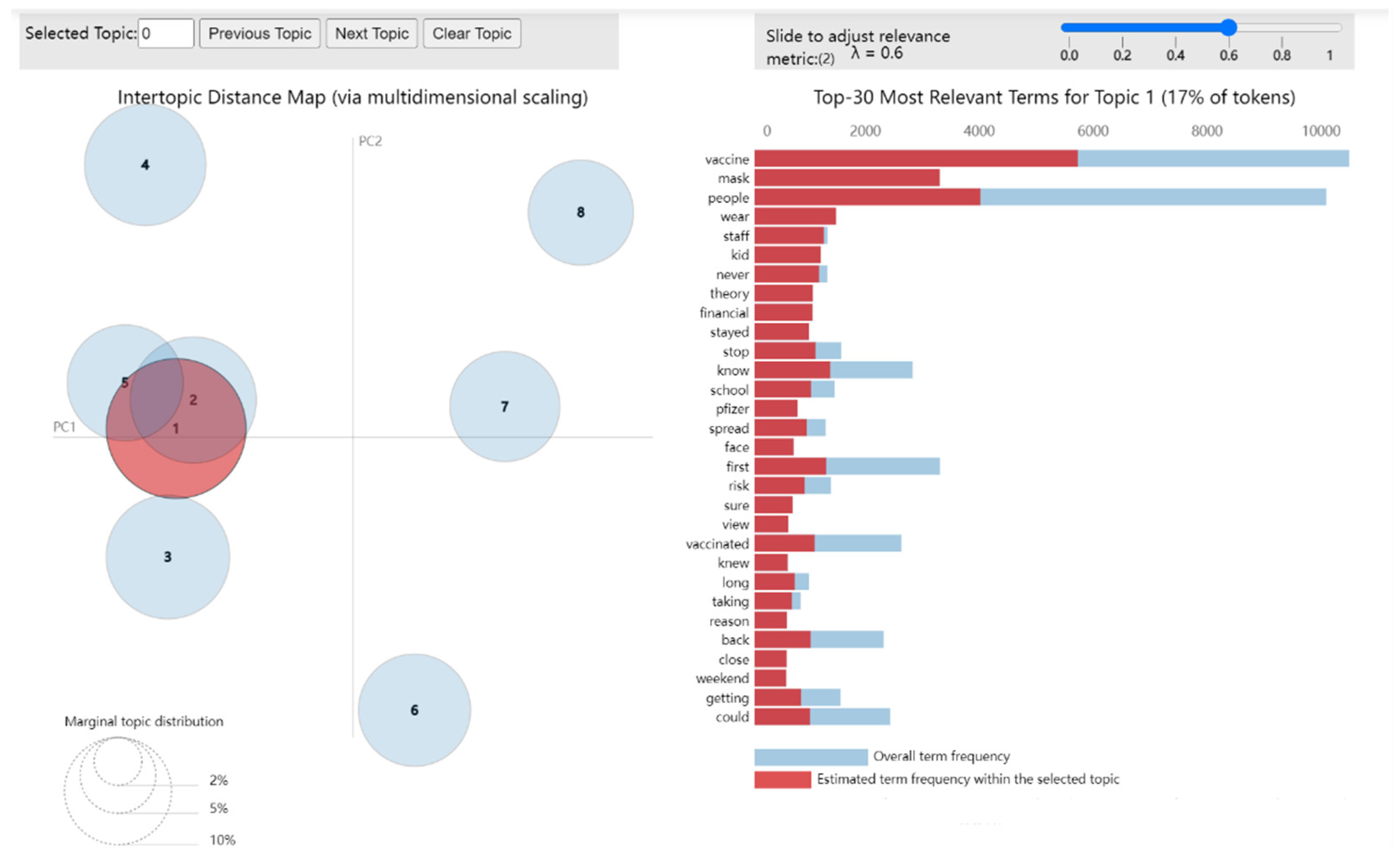

4.2. LDA Model Analysis

4.3. Social Network Analysis of Social Bots and Human Accounts

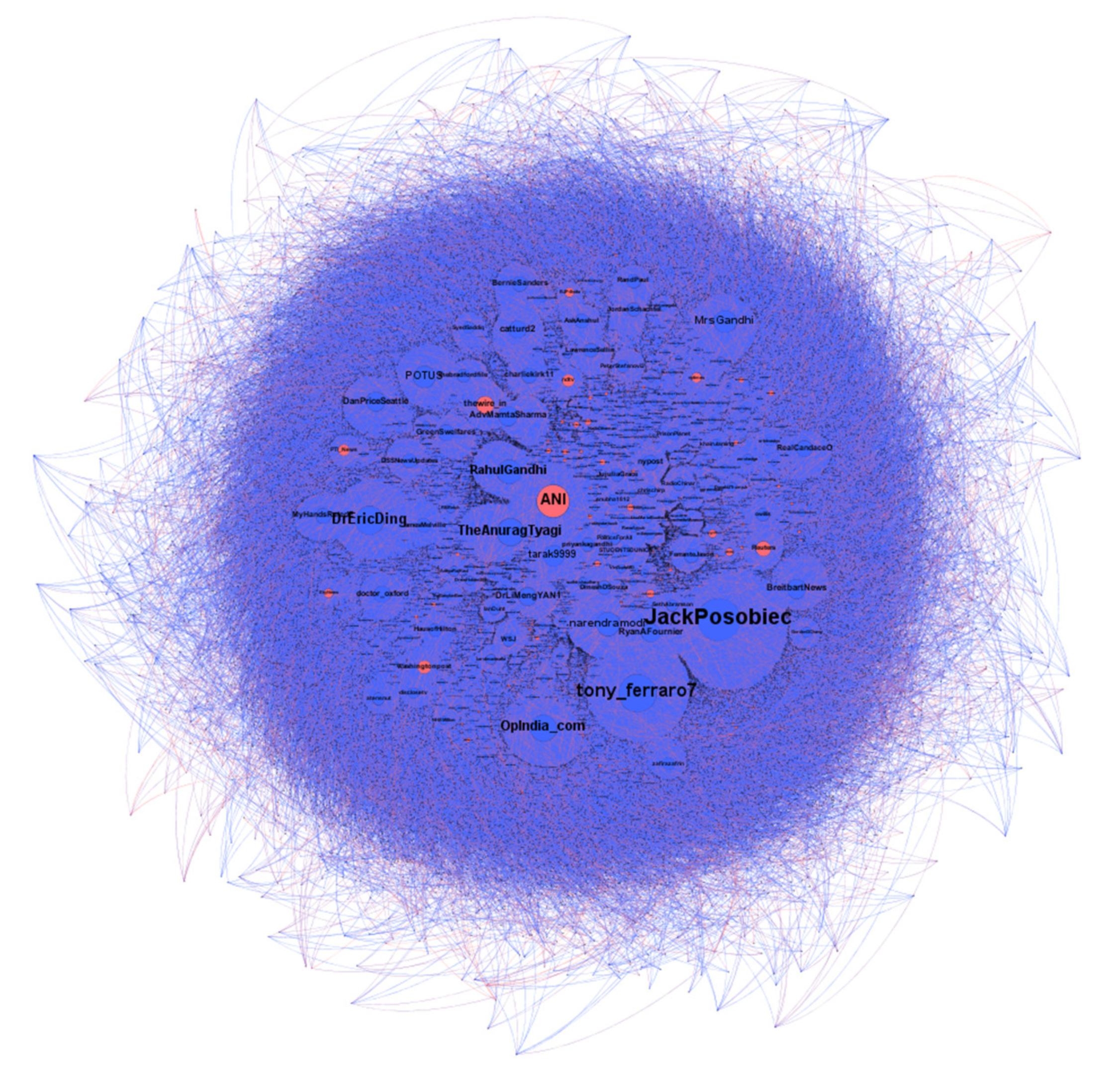

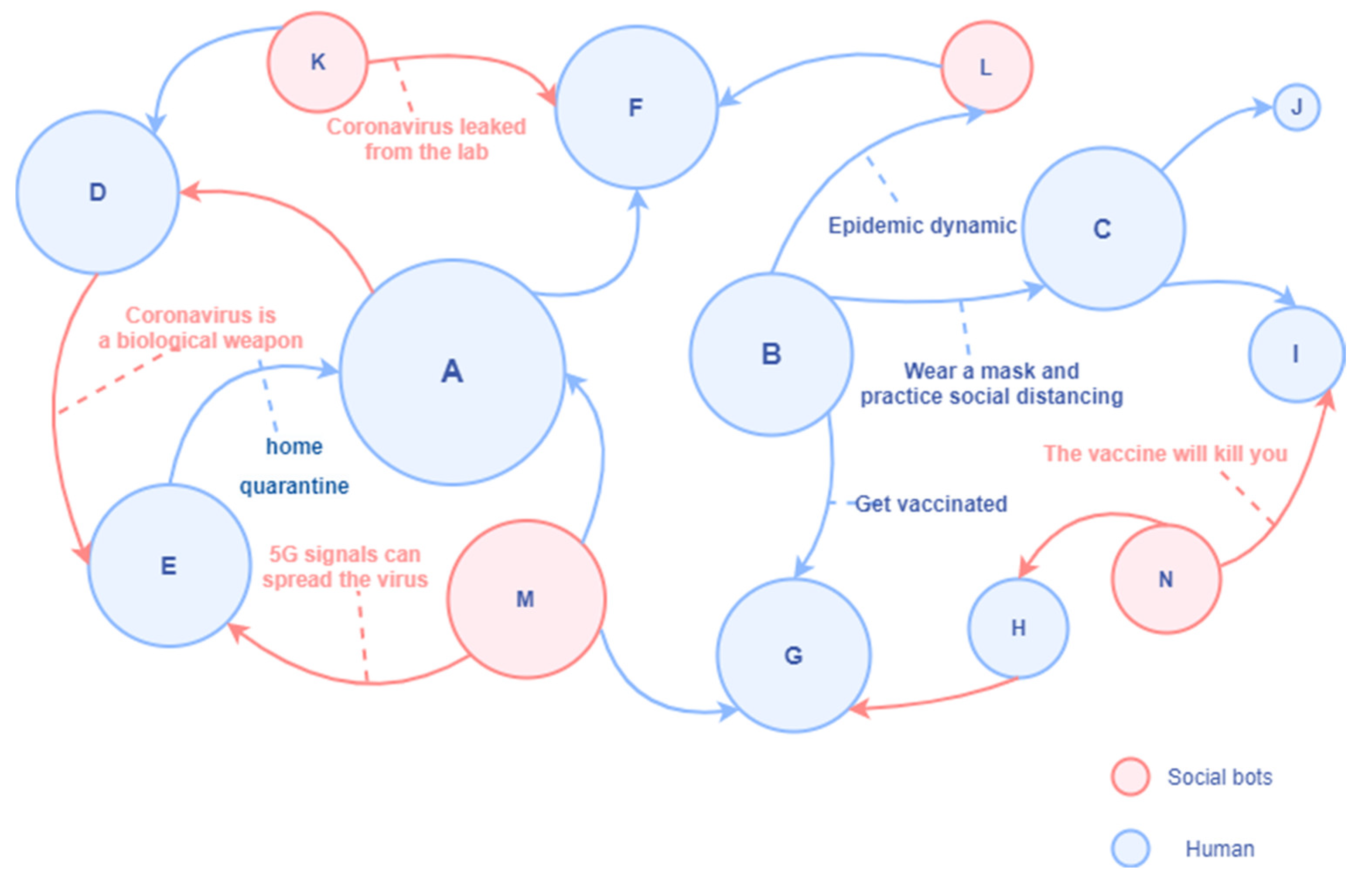

4.3.1. Social Network Structure

4.3.2. In-Degree Centrality Analysis

4.3.3. Out-Degree Centrality Analysis

4.3.4. Betweenness Centrality Analysis

5. Discussion

6. Conclusions

Limitations and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Keyword | Frequency | No. | Keyword | Frequency |
|---|---|---|---|---|---|
| 1 | people | 9039 | 11 | home | 3284 |
| 2 | vaccine | 8806 | 12 | mask | 3208 |
| 3 | lockdown | 6509 | 13 | first | 3104 |
| 4 | case | 4965 | 14 | China | 3020 |
| 5 | India | 4606 | 15 | state | 2864 |
| 6 | need | 3909 | 16 | please | 2797 |
| 7 | death | 3903 | 17 | know | 2792 |
| 8 | Wuhan | 3903 | 18 | vaccination | 2729 |
| 9 | government | 3876 | 19 | life | 2608 |
| 10 | health | 3436 | 20 | country | 2577 |
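A keyword-frequency ranking like the one above amounts to counting tokens across the preprocessed corpus and keeping the most common ones. The following is a minimal sketch using Python's `collections.Counter`, assuming each tweet has already been reduced to a token list; the function name `top_keywords` and the toy tweets are hypothetical and do not reproduce the table's counts.

```python
from collections import Counter


def top_keywords(token_lists, k=20):
    """Count every token across all documents and return the k most
    frequent as (keyword, frequency) pairs, highest count first."""
    counts = Counter()
    for tokens in token_lists:
        counts.update(tokens)  # adds one per occurrence of each token
    return counts.most_common(k)


# Hypothetical preprocessed tweets.
tweets = [
    ["people", "vaccine", "lockdown"],
    ["vaccine", "people", "mask"],
    ["people", "lockdown"],
]
print(top_keywords(tweets, k=3))
```

A plain count like this treats related forms such as `vaccine` and `vaccination` as separate keywords, as the table does.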

| Before Text Preprocessing | After Text Preprocessing |
|---|---|
| RT @voguemagazine: Read how the healing power of Lodge 49 helped one writer break through their pandemic fog. https://t.co/V1JOVGKTXy (accessed on 9 October 2022) | [vogue magazine, read, healing, power, lodge, helped, writer, break] |
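The exact preprocessing rules are not spelled out in the table, so the sketch below is only one plausible pipeline, assuming these steps: drop URLs and the leading `RT`, map @handles to readable phrases through a lookup, lowercase, discard numbers and punctuation, and remove stop words and words shorter than four letters. `preprocess`, `STOPWORDS`, and `HANDLE_MAP` are hypothetical names, and the stop-word list (including the topic word "pandemic") is an assumption chosen so that the example row above is reproduced.

```python
import re

# Hypothetical stop-word list: a few English function words plus the topic
# word "pandemic", assumed here because the example output drops it.
STOPWORDS = {"the", "of", "how", "through", "their", "and", "to", "pandemic"}

# Hypothetical lookup that turns @handles into readable phrases.
HANDLE_MAP = {"voguemagazine": "vogue magazine"}

URL_RE = re.compile(r"https?://\S+")
TOKEN_RE = re.compile(r"@(\w+)|[A-Za-z]+")  # an @mention, or a run of letters


def preprocess(tweet, stopwords=STOPWORDS, handle_map=HANDLE_MAP, min_len=4):
    """Reduce a raw tweet to a list of lowercase keyword tokens."""
    text = URL_RE.sub(" ", tweet)         # drop links
    text = re.sub(r"^RT\b", " ", text)     # drop the leading retweet marker
    tokens = []
    for match in TOKEN_RE.finditer(text):  # digits and punctuation never match
        handle = match.group(1)
        if handle:                         # an @mention: map it to a phrase
            tokens.append(handle_map.get(handle.lower(), handle.lower()))
            continue
        word = match.group(0).lower()
        # Assumed filter: skip stop words and very short words.
        if len(word) < min_len or word in stopwords:
            continue
        tokens.append(word)
    return tokens


raw = ("RT @voguemagazine: Read how the healing power of Lodge 49 helped one "
       "writer break through their pandemic fog. https://t.co/V1JOVGKTXy")
print(preprocess(raw))
```

Under these assumptions the output is `['vogue magazine', 'read', 'healing', 'power', 'lodge', 'helped', 'writer', 'break']`, matching the "After Text Preprocessing" column; a real pipeline would use a full stop-word list and a more general way of splitting handles.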

| No. | Topic | High-Frequency Words |
|---|---|---|
| 0 | Coronavirus origin-tracing | virus, china, wuhan, since, beginning, chinese, fauci, origin, trump, biden |
| 1 | Prevention and vaccine | lockdown, vaccine, best, headline, myhandsratede, since, second, government, rate, johnson |
| 2 | Social influence | home, stay, care, vaccine, people, lockdown, need, food, worker, doctor |
| 3 | Family influence | child, lost, parent, student, narendramodi, please, exam, govt, future, government |
| 4 | Virus variation | case, death, health, india, lockdown, total, variant, vote, indian, died |
| 5 | Preventive measures | vaccine, people, mask, wear, know, first, staff, kid, never, stop |
| 6 | Health effects | family, every, patient, panic, positive, friend, hospital, someone, buying, president |
| 7 | The public interest | people, lockdown, thank, first, country, free, vaccinated, around, social, mean |
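LDA represents each topic as a probability distribution over the vocabulary, and word lists like the "High-Frequency Words" column are commonly the most probable terms in each topic's distribution. A minimal sketch, assuming a fitted model has already produced a topic-word probability matrix (one row per topic, one column per vocabulary term); `top_words_per_topic`, the vocabulary, and the probabilities are hypothetical toy values.

```python
def top_words_per_topic(topic_word, vocab, n=10):
    """For each topic (a row of word probabilities aligned with `vocab`),
    return the n words with the highest probability, most probable first."""
    result = []
    for row in topic_word:
        ranked = sorted(range(len(vocab)), key=lambda j: row[j], reverse=True)
        result.append([vocab[j] for j in ranked[:n]])
    return result


vocab = ["virus", "origin", "mask", "wear", "vaccine"]
topic_word = [
    [0.40, 0.30, 0.05, 0.05, 0.20],  # hypothetical origin-related topic
    [0.05, 0.05, 0.35, 0.30, 0.25],  # hypothetical prevention-related topic
]
print(top_words_per_topic(topic_word, vocab, n=3))
```

The topic names themselves are not produced by this step; the word lists only describe each topic's most probable terms.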

| No. | Account | In-Degree | No. | Account | In-Degree |
|---|---|---|---|---|---|
| 1 | JackPosobiec * | 504 | 16 | RyanAFournier * | 213 |
| 2 | tony_ferraro7 | 442 | 17 | DrLiMengYAN1 | 208 |
| 3 | ANI * | 377 | 18 | catturd2 | 206 |
| 4 | BIGHIT_MUSIC * | 344 | 19 | thewire_in * | 200 |
| 5 | DrEricDing * | 335 | 20 | doctor_oxford * | 195 |
| 6 | TheAnuragTyagi | 314 | 21 | GreenSwelfares | 187 |
| 7 | OpIndia_com * | 310 | 22 | RealCandaceO * | 183 |
| 8 | RahulGandhi * | 280 | 23 | charliekirk11 | 176 |
| 9 | narendramodi * | 276 | 24 | BernieSanders * | 175 |
| 10 | MrsGandhi * | 262 | 25 | nypost * | 175 |
| 11 | POTUS * | 255 | 26 | MyHandsRatedE | 175 |
| 12 | tarak9999 * | 247 | 27 | Reuters * | 171 |
| 13 | DanPriceSeattle * | 226 | 28 | DSSNewsUpdates * | 167 |
| 14 | BreitbartNews * | 224 | 29 | JamesMelville * | 166 |
| 15 | AdvMamtaSharma | 223 | 30 | AskAnshul * | 163 |

| No. | Account | Out-Degree | No. | Account | Out-Degree |
|---|---|---|---|---|---|
| 1 | CoronaUpdateBot | 21 | 16 | CyberSecurityN8 | 9 |
| 2 | fengmanlou11 | 19 | 17 | DipMond81427857 | 9 |
| 3 | viralvideovlogs | 18 | 18 | Ken34205423 | 9 |
| 4 | HKLongman | 16 | 19 | PhotoLawn | 9 |
| 5 | Covid19Help10 | 15 | 20 | TALI189 | 9 |
| 6 | world_news_eng | 14 | 21 | peterandann | 9 |
| 7 | BotJammu | 13 | 22 | trackntracer | 9 |
| 8 | SLRTBot | 13 | 23 | roadtoserfdom3 | 8 |
| 9 | MiniMooJack | 12 | 24 | PankajC47069041 | 8 |
| 10 | aOraxoSizcr8Dlh | 12 | 25 | AyanAdhikari13 | 8 |
| 11 | KRS_Deshsevak | 11 | 26 | CoronaBot20 | 8 |
| 12 | scouts_uk | 11 | 27 | KRISHANMOHANKR6 | 8 |
| 13 | IRFANNKPCC | 10 | 28 | MKSafdar | 8 |
| 14 | hekhwthktiingh1 | 10 | 29 | MonaSmitte | 8 |
| 15 | B0tSci | 9 | 30 | Rubydawne1 | 8 |

| No. | Account | Betweenness Centrality | No. | Account | Betweenness Centrality |
|---|---|---|---|---|---|
| 1 | Nitin043 | 440.5 | 16 | dev009_sk | 123 |
| 2 | Ansaar_Al1 | 408 | 17 | chrischirp | 111 |
| 3 | yoursurajnaik | 363 | 18 | souravramyani | 111 |
| 4 | SomenMitra3 | 355.5 | 19 | RavinderKapur2 | 104 |
| 5 | Sitansh64621089 | 349.5 | 20 | sonumehrauk | 104 |
| 6 | PankajC47069041 | 322.5 | 21 | gourav_chakr | 99 |
| 7 | Ndlotus1 | 279.5 | 22 | doctor_oxford * | 94 |
| 8 | SPanda4485 | 269 | 23 | RajVB6 | 93 |
| 9 | imChikku_ | 256 | 24 | fascinatorfun | 89 |
| 10 | Magamiilyas | 206.5 | 25 | chinmoyee5 | 83 |
| 11 | Thecongressian | 197.5 | 26 | fekubawa | 81 |
| 12 | RamshettyVishnu | 186 | 27 | ukiswitheu | 80 |
| 13 | Drmandakini3 | 136 | 28 | BalharaAbhijeet | 78 |
| 14 | DrINCsupporter | 126 | 29 | erdocAA | 75 |
| 15 | Jagjit_INC | 125 | 30 | Abhishe32226771 | 75 |
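Several betweenness scores in the table end in .5, which is consistent with unnormalized betweenness: when a pair of nodes is joined by more than one shortest path, each path receives only a fraction of that pair's credit, so a node on one of two equally short paths gains 0.5 from that pair. The sketch below implements Brandes' algorithm for an unweighted directed graph with raw (unnormalized) scores. It assumes the graph is given as an adjacency dictionary; the function name `betweenness` and the four-node graph are hypothetical, and the authors' actual tool is not implied.

```python
from collections import deque


def betweenness(adj):
    """Unnormalized betweenness centrality of an unweighted directed graph,
    computed with Brandes' algorithm. `adj` maps each node to its successors."""
    nodes = set(adj)
    for targets in adj.values():
        nodes.update(targets)
    score = {v: 0.0 for v in nodes}
    for s in nodes:
        # Breadth-first search from s, counting shortest paths (sigma).
        stack = []
        preds = {v: [] for v in nodes}
        sigma = dict.fromkeys(nodes, 0)
        dist = dict.fromkeys(nodes, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in adj.get(v, ()):
                if dist[w] < 0:                # first time w is reached
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:     # v precedes w on a shortest path
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate pair dependencies, farthest nodes first.
        delta = dict.fromkeys(nodes, 0.0)
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    return score


# Hypothetical graph: two equally short paths a -> b -> d and a -> c -> d.
adj = {"a": ["b", "c"], "b": ["d"], "c": ["d"]}
print(sorted(betweenness(adj).items()))
# [('a', 0.0), ('b', 0.5), ('c', 0.5), ('d', 0.0)]
```

Because the pair (a, d) is linked by two shortest paths, b and c each receive half of that pair's credit, which is how half-integer scores such as 440.5 arise in larger networks.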


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weng, Z.; Lin, A. Public Opinion Manipulation on Social Media: Social Network Analysis of Twitter Bots during the COVID-19 Pandemic. Int. J. Environ. Res. Public Health 2022, 19, 16376. https://doi.org/10.3390/ijerph192416376
