A Brief Online Implicit Bias Intervention for School Mental Health Clinicians

Abstract

1. Introduction

1.1. Implicit Bias Interventions

1.2. Current Studies

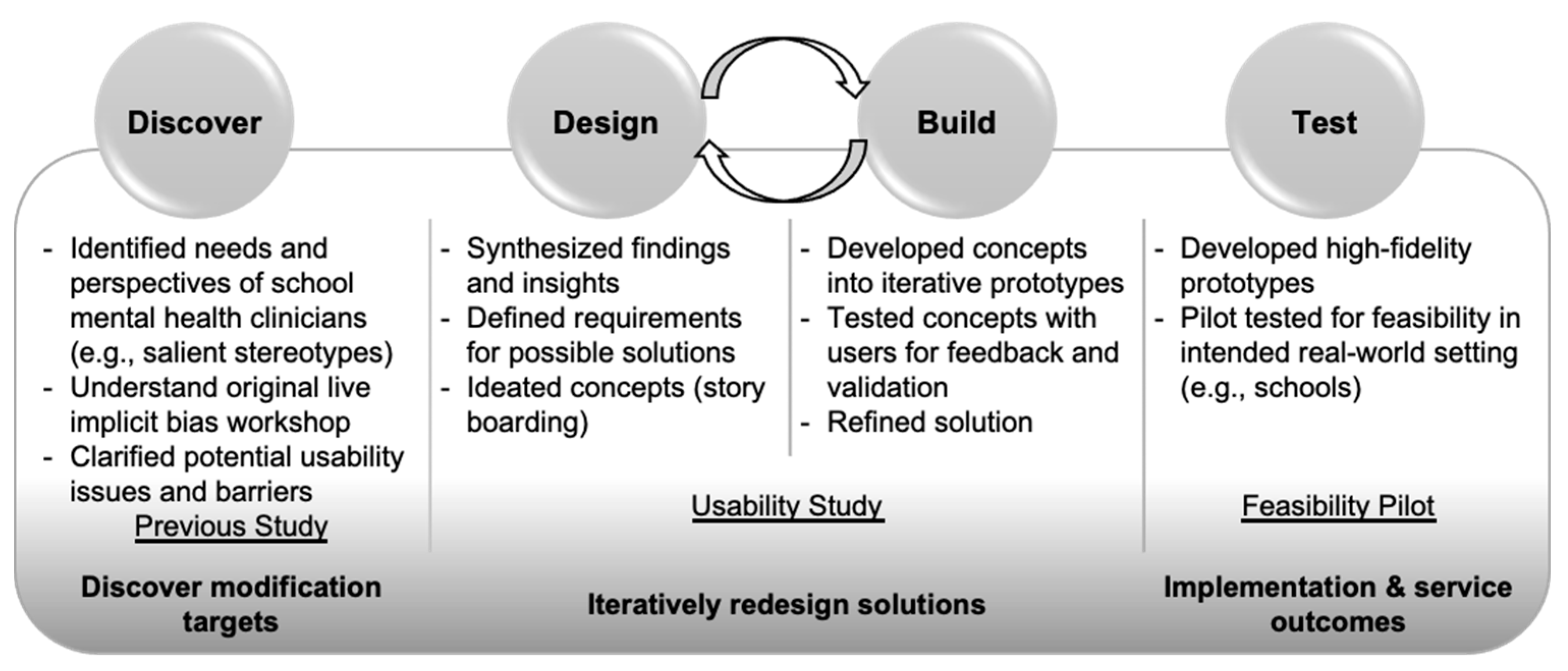

2. Intervention Development Study

2.1. Materials and Methods

2.1.1. Participants

2.1.2. Procedures

2.1.3. Measures

The Intervention Usability Scale (IUS)

Implicit Bias Knowledge Quiz

Concern for Discrimination Scale (CfD)

2.1.4. Data Analysis

2.2. Results

2.2.1. Qualitative Findings

2.2.2. Quantitative Findings

2.2.3. The VIBRANT Intervention

VIBRANT Training Components

1. Learner engagement strategies & introduction (5 min)
   - Review SMH clinicians’ existing relevant skills
   - Highlight relevance of training to clinical practice
   - Introduce prejudice & stereotyping with a personally relevant example: stereotypes of “SMH counselors”
2. Didactic material to normalize implicit bias
   - Evolutionary function, ubiquity (5 min)
   - Automaticity of implicit prejudice & stereotyping (5 min)
   - Photo observation task
   - Stroop task (color naming)
3. Implicit Association Test (7 min)
   - Learner completes Race IAT & receives feedback
   - Addressing the bias blind spot (Pronin et al., 2002) [55]
4. Bias management strategies (case-based learning)
   - Seeking commonality (5 min)
   - Perspective gaining (5 min)
   - Counter-stereotyping & individuation (10 min)
5. Strengthen implementation intentions (3 min)
   - Make public commitment (email colleagues)
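As a quick check on how brief the training is, the durations stated in the component list can be tallied. The sketch below only adds up the times that appear in the list; activities with no stated time (for example, the photo observation and Stroop tasks) are left out, so the total is a lower bound rather than an official running time. The variable names are illustrative.

```python
# Durations in minutes, transcribed from the VIBRANT component list.
# Activities without a stated time are omitted (assumption: they add
# little or are counted within their parent component).
durations_min = {
    "1. Learner engagement & introduction": [5],
    "2. Didactic material": [5, 5],
    "3. Implicit Association Test": [7],
    "4. Bias management strategies": [5, 5, 10],
    "5. Implementation intentions": [3],
}

# Sum the listed times within each component, then across components.
per_component = {name: sum(times) for name, times in durations_min.items()}
total_min = sum(per_component.values())

for name, minutes in per_component.items():
    print(f"{name}: {minutes} min")
print(f"Total listed time: {total_min} min")
```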

3. Feasibility Pilot Study

3.1. Materials and Methods

3.1.1. Participants

3.1.2. Procedures

3.1.3. Measures

Measures of Acceptability, Appropriateness, and Feasibility

Caseload Service Logs

Implicit Association Test (IAT)
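The reference list includes the improved IAT scoring algorithm (Greenwald, Nosek, and Banaji, 2003). As a rough illustration of the idea behind that D score, the sketch below divides the difference in mean response latency between two pairing conditions by the standard deviation of all retained trials. It is a simplified version under stated assumptions: it keeps only the 10,000 ms latency cutoff, skips the other steps of the full algorithm (participant exclusion, error handling, separate practice and test blocks), and is not necessarily the scoring pipeline used in this study. The function name and the latencies are hypothetical.

```python
from statistics import mean, stdev


def iat_d_score(compatible_ms, incompatible_ms, max_latency_ms=10_000):
    """Simplified IAT D score.

    D = (mean incompatible latency - mean compatible latency)
        / SD of all retained trials from both conditions.
    Trials slower than max_latency_ms are dropped before scoring.
    """
    compat = [t for t in compatible_ms if t <= max_latency_ms]
    incompat = [t for t in incompatible_ms if t <= max_latency_ms]
    # The SD is computed over trials from both conditions combined.
    combined_sd = stdev(compat + incompat)
    return (mean(incompat) - mean(compat)) / combined_sd


# Hypothetical latencies in milliseconds, for illustration only.
compatible = [600, 700, 800]
incompatible = [800, 900, 1000]
d = iat_d_score(compatible, incompatible)
print(f"D = {d:.2f}")
```

Which pairing counts as "compatible" is a choice made by the researcher, so the sign of D is only interpretable relative to that choice.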

3.1.4. Data Analysis

Data Validation and Identifying Outliers

Clinician Perception of Usability and Feasibility

Clinicians’ Implicit Bias Knowledge

Clinicians’ Use of De-Biasing Skills and Perceived Rapport with Students

Clinicians’ Implicit Bias

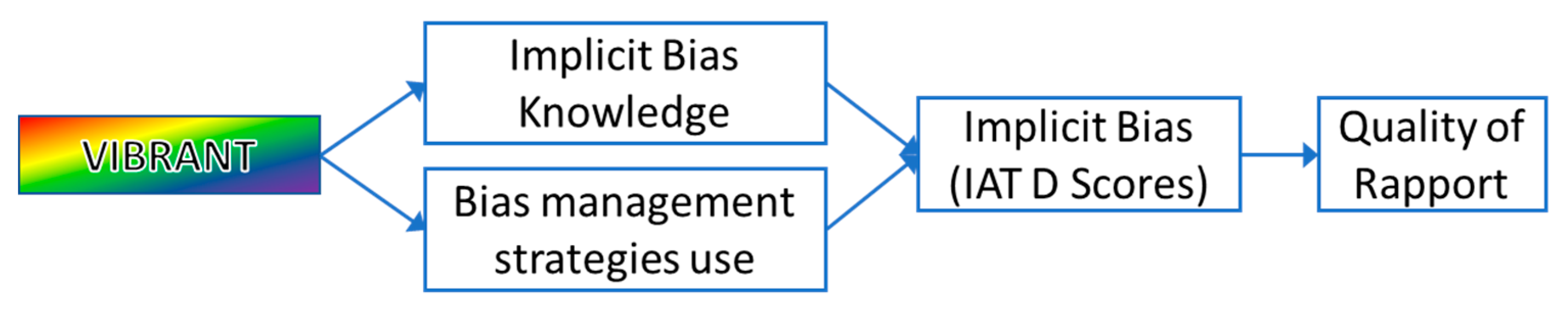

Exploring Mechanistic Relationships

3.2. Results

3.2.1. Usability and Feasibility

3.2.2. Implicit Bias Knowledge

3.2.3. Use of VIBRANT Bias-Management Strategies and Rapport

3.2.4. Growth in Use of De-Biasing Strategies and Rapport over Time

3.2.5. Clinicians’ Implicit Bias

3.2.6. Exploring Mechanistic Relationships

4. Discussion

4.1. Limitations

4.2. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zestcott, C.A.; Blair, I.V.; Stone, J. Examining the Presence, Consequences, and Reduction of Implicit Bias in Health Care: A Narrative Review. Group Process. Intergroup Relat. 2016, 19, 528–542.
2. Ricks, T.N.; Abbyad, C.; Polinard, E. Undoing Racism and Mitigating Bias Among Healthcare Professionals: Lessons Learned During a Systematic Review. J. Racial Ethn. Health Disparities 2021, 1–11.
3. Sabin, J.A.; Greenwald, A.G. The Influence of Implicit Bias on Treatment Recommendations for 4 Common Pediatric Conditions: Pain, Urinary Tract Infection, Attention Deficit Hyperactivity Disorder, and Asthma. Am. J. Public Health 2012, 102, 988–995.
4. Litchfield, I.; Moiemen, N.; Greenfield, S. Barriers to Evidence-Based Treatment of Serious Burns: The Impact of Implicit Bias on Clinician Perceptions of Patient Adherence. J. Burn Care Res. 2020, 41, 1297–1300.
5. Blair, I.V.; Steiner, J.F.; Fairclough, D.L.; Hanratty, R.; Price, D.W.; Hirsh, H.K.; Wright, L.A.; Bronsert, M.; Karimkhani, E.; Magid, D.J.; et al. Clinicians’ Implicit Ethnic/Racial Bias and Perceptions of Care among Black and Latino Patients. Ann. Fam. Med. 2013, 11, 43–52.
6. Black Parker, C.; McCall, W.V.; Spearman-McCarthy, E.V.; Rosenquist, P.; Cortese, N. Clinicians’ Racial Bias Contributing to Disparities in Electroconvulsive Therapy for Patients From Racial-Ethnic Minority Groups. Psychiatr. Serv. 2021, 72, 684–690.
7. Fadus, M.C.; Ginsburg, K.R.; Sobowale, K.; Halliday-Boykins, C.A.; Bryant, B.E.; Gray, K.M.; Squeglia, L.M. Unconscious Bias and the Diagnosis of Disruptive Behavior Disorders and ADHD in African American and Hispanic Youth. Acad. Psychiatry 2020, 44, 95–102.
8. Gopal, D.P.; Chetty, U.; O’Donnell, P.; Gajria, C.; Blackadder-Weinstein, J. Implicit Bias in Healthcare: Clinical Practice, Research and Decision Making. Future Healthc. J. 2021, 8, 40–48.
9. Fiscella, K.; Epstein, R.M.; Griggs, J.J.; Marshall, M.M.; Shields, C.G. Is Physician Implicit Bias Associated with Differences in Care by Patient Race for Metastatic Cancer-Related Pain? PLoS ONE 2021, 16, e0257794.
10. FitzGerald, C.; Hurst, S. Implicit Bias in Healthcare Professionals: A Systematic Review. BMC Med. Ethics 2017, 18, 19.
11. Maina, I.W.; Belton, T.D.; Ginzberg, S.; Singh, A.; Johnson, T.J. A Decade of Studying Implicit Racial/Ethnic Bias in Healthcare Providers Using the Implicit Association Test. Soc. Sci. Med. 2018, 199, 219–229.
12. Hall, W.J.; Chapman, M.V.; Lee, K.M.; Merino, Y.M.; Thomas, T.W.; Payne, B.K.; Eng, E.; Day, S.H.; Coyne-Beasley, T. Implicit Racial/Ethnic Bias Among Health Care Professionals and Its Influence on Health Care Outcomes: A Systematic Review. Am. J. Public Health 2015, 105, e60–e76.
13. Dehon, E.; Weiss, N.; Jones, J.; Faulconer, W.; Hinton, E.; Sterling, S. A Systematic Review of the Impact of Physician Implicit Racial Bias on Clinical Decision Making. Acad. Emerg. Med. 2017, 24, 895–904.
14. Mulchan, S.S.; Wakefield, E.O.; Santos, M. What COVID-19 Teaches Us About Implicit Bias in Pediatric Health Care. J. Pediatr. Psychol. 2021, 46, 138–143.
15. Nosek, B.A.; Smyth, F.L.; Hansen, J.J.; Devos, T.; Lindner, N.M.; Ranganath, K.A.; Smith, C.T.; Olson, K.R.; Chugh, D.; Greenwald, A.G. Pervasiveness and Correlates of Implicit Attitudes and Stereotypes. Eur. Rev. Soc. Psychol. 2007, 18, 36–88.
16. Nosek, B.A. Moderators of the Relationship between Implicit and Explicit Evaluation. J. Exp. Psychol. Gen. 2005, 134, 565.
17. Harris, R.; Cormack, D.; Stanley, J.; Curtis, E.; Jones, R.; Lacey, C. Ethnic Bias and Clinical Decision-Making among New Zealand Medical Students: An Observational Study. BMC Med. Educ. 2018, 18, 18.
18. Johnson, T.J.; Winger, D.G.; Hickey, R.W.; Switzer, G.E.; Miller, E.; Nguyen, M.B.; Saladino, R.A.; Hausmann, L.R. Comparison of Physician Implicit Racial Bias toward Adults versus Children. Acad. Pediatr. 2017, 17, 120–126.
19. Puumala, S.E.; Burgess, K.M.; Kharbanda, A.B.; Zook, H.G.; Castille, D.M.; Pickner, W.J.; Payne, N.R. The Role of Bias by Emergency Department Providers in Care for American Indian Children. Med. Care 2016, 54, 562.
20. Tsai, J.W.; Kesselheim, J.C. Addressing Implicit Bias in Pediatric Hematology-Oncology. Pediatr. Blood Cancer 2020, 67, e28204.
21. Sabin, J.A.; Rivara, F.P.; Greenwald, A.G. Physician Implicit Attitudes and Stereotypes about Race and Quality of Medical Care. Med. Care 2008, 46, 678–685.
22. Amodio, D.M.; Swencionis, J.K. Proactive Control of Implicit Bias: A Theoretical Model and Implications for Behavior Change. J. Pers. Soc. Psychol. 2018, 115, 255–275.
23. Whitford, D.K.; Emerson, A.M. Empathy Intervention to Reduce Implicit Bias in Pre-Service Teachers. Psychol. Rep. 2019, 122, 670–688.
24. Chapman, M.V.; Hall, W.J.; Lee, K.; Colby, R.; Coyne-Beasley, T.; Day, S.; Eng, E.; Lightfoot, A.F.; Merino, Y.; Simán, F.M.; et al. Making a Difference in Medical Trainees’ Attitudes toward Latino Patients: A Pilot Study of an Intervention to Modify Implicit and Explicit Attitudes. Soc. Sci. Med. 2018, 199, 202–208.
25. Lai, C.K.; Marini, M.; Lehr, S.A.; Cerruti, C.; Shin, J.-E.L.; Joy-Gaba, J.A.; Ho, A.K.; Teachman, B.A.; Wojcik, S.P.; Koleva, S.P. Reducing Implicit Racial Preferences: I. A Comparative Investigation of 17 Interventions. J. Exp. Psychol. Gen. 2014, 143, 1765.
26. Blair, I.V.; Ma, J.E.; Lenton, A.P. Imagining Stereotypes Away: The Moderation of Implicit Stereotypes through Mental Imagery. J. Pers. Soc. Psychol. 2001, 81, 828.
27. Dasgupta, N.; Greenwald, A.G. On the Malleability of Automatic Attitudes: Combating Automatic Prejudice with Images of Admired and Disliked Individuals. J. Pers. Soc. Psychol. 2001, 81, 800.
28. Lai, C.K.; Skinner, A.L.; Cooley, E.; Murrar, S.; Brauer, M.; Devos, T.; Calanchini, J.; Xiao, Y.J.; Pedram, C.; Marshburn, C.K. Reducing Implicit Racial Preferences: II. Intervention Effectiveness across Time. J. Exp. Psychol. Gen. 2016, 145, 1001.
29. Forscher, P.S.; Lai, C.K.; Axt, J.R.; Ebersole, C.R.; Herman, M.; Devine, P.G.; Nosek, B.A. A Meta-Analysis of Procedures to Change Implicit Measures. J. Pers. Soc. Psychol. 2019, 117, 522–559.
30. Carnes, M.; Devine, P.G.; Baier Manwell, L.; Byars-Winston, A.; Fine, E.; Ford, C.E.; Forscher, P.; Isaac, C.; Kaatz, A.; Magua, W.; et al. The Effect of an Intervention to Break the Gender Bias Habit for Faculty at One Institution: A Cluster Randomized, Controlled Trial. Acad. Med. 2015, 90, 221–230.
31. Stone, J.; Moskowitz, G.B.; Zestcott, C.A.; Wolsiefer, K.J. Testing Active Learning Workshops for Reducing Implicit Stereotyping of Hispanics by Majority and Minority Group Medical Students. Stigma Health 2020, 5, 94–103.
32. Devine, P.G.; Forscher, P.S.; Austin, A.J.; Cox, W.T.L. Long-Term Reduction in Implicit Race Bias: A Prejudice Habit-Breaking Intervention. J. Exp. Soc. Psychol. 2012, 48, 1267–1278.
33. Devine, P.G.; Forscher, P.S.; Cox, W.T.L.; Kaatz, A.; Sheridan, J.; Carnes, M. A Gender Bias Habit-Breaking Intervention Led to Increased Hiring of Female Faculty in STEMM Departments. J. Exp. Soc. Psychol. 2017, 73, 211–215.
34. Drwecki, B.B.; Moore, C.F.; Ward, S.E.; Prkachin, K.M. Reducing Racial Disparities in Pain Treatment: The Role of Empathy and Perspective-Taking. Pain 2011, 152, 1001–1006.
35. Blatt, B.; LeLacheur, S.F.; Galinsky, A.D.; Simmens, S.J.; Greenberg, L. Does Perspective-Taking Increase Patient Satisfaction in Medical Encounters? Acad. Med. 2010, 85, 1445–1452.
36. Castillo, L.G.; Brossart, D.F.; Reyes, C.J.; Conoley, C.W.; Phoummarath, M.J. The Influence of Multicultural Training on Perceived Multicultural Counseling Competencies and Implicit Racial Prejudice. J. Multicult. Couns. Dev. 2007, 35, 243–255.
37. Boysen, G.A.; Vogel, D.L. The Relationship between Level of Training, Implicit Bias, and Multicultural Competency among Counselor Trainees. Train. Educ. Prof. Psychol. 2008, 2, 103.
38. Katz, A.D.; Hoyt, W.T. The Influence of Multicultural Counseling Competence and Anti-Black Prejudice on Therapists’ Outcome Expectancies. J. Couns. Psychol. 2014, 61, 299.
39. Santamaría-García, H.; Baez, S.; García, A.M.; Flichtentrei, D.; Prats, M.; Mastandueno, R.; Sigman, M.; Matallana, D.; Cetkovich, M.; Ibáñez, A. Empathy for Others’ Suffering and Its Mediators in Mental Health Professionals. Sci. Rep. 2017, 7, 1–13.
40. Merino, Y.; Adams, L.; Hall, W.J. Implicit Bias and Mental Health Professionals: Priorities and Directions for Research. Psychiatr. Serv. 2018, 69, 723–725.
41. Duong, M.T.; Bruns, E.J.; Lee, K.; Cox, S.; Coifman, J.; Mayworm, A.; Lyon, A.R. Rates of Mental Health Service Utilization by Children and Adolescents in Schools and Other Common Service Settings: A Systematic Review and Meta-Analysis. Adm. Policy Ment. Health Ment. Health Serv. Res. 2020, 48, 420–439.
42. Lyon, A.R.; Munson, S.A.; Renn, B.N.; Atkins, D.C.; Pullmann, M.D.; Friedman, E.; Areán, P.A. Use of Human-Centered Design to Improve Implementation of Evidence-Based Psychotherapies in Low-Resource Communities: Protocol for Studies Applying a Framework to Assess Usability. JMIR Res. Protoc. 2019, 8, e14990.
43. Turner, C.; Lewis, J.; Nielsen, J. Determining Usability Test Sample Size. Int. Encycl. Ergon. Hum. Factors 2006, 3, 3084–3088.
44. Tullis, T.; Albert, B. Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics, 2nd ed.; Interactive Technologies; Morgan Kaufmann Publishers Inc.: Burlington, MA, USA; Elsevier Science & Technology: Amsterdam, The Netherlands, 2013; ISBN 978-0-12-415781-1.
45. Nielsen, J. Iterative User-Interface Design. Computer 1993, 26, 32–41.
46. Hwang, W.; Salvendy, G. Number of People Required for Usability Evaluation: The 10 ± 2 Rule. Commun. ACM 2010, 53, 130–133.
47. Lyon, A.R.; Liu, F.F.; Connors, E.H.; King, K.M.; Coifman, J.I.; McRee, E.; Cook, H.; Ludwig, K.A.; Dorsey, S.; Law, A.; et al. Is More Consultation Better? Initial Trial Examining the Effects of Consultation Dose Following Online Training for Measurement-Based Care on Implementation Mechanisms and Outcomes. under review.
48. Hoge, M.A.; Stuart, G.W.; Morris, J.A.; Huey, L.Y.; Flaherty, M.T.; Paris, M., Jr. Behavioral Health Workforce Development in the United States. In Substance Abuse and Addiction: Breakthroughs in Research and Practice; IGI Global: Hershey, PA, USA, 2019; pp. 433–455.
49. Ludwig, K. The Brief Intervention Strategy for School Clinicians (BRISC): An Overview. Presented at the 2019 Northwest PBIS Conference, Portland, OR, USA, 28 October 2019.
50. Lyon, A.R.; Pullmann, M.D.; Jacobson, J.; Osterhage, K.; Al Achkar, M.; Renn, B.N.; Munson, S.A.; Areán, P.A. Assessing the Usability of Complex Psychosocial Interventions: The Intervention Usability Scale. Implement. Res. Pract. 2021, 2, 2633489520987828.
51. Brooke, J. SUS: A “Quick and Dirty” Usability Scale. In Usability Evaluation in Industry; CRC Press: London, UK, 1996; pp. 207–212. ISBN 978-0-429-15701-1.
52. Sauro, J. A Practical Guide to the System Usability Scale: Background, Benchmarks & Best Practices; Measuring Usability LLC: Denver, CO, USA, 2011.
53. Gutierrez, B.; Kaatz, A.; Chu, S.; Ramirez, D.; Samson-Samuel, C.; Carnes, M. “Fair Play”: A Videogame Designed to Address Implicit Race Bias through Active Perspective Taking. Games Health J. 2014, 3, 371–378.
54. Stone, J.; Moskowitz, G.B. Non-Conscious Bias in Medical Decision Making: What Can Be Done to Reduce It? Med. Educ. 2011, 45, 768–776.
55. Pronin, E.; Lin, D.Y.; Ross, L. The Bias Blind Spot: Perceptions of Bias in Self versus Others. Personal. Soc. Psychol. Bull. 2002, 28, 369–381.
56. Sim, J. Should Treatment Effects Be Estimated in Pilot and Feasibility Studies? Pilot Feasibility Stud. 2019, 5, 107.
57. Pearson, N.; Naylor, P.-J.; Ashe, M.C.; Fernandez, M.; Yoong, S.L.; Wolfenden, L. Guidance for Conducting Feasibility and Pilot Studies for Implementation Trials. Pilot Feasibility Stud. 2020, 6, 167.
58. Bruns, E.J.; Pullmann, M.D.; Nicodimos, S.; Lyon, A.R.; Ludwig, K.; Namkung, N.; McCauley, E. Pilot Test of an Engagement, Triage, and Brief Intervention Strategy for School Mental Health. School Ment. Health 2019, 11, 148–162.
59. Bruns, E.; Lee, K.; Davis, C.; Pullmann, M.; Ludwig, K. Effectiveness of a Brief School-Based Mental Health Strategy for High School Students: Results of a Randomized Study. Prev. Sci. Forthcoming.
60. Connors, E.; Lawson, G.; Wheatley-Rowe, D.; Hoover, S. Exploration, Preparation, and Implementation of Standardized Assessment in a Multi-Agency School Behavioral Health Network. Adm. Policy Ment. Health Ment. Health Serv. Res. 2021, 48, 464–481.
61. Weiner, B.J.; Lewis, C.C.; Stanick, C.; Powell, B.J.; Dorsey, C.N.; Clary, A.S.; Boynton, M.H.; Halko, H. Psychometric Assessment of Three Newly Developed Implementation Outcome Measures. Implement. Sci. 2017, 12, 108.
62. Greenwald, A.G. The Implicit Association Test at Age 20: What Is Known and What Is Not Known about Implicit Bias; University of Washington: Seattle, WA, USA, 2020.
63. Bar-Anan, Y.; Nosek, B.A. A Comparative Investigation of Seven Indirect Attitude Measures. Behav. Res. Methods 2014, 46, 668–688.
64. Greenwald, A.G.; Nosek, B.A.; Banaji, M.R. Understanding and Using the Implicit Association Test: I. An Improved Scoring Algorithm. J. Pers. Soc. Psychol. 2003, 85, 197.
65. Liu, F.F.; McRee, E.; Coifman, J.; Stone, J.; Lai, C.K.; Lyon, A.R. School Mental Health Professionals’ Stereotype Knowledge and Implicit Bias toward Black and Latinx Youth. under review.
66. Miller, J. Reaction Time Analysis with Outlier Exclusion: Bias Varies with Sample Size. Q. J. Exp. Psychol. 1991, 43, 907–912.
67. Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.
68. IBM Corp. IBM SPSS Statistics for Windows; IBM Corp.: Armonk, NY, USA, 2021.
69. Hedges, L.V. Distribution Theory for Glass’s Estimator of Effect Size and Related Estimators. J. Educ. Behav. Stat. 1981, 6, 107–128.
70. McCambridge, J.; Witton, J.; Elbourne, D.R. Systematic Review of the Hawthorne Effect: New Concepts Are Needed to Study Research Participation Effects. J. Clin. Epidemiol. 2014, 67, 267–277.
71. Thabane, L.; Mbuagbaw, L.; Zhang, S.; Samaan, Z.; Marcucci, M.; Ye, C.; Thabane, M.; Giangregorio, L.; Dennis, B.; Kosa, D. A Tutorial on Sensitivity Analyses in Clinical Trials: The What, Why, When and How. BMC Med. Res. Methodol. 2013, 13, 92.

| VIBRANT Data Collection | T1 (Baseline) | T2 (Post) | T3 (Week 2) | T4 (Week 6) | T5 (Week 14) | T6 (Week 24) |

|---|---|---|---|---|---|---|

| Clinician Demographics Form | X | |||||

| Intervention Usability Scale | x | |||||

| Acceptability, Appropriateness, Feasibility Measures | x | |||||

| Qualitative Feedback | x | x | ||||

| Implicit Bias Knowledge Quiz | X | x | x | x | ||

| Implicit Association Test | X | x | x | x | x | |

| Clinician Caseload Service Log | | | x | x | x | x |

| | Overall Sample | Black/Latinx | Non-Black/Latinx |
|---|---|---|---|
| Strategies | | | |
| Weeks Post-Training | M (SD) | M (SD) | M (SD) |
| 2 weeks | 1.57 (0.79) | 1.55 (0.91) | 1.67 (0.62) |
| 6 weeks | 1.69 (0.92) | 1.71 (1.01) | 1.75 (0.81) |
| 14 weeks | 2.00 (0.84) | 2.05 (0.73) | 2.06 (0.92) |
| 24 weeks | 1.74 (0.83) | 1.80 (0.80) | 1.72 (0.85) |
| | B (SE) | B (SE) | B (SE) |
| Intercept | 1.66 (0.19) | 1.64 (0.18) | 1.64 (0.18) |
| Slope | 0.04 (0.01) * | 0.04 (0.01) * | 0.04 (0.01) * |
| Rapport | | | |
| Weeks Post-Training | M (SD) | M (SD) | M (SD) |
| 2 weeks | 7.36 (1.68) | 7.41 (1.71) | 7.38 (1.51) |
| 6 weeks | 7.44 (1.67) | 7.34 (1.77) | 7.63 (1.58) |
| 14 weeks | 7.69 (1.57) | 7.97 (1.53) | 7.29 (1.58) |
| 24 weeks | 7.76 (1.46) | 8.00 (1.63) | 7.55 (1.52) |
| | B (SE) | B (SE) | B (SE) |
| Intercept | 7.55 (0.37) | 7.59 (0.38) | 7.59 (0.38) |
| Slope | 0.02 (0.01) * | 0.03 (0.01) *** | 0.01 (0.01) |

| Implicit Association Test | Week 2 | Week 14 | Week 24 |
|---|---|---|---|
| Strategy Use | | | |
| Black/White: Obedient/Disobedient | −0.52732 | −0.35456 | −0.14593 |
| Black/White: Good/Bad | −0.03476 | 0.252209 | −0.40286 |
| Latinx/White: Academic Failure/Success | −0.48102 | −0.45801 | −0.38922 |
| Latinx/White: Good/Bad | −0.35073 | 0.046415 | 0.158649 |
| Rapport with Reference Group | | | |
| Black/White: Obedient/Disobedient | −0.68675 | −0.76049 | −0.27601 |
| Black/White: Good/Bad | −0.03455 | −0.36688 | −0.12346 |
| Latinx/White: Academic Failure/Success | −0.17831 | −0.52114 | −0.66884 |
| Latinx/White: Good/Bad | −0.19024 | −0.20642 | −0.46028 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, F.F.; Coifman, J.; McRee, E.; Stone, J.; Law, A.; Gaias, L.; Reyes, R.; Lai, C.K.; Blair, I.V.; Yu, C.-l.; et al. A Brief Online Implicit Bias Intervention for School Mental Health Clinicians. Int. J. Environ. Res. Public Health 2022, 19, 679. https://doi.org/10.3390/ijerph19020679


Liu, F. F., Coifman, J., McRee, E., Stone, J., Law, A., Gaias, L., Reyes, R., Lai, C. K., Blair, I. V., Yu, C.-l., Cook, H., & Lyon, A. R. (2022). A Brief Online Implicit Bias Intervention for School Mental Health Clinicians. International Journal of Environmental Research and Public Health, 19(2), 679. https://doi.org/10.3390/ijerph19020679