Abstract

Pneumoconiosis is a group of occupational lung diseases induced by mineral dust inhalation and subsequent lung tissue reactions. It can eventually cause irreparable lung damage, as well as gradual and permanent physical impairments. It has affected millions of workers in hazardous industries throughout the world, and it is a leading cause of occupational death. Early pneumoconiosis is difficult to diagnose because of the low sensitivity of chest radiographs, the wide variation in interpretation between and within readers, and the scarcity of B-readers. In recent years, deep learning algorithms have been extremely successful at classifying and localising abnormalities in medical images. In this study, we propose an ensemble learning approach to improve pneumoconiosis detection in chest X-rays (CXRs) using nine machine learning classifiers and multi-dimensional deep features extracted using the CheXNet-121 architecture. Eight evaluation metrics were used for each high-level feature set of the associated cross-validation datasets in order to compare the ensemble performance with state-of-the-art techniques from the literature that used the same cross-validation datasets. Integrated ensemble learning exhibits promising results (92.68% accuracy, 85.86% Matthews correlation coefficient (MCC), and 0.9302 area under the precision–recall (PR) curve) compared to individual CheXNet-121 and other state-of-the-art techniques. Finally, Grad-CAM was used to visualise the learned behaviour of individual dense blocks within CheXNet-121 and their ensembles in the three colour channels of CXRs. We compared the Grad-CAM-indicated ROI to the ground-truth ROI using the intersection over union (IOU) and average-precision (AP) values for each classifier and their ensemble. When Grad-CAM was visualised within the blue channel, the average IOU exceeded 90% for pneumoconiosis detection in chest radiographs.

1. Introduction

Computer-aided diagnosis (CADx) systems, which have become increasingly popular in medical imaging, reduce both the dependence on clinical expertise and observer variability. In the last few years, data-driven deep learning (DL) algorithms based on deep convolutional neural networks (CNNs) have performed well for chest X-ray (CXR) screening. However, due to the low prevalence of some diseases and restrictions on sharing patient data, transfer learning of CNN models has become a popular technique, in which CNN models pre-trained in one application domain provide a foundation for new CNN models in a different application domain. Transfer learning improves a model’s performance if the model has been trained on datasets from a domain related to that of the problem being solved [1]. Therefore, if the goal is to detect a disease from medical images, a model that has already gained knowledge from a similar domain should be selected. This can reduce the learning time and improve the performance of a CNN trained on a small dataset in the new application domain [2]. To combat the issue of limited data, feature extraction can be performed via transfer learning of deep learning models, with the resulting features being fed into new classifiers. In many medical image classification studies [3,4,5,6,7], deep learning models were used as feature extractors, and the extracted features were fed to different machine learning classifiers, such as Naive Bayes [8], Multilayer Perceptron (MLP) [9], Support Vector Machines (SVM) [10], K-Nearest Neighbours (KNN) [11], Random Forest (RF) [12], and Decision Trees (DT) [13].

Interpreting deep learning models is critical for establishing trust and demonstrating their ability to integrate with computer-aided detection. In recent years, the interpretation and visualisation of deep CNN features has grown in popularity for understanding the modelling and behaviour of the features learned across the trained model’s convolutional layers [14,15]. It was observed that the convolutional layers of a CNN retain spatial information, depending on the variance of the input image. This spatial information may differ with the depth of the convolutional layer or block within the CNN structure, and it is lost in the fully-connected layers. As a result, the final convolutional layers or blocks represent the best understanding of the information among all layers. The neurons in these layers search for pertinent information on the specific classes of the image. In [15], a visualisation strategy called class activation mapping (CAM) was presented to locate image regions of interest (ROIs) that are relevant to an image category. However, the use of this strategy is limited because it only works with particular deep learning architectures. Subsequently, a more generally applicable CAM technique based on gradient-weighted class activation mapping, referred to as Grad-CAM [16], was proposed; it uses gradient information from the convolutional layer to assign importance values to each neuron in the ROI. Medical image processing researchers have also applied Grad-CAM to explain the disease predictions of CNN models and to interpret the representations learned from CXRs [17,18,19,20,21,22]. The concept behind Grad-CAM is to compute the gradient of the class score with respect to the CNN feature maps. It highlights the specific ROIs based on the greatest gradient score.
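For reference, the Grad-CAM computation described above can be written compactly. The notation follows the usual statement of Grad-CAM [16]; the symbols are introduced here for illustration and do not appear elsewhere in this paper: $A^k$ is the $k$-th feature map of the chosen convolutional layer, $y^c$ is the score of class $c$, $Z$ is the number of spatial positions in a feature map, and $\alpha_k^c$ is the resulting importance weight of feature map $k$ for class $c$.

```latex
\alpha_k^c = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^c}{\partial A_{ij}^k},
\qquad
L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\!\left( \sum_{k} \alpha_k^c \, A^k \right)
```

The ReLU keeps only the regions that contribute positively to the class score, which is why the resulting map highlights the ROIs with the greatest gradient-weighted activation.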

In this paper, we have proposed an ensemble technique of multidimensional features learned from CNN to detect and visualise pneumoconiosis disease in CXRs. Our list of contributions is summarised below:

- I. We have utilised posterior–anterior (PA) CXR databases compiled by Coal Services Health NSW, St. Vincent’s Hospital Sydney, Wesley Medical Imaging Queensland, and the International Labour Organization (ILO).

- II. We have applied an efficient CNN architecture, CheXNet-121, to learn and extract multidimensional features from three different folds of the database.

- III. Individually for each fold, four different sets of multidimensional features (F1024, F512, F256, and F128) were extracted by using the highest-level dense block of the CheXNet-121 architecture.

- IV. In order to detect pneumoconiosis, the extracted features were used as input for nine traditional machine learning classifiers, namely SVM-SF (SVM with sigmoid function kernel), SVM-RBF (SVM with radial basis function kernel), SVM-PF (SVM with polynomial function kernel), Gaussian Naive Bayes (GNB), Multi-layer Perceptron (MLP), Radius Neighbours (RN), K-Nearest Neighbours (KNN), Decision Trees (DTs), and Extra Decision Trees (EDTs).

- V. To determine the optimal prediction label, an ensemble of the nine decisions was made using the majority voting (MVOT) system.

- VI. Ensemble learning performance on each dataset associated with the four feature sets was assessed using eight metrics: TPR (true positive rate or recall or sensitivity), TNR (true negative rate or specificity), precision, FPR (false positive rate), FNR (false negative rate), F1-Score, ACC (accuracy), and MCC (Matthews correlation coefficient).

- VII. To compare the efficiency of the classifiers, AUC-PR (area under the precision–recall curve) and AP (average precision) values were calculated.

- VIII. Grad-CAM was applied to produce a coarse localisation map highlighting the most important ROIs in the lung for predicting the disease of coal workers.

- IX. Additionally, the Grad-CAM localisation outputs on the RGB image were split into red, green, and blue channels, where each colour specified clearer discriminative ROIs.

- X. Finally, we have compared the ROI highlighted by Grad-CAM with the ground-truth ROI based on the intersection over union (IOU) and average-precision (AP) values of each classifier and their ensemble.

2. Research Background

This section provides context for this study, including previous studies and findings for pneumoconiosis classification on the same dataset using classical, traditional machine learning, and deep learning methods. Furthermore, Section 2.2 summarises the various machine learning classifiers used in this study.

2.1. Previous Study

Radiological abnormalities appear as an increase or decrease in lung density on chest X-rays. Pulmonary opacities are dense abnormalities on chest X-rays and include consolidation, interstitial opacities, and atelectasis. Pneumoconiosis appears as interstitial pulmonary opacities [23]. The International Labour Organization (ILO) divides pneumoconiosis abnormalities into two types: parenchymal and pleural. Small opacities (round or irregular) of 1.5 mm diameter (round) or 1.5 mm width (irregular) or less than or equal to 50 mm in size indicate parenchymal abnormalities [24]. In pleural abnormalities, the CXR shows angle obliteration and diffuse thickening along the chest wall [25]. Previously, pneumoconiosis was classified using classical, traditional machine learning, and deep learning methods.

In classical methods, the abundance of small round opacities and ILO extent properties were used to distinguish normal from abnormal lungs [26,27,28,29,30,31,32,33,34,35]. Backpropagation neural networks were used to determine the shape and size of round opacities in region of interest (ROI) images [36,37,38], and the abnormalities were classified and compared to the standard ILO opacity measurement. On the same dataset that was used in this study, this method detected pneumoconiosis with an accuracy of 83.0% [39].

Handcrafted feature extraction or selection were used in traditional machine learning for pneumoconiosis detection. Handcrafted features like texture [30,32] were extracted from the left–right lung zones [40,41,42,43]. Following feature selection, they were fed into various machine learning classifiers, including support vector machines (SVM) [40,41,43,44,45,46,47,48,49,50,51], decision trees (DT) [47,48], random trees (RT) [44,49,50,51], artificial neural networks (ANNs) [52,53,54], K-nearest neighbours (KNN) [55], self-organizing map (SOM) [55], backpropagation (BP), the radial basis function (RBF) neural networks (NN) [44,49,50,51,55,56], and Ensemble classifier [41,43,48]. Among the classifiers, SVM had the best overall detection accuracy, with a 73.17 percent success rate when using the same dataset as this study.

Recent advances in deep learning have been made possible by high-dimensional data representation [57,58]. In medical image processing [59], deep CNNs outperform humans in detecting cancer markers in blood and skin [60,61,62], malaria in blood cells [63], and respiratory diseases in chest X-rays [39,64,65,66,67,68,69,70,71,72].

On the same dataset, we have used different deep learning approaches. We first implemented convolutional neural networks (CNN) with and without transfer learning, using the base models, DenseNet-121 [19] and CheXNet-121 [18]. Furthermore, with an overall accuracy of 90.24 percent, we were able to develop a cascade model that outperformed others in detecting pneumoconiosis. Prior research using the same dataset revealed that the pre-trained deep learning model, CheXNet-121, outperformed classical and traditional approaches.

2.2. Machine Learning Classifiers

In machine learning, a support vector machine (SVM) can be used as either a linear or a non-linear classifier. In the non-linear case, an SVM can address binary classification problems with different kernel functions while maximising the margin between the two classes. Sigmoid, radial basis, and polynomial functions are three popular kernel functions for SVM. SVM-SF (sigmoid function) uses the hyperbolic tangent function as its kernel; SVM-RBF (radial basis function) uses an exponential function of the Euclidean distance between feature vectors; SVM-PF (polynomial function) measures the similarity of vectors through a polynomial of their inner product, which can improve the learning capacity of the classifier [73,74].
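As an illustration of the three kernel functions named above, the following minimal sketch writes them out in their common textbook forms. The function names and the default values of `gamma`, `coef0`, and `degree` are illustrative assumptions, not the settings used in this study.

```python
import math


def dot(x, y):
    """Inner product of two equal-length feature vectors."""
    return sum(a * b for a, b in zip(x, y))


def sigmoid_kernel(x, y, gamma=0.5, coef0=0.0):
    """Sigmoid kernel: tanh(gamma * <x, y> + coef0)."""
    return math.tanh(gamma * dot(x, y) + coef0)


def rbf_kernel(x, y, gamma=0.5):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


def polynomial_kernel(x, y, gamma=0.5, coef0=1.0, degree=3):
    """Polynomial kernel: (gamma * <x, y> + coef0) ** degree."""
    return (gamma * dot(x, y) + coef0) ** degree
```

In each case the kernel value acts as a similarity score between two feature vectors; the RBF kernel, for example, equals 1 for identical vectors and decays towards 0 as they move apart.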

In supervised learning, Gaussian Naïve Bayes (GNB), a simple classification algorithm based on Bayes’ theorem, can be trained to work with small sets of feature vectors [74]. In a multi-layer perceptron (MLP), the output of each layer is the input to the following layer, and a non-linear activation function is used to predict the class probabilities of unknown vectors [73]. An MLP can classify datasets that are not linearly separable. Both the radius neighbours (RN) and the k-nearest neighbours (KNN) classifiers operate on distances in the feature vector space. RN classifies a query vector by voting among all training vectors that lie within a fixed distance of it; this fixed distance is the “radius of neighbours”. KNN instead votes among the k training vectors nearest to the query in the same vector space [75]. In a problem with many variables, classification becomes more difficult due to the complexity of the feature patterns. In this case, decision trees (DTs) can be used, in which the model splits on selected features so that the branches of the tree represent feature conditions and the leaves represent class labels. Because of the complexity of the features, a single DT sometimes overfits the training dataset, while extra decision trees (EDTs) reduce this overfitting and can improve the prediction probabilities [76]. Machine learning classifiers have been shown to be extremely effective in a wide range of real-world applications, including medical image analysis [77,78,79,80]. In recent years, due to the low prevalence of some diseases and restrictions on the sharing of patient data, the machine learning classifiers summarised above have also been combined with CNN features to great effect [3,4,5,6,7].
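The difference between the two neighbour-based classifiers can be made concrete with a small sketch. It is an illustrative pure-Python version under simple assumptions (Euclidean distance, unweighted votes); the function names and the default `k` and `radius` are hypothetical and are not the tuned values of this study.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train, query, k=3):
    """Vote among the k training vectors closest to the query.

    `train` is a list of (vector, label) pairs.
    """
    nearest = sorted(train, key=lambda item: euclidean(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def radius_predict(train, query, radius=1.5):
    """Vote among all training vectors within `radius` of the query.

    Returns None when no training vector falls inside the radius.
    """
    inside = [label for vec, label in train if euclidean(vec, query) <= radius]
    if not inside:
        return None
    return Counter(inside).most_common(1)[0][0]


# Toy two-class training set: label 0 near the origin, label 1 near (5, 5).
train = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((5, 5), 1), ((5, 6), 1)]
```

KNN always returns a label because it always finds k neighbours, whereas the radius-based rule can find no neighbours at all for an outlying query, which is one reason the radius needs tuning to the scale of the feature set.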

3. Datasets and Methods

The first part of this section discusses our dataset and how it was processed using randomised cross-validation for the proposed ensemble technique. The rest of the section describes the details of the detection and visualisation techniques implemented in this research.

3.1. Datasets

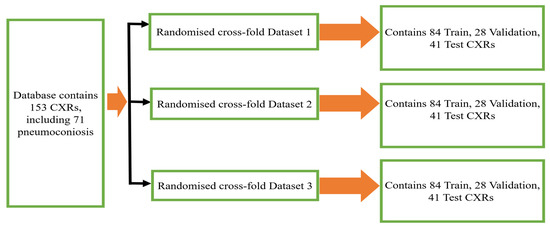

We have made use of the posterior–anterior (PA) CXR databases that were accumulated by Coal Services Health NSW, St. Vincent’s Hospital Sydney, Wesley Medical Imaging Queensland, and the ILO. The database contained a total of 153 images, including 71 pneumoconiosis CXRs. To keep the training data balanced, 112 X-rays (56 normal and 56 pneumoconiosis) were used for training and 41 X-rays (26 normal and 15 pneumoconiosis) were used for testing. A total of 25% of the training data was kept as a validation set in order to select the best model weights based on validation performance. We repeated the randomised selection three times to divide our total dataset into three folds, namely randomised cross-fold Dataset 1, Dataset 2, and Dataset 3, as shown in Figure 1.

Figure 1.

Three randomized cross-fold datasets of original database.
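The split sizes described above can be reproduced with a short sketch. The image identifiers, the seeds, and the function name `make_fold` are hypothetical, and the per-class (stratified) choice of the validation images is an assumption; the text only states that 25% of the training data was held out.

```python
import random


def make_fold(normal_ids, pneumo_ids, seed, n_train_per_class=56, val_fraction=0.25):
    """Randomly split image ids into train, validation, and test lists.

    Each entry of the returned lists is an (image_id, label) pair,
    with label 0 for normal and 1 for pneumoconiosis.
    """
    rng = random.Random(seed)
    normal, pneumo = list(normal_ids), list(pneumo_ids)
    rng.shuffle(normal)
    rng.shuffle(pneumo)

    # Assumption: the validation images are taken equally from both classes.
    n_val = int(n_train_per_class * val_fraction)
    fold = {"train": [], "val": [], "test": []}
    for ids, label in ((normal, 0), (pneumo, 1)):
        train_val, test = ids[:n_train_per_class], ids[n_train_per_class:]
        fold["val"] += [(i, label) for i in train_val[:n_val]]
        fold["train"] += [(i, label) for i in train_val[n_val:]]
        fold["test"] += [(i, label) for i in test]
    return fold


# 153 images in total: 82 normal and 71 pneumoconiosis (placeholder ids).
normal_ids = range(82)
pneumo_ids = range(82, 153)
folds = [make_fold(normal_ids, pneumo_ids, seed) for seed in (1, 2, 3)]
```

With these counts, every fold has 84 training, 28 validation, and 41 test images, and the test set keeps the imbalanced 26 normal to 15 pneumoconiosis ratio.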

3.2. Method

Gao Huang et al. developed DenseNet-121, a CNN with dense connections between layers that was trained on the ImageNet database of 1000 classes, in 2017 [19]. These connections are organised into four dense blocks, within which the layers are joined in such a way that their output sizes match. Rajpurkar et al. transferred DenseNet-121’s knowledge from the ImageNet domain to the chest X-ray domain and released the hybrid CheXNet-121 pre-trained model [18]. This CheXNet-121 model was trained on ChestX-ray14, which contains 112,120 frontal X-ray images from 30,805 individuals [81]. This study proposes a further application of transfer learning, applying CheXNet-121’s knowledge to a small chest X-ray dataset for a disease that is not among those 14 classes.

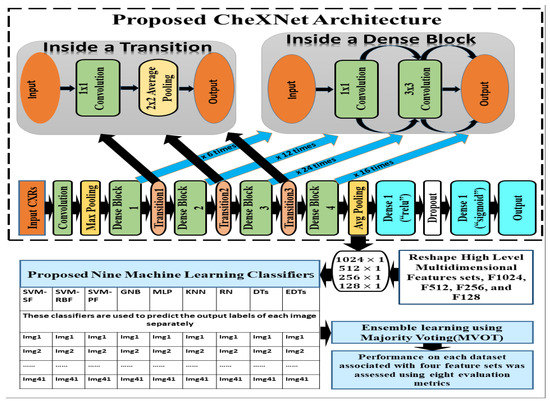

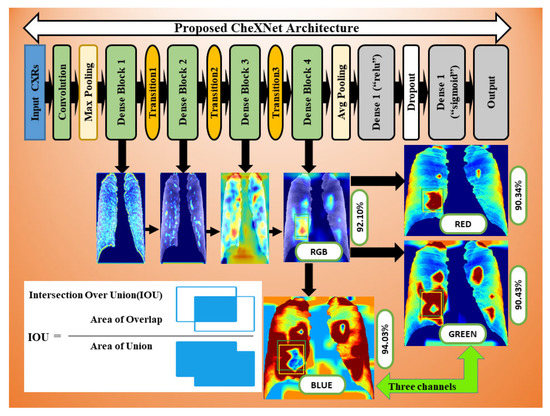

The CheXNet-121 architecture produces increasingly discriminative features after each convolutional block, and these features are more robust with bigger datasets. CheXNet-121 has four convolutional dense blocks separated by three transition layers, as demonstrated in Figure 2. The four dense blocks consist of 6, 12, 24, and 16 repetitions, respectively, of a 1 × 1 convolution followed by a 3 × 3 convolution, with multidimensional features, as indicated by the arrows in Figure 2. Within a dense block, each layer adds a few new features to its input feature maps, which causes the number of features to increase. Outside the dense blocks, the transition layers perform downsampling using a batch-norm layer, a 1 × 1 convolution, and then 2 × 2 average pooling, as shown in Figure 2. This ensures that the size of the feature maps inside each dense block stays the same so that features can be concatenated. The features of the initial dense blocks are regarded as low-level in comparison to those of the fourth dense block.

Figure 2.

The details of our proposed ensemble learning of pneumoconiosis detection.
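The growth of the feature count through the dense blocks can be traced with a small calculation. The sketch assumes the standard DenseNet-121 configuration (64 channels entering the first dense block, a growth rate of 32 new channels per layer, and transition layers that halve the channel count); these values come from the DenseNet design and are assumed here to apply unchanged to CheXNet-121. The function name is hypothetical.

```python
def densenet121_channels(init=64, growth=32, blocks=(6, 12, 24, 16), compression=0.5):
    """Return (channels after dense block, channels after transition) per block.

    The last dense block has no transition layer, so its second entry is None.
    """
    channels = init
    report = []
    for index, layers in enumerate(blocks):
        # Every layer in a dense block concatenates `growth` new feature maps.
        channels += layers * growth
        block_out = channels
        if index < len(blocks) - 1:
            # The transition layer's 1 x 1 convolution compresses the channels.
            channels = int(channels * compression)
            report.append((block_out, channels))
        else:
            report.append((block_out, None))
    return report


print(densenet121_channels())
# [(256, 128), (512, 256), (1024, 512), (1024, None)]
```

Under these assumptions the fourth dense block ends with 1024 feature maps, which become a 1024-dimensional vector after global average pooling.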

In this section, we have proposed an ensemble learning using nine machine learning algorithms to classify multidimensional high-level CheXNet-121 features learned from chest X-rays. The CheXNet-121 model was used as a feature extractor by removing the last layer close to the output layer. Next, a global average pooling layer was added which converted the output of the model into one-dimensional vectors. The resolution of input X-ray images is pixels with three channels. Before use of the model, we compiled it using an Adam optimizer with low learning rate and binary cross entropy as a loss function. The main contribution of this section is summarized as follows:

Firstly, we extracted four sets of multidimensional high-level CheXNet-121 features (F1024, F512, F256 and F128) independently from the three randomized cross-fold datasets shown in Figure 1. Here, the name of each feature set indicates the number of features extracted from each image. Secondly, the extracted features were used as the input of nine classifiers, namely SVM-SF (support vector machine with sigmoid function kernel), SVM-RBF (support vector machine with radial basis function kernel), SVM-PF (SVM with polynomial function kernel), Gaussian Naive Bayes (GNB), multi-layer perceptron (MLP), radius neighbours (RN), k-nearest neighbours (KNN), decision trees (DTs), and extra decision trees (EDTs), as shown in Figure 2. Thirdly, the optimal prediction label was calculated using a majority voting (MVOT) system. Finally, the ensemble learning performances among the three randomized cross-fold datasets were computed using eight metrics: TPR (true positive rate or recall or sensitivity), TNR (true negative rate or specificity), precision, FPR (false positive rate), FNR (false negative rate), F1-Score, ACC (accuracy), and MCC (Matthews correlation coefficient). Here, the MCC provides a more reliable statistical measure because it is based on all four confusion matrix values: true positives, false negatives, true negatives, and false positives. Therefore, a model achieves a high MCC score only if it achieves good results in all four of these values.
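The voting step and the eight metrics can be sketched as follows. This is a minimal illustration, not the study’s implementation: the function names are hypothetical, labels are assumed to be 0 (normal) and 1 (pneumoconiosis), and the confusion matrix used in the usage note below is an assumed example rather than a row of Table 1.

```python
import math


def majority_vote(predictions):
    """Combine per-classifier label lists (0/1) into one label per sample.

    With nine voters a tie is impossible, so a strict majority decides.
    """
    n_samples = len(predictions[0])
    voted = []
    for i in range(n_samples):
        positives = sum(p[i] for p in predictions)
        voted.append(1 if positives * 2 > len(predictions) else 0)
    return voted


def confusion(y_true, y_pred):
    """Return (TP, FN, TN, FP) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp, fn, tn, fp


def safe_div(num, den):
    """Division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def metrics(tp, fn, tn, fp):
    """Compute the eight evaluation metrics from the confusion matrix."""
    tpr = safe_div(tp, tp + fn)
    precision = safe_div(tp, tp + fp)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "TPR": tpr,
        "TNR": safe_div(tn, tn + fp),
        "precision": precision,
        "FPR": safe_div(fp, fp + tn),
        "FNR": safe_div(fn, fn + tp),
        "F1": safe_div(2 * precision * tpr, precision + tpr),
        "ACC": safe_div(tp + tn, tp + fn + tn + fp),
        "MCC": safe_div(tp * tn - fp * fn, mcc_den),
    }
```

As a worked example, a test set of 15 positive and 26 negative images with TP = 15, FN = 0, TN = 23, and FP = 3 gives `metrics(15, 0, 23, 3)["ACC"]` ≈ 0.9268 and `["MCC"]` ≈ 0.8586. This confusion matrix is an assumption chosen because it is arithmetically consistent with the best accuracy and MCC reported in this paper.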

The hyperparameters of all classification algorithms were chosen experimentally to achieve the best performance of each model on the validation features. For SVM, we tested different values for each parameter; for example, five values were assessed for the penalty parameter, and a set of values was assigned to the gamma parameter. For GNB, we set only the variance smoothing parameter, which was stable regardless of the feature variances. For MLP, we used the default hidden layer size of 100, rectified linear units (ReLU) as the activation function, L2 penalty weight regularization, the Adam optimizer, and initial learning rates with a maximum of 10,000 iterations. For KNN, the number of neighbours k was tuned over odd numbers from 1 to 84; for RN, the radius was tuned over fractional values from 1.5 to 5.00, which may vary depending on the training feature sets used. The maximum depth of the trees was tuned over odd integers from 1 to 30. The other parameters of all classifiers were left at the default values of the algorithms. The best tuned parameters, which gave optimal performance on the extracted feature sets F1024, F512, F256, and F128, were used for training and testing.

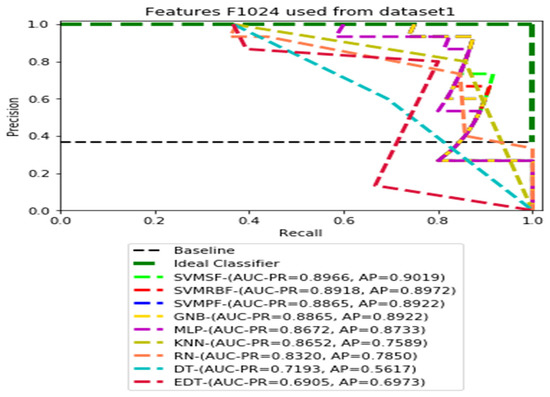

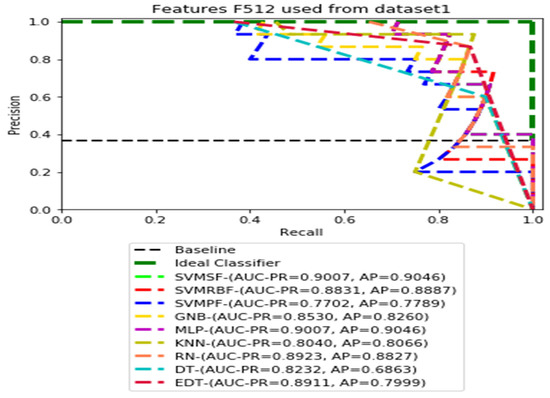

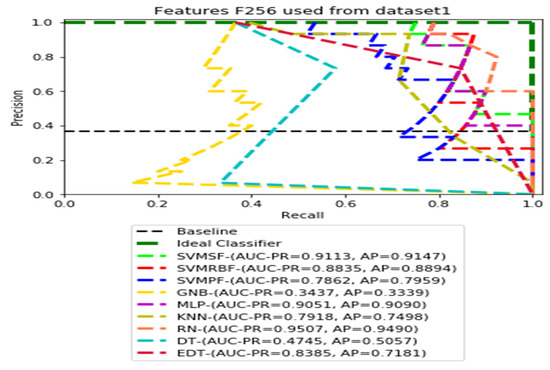

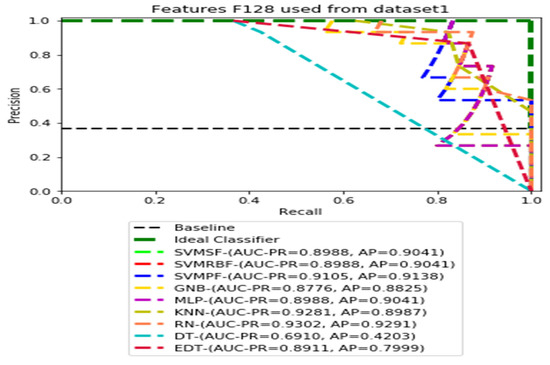

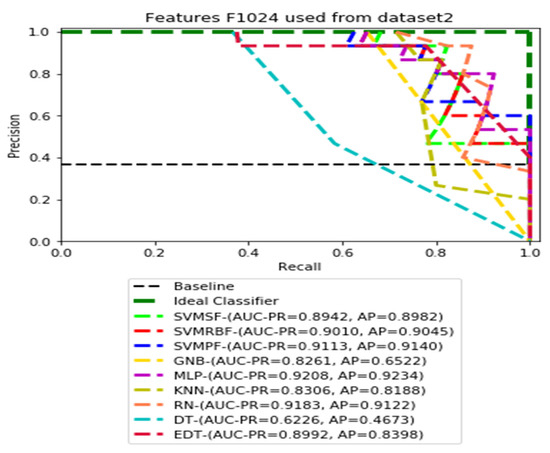

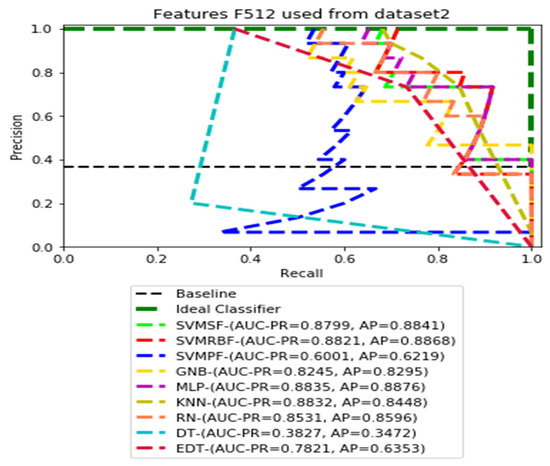

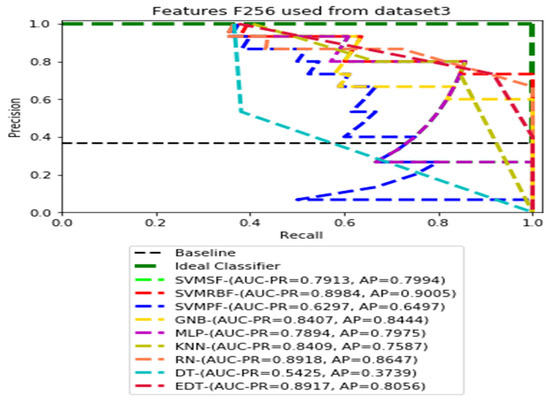

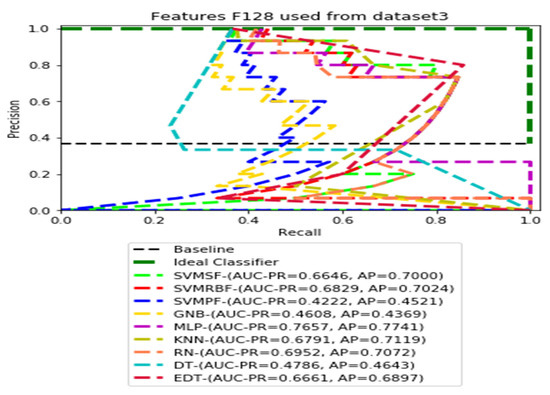

Figures 3–14 present the recall and precision values for each test CXR, using the feature set of its associated dataset, to demonstrate how well each single classifier performed within the ensemble. Their AUC-PR (area under the precision–recall curve) and AP (average precision) values make it easier to compare efficiency. The AUC value is obtained by trapezoidal numerical integration of the precision–recall curve. AP summarises the curve as the weighted average of the precision obtained at each threshold, with the increase in recall from the preceding threshold used as the weight. This implementation is not interpolated, in contrast to the PR curve AUC calculation, which uses linear interpolation and can be overly optimistic.
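The two summaries of a PR curve can be compared with a short sketch. It is an illustrative pure-Python version with hypothetical function names; the toy labels and scores are invented for the example, and starting the trapezoidal integration at the point (recall 0, precision 1) is a common convention that is assumed here.

```python
def pr_points(y_true, scores):
    """Return (recalls, precisions), one point per distinct score threshold.

    Samples are visited from the highest score to the lowest; tied scores
    share a single threshold and therefore a single point.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    total_pos = sum(y_true)
    tp = fp = 0
    recalls, precisions = [], []
    for rank, i in enumerate(order):
        if y_true[i] == 1:
            tp += 1
        else:
            fp += 1
        is_last = rank == len(order) - 1
        if is_last or scores[order[rank + 1]] != scores[i]:
            recalls.append(tp / total_pos)
            precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls, precisions):
    """AP: precision at each threshold weighted by the increase in recall."""
    ap, previous_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - previous_recall) * p
        previous_recall = r
    return ap


def auc_trapezoid(recalls, precisions):
    """Area under the PR curve using linear interpolation between points."""
    xs = [0.0] + list(recalls)      # assumed starting point: recall 0
    ys = [1.0] + list(precisions)   # assumed starting point: precision 1
    area = 0.0
    for k in range(1, len(xs)):
        area += (xs[k] - xs[k - 1]) * (ys[k] + ys[k - 1]) / 2
    return area


# Toy example: five samples, three of them positive.
y_true = [1, 0, 1, 1, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.2]
recalls, precisions = pr_points(y_true, scores)
```

On this toy input the step-wise AP is 29/36 and the trapezoidal area is 55/72, which shows that the two summaries of the same curve generally differ.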

Figure 3.

PR curves using feature set F1024 as the input of nine classifiers with Dataset 1.

Figure 4.

PR curves using feature set F512 as the input of nine classifiers with Dataset 1.

Figure 5.

PR curves using feature set F256 as the input of nine classifiers with Dataset 1.

Figure 6.

PR curves using feature set F128 as the input of nine classifiers with Dataset 1.

Figure 7.

PR curves using feature set F1024 as the input of nine classifiers with Dataset 2.

Figure 8.

PR curves using feature set F512 as the input of nine classifiers with Dataset 2.

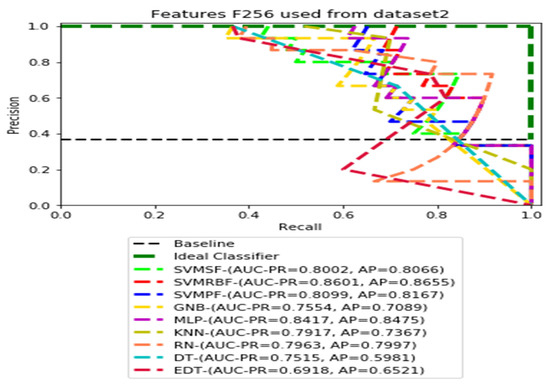

Figure 9.

PR curves using feature set F256 as the input of nine classifiers with Dataset 2.

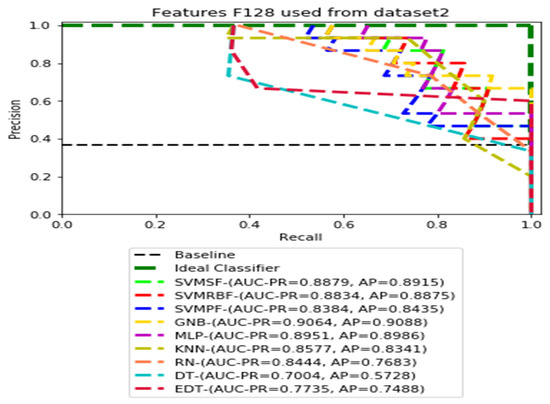

Figure 10.

PR curves using feature set F128 as the input of nine classifiers with Dataset 2.

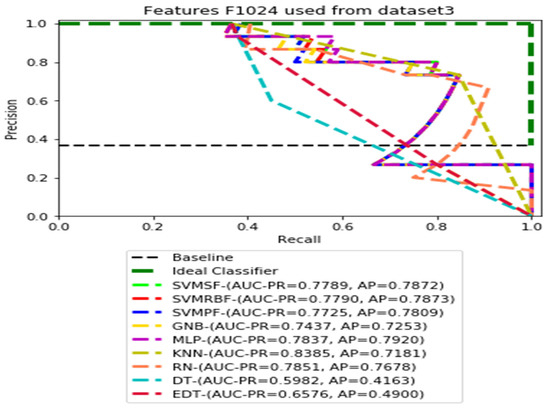

Figure 11.

PR curves using feature set F1024 as the input of nine classifiers with Dataset 3.

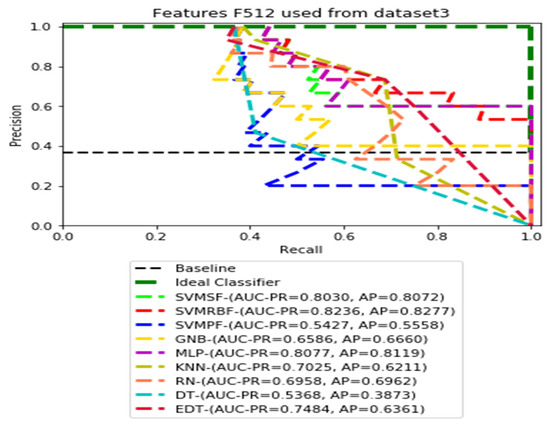

Figure 12.

PR curves using feature set F512 as the input of nine classifiers with Dataset 3.

Figure 13.

PR curves using feature set F256 as the input of nine classifiers with Dataset 3.

Figure 14.

PR curves using feature set F128 as the input of nine classifiers with Dataset 3.

Additionally, we applied the Grad-CAM (gradient-weighted class activation mapping) technique to interpret the most important ROIs that contributed to the classification of pneumoconiosis disease. We interpreted the localisation performance of Grad-CAM through the four convolutional blocks within the CheXNet-121 architecture, as demonstrated in Figure 2. Next, we split the Grad-CAM image of the high-level feature block into red, green, and blue channels, as shown in Figure 15. We compared all predicted ROIs with the ground-truth ROIs based on the intersection over union (IOU) between them and the best AP values of each classifier and their ensemble. IOU measures object detection performance as the ratio of the overlapping area to the combined area of the ground-truth and predicted bounding boxes for a particular input image [82,83]. The details of the proposed Grad-CAM technique for pneumoconiosis detection are presented in Figure 15.

Figure 15.

Workflow of the Grad-CAM constructed from four convolutional block CAMs using CheXNet-121.
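The IOU between a ground-truth and a predicted bounding box can be computed as in the following minimal sketch. The box format `(x_min, y_min, x_max, y_max)` and the function name are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max) in pixel coordinates.
    """
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    inter_w = max(0, ix_max - ix_min)
    inter_h = max(0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

For example, two 10 × 10 boxes offset by 5 pixels in each direction overlap in a 5 × 5 region, giving an IOU of 25/175, whereas identical boxes give an IOU of 1.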

We implemented our proposed model using the Keras version of CheXNet-121 with scikit-learn 0.19.1 machine learning classifiers, matplotlib 3.1.3 libraries, and Python 3. The experiments were conducted on a high-performance computing system at the University of Newcastle.

4. Results and Discussions

The ensemble outcomes for the nine traditional machine learning classifiers (SVM-SF, SVM-RBF, SVM-PF, GNB, MLP, KNN, RN, DTs, and EDTs) based on the majority voting (MVOT) system are presented in this section. Table 1 displays the ensemble learning performance of the nine classifiers on eight evaluation metrics derived from the MVOT confusion matrix. For the randomised cross-fold Datasets 1 and 2 shown in Figure 1, the ensemble classifiers attained an accuracy of 90.24 percent for the feature sets F1024 and F512.

Table 1.

Ensemble results of the proposed classifiers for the four feature sets across three datasets (Dataset 1, Dataset 2 and Dataset 3).

For the feature sets F256 and F128, the ensemble classifiers achieved accuracies of 92.68% and 90.24% for the same Datasets 1 and 2. For the feature sets F1024 and F512, the ensemble classifiers achieved an accuracy of 87.80% for Dataset 3. For the feature sets F256 and F128, the ensemble classifiers achieved an accuracy of 85.37% for Dataset 3. Therefore, average and maximum accuracies of 90.24% and 92.68% were achieved among the three datasets by the proposed ensemble learning method. Furthermore, we found that the highest MCC score, 85.86 percent, was attained on our imbalanced testing dataset. A low false positive rate (FPR) and false negative rate (FNR) were also achieved by the ensemble of nine classifiers. For pneumoconiosis detection, the proposed integrated method also fares better than the alternatives on measures such as precision, recall, specificity, and F1-score.

In our proposed datasets, we used a balanced distribution of the two classes during training and validation, but the test sets were imbalanced. All test datasets contained 36.58 percent (15 out of 41) pneumoconiosis and 63.42 percent (26 out of 41) normal images. The precision–recall (PR) values for each test dataset were plotted for the corresponding feature sets F1024, F512, F256, and F128. Then, the AUC-PR (area under the PR curve) and AP (average precision) values were computed to provide a different perspective on the evaluation of the binary classifier. A larger AUC-PR value indicates better model performance, as the PR curve moves toward that of the ideal classifier. For imbalanced data, the baseline of the PR curve is a horizontal line, parallel to the x-axis, at the proportion of positive samples, which is the lowest expected precision value. Almost all real-world classifiers fall somewhere between the ideal and the baseline: they are not perfect but provide better predictions than the baseline.

We report the AUC-PR and AP values for each classifier in the ensemble learning process, across the three datasets and the four dimensions of CheXNet-121 features, in Figures 3–14. All of the PR curves show the trade-off between the positive predictive value and the true positive rate across a range of probability thresholds. The average precision (AP) represents the mean of the precision values obtained each time a new positive sample is recalled. It indicates whether a classifier is capable of identifying all instances of pneumoconiosis without mistakenly labelling an excessive number of normal cases as pneumoconiosis. Consequently, the AP is high when the classifier accurately detects pneumoconiosis in chest X-ray radiographs.

Figures 3–6 show the PR curves of the nine classifiers in the ensemble using the corresponding CheXNet-121 feature sets, F1024, F512, F256, and F128, of Dataset 1. The highest AUC-PR and AP values on F1024 were 0.8966 and 0.9019, respectively, both achieved by the SVM-SF classifier. The highest AUC-PR and AP in the PR curves on F512 were 0.9007 and 0.9046, respectively, achieved by the two classifiers SVM-SF and MLP. The highest AUC-PR and AP pairs in the PR curves of F256 and F128, both obtained by the RN classifier, are (0.9507 and 0.9490) and (0.9302 and 0.9291), respectively.

Figures 7–10 show the PR curves of the nine classifiers in the ensemble using CheXNet-121 feature sets F1024, F512, F256, and F128 from Dataset 2. The highest AUC-PR and AP pairs in the PR curves of F1024 and F512 are (0.9208 and 0.9234) and (0.8835 and 0.8876), both achieved by the MLP classifier. On the other hand, the highest AUC-PR and AP pairs on F256 and F128, (0.9601 and 0.8655) and (0.9064 and 0.9088), were produced by two separate classifiers, SVM-RBF and GNB, respectively.

Figures 11–14 show the PR curves of the nine classifiers in the ensemble using feature sets F1024, F512, F256, and F128 from Dataset 3. Using the high-dimensional CheXNet-121 feature set F1024, KNN and MLP achieved the highest AUC-PR and AP values of 0.8385 and 0.7920 among the nine classifiers. By obtaining the highest AUC-PR and AP, the SVM variant SVM-RBF demonstrated effectiveness in the detection of pneumoconiosis using both F512 and F256. MLP obtained the lowest AUC-PR and AP values for F128 on Dataset 3.

The ensemble technique showed that the set of nine classifiers for Datasets 1–3 achieved average precision and recall values with the F128 and F1024 testing sets. In most classifiers, positive predictive values increased, consistent with higher true positive rates. Most of the PR curves lie toward the ideal classifier and above the baseline. Although the proposed ensemble methods increased the accuracy on Dataset 2 from 87.80% to 90.24% and on Dataset 3 from 85.37% to 87.80%, they did not improve the accuracy on Dataset 1 beyond 92.68%. The optimum pneumoconiosis detection accuracy and MCC scores of 92.68% and 85.86% were obtained with the F128 features. The higher MCC score indicated that the ensemble method obtained good predictions across all categories of the confusion matrix: true positives, false negatives, true negatives, and false positives. Maximum AP (average precision) values of 0.9490, 0.9234, and 0.9005 for Datasets 1–3 indicate that RN, MLP, and SVM-RBF, respectively, were the most accurate at detecting pneumoconiosis in chest X-rays.

Grad-CAM was used to compute, by automatic differentiation, the gradient of the selected class’s final score with respect to the feature maps of each CheXNet-121 block. The Grad-CAM is created by averaging multiple CAMs (class activation maps) generated by the CheXNet-121 model’s four convolutional blocks. Figure 15 depicts the workflow.
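The averaging step can be sketched as follows. The sketch assumes that the CAMs of the individual blocks have already been resized to a common resolution, and it adds a min-max rescaling to the range [0, 1] for display; both the resizing and the rescaling are assumptions, and the function name is hypothetical.

```python
def average_cams(cams):
    """Average several equally sized 2-D activation maps element-wise.

    `cams` is a list of 2-D lists (rows of floats). The averaged map is
    rescaled to [0, 1]; a constant map is returned as all zeros.
    """
    rows, cols = len(cams[0]), len(cams[0][0])
    mean = [
        [sum(cam[r][c] for cam in cams) / len(cams) for c in range(cols)]
        for r in range(rows)
    ]
    lowest = min(min(row) for row in mean)
    highest = max(max(row) for row in mean)
    if highest == lowest:
        return [[0.0] * cols for _ in range(rows)]
    return [[(value - lowest) / (highest - lowest) for value in row] for row in mean]
```

For instance, averaging the maps `[[0, 2], [4, 6]]` and `[[2, 4], [6, 8]]` gives `[[1, 3], [5, 7]]`, which rescales so that the strongest activation becomes 1 and the weakest becomes 0.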

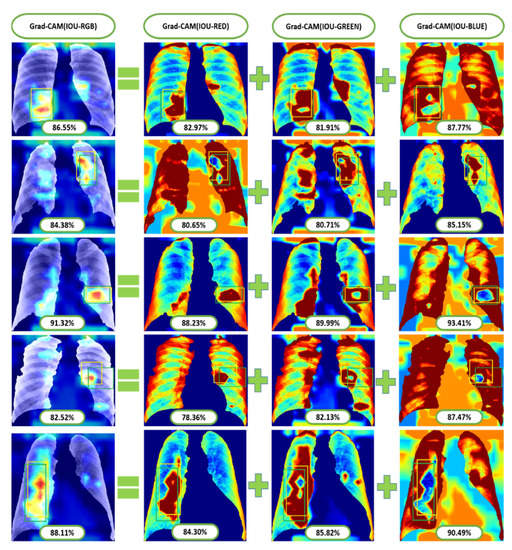

We avoided CAMs before the final block in this study because the deepest convolutional layer of a CNN model generates the most discriminative feature maps. To demonstrate the efficacy of our technique, we separated the three colour channels of the proposed Grad-CAM RGB outputs from the CheXNet-121 model and quantitatively compared their visual ROI localisation performance in terms of IOU and AP values. IOU evaluates the accuracy of the Grad-CAM-highlighted region of interest (ROI) on the separate red, green, and blue channels and on the combined RGB test image, as shown in Figure 15.

Grad-CAM demonstrated that the ROI channels’ variance in intensity reflects the relative importance of each channel to the class. The ground-truth (green-bounding) and predicted (yellow-bounding) boxes represented the precise coordinates of the suspicious and Grad-CAM highlighted ROIs within the pneumoconiosis CXRs, respectively, and were used to calculate the IOU.

In Figure 15, we visualised the Grad-CAM outputs of each convolutional block within the CheXNet-121 architecture. The structure of these blocks is described in detail in Section 3.2 using Figure 2. The initial two convolutional blocks highlighted the overall pixels, size and shape of pneumoconiosis using Grad-CAM. The third block highlighted several suspected areas in the pneumoconiosis images. Our objective was to predict the most conspicuous suspect ROIs that matched the most ground-truth ROIs, which we observed in the outputs of the high-level feature block. Therefore, we excluded the outputs of the first three blocks from the intersection over union (IOU) measurements. The formula for the IOU calculation is given in Figure 15.

Because the lungs of pneumoconiosis patients appear black rather than a healthy pink, we computed the IOU separately on the three channels of the input red-green-blue (RGB) images, as in Figure 15. Consequently, we observed differences between the IOU of the RGB image and the IOU of each channel. In Figures 15 and 16, we show a total of six Grad-CAM applications, using two images from each of the three test sets, with the IOU reported for the full RGB image and for each channel separately. Comparing IOU-RGB with the per-channel values revealed different accuracies, and only IOU-BLUE was higher than IOU-RGB for the same bounding boxes.
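A per-channel IOU comparison of this kind could be sketched as follows. This is only an illustration under stated assumptions, not the procedure used in the study: the thresholding rule (pixels at or above half of the channel maximum), the use of the channel mean as the combined RGB map, and the helper names `box_from_mask`, `iou`, and `channel_ious` are all hypothetical.

```python
import numpy as np

def box_from_mask(mask):
    """Tightest (x_min, y_min, x_max, y_max) box around True pixels, or None."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return (int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1)

def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def channel_ious(cam_rgb, truth_box, frac=0.5):
    """IOU of the highlighted region against truth_box, per channel and combined.

    cam_rgb: array (H, W, 3) holding a coloured Grad-CAM output.
    frac:    assumed threshold, as a fraction of each map's maximum.
    """
    cam = cam_rgb.astype(float)
    maps = {"rgb": cam.mean(axis=2),  # assumed combination: channel mean
            "red": cam[..., 0], "green": cam[..., 1], "blue": cam[..., 2]}
    scores = {}
    for name, m in maps.items():
        peak = m.max()
        # An all-zero map highlights nothing.
        mask = m >= frac * peak if peak > 0 else np.zeros(m.shape, dtype=bool)
        box = box_from_mask(mask)
        scores[name] = iou(box, truth_box) if box is not None else 0.0
    return scores

# Synthetic 10x10 example: a bright blue square on a uniform red background.
cam = np.zeros((10, 10, 3), dtype=np.uint8)
cam[..., 0] = 100
cam[2:6, 2:6, 2] = 255
print(channel_ious(cam, truth_box=(2, 2, 6, 6)))
```

On this synthetic input the blue channel localises the square exactly, while the uniform red channel produces a box covering the whole image, which shows how channel-wise scores can diverge for the same ground-truth box.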

Figure 16.

Some examples of Grad-CAM with IOU calculated from test positive samples of the most conspicuous suspect areas.

Table 2 compares the average IOU and the average positive predictive value (PPV, or precision) across the three cross-fold datasets. The average IOU exceeded 85% for pneumoconiosis detection in chest radiographs through Grad-CAM visualisation, with a maximum average of 89.32% on test Dataset 1.

Table 2.

Comparison of IOU and AP through three different datasets.

Ensemble learning with the MVOT and the proposed method achieved an AP of 100% on test Dataset 3, but only 92.31% on the other two test datasets. Table 2 also lists the highest AP obtained by the nine classifiers from the precision–recall curve. The RN classifier achieved the highest AP, 94.90%, on Dataset 1. SVMRBF achieved an overall AP of 90% across the three-fold pneumoconiosis datasets. The proposed ensemble learning achieved 92.68% pneumoconiosis detection accuracy in chest X-ray radiographs, as shown in Table 1.
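For readers unfamiliar with AP, the sketch below shows one common way to obtain it from ranked classifier scores: the non-interpolated average of the precision values at the ranks where a positive sample is retrieved. The text does not state which AP variant was used, so this choice, the function name `average_precision`, and the toy labels and scores are assumptions for illustration only.

```python
import numpy as np

def average_precision(y_true, scores):
    """Non-interpolated average precision for binary labels (1 = positive)."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    # Rank samples from the highest to the lowest score.
    order = np.argsort(-scores, kind="stable")
    y = y_true[order]
    n_pos = int(y.sum())
    if n_pos == 0:
        return 0.0
    # Precision after each rank: true positives so far / samples so far.
    precision = np.cumsum(y) / np.arange(1, len(y) + 1)
    # Recall rises by 1/n_pos at each positive, so AP is the mean of the
    # precision values taken at the positive ranks.
    return float(precision[y == 1].sum() / n_pos)

# Toy example with made-up labels and scores.
labels = [1, 0, 1, 1]
scores = [0.9, 0.8, 0.7, 0.6]
print(round(average_precision(labels, scores), 4))  # 0.8056
```

A ranking that places every positive sample ahead of every negative one gives an AP of 1.0, which corresponds to the 100% figure reported for test Dataset 3.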

In this research, we have presented a deep learning-based method with ensemble learning. The experimental results show that it performed well for the detection of pneumoconiosis, achieving an accuracy of 92.68%. The proposed technique achieved average precisions of 92.31%, 92.31%, and 100.00% on the three test datasets. The proposed CheXNet-121 model visualisation helped to interpret the model’s behaviour, compensated for the error of missing ROIs using individual CNN models, and demonstrated superior ROI detection and localisation performance compared to any individual constituent model.

5. Conclusions

We conducted a study to identify pneumoconiosis in CXRs using deep learning, and explored deep learning applications through ensemble learning. The experimental findings show that the proposed methodology is encouraging and performs better than any single model applied individually and than other state-of-the-art approaches. In addition, the Grad-CAM visualisation helped interpret the behaviour of the model and demonstrated discrimination in detecting and localising ROIs within each RGB channel when compared to any individual convolutional block in the deep learning model. The experimental results show that the proposed method can be used for pre-screening of chest X-rays for the effective detection of pneumoconiosis. Expert radiologists can then spend more time on the X-rays that the proposed model flagged as showing pneumoconiosis. We believe the proposed framework is useful for the development of robust models for the classification of medical images and the localisation of the superior ROI.

Author Contributions

Conceptualization, L.D.; Data curation, L.D., S.L., P.S. and D.W.; Formal analysis, L.D., Z.F., P.S., S.L. and D.W.; Software, L.D.; Investigation, L.D., Z.F., P.S., S.L. and D.W.; Methodology, L.D.; Visualization, L.D.; Resources, L.D., Z.F., P.S., S.L. and D.W.; Writing—original draft, L.D.; Writing—Review & Editing, L.D., Z.F., P.S., S.L. and D.W.; Funding acquisition, Z.F.; Supervision, S.L., P.S. and D.W. All authors have read and agreed to the published version of the manuscript.

Funding

College of Computer Science and Technology, Huaqiao University, Xiamen 361021, China. This work was supported by the Scientific Research Funds, No. 21BS122, of Huaqiao University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are grateful to the Coal Services Health and Safety Trust, Australia, Project No. 20647, for providing a portion of the funding for this study. We also thank the Scientific Research Funds of Huaqiao University, China, for their assistance with the research.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).