1. Introduction

The definition of health is “a condition of complete physical, mental, and social well-being and not merely the absence of disease or infirmity”. The digital era has brought an unprecedented volume of easily accessible information, including media coverage of current financial events. Daily, the inflection of news articles can elicit emotional responses from readers, and there is evidence of an increase in mental health issues in response to coverage of the recent global financial instability and inflation news [

1]. Given the importance and pertinence of this type of information exposure, its daily impact on the general population’s mental health warrants further investigation. Information has economic value because it allows individuals, financial institutions, and government agencies to make decisions that have better-projected payoffs than decisions made without it. Text from digital media (DM), which includes news, events, analyst reports, and public opinions relating to finance, is a substantial source of information that can be used to inform financial policies and decisions. Measuring the targeted information content connected to public attitudes in the text is thus an essential task, not only from the standpoint of the public but also from the perspective of all governmental and non-governmental financial institutions. Due to ambiguities, language variances, syntax, and subjectivity, quantifying the targeted information content of the text can be difficult [

2,

3].

The proliferation of specialized texts in languages spoken by billions worldwide serves as a standard for information extraction and polarity analysis systems. Using computational linguistics approaches and techniques on documents containing common usage of general language, particularly news stories, public sentiment, whether openly voiced or secretly recorded, is being assessed. Significant changes have occurred in the financial and DM realms due to the rapid expansion of the internet. The rise of DM has posed a challenge to traditional print media, altering people’s jobs and lifestyles. In comparison to traditional media, DM has low costs, great efficiency, broad reach, and high risk [

4,

5]. Microblogging, e-newspapers, and news channels are examples of new DM that provide financial data. Sentiment analysis and key features of online financial texts assist in determining the public’s sentiment state, offering quick access to public thoughts and attitudes and allowing users to get the information they require quickly. As a result, risk management, public opinion research, and government regulation can all benefit from it [

6].

Sentiment analysis or opinion mining is the computational examination of people’s opinions, feelings, assessments, attitudes, moods, and emotions. It is one of the most active research domains in natural language processing (NLP), data mining, information retrieval, and DM mining. Its research and applications have shifted to management and social sciences in recent years, owing to its prominence in general governmental, financial, and social problems. The purpose is to create a structure out of an unstructured natural language text regarding finance and related concepts [

7,

8,

9].

Perceiving emotions is a vital component of human intelligence emulation since it is one of the most fundamental components of personal development and advancement. Not just for the betterment of artificial intelligence (AI), but also the closely related subject of polarity recognition, sentiment analysis is critical [

10,

11]. The potential to automatically record public attitudes regarding social events, political movements, marketing efforts, and buying patterns has aroused scientific and public interest in the fascinating open challenges in sentimental analysis (SA), categorization, and prediction connected to finance [

12]. This has offered to ascend to sentiment analysis, which extracts people’s opinions from ever-increasing volumes of digital data via human–computer interaction (HCI), information retrieval, and multimodal signal processing [

13].

Because of the rapid growth of DM platforms, there is now an enormous amount of information on the internet. Users can now share their financial opinions on the internet. User-generated material can be beneficial to businesses at all levels. In this DM era, finding ways to exploit such content becomes critical [

14]. Sentiment analysis, often known as opinion mining, is one method of mining user opinion. These two names are sometimes used interchangeably, although they are distinct. Opinion mining is a way of discovering people’s feelings, attitudes, and views regarding a specific topic, whereas sentiment analysis is a way of evaluating people’s opinions, recognizing the sentiment represented in the text, classifying its polarity, and identifying additional sentiment. As a result, sentiment analysis is now regarded as a classification task [

15].

Formerly, sentiment analysis was limited to a single domain, but cross-domain sentiment analysis research is currently underway. Previous sentiment analysis research centered on highly subjective texts, e.g., product reviews, movie reviews, and service evaluations, but thanks to the Guardian dataset [

16], sentiment analysis has also made its way into newsrooms. The categorization of news is significantly different because the text’s author offers an opinion on product reviews, headlines, and content. In general, news items are objective, and what influences people’s thoughts and sentiments about a specific subject is the text of the reporter or author addressing the issue addressed in the news item, rather than the people’s text [

17]. These news items inform various government and financial entities about how the public views and thinks about financial concerns. It can assist them in learning information, such as the quality of their job, the impact of their policies, and their public image. Instead of manually going through all items on DM, the entity in question will benefit from automatically classifying financial material into the appropriate category. The Guardian is regarded as one of the most widely used and well-known DM platforms for up-to-date and accurate financial and government news. As a result, the news in these newspapers occasionally expresses people’s feelings on various themes. Communication and information technologies have significantly impacted the world [

18].

Interpersonal relationships, communication patterns, social arguments, political disputes, and DM, for instance, have all altered how people use technology. Political scientists, media & communication experts, sociologists, and experts from the international association have all researched countless stages of social media use [

19]. The creative and evolving field of social computing analyses and models social behaviors and events across a variety of platforms. Additionally, this generates innovative and interactive applications that help governmental and financial institutions produce successful outcomes. The reporters or authors of DM can also use the social media content that is available about certain people to express their opinions or feelings on an occasion, problem, or item. It is essential for dissecting this haphazard and inconsistent data to draw conclusions about various topics [

20,

21]. In addition, the digital platforms that make these data available have a far more formless shape, making mining difficult. The financial text can now be retrieved via a number of DM platforms. One of these is the Guardian application programming interface (API), which gathered text on a certain subject. The following list includes the top four justifications for using The Guardian API [

22,

23].

Dissimilar people read The Guardian DM platform for reading news, published daily and presenting the information related to all aspects of life and concerning what people think about the matter under debate, so it is a reliable and reputable source for sentiment or opinion analysis.

A large number of articles related to financial matters are posted daily in The Guardian newspaper, so it is growing daily.

The Guardian readers and the consistent users have varied sentiments about diverse topics. Therefore, text posted related to financial matters on DM platform can be collected by using the specific The Guardian API.

The readers of The Guardian are from all over the world. However, the readers from the United Kingdom prevail over the data that can be collected in English.

Machine learning (ML) methods can be broadly divided into two categories: supervised learning, in which the learning data is presented and provided by the user, and unsupervised learning, in which the learning data is learned as a clustering approach by taking into account the vastness of the dataset [

24,

25,

26,

27,

28]. Evolutionary algorithms are crucial in this regard; they have been used in a range of optimization tasks, including picture classification, global optimization, text classification, and parallel machine scheduling, to name a few. The arithmetic optimization algorithm is mathematically conceptualized and developed to execute optimization procedures in various search spaces, akin to the reptile search algorithm, a nature-inspired meta-heuristic optimizer influenced by crocodile hunting behavior [

29,

30,

31,

32,

33].

1.1. Motivation

Public feelings associated with finance are gaining significant importance as they help individuals, financial and non-financial institutions, and the government to understand the financial condition, impact of deployed policies, counter-response, and public mental health.

1.2. Research Gap

The fundamental problem addressed in this study is to classify public sentiments from the massively available textual dataset based on the financial news primarily published on DM to identify people’s overall views about financial matters that ultimately impact the public mental health.

1.3. Objectives

The objectives of the proposed study are as follow:

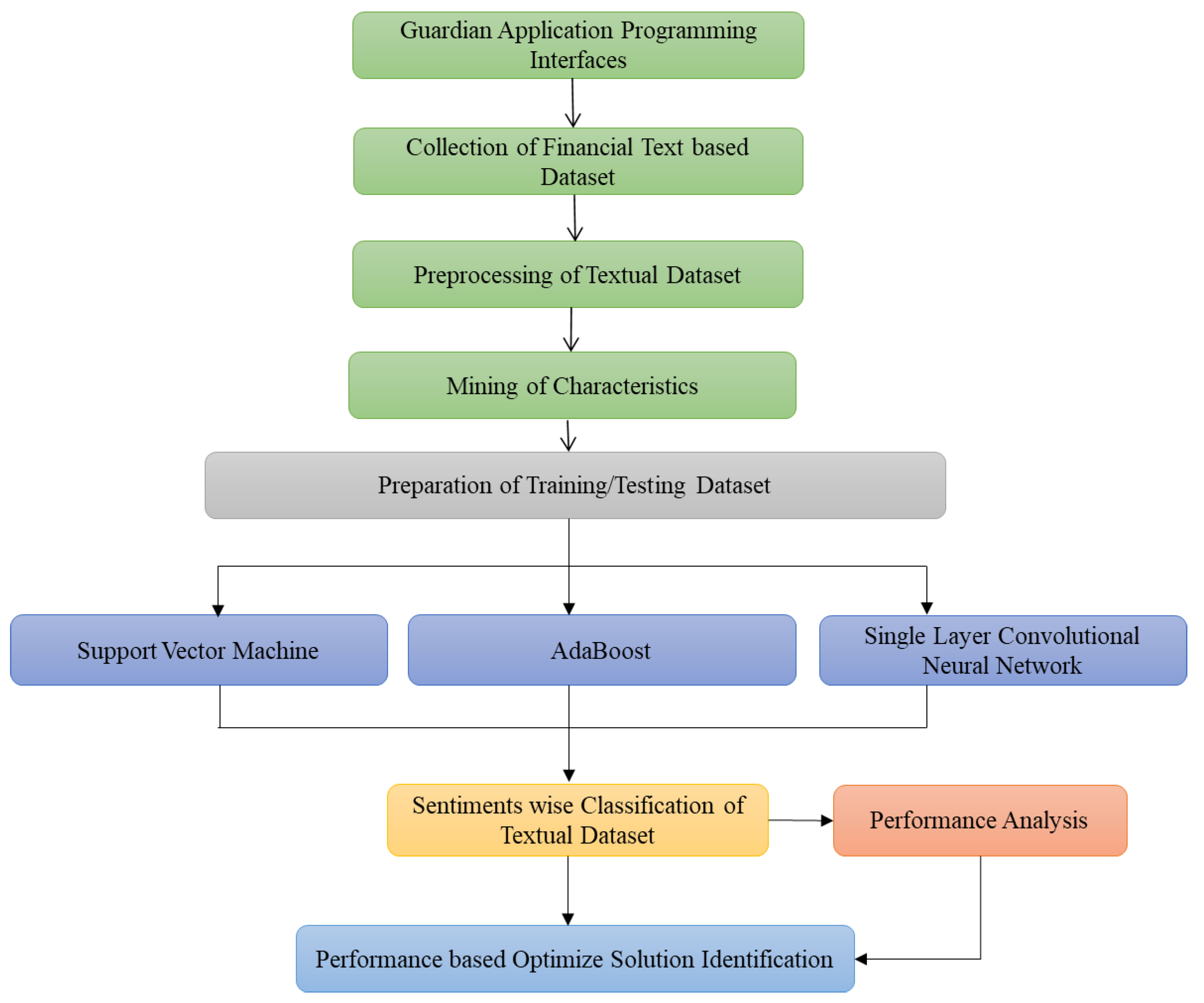

Firstly, the dataset collected through The Guardian API is based on public sentiments related to financial news content.

Secondly, the dataset based on financial news content is preprocessed for appropriate and efficient classification into four primary sentimental attributes: neutral, glad, depressed, and annoyed, selected through the Circumplex model as shown in

Figure 1.

Thirdly, we worked out mainly for the individual sentimental classification with precise accuracy from the mixed state content based on The Guardian dataset (text) using two ML techniques, i.e., support vector machine (SVM) and AdaBoost, and one deep learning (DL) technique, the single layer convolutional neural network (SLCNN).

1.4. Contribution of the Study

The study can be helpful for financial, non-financial, and governmental organizations to estimate the impact of their policies through identifying public sentiments that affect public opinion about financial matters and, ultimately, their mental health without direct interaction or survey.

Our goal is to use preprocessed datasets from digital platforms to build an ML-based model for assessing the intended content of financial news. We shall investigate how public perception and mental health via financial news in DM are influenced. Sentiment signals have been employed as a common linguistic characteristic for representing target information content hidden in financial literature. We create a baseline to represent financial news material using frequency-based attributes extracted from emotive words. The ultimate goal of this research would be to create a precise ML-based tool to assist public financial and non-financial institutions.

Numerous investigators employ a variety of ways to categorise various data types as a result of the daily development and expansion of ML methods, including text mining and classification [

34,

35]. So, for the four groups that do not change frequently in the current study, supervised learning approaches are applied. The preprocessed dataset is required to classify the Guardian-based dataset using ML algorithms and techniques. The Guardian API can access nearly 3085 different contents. Finding the right classification methods is important when the dataset is created in order to classify it. To categorise obtained datasets related to finance, the SVM, AdaBoost, and SLCNN are used because they are capable of handling a high dimensional volumetric dataset [

36].

The paper’s organization is as follows. Related research has been explained in

Section 2 to highlight the available literature in the related domain, in addition to mathematical models of the deployed ML methods in

Section 3. Data collection and feature selection have been explained in

Section 4 to describe the whole procedure of preprocessing. Material and methods used have been explained in

Section 5 to discuss the procedure of classification and its significance.

Section 6 comprises the experimental results and performance comparison of all techniques to highlight their superiority over other existing techniques. Lastly, a discussion and conclusion are presented in

Section 7 and

Section 8 respectively to conclude the research.

2. Related Research

In this section, a literature study is carried out to throw light on attempts of different researchers to enhance understanding of mental health through sentimental analysis and efficient financial text mining and classification. To model and predict people’s sentiments about financial matters can assist public health, financial and governmental organizations in their policy modification and development. Several pieces of research related to the NLP, sentimental analysis, and ML-based algorithms highlight their applications in various fields, proving to be a great source of guidance for the proposed idea.

Using a comprehensive set of NLP techniques and a benchmark of pre-pandemic posts, researchers unearthed patterns of how specific mental health issues emerge in language and recognized at-risk users. Moreover, it evidenced that textual analysis is sensitive to uncovering mental health complaints as they show up in real-time, identifying vulnerable groups and alarming themes during COVID-19, and therefore may be helpful during the continuing pandemic and other world-altering events, such as elections, economic circumstances, and protests [

37].

To separate the sentiments from the financial text is done under sentimental analysis. In the sentimental analysis, ML has improved financial text mining, classification, and prediction techniques. The number of publications has grown exponentially to automated sentiment identification through the textual dataset. The text has irregular shapes, and the lexicon makes its sentimental analysis complex and challenging [

38,

39].

There have been numerous studies on sentiment analysis in the news, with the majority focusing on news stories. Sentiment categorization for online comments on Chinese news was accomplished using supervised ML algorithms. Using Ajax technology, the comments were scraped from SINA [

40]. To perform Chinese word segmentation, they employed the ICTCLAS toolset, which includes word segmentation, part of speech tagging, and recognition of unknown terms. Candidate feature determination, feature filtering using information gain, and feature weighing with TF-IDF were all utilized to select features. The SVM and KNN classifiers are utilized. On top stories, SVM outperformed KNN with an accuracy of 60.96%, according to their testing [

41].

Another study used common-sense knowledge bases, such as ConceptNet and SenticNet, to develop a sentic computing technique for sentiment analysis of news items from the MPQA corpus. The goal of the study was to use sentiment analysis on individual sentences. A semantic parser, a sentiment analyzer, and a replicated version of the SenticNet database made up the opinion engine. To extract common-sense concepts, each sentence was analyzed semantically. After that, the semantic analyzer linked these concepts to SenticNet sentic vectors. The sentic vector expressed only the feeling described in the statement, not its polarity. Using a polarity measure, the sentic vector was then converted to a polarity score in the range [–1, 1]. The sentic vectors for each thought were derived from the Hourglass of emotions, which classifies sentiments into four categories [

42].

The evaluation of Guardian data has been the subject of extensive research in recent years. In their research, the authors suggested a method for dividing student data collected by The Guardian into various groups in order to identify the myriad issues that students face. Therefore, they demonstrated and provided the logical mythos for influencing the emotions expressed on several disparate social media platforms. They have also examined the text and data utilizing advanced annotation, grammar, semantic networks, and vocabulary acquisition. Text classification and basic data gathering methodologies are presented and suggested [

43].

The authors have presented research that normalizes irrelevant headlines and content as well as noisy data and categorizes them as positive or negative depending on their polarity. Additionally, they use mixed model approaches to develop phrases that express diverse emotions, and the words they produce are subsequently used as crucial indicators in the classification model. By utilizing various financial message boards, authors have developed a new method for predicting stock market sentiment. They have also automated a projection for the reserve market based on web views [

44].

Social computing is a creative and evolving computer model for analyzing and simulating social activities and events across multiple platforms. Using several classifiers, including max entropy and ensembles classifiers, the exactness of the classification technique with a selected characteristic vector is validated for a variety of electrical products. In comparable research, authors have analyzed the classification performance of SMO, RF, NB, and SVM for the Guardian data [

45,

46].

On social media platforms, satirical news is a common problem that can be false and destructive. The research provided an ensemble technique for identifying satirical news in Turkish headlines. The feature sets were extracted using linguistic and psychological features in the described scheme. Five supervised learning techniques (Naive Bayes algorithm, logistic regression, support vector machines, random forest, and k-nearest neighbor algorithm) were combined with three widely used ensemble methods (AdaBoost, bagging, and random subspace) in the classification phase. Based on the findings, it was determined that the random forest algorithm produced the best results for detecting satire in Turkish. Using the recurrent neural network architecture with an attention mechanism, deep learning (DL) based architectures obtained improved classification accuracy (CA) [

47].

Another study used naive Bayes to conduct trials based on various individual attributes obtained from Facebook to predict distinctive personalities. These traits are expressed in the form of English terms that are based on LIWC categories, such as various programs or goals, activity logs, structural networks, and other crucial personal information. Using WEKA, the entire analysis was carried out [

48].

Semi-supervised algorithms have also been widely used in the sentiment analysis of news stories. According to the hypothesis, news items can be used to predict real-time changes in stock price direction. Trend segmentation based on linear regression and grouping of intriguing patterns were utilized in their study [

49]. They categorized and aligned news articles of importance relative to the trend labels using the semi-supervised Incremental K-Means technique to partition data into two groups. They defined and quantified a cluster discriminating coefficient and a cluster similarity coefficient. To compute a word’s weight in a document, the two metrics were combined with a term frequency score for each word [

50]. These properties were employed as input features for ML using a support vector machine on the clustered documents. The positive examples were the clusters of documents that were preserved after preprocessing, while the negative examples were the clusters of documents that were deleted after training two SVM classifiers. The system was tested against a database of around 350,000 financial news stories and the stock prices associated with them, collected from Reuters 3000 Xtra. Their forecasts had a high success rate, indicating they were correct [

51].

Due to the vast amount of data accessible, a research group discovered that instance selection and feature selection are critical tasks for attaining scalability in ML-based sentiment categorization. They investigated the effectiveness of fifteen benchmark instance selection procedures in text classification to see how well they could predict the outcome. Decision tree classifiers (C4.5 algorithm) and radial basis function networks regarding classification accuracy and data reduction rates are evaluated, e.g., in terms of selection strategies [

52].

According to another study, the vector space model and term weighting approaches are valuable techniques for representing text files. This research thoroughly examined Turkish sentiment analysis, including nine supervised and unsupervised word weighting techniques. The prediction effectiveness of word weighting schemes is investigated using four supervised learning algorithms (Naïve Bayes, support vector machines, k-nearest neighbor algorithm, and logistic regression) and three ensemble learning approaches (AdaBoost, Bagging, and random subspace). The results show that supervised term weighting models beat unsupervised term weighting models in terms of accuracy [

53].

According to a group of experts, feature selection is important in constructing strong and effective classification models while lowering training time. They suggested an ensemble feature selection strategy, which combines the distinct feature lists produced by several feature selection techniques to provide a more resilient and effective feature subset. The suggested genetic rank aggregation-based feature selection model is an efficient method that beats individual filter-based feature selection methods on sentiment classification, according to experimental results [

36,

54].

Researchers demonstrated a DL-based technique for sentiment analysis of Twitter product reviews. The proposed architecture combines CNN-LSTM architecture with TF-IDF weighted Glove word embedding. According to the empirical data, the suggested DL architecture surpasses traditional DL approaches [

55].

The review examined the prediction performance of five statistical keyword extraction strategies that used classification algorithms and ensemble methods for scientific text document classification. The paper compares and contrasts five commonly used ensemble approaches with base learning algorithms. The classification schemes are compared in terms of classification accuracy, F-measure, and area under curve values. The empirical analysis is validated using the two-way ANOVA test. Combining a bagging ensemble of random forest with a most-frequent-based keyword extraction technique yields outstanding text classification results, according to the results [

56].

According to another article, extracting an efficient feature set to represent text documents is critical in developing a reliable text genre classification scheme with excellent prediction performance. Ensemble learning, which integrates the outputs of individual classifiers to produce a robust classification scheme, is also a prominent research area in ML. An ensemble classification system is proposed based on the empirical study, which merges the random subspace ensemble of random forest with four characteristics (features used in authorship attribution, character n-grams, part of speech n-grams, and the frequency of the most discriminative words). The proposed technique achieved the highest average prediction accuracy for the language function analysis (LFA) database [

57].

This research uses supervised hybrid clustering to split data samples of each class into clusters using the cuckoo search algorithm and k-means to give training subsets with greater diversity. The majority voting rule is used to integrate the predictions of individual classifiers after they have been trained on a variety of training subsets. Using eleven text benchmarks, the proposed ensemble classifier’s predicted performance is compared to that of traditional classification algorithms and ensemble learning approaches. The given ensemble classifier outperforms standard classification algorithms and ensemble learning approaches for text categorization, according to the findings of the experiments [

58].

The study proposed a sentiment analysis strategy based on ML for students’ evaluations of higher education institutions. They used traditional text representation systems and ML classifiers to assess a dataset of around 700 student reviews written in Turkish. Three traditional text representation systems and three N-gram models were considered in the experimental investigation, along with four classifiers. Four ensemble learners’ prediction performance has also been assessed. The empirical findings show that an ML-based method can improve students’ perceptions of higher education institutions [

59].

Another work used traditional supervised learning methods, ensemble learning, and DL principles to develop an effective sentiment categorization scheme with strong prediction performance in Massive open online courses (MOOC) reviews. In the field of sentiment analysis on educational data mining, DL-based architectures outperform ensemble learning methods and supervised learning methods, according to the empirical investigation. In combination with GloVe word embedding scheme-based representation, long short-term memory networks have produced the best prediction performance for all of the compared solutions [

60].

The study aimed to develop a methodology for identifying sarcasm in social media data. The authors used the inverse gravity moment-based term weighted word embedding model using trigrams to characterize text documents. By maintaining the word-ordering information, essential words/terms have higher values. A three-layer stacked bidirectional long short-term memory architecture was presented to recognize sarcastic text documents. Three neural language models, two unsupervised term weighting functions, and eight supervised term weighting functions were tested in the empirical study. The provided model performs well in detecting sarcasm [

61].

Another study described a DL-based system for detecting sarcasm. In this context, the prediction performance of a topic-enriched word embedding scheme was compared to typical word embedding procedures. In addition to word-embedding based feature sets, traditional lexical, pragmatic, implicit incongruity, and explicit incongruity-based feature sets are considered. The experimental investigation looked at six subsets of Twitter posts, ranging from 5000 to 30,000. The results of the studies suggest that traditional feature sets paired with topic-enriched word embedding approaches can offer promising results for sarcasm detection [

62].

For text sentiment categorization, the researchers of this work presented a hybrid ensemble pruning technique based on clustering and randomized search. In addition, a consensus clustering technique is provided to cope with the instability of clustering results. The ensemble’s classifiers are then sorted into groups depending on their prediction abilities. Then, based on their pairwise diversity, two classifiers from each cluster are chosen as candidate classifiers. The search space for candidate classifiers is examined using a multi-objective evolutionary method based on elitist Pareto. The suggested technique is tested on twelve balanced and unbalanced benchmark text categorization difficulties for the evaluation task. In addition, the recommended method is compared against three ensemble approaches (AdaBoost, bagging, and random subspace) and three ensemble pruning algorithms (ensemble selection, bagging ensemble selection, and LibD3C algorithm). The results show that consensus clustering and multi-objective evolutionary algorithms based on Elitist Pareto can be successfully employed in ensemble pruning [

63].

A well-known method for understanding and expressing personal opinions, feelings, thoughts, and mental health is text-based sentiment analysis. These people frequently use subjective prose to convey common emotions, moods, feelings, ideas, and reactions. Since most real-world data are amorphous and unstructured, this presents a significant obstacle for emotive analysis. As a result, numerous studies have made impressive attempts to extract meaningful and valuable information from these unstructured and amorphous datasets in recent years. The suggested study is essentially an extension of previous work in the described domain, in which sentimental analysis was performed on a real-time textual dataset pertaining to finance collected via The Guardian API for mental health monitoring.

Table 1 presents a summary of the work already done in the identified domain to highlight the area that needs further exploration.

4. Dataset Collection and Features Selection

The sentimental analysis and classification based on the financial text published online in The Guardian newspaper are done to identify the sentiments of the people about financial matters. Therefore, it is necessary to collect headlines and detailed news from newspapers through The Guardian API; it fetches Corpus, a collection of documents from The Guardian open platform. The length of the headline and content can vary, but The Guardian delivers a more reliable dataset with complete information and better structure than microblogging platforms. Therefore, the content’s reporter or author must provide the whole message or people’s sentiments by presenting exhaustive information. It is the reason to express the entire scenario related to public sentiments with a comprehensive paragraph because this single paragraph is representative of public opinion or sentiments. The Guardian API offers the capability to retrieve such headlines or paragraphs for a specific topic. We need to insert the API key to proceed with dataset acquisition, then provide the query and set the time frame for retrieving the articles. Further, we must define which features to retrieve from the Guardian platform. Finally, it retrieves the articles. We retrieve 3085 articles mentioning financial text between December 2020 and December 2021. The text will include the article headline and content. In contrast, these article headlines and content are classified into four major sentimental attributes: neutral, glad, depressed, and annoyed.

When the data are collected, the dataset is preprocessed, and unnecessary stuff is removed from these article headlines and content. So, these elements are mandatory for learning the size of that groups. These words are used as elements. The bag of words approach extracts the essential characteristics, and it groups the words from each article headline and content and makes the vector of every article headline and content comprising words. Several researchers have used n-grams in place of these words. Therefore, the grammatical method enhances the position and dimension of the data set. It also uses a unigram, a bigram, and a trigram that compares the pattern. Therefore, these words are selected as features.

In order to generate the dataset, word frequencies were also used. Not all credentials of words are valuable information. Most of the research often avoided prevalent words, did not provide helpful information about the unit and group, and described the utmost general nouns relative to the human language in which most of the text lies. Hence, the general words were isolated from the data set by eliminating high-frequency data. Therefore, the textual dataset’s lowest and maximum cut-off values had to be chosen to find the most refined set of characteristics.

5. Materials and Methods

There are two primary learning methods in ML. One is supervised learning and the second is unsupervised learning. The designer provides the system with learning data for training the system in supervised learning, and in unsupervised learning, the system learns the patterns from the data itself. As regards the present situation, the dataset is shapeless and unstructured. The supervised learning mode is much more pertinent. So, the value of A and value of B were selected as frequency-limited, i.e., lower and upper cut-off values that maximize efficiency.

Figure 3 depicts the frequency ranges of the selected data.

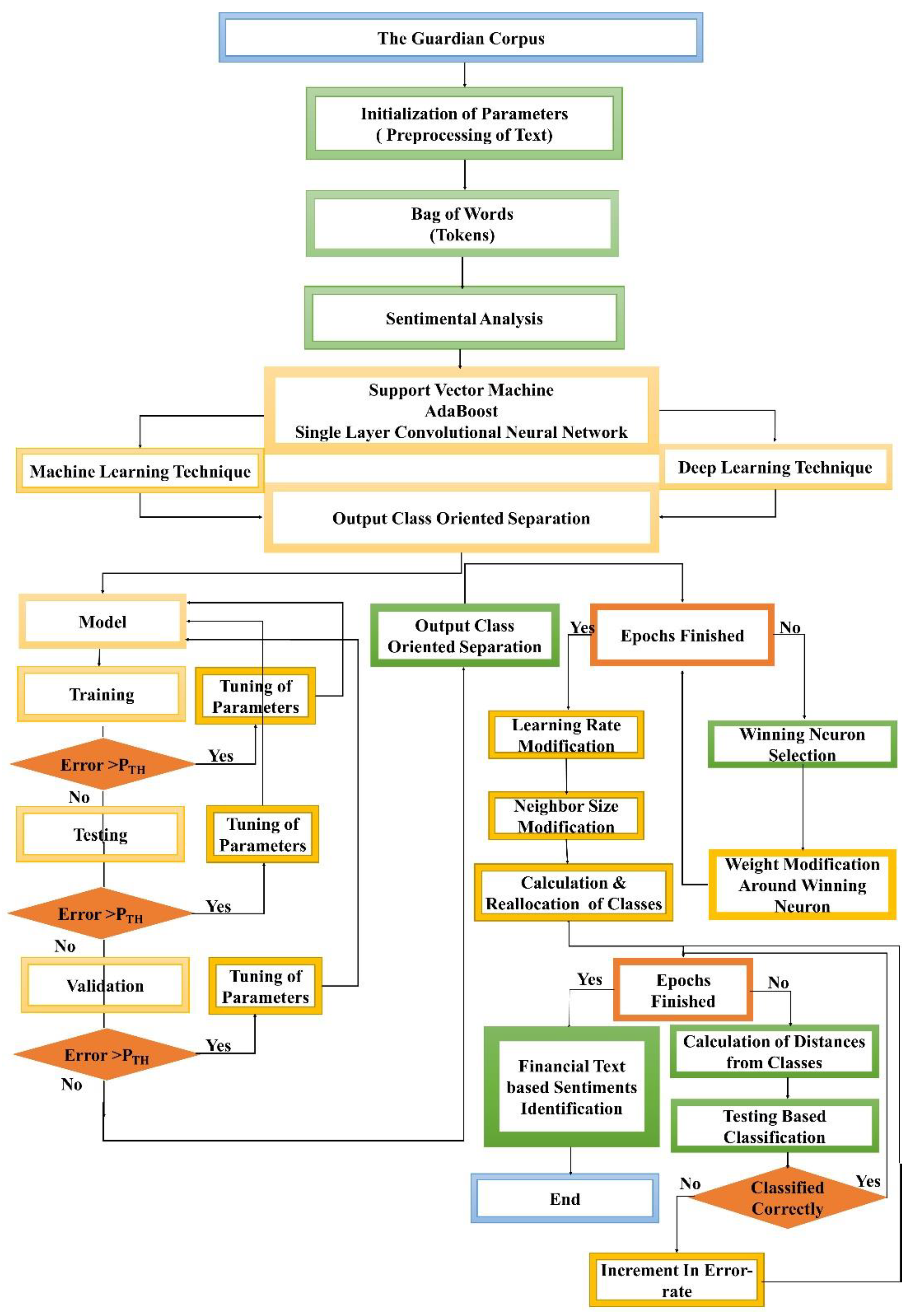

The proposed work is about text-based sentimental classification using ML techniques: SVM, AdaBoost, and SLCNN. For this purpose, we selected the financial text-based dataset from Guardian containing sentiments. One of the key purposes of this study is to classify public sentiments based on the text primarily used in our daily lives for reporting and writing. Guardian API offers the capability to retrieve relevant headlines and content from articles for a specific query in the specified format. Every query might make more than two thousand text-based results simultaneously. At the same time, these text-based headlines and content are classified into four primary sentimental attributes: neutral, glad, depressed, and annoyed. Proposed attributes and values (text-based) are shown in

Table 2.

The sentimental classification for mental health monitoring based on the financial text published in the Guardian newspaper online is an emergent area that wants more consideration. Firstly, the Guardian dataset is collected through Guardian API, and then preprocessed. Secondly, the unnecessary stuff is removed from the perfect text, and any feature selection approach is deployed. Thirdly, most data are labeled manually as neutral, glad, depressed, and annoyed headlines and content for preparing the dataset and then its division into two categories training dataset and testing dataset. Finally, the extracted features and their values in the training dataset are used as input to the identified classifiers for modeling and classifying text into the defined four sentiments. Every processing phase is deliberated comprehensively into the below-mentioned subparts. Numerous researchers have also tested various methods for supervised learning, and it has been recognized that the techniques below deliver the most significant and acceptable results compared to other techniques mentioned in the existing literature. Based on their comparative performance for textual data analysis, we have identified three strategies (two ML techniques, SVM and AdaBoost, and one DL technique, SLCNN) in this suggested study.

Figure 4 depicts the steps for carrying out the process of financial text-based sentimental classification and mental health monitoring.

The whole research work was carried out in Orange 3.30.2 (University of Ljubljana, Ljubljana, Slovenia). We collected the Guardian dataset from December 2020 to December 2021 using the Guardian API; it contains different types of headlines and content in textual format. In our daily lives, almost everyone reads the newspaper on digital platforms, representing public opinion about domestic or social issues. In this study, we just considered public financial matters gathered through the Guardian API in the form of text. This news consists of neutral, positive, negative, or compound gestures and affects public sentiments. Initially, we preprocessed these headlines and content-based textual datasets and converted them to a refined dataset.

5.1. System Specifications

Experiments were conducted on Lenovo Mobile Workstation equipped with Processor: 11th Generation Intel Core i9, Operating System: Windows 11 Pro 64, Memory: 128 GB DDR4, Hard Drive: 2 TB SSD, Graphics: NVIDIA RTX A4000. We used Anaconda Prompt (Jupiter notebook) and Orange-v3.5 tools for the experimentation and results of our proposed scheme, and the language used in it is Python.

5.2. Preprocessing

The sentiment dataset was obtained via the Guardian API and contains headlines and content that are neutral, positive, negative, or compound. The anticipated preprocessing scheme divides the text into smaller units (tokens), filters them, performs normalization (stemming, lemmatization), generates n-grams, and labels tokens with part-of-speech labels. The configurations and parameters for preprocessing are listed in

Table 3.

5.3. Sentiment Analysis

Sentiment analysis forecasts neutral, polar, and compound sentiments for each headline and paragraph in the Guardian newspaper. We used the Vader sentiment modules from Natural Language Toolkit and the Data Science Lab’s multilingual sentiment lexicons. They are all lexicon-based. Vader is only able to communicate in English. Then, using Corpus Viewer, we can view four new features that have been appended to each financial news item via the Vader method: positive score, negative score, neutral score, and compound score. We can see the new features below where the compound was sorted by score. Compound sentiment measures the overall sentiment of financial news, where −1 indicates the most negative sentiment and 1 indicates the most positive sentiment, as shown in

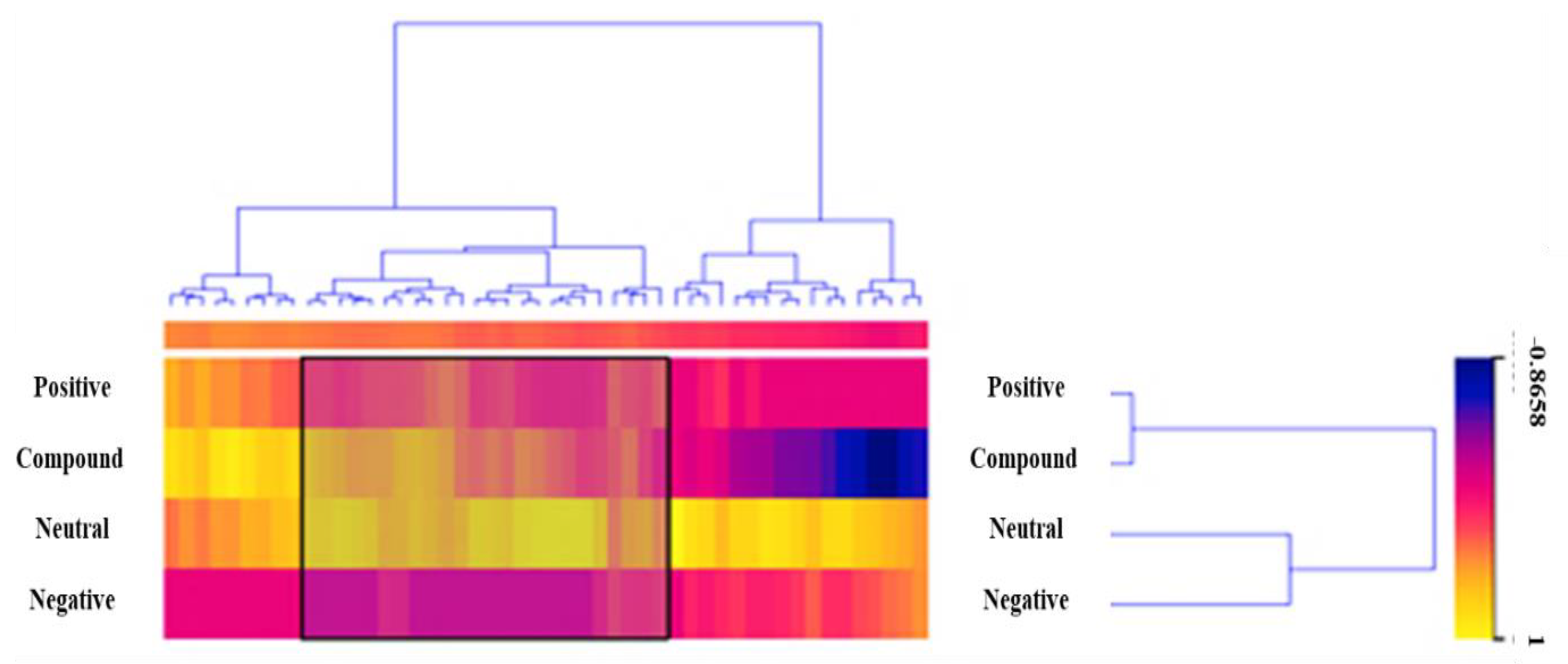

Table 4.

Now it’s time to visualize the data. We have some features that we are not interested in at the moment, and we will remove them using Select Columns. Due to the fact that we removed News ID via Select Columns. Then we can reduce the size of our dataset to make it easier to visualize. Data Sampler should be used to retain a random 10% of headlines and content. There were 3085 headlines and content related to financial news, but we visualized only 309 using a Heat Map. Now that the dataset has been filtered, it is passed to the Heat Map. Merge by k-means is used to group headlines and content with the same polarity. Rows and columns then cluster the data to create a visualization of similar headlines and content, as illustrated in

Figure 5.

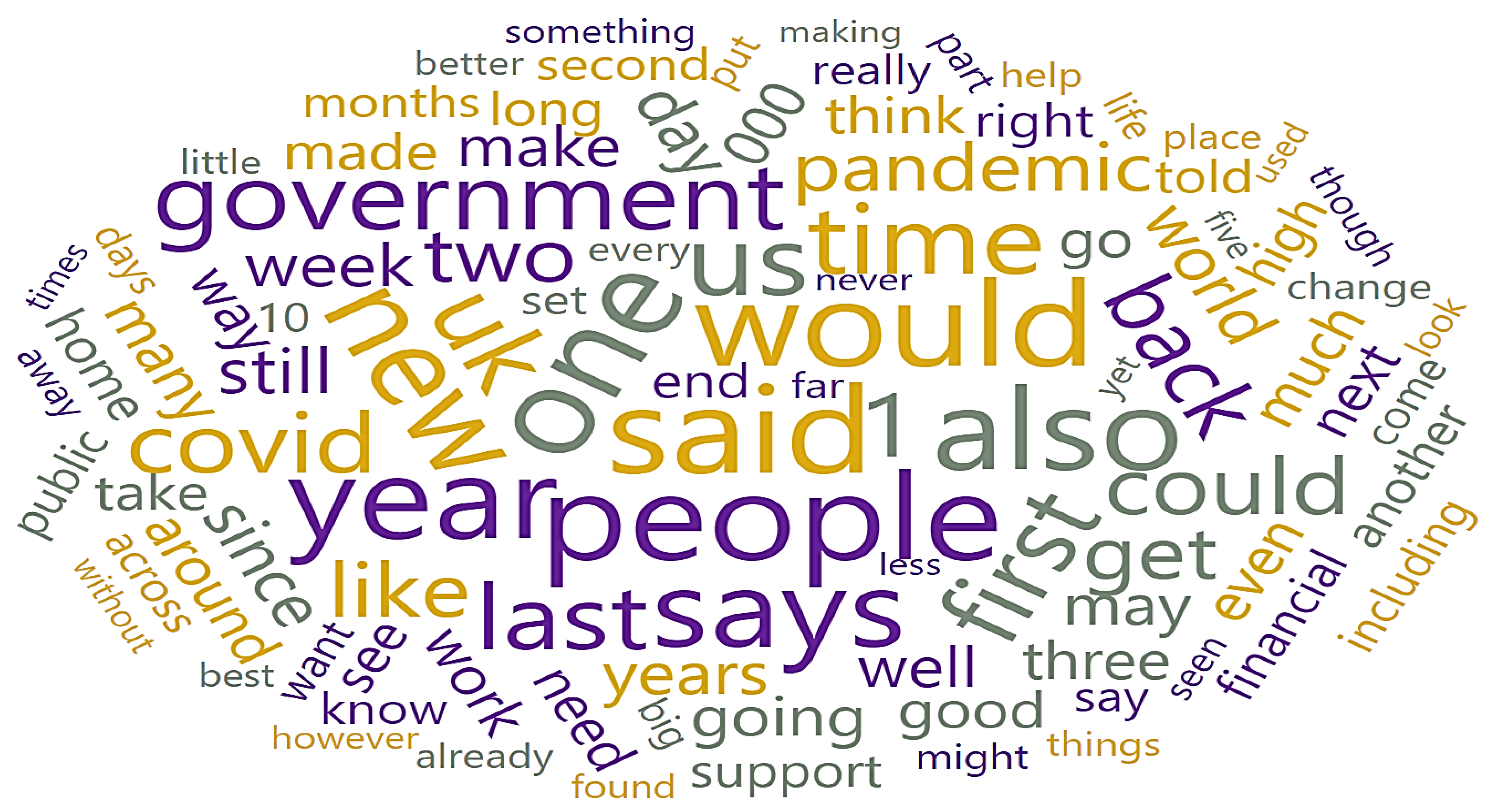

5.4. Word Cloud

As illustrated in

Figure 6, a Word Cloud displays tokens from the corpus, with their size denoting the word’s frequency in the corpus or an average bag of words count that summarizes the frequency of use of each word (weight). The outputs contain a subset of the word cloud’s tokens.

5.5. Bag of Words

The Bag of Words model generates a corpus of financial news with word counts. The count can be absolute, binary (includes or excludes), or sublinear (logarithm of the term frequency).

It evaluates ML algorithms. Numerous sampling strategies are available, including using distinct test data. It accomplishes two tasks. To begin, it displays a table containing various performance metrics for classifiers, such as classification accuracy and area under the curve. Second, it generates evaluation results those other modules can use to analyze classifier performance, such as ROC analysis or confusion matrix.

5.6. Text Mining through Classification Techniques

In this study, SVM, AdaBoost, and SLCNN models were deployed to classify financial news-related sentiments into four categories: neutral, glad, depressed, and annoyed, to monitor mental health. The model was tested for its ability to classify four sentiments. For model evaluation, quantitative measurements, such as area under the curve (AUC), classification accuracy (CA), F1-measure, precision, and recall. The whole process is explained in Algorithm 1:

| Algorithm 1 |

Input data:Transformation of input text- 2.

Convertion of all text to lowercase - 3.

Removal of accents - 4.

Parsing of HTML tags - 5.

Removal of URLs

Tokenization of text- 6.

Retention of complete sentences - 7.

Whitespace separation - 8.

Word & Punctuation separation

Normalization through stemming and lemmatization- 9.

Porter Stemmer application or Snowball Stemmer application - 10.

WordNet Lemmatizer application for a network of cognitive synonyms to tokens - 11.

UDPipe application for normalization of data - 12.

Lemmagen application for normalization of data - 13.

Filteration of words - 14.

Stopwords removal from text - 15.

N-grams range converts tokens to n-grams - 16.

Part-of-Speech tagger performs part-of-speech tagging on tokens - 17.

Averaged Perceptron Tagger uses Matthew Honnibal’s averaged perceptron tagger to perform POS tagging - 18.

Treebank POS Tagger (MaxEnt) performs POS tagging using a Penn Treebank trained model

Term Frequency Identification- 19.

Term frequency identification to retain most frequent words appears in a document - 20.

Binary checks for the presence or absence of a word in the document - 21.

Sublinear takes the logarithm of the term frequency into account

Classification using SVM, AdaBoost, and SLCNN- 22.

Sentiment classification

|

Figure 7 summarizes this triad procedure comprehensively, displaying the overall flowchart of the proposed work to illustrate how the system learns through the ML techniques (SVM and AdaBoost) and DL technique (SLCNN) to classify the financial text into the identified sentimental classes. Finally, an optimized mental health identification solution is developed through sentimental analysis of the financial text.

7. Discussion

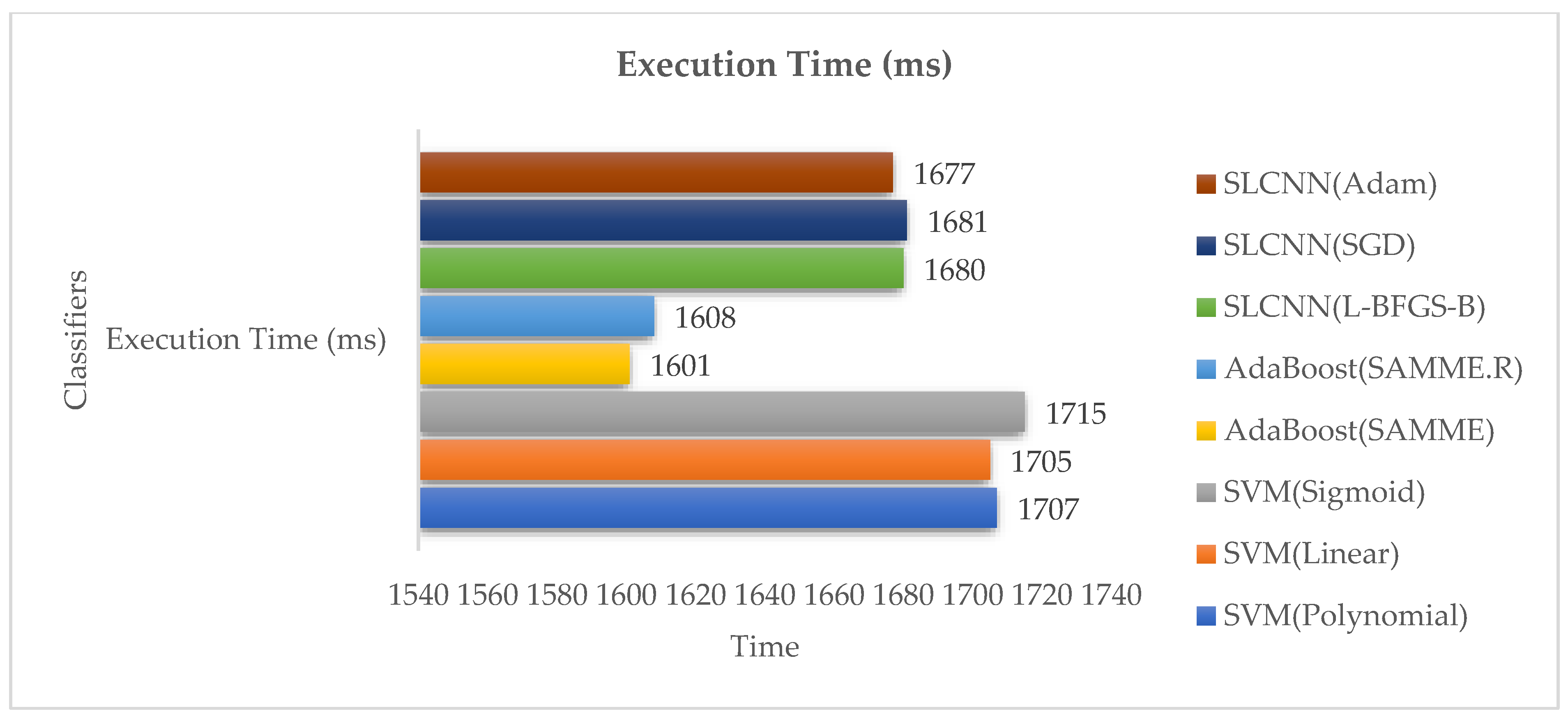

SVM is more compact in memory and computationally efficient due to its single instance training procedure. It can accelerate convergence for larger datasets by utilizing more prevalent parameter tweaks. The steps taken towards the loss function minima exhibit instabilities because of the constant updates, which can cause shifting away from the local minimums of the loss function, and ultimately it may take longer to approximate the loss function minima. Frequent updates use all of the resources at their disposal to process one training sample at a time, thus becoming computationally expensive. This approach does not take advantage of vectorized operations because it only works with one sample at a time. Adaboost is less prone to overfitting than other methods since the input parameters are not simultaneously tuned. Adaboost can be used to increase the accuracy of weak classifiers. Adaboost’s primary disadvantage is that it requires a high-quality dataset. Before implementing an Adaboost algorithm, avoiding noisy data and outliers is necessary. The SLCNN is exceptionally accurate in classifying text. Detects critical traits automatically and without human intervention. Additionally, it is capable of weight sharing and its reliance on initial parameter adjustment (for a good point) enabled SLCNN to avoid local optima. Thus, a shortcoming of SLCNNs is the significant effort needed to initialize them appropriately for the given task. This would necessitate some domain expertise.

In addition, we evaluated the performance of SLCNN in comparison to that of two widely used and well-known ML classifiers. In numerous studies of text classification, DL models have been compared to ML models, but no comparisons have been performed in the literature for financial text classification because DL models have not been employed for sentimental analysis through financial text classification. SLCNN outperforms the other models for two reasons: (a) its multiple filters of varying sizes and structure of hidden layers that captured high-level features from the text; and (b) convolving filters of variable size (window size) can extract variable-length features (n-grams), making it more suitable for financial text classification for mental health monitoring via sentimental analysis. In contrast to ML classifiers, the SLCNN classifier achieves a maximum accuracy of 93.9% on tens of thousands of characteristics from a finance related textual dataset. The primary issue with ML classifiers is that their effectiveness depends on feature selection methods, and comparative studies have shown that no feature selection strategy is effective with all ML classifiers [

71].

So, all the above-mentioned pros and cons, along with the experimental results, help us identify an optimized technique for classification using the Guardian-based textual dataset related to financial matters of the public and provide us insights about public mental health.

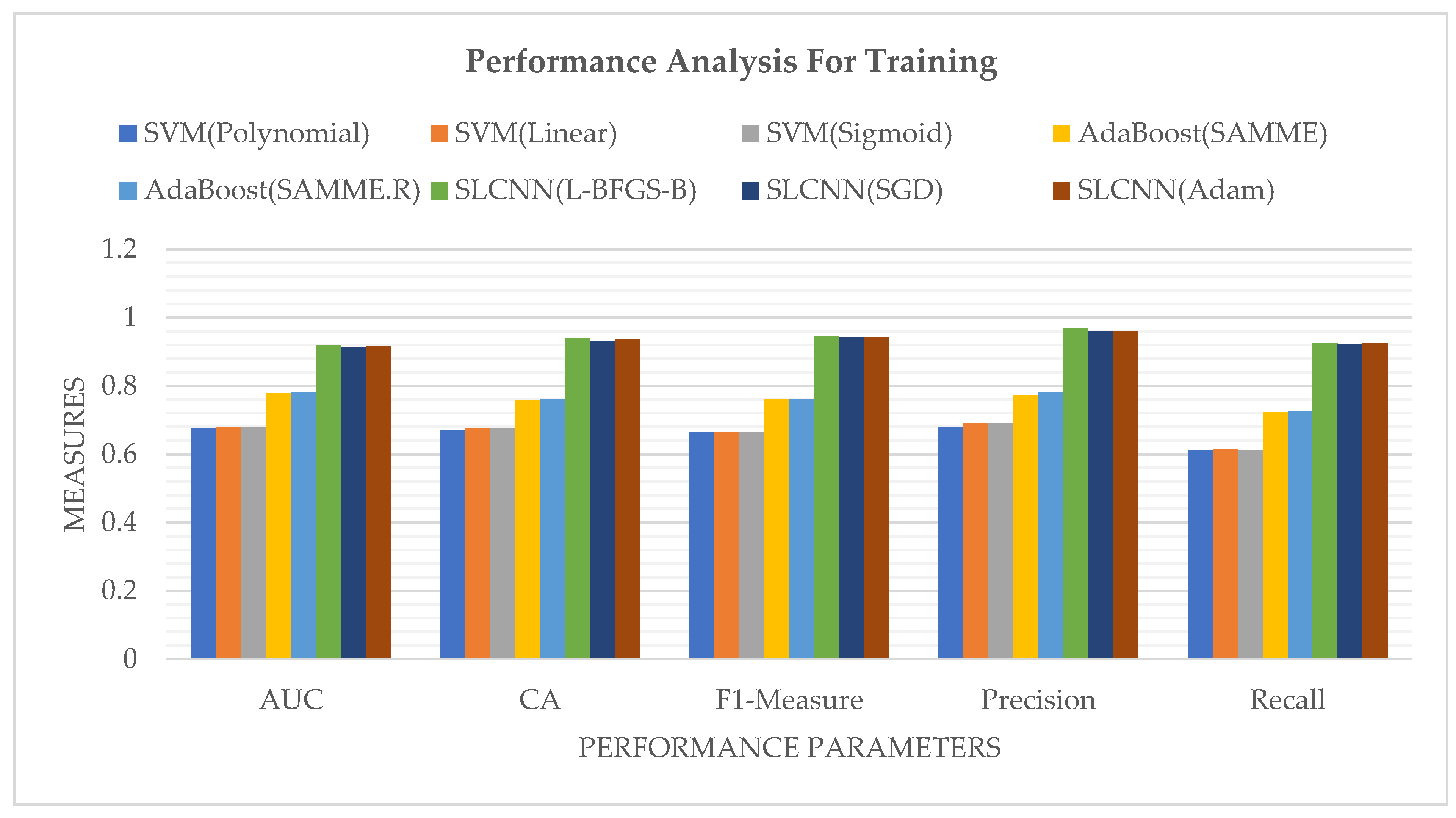

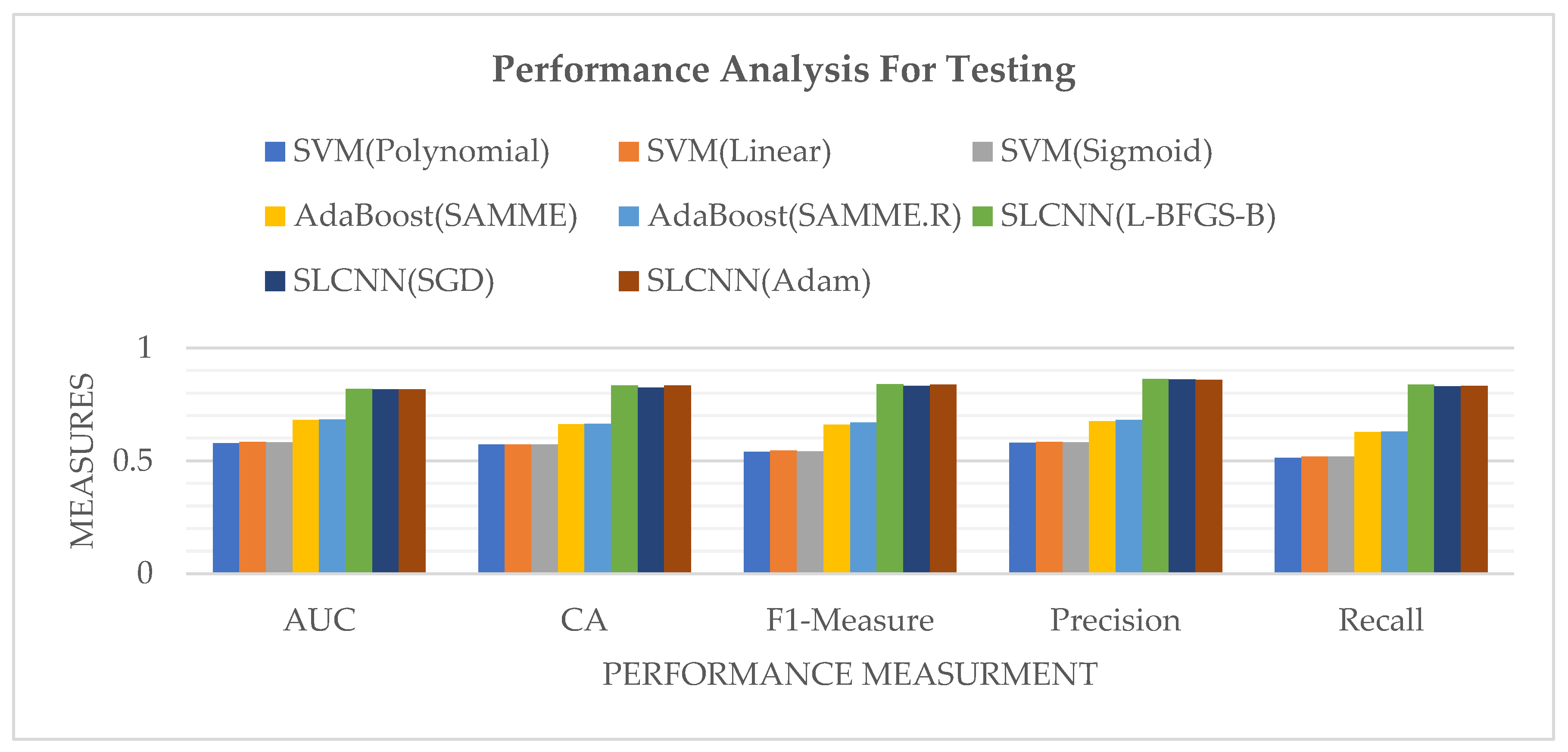

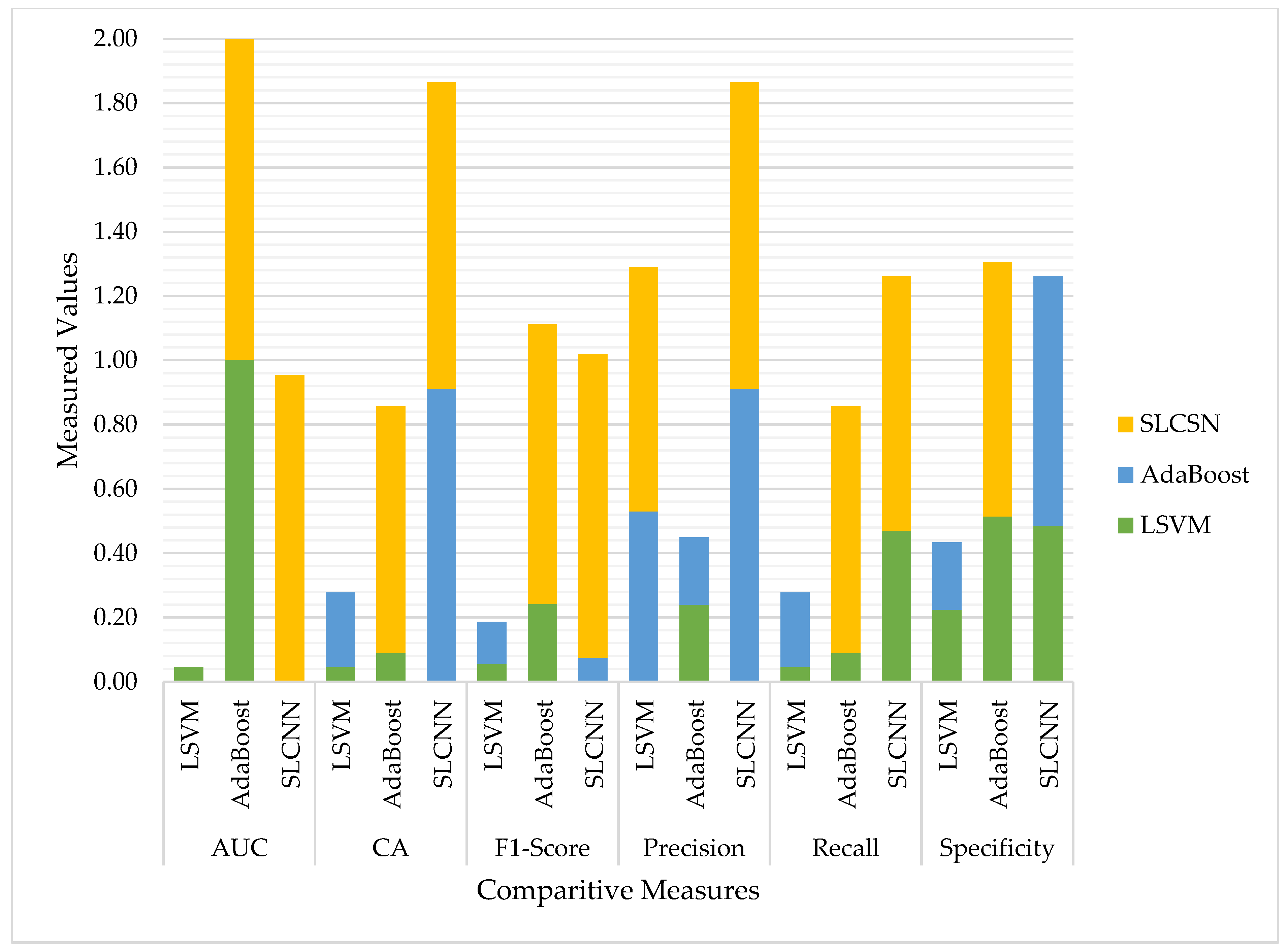

Table 10 compares the performance of the SLCNN and AdaBoost networks to the SVM network for the Guardian dataset containing financial text. In general, increasing the structural complexity of ML models improves sentiment classification performance. To begin, incorporating the SVM and AdaBoost modules enhances classification performance, particularly for financial textual inputs. The AUCs for SVM and AdaBoost were significantly lower than those for SLCNN with textual inputs on the same dataset.

On the other hand, the CA of textual inputs with SLCNN and AdaBoost was generally greater than that of textual inputs with SVM. Additionally, we compared the three models identified using SVM, AdaBoost, and SLCNN using six performance metrics: AUC, CA, F1-Measure, Precision, Recall, and Specificity. The results indicated that SLCNN was more effective than the others in our experiment.

Hence, this is a quantitative analysis of public sentiments obtained through newspaper articles published on DM platforms such as the Guardian in the aftermath of the 2020 COVID-19 outbreak. Using data from the Guardian, we examine how the general public feels about different financial policies and challenges as well as their mental health. We discover that, despite the drawbacks of lockdowns, public opinion is more positive than negative. Although the majority of the headlines and content are considered neutral, the rest is primarily positive. Additionally, the fact that none of the examples have more negative than positive thoughts is comforting. Due to the regulations implemented by financial and political institutions, the results of these assessments can be used to better understand how Guardian users perceive their financial conditions and mental health. The most recent findings offer a starting point for assessing public debate on financial issues as well as recommendations for leading a healthy lifestyle in a period of challenging economic conditions brought on by the pandemic. The information obtained can assist public health officials and policymakers in determining how individuals are coping with financial stress during these extraordinary times and what types of relief should be made available to the public for the betterment of mental health.

8. Conclusions, Limitations, and Future Work

SA is a subfield of NLP aiming to classify the sentiment expressed in a free text automatically. It has found practical applications across a wide range of societal contexts, including marketing, economy, public health, and politics. This study aimed to establish the state of the art in SA related to health and well-being by using ML techniques. We aimed to capture the perspective of healthy as well as individuals whose health and well-being are affected, utilizing the available financial dataset of The Guardian newspaper, based on the financial policies of the government and non-government organizations.

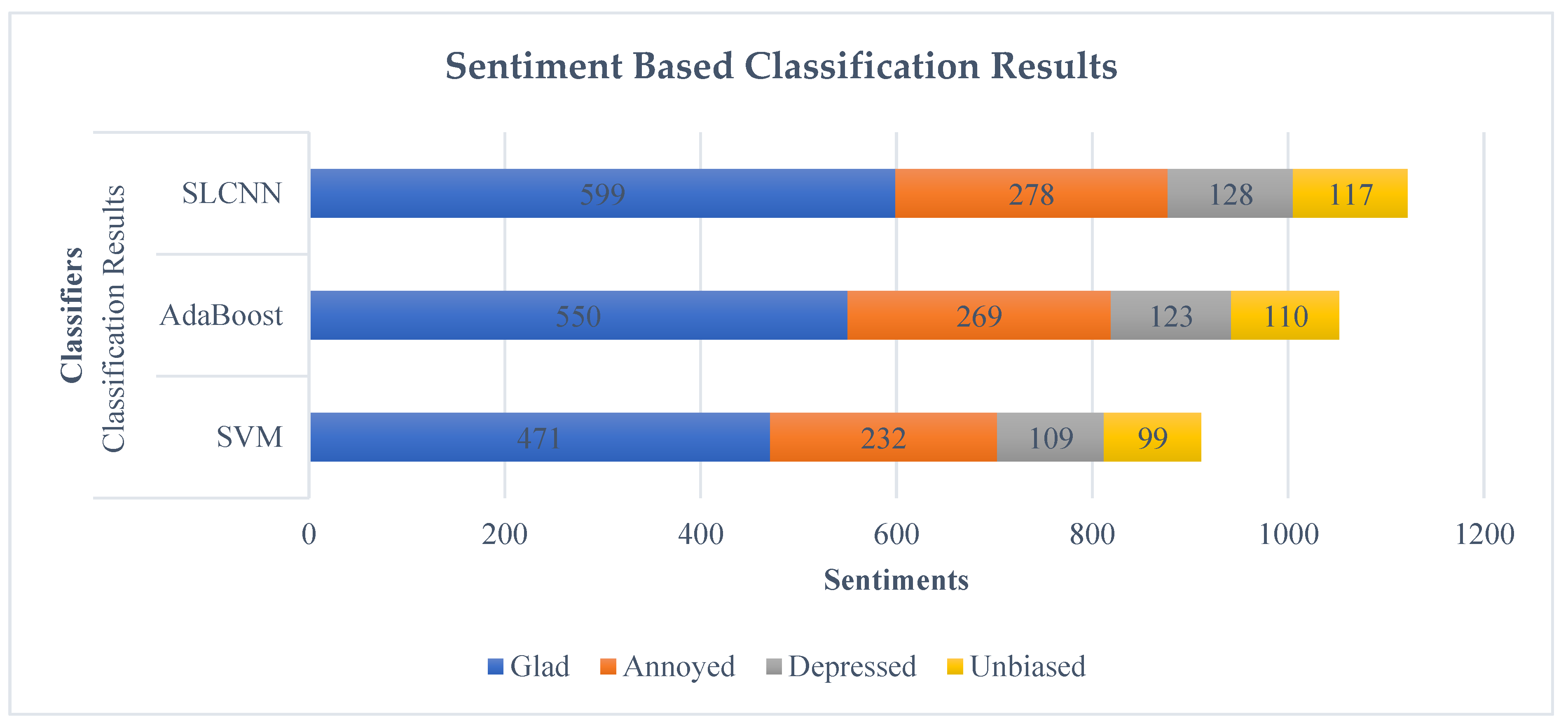

The dataset was collected through Guardian API and individual sentiments were classified based on four primary sentiments, i.e., neutral, glad, depressed, and annoyed. We compared three ML based techniques, namely SVM, AdaBoost, and SLCNN, which quickly classified the given text-based dataset into one of four selected individual sentiments. Owing to the daily growth and expansion of ML methods, numerous researchers tend to use these techniques in classifying textual data. SLCNN is considered the best classification method because it has 83.4% accuracy, whereas SVM and AdaBoost have 57.2% and 66.4%, respectively. Hence, it is used when the dependent variable or dependent target is categorical.

The limitations of this investigation include the magnitude of the dataset and the timeframe during which the data were gathered. In order to track how perceptions shift over time, it would be interesting to have data spanning a wider time frame, particularly as the pandemic comes to an end.

Another exciting future path would be to categorize headlines and material according to emotions, such as neutral, glad, depressed, and annoyed, to correctly perceive and reveal the feelings of headlines and content without labeling them.