Development of a Quantitative Preference Instrument for Person-Centered Dementia Care—Stage 2: Insights from a Formative Qualitative Study to Design and Pretest a Dementia-Friendly Analytic Hierarchy Process Survey

Abstract

1. Introduction

2. Materials and Methods

2.1. Qualitative Approach

2.2. Theoretical Framework

2.3. Researcher Characteristics and Reflexivity

2.4. Sampling Strategy and Process

2.5. Sampling Adequacy

2.6. Sample

2.7. Ethical Review

2.8. Data Collection Methods, Sources and Instruments

2.9. Data Processing and Analysis, Incl. Techniques to Enhance Trustworthiness

3. Results

3.1. Content

3.1.1. Survey Title Page for PlwD

3.1.2. Survey Title Page for Physicians

3.1.3. Description of (Sub)Criteria for PlwD

3.1.4. Formerly Merged Criteria Demerged

3.1.5. AHP Axiom 2

3.1.6. Introduction of Sub-Criteria in the PlwD Version of the AHP Survey

3.1.7. Appropriateness of (Sub)Criteria

3.1.8. Validity and Inconsistency in the AHP Survey

3.1.9. Heterogeneity of Respondents

3.1.10. Sociodemographic Questions for PlwD

3.2. Format

3.2.1. Outline of the Survey

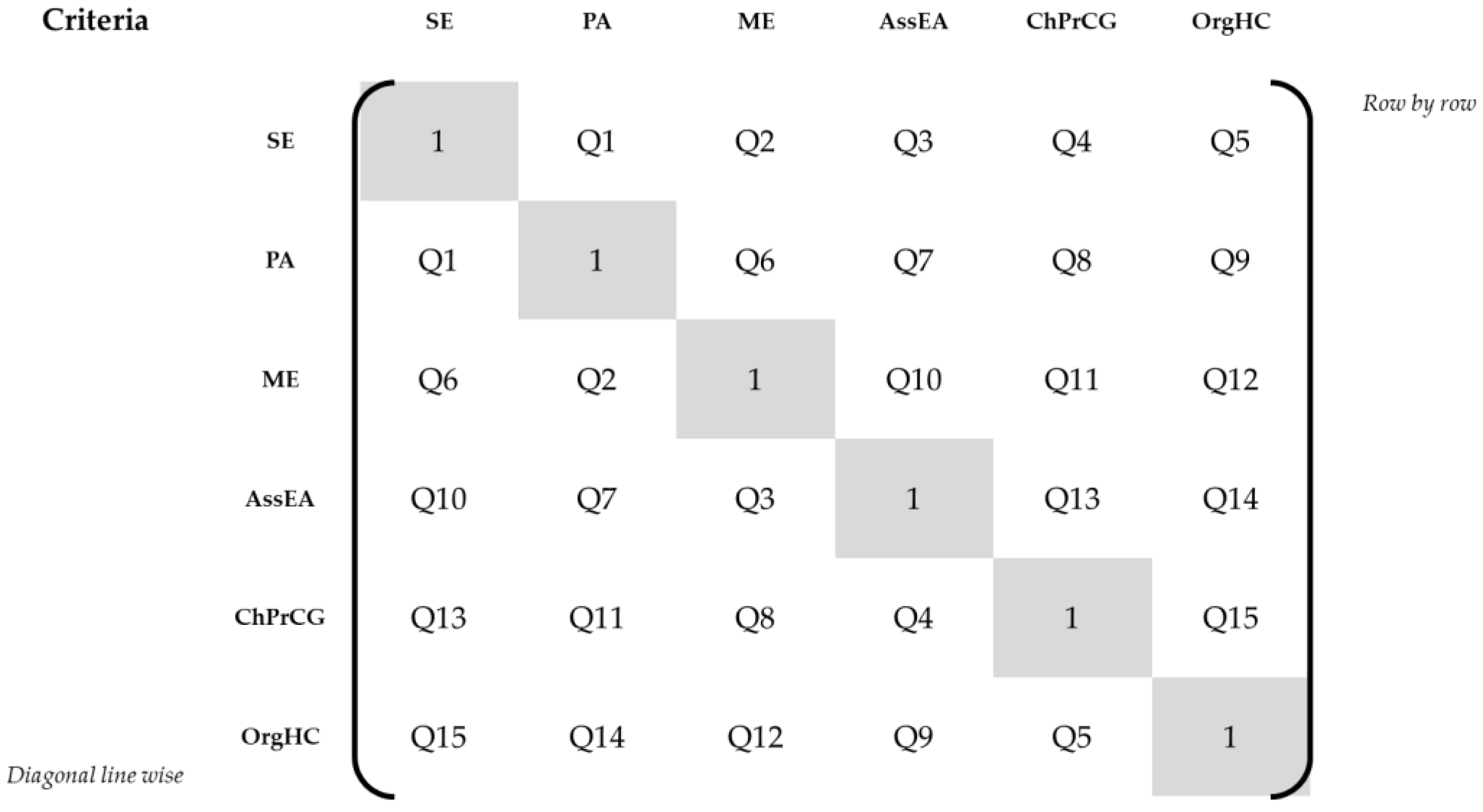

3.2.2. Sequence of Criteria-Related Pairwise Comparison Questions

3.2.3. Length of Survey

3.2.4. Formatting

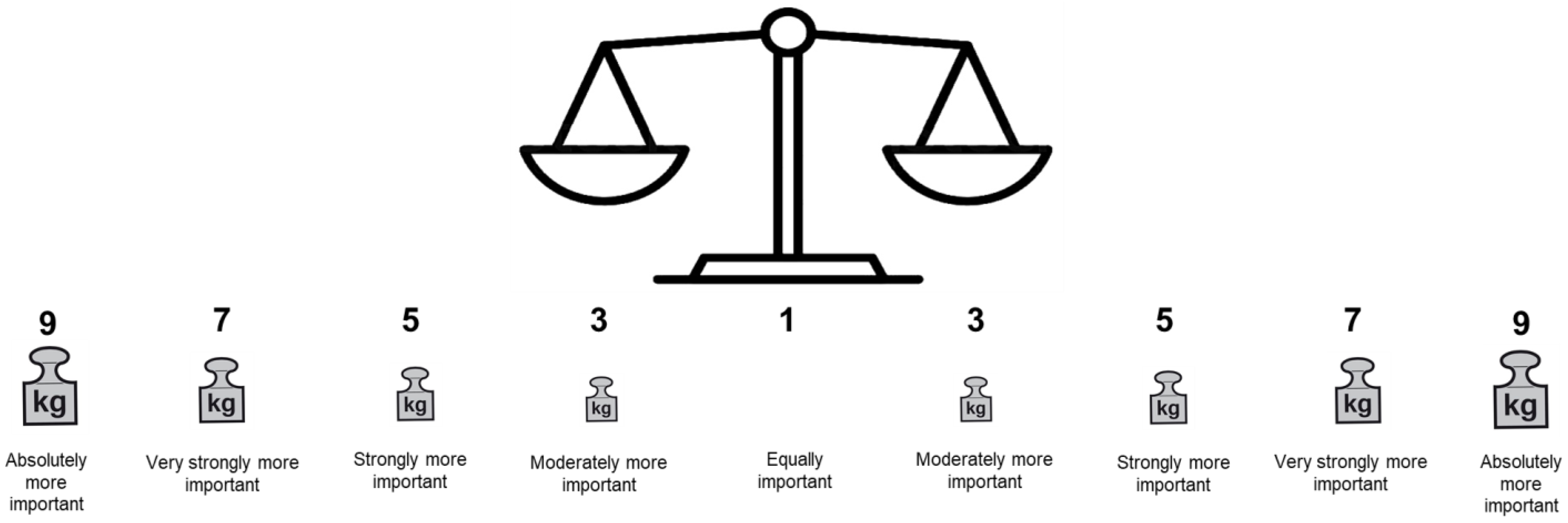

3.2.5. Layout: Transformation of the AHP Rating Scale

3.2.6. Explanation of Survey Procedure with Pairwise Comparisons

3.2.7. Simplification of Pairwise Comparisons

3.2.8. Assistance during Patient Survey

3.2.9. Perspective during Responses to Pairwise Comparisons

3.3. Language

3.3.1. Laypeople Words for “Criteria” and “Sub-Criteria” in a German AHP Survey

3.3.2. Avoid Long Sentences

3.3.3. Choice of Words Matters

3.3.4. Use of Icons as Visual Aids

4. Discussion

Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Prince, M.; Comas-Herrera, A.; Knapp, M.; Guerchet, M.; Karagiannidou, M. World Alzheimer Report 2016. Improving Healthcare for People Living with Dementia: Coverage, Quality and Costs Now and in the Future; Alzheimer’s Disease International: London, UK, 2016.

- World Health Organization. Dementia Fact Sheet. Available online: https://www.who.int/news-room/fact-sheets/detail/dementia (accessed on 27 April 2022).

- Vos, T.; Lim, S.S.; Abbafati, C.; Abbas, K.M.; Abbasi, M.; Abbasifard, M.; Abbasi-Kangevari, M.; Abbastabar, H.; Abd-Allah, F.; Abdelalim, A.; et al. Global burden of 369 diseases and injuries in 204 countries and territories, 1990–2019: A systematic analysis for the Global Burden of Disease Study 2019. Lancet 2020, 396, 1204–1222.

- Prince, M.; Bryce, R.; Ferri, C. World Alzheimer Report 2011. The Benefits of Early Diagnosis and Intervention; Alzheimer’s Disease International: London, UK, 2011.

- Alzheimer’s Association. Dementia Care Practice Recommendations. Committed to Improving the Quality of Life for People Living with Dementia. Available online: https://www.alz.org/professionals/professional-providers/dementia_care_practice_recommendations (accessed on 27 April 2022).

- National Institute for Health and Care Excellence. Dementia: Assessment, Management and Support for People Living with Dementia and Their Carers (NG97); National Institute for Health and Care Excellence: London, UK, 2018.

- The National Board of Health and Welfare. Nationella Riktlinjer för Vård Och Omsorg Vid Demenssjukdom. Stöd för Styrning Och Ledning; The National Board of Health and Welfare Sweden: Stockholm, Sweden, 2017.

- NHMRC Partnership Centre for Dealing with Cognitive and Related Functional Decline in Older People. Clinical Practice Guidelines and Principles of Care for People with Dementia; NHMRC Partnership Centre for Dealing with Cognitive and Related Functional Decline in Older People: Sydney, Australia, 2016.

- Flanders Centre of Expertise on Dementia. You and Me, Together We Are Human—A Reference Framework for Quality of Life, Housing and Care for People with Dementia; EPO Publishing Company: Antwerpen, Belgium, 2018.

- Savaskan, E.; Bopp-Kistler, I.; Buerge, M.; Fischlin, R.; Georgescu, D.; Giardini, U.; Hatzinger, M.; Hemmeter, U.; Justiniano, I.; Kressig, R.W.; et al. Empfehlungen zur Diagnostik und Therapie der behavioralen und psychologischen Symptome der Demenz (BPSD). Praxis 2014, 103, 135–148.

- Danish Health Authority. National Klinisk Retningslinje for Forebyggelse og Behandling af Adfærdsmæssige og Psykiske Symptomer hos Personer med Demens; Danish Health Authority: Copenhagen, Denmark, 2019.

- Norwegian Ministry of Health and Care Services. Dementia Plan 2020—A More Dementia-Friendly Society; Norwegian Ministry of Health and Care Services: Oslo, Norway, 2015.

- Morgan, S.; Yoder, L. A concept analysis of person-centered care. J. Holist. Nurs. 2012, 30, 6–15.

- Kitwood, T. Dementia Reconsidered: The Person Comes First; Open University Press: Buckingham, UK, 1997; Volume 20.

- Kitwood, T.; Bredin, K. Towards a theory of dementia care: Personhood and well-being. Ageing Soc. 1992, 12, 269–287.

- Wehrmann, H.; Michalowsky, B.; Lepper, S.; Mohr, W.; Raedke, A.; Hoffmann, W. Priorities and preferences of people living with dementia or cognitive impairment—A systematic review. Patient Prefer. Adherence 2021, 15, 2793–2807.

- Lepper, S.; Rädke, A.; Wehrmann, H.; Michalowsky, B.; Hoffmann, W. Preferences of cognitively impaired patients and patients living with dementia: A systematic review of quantitative patient preference studies. J. Alzheimer’s Dis. 2020, 77, 885–901.

- Ho, M.H.; Chang, H.R.; Liu, M.F.; Chien, H.W.; Tang, L.Y.; Chan, S.Y.; Liu, S.H.; John, S.; Traynor, V. Decision-making in people with dementia or mild cognitive impairment: A narrative review of decision-making tools. J. Am. Med. Dir. Assoc. 2021, 22, 2056–2062.e2054.

- Harrison Dening, K.; King, M.; Jones, L.; Vickerstaff, V.; Sampson, E.L. Correction: Advance care planning in dementia: Do family carers know the treatment preferences of people with early dementia? PLoS ONE 2016, 11, e0161142.

- Van Haitsma, K.; Curyto, K.; Spector, A.; Towsley, G.; Kleban, M.; Carpenter, B.; Ruckdeschel, K.; Feldman, P.H.; Koren, M.J. The preferences for everyday living inventory: Scale development and description of psychosocial preferences responses in community-dwelling elders. Gerontologist 2013, 53, 582–595.

- Mühlbacher, A. Ohne Patientenpräferenzen kein sinnvoller Wettbewerb. Dtsch. Ärzteblatt 2017, 114, 1584–1590.

- Groenewoud, S.; Van Exel, N.J.A.; Bobinac, A.; Berg, M.; Huijsman, R.; Stolk, E.A. What influences patients’ decisions when choosing a health care provider? Measuring preferences of patients with knee arthrosis, chronic depression, or Alzheimer’s disease, using discrete choice experiments. Health Serv. Res. 2015, 50, 1941–1972.

- Mühlbacher, A.C.; Kaczynski, A.; Zweifel, P.; Johnson, F.R. Experimental measurement of preferences in health and healthcare using best-worst scaling: An overview. Health Econ. Rev. 2016, 6, 2.

- Mühlbacher, A.C.; Kaczynski, A. Der Analytic Hierarchy Process (AHP): Eine Methode zur Entscheidungsunterstützung im Gesundheitswesen. Pharm. Ger. Res. Artic. 2013, 11, 119–132.

- Danner, M.; Hummel, J.M.; Volz, F.; van Manen, J.G.; Wiegard, B.; Dintsios, C.-M.; Bastian, H.; Gerber, A.; IJzerman, M.J. Integrating patients’ views into health technology assessment: Analytic hierarchy process (AHP) as a method to elicit patient preferences. Int. J. Technol. Assess. Health Care 2011, 27, 369–375.

- Thokala, P.; Devlin, N.; Marsh, K.; Baltussen, R.; Boysen, M.; Kalo, Z.; Longrenn, T.; Mussen, F.; Peacock, S.; Watkins, J. Multiple criteria decision analysis for health care decision making—An introduction: Report 1 of the ISPOR MCDA Emerging Good Practices Task Force. Value Health 2016, 19, 1–13.

- Marsh, K.; IJzerman, M.; Thokala, P.; Baltussen, R.; Boysen, M.; Kaló, Z.; Lönngren, T.; Mussen, F.; Peacock, S.; Watkins, J. Multiple criteria decision analysis for health care decision making—Emerging good practices: Report 2 of the ISPOR MCDA Emerging Good Practices Task Force. Value Health 2016, 19, 125–137.

- Hollin, I.L.; Craig, B.M.; Coast, J.; Beusterien, K.; Vass, C.; DiSantostefano, R.; Peay, H. Reporting formative qualitative research to support the development of quantitative preference study protocols and corresponding survey instruments: Guidelines for authors and reviewers. Patient-Patient-Cent. Outcomes Res. 2020, 13, 121–136.

- Kløjgaard, M.E.; Bech, M.; Søgaard, R. Designing a stated choice experiment: The value of a qualitative process. J. Choice Model. 2012, 5, 1–18.

- Alzheimer’s Society. Tips for Dementia-Friendly Surveys. Tips from People with Dementia on How They Like to Use Surveys in Different Ways Including Face-to-Face, Video, Paper, Telephone and Online. Available online: https://www.alzheimers.org.uk/dementia-professionals/dementia-experience-toolkit/research-methods/tips-dementia-friendly-surveys (accessed on 27 April 2022).

- Mohr, W.; Rädke, A.; Afi, A.; Mühlichen, F.; Platen, M.; Michalowsky, B.; Hoffmann, W. Development of a quantitative instrument to elicit patient preferences for person-centered dementia care stage 1: A formative qualitative study to identify patient relevant criteria for experimental design of an analytic hierarchy process. Int. J. Environ. Res. Public Health 2022, 19, 7629.

- Drennan, J. Cognitive interviewing: Verbal data in the design and pretesting of questionnaires. J. Adv. Nurs. 2003, 42, 57–63.

- Green, J.; Thorogood, N. Qualitative Methods for Health Research, 3rd ed.; SAGE Publications, Inc: Thousand Oaks, CA, USA, 2018.

- Kleinke, F.; Michalowsky, B.; Rädke, A.; Platen, M.; Mühlichen, F.; Scharf, A.; Mohr, W.; Penndorf, P.; Bahls, T.; van den Berg, N.; et al. Advanced nursing practice and interprofessional dementia care (independent): Study protocol for a multi-center, cluster-randomized, controlled, interventional trial. Trials 2022, 23, 290.

- Eichler, T.; Thyrian, J.R.; Dreier, A.; Wucherer, D.; Köhler, L.; Fiß, T.; Böwing, G.; Michalowsky, B.; Hoffmann, W. Dementia care management: Going new ways in ambulant dementia care within a GP-based randomized controlled intervention trial. Int. Psychogeriatr. 2014, 26, 247–256.

- Van den Berg, N.; Heymann, R.; Meinke, C.; Baumeister, S.E.; Fleßa, S.; Hoffmann, W. Effect of the delegation of GP-home visits on the development of the number of patients in an ambulatory healthcare centre in Germany. BMC Health Serv. Res. 2012, 12, 355.

- Mohr, W.; Raedke, A.; Michalowsky, B.; Hoffmann, W. Elicitation of quantitative, choice-based preferences for person-centered care among people living with dementia in comparison to physicians’ judgements in Germany: Study protocol for the mixed-methods PreDemCare-study. BMC Geriatr. 2022, 22, 567.

- German Center for Neurodegenerative Diseases e.V. (DZNE). PreDemCare: Moving towards Person-Centered Care of People with Dementia—Elicitation of Patient and Physician Preferences for Care. Available online: https://www.dzne.de/en/research/studies/projects/predemcare/ (accessed on 27 April 2022).

- Creswell, J.W.; Clark, V.L.P. Designing and Conducting Mixed Methods Research, 3rd ed.; SAGE Publications, Inc: Thousand Oaks, CA, USA, 2017.

- Mohr, W.; Rädke, A.; Afi, A.; Edvardsson, D.; Mühlichen, F.; Platen, M.; Roes, M.; Michalowsky, B.; Hoffmann, W. Key intervention categories to provide person-centered dementia care: A systematic review of person-centered interventions. J. Alzheimer’s Dis. JAD 2021, 84, 343–366.

- Mühlbacher, A.C.; Kaczynski, A. Making good decisions in healthcare with multi-criteria decision analysis: The use, current research and future development of MCDA. Appl. Health Econ. Health Policy 2016, 14, 29–40.

- Kuruoglu, E.; Guldal, D.; Mevsim, V.; Gunvar, T. Which family physician should I choose? The analytic hierarchy process approach for ranking of criteria in the selection of a family physician. BMC Med. Inform. Decis. Mak. 2015, 15, 63.

- Bamberger, M.; Mabry, L. RealWorld Evaluation: Working Under Budget, Time, Data, and Political Constraints; SAGE Publications: Thousand Oaks, CA, USA, 2019.

- Asch, S.; Connor, S.E.; Hamilton, E.G.; Fox, S.A. Problems in recruiting community-based physicians for health services research. J. Gen. Intern. Med. 2000, 15, 591–599.

- Mühlbacher, A.C.; Rudolph, I.; Lincke, H.-J.; Nübling, M. Preferences for treatment of attention-deficit/hyperactivity disorder (ADHD): A discrete choice experiment. BMC Health Serv. Res. 2009, 9, 149.

- Mühlbacher, A.; Bethge, S.; Kaczynski, A.; Juhnke, C. Objective criteria in the medicinal therapy for type II diabetes: An analysis of the patients’ perspective with analytic hierarchy process and best-worst scaling. Gesundh. (Bundesverb. Der Arzte Des Offentlichen Gesundh.) 2015, 78, 326–336.

- Mühlbacher, A.C.; Bethge, S. Patients’ preferences: A discrete-choice experiment for treatment of non-small-cell lung cancer. Eur. J. Health Econ. 2015, 16, 657–670.

- Mühlbacher, A.C.; Kaczynski, A. The expert perspective in treatment of functional gastrointestinal conditions: A multi-criteria decision analysis using AHP and BWS. J. Multi-Criteria Decis. Anal. 2016, 23, 112–125.

- Mühlbacher, A.C.; Kaczynski, A.; Dippel, F.-W.; Bethge, S. Patient priorities for treatment attributes in adjunctive drug therapy of severe hypercholesterolemia in Germany: An analytic hierarchy process. Int. J. Technol. Assess. Health Care 2018, 34, 267–275.

- Weernink, M.G.; van Til, J.A.; Groothuis-Oudshoorn, C.G.; IJzerman, M.J. Patient and public preferences for treatment attributes in Parkinson’s disease. Patient-Patient-Cent. Outcomes Res. 2017, 10, 763–772.

- Kalbe, E.; Kessler, J.; Calabrese, P.; Smith, R.; Passmore, A.; Brand, M.A.; Bullock, R. DemTect: A new, sensitive cognitive screening test to support the diagnosis of mild cognitive impairment and early dementia. Int. J. Geriatr. Psychiatry 2004, 19, 136–143.

- Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 1975, 12, 189–198.

- Kessler, J.; Denzler, P.; Markowitsch, H. Mini-Mental-Status-Test (MMST). Deutsche Fassung; Hogrefe Testzentrale: Göttingen, Germany, 1990.

- Beuscher, L.; Grando, V.T. Challenges in conducting qualitative research with individuals with dementia. Res. Gerontol. Nurs. 2009, 2, 6–11.

- Danner, M.; Vennedey, V.; Hiligsmann, M.; Fauser, S.; Gross, C.; Stock, S. How well can analytic hierarchy process be used to elicit individual preferences? Insights from a survey in patients suffering from age-related macular degeneration. Patient 2016, 9, 481–492.

- Saaty, T.L. A scaling method for priorities in hierarchical structures. J. Math. Psychol. 1977, 15, 234–281.

- Hummel, J.M.; Bridges, J.F.P.; IJzerman, M.J. Group decision making with the analytic hierarchy process in benefit-risk assessment: A tutorial. Patient Patient-Cent. Outcomes Res. 2014, 7, 129–140.

- Lifesize, I. Lifesize Meeting Solutions. Available online: https://www.lifesize.com/en/meeting-solutions/ (accessed on 27 April 2022).

- Coast, J.; Al-Janabi, H.; Sutton, E.J.; Horrocks, S.A.; Vosper, A.J.; Swancutt, D.R.; Flynn, T.N. Using qualitative methods for attribute development for discrete choice experiments: Issues and recommendations. Health Econ. 2012, 21, 730–741.

- Goepel, K.D. Implementing the Analytic Hierarchy Process as a Standard Method for Multi-Criteria Decision Making in Corporate Enterprises—A New AHP Excel Template with Multiple Inputs; International Symposium on the Analytic Hierarchy Process: Kuala Lumpur, Malaysia, 2013; pp. 1–10.

- Expert Choice. Expert Choice Comparion®; Expert Choice: Arlington, VA, USA, 2022.

- Forman, E.; Peniwati, K. Aggregating individual judgments and priorities with the analytic hierarchy process. Eur. J. Oper. Res. 1998, 108, 165–169.

- Saaty, T.L. Highlights and critical points in the theory and application of the analytic hierarchy process. Eur. J. Oper. Res. 1994, 74, 426–447.

- Hummel, J.M.; Steuten, L.G.; Groothuis-Oudshoorn, C.; Mulder, N.; IJzerman, M.J. Preferences for colorectal cancer screening techniques and intention to attend: A multi-criteria decision analysis. Appl. Health Econ. Health Policy 2013, 11, 499–507.

- Hovestadt, G.; Eggers, N. Soziale Ungleichheit in der Allgemein Bildenden Schule: Ein Überblick Über den Stand der empirischen Forschung Unter Berücksichtigung Berufsbildender Wege zur Hochschulreife und der Übergänge zur Hochschule; CSEC GmbH, Ed.; Hans-Böckler-Stiftung: Rheine, Germany, 2007; pp. 7–8.

- Clarkson, P.; Hughes, J.; Xie, C.; Larbey, M.; Roe, B.; Giebel, C.M.; Jolley, D.; Challis, D.; HoSt-D (Home Support in Dementia) Programme Management Group. Overview of systematic reviews: Effective home support in dementia care, components and impacts—stage 1, psychosocial interventions for dementia. J. Adv. Nurs. 2017, 73, 2845–2863.

- Murdoch, B.E.; Chenery, H.J.; Wilks, V.; Boyle, R.S. Language disorders in dementia of the Alzheimer type. Brain Lang. 1987, 31, 122–137.

- Reilly, J.; Troche, J.; Grossman, M. Language processing in dementia. In The Handbook of Alzheimer’s Disease and Other Dementias; Budson, A.E., Kowall, N.W., Eds.; John Wiley & Sons, Incorporated: Chichester, UK, 2014; pp. 336–368.

- Joubert, S.; Vallet, G.T.; Montembeault, M.; Boukadi, M.; Wilson, M.A.; Laforce, R., Jr.; Rouleau, I.; Brambati, S.M. Comprehension of concrete and abstract words in semantic variant primary progressive aphasia and Alzheimer’s disease: A behavioral and neuroimaging study. Brain Lang. 2017, 170, 93–102.

- Saaty, R.W. The analytic hierarchy process—What it is and how it is used. Math. Model. 1987, 9, 161–176.

- Saaty, T.L. Axiomatic foundation of the analytic hierarchy process. Manag. Sci. 1986, 32, 841–855.

- Institute for Quality and Efficiency in Healthcare (IQWiG). Analytic Hierarchy Process (AHP)—Pilot Project to Elicit Patient Preferences in the Indication “Depression”; IQWiG: Cologne, Germany, 2013.

- Ozdemir, M.S. Validity and inconsistency in the analytic hierarchy process. Appl. Math. Comput. 2005, 161, 707–720.

- Brod, M.; Stewart, A.L.; Sands, L.; Walton, P. Conceptualization and measurement of quality of life in dementia: The dementia quality of life instrument (DQoL). Gerontologist 1999, 39, 25–35.

- IJzerman, M.J.; van Til, J.A.; Snoek, G.J. Comparison of two multi-criteria decision techniques for eliciting treatment preferences in people with neurological disorders. Patient Patient-Cent. Outcomes Res. 2008, 1, 265–272.

- Dolan, J.G. Shared decision-making–transferring research into practice: The analytic hierarchy process (AHP). Patient Educ. Couns. 2008, 73, 418–425.

- Van Til, J.A.; Renzenbrink, G.J.; Dolan, J.G.; IJzerman, M.J. The use of the analytic hierarchy process to aid decision making in acquired equinovarus deformity. Arch. Phys. Med. Rehabil. 2008, 89, 457–462.

- Expert Choice. Expert Choice Comparion Help Center—Inconsistency Ratio. Available online: https://comparion.knowledgeowl.com/help/inconsistency-ratio (accessed on 18 January 2022).

- Everhart, M.A.; Pearlman, R.A. Stability of patient preferences regarding life-sustaining treatments. Chest 1990, 97, 159–164.

- Van Haitsma, K.; Abbott, K.M.; Arbogast, A.; Bangerter, L.R.; Heid, A.R.; Behrens, L.L.; Madrigal, C. A preference-based model of care: An integrative theoretical model of the role of preferences in person-centered care. Gerontologist 2020, 60, 376–384.

- Kievit, W.; Tummers, M.; van Hoorn, R.; Booth, A.; Mozygemba, K.; Refolo, P.; Sacchini, D.; Pfadenhauer, L.; Gerhardus, A.; Van der Wilt, G.J. Taking patient heterogeneity and preferences into account in health technology assessments. Int. J. Technol. Assess. Health Care 2017, 33, 562–569.

- Kaczynski, A.; Michalowsky, B.; Eichler, T.; Thyrian, J.R.; Wucherer, D.; Zwingmann, I.; Hoffmann, W. Comorbidity in dementia diseases and associated health care resources utilization and cost. J. Alzheimer’s Dis. 2019, 68, 635–646.

- Graneheim, U.H.; Lundman, B. Qualitative content analysis in nursing research: Concepts, procedures and measures to achieve trustworthiness. Nurse Educ. Today 2004, 24, 105–112.

| Characteristic | Category | n (%) |
|---|---|---|
| Age | 60–71 | 2 (18.2) |
| | 71–80 | 4 (36.4) |
| | 81–90 | 3 (27.2) |
| | >90 | 2 (18.2) |
| Gender | Female | 6 (54.5) |
| | Male | 5 (45.5) |
| Family status | Married | 6 (54.5) |
| | Widowed | 4 (36.4) |
| | Divorced or separated | 1 (9.1) |
| Highest educational degree | No degree | 1 (9.1) |
| | 8th/9th grade | 1 (9.1) |
| | 10th grade | 2 (18.2) |
| | Degree from a technical/vocational college | 4 (36.4) |
| | Degree from a university of applied sciences or university | 3 (27.2) |
| Previous occupation [65] | Skilled worker | 2 (18.2) |
| | Employee with limited decision-making powers (e.g., cashier) | 6 (54.5) |
| | Lower grade with high qualification in employment (e.g., doctor, professor, engineer) | 3 (27.2) |
| Monthly net income | EUR 1001–1500 | 1 (9.1) |
| | EUR 1501–2000 | 3 (27.2) |
| | Not known | 3 (27.3) |
| | Prefer not to say | 4 (36.4) |
| Stage of cognitive impairment ᵃ | Early | 9 (81.8) |
| | Moderate | 2 (18.2) |
| Non-pharmacological treatment | | 7 (63.6) |
| | Memory work (e.g., memory exercises, rehabilitation) | 2 (28.6) ᵇ |
| | Occupational therapy | 2 (28.6) ᵇ |
| | Physical training (e.g., physiotherapy, sports groups) | 7 (100.0) ᵇ |
| | Artistic therapy (e.g., music therapy, art therapy, dance therapy, theater therapy) | 1 (14.29) ᵇ |
| | Other (speech therapy) | 1 (14.29) ᵇ |
| Self-rated general health | Good | 5 (45.5) |
| | Satisfactory | 5 (45.5) |
| | Less good | 1 (9.1) |
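
The percentages in the sociodemographic table above can be re-derived from the counts. The short Python check below is our own illustration, not part of the study's analysis, and its variable names are hypothetical. It suggests that the treatment sub-rows (footnote b) use the 7 participants receiving non-pharmacological treatment as denominator rather than all 11: 2/7 gives 28.6% and 1/7 gives 14.3% (printed as 14.29%). Because the treatment counts sum to 13, participants could evidently report more than one treatment.

```python
# Counts copied from the sociodemographic table (11 participants in total).
TOTAL = 11
TREATED = 7  # participants receiving any non-pharmacological treatment


def pct(count: int, denominator: int) -> float:
    """Percentage of `denominator`, rounded to one decimal place."""
    return round(100 * count / denominator, 1)


age = {"60-71": 2, "71-80": 4, "81-90": 3, ">90": 2}
treatments = {
    "Memory work": 2,
    "Occupational therapy": 2,
    "Physical training": 7,
    "Artistic therapy": 1,
    "Other (speech therapy)": 1,
}

# A single-choice characteristic must cover all participants exactly once.
assert sum(age.values()) == TOTAL

age_pct = {group: pct(n, TOTAL) for group, n in age.items()}
# Several treatments per person were possible (counts sum to 13 > 7),
# so these shares are not expected to add up to 100%.
share_of_all = {t: pct(n, TOTAL) for t, n in treatments.items()}
share_of_treated = {t: pct(n, TREATED) for t, n in treatments.items()}

print("Age:", age_pct)
print("Treatments, % of all 11:", share_of_all)
print("Treatments, % of the 7 treated:", share_of_treated)
```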

| Item | PT1 | PT2 | PT3 | PT4 | PT5 | PT6 | PT7 | PT8 | PT9 | PT10 | PT11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Examples | | | | | | | | | | | |
| No social exchange | 1/9 | 1/3 | 1/3 | 1/7 | 1/5 | 1/6 | 1/5 | 1/5 | 1/3 | 1/3 | 1/5 |
| A lot of social exchange | 9 | 3 | 3 | 7 | 5 | 6 | 5 | 5 | 3 | 3 | 5 |
| Social relationships | 9 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| Additional cost | 1/9 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| Criteria | | | | | | | | | | | |
| Social exchange | 9 | 1/5 | 3 | 5 | 5 | 5 | 5 | 9 | 3 | 1 | 6 |
| Physical activities | 1/9 | 5 | 1/3 | 1/5 | 1/5 | 1/5 | 1/5 | 1/9 | 1/3 | 1 | 1/6 |
| Social exchange (2) | 7 | 3 | 7 | 1/6 | 1 | 5 | 5 | 9 | 3 | 1 | 7 |
| Physical activities (2) | 1/7 | 1/3 | 1/7 | 6 | 1 | 1/5 | 1/5 | 1/9 | 1/3 | 1 | 1/7 |
| Social exchange (3) | 9 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| Physical activities (3) | 1/9 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| Sub-criteria | | | | | | | | | | | |
| Indirect contact | 1/9 | 1/3 | 1/5 | 1/6 | 1/5 | 1/3 | 1/5 | 6 | 1/3 | 1 | 7 |
| Direct contact | 9 | 3 | 5 | 6 | 5 | 3 | 5 | 1/6 | 3 | 1 | 1/7 |
| Indirect contact (2) | 1/9 | 1/3 | 1/5 | 1/6 | 1/5 | 1/3 | 1/5 | 7 | 1/3 | 1 | 7 |
| Direct contact (2) | 9 | 3 | 5 | 6 | 5 | 3 | 5 | 1/7 | 3 | 1 | 1/7 |
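
Each comparison in the pairwise-judgment table above occupies two rows whose entries are reciprocal (for example, 9 and 1/9). The Python sketch below is our illustration rather than the study's code, and the comparison labels are ours. It checks this reciprocal property for three of the comparisons and then aggregates the first criteria comparison across PT1 to PT11. With the geometric mean the aggregated judgments stay reciprocal, whereas the arithmetic mean breaks reciprocity, which is the usual argument for aggregating individual judgments geometrically (Forman and Peniwati). The priority a/(1 + a) is the normalized principal eigenvector of a single 2 × 2 comparison only and does not carry over to larger matrices.

```python
from fractions import Fraction
from math import prod

# Judgments copied from the table (PT1..PT11) on Saaty's 1-9 scale.
# Each entry holds the two rows of one pairwise comparison (labels are ours).
PAIRS = {
    "Social exchange vs. physical activities": (
        ["9", "1/5", "3", "5", "5", "5", "5", "9", "3", "1", "6"],
        ["1/9", "5", "1/3", "1/5", "1/5", "1/5", "1/5", "1/9", "1/3", "1", "1/6"],
    ),
    "Social exchange vs. physical activities (2)": (
        ["7", "3", "7", "1/6", "1", "5", "5", "9", "3", "1", "7"],
        ["1/7", "1/3", "1/7", "6", "1", "1/5", "1/5", "1/9", "1/3", "1", "1/7"],
    ),
    "Indirect vs. direct contact": (
        ["1/9", "1/3", "1/5", "1/6", "1/5", "1/3", "1/5", "6", "1/3", "1", "7"],
        ["9", "3", "5", "6", "5", "3", "5", "1/6", "3", "1", "1/7"],
    ),
}


def parse(values):
    """Turn strings such as '1/5' into exact fractions."""
    return [Fraction(v) for v in values]


def is_reciprocal(left, right):
    """Reciprocal axiom: a_ij * a_ji == 1 for every respondent."""
    return all(x * y == 1 for x, y in zip(parse(left), parse(right)))


def geometric_mean(values):
    xs = [float(v) for v in parse(values)]
    return prod(xs) ** (1 / len(xs))


def arithmetic_mean(values):
    xs = parse(values)
    return float(sum(xs) / len(xs))


def priority_of_first(a):
    """First entry of the normalized eigenvector of [[1, a], [1/a, 1]]."""
    return a / (1 + a)


for name, (left, right) in PAIRS.items():
    assert is_reciprocal(left, right), name

left, right = PAIRS["Social exchange vs. physical activities"]
gm_product = geometric_mean(left) * geometric_mean(right)
am_product = arithmetic_mean(left) * arithmetic_mean(right)

print(f"GM(left) * GM(right) = {gm_product:.6f}")  # reciprocity preserved
print(f"AM(left) * AM(right) = {am_product:.3f}")  # reciprocity lost
w = priority_of_first(geometric_mean(left))
print(f"Aggregated priority of social exchange (2 x 2 case): {w:.2f}")
```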

(a)

| PT | Principal eigenvalue | GCI | CR |
|---|---|---|---|
| 1 | 8.826 | 1.46 | 45.1% |
| 2 | 7.265 | 0.7 | 20.2% |
| 3 | 7.795 | 0.96 | 28.6% |
| 4 | 9.271 | 1.67 | 52.2% |
| 5 | 6.599 | 0.35 | 9.6% |
| 6 | 11.382 | 2.63 | 85.9% |
| 7 | 9.574 | 1.77 | 57.0% |
| 8 | 7.631 | 0.88 | 26.0% |
| 9 | 6.819 | 0.46 | 13.1% |
| 10 | 6.504 | 0.29 | 8.0% |
| 11 | 9.814 | 1.85 | 60.8% |

(b)

| Consistency (n = 11) | Value |
|---|---|
| Principal eigenvalue | 6.237 |
| CI | 0.14 |
| CR, geometric mean (GM) | 3.8% |
| CR, arithmetic mean (AM) | 36.9% |
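
Part (a) of the consistency table lists, for each participant, only the principal eigenvalue and the consistency ratio (CR). Under two assumptions of ours, that each comparison matrix is 6 × 6 and that the random index follows Alonso and Lamata's linear approximation, CR = (lambda_max - n) / (1.7699 n - 4.3513), the Python sketch below reproduces every reported CR to one decimal place, as well as the 3.8% reported for the geometric-mean aggregate in part (b). It also applies Saaty's conventional 10% threshold, under which only PT5 and PT10 fall in the acceptable range. The function names are hypothetical.

```python
# Reproduce the CR column from the principal eigenvalues (lambda_max).
# Assumptions (ours): 6 x 6 comparison matrices and the Alonso-Lamata
# linear fit for the random index.
N = 6
CR_THRESHOLD = 0.10  # Saaty's conventional cut-off


def consistency_index(lambda_max: float, n: int = N) -> float:
    """Saaty's consistency index CI = (lambda_max - n) / (n - 1)."""
    return (lambda_max - n) / (n - 1)


def random_index(n: int = N) -> float:
    """Random index implied by lambda_max = n + CR * (1.7699 n - 4.3513)."""
    return (1.7699 * n - 4.3513) / (n - 1)


def consistency_ratio(lambda_max: float, n: int = N) -> float:
    return consistency_index(lambda_max, n) / random_index(n)


EIGENVALUES = {1: 8.826, 2: 7.265, 3: 7.795, 4: 9.271, 5: 6.599, 6: 11.382,
               7: 9.574, 8: 7.631, 9: 6.819, 10: 6.504, 11: 9.814}

for pt, lam in EIGENVALUES.items():
    cr = consistency_ratio(lam)
    verdict = "acceptable" if cr <= CR_THRESHOLD else "above threshold"
    print(f"PT{pt}: CR = {100 * cr:.1f}% ({verdict})")

# Eigenvalue reported in part (b); it matches the GM row.
print(f"Aggregate: CR = {100 * consistency_ratio(6.237):.1f}%")
```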

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mohr, W.; Rädke, A.; Afi, A.; Mühlichen, F.; Platen, M.; Scharf, A.; Michalowsky, B.; Hoffmann, W. Development of a Quantitative Preference Instrument for Person-Centered Dementia Care—Stage 2: Insights from a Formative Qualitative Study to Design and Pretest a Dementia-Friendly Analytic Hierarchy Process Survey. Int. J. Environ. Res. Public Health 2022, 19, 8554. https://doi.org/10.3390/ijerph19148554
