Development and Validation of a Clinical Practicum Assessment Tool for the NAACLS-Accredited Biomedical Science Program

Abstract

1. Introduction

- What is already known on this topic: Tools are available to assess the quality of biomedical sciences practical courses in terms of content, preceptor quality, and competencies; such information is essential for continuous quality improvement.

- What this study adds: This clinical practicum assessment tool is the first tool to be validated for assessing student perspectives on their clinical placements in biomedical sciences.

- How this study might affect research, practice, or policy: The clinical practicum assessment tool supports continuous improvement and accreditation, and could help medical laboratory science (MLS) program directors use high-quality evidence to improve their clinical practicum.

2. Materials and Methods

2.1. CPAT-QU Development and Description

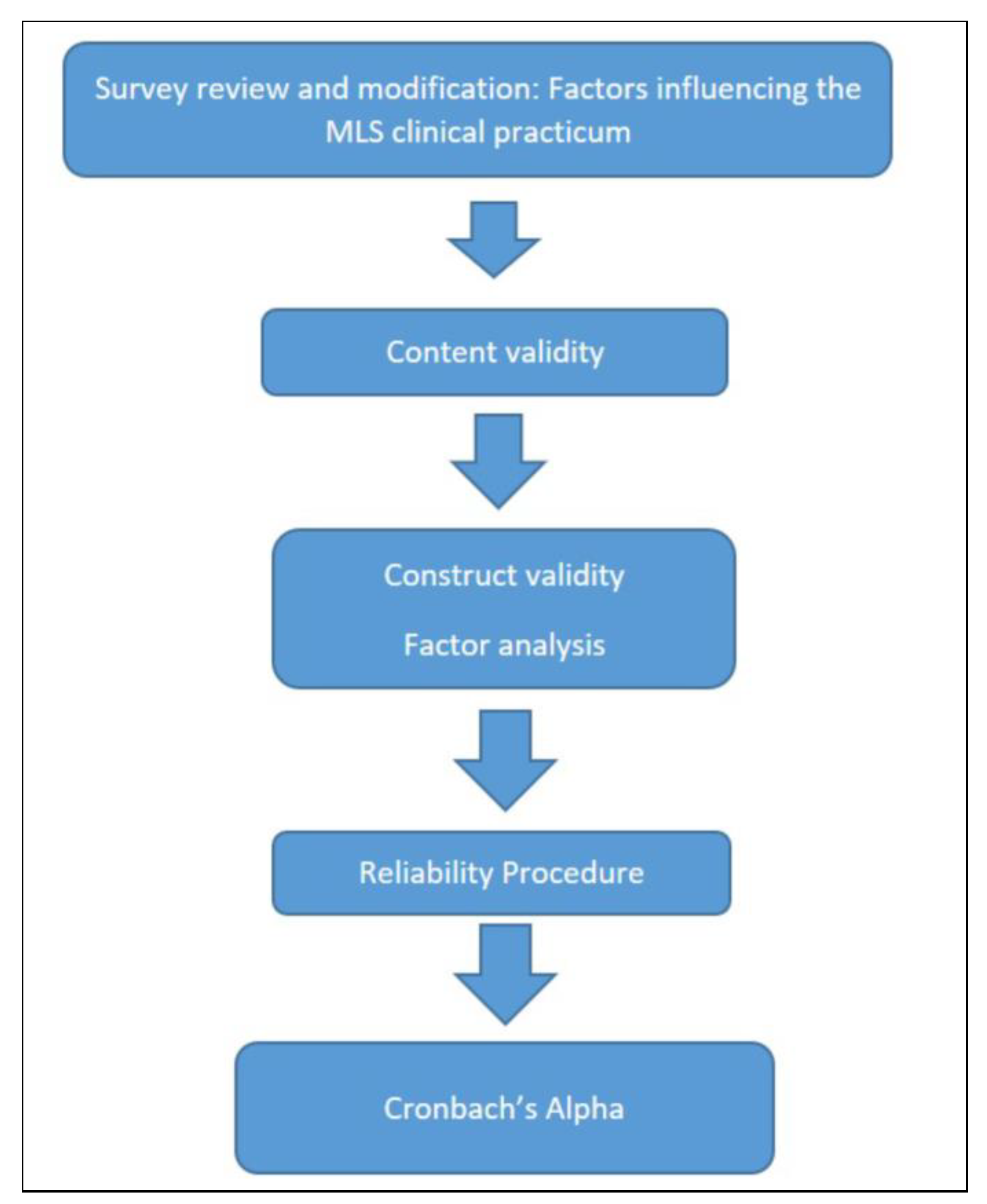

2.2. CPAT-QU Validation

2.2.1. Readability

2.2.2. Content Validity

2.2.3. Construct Validity

2.2.4. Reliability

2.3. Participants

2.4. Statistical Analysis

3. Results

3.1. Readability and Understandability

3.2. Content Validity

3.3. Construct Validity

3.4. Reliability

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Domain | Item * | Experts in Agreement (N) | Relevance CVI |
|---|---|---|---|
| Content of MLS clinical practicum | 1. Organization | 6 | 1.00 |
| | 2. Content of the clinical training rotations | 6 | 1.00 |
| | 3. Evaluation criteria of the clinical training rotations | 6 | 1.00 |
| | 4. Length of the clinical training rotations | 6 | 1.00 |
| Preceptors mastering MLS clinical practicum | 1. Instructor’s attitude | 5 | 0.83 |
| | 2. Command of material, knowledge, and expertise | 6 | 1.00 |
| | 3. Ability to convey knowledge and expertise | 5 | 0.83 |
| | 4. Interest in clinical teaching and training | 5 | 0.83 |
| Skills and competencies of MLS clinical practicum | 1. Recall of basic knowledge and comprehension | 6 | 1.00 |
| | 2. Awareness of organizational structure, lab management, safety, infection prevention control measures, and quality | 6 | 1.00 |
| | 3. Awareness of financial management, budget, lab staffing, HR laws, and regulation of the degree profession | 3 | 0.50 |
| | 4. Application and interpretation of content or lab result | 6 | 1.00 |
| | 5. Critical analysis, decision-making, problem solving | 5 | 0.83 |
| | 6. Ability to retrieve/locate information from a range of sources | 6 | 1.00 |
| | 7. Readiness; an awareness of and ready to analyze samples or observe | 6 | 1.00 |
| | 8. Competence and confidence with performing a task or analyzing samples | 6 | 1.00 |
| | 9. Proficiency and adaptation, ability to alter performance successfully | 6 | 1.00 |
| | 10. Research skills, such as planning and designing experiments | 4 | 0.67 |
| | 11. Using technology in communication skills and information exchange | 5 | 0.83 |
| | 12. Report writing and written communication skills | 6 | 1.00 |
| | 13. Oral presentation and verbal communication skills | 4 | 0.67 |
| | 14. Appreciation of ethical scientific behavior | 5 | 0.83 |
| | 15. Leadership skills | 4 | 0.67 |
| | 16. Team working skills | 6 | 1.00 |
| | 17. Time management and organizational skills | 6 | 1.00 |
| | 18. Ability to have and use own initiative | 6 | 1.00 |
| | 19. Possession of independent learning required for continuing professional development | 5 | 0.83 |
| S-CVI/Average | | | 0.90 |
| Total agreement (items rated relevant by all experts) | | | 16 |
| S-CVI/UA | | | 0.59 |
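The summary rows of the content validity table follow directly from the item-level counts. The sketch below recomputes them in Python, assuming a six-member expert panel (inferred from 5 experts giving 0.83 and 3 giving 0.50; the panel size is not stated in the table) and the usual definitions: the item-level CVI is the proportion of experts rating an item relevant, S-CVI/Average is the mean item-level CVI, and S-CVI/UA is the proportion of items that every expert rated relevant. The names `agreement`, `PANEL_SIZE`, and `content_validity` are illustrative, not taken from the study.

```python
# Assumption: 6 experts, inferred from the CVI values (5/6 = 0.83, 3/6 = 0.50).
PANEL_SIZE = 6

# Experts in agreement per item, in table order (27 items across 3 domains).
agreement = {
    "Content of MLS clinical practicum": [6, 6, 6, 6],
    "Preceptors mastering MLS clinical practicum": [5, 6, 5, 5],
    "Skills and competencies of MLS clinical practicum": [
        6, 6, 3, 6, 5, 6, 6, 6, 6, 4, 5, 6, 4, 5, 4, 6, 6, 6, 5,
    ],
}


def content_validity(counts_by_domain, panel_size):
    """Return (item-level CVIs, S-CVI/Ave, items with universal agreement, S-CVI/UA)."""
    counts = [n for domain in counts_by_domain.values() for n in domain]
    i_cvi = [n / panel_size for n in counts]                 # proportion of experts per item
    s_cvi_ave = sum(i_cvi) / len(i_cvi)                      # mean of item-level CVIs
    universal = sum(1 for n in counts if n == panel_size)    # items every expert rated relevant
    s_cvi_ua = universal / len(counts)                       # share of items with universal agreement
    return i_cvi, s_cvi_ave, universal, s_cvi_ua


i_cvi, s_cvi_ave, universal, s_cvi_ua = content_validity(agreement, PANEL_SIZE)
print(f"S-CVI/Average = {s_cvi_ave:.2f}")     # S-CVI/Average = 0.90
print(f"Total agreement = {universal}")       # Total agreement = 16
print(f"S-CVI/UA = {s_cvi_ua:.2f}")           # S-CVI/UA = 0.59
```

Under these assumptions the sketch reproduces the reported S-CVI/Average of 0.90, the 16 items with total agreement, and the S-CVI/UA of 0.59 (16 of 27 items).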

| Component | Initial Eigenvalue (Total) | Variance (%) | Cumulative Variance (%) | Rotation Sums of Squared Loadings (Total) |
|---|---|---|---|---|
| 1 | 3.448 | 43.102 | 43.102 | 2.852 |
| 2 | 1.602 | 20.029 | 63.131 | 2.198 |
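For principal components extracted from standardized variables, each eigenvalue divided by the number of variables gives that component's share of total variance. A minimal sketch, assuming the eight variables in the component matrix were the analysed set (so total variance is 8); `variance_explained` is a hypothetical helper. It also checks that the rotated sums of squared loadings keep the same total as the initial eigenvalues, which holds for an orthogonal rotation; the table does not name the rotation method, so that part is an assumption.

```python
from itertools import accumulate

N_VARIABLES = 8                    # assumption: the eight variables in the component matrix
eigenvalues = [3.448, 1.602]       # initial eigenvalues, components 1 and 2
rotated_totals = [2.852, 2.198]    # rotation sums of squared loadings


def variance_explained(eigs, n_vars):
    """Return per-component and cumulative percentage of total variance."""
    percent = [100 * e / n_vars for e in eigs]
    cumulative = list(accumulate(percent))
    return percent, cumulative


percent, cumulative = variance_explained(eigenvalues, N_VARIABLES)
for k, (p, c) in enumerate(zip(percent, cumulative), start=1):
    print(f"Component {k}: {p:.2f}% of variance, {c:.2f}% cumulative")

# An orthogonal rotation redistributes variance between the retained components
# but leaves the total they explain unchanged.
print(f"Initial total: {sum(eigenvalues):.3f}, rotated total: {sum(rotated_totals):.3f}")
```

The computed percentages agree with the reported 43.102% and 20.029% to within the rounding of the three-decimal eigenvalues. Both components have eigenvalues above 1, the common Kaiser retention criterion, although the table itself does not state which criterion was applied.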

| Variable | Component 1 | Component 2 |
|---|---|---|
| 1. Content of the clinical practicum | 0.609 | −0.076 |
| 2. Instructors mastering the clinical practicum | 0.565 | −0.252 |
| 3. Cognitive knowledge and skills developed during the clinical practicum | 0.773 | −0.416 |
| 4. Cognitive knowledge and skills used in the degree profession | 0.566 | 0.589 |
| 5. Psychomotor skills developed during the clinical practicum | 0.826 | −0.309 |
| 6. Psychomotor skills used in the degree profession | 0.623 | 0.649 |
| 7. Affective skills developed during the clinical practicum | 0.671 | −0.429 |
| 8. Affective skills used in the degree profession | 0.566 | 0.558 |
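The loadings can be cross-checked against the eigenvalue table. In a principal component analysis, the column sum of squared loadings equals that component's eigenvalue, and the row sum across the retained components is the variable's communality (h²). A minimal sketch in Python; because the column sums reproduce the initial eigenvalues rather than the rotated totals, these appear to be unrotated loadings. The names `loadings`, `eigen_check`, and `communality` are illustrative.

```python
# Loadings on components 1 and 2, copied from the component matrix.
loadings = {
    "Content of the clinical practicum": (0.609, -0.076),
    "Instructors mastering the clinical practicum": (0.565, -0.252),
    "Cognitive knowledge and skills developed during the clinical practicum": (0.773, -0.416),
    "Cognitive knowledge and skills used in the degree profession": (0.566, 0.589),
    "Psychomotor skills developed during the clinical practicum": (0.826, -0.309),
    "Psychomotor skills used in the degree profession": (0.623, 0.649),
    "Affective skills developed during the clinical practicum": (0.671, -0.429),
    "Affective skills used in the degree profession": (0.566, 0.558),
}

# Column sums of squared loadings: should reproduce the initial eigenvalues.
eigen_check = [sum(row[k] ** 2 for row in loadings.values()) for k in range(2)]

# Row sums of squared loadings: communality, the variance each variable shares
# with the two retained components.
communality = {name: sum(x ** 2 for x in row) for name, row in loadings.items()}

print("Eigenvalues from loadings:", [round(e, 3) for e in eigen_check])
for name, h2 in communality.items():
    print(f"{name}: h2 = {h2:.2f}")
```

The column sums come to about 3.449 and 1.601, matching the reported 3.448 and 1.602 to within the rounding of the three-decimal loadings.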

| Variable | Cronbach’s Alpha |
|---|---|
| Content of the clinical practicum | 0.80 |
| Instructors mastering the clinical practicum | 0.76 |
| Cognitive knowledge and skills developed during the clinical practicum | 0.89 |
| Cognitive knowledge and skills used in the degree profession | 0.76 |
| Psychomotor skills developed during the clinical practicum | 0.81 |
| Psychomotor skills used in the degree profession | 0.85 |
| Affective skills developed during the clinical practicum | 0.86 |
| Affective skills used in the degree profession | 0.92 |
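The reliability coefficients follow the standard Cronbach's alpha formula: alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items in a subscale. The sketch below applies it to HYPOTHETICAL toy responses, since the study's item-level data are not reproduced here; `cronbach_alpha` and `toy` are illustrative names, not part of the CPAT-QU.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*scores))                          # transpose to per-item columns
    item_var_sum = sum(variance(col) for col in items)  # sum of item variances
    total_var = variance([sum(row) for row in scores])  # variance of respondents' totals
    # Sample variances (n - 1 denominator) are used throughout; the denominator
    # cancels in the ratio, so population variances would give the same alpha.
    return k / (k - 1) * (1 - item_var_sum / total_var)


# HYPOTHETICAL 5-point Likert responses: 6 respondents x 4 items.
toy = [
    [4, 5, 4, 5],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 2],
    [4, 4, 3, 4],
    [3, 2, 3, 3],
]
print(f"alpha = {cronbach_alpha(toy):.2f}")
```

On this toy data the function returns about 0.92. For comparison, every subscale in the table exceeds 0.70, a threshold commonly cited as acceptable internal consistency.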


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abunada, T.; Abdallah, A.M.; Singh, R.; Abu-Madi, M. Development and Validation of a Clinical Practicum Assessment Tool for the NAACLS-Accredited Biomedical Science Program. Int. J. Environ. Res. Public Health 2022, 19, 6651. https://doi.org/10.3390/ijerph19116651
