Predicting Future Geographic Hotspots of Potentially Preventable Hospitalisations Using All Subset Model Selection and Repeated K-Fold Cross-Validation

Abstract

1. Introduction

2. Materials and Methods

2.1. Defining Hotspots Based on Age-Sex Standardized Rates

2.2. Future Prediction of Hotspots

2.3. Cross-Validation for Longitudinal Data

2.4. A New Formulation of K-Fold Cross-Validation for Longitudinal Data

1. Leaving out data from fold k, fit the model in Equation (9) to the training dataset comprising the remaining K − 1 folds, and select a classification threshold;

2. Apply the fitted model and the selected threshold to the test dataset, which comprises data from the kth fold shifted forwards in time by the prediction horizon (in years). Compare the predictions with the observed values to obtain a value for the performance metric of interest.
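The two steps above can be sketched in code. What follows is a minimal Python/NumPy sketch under stated assumptions, not the authors' implementation. Folds are assumed to be formed by randomly partitioning the area-year records. The forward shift is a hypothetical integer parameter `horizon` (in years). The model of Equation (9), the threshold rule and the performance metric are not reproduced; they are passed in as caller-supplied functions (`fit`, `select_threshold`, `metric`, all hypothetical names).

```python
import numpy as np


def longitudinal_kfold_splits(area, year, n_folds, horizon, rng):
    """Yield (k, train_idx, test_idx) for a forward-shifted K-fold split.

    Assumption: folds randomly partition the area-year records, and the test
    set for fold k holds the records of the same areas `horizon` years later
    than the records placed in fold k.
    """
    area_l = np.asarray(area).tolist()
    year_l = np.asarray(year).tolist()
    n = len(area_l)
    # Balanced random fold labels 0..n_folds-1.
    folds = rng.permutation(n) % n_folds
    # Map each (area, year) pair to its row index.
    row_of = {(a, y): i for i, (a, y) in enumerate(zip(area_l, year_l))}
    for k in range(n_folds):
        train_idx = np.flatnonzero(folds != k)
        # Shift every fold-k record forwards in time; drop shifted records
        # that fall outside the observed years.
        shifted = (row_of.get((area_l[i], year_l[i] + horizon))
                   for i in np.flatnonzero(folds == k))
        test_idx = np.array([j for j in shifted if j is not None], dtype=int)
        yield k, train_idx, test_idx


def cross_validate(X, y, area, year, n_folds, horizon,
                   fit, select_threshold, metric, seed=0):
    """Return one performance value per fold (one repeat of K-fold CV)."""
    rng = np.random.default_rng(seed)
    scores = []
    for _, train_idx, test_idx in longitudinal_kfold_splits(
            area, year, n_folds, horizon, rng):
        if test_idx.size == 0:
            continue  # nothing to evaluate for this fold
        # Step 1: fit on the K - 1 training folds and choose a threshold there.
        score_fn = fit(X[train_idx], y[train_idx])
        threshold = select_threshold(score_fn(X[train_idx]), y[train_idx])
        # Step 2: apply the fitted model and threshold to the shifted fold.
        y_hat = score_fn(X[test_idx]) >= threshold
        scores.append(metric(y[test_idx], y_hat))
    return np.array(scores)
```

Calling `cross_validate` with several different `seed` values and pooling the per-fold scores gives a repeated K-fold estimate in the spirit of Section 2.5. How the paper aggregates the repeats is not reproduced here.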

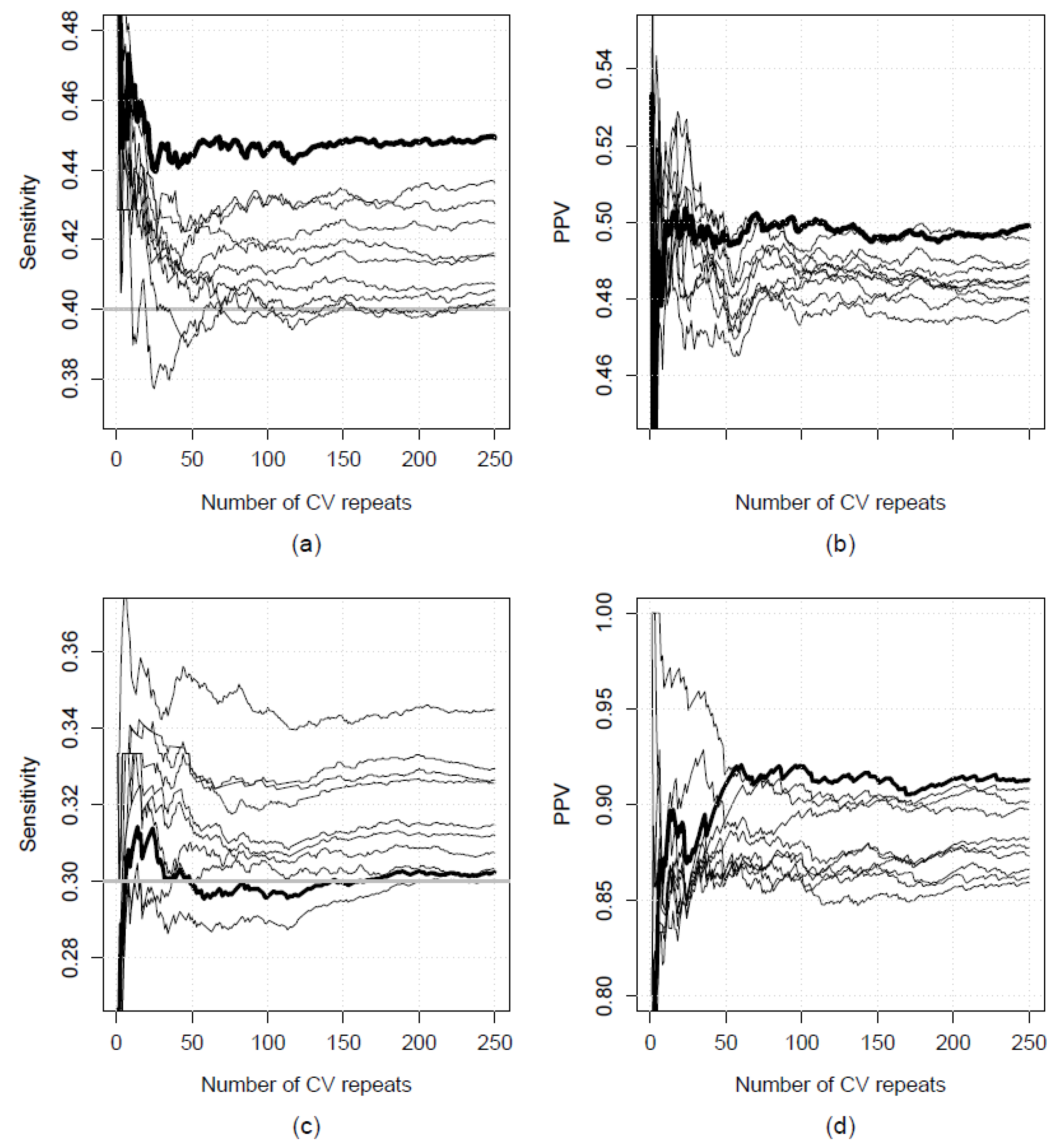

2.5. Repeated K-Fold Cross-Validation

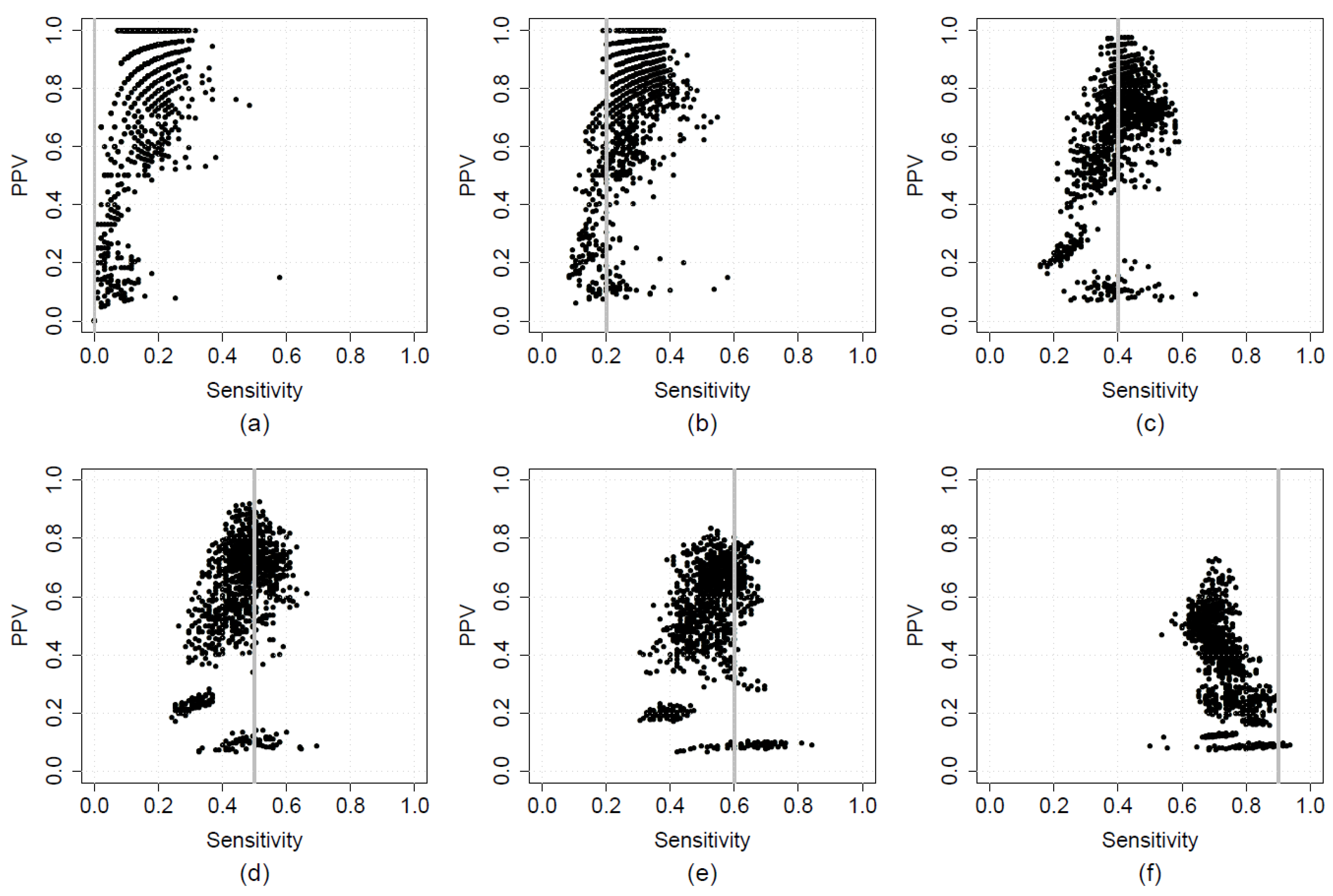

2.6. Optimising Sensitivity and PPV in a Two-Step Calibration-Implementation Approach

2.7. Real-World Applications: Data and Methods

- the percentage of individuals identifying as Aboriginal and/or Torres Strait Islander (hereafter “Aboriginal”);

- the percentage of individuals aged 75 years or older (along with a quadratic term);

- the percentage of male individuals (centred around the mean within each financial year);

- rurality (metropolitan or regional);

- accessibility to emergency department (ED) and general practice (GP; in the US read “family practice”); and

- four Socio-Economic Indexes for Areas (SEIFAs): (i) the Index of Relative Socio-Economic Disadvantage (IRSD), (ii) the Index of Relative Socio-Economic Advantage and Disadvantage (IRSAD), (iii) the Index of Education and Occupation (IEO), and (iv) the Index of Economic Resources (IER) [58].
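Two of the covariates listed above involve simple transformations: the percentage of male individuals is centred within each financial year, and the percentage aged 75 years or older enters together with a quadratic term. The sketch below shows one way to build these columns with NumPy. The helper name `build_demographic_terms` and its column order are hypothetical. Squaring the uncentred percentage is also an assumption, because the source does not say how the quadratic term was constructed.

```python
import numpy as np


def build_demographic_terms(pct_male, pct_aged_75_plus, financial_year):
    """Return columns [male (centred within year), aged 75+, aged 75+ squared].

    Hypothetical helper: column order and names are illustrative only.
    """
    pct_male = np.asarray(pct_male, dtype=float)
    pct_aged_75_plus = np.asarray(pct_aged_75_plus, dtype=float)
    financial_year = np.asarray(financial_year)

    male_centred = np.empty_like(pct_male)
    for fy in np.unique(financial_year):
        in_year = financial_year == fy
        # Subtract the mean percentage of males for that financial year only.
        male_centred[in_year] = pct_male[in_year] - pct_male[in_year].mean()

    return np.column_stack([male_centred,
                            pct_aged_75_plus,
                            pct_aged_75_plus ** 2])  # quadratic term
```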

3. Results

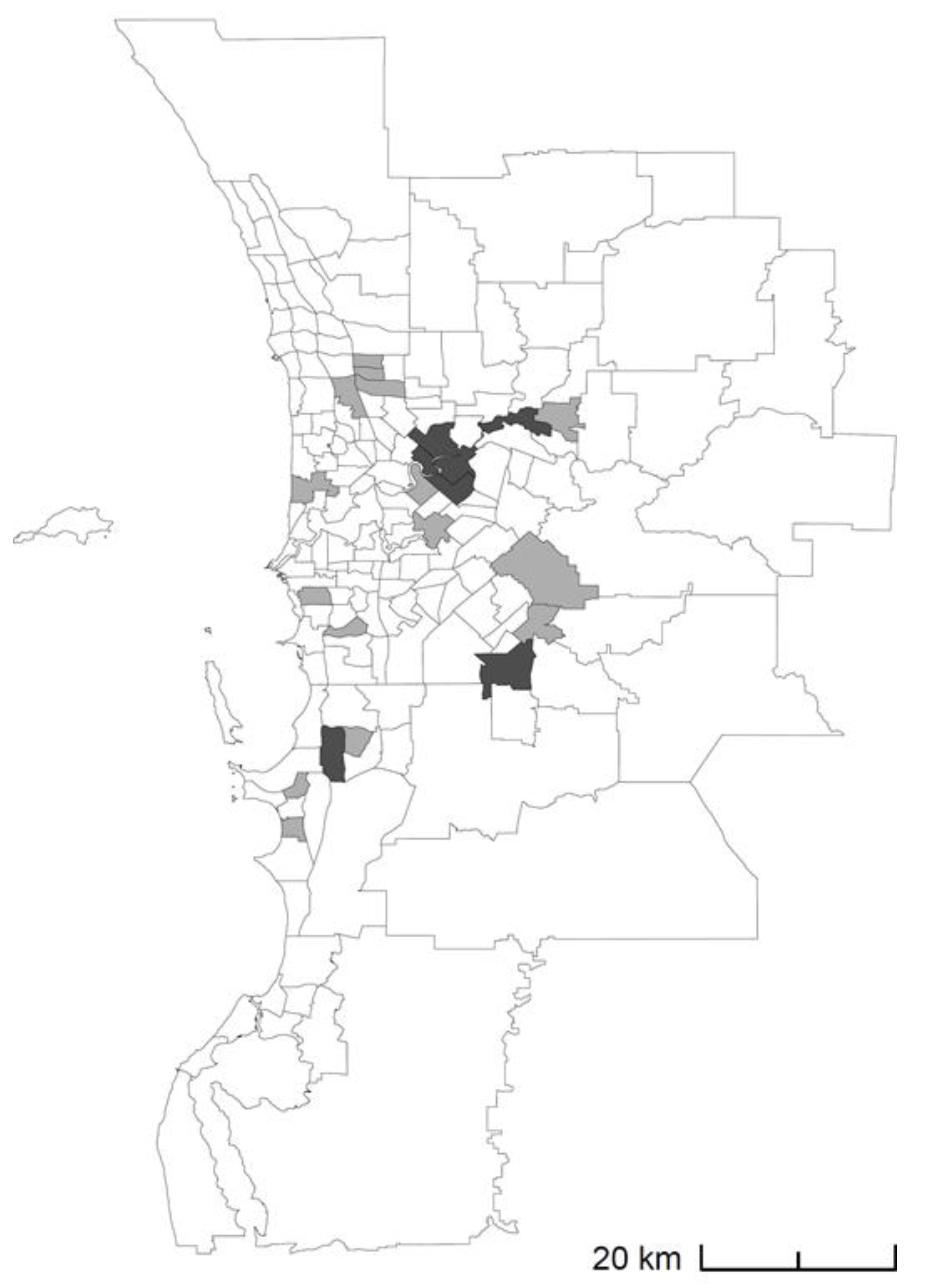

3.1. Results from Real-World Applications

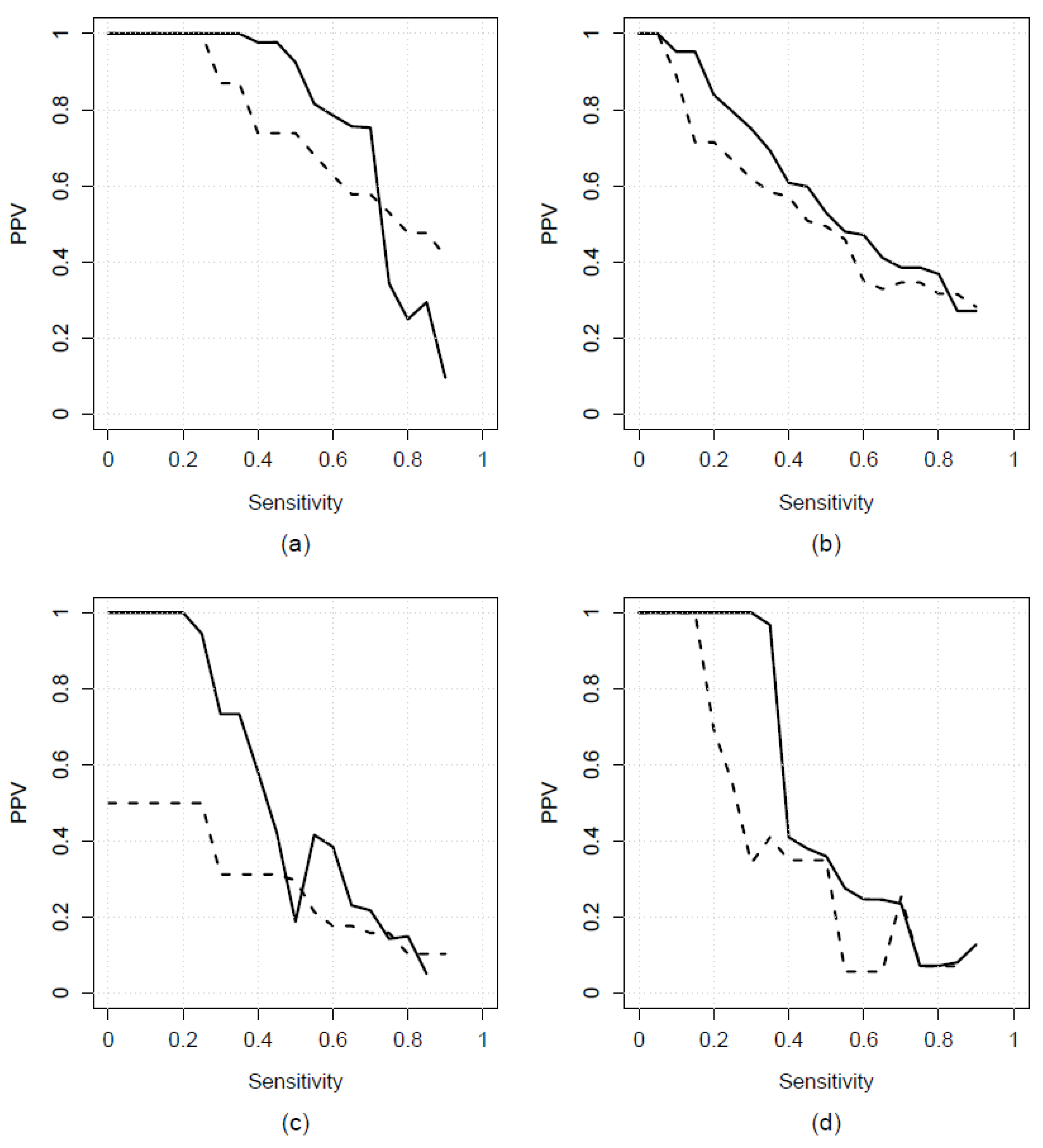

3.2. Comparison to Existing Methods

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Ansari, Z. The concept and usefulness of ambulatory care sensitive conditions as indicators of quality and access to primary health care. Aust. J. Prim. Health 2007, 13, 91–110.

2. Rosano, A.; Loha, C.A.; Falvo, R.; Van der Zee, J.; Ricciardi, W.; Guasticchi, G.; De Belvis, A.G. The relationship between avoidable hospitalization and accessibility to primary care: A systematic review. Eur. J. Public Health 2013, 23, 356–360.

3. Council of Australian Governments (COAG). National Healthcare Agreement: PI 18-Selected Potentially Preventable Hospitalisations. 2021. Available online: https://meteor.aihw.gov.au/content/index.phtml/itemId/725793 (accessed on 1 August 2021).

4. Mazumdar, S.; Chong, S.; Arnold, L.; Jalaludin, B. Spatial clusters of chronic preventable hospitalizations (ambulatory care sensitive conditions) and access to primary care. J. Public Health 2020, 42, e134–e141.

5. Gibson, O.R.; Segal, L.; McDermott, R.A. A systematic review of evidence on the association between hospitalisation for chronic disease related ambulatory care sensitive conditions and primary health care resourcing. BMC Health Serv. Res. 2013, 13, 336.

6. Longman, J.M.; Rix, E.; Johnston, J.J.; Passey, M.E. Ambulatory care sensitive chronic conditions: What can we learn from patients about the role of primary health care in preventing admissions? Aust. J. Prim. Health 2018, 24, 304–310.

7. Duckett, S.; Griffiths, K. Perils of Place: Identifying Hotspots of Health Inequalities; Grattan Institute: Melbourne, Australia, 2016; Available online: https://grattan.edu.au/wp-content/uploads/2016/07/874-Perils-of-Place.pdf (accessed on 1 August 2021).

8. Kim, A.M.; Park, J.H.; Yoon, T.H.; Kim, Y. Hospitalizations for ambulatory care sensitive conditions as an indicator of access to primary care and excess of bed supply. BMC Health Serv. Res. 2019, 19, 259.

9. Muenchberger, H.; Muenchberger, E.K. Determinants of Avoidable Hospitalisation in Chronic Disease: Development of a Predictor Matrix; Griffith University: Meadowbrook, Australia, 2008.

10. Orueta, J.F.; García-Alvarez, A.; Grandes, G.; Nuño-Solinís, R. Variability in potentially preventable hospitalisations: An observational study of clinical practice patterns of general practitioners and care outcomes in the Basque Country (Spain). BMJ Open 2015, 5, e007360.

11. Gao, L.J.; Moran, L.E.; Li, Y.-F.; Almenoff, P.L. Predicting Potentially Avoidable Hospitalizations. Med. Care 2014, 52, 164–171.

12. Louis, D.Z.; Robeson, M.; McAna, J.; Maio, V.; Keith, S.W.; Liu, M.; Gonnella, J.S.; Grilli, R. Predicting risk of hospitalisation or death: A retrospective population-based analysis. BMJ Open 2014, 4, e005223.

13. Oliver-Baxter, J.; Bywood, P.; Erny-Albrecht, K. Predictive Risk Models to Identify People with Chronic Conditions at Risk of Hospitalisation; PHCRIS Policy Issue Review; Primary Health Care Research & Information Service: Adelaide, Australia, 2015; Available online: https://dspace.flinders.edu.au/xmlui/bitstream/handle/2328/36226/PIR_Predictive%20risk%20model.pdf?sequence=1&isAllowed=y (accessed on 1 August 2021).

14. Ansari, Z.; Haider, S.I.; Ansari, H.; de Gooyer, T.; Sindall, C. Patient characteristics associated with hospitalisations for ambulatory care sensitive conditions in Victoria, Australia. BMC Health Serv. Res. 2012, 12, 475.

15. Khanna, S.; Rolls, D.A.; Boyle, J.; Xie, Y.; Jayasena, R.; Hibbert, M.; Georgeff, M. A risk stratification tool for hospitalisation in Australia using primary care data. Sci. Rep. 2019, 9, 5011.

16. Maust, D.T.; Kim, H.M.; Chiang, C.; Langa, K.M.; Kales, H.C. Predicting Risk of Potentially Preventable Hospitalization in Older Adults with Dementia. J. Am. Geriatr. Soc. 2019, 67, 2077–2084.

17. Vallée, J. Challenges in targeting areas for public action. Target areas at the right place and at the right time. J. Epidemiol. Community Health 2017, 71, 945–946.

18. Hardt, N.S.; Muhamed, S.; Das, R.; Estrella, R.; Roth, J. Neighborhood-level hot spot maps to inform delivery of primary care and allocation of social resources. Perm. J. 2013, 17, 4–9.

19. Falster, M.O.; Jorm, L.R.; Douglas, K.A.; Blyth, F.M.; Elliott, R.F.; Leyland, A.H. Sociodemographic and Health Characteristics, Rather Than Primary Care Supply, are Major Drivers of Geographic Variation in Preventable Hospitalizations in Australia. Med. Care 2015, 53, 436–445.

20. Dimitrovová, K.; Costa, C.; Santana, P.; Perelman, J. Evolution and financial cost of socioeconomic inequalities in ambulatory care sensitive conditions: An ecological study for Portugal, 2000–2014. Int. J. Equity Health 2017, 16, 145.

21. Gavidia, T.; Varhol, R.; Koh, C.; Xiao, A.; Mai, Q.; Liu, Y.; Turdukulov, U.; Parsons, S.; Veenendaal, B.; Somerford, P. Geographic Variation in Primary Healthcare Service Utilisation and Potentially Preventable Hospitalisations in Western Australia; Department of Health WA, Curtin University, WA Primary Health Alliance, FrontierSI: Perth, Australia, 2019. Available online: https://ww2.health.wa.gov.au/~/media/Files/Corporate/Reports-and-publications/Geographic-variation-in-PHC/Geographic-variation-in-PHC-service-utilisation-and-PPHs.pdf (accessed on 1 August 2021).

22. Gygli, N.; Zúñiga, F.; Simon, M. Regional variation of potentially avoidable hospitalisations in Switzerland: An observational study. BMC Health Serv. Res. 2021, 21, 849.

23. Wilk, P.; Ali, S.; Anderson, K.K.; Clark, A.F.; Cooke, M.; Frisbee, S.J.; Gilliland, J.; Haan, M.; Harris, S.; Kiarasi, S.; et al. Geographic variation in preventable hospitalisations across Canada: A cross-sectional study. BMJ Open 2020, 10, e037195.

24. Busby, J.; Purdy, S.; Hollingworth, W. A systematic review of the magnitude and cause of geographic variation in unplanned hospital admission rates and length of stay for ambulatory care sensitive conditions. BMC Health Serv. Res. 2015, 15, 324.

25. Busby, J.; Purdy, S.; Hollingworth, W. Opportunities for primary care to reduce hospital admissions: A cross-sectional study of geographical variation. Br. J. Gen. Pract. 2016, 67, e20–e28.

26. Chang, B.A.; Pearson, W.S.; Owusu-Edusei, K., Jr. Correlates of county-level nonviral sexually transmitted infection hot spots in the US: Application of hot spot analysis and spatial logistic regression. Ann. Epidemiol. 2017, 27, 231–237.

27. Mercier, G.; Georgescu, V.; Bousquet, J. Geographic Variation in Potentially Avoidable Hospitalizations In France. Health Aff. 2015, 34, 836–843.

28. O’Cathain, A.; Knowles, E.; Maheswaran, R.; Pearson, T.; Turner, J.; Hirst, E.; Goodacre, S.; Nicholl, J. A system-wide approach to explaining variation in potentially avoidable emergency admissions: National ecological study. BMJ Qual. Saf. 2013, 23, 47–55.

29. Torio, C.M.; Andrews, R.M. Geographic Variation in Potentially Preventable Hospitalizations for Acute and Chronic Conditions, 2005–2011; HCUP Statistical Brief #178; Agency for Healthcare Research and Quality: Rockville, MD, USA, 2014.

30. Fuller, T.L.; Gilbert, M.; Martin, V.; Cappelle, J.; Hosseini, P.; Njabo, K.Y.; Aziz, S.A.; Xiao, X.; Daszak, P.; Smith, T.B. Predicting Hotspots for Influenza Virus Reassortment. Emerg. Infect. Dis. 2013, 19, 581–588.

31. Falster, M.; Jorm, L. A Guide to the Potentially Preventable Hospitalisations Indicator in Australia; Centre for Big Data Research in Health, University of New South Wales in consultation with Australian Commission on Safety and Quality in Health Care and Australian Institute of Health and Welfare: Sydney, Australia, 2017. Available online: https://www.safetyandquality.gov.au/sites/default/files/migrated/A-guide-to-the-potentially-preventable-hospitalisations-indicator-in-Australia.pdf (accessed on 1 August 2021).

32. Longman, J.M.; Passey, M.E.; Ewald, D.P.; Rix, E.F.; Morgan, G. Admissions for chronic ambulatory care sensitive conditions—A useful measure of potentially preventable admission? BMC Health Serv. Res. 2015, 15, 472.

33. Public Health Information Development Unit (PHIDU). Social Health Atlas of Australia: Population Health Areas (online). 2018. Available online: https://phidu.torrens.edu.au (accessed on 1 August 2021).

34. Australian Atlas of Healthcare Variation. Australian Commission on Safety and Quality in Health Care. 2018. Available online: http://www.safetyandquality.gov.au/atlas/ (accessed on 1 August 2021).

35. Dartmouth Institute. Dartmouth Atlas of Health Care. 1996. Available online: www.dartmouthatlas.org (accessed on 1 August 2021).

36. NHS Atlas of Variation in Healthcare. National Health Service. 2010. Available online: https://fingertips.phe.org.uk/profile/atlas-of-variation (accessed on 1 August 2021).

37. Crighton, E.J.; Ragetlie, R.; Luo, J.; To, T.; Gershon, A. A spatial analysis of COPD prevalence, incidence, mortality and health service use in Ontario. Health Rep. 2015, 26, 10–18.

38. Ibáñez-Beroiz, B.; Librero, J.; Bernal-Delgado, E.; García-Armesto, S.; Villanueva-Ferragud, S.; Peiró, S. Joint spatial modeling to identify shared patterns among chronic related potentially preventable hospitalizations. BMC Med. Res. Methodol. 2014, 14, 74.

39. Department of Health [Western Australia]. Lessons of Location: Potentially Preventable Hospitalisation—Hotspots in Western Australia 2017. Available online: https://ww2.health.wa.gov.au/~/media/Files/Corporate/Reports-and-publications/Lessons-of-Location/Lessons-of-Location-2017.pdf (accessed on 1 August 2021).

40. Manderbacka, K.; Arffman, M.; Satokangas, M.; Keskimäki, I. Regional variation of avoidable hospitalisations in a universal health care system: A register-based cohort study from Finland 1996–2013. BMJ Open 2019, 9, e029592.

41. Health Performance Council [South Australia]. Hotspots of Potentially Preventable Hospital Admissions—Pinpointing Potential Health Inequalities by Analysis of South Australian Public Hospital Admitted Patient Care Data. 2019. Available online: https://apo.org.au/sites/default/files/resource-files/2019-11/apo-nid269836.pdf (accessed on 1 August 2021).

42. Geographic Terms and Concepts—Block Groups. United States Census Bureau. 2012. Available online: https://www.census.gov/geo/reference/gtc/gtc_bg.html?cssp=SERP (accessed on 1 August 2021).

43. Middle Layer Super Output Areas. Full Extent Boundaries in England and Wales. Office for National Statistics. 2011. Available online: http://geoportal.statistics.gov.uk/datasets?q=MSOA_Boundaries_2011&sort=name (accessed on 1 August 2021).

44. Australian Bureau of Statistics. Australian Statistical Geography Standard (ASGS): Volume 1-Main Structure and Greater Capital City Statistical Areas. 2011. Available online: https://www.ausstats.abs.gov.au/ausstats/subscriber.nsf/0/D3DC26F35A8AF579CA257801000DCD7D/$File/1270055001_july%202011.pdf (accessed on 1 August 2021).

45. Eayres, D. Commonly Used Public Health Statistics and Their Confidence Intervals; National Centre for Health Outcomes Development, 2008. Available online: https://fingertips.phe.org.uk/documents/APHO%20Tech%20Briefing%203%20Common%20PH%20Stats%20and%20CIs.pdf (accessed on 1 August 2021).

46. Miller, A. Finding subsets which fit well. In Subset Selection in Regression; Chapman and Hall/CRC: New York, NY, USA, 2002.

47. Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; Morgan Kaufmann: San Mateo, CA, USA, 1995; Volume 2, pp. 1137–1143.

48. Kim, J.-H. Estimating classification error rate: Repeated cross-validation, repeated hold-out and bootstrap. Comput. Stat. Data Anal. 2009, 53, 3735–3745.

49. Kuhn, M.; Johnson, K. Applied Predictive Modeling; Springer: New York, NY, USA, 2018.

50. Bergmeir, C.; Benítez, J.M. On the use of cross-validation for time series predictor evaluation. Inf. Sci. 2012, 191, 192–213.

51. Tashman, L.J. Out-of-sample tests of forecasting accuracy: An analysis and review. Int. J. Forecast. 2000, 16, 437–450.

52. Ounpraseuth, S.; Lensing, S.Y.; Spencer, H.J.; Kodell, R.L. Estimating misclassification error: A closer look at cross-validation based methods. BMC Res. Notes 2012, 5, 656.

53. Molinaro, A.M.; Simon, R.; Pfeiffer, R.M. Prediction error estimation: A comparison of resampling methods. Bioinformatics 2005, 21, 3301–3307.

54. Holman, C.D.J.; Bass, A.J.; Rouse, I.L.; Hobbs, M.S. Population-based linkage of health records in Western Australia: Development of a health services research linked database. Aust. N. Z. J. Public Health 1999, 23, 453–459.

55. National Centre for Classification in Health (NCCH). The International Statistical Classification of Diseases and Related Health Problems, Tenth Revision, Australian Modification (ICD-10-AM), 7th ed.; National Centre for Classification in Health: Lidcombe, NSW, Australia, 2010.

56. Scheepers, J. High Risk Foot Project. Improving High Risk Foot Management in the NMHS: NMHS Public Health & Ambulatory Care; Department of Health, Western Australia: East Perth, Australia, 2014.

57. Australian Institute of Health and Welfare. Principles on the Use of Direct Age-Standardisation in Administrative Data Collections: For Measuring the Gap between Indigenous and Non-Indigenous Australians; Australian Institute of Health and Welfare (AIHW): Canberra, Australia, 2011.

58. Australian Bureau of Statistics. Socio-Economic Indexes for Areas (SEIFA), 2016 ed. SEIFA Provides Measures of Socio-Economic Conditions by Geographic Area. 2016. Available online: https://www.abs.gov.au/ausstats/abs@.nsf/mf/2033.0.55.001 (accessed on 1 August 2021).

59. Khan, A.A. An integrated approach to measuring potential spatial access to health care services. Socio-Econ. Plan. Sci. 1992, 26, 275–287.

60. Maechler, M.; Stahel, W.; Ruckstuhl, A.; Keller, C.; Halvorsen, K.; Hauser, A.; Buser, C.; Gygax, L.; Venables, B.; Plate, T.; et al. sfsmisc: Utilities from “Seminar fuer Statistik” ETH Zurich. 2016. Available online: https://rdrr.io/cran/sfsmisc/ (accessed on 1 August 2021).

61. Firth, D. Bias Reduction of Maximum Likelihood Estimates. Biometrika 1993, 80, 27–38.

62. Heinze, G.; Schemper, M. A solution to the problem of separation in logistic regression. Stat. Med. 2002, 21, 2409–2419.

63. van Smeden, M.; de Groot, J.A.H.; Moons, K.; Collins, G.S.; Altman, D.G.; Eijkemans, M.J.; Reitsma, J.B. No rationale for 1 variable per 10 events criterion for binary logistic regression analysis. BMC Med. Res. Methodol. 2016, 16, 163.

64. Arlot, S.; Celisse, A. A survey of cross-validation procedures for model selection. Stat. Surv. 2010, 4, 40–79.

65. Breiman, L. Statistical Modeling: The Two Cultures (with comments and a rejoinder by the author). Stat. Sci. 2001, 16, 199–231.

66. Shmueli, G. To Explain or to Predict? Stat. Sci. 2010, 25, 289–310.

67. Hughes, J.S. Should the Positive Predictive Value be Used to Validate Complications Measures? Med. Care 2016, 55, 87.

68. Collins, G.S.; De Groot, J.A.; Dutton, S.; Omar, O.; Shanyinde, M.; Tajar, A.; Voysey, M.; Wharton, R.; Yu, L.-M.; Moons, K.G.; et al. External validation of multivariable prediction models: A systematic review of methodological conduct and reporting. BMC Med. Res. Methodol. 2014, 14, 40.

69. Steyerberg, E.W.; Vickers, A.; Cook, N.R.; Gerds, T.A.; Gonen, M.; Obuchowski, N.; Pencina, M.J.; Kattan, M.W. Assessing the Performance of Prediction Models. Epidemiology 2010, 21, 128–138.

70. Braga-Neto, U.M.; Dougherty, E.R. Is cross-validation valid for small-sample microarray classification? Bioinformatics 2004, 20, 374–380.

71. Forman, G.; Scholz, M. Apples-to-apples in cross-validation studies. ACM SIGKDD Explor. Newsl. 2010, 12, 49–57.

72. Ashton, C.M.; Pietz, K. Setting a New Standard for Studies of Geographic Variation in Hospital Utilization Rates. Med. Care 2005, 43, 1–3. Available online: https://www.jstor.org/stable/3768336 (accessed on 1 August 2021).

73. Schwartz, M.; Peköz, E.A.; Ash, A.S.; Posner, M.A.; Restuccia, J.D.; Iezzoni, L.I. Do variations in disease prevalence limit the usefulness of population-based hospitalization rates for studying variations in hospital admissions? Med. Care 2005, 43, 4–11. Available online: http://www.jstor.org/stable/3768337 (accessed on 1 August 2021).

74. Manley, D. Scale, Aggregation, and the Modifiable Areal Unit Problem. In The Handbook of Regional Science; Fischer, M.M., Nijkamp, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; Chapter 59; pp. 1157–1171.

75. Tuson, M.; Yap, M.; Kok, M.R.; Boruff, B.; Murray, K.; Vickery, A.; Turlach, B.A.; Whyatt, D. Overcoming inefficiencies arising due to the impact of the modifiable areal unit problem on single-aggregation disease maps. Int. J. Health Geogr. 2020, 19, 40.

| PPH | N (%) Events ¹ | Sensitivity Threshold | Sensitivity (95% quantile interval) | Specificity (95% quantile interval) | PPV (95% quantile interval) | NPV (95% quantile interval) | N (%) Predicted ¹ |
|---|---|---|---|---|---|---|---|
| **State-wide models** | | | | | | | |
| HRF | 14 (6.3) | 0.5 | 0.531 (0.421–0.632) | 0.993 (0.980–1.000) | 0.872 (0.717–1.000) | 0.958 (0.948–0.966) | 11 (5) |
| COPD | 16 (7.2) | 0.4 | 0.412 (0.286–0.524) | 0.972 (0.955–0.985) | 0.602 (0.468–0.750) | 0.941 (0.929–0.951) | 17 (7.7) |
| HF | 5 (2.3) | 0.3 | 0.347 (0.250–0.481) | 0.990 (0.976–1.000) | 0.66 (0.444–1.000) | 0.964 (0.958–0.971) | 9 (4.1) |
| T2D | 12 (5.4) | 0.3 | 0.302 (0.200–0.333) | 0.998 (0.986–1.000) | 0.913 (0.625–1.000) | 0.952 (0.945–0.954) | 5 (2.3) |
| **Metropolitan models** | | | | | | | |
| HRF | 10 (6.7) | 0.5 | 0.529 (0.364–0.636) | 0.987 (0.971–1.000) | 0.766 (0.556–1.000) | 0.963 (0.951–0.972) | 7 (4.7) |
| COPD | 11 (7.4) | 0.4 | 0.449 (0.286–0.571) | 0.953 (0.926–0.978) | 0.499 (0.378–0.636) | 0.943 (0.928–0.955) | 8 (5.4) |
| HF | 5 (3.4) | 0.3 | 0.500 (0.500–0.500) | 0.971 (0.952–0.986) | 0.319 (0.222–0.500) | 0.986 (0.986–0.986) | 2 (1.3) |
| T2D | 4 (2.7) | 0.3 | 0.325 (0.125–0.375) | 0.956 (0.943–0.972) | 0.297 (0.143–0.375) | 0.961 (0.951–0.965) | 7 (4.7) |
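The validation statistics in the table above follow the standard confusion-matrix definitions: sensitivity = TP/(TP + FN), specificity = TN/(TN + FP), PPV = TP/(TP + FP) and NPV = TN/(TN + FN). The sketch below computes them for one set of predictions and summarises repeated cross-validation estimates with an equal-tailed 95% quantile interval. Two choices here are assumptions made for illustration, because the table does not state how its point estimates were obtained: the mean is used as the point estimate, and undefined (NaN) estimates are dropped. Such NaN estimates arise, for example, for PPV when no area is predicted to be a hotspot.

```python
import numpy as np


def classification_metrics(y_true, y_pred):
    """Sensitivity, specificity, PPV and NPV from binary labels.

    A ratio with a zero denominator is returned as NaN.
    """
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(y_true & y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    tn = int(np.sum(~y_true & ~y_pred))

    def ratio(num, den):
        return num / den if den > 0 else float("nan")

    return {"sensitivity": ratio(tp, tp + fn),
            "specificity": ratio(tn, tn + fp),
            "ppv": ratio(tp, tp + fp),
            "npv": ratio(tn, tn + fn)}


def summarise_repeats(values, level=0.95):
    """Mean and equal-tailed quantile interval over repeated CV estimates."""
    values = np.asarray(values, dtype=float)
    values = values[~np.isnan(values)]  # ignore undefined estimates
    alpha = (1.0 - level) / 2.0
    lower, upper = np.quantile(values, [alpha, 1.0 - alpha])
    return float(values.mean()), float(lower), float(upper)
```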

| Variable | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 | Model 6 | Model 7 | Model 8 | Model 9 | Model 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Number of past consecutive years classified as a hotspot | 1.57 | 1.56 | 1.57 | 1.56 | 1.56 | 1.55 | 1.64 | 1.56 | 1.56 | 1.36 |
| IER percentile | 0.93 | | | | | | | | | |
| IEO percentile | 0.99 | | | | | | | | | |
| Percentage of Aboriginal individuals ¹ | 3.43 | 3.44 | 3.59 | 3.33 | 3.47 | 6.03 | 2.55 | 3.55 | 3.36 | 1.20 |
| Percentage of male individuals ² | 0.71 | 0.76 | 0.73 | 0.71 | 0.74 | 0.87 | 0.75 | 0.73 | 0.83 | |
| Percentage of individuals aged 75 or above | 0.99 | 0.99 | | | | | | | | |
| Weighted distance to nearest ED (km) ¹ | 1.05 | 1.02 | 1.02 | 0.74 | 1.06 | | | | | |
| Weighted distance to nearest GP (km) ¹ | 0.43 | | | | | | | | | |
| GP accessibility index percentile | 1.00 | 1.00 | 1.00 | | | | | | | |
| Validation statistics | | | | | | | | | | |
| Sensitivity | 0.531 | 0.504 | 0.530 | 0.528 | 0.520 | 0.504 | 0.527 | 0.517 | 0.526 | 0.516 |
| PPV | 0.872 | 0.870 | 0.869 | 0.868 | 0.867 | 0.865 | 0.864 | 0.862 | 0.861 | 0.859 |
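In the table above the ten models are listed in decreasing order of PPV (0.872 down to 0.859). This ordering is consistent with ranking every candidate covariate subset by its cross-validated PPV. The sketch below shows only that ranking step, under this assumption. The `evaluate` argument is a hypothetical stand-in for the repeated cross-validation procedure, and any restrictions the authors may have placed on admissible subsets are not modelled. With p candidate terms there are 2^p − 1 non-empty subsets (1023 for p = 10), so exhaustive enumeration is only practical for modest p.

```python
from itertools import combinations


def all_subsets(candidates, max_size=None):
    """Yield every non-empty subset of the candidate covariates."""
    max_size = len(candidates) if max_size is None else max_size
    for size in range(1, max_size + 1):
        yield from combinations(candidates, size)


def rank_subsets_by_ppv(candidates, evaluate, top_n=10, max_size=None):
    """Evaluate every subset and return the top_n ranked by PPV.

    `evaluate` is a hypothetical callable mapping a tuple of covariate names
    to (sensitivity, ppv), e.g. averaged over repeated cross-validation.
    """
    results = []
    for subset in all_subsets(candidates, max_size):
        sensitivity, ppv = evaluate(subset)
        results.append({"covariates": subset,
                        "sensitivity": sensitivity,
                        "ppv": ppv})
    # Highest PPV first; ties keep enumeration order because sort is stable.
    results.sort(key=lambda r: r["ppv"], reverse=True)
    return results[:top_n]
```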

| Variable | State-wide HRF | State-wide COPD | State-wide HF | State-wide T2D | Metropolitan HRF | Metropolitan COPD | Metropolitan HF | Metropolitan T2D |
|---|---|---|---|---|---|---|---|---|
| Number of consecutive past years classified as a hotspot | √ | √ | √ | √ | √ | | | |
| IRSAD percentile | √ | | | | | | | |
| IER percentile | √ | √ | | | | | | |
| IEO percentile | √ | | | | | | | |
| Percentage of Aboriginal individuals ¹ | √ | √ | √ | √ | √ | √ | | |
| Percentage of male individuals ² | √ | √ | √ | | | | | |
| Percentage of individuals aged 75 or above | √ | √ | √ | √ | | | | |
| Percentage of individuals aged 75 or above (quadratic term) | √ | √ | √ | | | | | |
| Number of consecutive past years classified as a hotspot: IER percentile ³ | √ | | | | | | | |
| Weighted distance to nearest ED (km) ¹ | √ | √ | √ | | | | | |
| Weighted distance to nearest GP (km) ¹ | √ | √ | | | | | | |
| GP accessibility index percentile | √ | | | | | | | |

| Model | PPH | Current Hotspots: Sensitivity | Current Hotspots: PPV | Past Persistent Hotspots: Sensitivity | Past Persistent Hotspots: PPV |
|---|---|---|---|---|---|
| State-wide | HRF | 0.684 | 0.406 | 0.421 | 0.8 |
| State-wide | COPD | 0.667 | 0.333 | 0.143 | 0.6 |
| State-wide | HF | 0.583 | 0.28 | 0.083 | 0.5 |
| State-wide | T2D | 0.533 | 0.286 | 0.267 | 0.571 |
| Metropolitan | HRF | 0.545 | 0.273 | 0.364 | 0.571 |
| Metropolitan | COPD | 0.429 | 0.24 | 0.071 | 0.333 |
| Metropolitan | HF | 0.5 | 0.143 | 0 | NA |
| Metropolitan | T2D | 0.125 | 0.063 | 0 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tuson, M.; Turlach, B.; Murray, K.; Kok, M.R.; Vickery, A.; Whyatt, D. Predicting Future Geographic Hotspots of Potentially Preventable Hospitalisations Using All Subset Model Selection and Repeated K-Fold Cross-Validation. Int. J. Environ. Res. Public Health 2021, 18, 10253. https://doi.org/10.3390/ijerph181910253
