Practice Effects, Test–Retest Reliability, and Minimal Detectable Change of the Ruff 2 and 7 Selective Attention Test in Patients with Schizophrenia

Abstract

1. Introduction

2. Materials and Methods

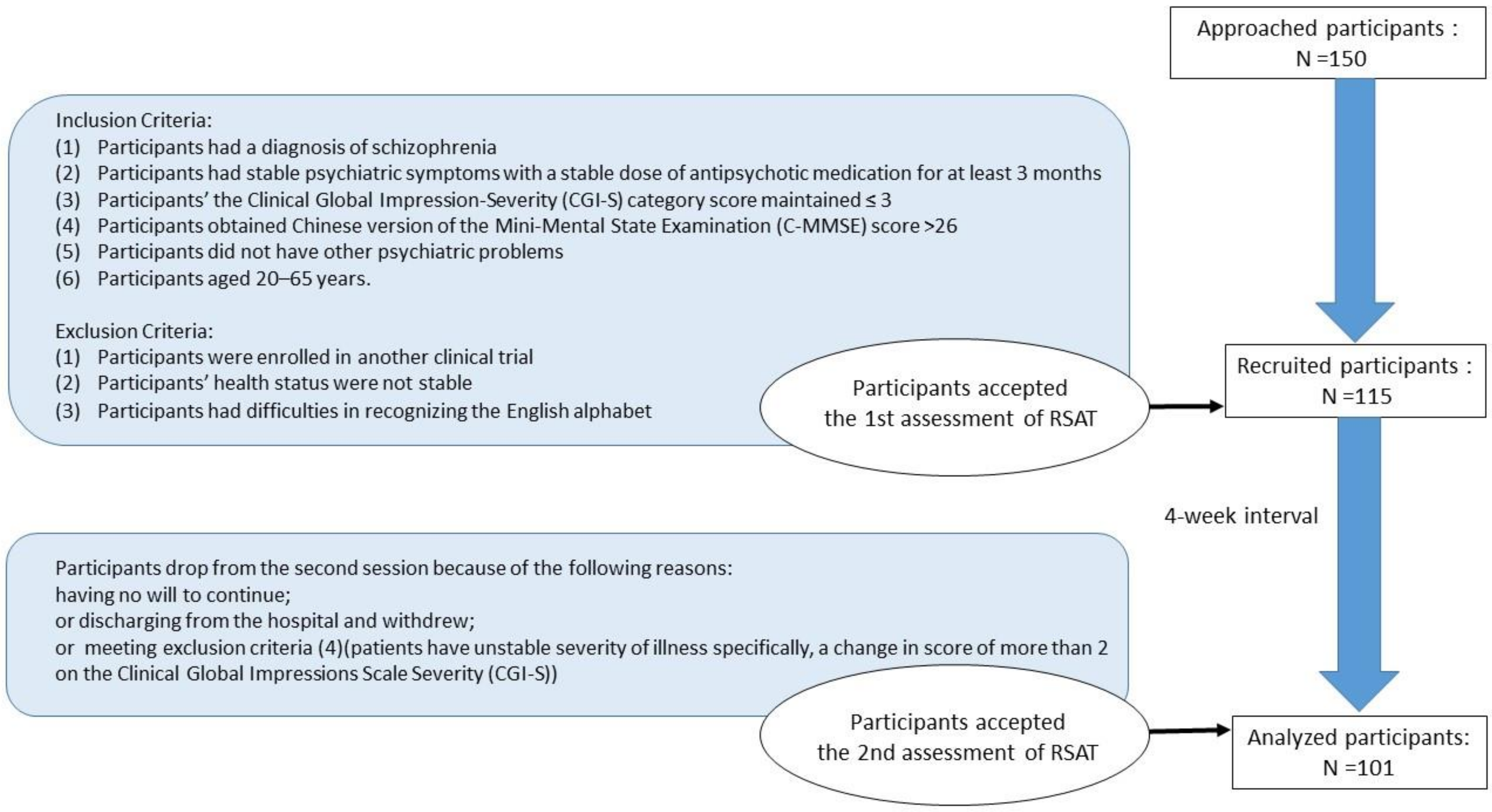

2.1. Participants

2.2. Procedure

2.3. Measures

2.3.1. Ruff 2 and 7 Selective Attention Test (RSAT)

2.3.2. Clinical Global Impression Scale–Severity (CGI-S)

2.3.3. Chinese Version of the Mini-Mental State Examination (C-MMSE)

2.4. Statistical Analysis

2.4.1. Test–Retest Reliability

2.4.2. Practice Effects

2.4.3. Minimal Detectable Change (MDC)

3. Results

3.1. Demographic and Clinical Characteristics of Participants

3.2. Test–Retest Reliability

3.3. Practice Effect

3.4. Minimal Detectable Change (MDC)

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Gerretsen, P.; Voineskos, A.N.; Graff-Guerrero, A.; Menon, M.; Pollock, B.G.; Mamo, D.C.; Mulsant, B.H.; Rajji, T.K. Insight into Illness and Cognition in Schizophrenia in Earlier and Later Life. J. Clin. Psychiatry 2017, 78, e390–e397.

- Sharma, T.; Antonova, L. Cognitive function in schizophrenia. Deficits, functional consequences, and future treatment. Psychiatr. Clin. N. Am. 2003, 26, 25–40.

- Gold, J.M.; Robinson, B.; Leonard, C.J.; Hahn, B.; Chen, S.; McMahon, R.P.; Luck, S.J. Selective Attention, Working Memory, and Executive Function as Potential Independent Sources of Cognitive Dysfunction in Schizophrenia. Schizophr. Bull. 2018, 44, 1227–1234.

- Luck, S.J.; Gold, J.M. The construct of attention in schizophrenia. Biol. Psychiatry 2008, 64, 34–39.

- Leshem, R.; Icht, M.; Bentaur, R.; Ben-David, B.M. Processing of emotions in speech in forensic patients with schizophrenia: Impairments in identification, selective attention, and integration of speech channels. Front. Psychiatry 2020, 11, 601763.

- American Psychiatric Association. The American Psychiatric Association Practice Guideline for the Treatment of Patients with Schizophrenia, 3rd ed.; American Psychiatric Publishing: Washington, DC, USA, 2020; pp. 7–27.

- Kalkstein, S.; Hurford, I.; Gur, R.C. Neurocognition in schizophrenia. Curr. Top. Behav. Neurosci. 2010, 4, 373–390.

- Kindler, J.; Lim, C.K.; Weickert, C.S.; Boerrigter, D.; Galletly, C.; Liu, D.; Jacobs, K.R.; Balzan, R.; Bruggemann, J.; O’Donnell, M.; et al. Dysregulation of kynurenine metabolism is related to proinflammatory cytokines, attention, and prefrontal cortex volume in schizophrenia. Mol. Psychiatry 2020, 25, 2860–2872.

- Mayer, A.R.; Hanlon, F.M.; Teshiba, T.M.; Klimaj, S.D.; Ling, J.F.; Dodd, A.B.; Calhoun, V.D.; Bustillo, J.R.; Toulouse, T. An fMRI study of multimodal selective attention in schizophrenia. Br. J. Psychiatry 2015, 207, 420–428.

- Nuechterlein, K.H.; Barch, D.M.; Gold, J.M.; Goldberg, T.E.; Green, M.F.; Heaton, R.K. Identification of separable cognitive factors in schizophrenia. Schizophr. Res. 2004, 72, 29–39.

- Oranje, B.; Aggernaes, B.; Rasmussen, H.; Ebdrup, B.H.; Glenthoj, B.Y. Selective attention and mismatch negativity in antipsychotic-naïve, first-episode schizophrenia patients before and after 6 months of antipsychotic monotherapy. Psychol. Med. 2017, 47, 2155–2165.

- Lin, S.H.; Yeh, Y.Y. Domain-specific control of selective attention. PLoS ONE 2014, 9, e98260.

- De Fockert, J.W.; Rees, G.; Frith, C.D.; Lavie, N. The role of working memory in visual selective attention. Science 2001, 291, 1803–1806.

- Lavie, N.; Hirst, A.; DeFockert, J.W.; Viding, E. Load theory of selective attention and cognitive control. J. Exp. Psychol. Gen. 2004, 133, 339–354.

- Iwanami, A.; Isono, H.; Okajima, Y.; Noda, Y.; Kamijima, K. Event-related potentials during a selective attention task with short interstimulus intervals in patients with schizophrenia. J. Psychiatry Neurosci. 1998, 23, 45–50.

- Silberstein, J.; Harvey, P. Cognition, social cognition, and Self-assessment in schizophrenia: Prediction of different elements of everyday functional outcomes. CNS Spectr. 2019, 24, 88–93.

- Atkinson, G.; Nevill, A.M. Statistical methods for assessing measurement error (reliability) in variables relevant to sports medicine. Sport Med. 1998, 26, 217–238.

- Downing, S.M. Reliability: On the reproducibility of assessment data. Med. Educ. 2004, 38, 1006–1012.

- Aldridge, V.K.; Dovey, T.M.; Wade, A. Assessing Test–retest Reliability of Psychological Measures. Eur. Psychol. 2017, 22, 207–218.

- Chen, Y.M.; Huang, Y.J.; Huang, C.Y.; Lin, G.H.; Liaw, L.J.; Hsieh, C.L. Test–retest reliability and minimal detectable change of two simplified 3-point balance measures in patients with stroke. Eur. J. Phys. Rehabil. Med. 2017, 53, 719–724.

- Portney, L.G.; Watkins, M.P. Foundations of Clinical Research: Applications to Practice, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2009; pp. 64–65.

- Lang, X.; Zhang, W.; Song, X.; Zhang, G.; Du, X.; Zhou, Y.; Li, Z.; Zhang, X.Y. FOXP2 contributes to the cognitive impairment in chronic patients with schizophrenia. Aging 2019, 11, 6440–6448.

- Green, M.F.; Nuechterlein, K.H.; Gold, J.M.; Barch, D.M.; Cohen, J.; Essock, S.; Fenton, W.S.; Frese, F.; Goldberg, T.E.; Heaton, R.K.; et al. Approaching a consensus cognitive battery for clinical trials in schizophrenia: The NIMH-MATRICS conference to select cognitive domains and test criteria. Biol. Psychiatry 2004, 56, 301–307.

- Pereira, D.R.; Costa, P.; Cerqueira, J.J. Repeated assessment and practice effects of the written Symbol Digit Modalities Test using a short inter-test interval. Arch. Clin. Neuropsychol. 2015, 30, 424–434.

- Tang, S.F.; Chen, I.H.; Chiang, H.Y.; Wu, C.T.; Hsueh, I.P.; Yu, W.H.; Hsieh, C.L. A comparison between the original and Tablet-based Symbol Digit Modalities Test in patients with schizophrenia: Test–retest agreement, random measurement error, practice effect, and ecological validity. Psychiatry Res. 2018, 260, 199–206.

- Duff, K.; Beglinger, L.J.; Schultz, S.K.; Moser, D.J.; McCaffrey, R.J.; Haase, R.F.; Westervelt, H.J.K.; Langbehn, D.R.; Paulsen, J.S.; Huntington’s Study Group. Practice effects in the prediction of long-term cognitive outcome in three patient samples: A novel prognostic index. Arch. Clin. Neuropsychol. 2007, 22, 15–24.

- Furlan, L.; Annette, S. The applicability of standard error of measurement and minimal detectable change to motor learning research—A behavioral study. Front. Hum. Neurosci. 2018, 12, 95.

- Ruff, R.M.; Niemann, H.; Allen, C.C.; Farrow, C.E.; Wylie, T. The Ruff 2 and 7 Selective Attention Test: A Neuropsychological Application. Percept. Mot. Skills 1992, 75, 1311–1319.

- Ruff, R.M.; Evans, R.W.; Light, R.H. Automatic detection vs controlled search: A paper-and-pencil approach. Percept. Mot. Skills 1986, 62, 407–416.

- Weiss, K.M. A simple clinical assessment of attention in schizophrenia. Psychiatry Res. 1996, 60, 147–154.

- Messinis, L.; Kosmidis, M.H.; Tsakona, I.; Georgiou, V.; Aretouli, E.; Papathanasopoulos, P. Ruff 2 and 7 Selective Attention Test: Normative data, discriminant validity and test–retest reliability in Greek adults. Arch. Clin. Neuropsychol. 2007, 22, 773–785.

- Kadri, A.; Slimani, M.; Bragazzi, N.L.; Tod, D.; Azaiez, F. Effect of Taekwondo Practice on Cognitive Function in Adolescents with Attention Deficit Hyperactivity Disorder. Int. J. Environ. Res. Public Health 2019, 16, 204.

- Cicerone, K.D.; Azulay, J. Diagnostic utility of attention measures in postconcussion syndrome. Clin. Neuropsychol. 2002, 16, 280–289.

- Knight, R.G.; McMahon, J.; Skeaff, C.M.; Green, T.J. Reliable change indices for the Ruff 2 and 7 Selective Attention Test in older adults. Appl. Neuropsychol. 2010, 17, 239–245.

- Fernández-Marcos, T.; de la Fuente, C.; Santacreu, J. Test–retest reliability and convergent validity of attention measures. Appl. Neuropsychol. Adult 2018, 25, 464–472.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Publishing: Washington, DC, USA, 2013; pp. 87–122.

- Pinna, F.; Deriu, L.; Diana, E.; Perra, V.; Randaccio, R.P.; Sanna, L.; Tusconi, M.; Carpiniello, B.; Cagliari Recovery Study Group. Clinical Global Impression-severity score as a reliable measure for routine evaluation of remission in schizophrenia and schizoaffective disorders. Ann. Gen. Psychiatry 2015, 14, 6.

- Guo, N.W.; Liu, H.C.; Wong, P.F.; Liao, K.K.; Yan, S.H.; Lin, K.P.; Hsu, T.C. Chinese version and norms of the Mini-Mental State Examination. J. Rehabil. Med. Assoc. 1988, 16, e59.

- Wu, B.J.; Lin, C.H.; Tseng, H.F.; Liu, W.M.; Chen, W.C.; Huang, L.S.; Sun, H.J.; Chiang, S.K.; Lee, S.M. Validation of the Taiwanese Mandarin version of the Personal and Social Performance scale in a sample of 655 stable schizophrenic patients. Schizophr. Res. 2013, 146, 34–39.

- Dymowski, A.R.; Owens, J.A.; Ponsford, J.L.; Willmott, C. Speed of processing and strategic control of attention after traumatic brain injury. J. Clin. Exp. Neuropsychol. 2015, 37, 1024–1035.

- Ruff, R.M.; Allen, C.C. Ruff 2 & 7 Selective Attention Test: Professional Manual; Psychological Assessment Resources: Lutz, FL, USA, 1996; pp. 1–65.

- Clark, S.L.; Souza, R.P.; Adkins, D.E.; Åberg, K.; Bukszár, J.; McClay, J.L.; Sullivan, P.F.; van den Oord, E.J.C.G. Genome-wide association study of patient-rated and clinician-rated global impression of severity during antipsychotic treatment. Pharmacogenet. Genomics 2013, 23, 69–77.

- Huang, R.R.; Chen, Y.S.; Chen, C.C.; Chou, F.H.C.; Su, S.F.; Chen, M.C.; Kou, M.H.; Chang, L.H. Quality of life and its associated factors among patients with two common types of chronic mental illness living in Kaohsiung City. Psychiatry Clin. Neurosci. 2012, 66, 482–490.

- Guy, W. CGI Clinical Global Impressions; Government Printing Office: Washington, DC, USA, 1976; pp. 218–222.

- Leucht, S.; Davis, J.M.; Engel, R.R.; Kissling, W.; Kane, J.M. Definitions of response and remission in schizophrenia: Recommendations for their use and their presentation. Acta Psychiatr. Scand. 2009, 119, 7–14.

- Haro, J.M.; Kamath, S.A.; Ochoa, S.; Novick, D.; Rele, K.; Fargas, A.; Rodríguez, M.J.; Rele, R.; Orta, J.; Kharbeng, A.; et al. SOHO Study Group. The Clinical Global Impression-Schizophrenia scale: A simple instrument to measure the diversity of symptoms present in schizophrenia. Acta Psychiatr. Scand. Suppl. 2003, 416, 16–23.

- Allen, M.H.; Daniel, D.G.; Revicki, D.A.; Canuso, C.M.; Turkoz, I.; Fu, D.J.; Alphs, L.; Ishak, K.J.; Lindenmayer, J.P. Development and psychometric evaluation of a clinical global impression for schizoaffective disorder scale. Innov. Clin. Neurosci. 2012, 9, 15–24.

- Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 1975, 12, 189–198.

- Folstein, M.F.; Robins, L.N.; Helzer, J.E. The Mini-Mental State Examination. Arch. Gen. Psychiat. 1983, 40, 812.

- Rankin, G.; Stokes, M. Reliability of assessment tools in rehabilitation: An illustration of appropriate statistical analyses. Clin. Rehabil. 1998, 12, 187–199.

- Prince, B.; Makrides, L.; Richman, J. Research methodology and applied statistics. Part 2: The literature search. Physiother. Can. 1980, 32, 201–206.

- Shrout, P.E.; Fleiss, J.L. Intraclass correlations: Uses in assessing rater reliability. Psychol. Bull. 1979, 86, 420–428.

- Bushnell, C.D.; Johnston, D.C.C.; Goldstein, L.B. Retrospective assessment of initial stroke severity comparison of the NIH stroke scale and the Canadian Neurological Scale. Stroke 2001, 32, 656–660.

- Kazis, L.E.; Anderson, J.J.; Meenan, R.F. Effect sizes for interpreting changes in health status. Med. Care 1989, 27, S178–S189.

- Cohen, R.M.; Nordahl, T.E.; Semple, W.E.; Andreason, P.; Pickar, D. Abnormalities in the distributed network of sustained attention predict neuroleptic treatment response in schizophrenia. Neuropsychopharmacology 1998, 19, 36–47.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988.

- Bland, J.M.; Altman, D. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986, 327, 307–310.

- Dobson, F.; Hinman, R.S.; Hall, M.; Marshall, C.J.; Sayer, T.; Anderson, C.; Newcomb, N.; Stratford, P.W.; Bennell, K.L. Reliability and measurement error of the Osteoarthritis Research Society International (OARSI) recommended performance-based tests of physical function in people with hip and knee osteoarthritis. Osteoarthr. Cartil. 2017, 25, 1792–1796.

- Liaw, L.J.; Hsieh, C.L.; Lo, S.K.; Chen, H.M.; Lee, S.; Lin, J.H. The relative and absolute reliability of two balance performance measures in chronic stroke patients. Disabil. Rehabil. 2008, 30, 656–661.

- Bland, J.M.; Altman, D.G. Applying the right statistics: Analyses of measurement studies. Ultrasound Obstet. Gynecol. 2003, 22, 85–93.

- Beckerman, H.; Roebroeck, M.E.; Lankhorst, G.J.; Becher, J.G.; Bezemer, P.D.; Verbeek, A.L.M. Smallest real difference, a link between reproducibility and responsiveness. Qual. Life Res. 2001, 10, 571–578.

- Lemay, S.; Bédard, M.A.; Rouleau, I.; Tremblay, P.L.G. Practice effect and test–retest reliability of attentional and executive tests in middle-aged to elderly subjects. Clin. Neuropsychol. 2004, 18, 284–302.

- Hunter, J.P.; Marshal, R.N.; McNair, P. Reliability of Biomechanical Variables of Sprint Running. Med. Sci. Sports Exerc. 2004, 36, 850–861.

- Souza, A.C.D.; Alexandre, N.M.C.; Guirardello, E.D.B. Psychometric properties in instruments evaluation of reliability and validity. Epidemiol. Serv. Saúde 2017, 26, 649–659.

- Hutcheon, J.A.; Chiolero, A.; Hanley, J.A. Random measurement error and regression dilution bias. BMJ 2010, 340, c2289.

- Muliady, S.; Malik, K.; Amir, N.; Kaligis, F. Validity and reliability of the Indonesian version of brief assessment of cognition in schizophrenia (BACS-I). J. Int. Dent. Med. Res. 2019, 12, 263–267.

- Chen, K.W.; Lin, G.H.; Chen, N.C.; Wang, J.K.; Hsieh, C.L. Practice Effects and Test–Retest Reliability of the Continuous Performance Test, Identical Pairs Version in Patients with Schizophrenia over Four Serial Assessments. Arch. Clin. Neuropsychol. 2020, 35, 545–552.

- Shih, Y.N.; Hsu, J.L.; Wang, Y.C.; Wu, C.C.; Liao, Y. Test–retest reliability and criterion-related validity of Shih–Hsu test of attention between people with and without schizophrenia. Br. J. Occup. Ther. 2021, 0308022621991774.

- Chiu, E.C.; Lee, S.C. Test–retest reliability of the Wisconsin Card Sorting Test in people with schizophrenia. Disabil. Rehabil. 2021, 43, 996–1000.

- Chen, K.W.; Lee, Y.C.; Yu, T.Y.; Cheng, L.J.; Chao, C.Y.; Hsieh, C.L. Test–retest reliability and convergent validity of the test of nonverbal intelligence-in patients with schizophrenia. BMC Psychiatry 2021, 21, 39.

| Variable | Mean | SD |
|---|---|---|
| Age (years) | 44.0 | 9.2 |
| Age at onset (years) | 23.3 | 6.5 |
| Psychiatric history (years) | 20.7 | 9.2 |
| Education (years) | 9.4 | 1.9 |
| C-MMSE | 29.9 | 2.5 |

| Variable | Category | N | % |
|---|---|---|---|
| Gender | Male/Female | 60/41 | 59.4/40.6 |
| CGI-S | Not at all | 11 | 10.9 |
| | Mild | 52 | 51.5 |
| | Borderline | 38 | 37.6 |

| Measure | 1st Test M (SD) | 2nd Test M (SD) | Difference M (SD) | p-Value | Effect Size | ICC (95% CI) | SEM | MDC (MDC%) | Heteroscedasticity (Pearson r) |
|---|---|---|---|---|---|---|---|---|---|
| ADS | 143.3 (49.7) | 150.3 (53.6) | 7.03 (21.3) | 0.001 * | −0.14 | 0.91 (0.86–0.94) | 14.9 | 41.3 (28.1) | 0.38 |
| ADE | 5.8 (7.4) | 4.5 (6.1) | −1.4 (5.1) | 0.008 * | 0.18 | 0.70 (0.59–0.79) | 4.1 | 11.3 (218.9) | 0.58 |
| ADA | 95.8 (5.2) | 97.1 (4.5) | 1.2 (3.7) | 0.001 * | −0.24 | 0.69 (0.56–0.79) | 2.9 | 8.0 (8.3) | −0.58 |
| CSS | 118.3 (38.0) | 129.7 (40.3) | 11.4 (20.1) | <0.001 * | −0.30 | 0.83 (0.66–0.90) | 15.7 | 43.4 (35.0) | 0.28 |
| CSE | 10.8 (9.2) | 8.8 (7.8) | −2.1 (6.0) | 0.001 * | 0.23 | 0.73 (0.60–0.82) | 4.8 | 13.3 (135.5) | 0.41 |
| CSA | 91.3 (8.5) | 93.4 (7.0) | 2.1 (4.7) | <0.001 * | −0.25 | 0.79 (0.65–0.87) | 3.9 | 10.8 (11.6) | 0.31 |
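
The SEM, MDC, MDC%, and effect-size columns above can be approximately recomputed from the reported means, SDs, and ICCs. The sketch below is not taken from the article's methods text; it assumes the commonly used formulas SEM = SD × √(1 − ICC) with the first-test SD, MDC = 1.96 × √2 × SEM, MDC% = MDC divided by the mean of both test means, and effect size = (first-test mean − second-test mean) / first-test SD. The function names are hypothetical. Under these assumptions the results agree with the tabulated values to within rounding of the inputs.

```python
import math

# Values copied from the reliability table above:
# measure -> (1st-test mean, 2nd-test mean, 1st-test SD, ICC)
TABLE = {
    "ADS": (143.3, 150.3, 49.7, 0.91),
    "ADE": (5.8, 4.5, 7.4, 0.70),
    "ADA": (95.8, 97.1, 5.2, 0.69),
    "CSS": (118.3, 129.7, 38.0, 0.83),
    "CSE": (10.8, 8.8, 9.2, 0.73),
    "CSA": (91.3, 93.4, 8.5, 0.79),
}


def sem(sd: float, icc: float) -> float:
    """Standard error of measurement (assumed formula: SD * sqrt(1 - ICC))."""
    return sd * math.sqrt(1.0 - icc)


def mdc95(sem_value: float) -> float:
    """Minimal detectable change at 95% confidence (assumed: 1.96 * sqrt(2) * SEM)."""
    return 1.96 * math.sqrt(2.0) * sem_value


def mdc_percent(mdc: float, mean1: float, mean2: float) -> float:
    """MDC as a percentage of the mean of both test means (assumed definition)."""
    return 100.0 * mdc / ((mean1 + mean2) / 2.0)


def effect_size(mean1: float, mean2: float, sd1: float) -> float:
    """Practice-effect size (assumed: mean difference over first-test SD)."""
    return (mean1 - mean2) / sd1


if __name__ == "__main__":
    for name, (m1, m2, sd1, icc) in TABLE.items():
        s = sem(sd1, icc)
        m = mdc95(s)
        print(
            f"{name}: SEM={s:.1f} MDC={m:.1f} "
            f"MDC%={mdc_percent(m, m1, m2):.1f} ES={effect_size(m1, m2, sd1):.2f}"
        )
```

Because the inputs are already rounded to one or two decimals, small discrepancies in the last digit (most visibly in the MDC% of the error scores, whose means are close to zero) are expected.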

| Author | Year | Participants | Task | Results |
|---|---|---|---|---|
| Lemay et al. [62] | 2004 | Middle-aged to elderly | RSAT | Speed ICC: 0.82; Accuracy ICC: 0.68 |
| Messinis et al. [31] | 2007 | Greek adults | RSAT | Speed ICC: 0.94–0.98; Accuracy ICC: 0.73–0.89 |
| Knight et al. [34] | 2010 | Older adults | RSAT | Speed SEM: 7.46–7.93; Speed practice effect: 3.4–6.1; Accuracy SEM: 3.00–3.65; Accuracy practice effect: 0.1–0.3 |
| Tang et al. [25] | 2018 | Patients with schizophrenia | SDMT | ICC: 0.91–0.94; SEM: 3.0–3.8; Effect sizes: 0.02–0.35 |
| | | | T-SDMT | ICC: 0.89–0.94; SEM: 2.5–3.3; Effect sizes: 0.07–0.43 |
| Muliady et al. [66] | 2019 | Patients with schizophrenia | BACS-I | ICC: 0.94 |
| Chen et al. [67] | 2020 | Patients with schizophrenia | CPT-IP | ICC: 0.62–0.88; MDC%: 33.8–110.8; Effect sizes: −0.13–0.24 |
| Shih et al. [68] | 2021 | Patients with schizophrenia | SHTA | ICC: 0.67; MDC%: 12.1 |
| Chiu et al. [69] | 2021 | Patients with schizophrenia | WCST | ICC: 0.7; MDC: 3.3–42.0; Effect sizes: 0.03–0.13 |
| Chen et al. [70] | 2021 | Patients with schizophrenia | TONI-4 | ICC: 0.73; MDC: 5.1; MDC%: 14.2; Effect sizes: −0.03 |
| The present study | 2021 | Patients with schizophrenia | RSAT | ICC: 0.69–0.91; MDC: 8.0–43.4; MDC%: 8.3–218.9; Effect sizes: −0.14–0.30 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, P.; Li, P.-C.; Liu, C.-H.; Lin, H.-Y.; Huang, C.-Y.; Hsieh, C.-L. Practice Effects, Test–Retest Reliability, and Minimal Detectable Change of the Ruff 2 and 7 Selective Attention Test in Patients with Schizophrenia. Int. J. Environ. Res. Public Health 2021, 18, 9440. https://doi.org/10.3390/ijerph18189440