Six Sigma in Health Literature, What Matters?

Abstract

1. Introduction

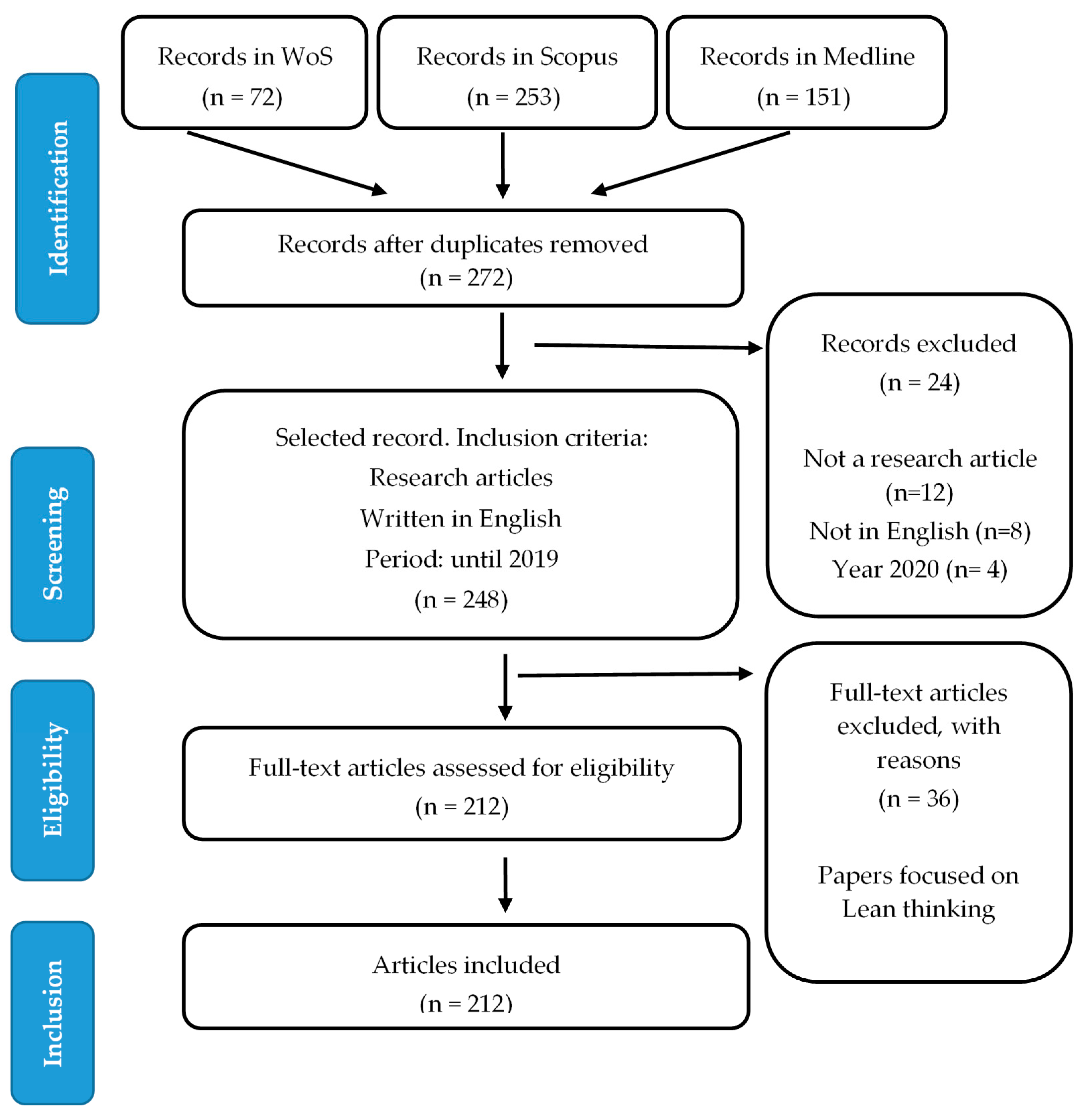

2. Method

2.1. Search Strategy

- 1st level (terms related to the methodology should appear in the title): “six sigma” or “six-sigma” or DMAIC;

- 2nd level (terms related to quality processes should be in the Topic for WoS and Medline or in TITLE-ABS-KW for Scopus): “quality systems”, “quality improvement”, or “quality management”;

- 3rd level (the activity sector should be in the Topic for WoS and Medline or in TITLE-ABS-KW for Scopus): “health*”.
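
The three levels above can be combined into one boolean query string. The following is a minimal sketch, not the authors' actual query: it assumes the levels are joined with AND, that the wildcard term is `health*`, and that each database wraps terms in a field template. The templates `TITLE({})` and `TITLE-ABS-KW({})` and the function names are illustrative placeholders that reuse the labels in the list; the exact field syntax should be checked in each database's advanced search help.

```python
# Hypothetical helper: builds a three-level boolean search string.
LEVEL_1 = ["six sigma", "six-sigma", "DMAIC"]          # methodology terms (title)
LEVEL_2 = ["quality systems", "quality improvement",
           "quality management"]                       # quality-process terms
LEVEL_3 = ["health*"]                                  # activity sector (wildcard assumed)


def or_group(terms):
    """Join terms with OR, quoting phrases that contain a space or hyphen."""
    quoted = [f'"{t}"' if (" " in t or "-" in t) else t for t in terms]
    return " OR ".join(quoted)


def build_query(title_template, topic_template):
    """Combine the three levels with AND (assumed) using the given field templates."""
    parts = [
        title_template.format(or_group(LEVEL_1)),
        topic_template.format(or_group(LEVEL_2)),
        topic_template.format(or_group(LEVEL_3)),
    ]
    return " AND ".join(parts)


# Field labels taken from the list above; real syntax may differ per database.
scopus_like = build_query("TITLE({})", "TITLE-ABS-KW({})")
print(scopus_like)
```

Passing the field templates as parameters keeps the term lists in one place, so the same three levels can be rendered for WoS, Medline, or Scopus by changing only the templates.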

2.2. Variables Examined

2.2.1. Dependent Variable

2.2.2. Independent Variables

3. Results

3.1. Traditional Metrics Models

3.2. Altmetrics Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | N | Mean | sd | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Citations per year (c/y) | 212 | 2.42 | 3.18 | 1 | | | | | | | | | | | | |
| 2. FWCI | 212 | 1.52 | 1.90 | 0.570 *** | 1 | | | | | | | | | | | |
| 3. Mendeley readers | 212 | 16.46 | 24.60 | 0.581 *** | 0.607 *** | 1 | | | | | | | | | | |
| 4. Abstract views | 157 | 76.30 | 131.47 | 0.215 * | 0.508 *** | 0.524 *** | 1 | | | | | | | | | |
| 5. URS | 76 | 46.39 | 23.61 | 0.128 | −0.199 | −0.141 | −0.165 | 1 | | | | | | | | |
| 6. N_Authors | 212 | 3.68 | 2.80 | −0.327 * | −0.371 | −0.458 ** | −0.308 | 0.182 | 1 | | | | | | | |
| 7. 1st author citations | 212 | 217.36 | 772.18 | −0.027 | −0.108 | −0.198 ** | −0.292 | −0.131 | −0.078 ** | 1 | | | | | | |
| 8. 1st author N_papers | 212 | 7.95 | 9.95 | 0.158 ** | −0.121 * | −0.198 ** | −0.157 | −0.076 | −0.057 ** | 0.589 *** | 1 | | | | | |
| 9. N_fields | 212 | 2.15 | 1.07 | −0.336 | −0.505 | −0.536 | −0.554 * | 0.121 * | 0.309 | 0.023 | 0.312 | 1 | | | | |
| 10. SJR | 212 | 0.61 | 0.55 | −0.342 ** | −0.287 * | −0.188 ** | 0.159 | −0.377 | 0.119 *** | 0.154 ** | −0.154 ** | −0.185 | 1 | | | |
| 11. Paper tenure | 212 | 8.36 | 5.41 | 0.178 | −0.132 | −0.302 *** | −0.336 ** | −0.009 *** | 0.116 *** | 0.168 | 0.409 | 0.190 | 0.081 *** | 1 | | |
| 12. N_references | 159 | 28.52 | 22.17 | 0.427 *** | −0.015 | 0.397 ** | 0.185 | −0.176 | −0.101 | −0.036 | 0.078 | 0.027 | −0.065 | −0.186 * | 1 | |
| 13. N_keywords | 212 | 2.94 | 2.76 | 0.369 *** | 0.260 | 0.215 *** | 0.262 * | 0.012 | −0.202 * | 0.181 | 0.375 | 0.146 *** | −0.239 | −0.076 *** | 0.576 * | 1 |

Dependent variables: Citations Per Year (Models 1a and 1b) and FWCI (Models 2a and 2b). Models 1a and 2a: Bibliometric; Models 1b and 2b: Content. Fit statistics (AIC onward) are reported once per model, in that model's EST column.

| Variable | 1a EST | 1a SE | 1b EST | 1b SE | 2a EST | 2a SE | 2b EST | 2b SE |
|---|---|---|---|---|---|---|---|---|
| Intercept | 0.157 | 0.366 | −0.346 | 0.527 | 0.732 | 0.418 + | −0.587 | 0.623 |
| N_Authors | 0.028 | 0.024 | | | −0.003 | 0.029 | | |
| N_Keywords | 0.049 | 0.029 | | | 0.017 | 0.034 | | |
| Author_Prof | 0.383 | 0.198 + | | | 0.348 | 0.227 | | |
| Author_Both | −0.125 | 0.163 | | | −0.033 | 0.190 | | |
| 1st author citations | −0.001 | 0.001 | | | 0.000 | 0.000 | | |
| 1st author N_papers | 0.030 | 0.009 ** | | | 0.008 | 0.012 | | |
| N_Fields | −0.057 | 0.068 | | | −0.120 | 0.080 | | |
| Q2 | −0.273 | 0.183 | | | −0.323 | 0.208 | | |
| Q3 | −1.821 | 0.342 *** | | | −2.263 | 0.450 *** | | |
| Q4 | −2.026 | 0.614 *** | | | −2.261 | 0.802 ** | | |
| SJR | −0.144 | 0.167 | | | −0.308 | 0.201 | | |
| Paper tenure | 0.038 | 0.015 * | | | 0.027 | 0.017 | | |
| N_references | 0.014 | 0.003 *** | | | 0.007 | 0.004 + | | |
| OBJcost | | | 0.379 | 0.241 | | | 0.149 | 0.292 |
| OBJtime | | | 0.917 | 0.269 *** | | | 0.743 | 0.327 * |
| OBJwaste | | | 1.478 | 0.432 *** | | | 1.312 | 0.521 * |
| OBJerror | | | 0.256 | 0.229 | | | 0.184 | 0.280 |
| Laboratory | | | −0.080 | 0.465 | | | −0.568 | 0.590 |
| Management | | | −0.251 | 0.438 | | | −0.605 | 0.512 |
| Med&Pharma | | | 0.108 | 0.570 | | | 0.060 | 0.658 |
| Nursing | | | −1.146 | 0.608 + | | | −0.635 | 0.605 |
| Obstetric | | | −0.670 | 0.685 | | | −1.008 | 0.847 |
| Pediatric | | | −2.902 | 1.272 * | | | −2.627 | 1.390 |
| Radiology | | | −0.153 | 0.480 | | | 0.339 | 0.512 |
| Rehab | | | 0.838 | 0.846 | | | 0.699 | 1.055 |
| Surgery&Anesthesiology | | | 0.206 | 0.402 | | | 0.059 | 0.462 |
| Trauma | | | 0.560 | 0.414 | | | 0.057 | 0.491 |
| UCI&Emergency | | | −0.640 | 0.457 | | | −0.520 | 0.518 |
| K_DMAIC | | | 0.362 | 0.399 | | | −0.059 | 0.516 |
| K_Healthcare | | | 0.635 | 0.254 * | | | 0.667 | 0.299 * |
| K_Hospital | | | −0.063 | 0.426 | | | −0.350 | 0.513 |
| K_Lean | | | 0.249 | 0.308 | | | 0.252 | 0.356 |
| K_LSS | | | 0.309 | 0.257 | | | 0.543 | 0.325 + |
| K_Process improvement | | | 1.384 | 0.455 ** | | | 1.827 | 0.507 *** |
| K_Quality improvement | | | 0.510 | 0.234 * | | | 0.785 | 0.283 ** |
| K_Quality management | | | 0.516 | 0.341 | | | 0.440 | 0.435 |
| K_SS | | | −0.110 | 0.224 | | | −0.198 | 0.272 |
| K_Waiting time | | | −0.049 | 0.307 | | | 0.086 | 0.396 |
| AIC | 611.88 | | 302.72 | | 516.15 | | 261.33 | |
| Log-likelihood | −290.94 (df = 15) | | −124.36 (df = 27) | | −243.075 (df = 15) | | −103.67 (df = 27) | |
| Lrtest null. Model (Chi squared) | 100.49 *** | | 70.087 *** | | 63.319 *** | | 58.399 *** | |
| Wald test (F) | 7.509 *** | | 6.299 *** | | 3.521 *** | | 3.428 *** | |
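
The AIC and log-likelihood rows above can be cross-checked against each other. The sketch below assumes the standard definition AIC = 2k − 2·logLik and that the reported `df` is the number of estimated parameters k; the section does not state either, but the reported figures agree with this reading to within rounding. The function name `aic` and the dictionary layout are illustrative.

```python
def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2*logLik (standard definition)."""
    return 2 * n_params - 2 * log_likelihood


# (log-likelihood, df, reported AIC) copied from the table for Models 1a-2b.
reported = {
    "1a": (-290.94, 15, 611.88),
    "1b": (-124.36, 27, 302.72),
    "2a": (-243.075, 15, 516.15),
    "2b": (-103.67, 27, 261.33),
}

for model, (loglik, df, table_aic) in reported.items():
    recomputed = aic(loglik, df)
    # Differences of about 0.01 come from rounding in the printed log-likelihoods.
    print(f"Model {model}: recomputed {recomputed:.2f}, reported {table_aic}")
```

Within each dependent variable, the content models (1b, 2b) show lower AIC than the bibliometric models (1a, 2a), but the correlation table reports different N for some variables, so the two model families may not be fitted on the same observations and the AIC values should be compared with caution.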

Dependent variables: Mendeley Readers (Models 3a and 3b) and Abstract Views (Models 4a and 4b). Models 3a and 4a: Bibliometric; Models 3b and 4b: Content. Fit statistics (AIC onward) are reported once per model, in that model's EST column.

| Variable | 3a EST | 3a SE | 3b EST | 3b SE | 4a EST | 4a SE | 4b EST | 4b SE |
|---|---|---|---|---|---|---|---|---|
| Intercept | 2.841 | 0.340 *** | 1.230 | 0.581 * | 5.410 | 0.665 *** | 2.092 | 0.926 * |
| N_Authors | −0.006 | 0.025 | | | 0.130 | 0.047 ** | | |
| N_Keywords | 0.079 | 0.028 ** | | | 0.139 | 0.053 ** | | |
| Author_Prof | 0.005 | 0.192 | | | −0.213 | 0.375 | | |
| Author_Both | 0.189 | 0.149 | | | −0.381 | 0.291 | | |
| 1st author citations | 0.000 | 0.000 | | | −0.0001 | 0.0002 | | |
| 1st author N_papers | 0.013 | 0.010 | | | 0.006 | 0.019 | | |
| N_Fields | −0.170 | 0.063 ** | | | −0.265 | 0.125 * | | |
| Q2 | 0.163 | 0.180 | | | −0.598 | 0.348 + | | |
| Q3 | −0.819 | 0.119 *** | | | −0.422 | 0.460 | | |
| Q4 | −0.962 | 0.352 ** | | | −1.691 | 0.676 * | | |
| SJR | 0.145 | 0.165 | | | −0.879 | 0.326 ** | | |
| Paper tenure | −0.074 | 0.015 *** | | | −0.072 | 0.031 * | | |
| N_references | 0.011 | 0.003 *** | | | −0.007 | 0.006 | | |
| OBJcost | | | 0.777 | 0.316 * | | | 0.537 | 0.488 |
| OBJtime | | | 0.629 | 0.293 * | | | 1.336 | 0.458 ** |
| OBJwaste | | | 1.795 | 0.649 ** | | | −1.169 | 1.014 |
| OBJerror | | | 0.324 | 0.258 | | | 0.051 | 0.397 |
| Laboratory | | | 0.699 | 0.505 | | | −0.075 | 0.729 |
| Management | | | 0.729 | 0.502 | | | −2.674 | 0.758 *** |
| Med&Pharma | | | 0.259 | 0.631 | | | −0.958 | 0.960 |
| Nursing | | | 0.077 | 0.549 | | | 0.320 | 0.755 |
| Obstetric | | | 0.850 | 0.756 | | | −2.598 | 1.087 * |
| Pediatric | | | −1.730 | 1.076 | | | −2.195 | 1.602 |
| Radiology | | | 0.431 | 0.550 | | | −0.899 | 0.858 |
| Rehab | | | 1.070 | 1.022 | | | 3.298 | 1.460 * |
| Surgery&Anesthesiology | | | 0.414 | 0.482 | | | −0.049 | 0.709 |
| Trauma | | | 0.381 | 0.532 | | | −1.599 | 0.752 * |
| UCI&Emergency | | | 0.469 | 0.508 | | | −1.215 | 0.739 + |
| K_DMAIC | | | 0.268 | 0.457 | | | 0.092 | 0.692 |
| K_Healthcare | | | 0.573 | 0.297 + | | | 2.084 | 0.462 *** |
| K_Hospital | | | −0.558 | 0.475 | | | 1.527 | 0.797 + |
| K_Lean | | | 0.200 | 0.359 | | | −1.338 | 0.546 * |
| K_LSS | | | 0.684 | 0.285 * | | | 2.109 | 0.510 *** |
| K_Process improvement | | | 1.405 | 0.583 ** | | | 3.349 | 0.963 *** |
| K_Quality improvement | | | 0.853 | 0.243 *** | | | 1.684 | 0.420 *** |
| K_Quality management | | | −0.005 | 0.360 | | | 2.990 | 0.712 *** |
| K_SS | | | −0.507 | 0.247 * | | | 0.185 | 0.464 |
| K_Waiting time | | | 0.022 | 0.389 | | | −0.123 | 0.610 |
| AIC | 1179 | | 625.23 | | 1385 | | 700.36 | |
| Log-likelihood | −574.51 (df = 15) | | −285.62 (df = 27) | | −677.5 (df = 15) | | −323.18 (df = 27) | |
| Lrtest null. Model (Chi squared) | 112.22 *** | | 66.136 *** | | 32.128 ** | | 52.338 ** | |
| Wald test (F) | 11.51 *** | | 3.289 *** | | 3.2318 *** | | 6.063 *** | |

| Variable | Citations Per Year | FWCI | Mendeley Readers | Abstract Views |
|---|---|---|---|---|
| 1st author N_papers | positive | | | |
| N_Fields | | | negative | negative |
| Q3 | negative | negative | negative | |
| Q4 | negative | negative | negative | negative |
| Paper tenure | positive | | negative | negative |
| N_references | positive | positive | positive | |
| OBJtime | positive | positive | positive | positive |
| OBJwaste | positive | positive | positive | |
| K_Healthcare | positive | positive | positive | positive |
| K_Process improvement | positive | positive | positive | positive |
| K_Quality improvement | positive | positive | positive | positive |
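
The way a row of this summary follows from the regression tables can be sketched in a few lines. This is an illustration under a stated assumption, not the authors' procedure: a coefficient is treated as an effect whenever its standard error carries any marker (`+`, `*`, `**`, `***`), and the sign of the estimate gives the label. The function name `effect_sign` and the data layout are hypothetical; the numbers are the Paper tenure and N_references estimates from the four bibliometric models.

```python
def effect_sign(estimate, marker):
    """Return 'positive'/'negative' for a flagged coefficient, '' otherwise."""
    if not marker:
        return ""
    return "positive" if estimate > 0 else "negative"


# (estimate, significance marker) for Models 1a, 2a, 3a, 4a, in that order:
# Citations Per Year, FWCI, Mendeley Readers, Abstract Views.
paper_tenure = [(0.038, "*"), (0.027, ""), (-0.074, "***"), (-0.072, "*")]
n_references = [(0.014, "***"), (0.007, "+"), (0.011, "***"), (-0.007, "")]

tenure_row = [effect_sign(est, mark) for est, mark in paper_tenure]
references_row = [effect_sign(est, mark) for est, mark in n_references]
print(tenure_row)
print(references_row)
```

Under this reading, Paper tenure is positive for citations per year and negative for both altmetrics, which matches the corresponding summary row. The summary does not list every flagged variable, so the rule reproduces the rows shown rather than the selection of rows.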

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hernández-Lara, A.-B.; Sánchez-Rebull, M.-V.; Niñerola, A. Six Sigma in Health Literature, What Matters? Int. J. Environ. Res. Public Health 2021, 18, 8795. https://doi.org/10.3390/ijerph18168795
