Viewing Natural vs. Urban Images and Emotional Facial Expressions: An Exploratory Study

Abstract

1. Introduction

1.1. Positive Effects of Viewing Surrogate Nature

1.2. Ekman’s Six Basic Emotions

1.3. Measurement of Emotional Facial Expressions

1.4. Validation of Software for Automated Facial Expression Analysis

1.5. Facial Expressions While Viewing Natural Environment

1.6. The Goals

2. Materials and Methods

2.1. Participants

2.2. Ethical Approval

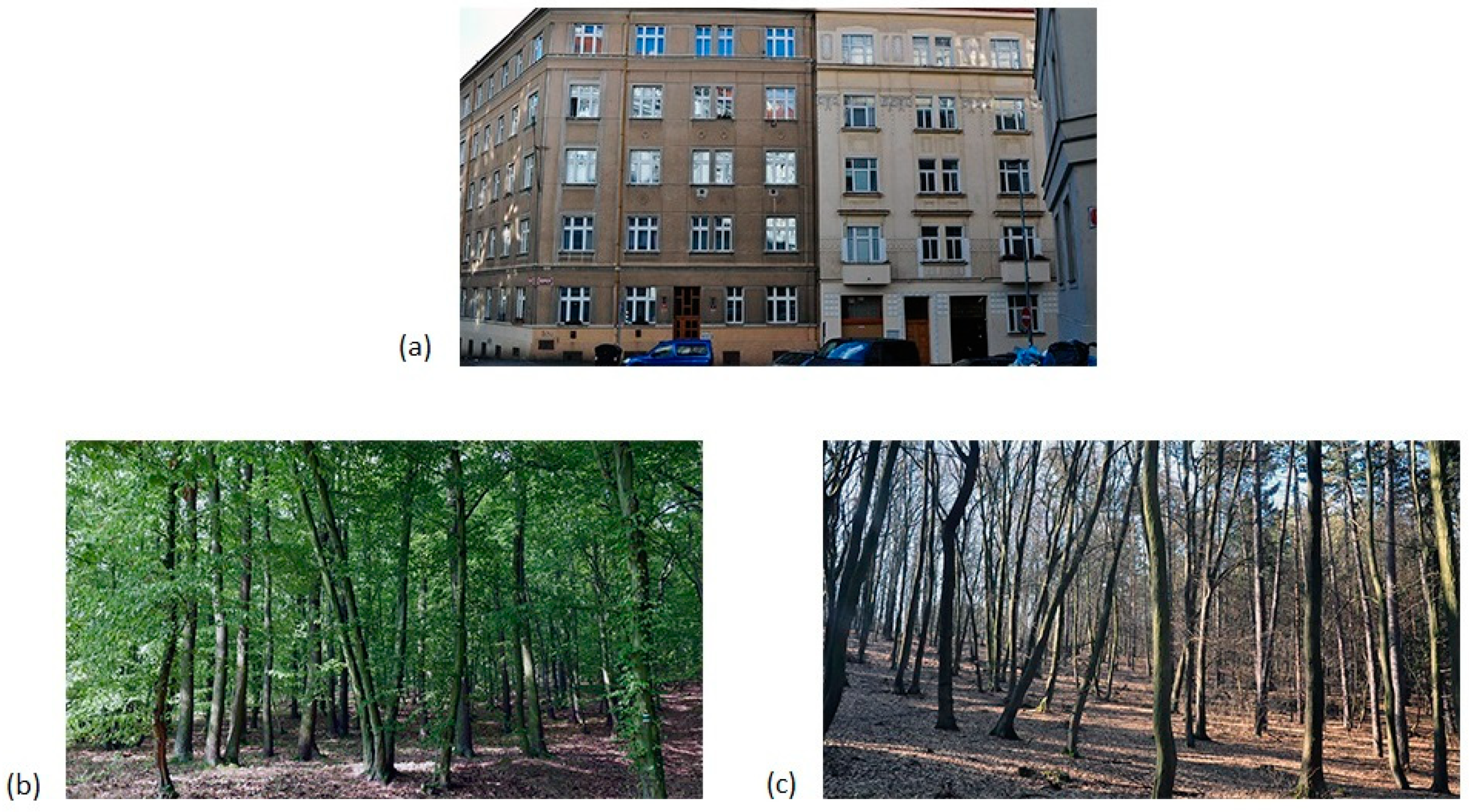

2.3. Stimulus Material

2.4. Apparatus

2.5. Procedure

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Emotion | Urban Scenes: Mean | Urban Scenes: SD | Forest with Foliage: Mean | Forest with Foliage: SD | Forest without Foliage: Mean | Forest without Foliage: SD |
|---|---|---|---|---|---|---|
| Anger | 0.210 | 0.819 | 0.106 | 0.346 | 0.160 | 0.571 |
| Contempt | 0.249 | 0.204 | 0.257 | 0.324 | 0.326 | 0.444 |
| Disgust | 0.483 | 0.152 | 0.522 | 0.413 | 0.474 | 0.160 |
| Fear | 0.219 | 0.743 | 0.126 | 0.442 | 0.126 | 0.525 |
| Joy | 0.143 | 0.789 | 0.098 | 0.675 | 0.143 | 0.768 |
| Sadness | 0.288 | 1.076 | 0.264 | 0.905 | 0.265 | 0.781 |
| Surprise | 0.468 | 1.760 | 0.370 | 1.116 | 0.330 | 0.914 |

| Emotion | df | F | p |
|---|---|---|---|
| Anger | 2, 128 | 1.112 | 0.332 |
| Contempt | 2, 128 | 1.558 | 0.214 |
| Disgust | 2, 128 | 0.728 | 0.485 |
| Fear | 2, 128 | 2.148 | 0.121 |
| Joy | 2, 128 | 0.050 | 0.608 |
| Sadness | 2, 128 | 0.123 | 0.884 |
| Surprise | 2, 128 | 1.640 | 0.200 |
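As a reading aid for the table above, the following minimal sketch shows how a p-value can be derived from an F statistic with the listed degrees of freedom (2, 128). It assumes the p-values are plain upper-tail probabilities of the F distribution, with no sphericity or other correction; the source does not state this. The function name `f_upper_tail_df1_2` and the `reported` dictionary are illustrative names, not part of the study. With a numerator df of exactly 2, the tail probability has the closed form (1 + 2F/df2)^(-df2/2), so no statistics library is needed.

```python
def f_upper_tail_df1_2(f_value: float, df2: int) -> float:
    """Return P(F > f_value) for an F distribution with (2, df2) degrees of freedom.

    Assumption: an uncorrected F test. For numerator df = 2 the upper tail
    reduces to the closed form (1 + 2 * f / df2) ** (-df2 / 2).
    """
    if f_value < 0:
        raise ValueError("F statistics cannot be negative")
    if df2 <= 0:
        raise ValueError("denominator degrees of freedom must be positive")
    return (1.0 + 2.0 * f_value / df2) ** (-df2 / 2.0)


# A few (F, p) pairs copied from the table; df = (2, 128) for every row.
reported = {
    "Anger": (1.112, 0.332),
    "Disgust": (0.728, 0.485),
    "Fear": (2.148, 0.121),
    "Sadness": (0.123, 0.884),
}

for emotion, (f_value, p_reported) in reported.items():
    p_computed = f_upper_tail_df1_2(f_value, 128)
    print(f"{emotion}: F = {f_value:.3f}, reported p = {p_reported:.3f}, "
          f"computed p = {p_computed:.3f}")
```

Because the tabulated F values are rounded to three decimals, small differences in the last digit between reported and computed p-values are to be expected.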

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Franěk, M.; Petružálek, J. Viewing Natural vs. Urban Images and Emotional Facial Expressions: An Exploratory Study. Int. J. Environ. Res. Public Health 2021, 18, 7651. https://doi.org/10.3390/ijerph18147651
