An Interaction-Based Bayesian Network Framework for Surgical Workflow Segmentation

Abstract

1. Introduction

2. Materials and Methods

2.1. Experiment Setup and Data Collection

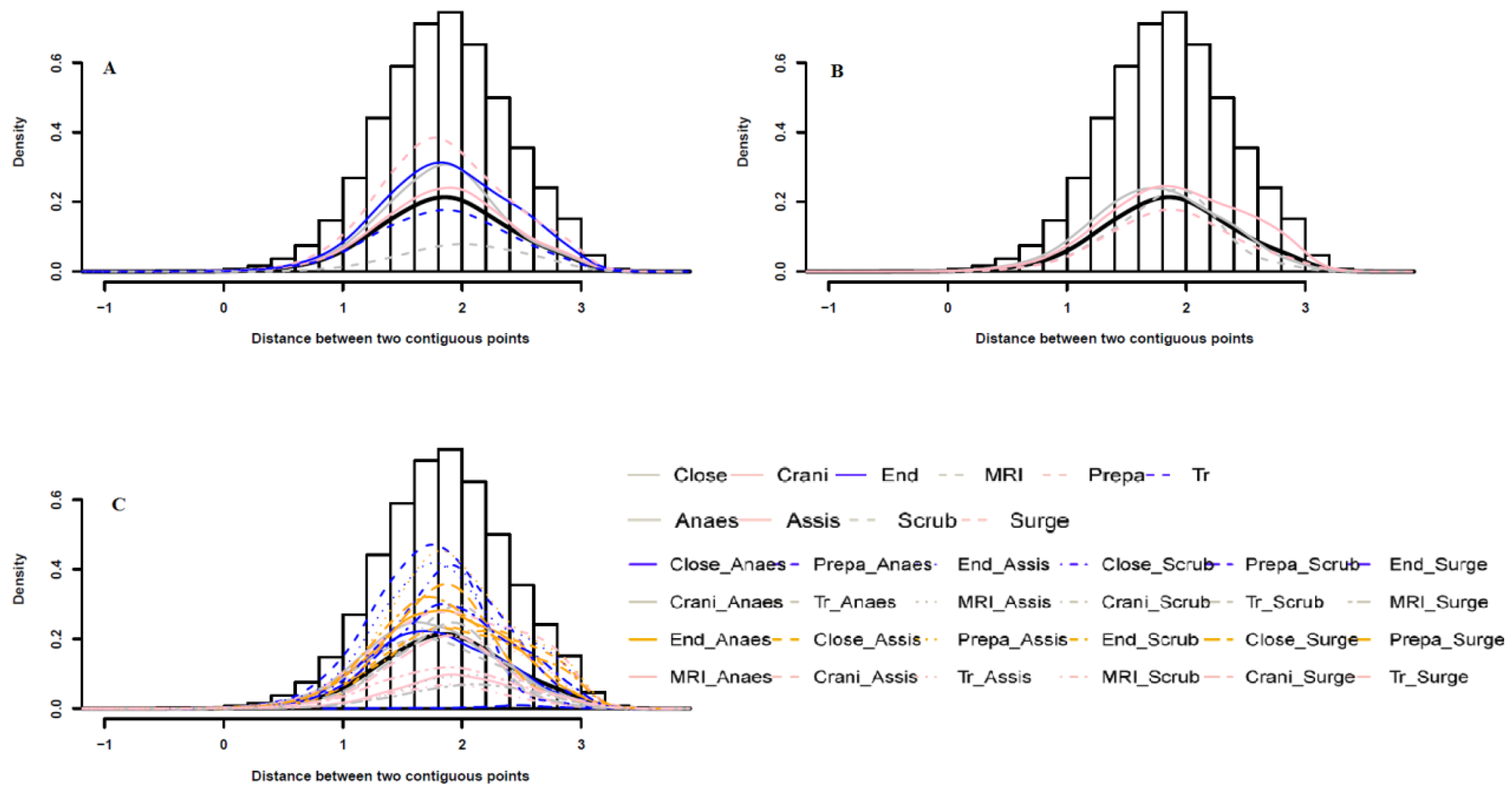

2.2. Interaction Measurement between Surgical Staff

2.3. Bayesian Network-Based Surgical Phase Classification

3. Results

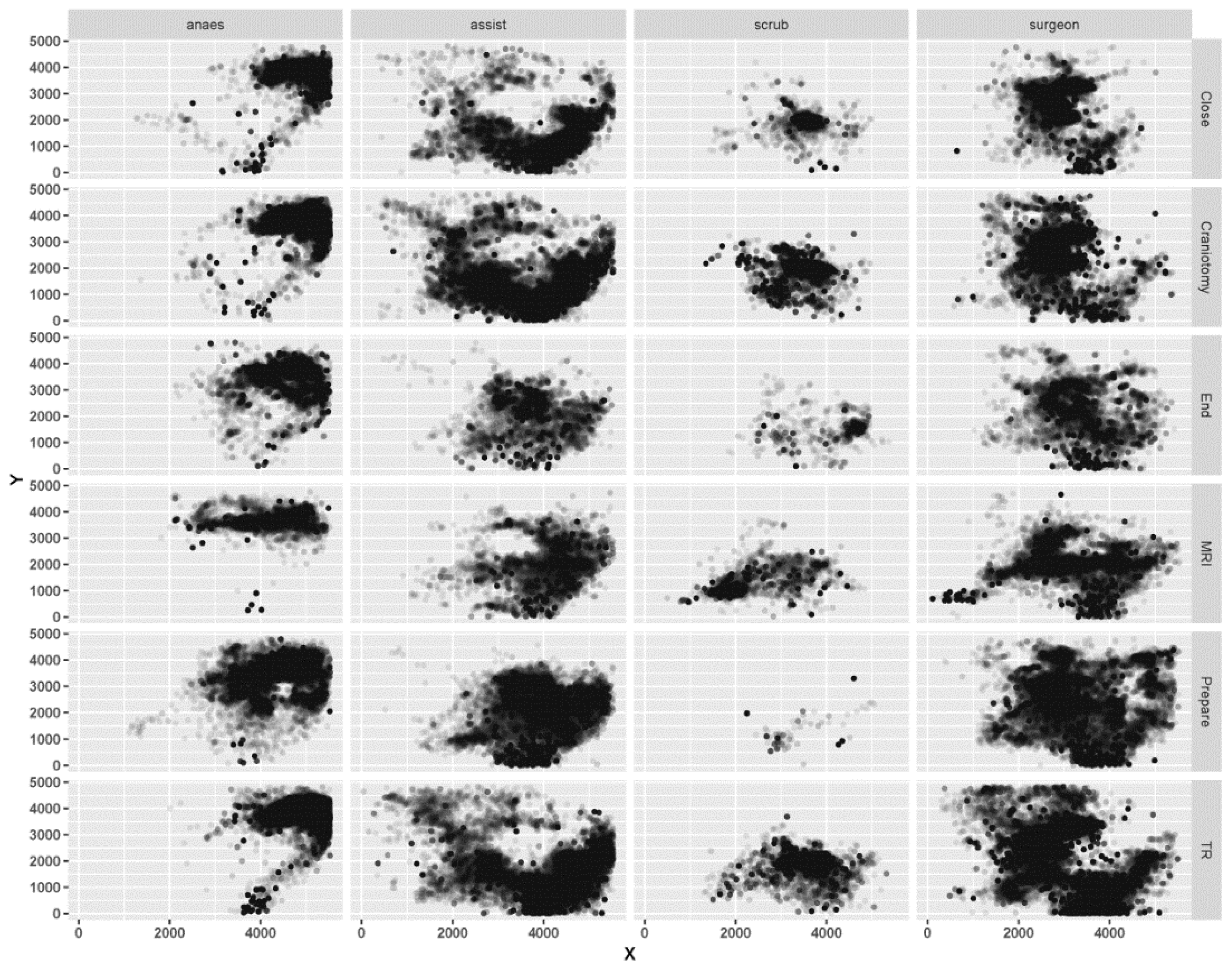

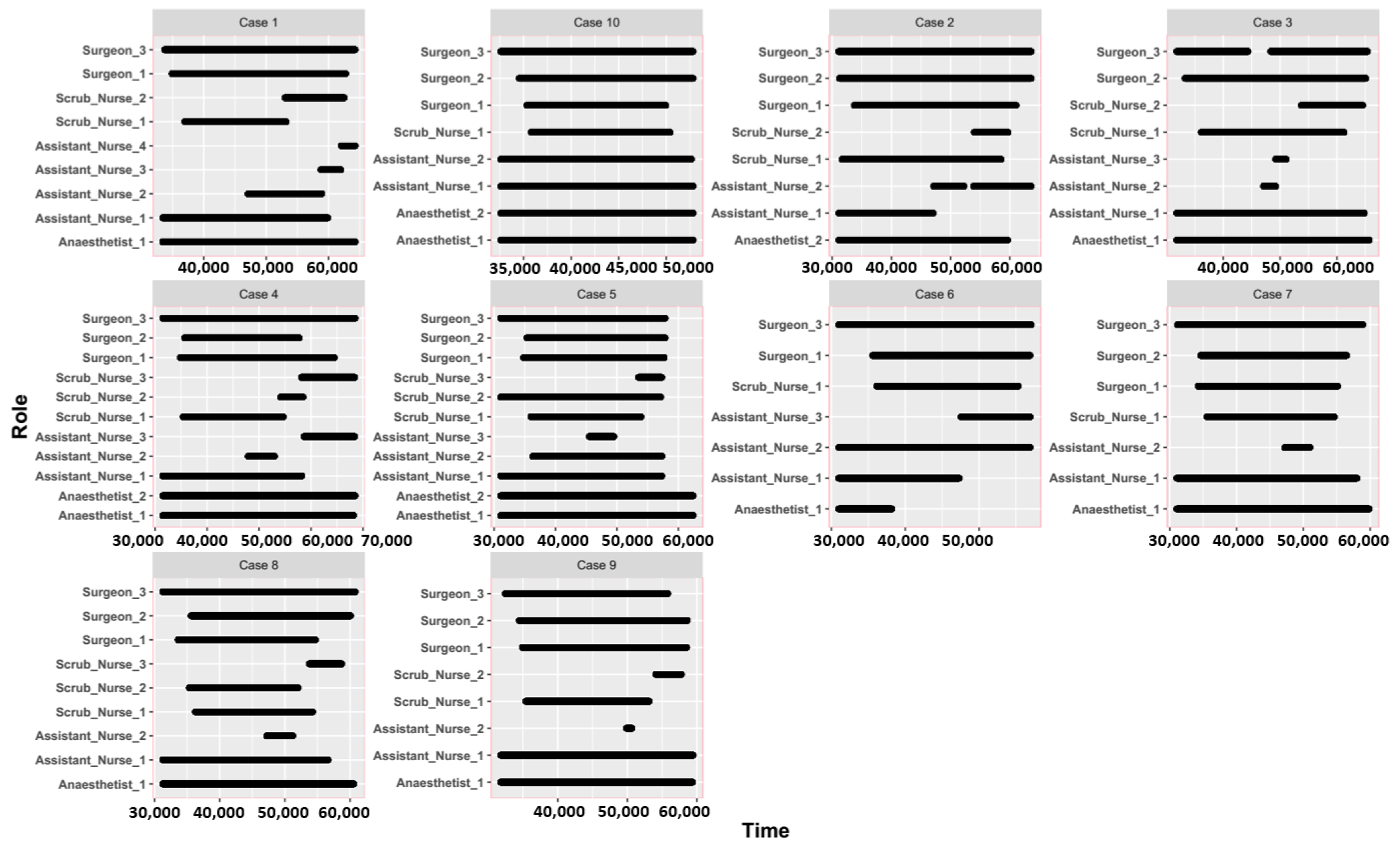

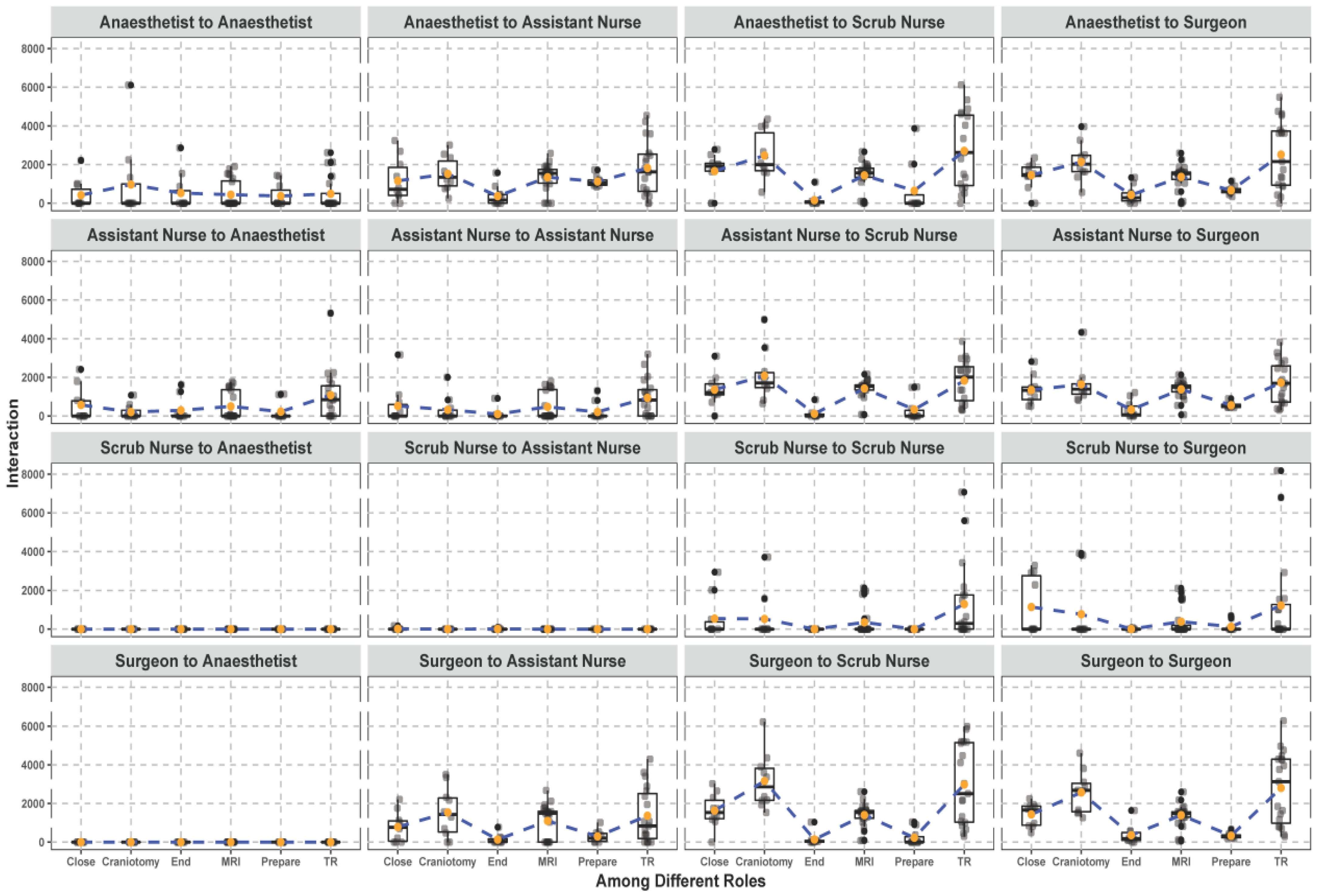

3.1. Spatial and Temporal Patterns between Different Surgical Staff

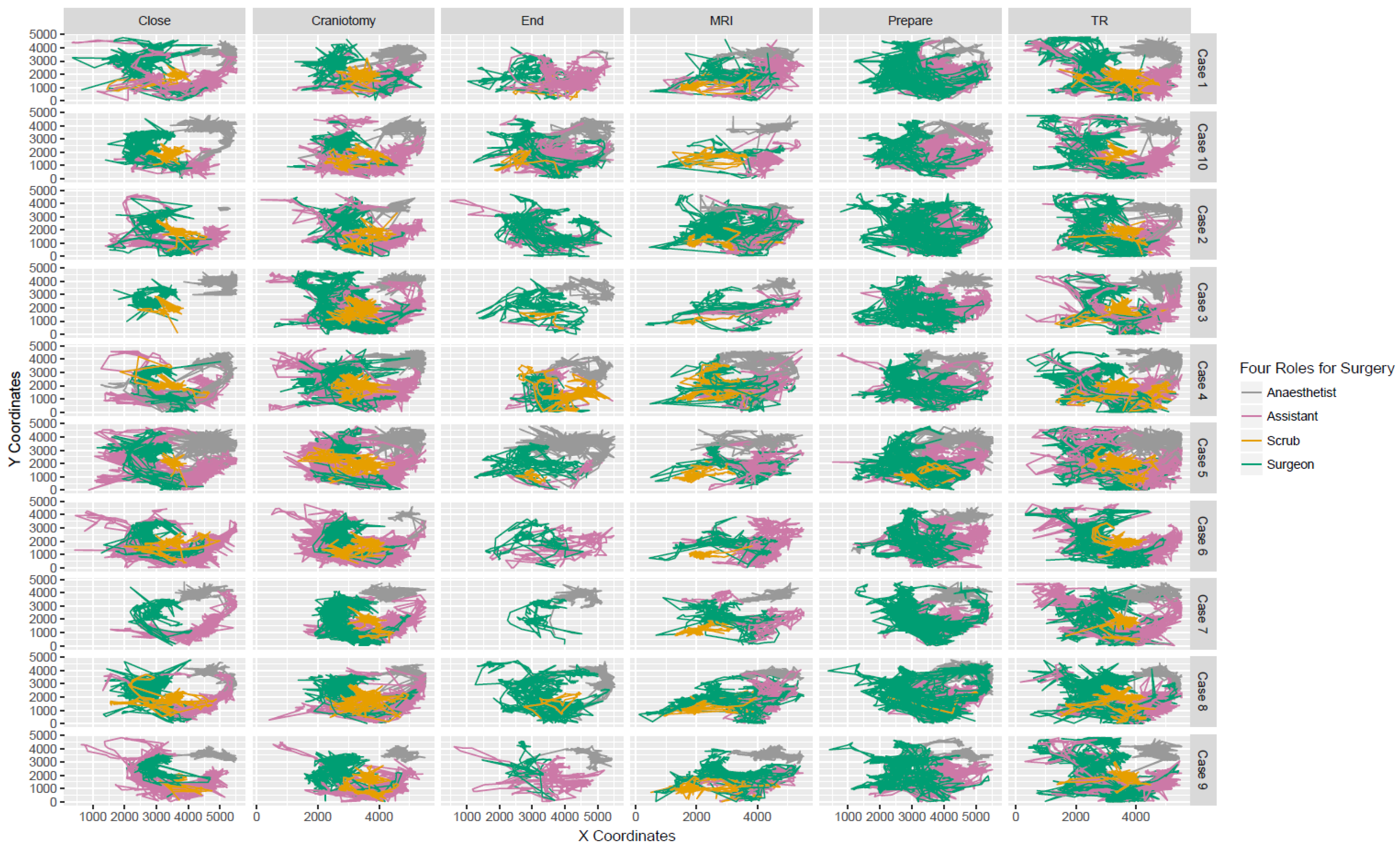

3.2. Trajectories and Interactions between Different Surgical Staff

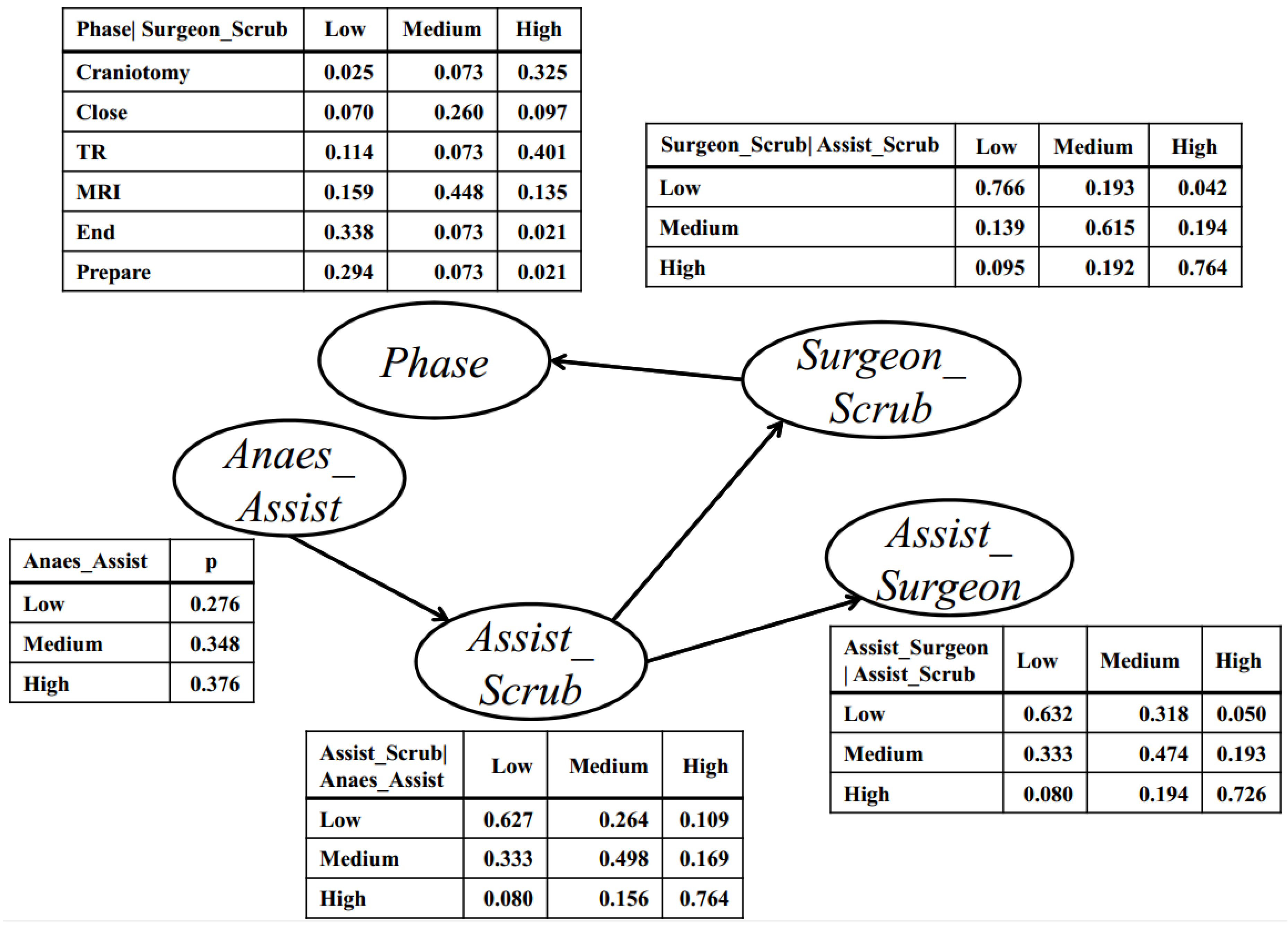

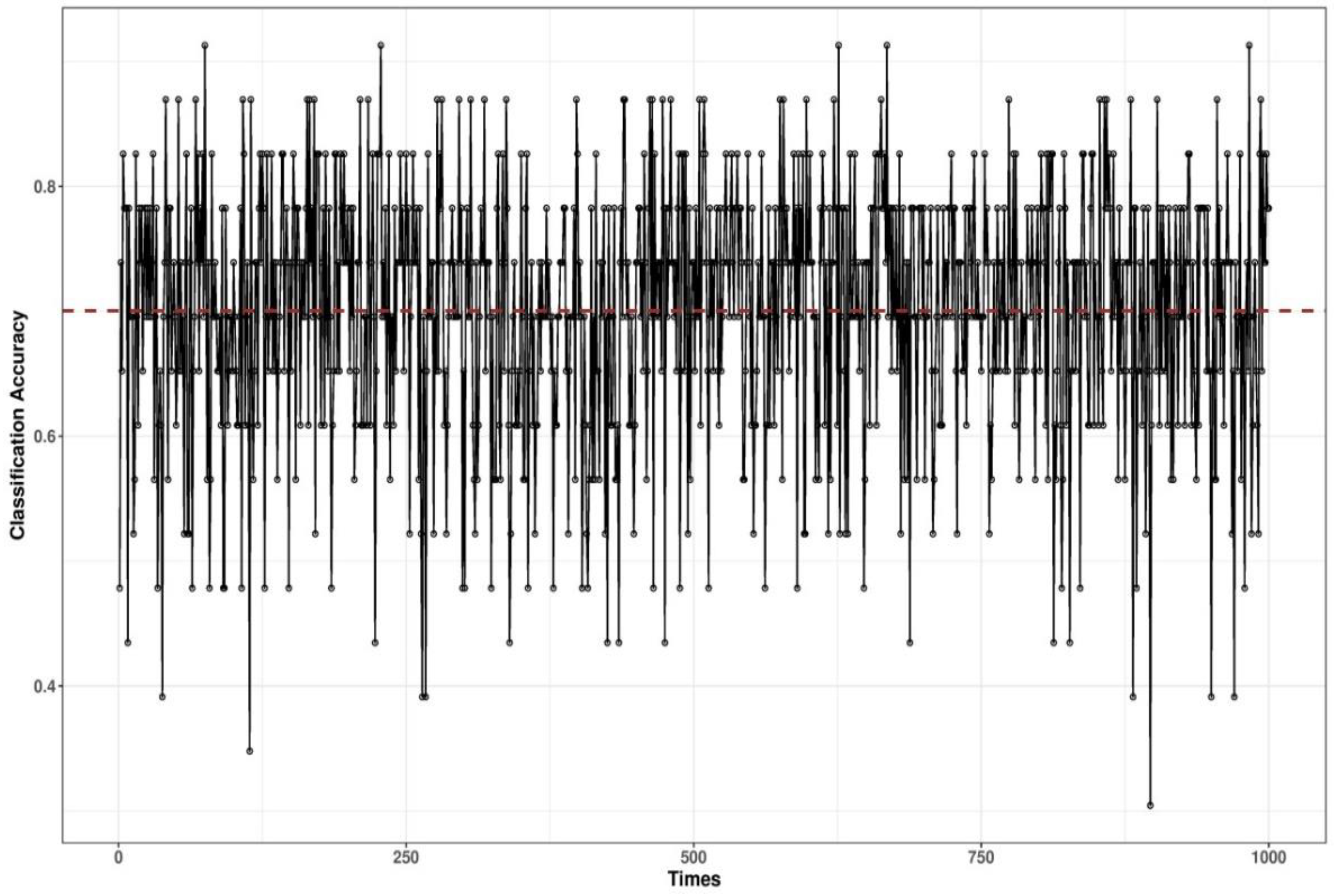

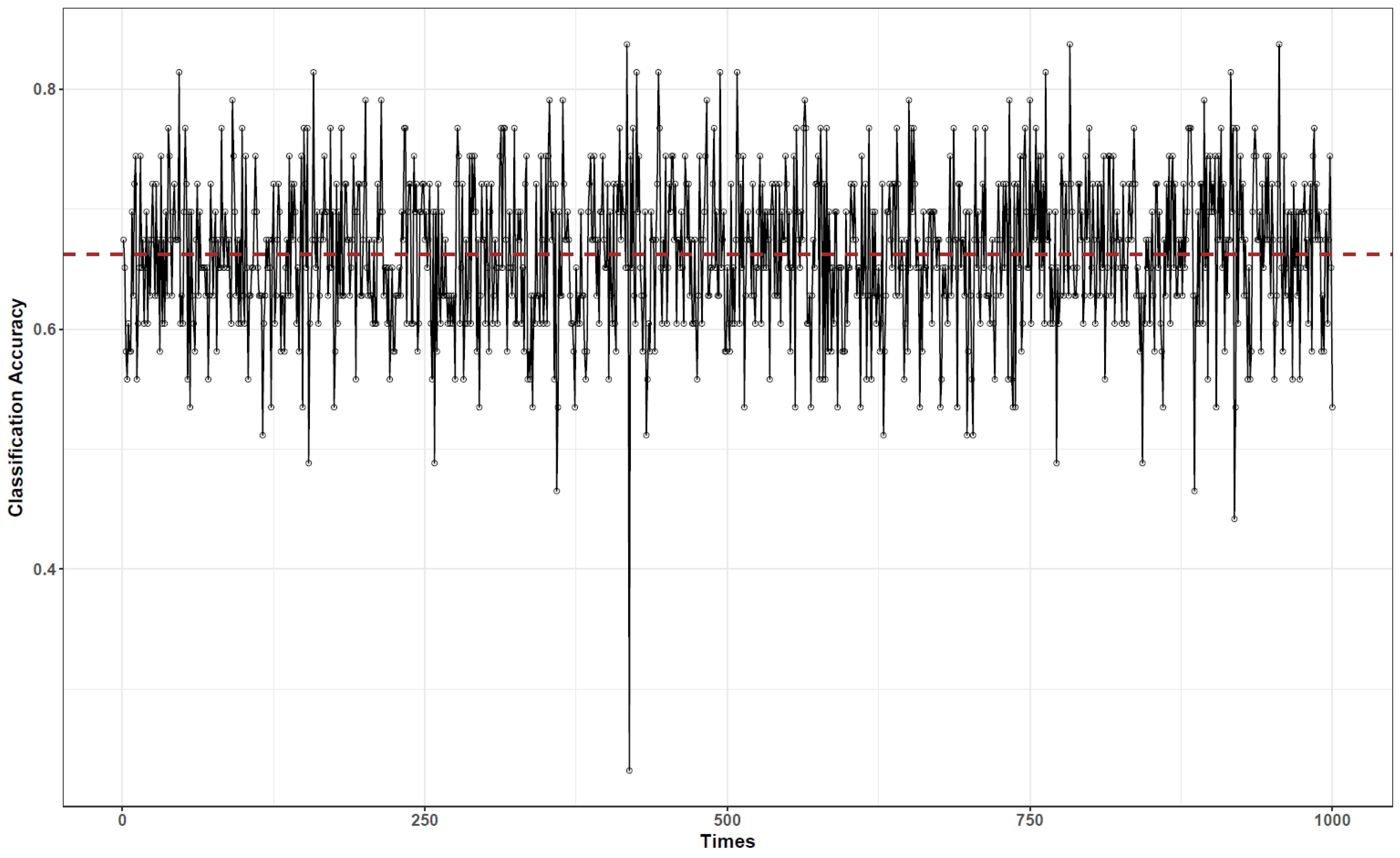

3.3. Bayesian Network-Based Surgical Phase Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Luo, N.; Nara, A.; Izumi, K. An Interaction-Based Bayesian Network Framework for Surgical Workflow Segmentation. Int. J. Environ. Res. Public Health 2021, 18, 6401. https://doi.org/10.3390/ijerph18126401
