Inter-Rater Reliability of Ergonomic Work Demands for Childcare Workers Using the Observation Instrument TRACK

Abstract

1. Introduction

2. Materials and Methods

2.1. TRACK Observation Tool

Description of TRACK

2.2. Reliability Evaluation

2.2.1. Study Population and Sample Size

2.2.2. Raters and Rater Training

2.2.3. Observation Procedures

2.2.4. Inter-Rater Reliability

2.2.5. Data Collection

2.3. Data Analyses

2.3.1. Data Processing

2.3.2. Statistical Analyses

2.4. Ethical Considerations

3. Results

3.1. Inter-Rater Reliability of Settings

3.2. Inter-Rater Reliability of Ergonomic Exposures

3.3. Inter-Rater Reliability of Work Situations

3.4. Inter-Rater Reliability of Disturbances

4. Discussion

4.1. Inter-Rater Reliability between Different Items

4.1.1. Ergonomic Exposures

- The occurrence of easily recognisable, well-defined items involving large body parts or movements, such as Squat, Carry and Sit on floor, is easier to observe correctly than the occurrence of poorly defined, short-lasting, fine-grained items such as Manual handling (76.6–86.8% vs. 51.2% agreement on the number of events).

- The duration of longer-lasting items such as Sit on floor is easier to observe correctly than that of shorter-lasting items such as Squat (96.7% vs. 56.2% agreement on duration).
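
The second point can be illustrated with a small calculation. The sketch below assumes, purely for illustration, that rater B registers both the start and the end of a bout 2 s later than rater A. It scores agreement second by second as the seconds coded by both raters divided by all seconds coded by either rater, which appears to be the definition behind the % agreement column of the results table. The function name and the fixed 2-s lag are hypothetical and not part of TRACK.

```python
def interval_agreement(bout_a, bout_b):
    """Second-by-second agreement between two raters' codings of one bout.

    bout_a, bout_b: (start, end) in whole seconds, half-open [start, end).
    Returns (both, only_a, only_b, percent), where
    percent = both / (both + only_a + only_b) * 100.
    """
    overlap = max(0, min(bout_a[1], bout_b[1]) - max(bout_a[0], bout_b[0]))
    only_a = (bout_a[1] - bout_a[0]) - overlap
    only_b = (bout_b[1] - bout_b[0]) - overlap
    total = overlap + only_a + only_b
    percent = 100 * overlap / total if total else float("nan")
    return overlap, only_a, only_b, percent


LAG_S = 2  # hypothetical: rater B registers onset and offset 2 s after rater A

for label, length_s in [("10-s bout (e.g. Squat)", 10), ("300-s bout (e.g. Sit on floor)", 300)]:
    both, only_a, only_b, pct = interval_agreement((0, length_s), (LAG_S, length_s + LAG_S))
    print(f"{label}: {both} s agreed, {only_a + only_b} s disputed, {pct:.1f}% agreement")
```

The same 4 s of disagreement leaves 66.7% agreement for the 10-s bout but 98.7% for the 300-s bout, so a given timing error weighs far more heavily on short-lasting items such as Squat.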

4.1.2. Work Situations

4.1.3. Settings

4.2. Applicability of the TRACK Instrument for Assessing Ergonomic Work Demands in Childcare Work

4.3. Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AC1 | Gwet’s agreement coefficient |
| CW | Childcare worker |
| IRR | Inter-rater reliability |
| RCT | Randomised controlled trial |
| TRACK | observaTion woRk demAnds Childcare worK |

References

- Rasmussen, C.D.N.; Hendriksen, P.R.; Svendsen, M.J.; Ekner, D.; Hansen, K.; Sorensen, O.H.; Svendsen, S.W.; van der Beek, A.J.; Holtermann, A. Improving work for the body—A participatory ergonomic intervention aiming at reducing physical exertion and musculoskeletal pain among childcare workers (the TOY-project): Study protocol for a wait-list cluster-randomized controlled trial. Trials 2018, 19, 411. [Google Scholar] [CrossRef]

- Gratz, R.R.; Claffey, A. Adult health in child care: Health status, behaviors, and concerns of teachers, directors, and family child care providers. Early Child. Res. Q. 1996, 11, 243–267. [Google Scholar] [CrossRef]

- King, P.M.; Gratz, R.; Kleiner, K. Ergonomic recommendations and their impact on child care workers’ health. Work Read. Mass. 2006, 26, 13–17. [Google Scholar]

- Gratz, R.R.; Claffey, A.; King, P.; Scheuer, G. The Physical Demands and Ergonomics of Working with Young Children. Early Child Dev. Care 2002, 172, 531–537. [Google Scholar] [CrossRef]

- Dempsey, P.G.; McGorry, R.W.; Maynard, W.S. A survey of tools and methods used by certified professional ergonomists. Appl. Ergon. 2005, 36, 489–503. [Google Scholar] [CrossRef] [PubMed]

- Takala, E.-P.; Pehkonen, I.; Forsman, M.; Hansson, G.-Å.; Mathiassen, S.E.; Neumann, W.P.; Sjøgaard, G.; Veiersted, K.B.; Westgaard, R.H.; Winkel, J. Systematic evaluation of observational methods assessing biomechanical exposures at work. Scand. J. Work Environ. Health 2010, 36, 3–24. [Google Scholar] [CrossRef] [PubMed]

- Rahman, M.N.A.; Mohamad, S.S. Review on pen-and-paper-based observational methods for assessing ergonomic risk factors of computer work. Work Read. Mass. 2017, 57, 69–77. [Google Scholar] [CrossRef]

- Pehkonen, I.; Ketola, R.; Ranta, R.; Takala, E.P. A video-based observation method to assess musculoskeletal load in kitchen work. Int. J. Occup. Saf. Ergon. 2009, 15, 75–88. [Google Scholar] [CrossRef][Green Version]

- Zare, M.; Biau, S.; Brunet, R.; Roquelaure, Y. Comparison of three methods for evaluation of work postures in a truck assembly plant. Ergonomics 2017, 60, 1551–1563. [Google Scholar] [CrossRef]

- Denis, D.; Lortie, M.; Rossignol, M. Observation procedures characterizing occupational physical activities: Critical review. Int. J. Occup. Saf. Ergon. 2000, 6, 463–491. [Google Scholar] [CrossRef]

- Chung, M.K.; Lee, I.; Kee, D. Quantitative postural load assessment for whole body manual tasks based on perceived discomfort. Ergonomics 2005, 48, 492–505. [Google Scholar] [CrossRef] [PubMed]

- Sukadarin, E.H.; Deros, B.M.; Ghani, J.A.; Mohd Nawi, N.S.; Ismail, A.R. Postural assessment in pen-and-paper-based observational methods and their associated health effects: A review. Int. J. Occup. Saf. Ergon. 2016, 22, 389–398. [Google Scholar] [CrossRef] [PubMed]

- Stanton, N.; Hedge, A.; Brookhuis, K.; Salas, E.; Hendrick, H. Handbook of Human Factors and Ergonomic Methods; CRC Press: Boca Raton, FL, USA, 2004. [Google Scholar]

- Burdorf, A.; van der Beek, A. Exposure assessment strategies for work-related risk factors for musculoskeletal disorders. Scand. J. Work Environ. Health 1999, 25 (Suppl. S4), 25–30. [Google Scholar]

- Kilbom, A. Assessment of physical exposure in relation to work-related musculoskeletal disorders--what information can be obtained from systematic observations? Scand. J. Work Environ. Health 1994, 20, 30–45. [Google Scholar] [PubMed]

- Johnsson, C.; Kjellberg, K.; Kjellberg, A.; Lagerström, M. A direct observation instrument for assessment of nurses’ patient transfer technique (DINO). Appl. Ergon. 2004, 35, 591–601. [Google Scholar] [CrossRef] [PubMed]

- David, G.C. Ergonomic methods for assessing exposure to risk factors for work-related musculoskeletal disorders. Occup. Med. Oxf. Engl. 2005, 55, 190–199. [Google Scholar] [CrossRef] [PubMed]

- Village, J.; Trask, C.; Luong, N.; Chow, Y.; Johnson, P.; Koehoorn, M.; Teschke, K. Development and evaluation of an observational Back-Exposure Sampling Tool (Back-EST) for work-related back injury risk factors. Appl. Ergon. 2009, 40, 538–544. [Google Scholar] [CrossRef]

- Gupta, N.; Heiden, M.; Mathiassen, S.E.; Holtermann, A. Prediction of objectively measured physical activity and sedentariness among blue-collar workers using survey questionnaires. Scand. J. Work Environ. Health 2016, 42, 237–245. [Google Scholar] [CrossRef][Green Version]

- Koch, M.; Lunde, L.K.; Gjulem, T.; Knardahl, S.; Veiersted, K.B. Validity of Questionnaire and Representativeness of Objective Methods for Measurements of Mechanical Exposures in Construction and Health Care Work. PLoS ONE 2016, 11, e0162881. [Google Scholar] [CrossRef]

- Kwak, L.; Proper, K.I.; Hagstromer, M.; Sjostrom, M. The repeatability and validity of questionnaires assessing occupational physical activity—A systematic review. Scand. J. Work Environ. Health 2011, 37, 6–29. [Google Scholar] [CrossRef]

- Prince, S.A.; Adamo, K.B.; Hamel, M.E.; Hardt, J.; Connor Gorber, S.; Tremblay, M. A comparison of direct versus self-report measures for assessing physical activity in adults: A systematic review. Int. J. Behav. Nutr. Phys. Act. 2008, 5, 56. [Google Scholar] [CrossRef] [PubMed]

- Kathy Cheng, H.Y.; Cheng, C.Y.; Ju, Y.Y. Work-related musculoskeletal disorders and ergonomic risk factors in early intervention educators. Appl. Ergon. 2013, 44, 134–141. [Google Scholar] [CrossRef] [PubMed]

- McGrath, B.J.; Huntington, A.D. The Health and Wellbeing of Adults Working in Early Childhood Education. Australas. J. Early Child. 2007, 32, 33–38. [Google Scholar] [CrossRef]

- Burford, E.M.; Ellegast, R.; Weber, B.; Brehmen, M.; Groneberg, D.; Sinn-Behrendt, A.; Bruder, R. The comparative analysis of postural and biomechanical parameters of preschool teachers pre- and post-intervention within the ErgoKiTa study. Ergonomics 2017, 60, 1718–1729. [Google Scholar] [CrossRef] [PubMed]

- Labaj, A.; Diesbourg, T.; Dumas, G.; Plamondon, A.; Mercheri, H.; Larue, C. Posture and lifting exposures for daycare workers. Int. J. Ind. Ergon. 2016, 54, 83–92. [Google Scholar] [CrossRef]

- Labaj, A.; Diesbourg, T.L.; Dumas, G.A.; Plamondon, A.; Mecheri, H. Comparison of lifting and bending demands of the various tasks performed by daycare workers. Int. J. Ind. Ergon. 2019, 69, 96–103. [Google Scholar] [CrossRef]

- Shimaoka, M.; Hiruta, S.; Ono, Y.; Nonaka, H.; Hjelm, E.W.; Hagberg, M. A comparative study of physical work load in Japanese and Swedish nursery school teachers. Eur. J. Appl. Physiol. Occup. Physiol. 1998, 77, 10–18. [Google Scholar] [CrossRef]

- Kumagai, S.; Tabuchi, T.; Tainaka, H.; Miyajima, K.; Matsunaga, I.; Kosaka, H.; Andoh, K.; Seo, A. Load on the low back of teachers in nursery schools. Int. Arch. Occup. Environ. Health 1995, 68, 52–57. [Google Scholar] [CrossRef]

- Frings-Dresen, M.H.W.; Kuijer, P.P.F.M. The TRAC-system: An observation method for analysing work demands at the workplace. Saf. Sci. 1995, 21, 163–165. [Google Scholar] [CrossRef]

- Fransson-Hall, C.; Gloria, R.; Kilbom, A.; Winkel, J.; Karlqvist, L.; Wiktorin, C. A portable ergonomic observation method (PEO) for computerized on-line recording of postures and manual handling. Appl. Ergon. 1995, 26, 93–100. [Google Scholar] [CrossRef]

- Rhen, I.M.; Forsman, M. Inter- and intra-rater reliability of the OCRA checklist method in video-recorded manual work tasks. Appl. Ergon. 2020, 84, 103025. [Google Scholar] [CrossRef] [PubMed]

- Samuels, S.J.; Lemasters, G.K.; Carson, A. Statistical methods for describing occupational exposure measurements. Am. Ind. Hyg. Assoc. J. 1985, 46, 427–433. [Google Scholar] [CrossRef] [PubMed]

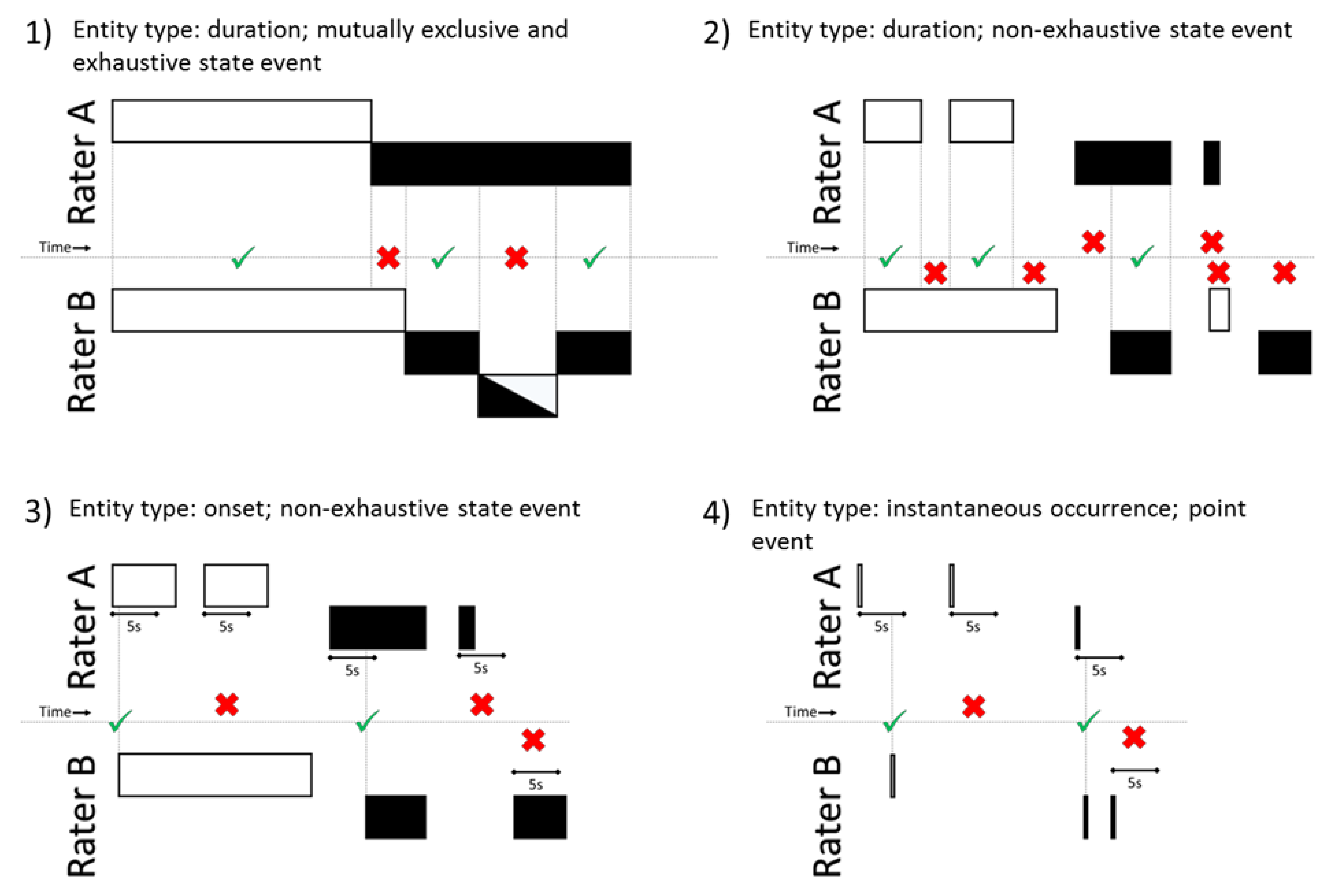

- Jansen, R.G.; Wiertz, L.F.; Meyer, E.S.; Noldus, L.P.J.J. Reliability analysis of observational data: Problems, solutions, and software implementation. Behav. Res. Methods Instrum. Comput. 2003, 35, 391–399. [Google Scholar] [CrossRef] [PubMed]

- Gwet, K.L. Computing inter-rater reliability and its variance in the presence of high agreement. Br. J. Math. Stat. Psychol. 2008, 61, 29–48. [Google Scholar] [CrossRef] [PubMed]

- Wongpakaran, N.; Wongpakaran, T.; Wedding, D.; Gwet, K.L. A comparison of Cohen’s Kappa and Gwet’s AC1 when calculating inter-rater reliability coefficients: A study conducted with personality disorder samples. BMC Med. Res. Methodol. 2013, 13, 61. [Google Scholar] [CrossRef] [PubMed]

- Gwet, K.L. Handbook of Inter-Rater Reliability: The Definitive Guide to Measuring the Extent of Agreement among Raters, 4th ed.; Advanced Analytics, LLC: Gaithersburg, MD, USA, 2014. [Google Scholar]

- Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174. [Google Scholar] [CrossRef] [PubMed]

- Karstad, K.; Rugulies, R.; Skotte, J.; Munch, P.K.; Greiner, B.A.; Burdorf, A.; Sogaard, K.; Holtermann, A. Inter-rater reliability of direct observations of the physical and psychosocial working conditions in eldercare: An evaluation in the DOSES project. Appl. Ergon. 2018, 69, 93–103. [Google Scholar] [CrossRef]

- Skotte, J.; Korshoj, M.; Kristiansen, J.; Hanisch, C.; Holtermann, A. Detection of physical activity types using triaxial accelerometers. J. Phys. Act. Health 2014, 11, 76–84. [Google Scholar] [CrossRef]

- Korshoj, M.; Skotte, J.H.; Christiansen, C.S.; Mortensen, P.; Kristiansen, J.; Hanisch, C.; Ingebrigtsen, J.; Holtermann, A. Validity of the Acti4 software using ActiGraph GT3X+accelerometer for recording of arm and upper body inclination in simulated work tasks. Ergonomics 2014, 57, 247–253. [Google Scholar] [CrossRef]

| Item | Definition | Item Number | Descriptive Factor a | Definition of Descriptive Factor | Type of Event b |
|---|---|---|---|---|---|
| Settings | | | | | |
| Indoor | Being indoors | 1 | | | State event (mutually exclusive and exhaustive) |
| Outdoor | Being outdoors | 2 | | | State event (mutually exclusive and exhaustive) |
| Ergonomic exposures | | | | | |
| Carry | Child or object not in contact with the surface and carried for at least 2–3 s and/or two steps | 3 | Large/heavy weight child | Rule of thumb: child able to walk by itself and/or weighs above 12 kg | State event (non-exhaustive) |
| | | | Small/light weight child | Rule of thumb: child not able to walk by itself and/or weighs below 12 kg | |
| | | | Other | Objects. Triviality level: 2–3 kg | |
| Lift | Child or object not in contact with the surface but carried for less than 2–3 s and/or fewer than two steps | 4 | Large/heavy weight child | Rule of thumb: child able to walk by itself and/or weighs above 12 kg | Point event |
| | | | Small/light weight child | Rule of thumb: child not able to walk by itself and/or weighs below 12 kg | |
| | | | Other | Objects. Triviality level: 2–3 kg | |
| Push, pull, partial lift | Child or object moved or supported without losing contact with the existing surface | 5 | Large/heavy weight child | Rule of thumb: child able to walk by itself and/or weighs above 12 kg | Point event |
| | | | Small/light weight child | Rule of thumb: child not able to walk by itself and/or weighs below 12 kg | |
| | | | Other | Objects. Triviality level: 2–3 kg | |
| Sit on floor | Sitting either on the bare floor or on a thin mattress (<5 cm) with the body weight on at least one buttock and the feet and buttock(s) at approximately the same height. This also includes sitting cross-legged or in a mermaid position | 6 | With sit pad (only) | CW sits on a cushion or pad approximately 5–15 cm thick when unloaded | State event (non-exhaustive) |
| | | | With back support (only) | CW leans against something | |
| | | | With sit pad + back support | CW sits on a cushion or pad approximately 5–15 cm thick when unloaded and leans against something | |
| | | | Without any support | No cushion, pad or anything to lean against | |
| Squat | Sitting position in which neither knees nor buttocks touch the floor or ground surface and the knee angle is ≤90 degrees | 7 | | | State event (non-exhaustive) |
| Kneel | Knee(s) and lower leg(s) in contact with the floor or ground surface. Heel(s) may, but need not, touch the buttock(s) | 8 | With pad | Cushion or pad approximately 5–15 cm thick when unloaded | State event (non-exhaustive) |
| | | | Without pad | No cushion or pad | |
| Acute strain | An unforeseen incident causing sudden physical strain on the CW, e.g., catching a tripping child to cushion or prevent a fall | 9 | | | Point event |
| Situations | | | | | |
| Hand-over and pick-up | CW interacts with parent(s) (or a similar responsible adult/adolescent) upon arrival or departure. A hand-over or pick-up of a child can be recorded as several situations if interrupted or split | 10 | | | State event (mutually exclusive and exhaustive) |
| Diaper and/or clothes change | All diaper and/or clothes changes done in the ward or in the child changing facility adjoining each ward | 11 | | | State event (mutually exclusive and exhaustive) |
| Other clothes change | All other clothes changes not done in the ward or the child changing facility, e.g., putting on outdoor clothes in the wardrobe | 12 | | | State event (mutually exclusive and exhaustive) |
| Eat and/or group gathering | Organised gatherings of all (awake) children, e.g., when eating lunch or doing a group activity | 13 | | | State event (mutually exclusive and exhaustive) |
| Tuck children up or pick up when awake | Tucking children up and picking them up when they wake up, either in the cradle or in the dormitory | 14 | | | State event (mutually exclusive and exhaustive) |
| Clean-up, tidy up or preparation | Work related to cleaning, tidying and preparing activities or similar, with or without accompanying children | 15 | | | State event (mutually exclusive and exhaustive) |
| Outing | Field trips outside the nursery’s cadastral plot | 16 | | | State event (mutually exclusive and exhaustive) |
| Other childcare work | Remaining work not covered by items 10–16, 18 or 19 | 17 | | | State event (mutually exclusive and exhaustive) |
| Childcare worker break | Breaks for the CW planned in his/her work schedule, e.g., scheduled lunch breaks or meetings | 18 | | | State event (mutually exclusive and exhaustive) |
| Rater/observation break | Periods in which the rater was unable to observe the CW, e.g., for privacy reasons, during unplanned breaks or because furnishings obstructed the view | 19 | | | State event (mutually exclusive and exhaustive) |
| Disturbances | | | | | |
| Disturbance | Pronounced and acute interruption of the CW’s current task | 20 | Colleague | Other CWs | State event (non-exhaustive) |
| | | | Parent | Parent or similar responsible adult/adolescent | |
| | | | Child | Other children in the nursery | |
| | | | Other | Objects, e.g., a phone ringing | |
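
The event types above determine how two raters' records can be compared over time. As a minimal sketch, assuming each rater's log for one category is a list of hypothetical (start, end, code) tuples in whole seconds, state events can be expanded into one label per second. In a mutually exclusive and exhaustive category (Settings, Situations) every second then carries exactly one code, whereas a non-exhaustive item such as Squat leaves seconds uncoded; items that can co-occur, such as Carry and Squat, would each need their own timeline. Counting, per item, the seconds coded by both raters, by rater A only and by rater B only yields counts of the kind reported for durations in the results table. Point events (Lift; Push, pull, partial lift; Acute strain) have no duration and would need onset matching instead, which this sketch does not cover. The log format and all function names are assumptions, not the TRACK software.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical log format: (start_s, end_s, code), half-open [start_s, end_s)
Event = Tuple[int, int, str]
Timeline = List[Optional[str]]


def to_timeline(events: Sequence[Event], length_s: int) -> Timeline:
    """Expand one category's state events into one label per second (None = uncoded)."""
    timeline: Timeline = [None] * length_s
    for start, end, code in events:
        for t in range(max(start, 0), min(end, length_s)):
            if timeline[t] is not None:
                # Codes within one category must not overlap in time
                raise ValueError(f"overlapping state events at second {t}")
            timeline[t] = code
    return timeline


def is_exhaustive(timeline: Timeline) -> bool:
    """True if every second carries a code (required for Settings and Situations)."""
    return all(label is not None for label in timeline)


def item_cells(tl_a: Timeline, tl_b: Timeline, item: str) -> Tuple[int, int, int]:
    """Seconds in which both raters, only rater A, or only rater B coded `item`."""
    both = only_a = only_b = 0
    for label_a, label_b in zip(tl_a, tl_b):
        in_a, in_b = label_a == item, label_b == item
        if in_a and in_b:
            both += 1
        elif in_a:
            only_a += 1
        elif in_b:
            only_b += 1
    return both, only_a, only_b


# Hypothetical 60-s session in which rater B records the move outdoors 3 s later
rater_a = to_timeline([(0, 40, "Indoor"), (40, 60, "Outdoor")], 60)
rater_b = to_timeline([(0, 43, "Indoor"), (43, 60, "Outdoor")], 60)
print(is_exhaustive(rater_a), is_exhaustive(rater_b))  # True True
print(item_cells(rater_a, rater_b, "Indoor"))   # (40, 0, 3)
print(item_cells(rater_a, rater_b, "Outdoor"))  # (17, 3, 0)
```

Checking exhaustiveness before comparing raters catches gaps in categories that, by definition, must cover the whole observation.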

| Session | Content | Duration |
|---|---|---|
| 1 | All raters received the observation manual and the observation protocol and were instructed to read both carefully. | 1 h |
| 2 | Lab session: the researchers who had participated in developing the TRACK instrument instructed the raters in the practical and technical use of the instrument. | 2 h |
| 3 | Field session: in pairs, the raters simultaneously observed one childcare worker for 45 consecutive minutes. The raters were not allowed to talk or show their tablets to each other. Afterwards, they evaluated the observation by completing an assessment form, comparing ratings and discussing challenges and their perceptions of the item definitions. These two steps were repeated three times in total. | 3 h |
| 4 | Lab session: all raters and researchers verbally evaluated the use of the TRACK instrument based on the field experiences and revised the observation manual to clarify and correct differences in how the item definitions were interpreted. | 1.5 h |
| 5 | All raters received the revised observation manual and were instructed to read it carefully. | 1 h |

| Category and Item | Entity Type | Item Number a | Rater A Agrees with Rater B b | Only Rater A Scores c | Only Rater B Scores c | Total Scorings d | % Agreement e | Gwet’s AC1 f | Strength of Agreement g |
|---|---|---|---|---|---|---|---|---|---|
| Settings | | | | | | | | | |
| Indoor | Duration (seconds) | 1 | 105,182 | 253 | 272 | 105,706 | 99.5 | 1.00 | Almost perfect |
| Outdoor | Duration (seconds) | 2 | 2374 | 263 | 249 | 2887 | 82.2 | 0.79 | Substantial |
| Ergonomic exposures | | | | | | | | | |
| Squat | Onset of duration (no. of events) | 7 | 118 | 16 | 20 | 154 | 76.6 | 0.71 | Substantial |
| Kneel | Onset of duration (no. of events) | 8 | 84 | 13 | 13 | 110 | 76.4 | 0.70 | Substantial |
| Carry | Onset of duration (no. of events) | 3 | 86 | 5 | 11 | 102 | 84.3 | 0.82 | Almost perfect |
| Sit on floor | Onset of duration (no. of events) | 6 | 92 | 6 | 8 | 106 | 86.8 | 0.85 | Almost perfect |
| Squat | Duration (seconds) | 7 | 1507 | 453 | 723 | 2683 | 56.2 | 0.33 | Fair |
| Kneel | Duration (seconds) | 8 | 5029 | 410 | 341 | 5780 | 87.0 | 0.85 | Almost perfect |
| Carry | Duration (seconds) | 3 | 4246 | 583 | 689 | 5537 | 76.7 | 0.71 | Substantial |
| Sit on floor | Duration (seconds) | 6 | 20,222 | 273 | 421 | 20,915 | 96.7 | 0.97 | Almost perfect |
| Manual handling | Instantaneous occurrence (no. of events) | 4 + 5 | 196 | 116 | 71 | 383 | 51.2 | 0.23 | Fair |
| Acute strain h | Instantaneous occurrence (no. of events) | 9 | 0 | 1 | 1 | 2 | N/A | N/A | N/A |
| Work situations | | | | | | | | | |
| Hand-over and pick-up | Duration (seconds) | 10 | 1258 | 875 | 836 | 2969 | 42.4 | 0.02 | Slight |
| Diaper and/or clothes change | Duration (seconds) | 11 | 9669 | 537 | 1048 | 11,254 | 85.9 | 0.84 | Almost perfect |
| Other clothes change | Duration (seconds) | 12 | 1485 | 919 | 72 | 2476 | 60.0 | 0.41 | Moderate |
| Eat and/or group gathering | Duration (seconds) | 13 | 20,879 | 2197 | 820 | 23,896 | 87.4 | 0.86 | Almost perfect |
| Tuck children up or pick up when awake | Duration (seconds) | 14 | 5493 | 2397 | 437 | 8327 | 66.0 | 0.53 | Moderate |
| Clean-up, tidy up or preparation | Duration (seconds) | 15 | 6137 | 2642 | 1362 | 10,141 | 60.5 | 0.42 | Moderate |
| Outing | Duration (seconds) | 16 | 191 | 1 | 1 | 193 | 99.0 | 0.99 | Almost perfect |
| Other childcare work | Duration (seconds) | 17 | 50,621 | 1684 | 6847 | 59,152 | 85.6 | 0.83 | Almost perfect |
| Childcare worker break h | Duration (seconds) | 18 | 0 | 0 | 0 | 0 | N/A | N/A | N/A |
| Rater/observation break | Duration (seconds) | 19 | 831 | 254 | 90 | 1176 | 70.7 | 0.61 | Moderate |
| Disturbances | | | | | | | | | |
| Disturbance h | Onset of duration (no. of events) | 20 | 1 | 2 | 0 | 3 | N/A | N/A | N/A |
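
The % agreement and AC1 columns can be recomputed from the three count columns. In the sketch below each item is treated, as an assumption, as a two-category rating (scored vs. not scored) in which the cell "neither rater scored" is zero. % agreement is then the share of all scorings on which both raters agree, and Gwet's AC1 for two raters and two categories uses the chance-agreement term pe = 2π(1 − π), where π is the mean of the two raters' proportions of scorings. This reading is inferred from the tabulated numbers rather than taken from the authors' description, but it appears to reproduce the reported AC1 values to two decimals. Strength labels follow the Landis and Koch (1977) bands (0.00–0.20 slight, 0.21–0.40 fair, 0.41–0.60 moderate, 0.61–0.80 substantial, 0.81–1.00 almost perfect); the sketch assigns unrounded values that fall between two bands to the lower one, which is consistent with Rater/observation break (AC1 just below 0.61 before rounding) being labelled Moderate. `agreement_row` and `strength` are hypothetical helper names.

```python
# Landis and Koch (1977) bands as lower bounds; values between bands
# (e.g. 0.605) fall to the lower band in this sketch (an assumption).
BANDS = [
    (0.81, "Almost perfect"),
    (0.61, "Substantial"),
    (0.41, "Moderate"),
    (0.21, "Fair"),
    (0.00, "Slight"),
]


def strength(coef: float) -> str:
    """Map an agreement coefficient to a Landis-Koch label."""
    for lower, label in BANDS:
        if coef >= lower:
            return label
    return "Poor"


def agreement_row(both: int, only_a: int, only_b: int, neither: int = 0):
    """Return (% agreement, Gwet's AC1, strength) for one two-category item.

    `neither` defaults to 0, i.e. only seconds/events scored by at least
    one rater are counted, which appears to match the results table.
    """
    n = both + only_a + only_b + neither
    if n == 0:
        raise ValueError("no scorings")
    p_agree = (both + neither) / n
    # Mean proportion of 'scored' across the two raters
    pi = ((both + only_a) + (both + only_b)) / (2 * n)
    # Gwet's chance agreement with Q = 2 categories:
    # sum over categories of pi_q * (1 - pi_q), divided by (Q - 1) = 1
    p_chance = 2 * pi * (1 - pi)
    ac1 = (p_agree - p_chance) / (1 - p_chance)
    return 100 * p_agree, ac1, strength(ac1)


rows = {
    "Squat, duration (s)": (1507, 453, 723),
    "Manual handling, events": (196, 116, 71),
    "Hand-over and pick-up, duration (s)": (1258, 875, 836),
}
for name, cells in rows.items():
    pct, ac1, label = agreement_row(*cells)
    print(f"{name}: {pct:.1f}% agreement, AC1 = {ac1:.2f} ({label})")
```

Run as is, the loop prints 56.2%/0.33 (Fair), 51.2%/0.23 (Fair) and 42.4%/0.02 (Slight), matching the corresponding rows. For a few rows (e.g., Outdoor and Carry duration) the tabulated total differs slightly from the sum of the three count columns, so a recomputed % agreement can differ in the last decimal.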

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Svendsen, M.J.; Hendriksen, P.F.; Schmidt, K.G.; Stochkendahl, M.J.; Rasmussen, C.N.; Holtermann, A. Inter-Rater Reliability of Ergonomic Work Demands for Childcare Workers Using the Observation Instrument TRACK. Int. J. Environ. Res. Public Health 2020, 17, 1607. https://doi.org/10.3390/ijerph17051607
