The Utility of Virtual Patient Simulations for Clinical Reasoning Education

Abstract

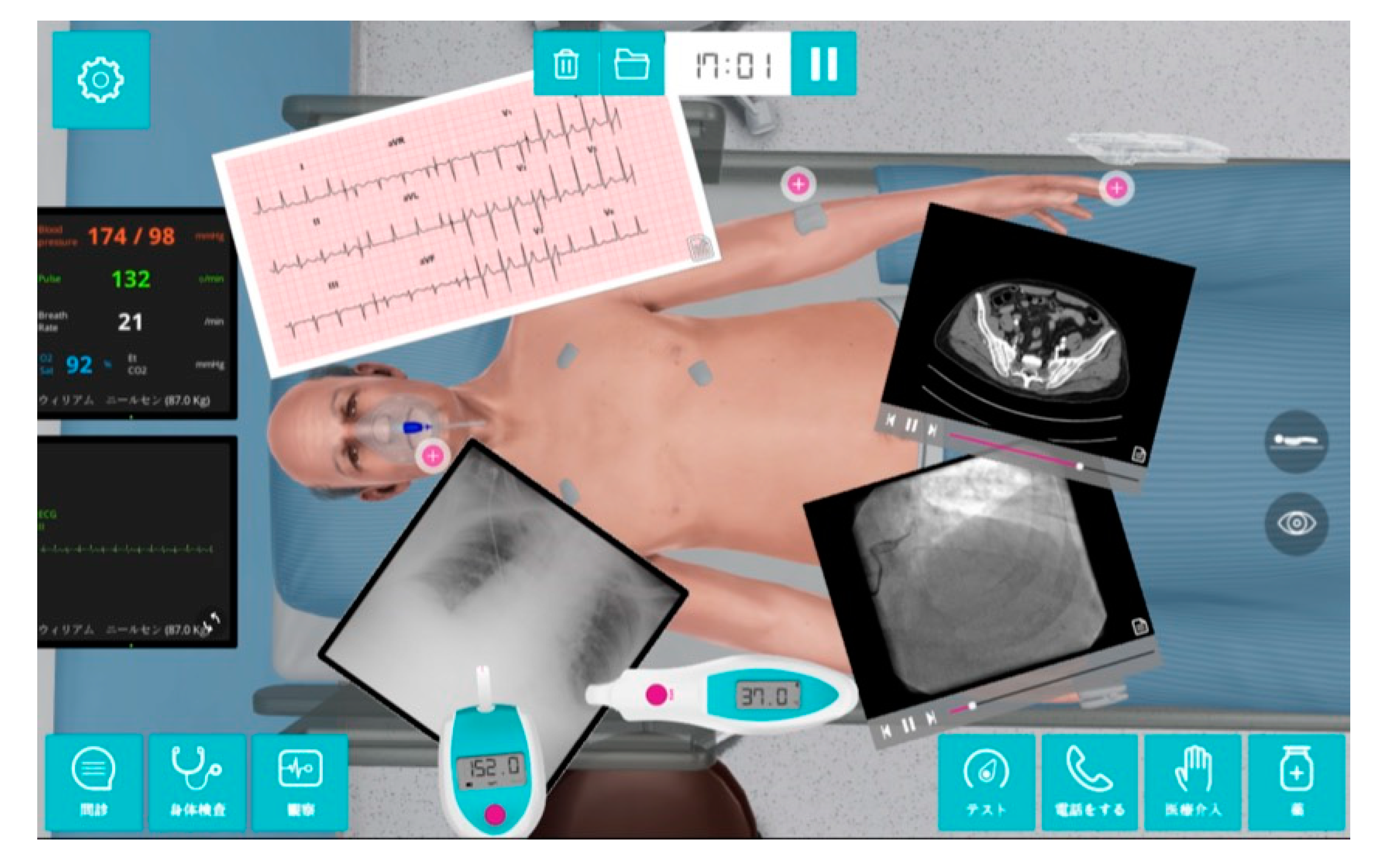

1. Introduction

2. Materials and Methods

2.1. Study Setting and Participants

2.2. Study Design

2.3. Statistical Analyses

2.4. Ethics

3. Results

4. Discussion

Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Item No. | Category | Main Topic of Quiz | Pre-Test Score (n = 169) | Post-Test Score (n = 169) | Fluctuation (%) | Adjusted p-Value |
|---|---|---|---|---|---|---|
| 1 | CR | Management of altered mental status | 25.4% | 75.7% | +50.3 | <0.0001 * |
| 2 | K | Electrocardiogram and syncope | 52.1% | 58.0% | +5.9 | 0.1573 |
| 3 | K | Type of hormone secretion during hypoglycemia | 64.5% | 87.0% | +22.5 | <0.0001 * |
| 4 | K | Referred pain of acute coronary syndrome | 51.5% | 53.8% | +2.4 | 0.5862 |
| 5 | CR | Time course of syncope (cardiogenic) | 59.2% | 82.8% | +23.7 | <0.0001 * |
| 6 | K | Pathophysiology of pulmonary failure | 34.9% | 31.4% | −3.6 | 0.1573 |
| 7 | K | Electrocardiogram of ST elevation | 34.3% | 36.7% | +2.4 | 0.5791 |
| 8 | CR | Vital signs of sepsis | 73.4% | 85.8% | +12.4 | <0.0001 * |
| 9 | K | Anatomy of aortic dissection | 55.0% | 53.3% | −1.8 | 0.6015 |
| 10 | CR | Management of each type of shock | 66.9% | 78.7% | +11.8 | 0.0032 |
| 11 | K | Contrast CT of aortic dissection | 67.5% | 79.3% | +11.8 | 0.0016 * |
| 12 | CR | Treatment strategy of shock | 56.2% | 62.7% | +6.5 | 0.0630 |
| 13 | K | Jugular venous pressure | 42.6% | 49.7% | +7.1 | 0.0455 |
| 14 | CR | Differential diagnosis of hypoglycemia | 24.3% | 82.2% | +58.0 | <0.0001 * |
| 15 | CR | Management of altered mental status | 75.1% | 97.0% | +21.9 | <0.0001 * |
| 16 | K | Chest radiograph of heart failure | 24.3% | 39.6% | +15.4 | <0.0001 * |
| 17 | CR | Management of syncope | 53.8% | 79.3% | +25.4 | <0.0001 * |
| 18 | K | Symptoms of hypoglycemia | 51.5% | 27.8% | −23.7 | <0.0001 * |
| 19 | CR | Treatment of septic shock | 47.3% | 50.9% | +3.6 | 0.3428 |
| 20 | CR | Management of chest pain | 46.7% | 85.8% | +39.1 | <0.0001 * |
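
The pre- to post-test change reported for each quiz item is simple arithmetic on the two score columns, and it can be recomputed as a check. The sketch below is illustrative only and is not the authors' analysis: it assumes the rounded percentages printed in the table, so a recomputed change may differ by 0.1 from the tabulated value, which was presumably derived from the raw counts. The names `ITEMS`, `change_by_item`, and `mean_change_by_category` are hypothetical, and the per-category averages are a descriptive summary, not a significance test.

```python
from statistics import mean

# (item number, category, pre-test %, post-test %), copied from the table above.
# Categories are used exactly as labeled in the table: "CR" and "K".
ITEMS = [
    (1, "CR", 25.4, 75.7), (2, "K", 52.1, 58.0), (3, "K", 64.5, 87.0),
    (4, "K", 51.5, 53.8), (5, "CR", 59.2, 82.8), (6, "K", 34.9, 31.4),
    (7, "K", 34.3, 36.7), (8, "CR", 73.4, 85.8), (9, "K", 55.0, 53.3),
    (10, "CR", 66.9, 78.7), (11, "K", 67.5, 79.3), (12, "CR", 56.2, 62.7),
    (13, "K", 42.6, 49.7), (14, "CR", 24.3, 82.2), (15, "CR", 75.1, 97.0),
    (16, "K", 24.3, 39.6), (17, "CR", 53.8, 79.3), (18, "K", 51.5, 27.8),
    (19, "CR", 47.3, 50.9), (20, "CR", 46.7, 85.8),
]


def change_by_item(items):
    """Return {item number: post minus pre, in percentage points}."""
    return {item: round(post - pre, 1) for item, _cat, pre, post in items}


def mean_change_by_category(items):
    """Return {category: mean change in percentage points across its items}."""
    groups = {}
    for _item, cat, pre, post in items:
        groups.setdefault(cat, []).append(post - pre)
    return {cat: round(mean(values), 2) for cat, values in groups.items()}


if __name__ == "__main__":
    for item, change in change_by_item(ITEMS).items():
        print(f"Item {item:2d}: {change:+.1f} points")
    for cat, avg in mean_change_by_category(ITEMS).items():
        print(f"Mean change for {cat} items: {avg:+.2f} points")
```

Because the inputs are rounded to one decimal place, the output is best read as a consistency check on the table rather than as a replacement for the reported figures.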

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Watari, T.; Tokuda, Y.; Owada, M.; Onigata, K. The Utility of Virtual Patient Simulations for Clinical Reasoning Education. Int. J. Environ. Res. Public Health 2020, 17, 5325. https://doi.org/10.3390/ijerph17155325
