An Empirical Investigation of “Physician Congestion” in U.S. University Hospitals

Abstract

1. Introduction

- Increasing returns to scale resulting from specialization and internal knowledge spillovers are more likely to take place in small teams and units within organizations. In light of this, analysis of state-level spending on the overall physician workforce vis-à-vis aggregate measures of healthcare quality, while important in its own right, could omit one of the most important processes that links scale and size (of organizations) with performance and productivity.

- Many capital resources such as new laboratories or radiotherapy centers, which may have direct effects on healthcare quality, require substantial time to install (meaning that they are fixed inputs, at least in the short run). However, the number of affiliated physicians is a variable input that can be adjusted relatively easily in the short run.

- While state-of-the-art medical technology such as premium CT scanners, proton beam therapy systems, and MRI scanners exerts a substantial positive net effect on healthcare, its enormous cost means that the services it provides are usually offered in a limited number of regional or national tertiary referral centers. This leaves little room for efficiency gains through redeployment and reallocation. Physicians, on the other hand, can relocate with relative ease. Understanding the effects of physician relocation could therefore clarify the nexus between efficiency and resource reallocation.

A growing body of literature related to our paper explores the relationship between physician volume and clinical outcomes at the hospital level. Studies of elderly patients with heart failure found that patients treated at hospitals with low physician volumes had higher readmission and mortality rates than those treated at hospitals with high physician volumes [17,18]. Controlling for patient, physician, and hospital characteristics, one of these studies showed that patients with heart failure cared for by high-volume physicians had lower mortality than those cared for by low-volume physicians [18]. Focusing on elderly patients with pneumonia, a recent study found that patients cared for in hospitals with more doctors were less likely to be readmitted [19]. Another study examined the joint effect of hospital and physician volume of primary percutaneous coronary intervention (PCI) on in-hospital mortality and found that primary PCI performed by high-volume physicians in high-volume hospitals was associated with lower mortality risk [20]. Finally, Birkmeyer et al. tested the link between surgeon volume and operative mortality in the United States for patients who underwent one of eight cardiovascular procedures or cancer resections. For all eight procedures, surgeon volume was inversely related to operative mortality [21].

2. Materials and Methods

2.1. The Empirical Model

2.2. Sample

2.3. Econometric and Other Methodological Concerns

3. Econometric Results

3.1. Hypothesis Testing

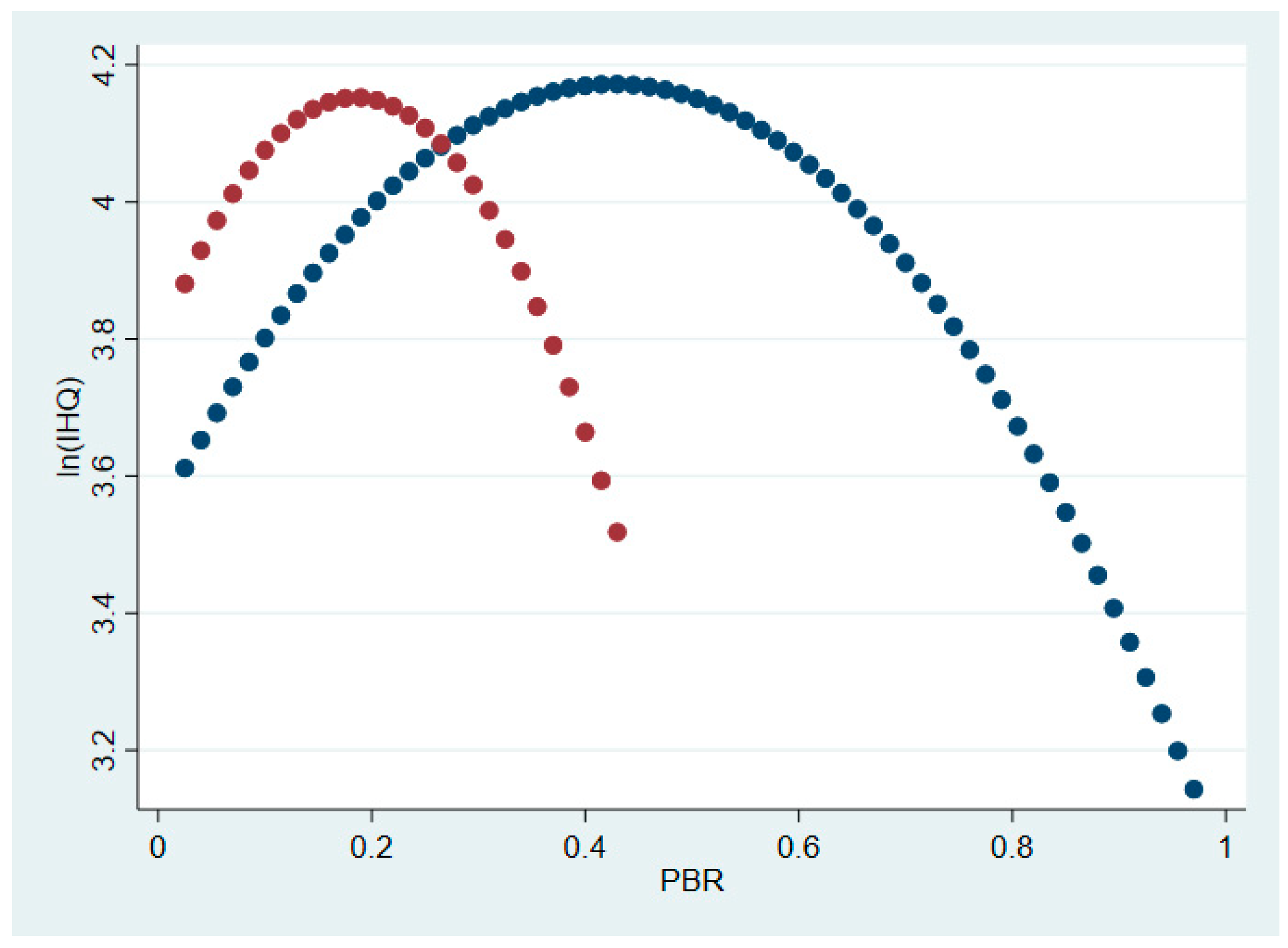

3.1.1. Hypothesis 1: Main Effects

3.1.2. Hypothesis 1: Interaction Effects

3.1.3. Hypothesis 2: The Effect of Research Impact on the Relationship between PBR and Healthcare Quality

3.2. Robustness Checks for Hypotheses 1 and 2

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Fisher, E.S.; Wennberg, D.E.; Stukel, T.A.; Gottlieb, D.J.; Lucas, F.L.; Pinder, E.L. The implications of regional variations in Medicare spending. Part 1: The content, quality, and accessibility of care. Ann. Intern. Med. 2003, 138, 273–287.

2. Fisher, E.S.; Bynum, J.P.W.; Skinner, J.S. Slowing the growth of health care costs—Lessons from regional variation. N. Engl. J. Med. 2009, 360, 849–852.

3. Fisher, E.S.; Welch, H.G. Avoiding the unintended consequences of growth in medical care: How might more be worse? JAMA 1999, 281, 446–453.

4. Sirovich, B.E.; Gottlieb, D.J.; Welch, H.G.; Fisher, E.S. Regional variations in health care intensity and physician perceptions of quality of care. Ann. Intern. Med. 2006, 144, 641–649.

5. Aaron, H.J.; Ginsburg, P.B. Is health spending excessive? If so, what can we do about it? Health Aff. 2009, 28, 1260–1275.

6. Cooper, R.A. States with more physicians have better-quality health care. Health Aff. 2009, 28, 91–102.

7. Skinner, J.; Chandra, A.; Goodman, D.; Fisher, E.S. The elusive connection between health care spending and quality. Health Aff. 2009, 28, 19–23.

8. Starfield, B.; Shi, L.; Macinko, J. Physicians and quality: Answering the wrong question. Health Aff. 2009, 28, 596–597.

9. Watson, D.E.; McGrail, K.M. More doctors or better care? Health Q 2009, 12, 101–104.

10. Goodman, D.C.; Fisher, E.S. Physician workforce crisis? Wrong diagnosis, wrong prescription. N. Engl. J. Med. 2008, 358, 1658–1661.

11. Cohen, W.M.; Klepper, S. A Reprise of Size and R&D. Econ. J. 1996, 106, 925–951.

12. Chang, T.S.; Huang, K.Y.; Chang, C.M.; Lin, C.H.; Su, Y.C.; Lee, C.C. The association of hospital spending intensity and cancer outcomes: A population-based study in an Asian country. Oncologist 2014, 9, 990–998.

13. Baicker, K.; Chandra, A. The Health Care Jobs Fallacy. N. Engl. J. Med. 2012, 366, 2433–2435.

14. Baicker, K.; Chandra, A. Medicare spending, the physician workforce, and beneficiaries’ quality of care. Health Aff. 2004, 23, 184–197.

15. Gallet, C.A.; Doucouliagos, H. The impact of healthcare spending on health outcomes: A meta-regression analysis. Soc. Sci. Med. 2017, 179, 9–17.

16. Hvenegaard, A.; Nielsen, A.J.; Street, A.; Gyrd-Hansen, D. Exploring the relationship between costs and quality: Does the joint evaluation of costs and quality alter the ranking of Danish hospital departments? Eur. J. Health Econ. 2011, 12, 541–551.

17. Lee, J.E.; Park, E.C.; Jang, S.Y.; Lee, S.A.; Choy, Y.S.; Kim, T.H. Effects of Physician Volume on Readmission and Mortality in Elderly Patients with Heart Failure: Nationwide Cohort Study. Yonsei Med. J. 2018, 59, 243–251.

18. Joynt, K.E.; Orav, E.J.; Jha, A.K. Physician volume, specialty, and outcomes of care for patients with heart failure. Circ. Heart Fail. 2013, 6, 890–897.

19. Lee, J.E.; Kim, T.H.; Cho, K.H.; Han, K.T.; Park, E.C. The association between number of doctors per bed and readmission of elderly patients with pneumonia in South Korea. BMC Health Serv. Res. 2017, 17, 393.

20. Srinivas, V.S.; Hailpern, S.M.; Koss, E.; Monrad, E.S. Effect of Physician Volume on the Relationship Between Hospital Volume and Mortality During Primary Angioplasty. J. Am. Coll. Cardiol. 2009, 53, 574–579.

21. Birkmeyer, J.D.; Stukel, T.A.; Siewers, A.E.; Goodney, P.P.; Wennberg, D.E.; Lucas, F.L. Surgeon volume and operative mortality in the United States. N. Engl. J. Med. 2003, 349, 2117–2127.

22. Coase, R. The Nature of the firm. Economica 1937, 4, 386–405.

23. Demange, G. On group stability in hierarchies and networks. J. Polit. Econ. 2004, 112, 754–778.

24. Guesnerie, R.; Oddou, C. Increasing returns to size and their limits. Scand. J. Econ. 1988, 1, 259–273.

25. Tchetchik, A.; Grinstein, A.; Manes, E.; Shapira, D.; Durst, R. From research to practice: Which research strategy contributes more to clinical excellence? Comparing high-volume versus high-quality biomedical research. PLoS ONE 2015, 10, 1–16.

26. Alotaibi, N.M.; Ibrahim, G.M.; Wang, J.; Guha, D.; Mamdani, M.; Schweizer, T.A.; Macdonald, R.L. Neurosurgeon academic impact is associated with clinical outcomes after clipping of ruptured intracranial aneurysms. PLoS ONE 2017, 12, e0181521.

27. Donabedian, A. Basic approaches to assessment: Structure, process, and outcome. In The Definition of Quality and Approaches to Its Assessment; Health Administration Press: Chicago, IL, USA, 1980; pp. 77–128.

28. Di Giorgio, L.; Filippini, M.; Masiero, G. Is higher nursing home quality more costly? Eur. J. Health Econ. 2015.

29. Chen, J.; Radford, M.J.; Wang, Y.; Marciniak, T.A.; Krumholz, H.M. Do “America’s best hospitals” perform better for acute myocardial infarction? N. Engl. J. Med. 1999, 340, 286–292.

30. Rosenthal, G.E.; Chren, M.M.; Lasek, R.J.; Landefeld, C.S. The annual guide to “America’s best hospitals”. Evidence of influence among health care leaders. J. Gen. Intern. Med. 1996, 11, 366–369.

31. Comarow, A. Best Hospitals 2012–13: How and Why We Rate and Rank Hospitals. 2015. Available online: http://health.usnews.com/health-news/best-hospitals/articles/2015/05/20/faq-how-and-why-we-rate-and-rank-hospitals?int=ab2909&int=ad4609 (accessed on 2 March 2019).

32. Hirsch, J.E. An index to quantify an individual’s scientific research output. Proc. Natl. Acad. Sci. USA 2005, 102, 16569–16572.

33. Health Guide USA, U.S. Teaching Hospitals 2012. Available online: https://www.healthguideusa.org/teaching_hospitals.htm (accessed on 2 March 2019).

34. Baum, C.F.; Schaffer, M.E.; Stillman, S. Ivreg2: Stata Module for Extended Instrumental Variables/2SLS, GMM and AC/HAC, LIML and k-class Regression. 2010. Available online: http://ideas.repec.org/c/boc/bocode/s425401.html (accessed on 2 March 2019).

35. StataCorp. Stata Statistical Software: Release 15; StataCorp LLC: College Station, TX, USA, 2017.

36. Baum, C.F. Instrumental Variables Estimation in Stata, Faculty Micro Resource Center, 2007 Boston College. Available online: http://mayoral.iae-csic.org/IV_2015/StataIV_baum.pdf (accessed on 2 March 2019).

37. Lind, J.; Mehlum, H. UTEST. Stata module to test for a U-shaped relationship. 2014. Available online: http://EconPapers.repec.org/RePEc:boc:bocode:s456874 (accessed on 2 March 2019).

38. The OECD. Releasing Health Care System Resources: Tackling Ineffective Spending and Waste; OECD Publishing: Paris, France; Available online: http://oecdobserver.org/news/fullstory.php/aid/5758/ (accessed on 2 March 2019).

39. Mukamel, D.B.; Spector, W.D. Nursing home costs and risk-adjusted outcome measures of quality. Med. Care 2000, 38, 78–89.

40. Weech-Maldonado, R.; Shea, D.; Mor, V. The relationship between quality of care and costs in nursing homes. Am. J. Med. Qual. 2006, 21, 40–48.

| Variable | Description | Descriptive Statistics * |
|---|---|---|
| Dependent Variables | | |
| IHQ (1) | Department’s IHQ in 2012–2013, expressed as a composite score between 0 and 100 | 50.81 (15.08) Min–Max: 10.90–100.0 |
| Survival (1) | Department’s patient survival rate, a sub-dimension of IHQ, for 2012–2013 | 7.71 (1.70) Min–Max: 3–10 |
| Independent Variables | | |
| Research impact (2) | Median H-index for each department | 3.18 (2.40) Min–Max: 0.5–13.5 |
| Research volume (3) | Median number of publications per physician (FTE) per annum for each department, starting from the year his/her first paper was published | 1.50 (0.86) Min–Max: 0.18–4.38 |
| PBR (1) | Number of physicians in each specialty divided by the number of staffed beds | Oncology: 0.10 (0.09) Min–Max: 0.008–0.48; Cardiology: 0.17 (0.16) Min–Max: 0.03–1.01; Orthopedics: 0.10 (0.07) Min–Max: 0.007–0.23 |
| PBR_FTEs (1,4) | Number of full-time physicians in each specialty divided by the number of staffed beds | Oncology: 0.047 (0.03) Min–Max: 0.006–0.17; Cardiology: 0.08 (0.09) Min–Max: 0.014–0.612; Orthopedics: 0.05 (0.04) Min–Max: 0.01–0.23 |
| For-Profit (5) | =1 if the hospital is a for-profit organization | 0.30 |
| Clinical services (5) | Number of clinical services provided by the hospital | 35.03 (5.17) Min–Max: 21.00–44.00 |
| Length of stay (5) | Average number of days of hospitalization (with a time lag of one year) | 6.27 (0.80) Min–Max: 4.43–7.92 |
| Over 65 (6) | Share of population over 65 years old in the three geographically closest zip codes to the hospital | 0.13 (0.03) Min–Max: 0.08–0.19 |
| Median income (5) | Median income of the three geographically closest zip codes to the hospital, in current thousands of USD | 40.87 (13.25) Min–Max: 22.95–83.58 |
| Net income (5) | Hospital’s net income/loss per bed, in current hundred thousands of USD (time lag of one year) | 1.09 (0.97) Min–Max: −0.69–4.03 |
| Patient days (5) | Total number of patient days, in hundred thousands (with a time lag of one year) | 20.05 (11.42) Min–Max: 4.10–74.12 |
| Instruments | | |
| Total physicians per bed (1) | Total number of physicians per total beds in each hospital | 1.806 (1.39) Min–Max: 0.10–7.769 |
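
The two constructed regressors in the table above, PBR and the department-level median H-index, can be illustrated with a short sketch. It assumes the standard Hirsch definition of the H-index [32]; the function names and the citation counts are hypothetical and are not taken from the study's data.

```python
from statistics import median


def h_index(citations):
    """Largest h such that h papers have at least h citations each (Hirsch)."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def physician_to_bed_ratio(n_physicians, staffed_beds):
    """PBR: physicians in a specialty divided by the number of staffed beds."""
    if staffed_beds <= 0:
        raise ValueError("staffed_beds must be positive")
    return n_physicians / staffed_beds


# Hypothetical department: one list of per-paper citation counts per physician.
department_citations = [
    [10, 8, 5, 4, 3],
    [25, 8, 5, 3, 3],
    [0, 0],
    [6, 6, 6, 6, 6, 6],
]

physician_h = [h_index(c) for c in department_citations]
research_impact = median(physician_h)      # department-level median H-index
pbr = physician_to_bed_ratio(12, 120)      # hypothetical counts

print(physician_h, research_impact, pbr)
```

Because the median is taken over an even or odd number of physicians, the department-level value can be a half-integer, which is consistent with the reported minimum of 0.5.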

| Variable | Model (1) | Variable | Model (2) |
|---|---|---|---|
| PBR | 2.481 *** | PBR_FTEs | 5.283 *** |
| | (3.27) | | (3.05) |
| PBR² | −3.439 *** | (PBR_FTEs)² | −11.477 *** |
| | (−3.04) | | (−3.1) |
| Orthopedics (1) | −0.057 | | −0.048 |
| | (−1.09) | | (−0.88) |
| Cardiology (1) | 0.042 | | 0.037 |
| | (0.71) | | (0.58) |
| For-Profit | 0.005 | | 0.040 |
| | (0.08) | | (0.67) |
| Length of stay | −0.054 | | −0.051 |
| | (−1.53) | | (−1.38) |
| ln (Median income) | −0.079 | | −0.130 |
| | (−0.97) | | (−1.48) |
| Over 65 | 0.312 | | 0.371 |
| | (0.36) | | (0.41) |
| Patient days | 0.008 *** | | 0.010 *** |
| | (3.05) | | (3.74) |
| Clinical services | 0.015 *** | | 0.016 *** |
| | (2.71) | | (2.75) |
| Net income | 0.091 *** | | 0.092 *** |
| | (3.08) | | (3.04) |
| Constant | 4.022 *** | | 4.422 *** |
| | (4.59) | | (4.77) |
| Adj-R² | 0.37 | | 0.32 |
| N | 149 | | 149 |
| Optimal PBR | 0.36 | Optimal PBR_FTEs | 0.23 |
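
The "Optimal PBR" row above is consistent with the turning point of a quadratic specification. As a sketch, assume the outcome $Q$ is modeled as quadratic in PBR, with $\beta_1$ and $\beta_2$ denoting the coefficients on the linear and squared terms (this notation is introduced here for illustration and is not taken from the source). Setting the first derivative to zero gives:

$$
Q = \beta_0 + \beta_1\,\mathrm{PBR} + \beta_2\,\mathrm{PBR}^2 + \dots, \qquad
\frac{\partial Q}{\partial\,\mathrm{PBR}} = \beta_1 + 2\beta_2\,\mathrm{PBR} = 0
\;\Longrightarrow\;
\mathrm{PBR}^{*} = -\frac{\beta_1}{2\beta_2}.
$$

With the rounded coefficients reported above, Model (1) gives $2.481 / (2 \times 3.439) \approx 0.36$ and Model (2) gives $5.283 / (2 \times 11.477) \approx 0.23$, matching the reported optima. Because $\beta_2 < 0$ in both models, the stationary point is a maximum.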

| Variable | Model (1) | Variable | Model (2) |
|---|---|---|---|
| PBR (1,2) | 5.291 *** | PBR_FTEs (1,2) | 7.011 *** |
| | (3.46) | | (3.42) |
| PBR² (1,2) | −14.890 *** | (PBR_FTEs)² (1,2) | −29.470 *** |
| | (−3.79) | | (−4.71) |
| PBR * Ortho | −2.980 *** | PBR_FTEs * Ortho | −3.486 *** |
| | (−4.10) | | (−2.84) |
| PBR² * Ortho | 8.913 *** | (PBR_FTEs)² * Ortho | 16.45 *** |
| | (5.26) | | (4.85) |
| PBR * Cardio | −3.325 ** | PBR_FTEs * Cardio | −4.676 *** |
| | (−2.50) | | (−3.47) |
| PBR² * Cardio | 13.950 *** | (PBR_FTEs)² * Cardio | 25.88 *** |
| | (3.95) | | (8.21) |
| Orthopedics | 0.081 | | 0.043 |
| | (0.64) | | (0.72) |
| Cardiology | 0.106 * | | 0.109 |
| | (1.95) | | (1.14) |
| For-Profit | −0.040 | | 0.021 |
| | (−0.55) | | (0.28) |
| Length of stay | −0.036 | | −0.029 |
| | (−0.99) | | (−0.85) |
| ln (Median income) | −0.060 | | −0.0820 |
| | (−0.71) | | (−1.03) |
| Over 65 | −0.834 | | −0.440 |
| | (−0.75) | | (−0.39) |
| Patient days | 0.001 | | 0.004 |
| | (0.44) | | (0.96) |
| Clinical services | 0.012 | | 0.009 |
| | (1.28) | | (0.91) |
| Net income | 4.250 *** | | 4.950 *** |
| | (2.94) | | (3.51) |
| Constant | −8.970 * | | −10.84 ** |
| | (−2.00) | | (−2.62) |
| Adj-R² | 0.59 | | 0.44 |
| N | 149 | | 149 |
| Optimal PBR | Oncology 0.18 | Optimal PBR_FTEs | Oncology 0.12 |
| | Orthopedics 0.19 | | Orthopedics 0.13 |
| | Cardiology 0.39 | | Cardiology 0.32 |
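
With interaction terms, each specialty has its own turning point: the interaction coefficients are added to the base linear and squared coefficients before the stationary point is computed. The sketch below applies this to the Model (2) coefficients printed in the table above. It assumes that oncology is the omitted reference specialty and that the printed (rounded) coefficients are used as-is; the function and variable names are illustrative and do not come from the source.

```python
def turning_point(b_linear, b_squared):
    """Stationary point x* = -b1 / (2 * b2) of b1 * x + b2 * x**2."""
    if b_squared >= 0:
        raise ValueError("an interior maximum requires a negative squared-term coefficient")
    return -b_linear / (2.0 * b_squared)


# Model (2): base coefficients on PBR_FTEs and its square.
# Assumption: these apply to oncology, the omitted reference specialty.
BASE_LINEAR, BASE_SQUARED = 7.011, -29.470

# Interaction coefficients (shift in linear term, shift in squared term).
INTERACTIONS = {
    "Oncology": (0.0, 0.0),
    "Orthopedics": (-3.486, 16.45),
    "Cardiology": (-4.676, 25.88),
}


def specialty_optima(base_linear, base_squared, interactions):
    """Turning point per specialty after adding its interaction shifts."""
    return {
        name: turning_point(base_linear + d_lin, base_squared + d_sq)
        for name, (d_lin, d_sq) in interactions.items()
    }


optima = specialty_optima(BASE_LINEAR, BASE_SQUARED, INTERACTIONS)
for name, value in optima.items():
    print(f"{name}: {value:.3f}")
```

The values computed from rounded coefficients agree with the reported Model (2) optima to within about 0.01. Small differences are expected because the published coefficients are rounded, so this is a consistency check rather than a reproduction of the authors' calculation.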

| Variable | Model (1) | Variable | Model (2) |
|---|---|---|---|
| PBR | 1.808 ** | PBR_FTEs | 4.036 ** |
| | (2.36) | | (2.06) |
| PBR² | −2.782 ** | (PBR_FTEs)² | −9.391 ** |
| | (−2.54) | | (−2.37) |
| Orthopedics (1) | −0.011 | | −0.007 |
| | (−0.20) | | (−0.13) |
| Cardiology (1) | 0.087 | | 0.077 |
| | (1.40) | | (1.13) |
| For-Profit | 0.030 | | 0.053 |
| | (0.56) | | (0.94) |
| Length of stay | −0.060 * | | −0.057 |
| | (−1.78) | | (−1.64) |
| ln (Median income) | −0.029 | | −0.075 |
| | (−0.36) | | (−0.83) |
| Over 65 | 0.529 | | 0.535 |
| | (0.63) | | (0.61) |
| Patient days | 0.008 *** | | 0.009 ** |
| | (3.01) | | (3.51) |
| Clinical services | 0.014 *** | | 0.015 *** |
| | (2.65) | | (2.73) |
| Net income | 0.091 *** | | 0.090 *** |
| | (3.26) | | (3.18) |
| Research impact | 0.029 ** | | 0.025 * |
| | (2.43) | | (1.86) |
| Research volume | 0.019 | | 0.020 |
| | (0.49) | | (0.53) |
| Constant | 3.474 *** | | 3.852 *** |
| | (3.97) | | (4.08) |
| R² | 0.38 | | 0.39 |
| N | 149 | | 149 |
| Optimal PBR | 0.32 | Optimal PBR_FTEs | 0.21 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Manes, E.; Tchetchik, A.; Tobol, Y.; Durst, R.; Chodick, G. An Empirical Investigation of “Physician Congestion” in U.S. University Hospitals. Int. J. Environ. Res. Public Health 2019, 16, 761. https://doi.org/10.3390/ijerph16050761
