Rapid Urban Mapping Using SAR/Optical Imagery Synergy

Abstract

1. Introduction

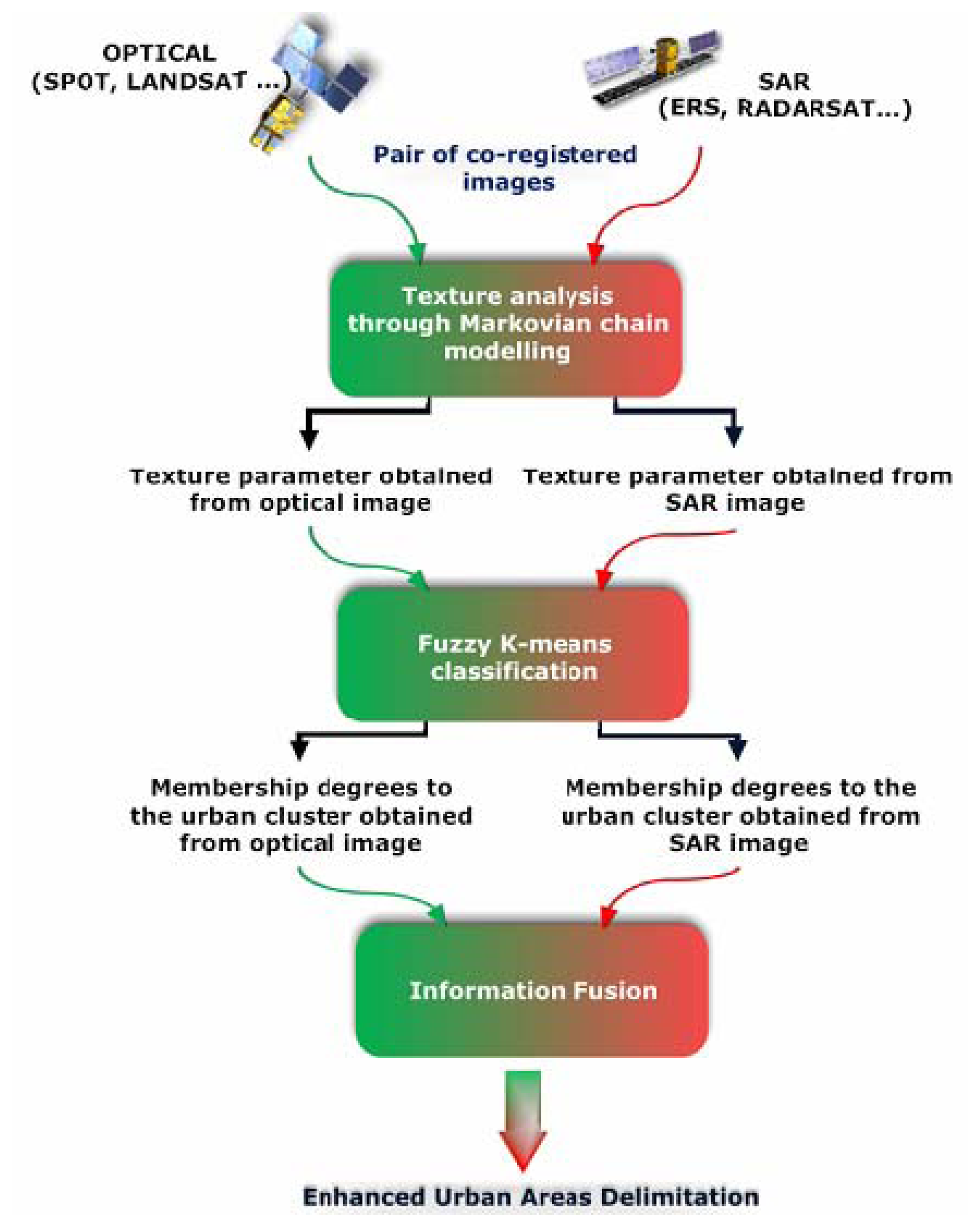

2. Developed procedure

- texture analysis,

- fuzzy K-means clustering,

- information fusion.

2.1. Texture analysis

- the Gaussian Markov Random Field (GMRF) is a fairly simple model that requires relatively few parameters and little processing time, which makes it suitable for rapid mapping purposes,

- despite the complexity of optical and SAR image textures, its parameters can discriminate between these different textures, mainly those of urban areas,

- moreover, the robustness of the technique employed for parameter estimation leads to an accurate delineation of the urban areas,

- finally, the parameters are independent of the local mean.

- d represents the direction,

- Xs is the grey level value of pixel s, Vs is its neighbourhood in direction d, and Xr ∈ Vs,

- ZVd(s) is the partition function,

- μ is the local mean,

- β and λ are the texture parameters of the model.
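The equation these symbols belong to is not reproduced in this section. As a hedged illustration only, one Gaussian Markov random field form that uses exactly these symbols is sketched below; the functional form is an assumption based on common GMRF texture models, not a quotation of the authors' equation, and V_d(s) is written for the directional neighbourhood listed above as Vs. Completing the square shows why such a conditional law is Gaussian and how β and λ set its mean and variance.

```latex
% Assumed directional GMRF conditional law (illustrative, not from the source)
P\bigl(X_s = x_s \mid X_r = x_r,\ r \in V_d(s)\bigr)
  = \frac{1}{Z_{V_d(s)}}
    \exp\Bigl\{ -\beta \sum_{r \in V_d(s)} (x_s - x_r)^2
                - \lambda\,(x_s - \mu)^2 \Bigr\}

% With n = |V_d(s)| neighbours, the exponent is quadratic in x_s:
%   -(n\beta + \lambda)\,x_s^2
%   + 2\,x_s\Bigl(\beta \sum_{r} x_r + \lambda\mu\Bigr) + \text{const},
% so the conditional law is Gaussian with
\mathbb{E}[X_s \mid \cdot] = \frac{\beta \sum_{r \in V_d(s)} x_r + \lambda\,\mu}{n\beta + \lambda},
\qquad
\operatorname{Var}[X_s \mid \cdot] = \frac{1}{2\,(n\beta + \lambda)}
```

Under this assumed form, a large β pulls a pixel toward its neighbours in direction d, while a large λ pulls it toward the local mean μ.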

2.2. Fuzzy K-means clustering

- Z is the data matrix,

- U = [μik] ∈ Mfc is a fuzzy partition of Z;

- V = [v1, v2, …, vc], vi ∈ Rn, is a vector of cluster prototypes to be determined;

- ‖zk − vi‖² is a dissimilarity measure (Euclidean distance) between the sample zk and the center vi of the specific cluster i;

- A is the distance norm matrix;

- m ∈ (1, ∞) is a parameter that determines the fuzziness of the resulting clusters.

The minimization of the objective function J(Z;U,V) under the constraint leads to the iteration of the following steps:
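The iteration steps themselves are not reproduced in this section. As a minimal sketch, the standard fuzzy K-means (fuzzy c-means) alternation from the clustering literature can be written as below, assuming the identity matrix for A (plain Euclidean distance). The function names, the `V0` and `seed` arguments, and the choice to initialise from prototypes rather than from a random partition are assumptions of this sketch, not the authors' implementation.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors (A = identity)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def update_memberships(Z, V, m):
    """Membership update: mu_ik = 1 / sum_j (D_ik / D_jk)^(1/(m-1)), D = squared distance."""
    c, n = len(V), len(Z)
    U = [[0.0] * n for _ in range(c)]
    for k, z in enumerate(Z):
        d = [sq_dist(z, v) for v in V]
        hits = [i for i in range(c) if d[i] == 0.0]
        if hits:
            # Sample coincides with one or more prototypes: share full membership.
            for i in hits:
                U[i][k] = 1.0 / len(hits)
        else:
            for i in range(c):
                U[i][k] = 1.0 / sum((d[i] / d[j]) ** (1.0 / (m - 1.0)) for j in range(c))
    return U


def update_prototypes(Z, U, m):
    """Prototype update: v_i = sum_k mu_ik^m z_k / sum_k mu_ik^m."""
    dim = len(Z[0])
    V = []
    for row in U:
        w = [u ** m for u in row]
        total = sum(w)
        V.append([sum(wk * z[j] for wk, z in zip(w, Z)) / total for j in range(dim)])
    return V


def fuzzy_kmeans(Z, c=2, m=2.0, eps=1e-3, max_iter=5, V0=None, seed=0):
    """Alternate the two updates until memberships change by less than eps."""
    if V0 is not None:
        V = [list(v) for v in V0]
    else:
        # Assumption of this sketch: start from c randomly chosen samples.
        V = [list(z) for z in random.Random(seed).sample(Z, c)]
    U = update_memberships(Z, V, m)
    for _ in range(max_iter):
        V = update_prototypes(Z, U, m)
        U_new = update_memberships(Z, V, m)
        change = max(abs(a - b) for ra, rb in zip(U_new, U) for a, b in zip(ra, rb))
        U = U_new
        if change < eps:
            break
    return U, V


if __name__ == "__main__":
    samples = [[0.0], [0.1], [0.2], [5.0], [5.1], [5.2]]
    U, V = fuzzy_kmeans(samples, V0=[[0.0], [5.0]])
    print(V)
```

Each column of U sums to 1, which is the constraint on the fuzzy partition; a pixel is typically assigned to the cluster with the largest membership only when a hard map is needed.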

2.3. Information fusion

- Context Independent Constant Behaviour Operators (CICB): this class is composed of the operators which have the same behaviour whatever the values of the information to be combined. They are computed without any contextual or external information. They are exclusive.

- Context Independent Variable Behaviour Operators (CIVB): they are context independent, but their behaviour depends on the values of x and y.

- Context Dependent Operators (CD): they depend not only on x and y but also on global knowledge or measures on the sources to be fused (such as conflict between sources or reliability of sources). For instance, it is possible to build operators which behave in a conjunctive way if the sources are consonant, in a disjunctive way if they are dissonant, and as a compromise if they are partly conflicting [28].

- it is conjunctive if the two sources have low conflict,

- it is disjunctive if the sources have high conflict,

- it behaves in a compromise way in case of partial conflict.

The arithmetical mean, which belongs to the class of Mean Operators, is used in this study.
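The three behaviours listed above can be sketched for a single pixel as follows. This is an illustration under stated assumptions, not the authors' operator: the conflict measure (one minus the consonance of two two-class fuzzy memberships), the thresholds `low` and `high`, and the use of min and max as the conjunctive and disjunctive operators are all hypothetical choices. Only the arithmetic mean for the compromise case comes from the text.

```python
def conflict(x, y):
    """Conflict between two sources for one pixel.

    x and y are the 'urban' memberships in [0, 1] given by each source;
    the 'non-urban' memberships are taken as 1 - x and 1 - y (assumption).
    """
    consonance = max(min(x, y), min(1.0 - x, 1.0 - y))
    return 1.0 - consonance


def fuse(x, y, low=0.3, high=0.7):
    """Adaptive fusion: conjunctive, disjunctive, or compromise by conflict level.

    The thresholds low and high are hypothetical values for this sketch.
    """
    k = conflict(x, y)
    if k < low:
        return min(x, y)       # low conflict: conjunctive behaviour
    if k > high:
        return max(x, y)       # high conflict: disjunctive behaviour
    return 0.5 * (x + y)       # partial conflict: arithmetic mean


if __name__ == "__main__":
    for pair in [(0.9, 0.8), (0.9, 0.2), (0.7, 0.4)]:
        print(pair, fuse(*pair))
```

Applied to whole images, the same function would be evaluated pixel by pixel on the SAR and optical membership maps.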

3. Experimental results

3.1. Test sites and data set description

3.2. Parameters involved

- c = the number of clusters = 2,

- m = the fuzzy exponent that determines the degree of fuzziness of the final solution; as m approaches its lower bound of 1, the solution tends to a hard partition, i.e., the results are not fuzzy at all. Most applications of fuzzy K-means use a value of m between 1 and 2; in our study, m = 2,

- ε = the stopping criterion = 0.001 (gives reasonable convergence),

- l = the maximum number of iterations = 5.
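To make the role of the fuzzy exponent concrete, the short sketch below evaluates the standard fuzzy K-means membership formula for a single sample at several values of m. The function name and the example distances are illustrative assumptions; the formula is the usual textbook one, not code from the study.

```python
def memberships(distances, m):
    """Memberships of one sample from its non-zero Euclidean distances to each centre.

    Uses mu_i = 1 / sum_j (d_i / d_j)^(2 / (m - 1)), valid for m > 1.
    """
    p = 2.0 / (m - 1.0)
    return [1.0 / sum((di / dj) ** p for dj in distances) for di in distances]


if __name__ == "__main__":
    d = [1.0, 2.0]  # hypothetical distances to the two cluster centres
    for m in (1.1, 2.0, 10.0):
        print(m, memberships(d, m))
    # m = 2 gives [0.8, 0.2]; values of m close to 1 push the result toward [1, 0]
```

With m = 2, the value used in the study, a sample twice as far from one centre as from the other still keeps a 0.2 membership in the farther cluster, which is the information the fusion step can exploit.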

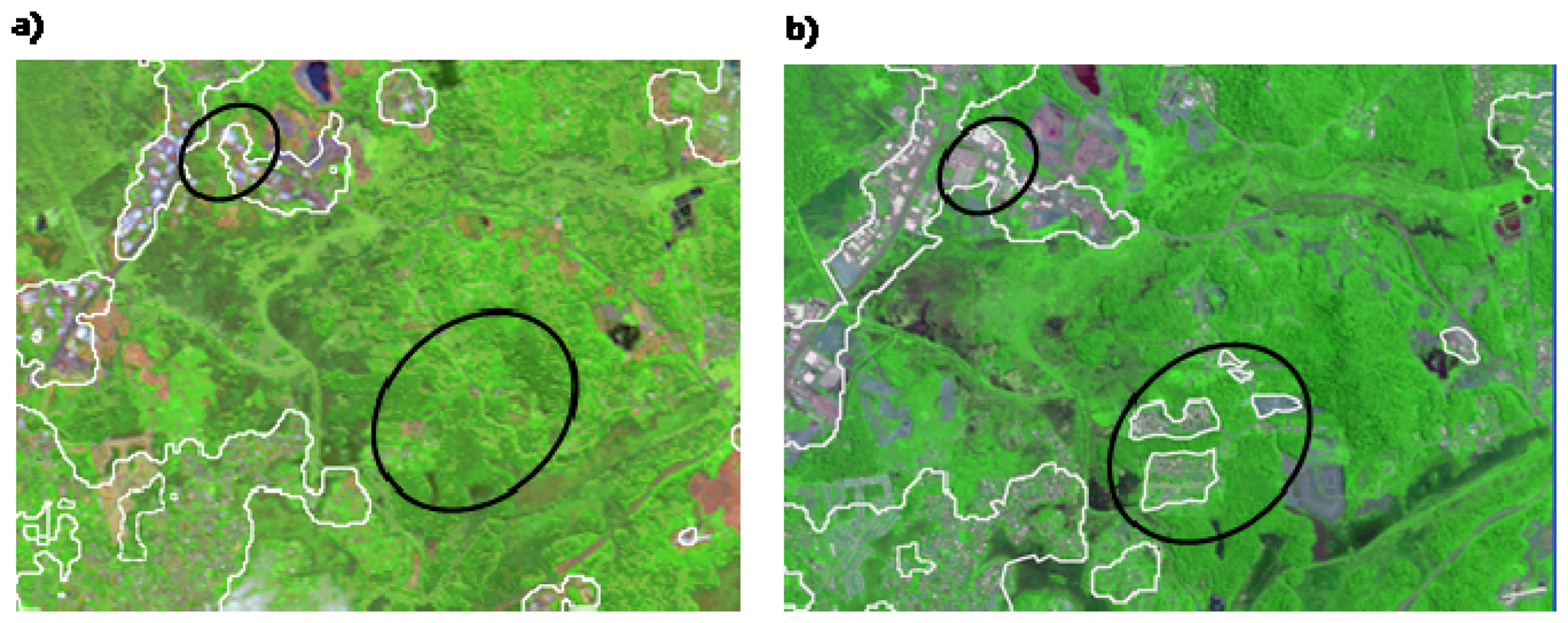

3.3. Results analysis for urban areas extraction

3.4. Results for monitoring the spatial extension of urban growth

4. Conclusions

Acknowledgments

References and Notes

- Jensen, J.R.; Cowen, D.C. Remote sensing of urban/suburban infrastructure and socio-economic attributes. Photogramm. Eng. Remote Sens. 1999, 65, 611–622.

- Herold, M.; Goldstein, N.C.; Clarke, K.C. The spatiotemporal form of urban growth: Measurement, analysis and modeling. Remote Sens. Environ. 2003, 86, 286–302.

- Gomez-Chova, L.; Fernandez-Prieto, D.; Calpe, J.; Soria, E.; Vila, J.; Camps-Valls, G. Urban monitoring using multi-temporal SAR and multi-spectral data. Patt. Recog. Lett. 2006, 27, 234–243.

- Gamba, P.; Dell'Acqua, F.; Dasarathy, B.V. Urban remote sensing using multiple data sets: Past, present, and future. Inf. Fusion 2005, 6, 319–326.

- Esch, T.; Roth, A.; Dech, S. Analysis of urban land use pattern based on high resolution radar imagery. International Geoscience and Remote Sensing Symposium (IGARSS), Barcelona, Spain; 2008.

- Durieux, L.; Lagabrielle, E.; Nelson, A. A method for monitoring building construction in urban sprawl areas using object-based analysis of Spot 5 images and existing GIS data. ISPRS J. Photogramm. Remote Sens. 2008, 63, 399–408.

- Donnay, J.P.; Barnsley, M.J.; Longley, P.A. Remote sensing and urban analysis; Taylor & Francis: London, England, 2001; pp. 3–18.

- Benediktsson, J.A.; Pesaresi, M.; Arnason, K. Classification and feature extraction for remote sensing images from urban areas based on morphological transformations. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1940–1949.

- Myint, S.W.; Lam, N.S.-N.; Tyler, J.M. Wavelets for urban spatial feature discrimination: Comparisons with fractal, spatial autocorrelation, and spatial co-occurrence approaches. Photogramm. Eng. Remote Sens. 2004, 70, 803–812.

- Pesaresi, M.; Gerhardinger, A.; Kayitakire, F. Monitoring settlement dynamics by anisotropic textural analysis of panchromatic VHR data. 2007 Urban Remote Sensing Joint Event, Paris, France, April 2007.

- Dell'Acqua, F.; Gamba, P.; Lisini, G. A semi-automatic high resolution SAR data interpretation procedure. Proc. of Photogrammetric Image Analysis 07, Munich, Germany, September 2007.

- Dell'Acqua, F.; Gamba, P. Texture-based characterization of urban environments on satellite SAR images. IEEE Trans. Geosci. Remote Sens. 2003, 41, 153–159.

- Chen, C.H. Handbook of pattern recognition and computer vision; World Scientific Publishing Company: Singapore, Singapore, 1993.

- Alhaddad, B.I.; Burns, M.C.; Cladera, J.R. Texture analysis for correcting and detecting classification structures in urban land uses “metropolitan area case study - Spain”. Urban Remote Sensing Joint Event, URS, Paris, France; 2007.

- Dekker, R.J. Texture analysis and classification of ERS SAR images for map updating of urban areas in the Netherlands. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1950–1958.

- Bouman, C.; Liu, B. Multiple resolution segmentation of textured images. IEEE Trans. Patt. Anal. Mach. Intell. 1991, 13, 99–113.

- Francos, J.M.; Meiri, Z.A.; Porat, B. Unified texture model based on a 2-D Wold-like decomposition. IEEE Trans. Signal Process. 1993, 41, 2665–2677.

- Cohen, F.S.; Cooper, D.B. Simple parallel hierarchical and relaxation algorithms for segmenting noncausal Markovian random fields. IEEE Trans. Patt. Anal. Mach. Intell. 1987, PAMI-9, 195–219.

- Descombes, X.; Sigelle, M.; Prêteux, F. Estimating Gaussian Markov random field parameters in a nonstationary framework: Application to remote sensing imaging. IEEE Trans. Image Process. 1999, 8, 490–503.

- Lorette, A.; Descombes, X.; Zerubia, J. Texture analysis through a Markovian modelling and fuzzy classification: Application to urban area extraction from satellite images. Int. J. Comput. Vis. 2000, 36, 221–236.

- Bezdek, J.C. Pattern Recognition with fuzzy objective function algorithms; Plenum Press: New York, 1981.

- Hoppner, F.; Klawonn, F.; Kruse, R.; Runkler, T. Fuzzy Cluster Analysis; J. Wiley & Sons: Chichester, England, 1999.

- Bloch, I.; Hunter, A.; Appriou, A.; Ayoun, A.; Benferhat, S.; Besnard, P.; Cholvy, L.; Cooke, R.; Cuppens, F.; Dubois, D. Fusion: General concepts and characteristics. Int. J. Intell. Syst. 2001, 16, 1107–1134.

- Oussalah, M. Study of some algebraical properties of adaptive combination rules. Fuzzy Set. Syst. 2000, 114, 391–409.

- Renaud, J.; Levrat, E.; Fonteix, C. Weights determination of OWA operators by parametric identification. Math. Comput. Simulat. 2008, 77, 499–511.

- Bloch, I. Information combination operators for data fusion: A comparative review with classification. IEEE Trans. Syst. Man Cybern. A: Syst. Humans 1996, 26, 52–67.

- Bloch, I. Information combination operators for data fusion: A comparative review with classification. Proceedings of SPIE - The International Society for Optical Engineering, Bergen, Norway, 13–15 June 1994.

- Chanussot, J.; Mauris, G.; Lambert, P. Fuzzy fusion techniques for linear features detection in multitemporal SAR images. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1292–1305.

- Dubois, D.; Prade, H. Combination of information in the framework of possibility theory. In Data Fusion in Robotics and Machine Intelligence; Abidi, M.A., Gonzalez, R.C., Eds.; Academic Press: Boston, USA, 1992; pp. 481–505.

- Guernier, V. Combiner analyse spatiale et épidémiologie pour l'aide à la décision dans la lutte contre la tuberculose en Guyane française; Université d'Orléans: Montpellier, France, 2006.

- Faure, J.-F.; Fotsing, J.-M.; Baghdadi, N. Couplage de données optiques et radar pour la caractérisation de paysages urbains amazoniens: application à l'île de Cayenne. Géomatique Int. 2005, 14, 379–401.

| | Bucharest (Romania) * | | Cayenne (French Guiana) * | | Cayenne (French Guiana) † | |
|---|---|---|---|---|---|---|
| Source | Kalideos database | | Espace unit IRD database | | Espace unit IRD database | |
| Sensor type | Radarsat-1 | SPOT-4 (B2) | Radarsat-1 | SPOT-4 (B2) | ENVISAT ASAR | SPOT-5 (B2) |
| Date of acquisition | 03/05/2001 | 03/05/2001 | 01/05/2001 | 02/07/2001 | 29/03/2006 | 30/08/2006 |
| Incidence angle (°) | 16.7 | | 39 | | 36.8 | |
| Pixel size (m) | 12.5 × 12.5 | 20 × 20 | 12.5 × 12.5 | 20 × 20 | 12.5 × 12.5 | 10 × 10 |
| Coregistration RMSE (pixels) | 0.27 | | 1.12 | | 1.31 | |

| | Image size | CPU Texture analysis | CPU FKM | CPU Information fusion |
|---|---|---|---|---|
| Bucharest (2001) | 1,600 × 1,000 | 3 min 20 s | 4 min 12 s | 2 min 10 s |
| Cayenne (2001) | 600 × 600 | 1 min 02 s | 2 min 11 s | 1 min 45 s |
| Cayenne (2006) | 600 × 600 | 4 min 15 s | 5 min 15 s | 3 min 51 s |

| BUCHAREST SITE | SS approach: Radarsat-1 | SS approach: SPOT-4 (B2) | SAR/optical information fusion | Reference area |
|---|---|---|---|---|
| Extent of urban areas (km²) | 30.4 | 74.3 | 44.04 | 46.5 |
| Deviation from reference area (km²) | −16.1 | +13.3 | −2.46 | NA |

| CAYENNE SITE (2001) | SS approach: Radarsat-1 | SS approach: SPOT-4 (B2) | SAR/optical information fusion | Reference area |
|---|---|---|---|---|
| Extent of urban areas (km²) | 17.8 | 30.2 | 20.3 | 24.3 |
| Deviation from reference area (km²) | −6.5 | +5.9 | −4 | NA |

| CAYENNE SITE (2006) | SS approach: ENVISAT ASAR | SS approach: SPOT-5 (B2) | SAR/optical information fusion | Reference area |
|---|---|---|---|---|
| Extent of urban areas (km²) | 20.3 | 34.6 | 23.5 | 24.3 |
| Deviation from reference area (km²) | −4 | +10.3 | −0.8 | NA |

© 2008 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Corbane, C.; Faure, J.-F.; Baghdadi, N.; Villeneuve, N.; Petit, M. Rapid Urban Mapping Using SAR/Optical Imagery Synergy. Sensors 2008, 8, 7125-7143. https://doi.org/10.3390/s8117125
