Robust Object Detection in Adverse Weather Conditions: ECL-YOLOv11 for Automotive Vision Systems

Abstract

1. Introduction

2. Related Work

2.1. Traditional CNN-Based Object Detection Methods

2.2. Transformer-Based Detection Models

2.3. Detection and Enhancement Methods for Adverse Weather Conditions

2.4. Contribution of This Paper

3. Method

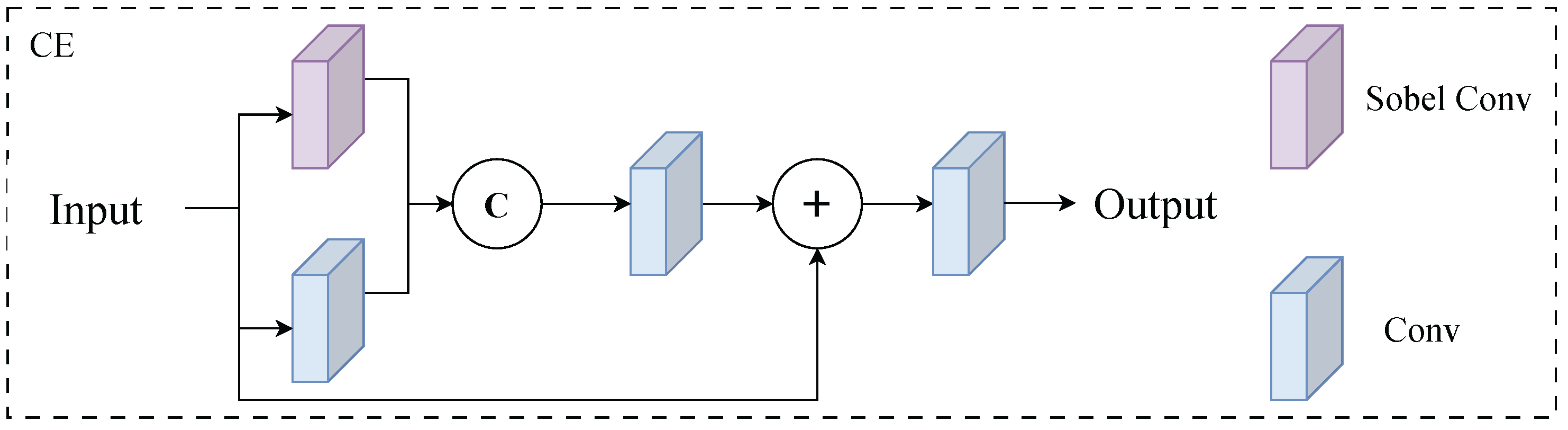

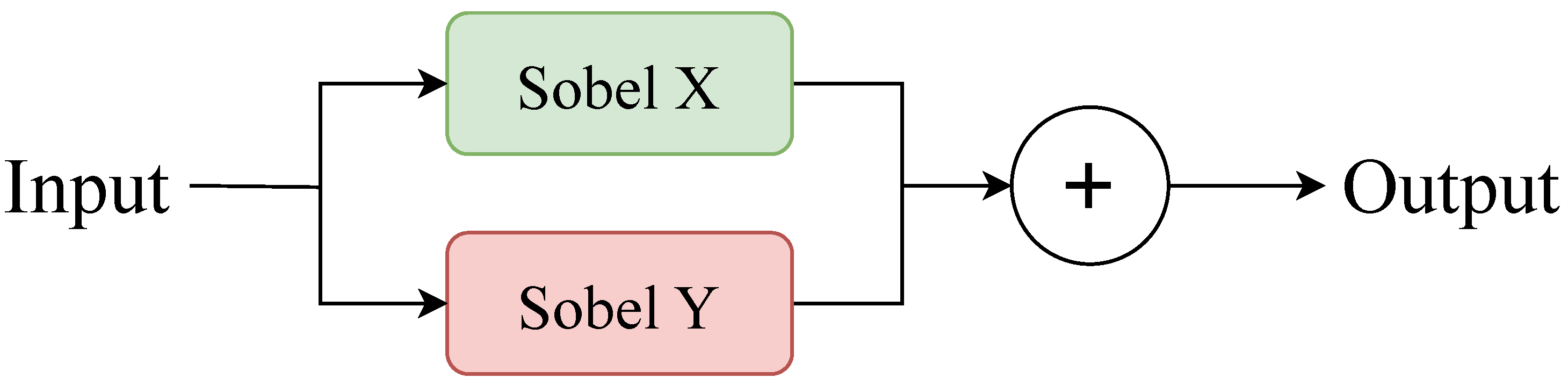

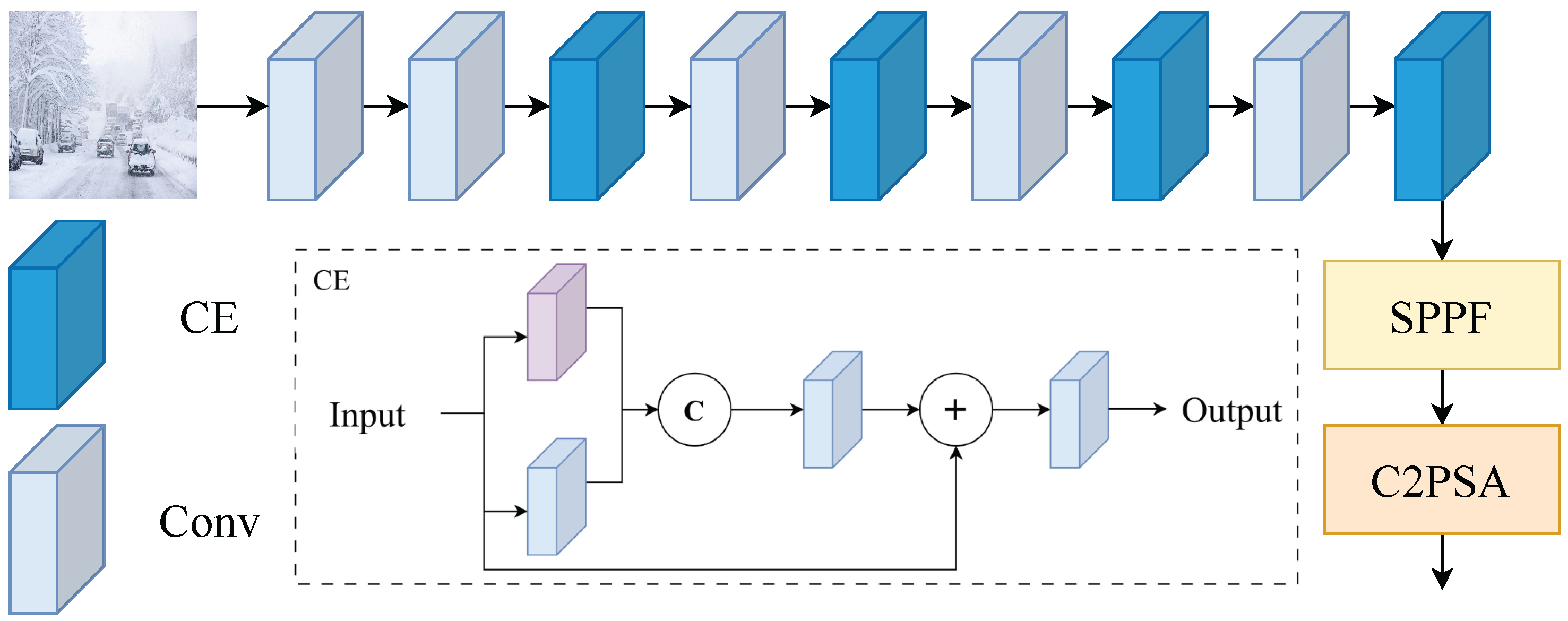

3.1. Edge Enhancement Convolution Module (CE)

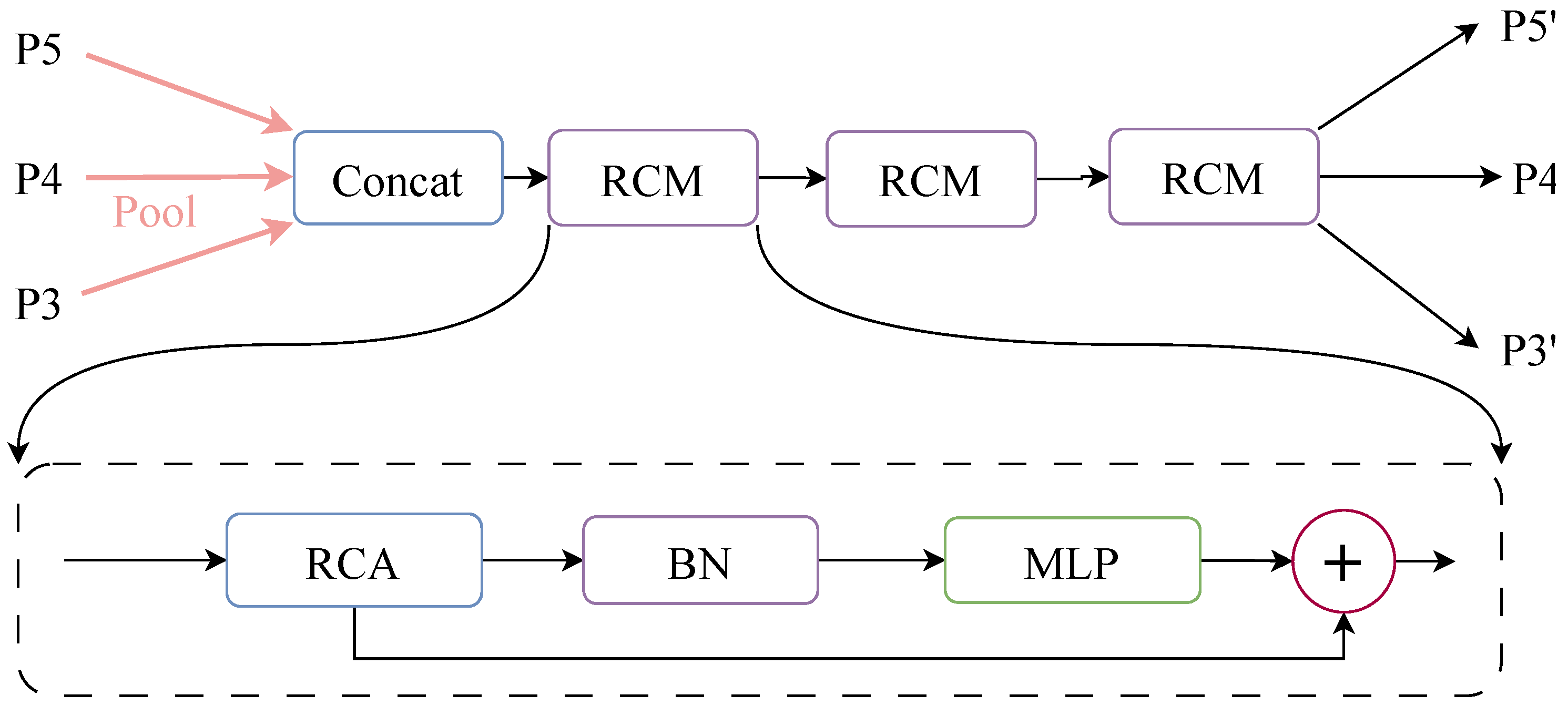

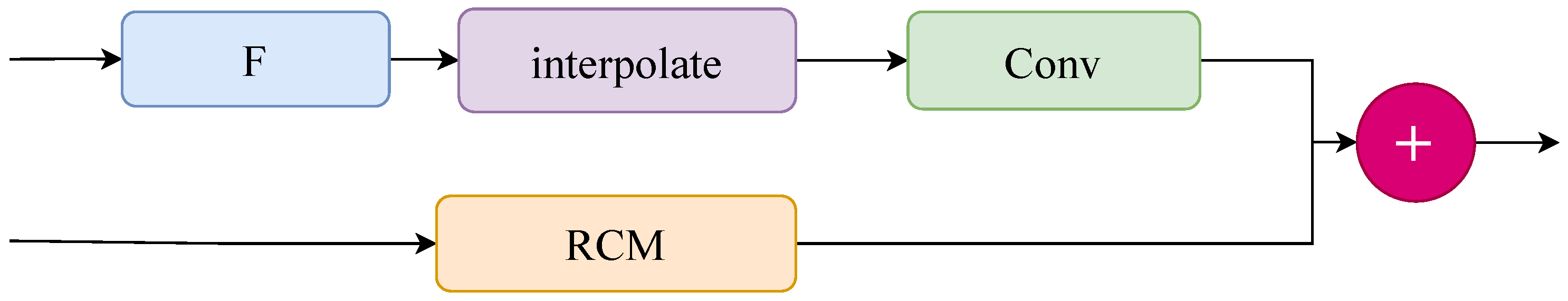

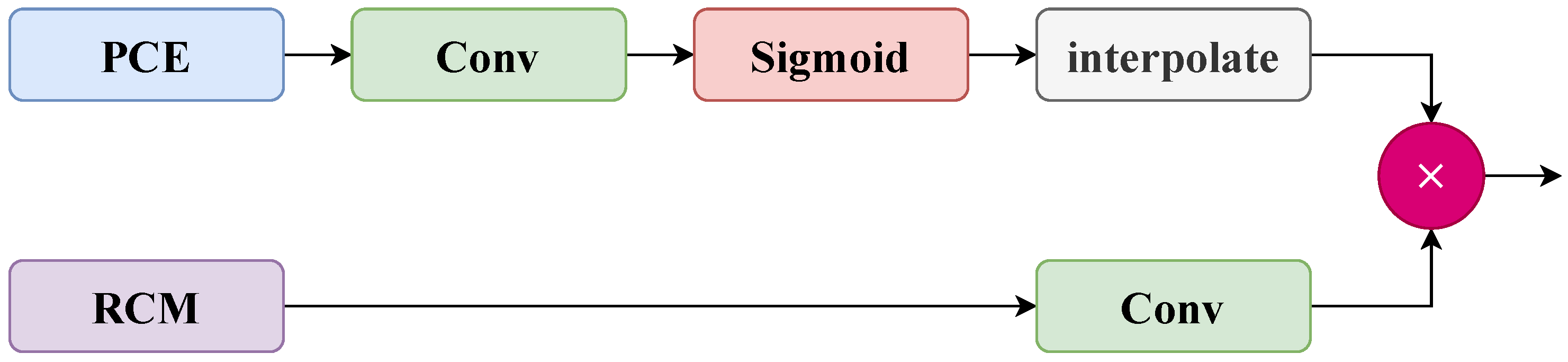

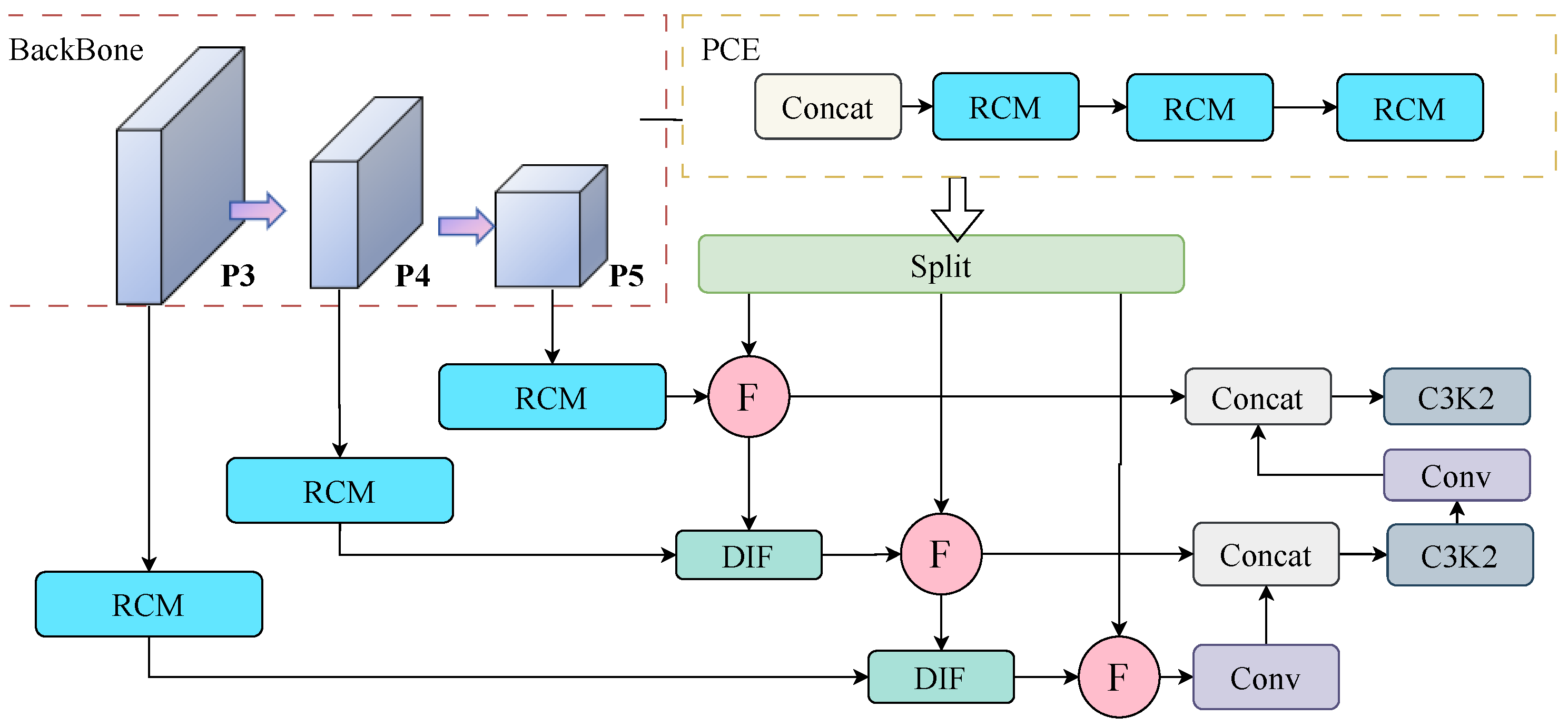

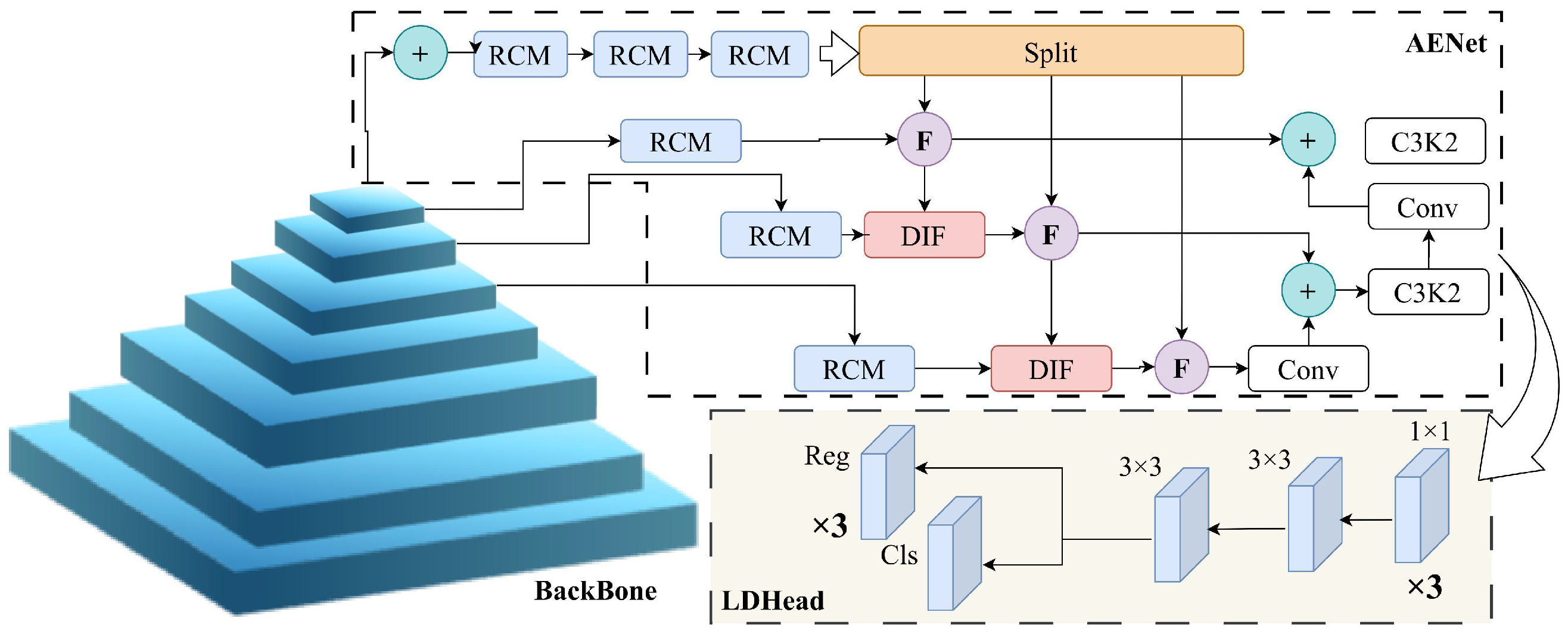

3.2. Context-Guided Multi-Scale Fusion Network (AENet)

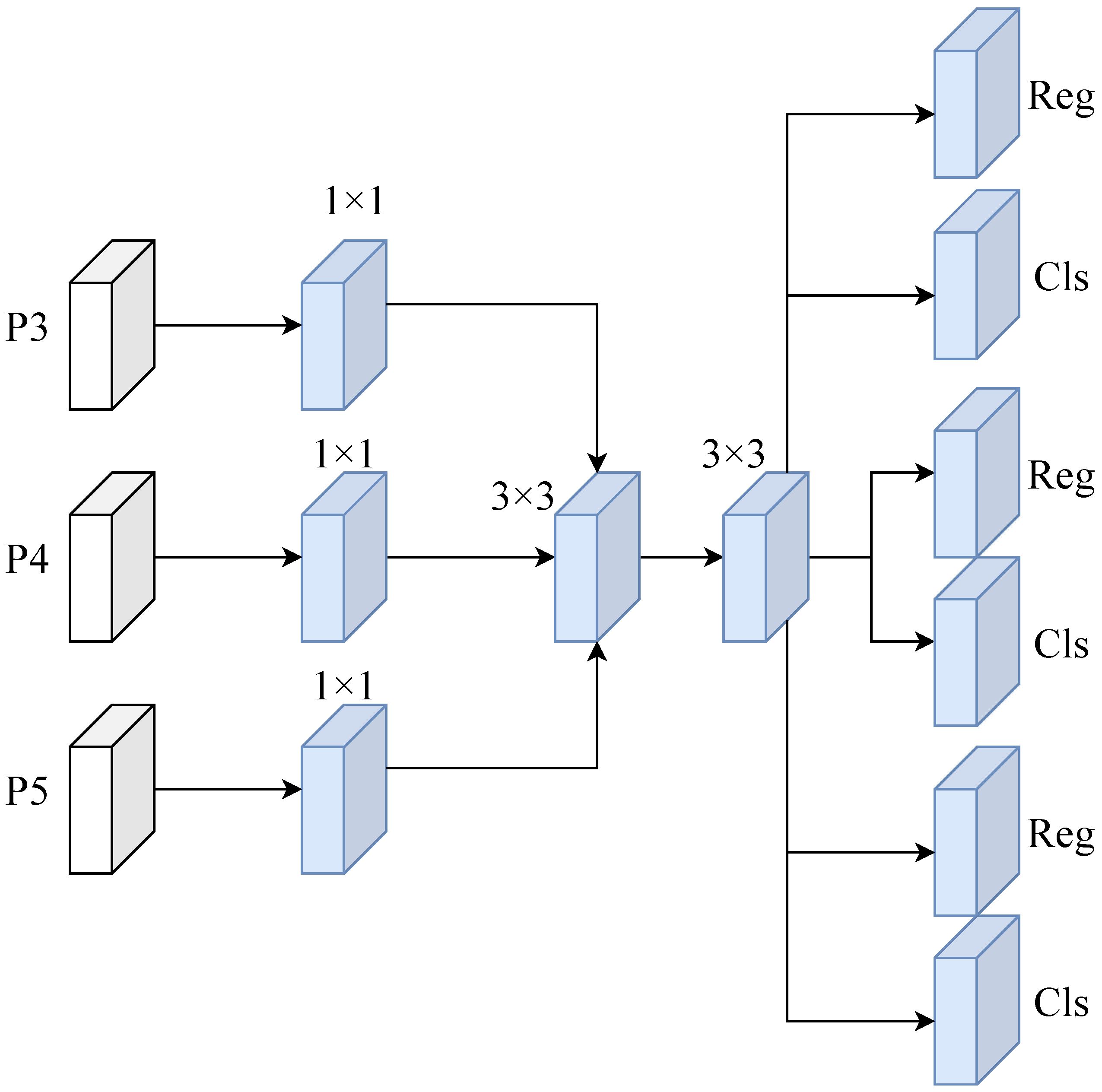

3.3. Lightweight Shared Convolution Detection Head (LDHead)

3.4. Module Integration and Overall Architecture

4. Experiments

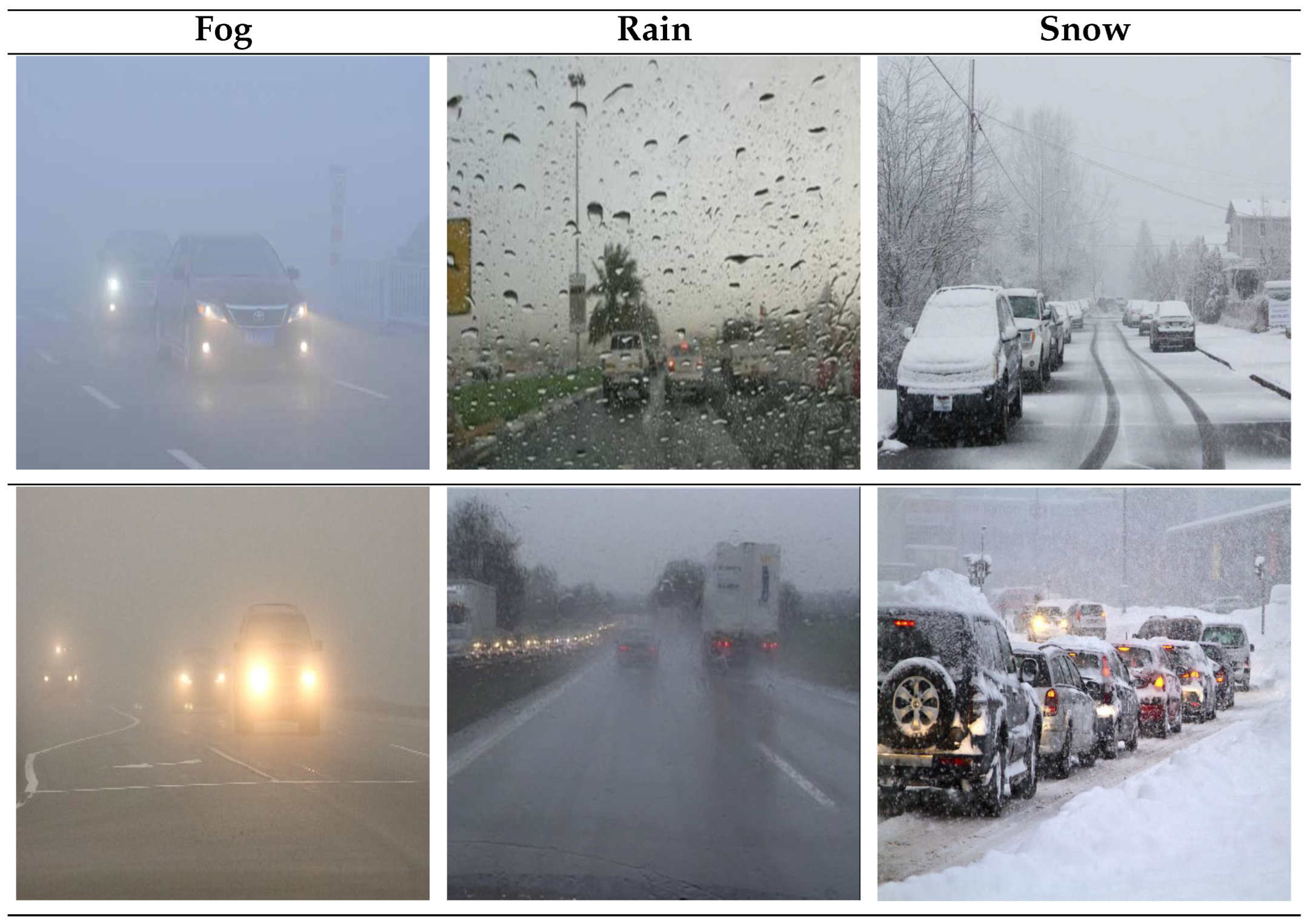

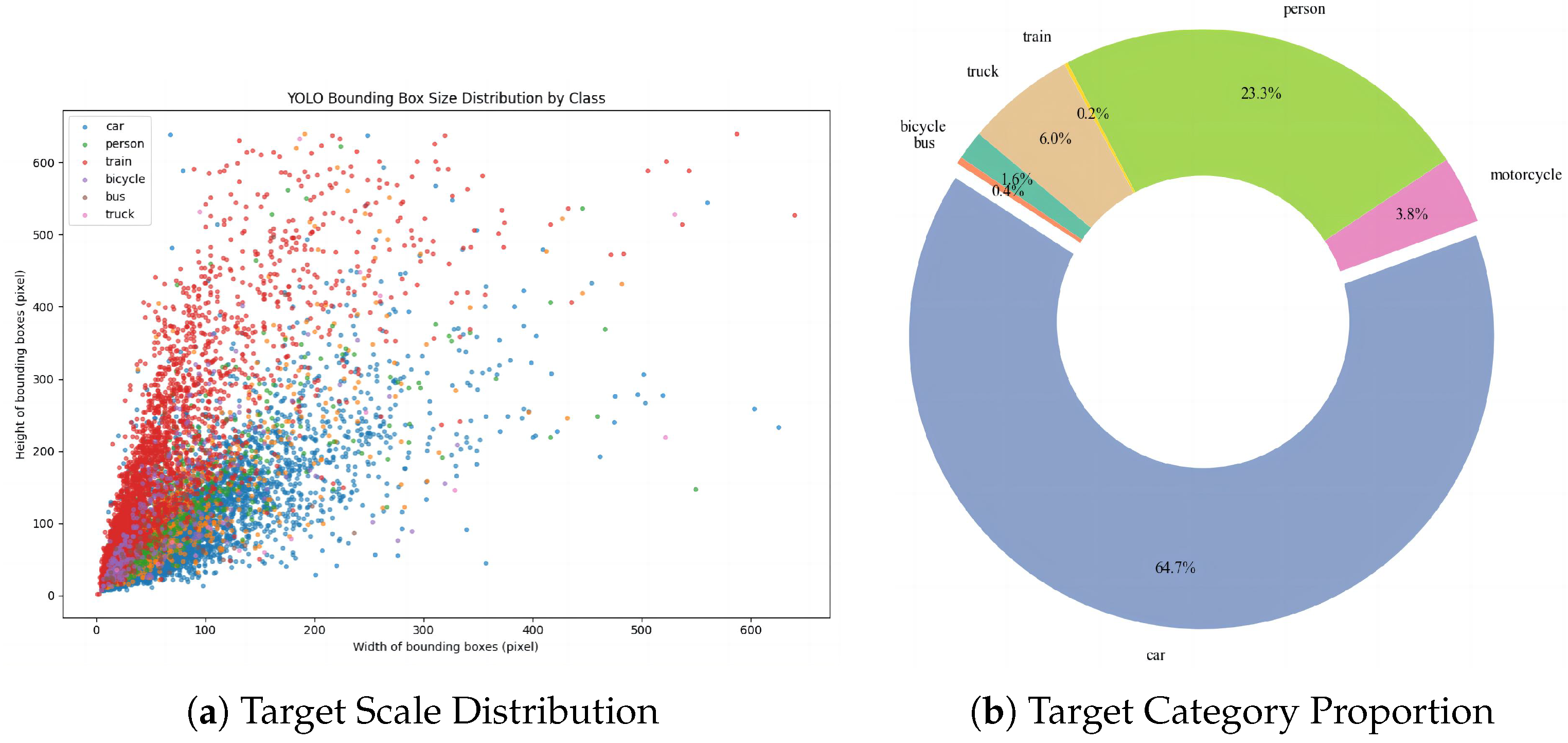

4.1. Dataset

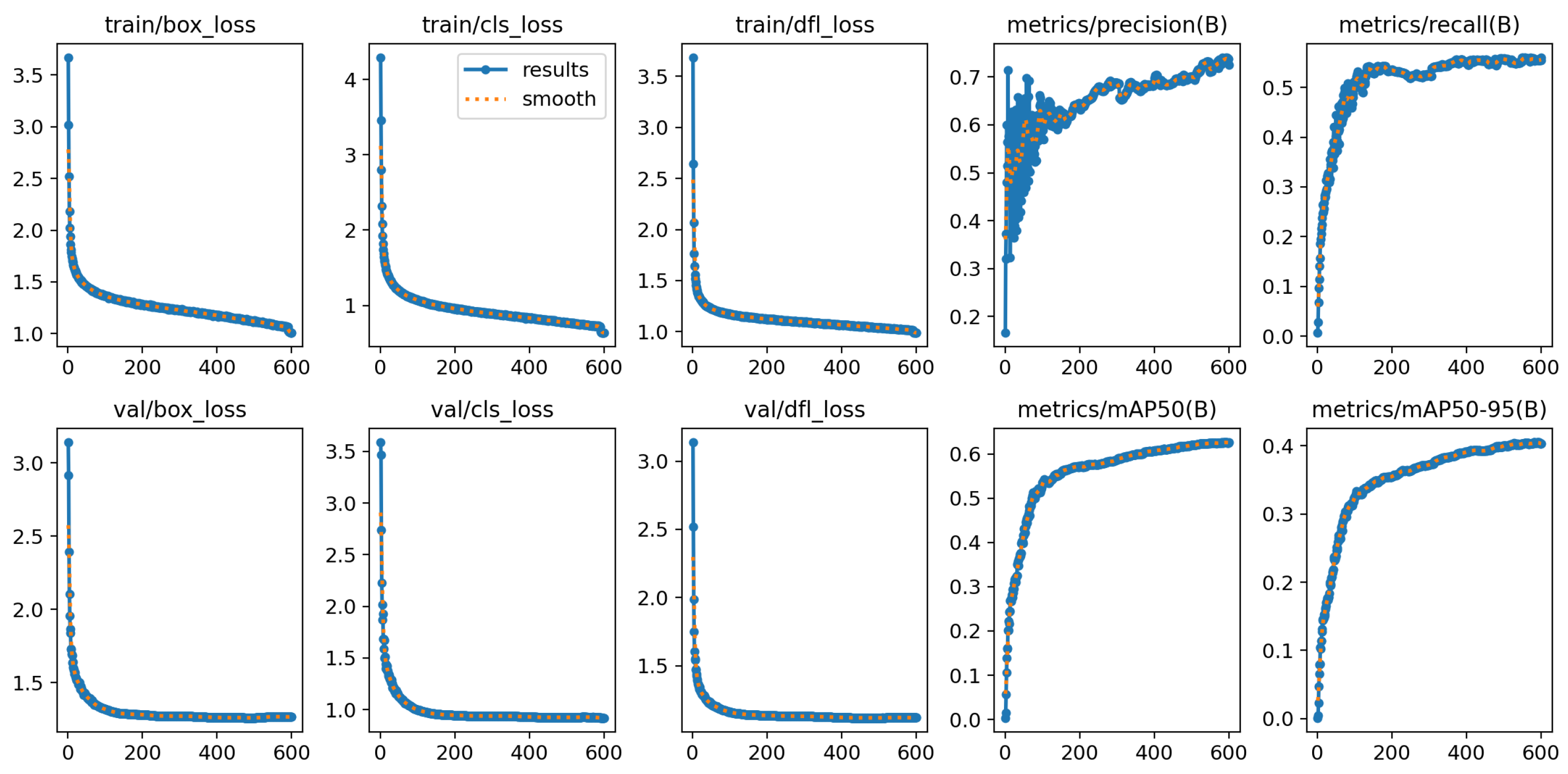

4.2. Experimental Setup and Training

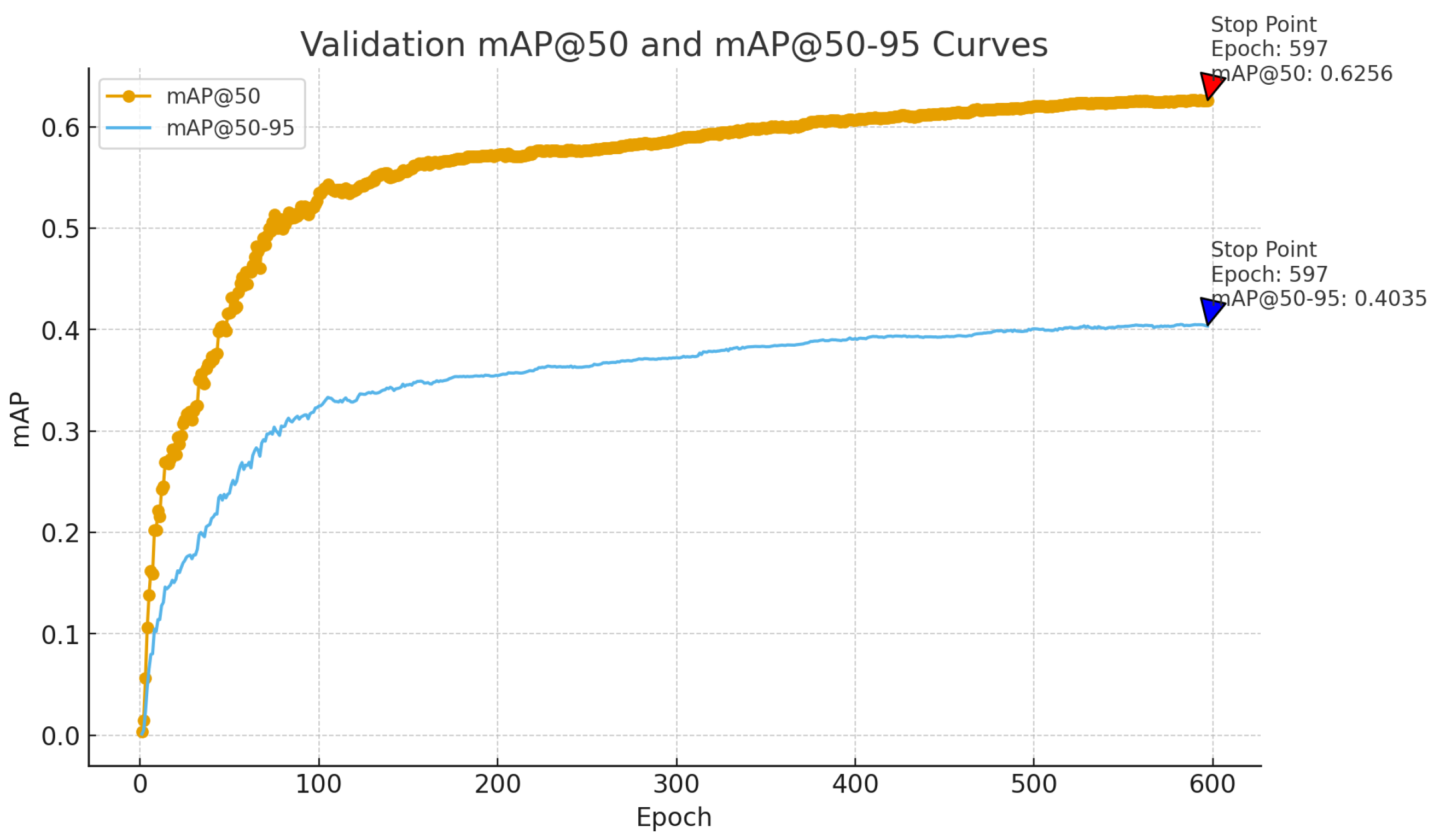

4.3. Detection Performance Evaluation

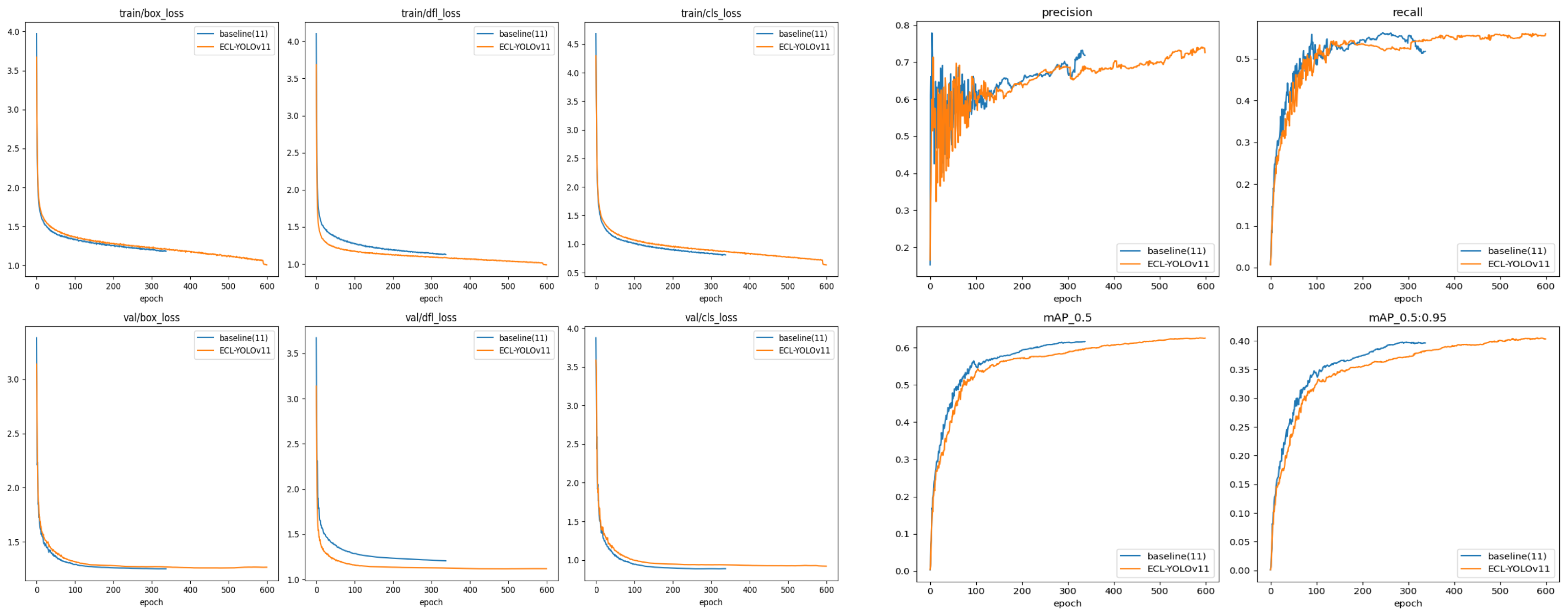

4.4. Ablation Study

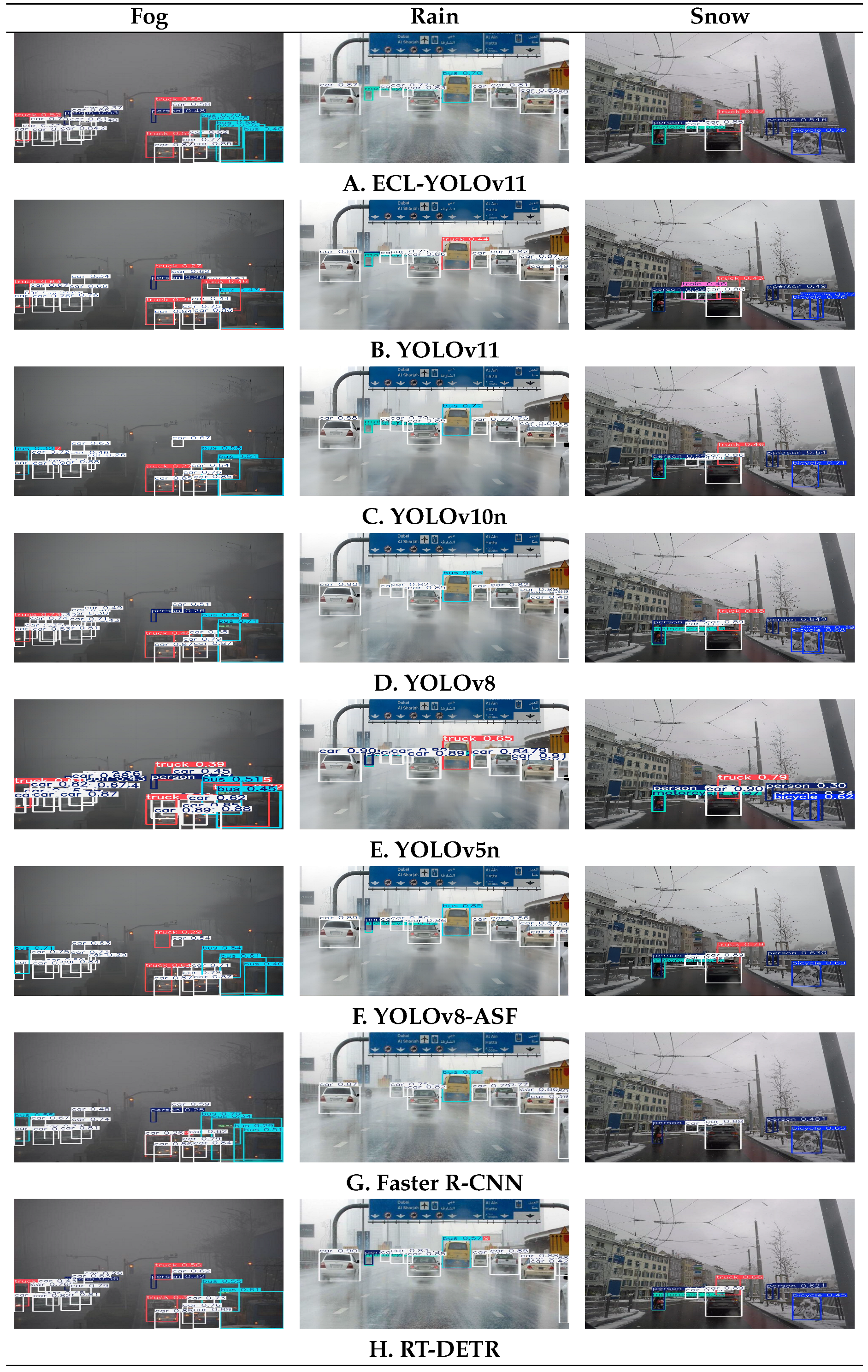

5. Comparison Experiments

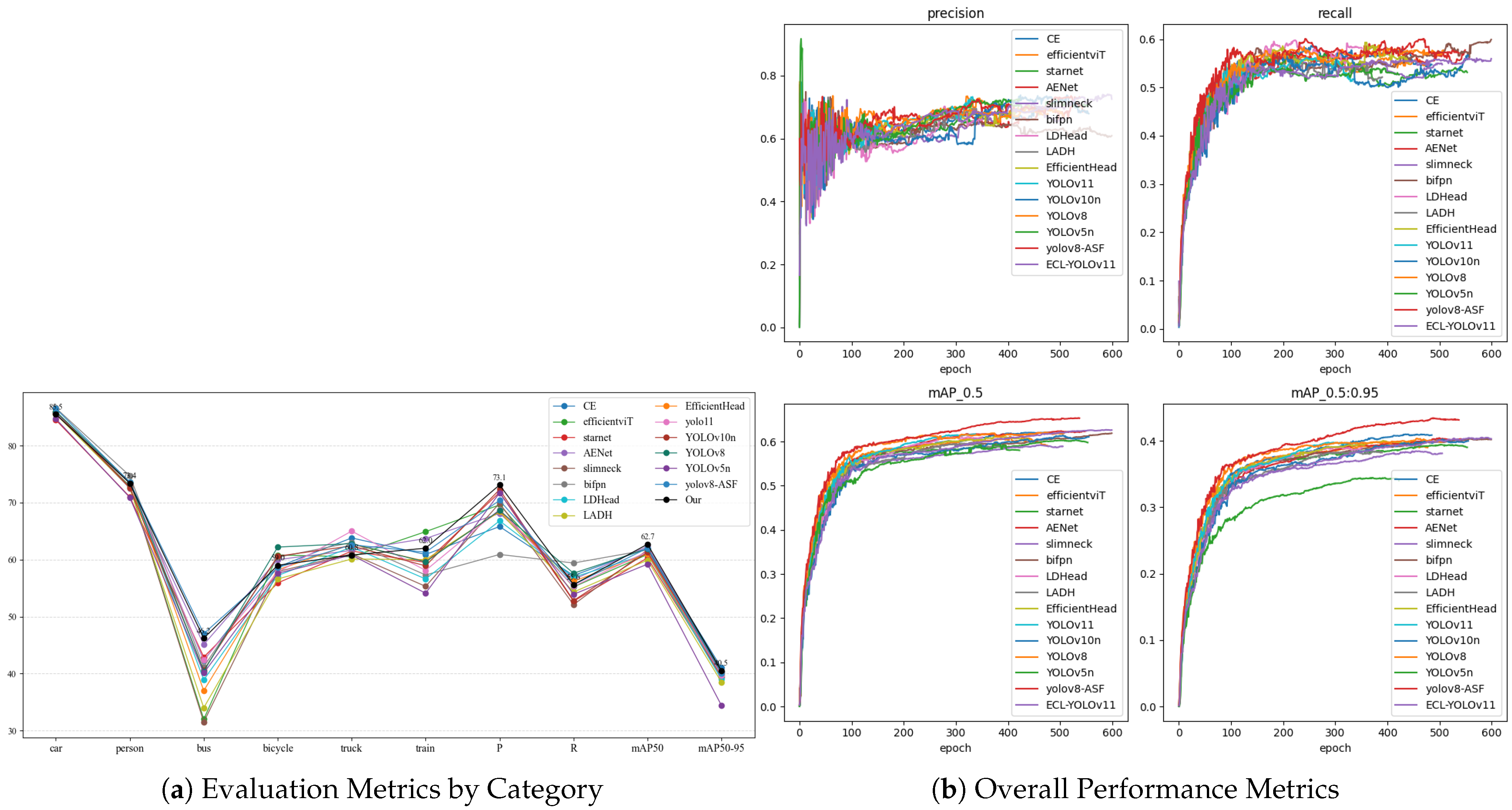

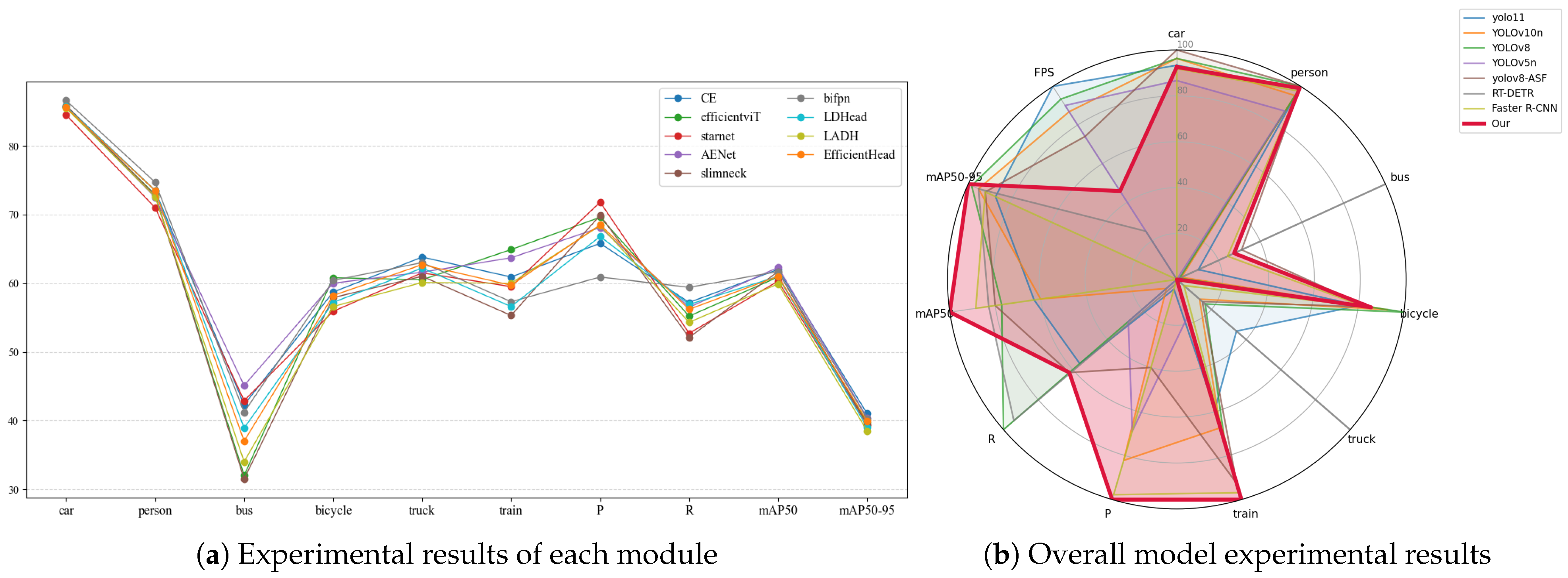

5.1. Comparison of Different Modules and Models

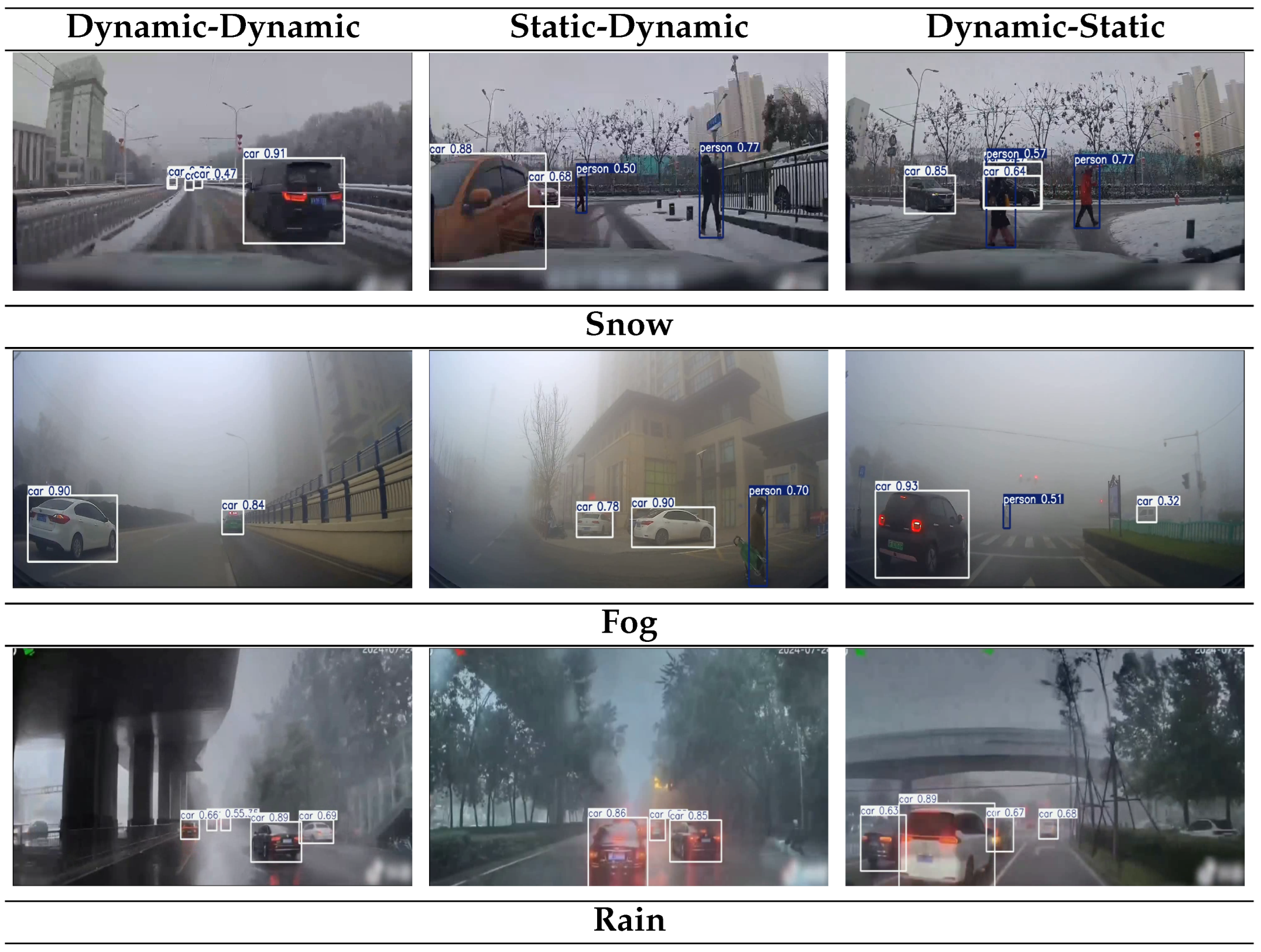

5.2. Comparison Experiments Considering Adverse Weather Conditions and Relative Motion States

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Configuration | Detailed Description |
|---|---|
| CPU | Intel Core i5-12400F |
| GPU | NVIDIA GeForce RTX 3060 (12 GB VRAM) |
| Operating System | Windows 11 |
| Software Environment | Python 3.10.15, PyTorch 2.5.0, CUDA 12.1 |
| Framework Version | Ultralytics 8.3.9 |
| Image Size | 640 × 640 |
| Training Period | 600 |
| Batch Size | 16 |
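
The settings in the configuration table map onto a training call fairly directly. The following is a minimal sketch rather than the authors' script. It assumes that the "Training Period" row counts epochs, that the baseline is the nano YOLO11 checkpoint (`yolo11n.pt`, a guess that is not stated in the source), and that the dataset is described by a hypothetical file named `adverse_weather.yaml`.

```python
"""Training settings from the configuration table, gathered as keyword arguments."""

# Values taken from the configuration table. "epochs" assumes that the
# "Training Period" row counts epochs, which the source does not state.
TRAIN_KWARGS = {
    "imgsz": 640,   # Image Size: 640 x 640
    "epochs": 600,  # Training Period: 600 (assumed to be epochs)
    "batch": 16,    # Batch Size: 16
    "device": 0,    # first CUDA device (a single RTX 3060 in the table)
}


def train(data_yaml: str = "adverse_weather.yaml", weights: str = "yolo11n.pt"):
    """Launch training with Ultralytics.

    Both defaults are hypothetical placeholders: neither the dataset file
    name nor the choice of the nano checkpoint is given in the source.
    """
    # Imported lazily so the settings can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_KWARGS)


if __name__ == "__main__":
    print(TRAIN_KWARGS)
```

Calling `train()` requires the `ultralytics` package and a valid dataset file; the dictionary on its own only records the reported hyperparameters.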

| Model | P | R | mAP@50 | mAP@50–95 |
|---|---|---|---|---|
| YOLOv11 | 0.688 | 0.553 | 0.614 | 0.397 |
| ECL-YOLOv11 | 0.731 | 0.556 | 0.625 | 0.405 |
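
Because precision rises noticeably while recall barely moves, a single combined figure can help when reading the two rows. The sketch below derives the F1 score (the harmonic mean of P and R) from the reported values. F1 is not reported in the source; it is computed here purely as an illustration.

```python
# Precision and recall copied from the two rows of the table.
REPORTED = {
    "YOLOv11": {"P": 0.688, "R": 0.553},
    "ECL-YOLOv11": {"P": 0.731, "R": 0.556},
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Derived values (not reported in the source).
F1 = {name: f1_score(m["P"], m["R"]) for name, m in REPORTED.items()}

if __name__ == "__main__":
    for name, value in F1.items():
        print(f"{name}: F1 = {value:.3f}")
```

Under these inputs the derived F1 is roughly 0.613 for YOLOv11 and 0.632 for ECL-YOLOv11.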

(a) Category-wise Evaluation Metrics.

| Model | Car | Person | Bus | Bicycle | Truck | Train |
|---|---|---|---|---|---|---|
| Baseline (YOLOv11) | 85.6 | 73.4 | 42.4 | 57.8 | 60.5 | 60.9 |
| CE | 85.8 | 73.5 | 42.3 | 58.7 | 63.8 | 60.9 |
| AENet | 85.7 | 72.5 | 45.1 | 60.0 | 63.6 | 63.7 |
| LDHead | 85.7 | 73.5 | 38.9 | 57.2 | 62.2 | 56.9 |
| CE + AENet | 85.3 | 72.6 | 42.8 | 59.9 | 62.0 | 60.5 |
| AENet + LDHead | 85.1 | 72.7 | 42.1 | 58.6 | 60.4 | 57.9 |
| CE + LDHead | 85.8 | 73.0 | 36.2 | 56.2 | 60.2 | 59.5 |
| Ours | 85.5 | 73.4 | 46.2 | 59.0 | 60.8 | 62.0 |

(b) Overall Performance Metrics.

| Model | P | R | mAP50 | mAP50–95 | Para. | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| Baseline (YOLOv11) | 68.8 | 55.3 | 61.4 | 39.7 | 2,583,517 | 6.3 | 406.5 |
| CE | 65.8 | 57.2 | 62.0 | 41.0 | 2,530,501 | 6.4 | 337.7 |
| AENet | 68.1 | 56.5 | 62.3 | 40.4 | 3,294,861 | 8.6 | 249.2 |
| LDHead | 66.8 | 56.9 | 60.9 | 39.0 | 2,420,882 | 5.6 | 451.6 |
| CE + AENet | 69.8 | 55.6 | 62.7 | 40.2 | 3,241,845 | 8.7 | 213.5 |
| AENet + LDHead | 67.0 | 57.1 | 61.1 | 39.9 | 2,934,413 | 7.2 | 142.5 |
| CE + LDHead | 65.4 | 57.2 | 60.5 | 39.2 | 2,263,029 | 5.1 | 209.9 |
| Ours | 73.1 | 55.6 | 62.7 | 40.5 | 3,001,194 | 7.5 | 237.5 |
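
The overall ablation metrics are easier to weigh when each configuration is expressed relative to the baseline. The sketch below does that arithmetic for parameters, GFLOPs, FPS, and mAP50. The helper names are hypothetical and the numbers are copied from part (b) of the table; nothing here is taken from the authors' code.

```python
# (parameters, GFLOPs, FPS, mAP50) copied from part (b) of the ablation table.
ABLATION = {
    "Baseline": (2_583_517, 6.3, 406.5, 61.4),
    "CE": (2_530_501, 6.4, 337.7, 62.0),
    "AENet": (3_294_861, 8.6, 249.2, 62.3),
    "LDHead": (2_420_882, 5.6, 451.6, 60.9),
    "CE + AENet": (3_241_845, 8.7, 213.5, 62.7),
    "AENet + LDHead": (2_934_413, 7.2, 142.5, 61.1),
    "CE + LDHead": (2_263_029, 5.1, 209.9, 60.5),
    "ECL-YOLOv11": (3_001_194, 7.5, 237.5, 62.7),  # proposed model (last row)
}


def percent_change(value: float, reference: float) -> float:
    """Relative change of value with respect to reference, in percent."""
    return 100.0 * (value - reference) / reference


def compare_to_baseline(name: str, baseline: str = "Baseline") -> dict:
    """Cost and accuracy of one configuration relative to the baseline row."""
    params, gflops, fps, map50 = ABLATION[name]
    b_params, b_gflops, b_fps, b_map50 = ABLATION[baseline]
    return {
        "params_pct": round(percent_change(params, b_params), 2),
        "gflops_pct": round(percent_change(gflops, b_gflops), 2),
        "fps_pct": round(percent_change(fps, b_fps), 2),
        "map50_points": round(map50 - b_map50, 2),  # absolute difference
    }


if __name__ == "__main__":
    for config in ABLATION:
        print(config, compare_to_baseline(config))
```

For the full model this gives about 16% more parameters, about 19% more GFLOPs, and about 42% lower FPS than the baseline, in exchange for 1.3 points of mAP50.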

| Component | Model | Car | Person | Bus | Bicycle | Truck | Train | P | R | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Backbone | CE | 85.8 | 73.5 | 42.3 | 58.7 | 63.8 | 60.9 | 65.8 | 57.2 | 62.0 | 41.0 |
| Backbone | EfficientViT | 85.6 | 72.9 | 32.0 | 60.8 | 60.5 | 64.9 | 69.6 | 55.2 | 60.9 | 39.7 |
| Backbone | StarNet | 84.5 | 71.0 | 42.9 | 55.9 | 61.5 | 59.5 | 71.8 | 52.7 | 60.3 | 39.4 |
| Neck | AENet | 85.7 | 72.5 | 45.1 | 60.0 | 61.6 | 63.7 | 68.1 | 56.5 | 62.3 | 40.4 |
| Neck | Slim-Neck | 85.9 | 72.8 | 31.5 | 57.9 | 61.0 | 55.3 | 69.9 | 52.1 | 59.1 | 38.4 |
| Neck | BiFPN | 86.6 | 74.7 | 41.2 | 60.4 | 63.0 | 57.3 | 69.0 | 59.4 | 61.7 | 40.3 |
| Head | LDHead | 85.7 | 73.5 | 38.9 | 57.2 | 62.2 | 56.9 | 66.8 | 56.9 | 61.0 | 39.0 |
| Head | LADH | 85.5 | 72.6 | 34.0 | 56.6 | 60.1 | 60.0 | 68.4 | 54.3 | 59.9 | 38.5 |
| Head | EfficientHead | 85.6 | 73.5 | 37.0 | 58.2 | 62.7 | 59.5 | 68.6 | 56.2 | 61.0 | 39.9 |

| Model | Car | Person | Bus | Bicycle | Truck | Train | P | R | mAP50 | mAP50-95 | FPS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11 | 85.6 | 73.4 | 42.4 | 57.8 | 65.0 | 58.1 | 68.8 | 55.3 | 61.4 | 39.7 | 434.4 |
| YOLOv10n | 86.0 | 72.6 | 40.6 | 60.6 | 62.4 | 58.9 | 72.3 | 52.8 | 61.3 | 40.2 | 385.0 |
| YOLOv8 | 86.0 | 73.6 | 40.4 | 62.2 | 62.8 | 59.6 | 68.6 | 57.6 | 61.9 | 40.4 | 409.7 |
| YOLOv5n | 84.7 | 70.9 | 40.1 | 57.6 | 60.8 | 54.1 | 71.7 | 53.9 | 59.2 | 39.4 | 397.2 |
| YOLOv8-ASF | 86.5 | 73.6 | 47.0 | 58.6 | 62.6 | 61.3 | 70.4 | 55.6 | 62.0 | 40.5 | 336.7 |
| RT-DETR | 73.0 | 52.5 | 62.3 | 40.0 | 73.0 | 52.5 | 68.7 | 57.2 | 62.1 | 40.2 | 152.0 |
| Faster R-CNN | 85.4 | 73.1 | 45.5 | 58.3 | 61.3 | 61.7 | 73.0 | 52.5 | 62.3 | 40.0 | 58.0 |
| Ours | 85.5 | 73.4 | 46.2 | 59.0 | 60.8 | 62.0 | 73.1 | 55.6 | 62.7 | 40.5 | 230.3 |
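
One way to read the speed and accuracy columns of the comparison together is to ask which models are not beaten on both FPS and mAP50 by any other model (the Pareto front). The sketch below is an illustrative analysis of the tabulated values only, not a procedure described in the source; the function name is hypothetical.

```python
# (FPS, mAP50) copied from the model comparison table.
RESULTS = {
    "YOLOv11": (434.4, 61.4),
    "YOLOv10n": (385.0, 61.3),
    "YOLOv8": (409.7, 61.9),
    "YOLOv5n": (397.2, 59.2),
    "YOLOv8-ASF": (336.7, 62.0),
    "RT-DETR": (152.0, 62.1),
    "Faster R-CNN": (58.0, 62.3),
    "ECL-YOLOv11": (230.3, 62.7),  # proposed model (last row)
}


def pareto_front(results: dict) -> list:
    """Names of models that no other model matches or beats on both axes.

    A model is dominated if another model is at least as good on FPS and
    mAP50 and strictly better on at least one of them.
    """
    front = []
    for name, (fps, acc) in results.items():
        dominated = any(
            o_fps >= fps and o_acc >= acc and (o_fps > fps or o_acc > acc)
            for other, (o_fps, o_acc) in results.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


if __name__ == "__main__":
    print(pareto_front(RESULTS))
```

With these numbers the front consists of YOLOv11, YOLOv8, YOLOv8-ASF, and the proposed model; RT-DETR and Faster R-CNN are both slower and less accurate (by mAP50) than the proposed model.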

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Liu, Z.; Zhang, J.; Zhang, X.; Song, H. Robust Object Detection in Adverse Weather Conditions: ECL-YOLOv11 for Automotive Vision Systems. Sensors 2026, 26, 304. https://doi.org/10.3390/s26010304