SpaceNet: A Multimodal Fusion Architecture for Sound Source Localization in Disaster Response

Abstract

1. Introduction

2. Background

2.1. SSL Applications in Disaster Response

2.2. Deep-Learning Approaches Exploiting Spatial Information in SSL

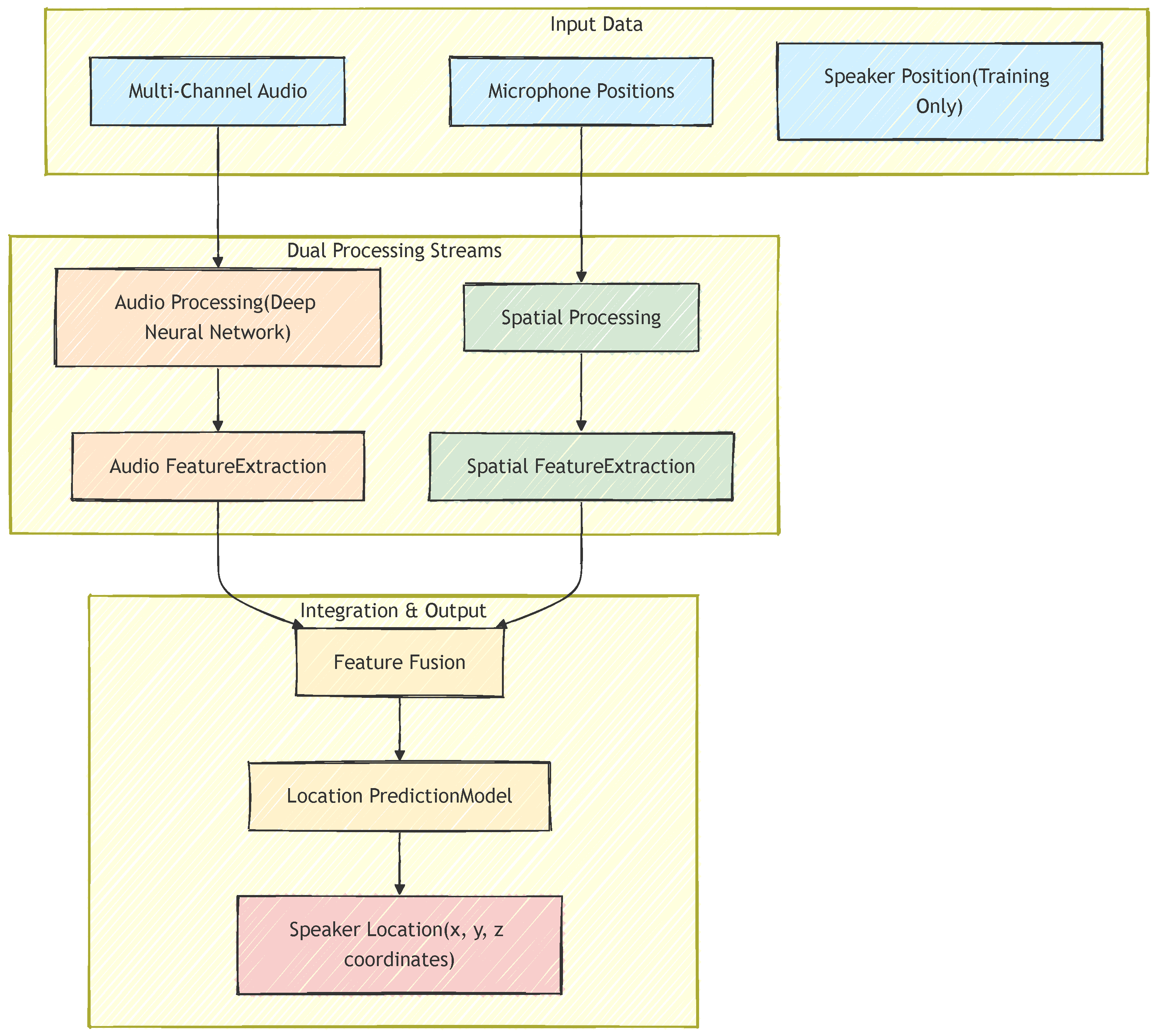

3. SpaceNet

3.1. The SpaceNet Paradigm

3.1.1. Explicit Spatial Decomposition

3.1.2. Principled Feature Normalization

3.1.3. Physics-Informed Fusion

3.2. Architectural Design Principles

- Modality Specialization: Each modality (audio and spatial) requires tailored processing that respects its unique structure. Audio features encode temporal-spectral patterns that benefit from hierarchical convolutional processing, while spatial features represent geometric relationships that require distinct mathematical transformations.

- Explicit Spatial Decomposition: Microphone geometry contains heterogeneous types of information (distances, azimuth angles, elevation angles) that should be extracted and processed separately before fusion. This decomposition enables the network to learn specialized representations for different geometric aspects.

- Learnable Fusion Weights: The relative contribution of modalities and spatial components varies across environments and should be adaptively learned, rather than predetermined.
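The learnable-weight principle can be illustrated with a minimal sketch. It assumes the three spatial component embeddings have equal length and are combined by a weighted element-wise sum followed by a tanh squashing. The function name `fuse_spatial` and this particular combination rule are hypothetical, chosen for illustration only, and are not taken from the SpaceNet implementation.

```python
import math


def fuse_spatial(dist_emb, az_emb, el_emb, weights):
    """Weighted element-wise sum of three equally sized embeddings, squashed by tanh.

    `weights` holds one scalar per component (distance, azimuth, elevation).
    In a trained model these scalars would be updated by gradient descent;
    here they are plain floats (hypothetical illustration).
    """
    w_d, w_a, w_e = weights
    return [
        math.tanh(w_d * d + w_a * a + w_e * e)
        for d, a, e in zip(dist_emb, az_emb, el_emb)
    ]


# Setting a weight to zero removes that component's contribution.
fused = fuse_spatial([0.5, 0.1], [0.2, 0.3], [0.0, 0.4], weights=(1.0, 0.0, 0.0))
```

In a framework such as PyTorch, each scalar would typically be registered as a trainable parameter so that its value adapts to the recording environment during training.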

3.2.1. Audio-Processing Branch

3.2.2. Spatial Processing Branch

- Distances: encode array size and microphone spacing,

- Azimuths: capture horizontal angular structure,

- Elevations: capture vertical configuration.
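The three components listed above can be computed from raw microphone positions as in the following sketch. It assumes positions are given as Cartesian `(x, y, z)` tuples in metres, that the origin is moved to the array centroid, and that elevation is measured from the horizontal plane. The function name `decompose_geometry` and these conventions are assumptions for illustration, not the authors' code.

```python
import math


def decompose_geometry(mics):
    """Split microphone positions into distances, azimuths, and elevations.

    `mics` is a list of (x, y, z) tuples. All quantities are expressed
    relative to the centroid of the array (assumed convention).
    """
    n = len(mics)
    cx = sum(m[0] for m in mics) / n
    cy = sum(m[1] for m in mics) / n
    cz = sum(m[2] for m in mics) / n

    distances, azimuths, elevations = [], [], []
    for x, y, z in mics:
        dx, dy, dz = x - cx, y - cy, z - cz
        distances.append(math.sqrt(dx * dx + dy * dy + dz * dz))
        azimuths.append(math.atan2(dy, dx))                   # horizontal angle
        elevations.append(math.atan2(dz, math.hypot(dx, dy)))  # angle above the xy-plane
    return distances, azimuths, elevations


# Four microphones on a planar cross, 1 m from the centre.
dist, az, el = decompose_geometry([(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0)])
```

For a planar array such as this one, every elevation is zero, so only the distance and azimuth components carry information.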

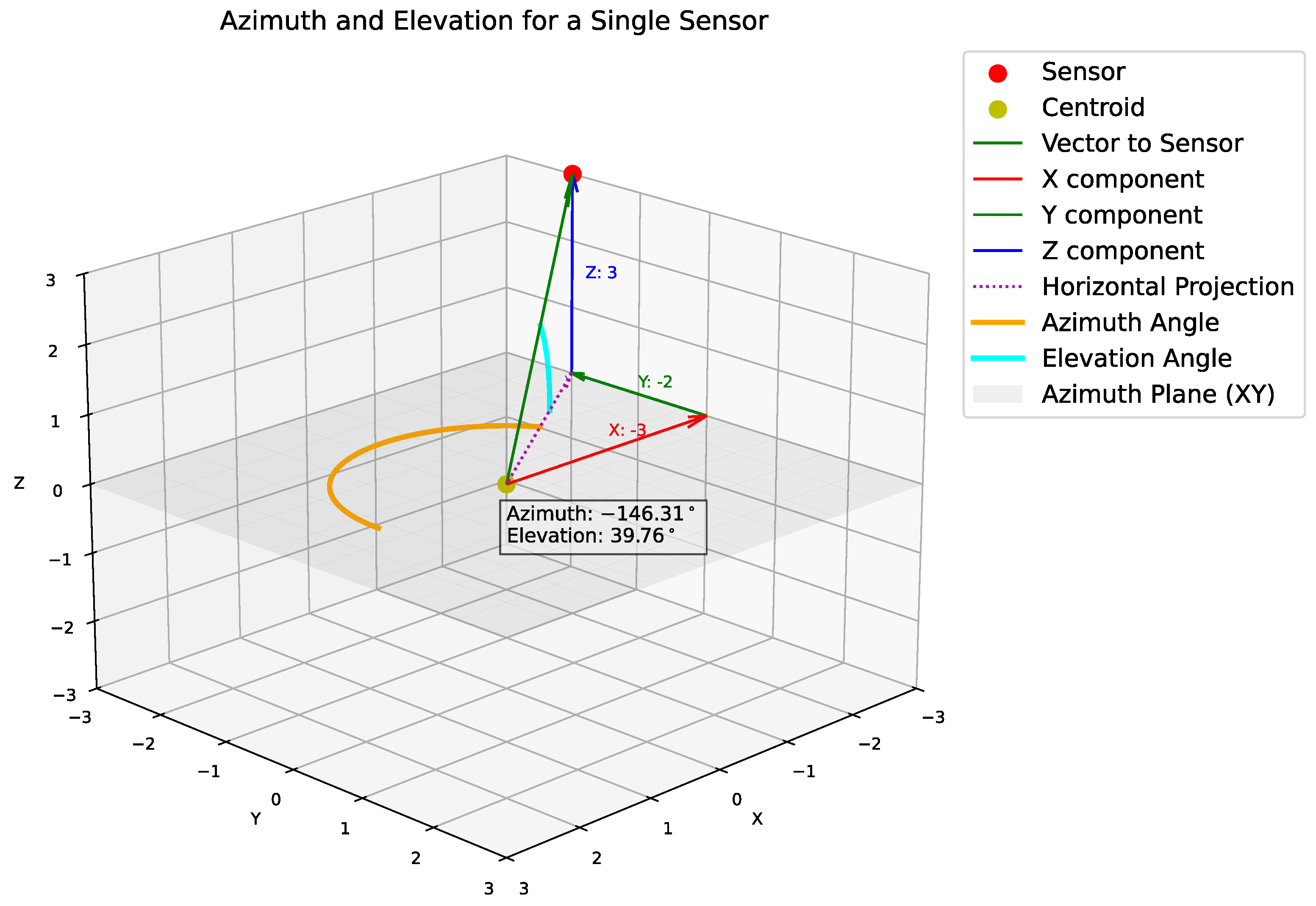

3.3. Spatial Geometry Decomposition

3.3.1. Centroid-Based Coordinate System

3.3.2. Spherical Decomposition

3.4. Normalization

3.4.1. Audio Feature Normalization

3.4.2. Spatial Feature Normalization

4. Datasets

4.1. Naming Convention

4.2. Components

- Microphones: The arrangement and number of microphones are dynamic, i.e., microphones are placed arbitrarily prior to recording a sample. We relaxed the hardware constraints of our previous work by using wireless microphones in addition to wired ones. The number of microphones was 4, which poses a challenge to localization models. The equipment consists of 4 RØDE Wireless GO II microphones (RØDE Microphones, Sydney, Australia) with 2 receivers and a Zoom R24 mixer (Zoom Corporation, Tokyo, Japan), which collects the signals from the receivers before sending them to the computer.

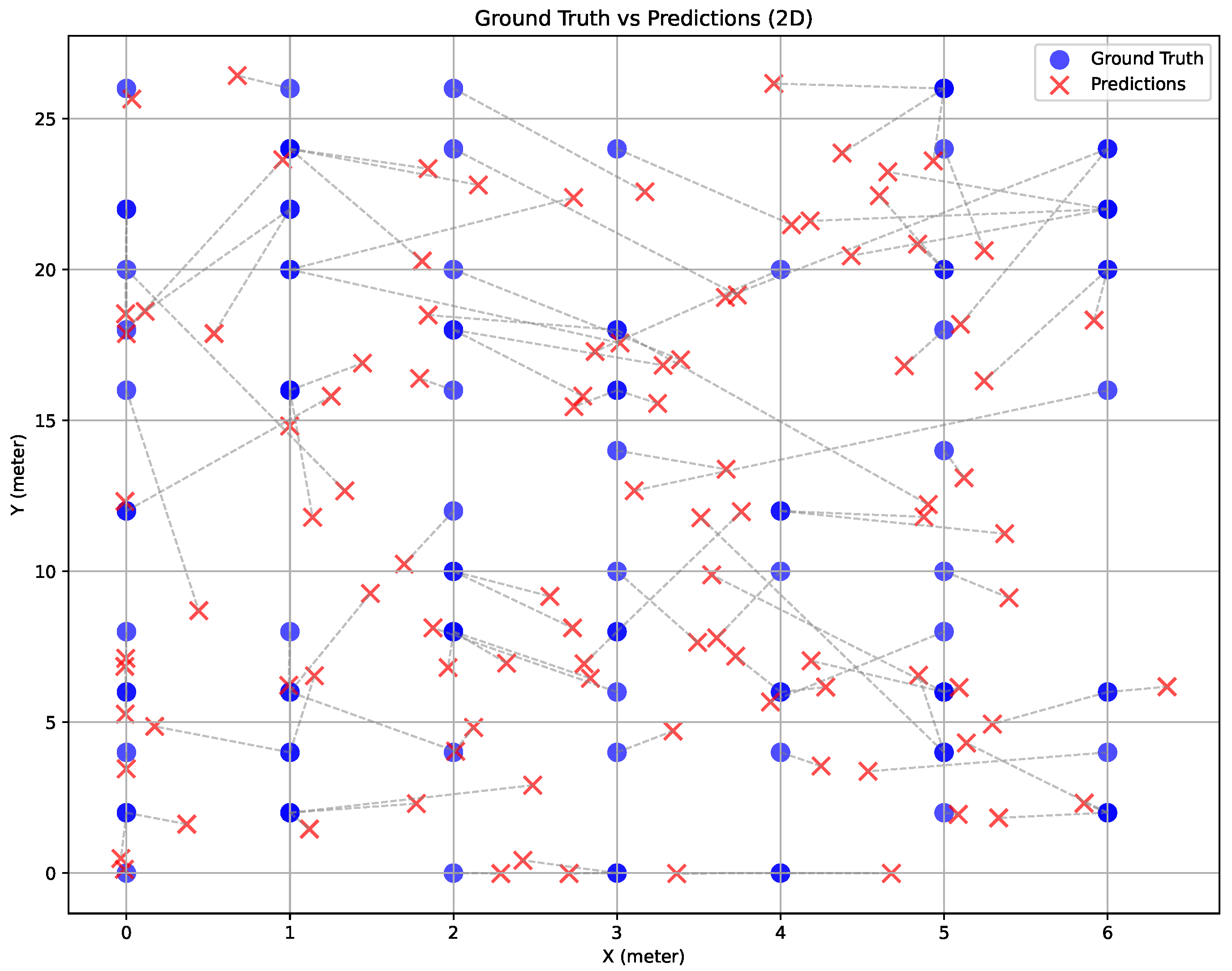

- Geometry: Our experiments include both 2D and 3D settings. Because on-site recording is complex and high-risk, we did not vary the depth of the microphones and instead diversified their width and height. We therefore expect the 2D experiments to reflect the recording conditions more realistically. In either case, spherical coordinates (azimuth, elevation, and distance) or Cartesian coordinates can be used, since the z-coordinate and the elevation angle can be converted into each other.

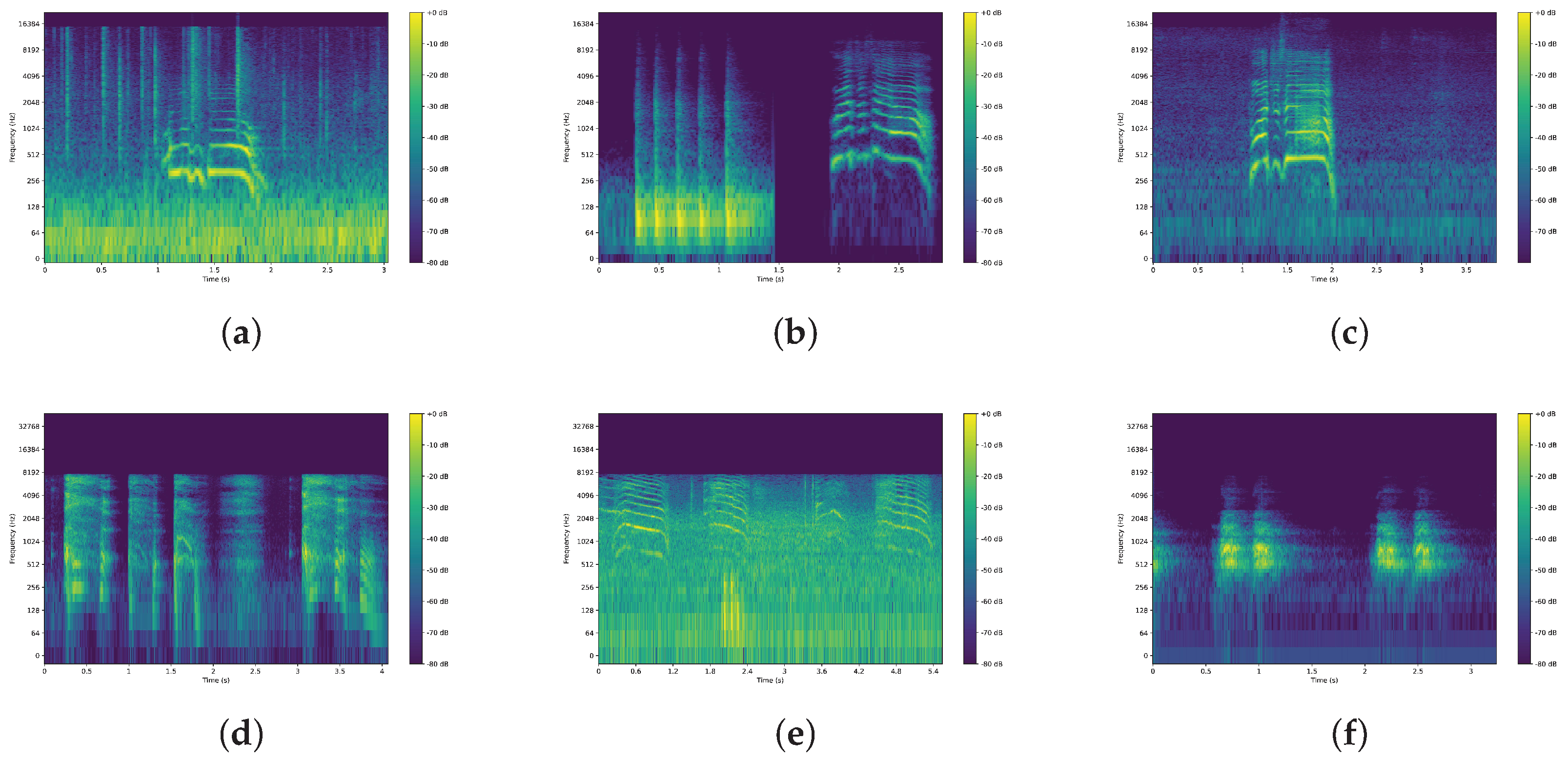

- Sound Source: We assume the victim (sound source) is stationary. The loudspeaker playing the audio samples from Figure 4 therefore remained fixed during each 4-channel recording and was relocated before the next one.

- Environment: All datasets contain realistic ambient noise, such as running machinery, a helicopter flying nearby, and echoes from walls.
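The interchangeability of spherical and Cartesian coordinates mentioned under Geometry can be made concrete with a short sketch. It assumes angles in radians, azimuth measured in the xy-plane from the x-axis, and elevation measured from the xy-plane. The function names and these conventions are assumptions for illustration and may differ from those used in the experiments.

```python
import math


def spherical_to_cartesian(distance, azimuth, elevation):
    """Convert (distance, azimuth, elevation) to (x, y, z)."""
    horizontal = distance * math.cos(elevation)  # projection onto the xy-plane
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = distance * math.sin(elevation)
    return x, y, z


def cartesian_to_spherical(x, y, z):
    """Convert (x, y, z) to (distance, azimuth, elevation)."""
    distance = math.sqrt(x * x + y * y + z * z)
    azimuth = math.atan2(y, x)
    elevation = math.atan2(z, math.hypot(x, y))
    return distance, azimuth, elevation


# Round trip for a source 2 m away, slightly above the horizontal plane.
point = spherical_to_cartesian(2.0, 0.5, 0.25)
recovered = cartesian_to_spherical(*point)
```

Under these conventions the 2D setting corresponds to an elevation of zero, which gives z = 0 and leaves only distance and azimuth to estimate.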

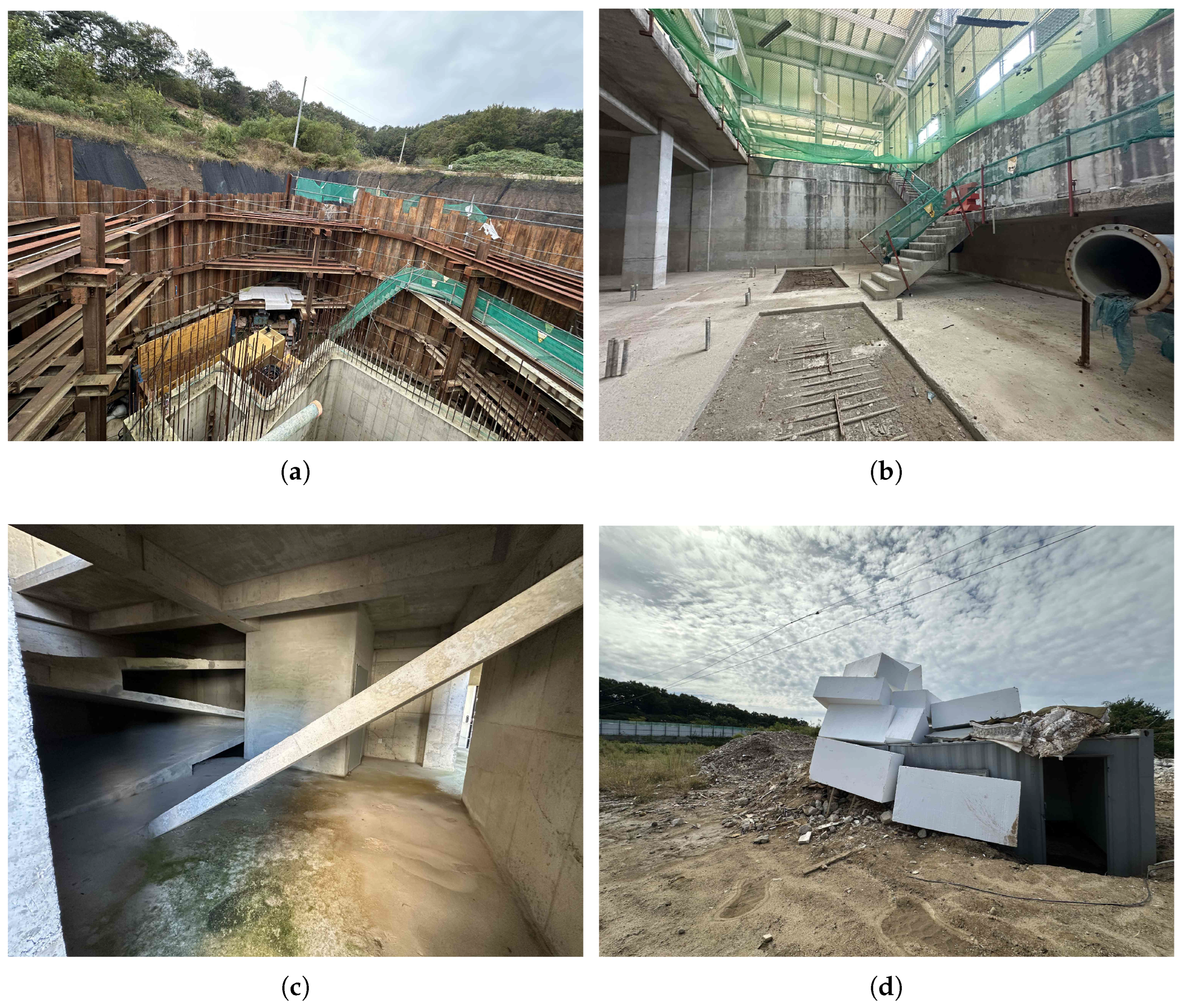

4.3. Environment Setup

4.4. Dataset Description

5. Experiments

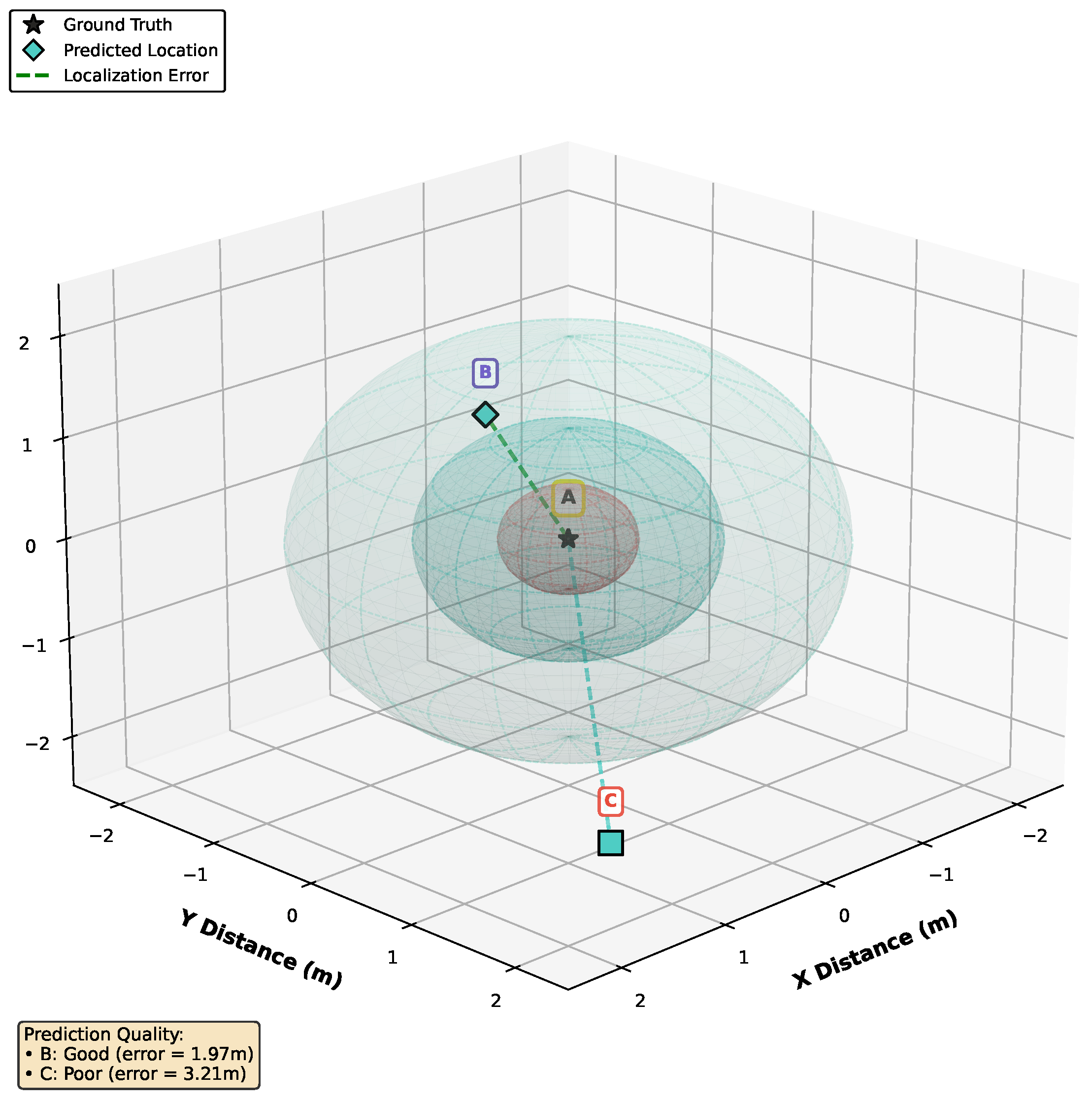

5.1. Evaluation Methodology

5.1.1. Datasets and Dimensionality

5.1.2. Coordinate Representation

5.1.3. Comparison Scope

5.1.4. Experimental Framework

5.1.5. Features and Augmentation

5.1.6. Training and Inference

5.2. Model Comparison

5.3. Ablation Studies

5.4. Spatial Compensation and Feature Regularization

5.5. Discussion

5.5.1. Normalization Benefits

5.5.2. Microphone Geometry

5.5.3. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Module | Type | Input → Output | # Params |
|---|---|---|---|
| ResNet backbone | ResNet-18 | 4-ch → 512 | 11,179,648 |
| Audio head FC1 | Linear | | 131,328 |
| Audio head FC2 | Linear | | 32,896 |
| Audio head FC3 | Linear | | 3741 |
| Spatial FC (dist) | Linear | | 20 |
| Spatial FC (az) | Linear | | 20 |
| Spatial FC (el) | Linear | | 20 |
| Spatial aggregation | Linear + Tanh | | 5 |
| Fusion FC1 | Linear | | 528 |
| Fusion FC2 | Linear | | 136 |
| Fusion FC3 | Linear | | 27 |
| Dropout | – | | 0 |
| Learnable weight | Scalar | 1 | 1 |
| Learnable weight | Scalar | 1 | 1 |
| Learnable weight | Scalar | 1 | 1 |
| Learnable weight | Scalar | 1 | 1 |
| Learnable weight | Scalar | 1 | 1 |
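The parameter counts of the linear layers can be sanity-checked with the standard formula for a fully connected layer with bias, `n_in * n_out + n_out`. The layer widths used below (512 → 256 → 128 → 29 for the audio head) are not stated in the table. They are inferred here as the widths that reproduce the listed counts when the first layer takes the 512-dimensional backbone output, and should be read as an assumption.

```python
def linear_params(n_in, n_out, bias=True):
    """Number of trainable parameters in a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


# Inferred (assumed) audio-head widths and the counts listed in the table.
audio_head = [
    ("Audio head FC1", 512, 256, 131_328),
    ("Audio head FC2", 256, 128, 32_896),
    ("Audio head FC3", 128, 29, 3_741),
]

for name, n_in, n_out, listed in audio_head:
    computed = linear_params(n_in, n_out)
    print(f"{name}: {n_in} -> {n_out}, computed {computed}, listed {listed}")
```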

| Dataset | Samples | Train Segments | Test Segments | Audio Format |
|---|---|---|---|---|
| Yeoju | 14,708 | 51,478 | 7354 | PCM, 44.1 kHz, mono, 16-bit |
| Yeongdong | 1056 | 3324 | 900 | PCM, 48 kHz, mono, 24-bit |
| Gyeonggi | 3342 | 5468 | 7900 | PCM, 48 kHz, mono, 24-bit |

| Dataset | Model | Feature | ≤1.0 | ≤1.5 | ≤2.0 |
|---|---|---|---|---|---|
| Yeongdong | SpaceNet | mel-spectra | 9.3 | 23.1 | 38.2 |
| Yeongdong | SpaceNet | mel-spectrogram | 9.3 | 17.3 | 28.4 |
| Yeongdong | CHAWA | mel-spectra | 9.8 | 20.0 | 31.6 |
| Yeongdong | CHAWA | mel-spectrogram | 9.3 | 19.6 | 33.8 |
| Yeoju | SpaceNet | mel-spectra | 53.9 | 65.2 | 73.1 |
| Yeoju | SpaceNet | mel-spectrogram | 32.8 | 50.3 | 64.8 |
| Yeoju | CHAWA | mel-spectra | 5.5 | 11.4 | 18.7 |
| Yeoju | CHAWA | mel-spectrogram | 4.4 | 10.0 | 16.1 |
| Gyeonggi | SpaceNet | mel-spectra | 28.0 | 49.4 | 66.5 |
| Gyeonggi | SpaceNet | mel-spectrogram | 26.5 | 46.7 | 65.0 |
| Gyeonggi | CHAWA | mel-spectra | 28.3 | 50.8 | 67.5 |
| Gyeonggi | CHAWA | mel-spectrogram | 20.4 | 39.0 | 56.5 |

| Has Rain | SpaceNet MAE (m) | SpaceNet RMSE (m) | CHAWA MAE (m) | CHAWA RMSE (m) |
|---|---|---|---|---|
| No | 0.760 | 0.877 | 2.108 | 2.128 |
| Yes | 0.900 | 0.926 | 1.962 | 1.970 |
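The error metrics reported in the tables can be sketched as follows. The sketch assumes that the per-sample error is the Euclidean distance in metres between the predicted and true source positions, and that the ≤1.0, ≤1.5, and ≤2.0 columns report the percentage of test samples whose error falls within that threshold. Both assumptions, and the function name `localization_metrics`, are illustrative and not confirmed by the tables themselves.

```python
import math


def localization_metrics(pred, true, thresholds=(1.0, 1.5, 2.0)):
    """Return MAE, RMSE, and threshold accuracies (%) over paired positions."""
    errors = [math.dist(p, t) for p, t in zip(pred, true)]
    n = len(errors)
    mae = sum(errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    within = {th: 100.0 * sum(e <= th for e in errors) / n for th in thresholds}
    return mae, rmse, within


# Toy example with four 2D predictions at the origin.
pred = [(0.0, 0.0)] * 4
true = [(0.5, 0.0), (1.2, 0.0), (1.8, 0.0), (3.0, 0.0)]
mae, rmse, within = localization_metrics(pred, true)
```

Because RMSE weights large errors more heavily than MAE, it is never smaller than MAE, and a small gap between the two indicates errors of similar magnitude across samples.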

| Dimension | Normalized | Spatial | Feature | ≤1.0 | ≤1.5 | ≤2.0 | Avg. R² |
|---|---|---|---|---|---|---|---|
| 3D | ✓ | ✓ | mel-spectra | 53.9 | 65.2 | 73.1 | 0.53 |
| 3D | ✓ | ✗ | mel-spectra | 53.9 | 65.2 | 72.9 | 0.52 |
| 3D | ✗ | ✗ | mel-spectra | 49.3 | 61.8 | 69.0 | 0.50 |
| 3D | ✓ | ✓ | mel-spectrogram | 32.8 | 50.3 | 64.8 | 0.57 |
| 3D | ✓ | ✗ | mel-spectrogram | 32.6 | 51.4 | 65.7 | 0.56 |
| 3D | ✗ | ✗ | mel-spectrogram | 35.0 | 51.1 | 65.5 | 0.56 |
| 2D | ✓ | ✓ | mel-spectra | 52.8 | 66.3 | 74.2 | 0.81 |
| 2D | ✓ | ✗ | mel-spectra | 52.2 | 65.5 | 72.9 | 0.80 |
| 2D | ✗ | ✗ | mel-spectra | 40.1 | 53.3 | 62.1 | 0.66 |
| 2D | ✓ | ✓ | mel-spectrogram | 37.2 | 56.9 | 71.1 | 0.86 |
| 2D | ✓ | ✗ | mel-spectrogram | 34.4 | 53.0 | 66.9 | 0.85 |
| 2D | ✗ | ✗ | mel-spectrogram | 35.3 | 53.4 | 68.1 | 0.85 |
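The average R² column can be read with the help of the following sketch. It assumes the score is the coefficient of determination computed separately for each coordinate axis and then averaged over the axes. The function names are hypothetical, and the exact averaging used in the experiments is not confirmed by the table.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination for one coordinate.

    Undefined (division by zero) if all true values are identical.
    """
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def average_r2(true, pred):
    """Average the per-axis R² over all coordinate axes (assumed definition)."""
    dims = len(true[0])
    scores = [
        r2_score([t[d] for t in true], [p[d] for p in pred])
        for d in range(dims)
    ]
    return sum(scores) / dims


true = [(0.0, 0.0), (2.0, 4.0)]
perfect = average_r2(true, true)                        # exact predictions
baseline = average_r2(true, [(1.0, 2.0), (1.0, 2.0)])   # always predict the mean
```

Exact predictions give a score of 1, while always predicting the mean position gives 0, so values between these bounds indicate how much of the positional variance the model explains.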


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Nguyen-Vu, L.; Lee, J. SpaceNet: A Multimodal Fusion Architecture for Sound Source Localization in Disaster Response. Sensors 2026, 26, 168. https://doi.org/10.3390/s26010168
