Failure Detection in Sensors via Variational Autoencoders and Image-Based Feature Representation

Abstract

1. Introduction

1.1. Previous Research on Sensor Failures Detection

1.2. Paper Motivation and Outline

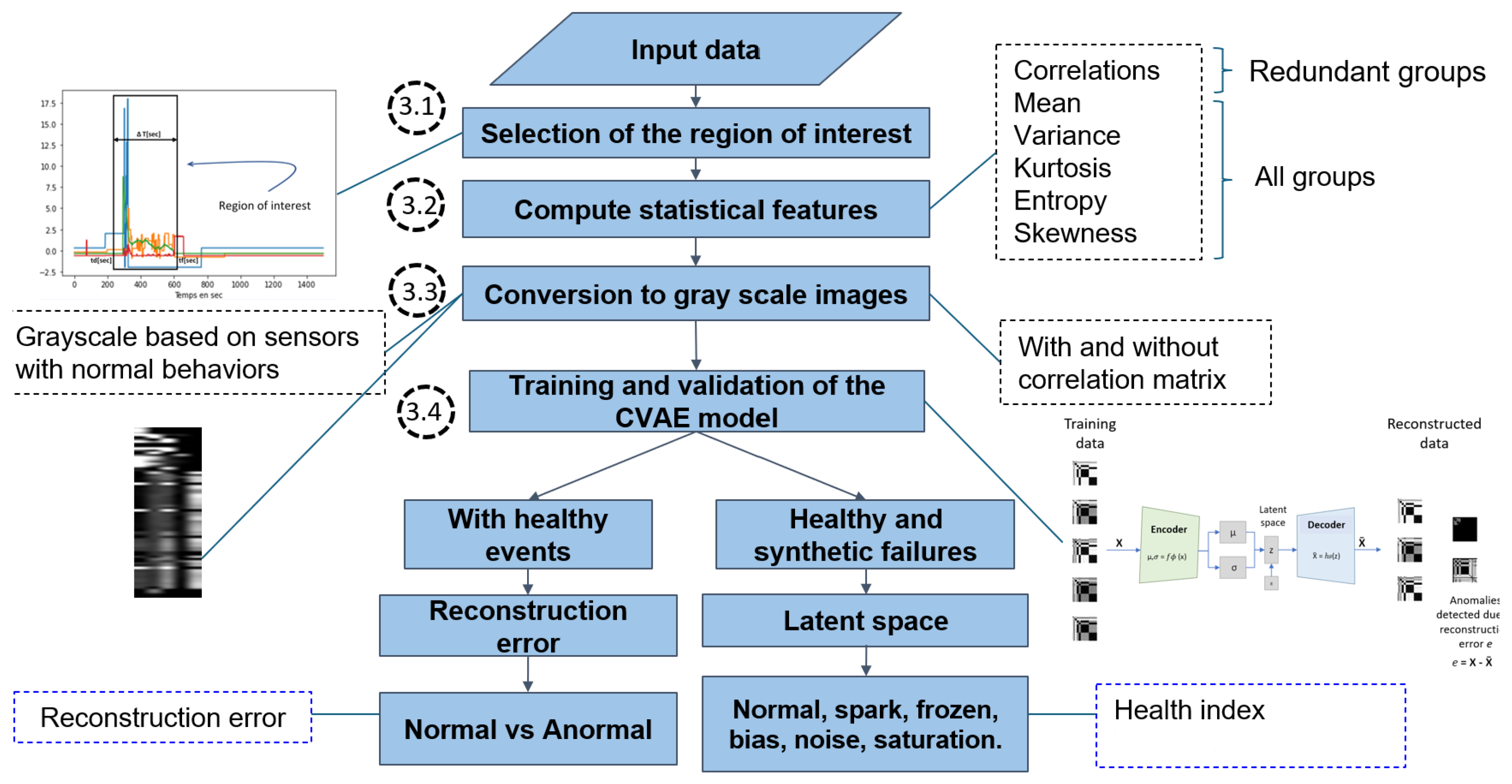

- Failure type: The proposed approach unifies the detection of multiple types of sensor failure, including no signal, bias, frozen, noise, spark, and saturation.

- Feature representation: Statistical features (mean, variance, entropy, skewness, kurtosis, and correlation) are extracted from sensor time series and converted into pixel matrices. This representation allows convolutional neural network layers to be used for failure detection and to capture relationships among sensor signals.

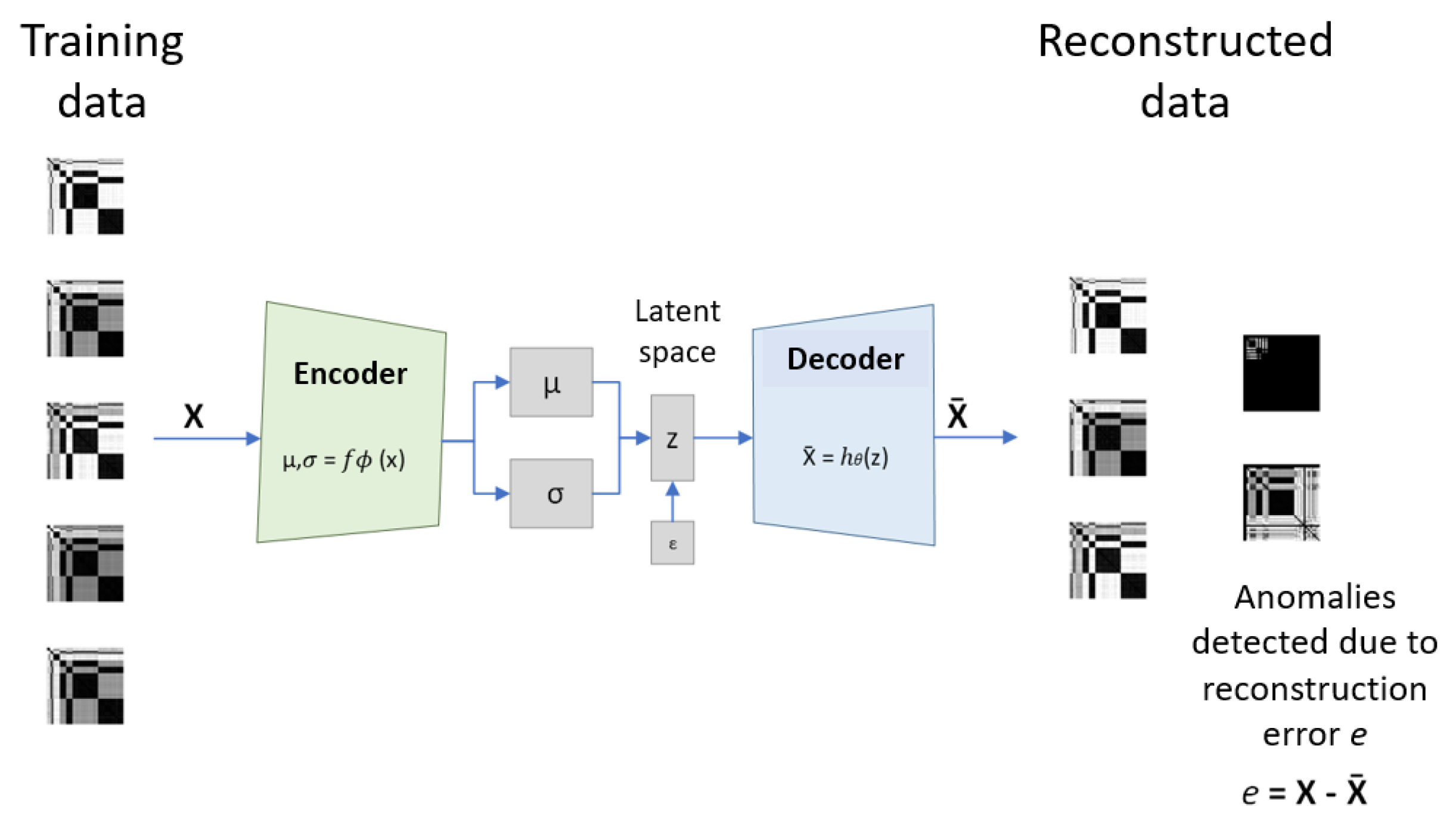

- CVAE model for failure detection: A CVAE model is defined and trained to learn the distribution of sensor data under normal conditions, and failures are identified from the reconstruction error. This formulation yields a robust and adaptable failure detection framework.

- Validation: The proposed method is evaluated using synthetic failure data and a real-world industrial dataset collected from a complex electromechanical system in the aeronautical domain.
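The conversion of statistical features into pixel matrices can be illustrated with a minimal sketch. The layout (one row per sensor, one column per feature), the per-column min-max scaling, and the function name `features_to_grayscale` are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def features_to_grayscale(features, lo=None, hi=None):
    """Scale a 2-D feature matrix to 8-bit grayscale pixels (0-255).

    Assumed layout: rows are sensors, columns are features.
    `lo` and `hi` are optional per-column bounds, for example taken from
    normal-condition training data; by default they come from `features`.
    """
    features = np.asarray(features, dtype=float)
    lo = features.min(axis=0) if lo is None else np.asarray(lo, dtype=float)
    hi = features.max(axis=0) if hi is None else np.asarray(hi, dtype=float)
    # Constant columns get a unit span so they map to pixel value 0.
    span = np.where(hi > lo, hi - lo, 1.0)
    # Values outside [lo, hi] are clipped to the valid pixel range.
    scaled = np.clip((features - lo) / span, 0.0, 1.0)
    return np.round(scaled * 255).astype(np.uint8)

# Example: 4 sensors x 2 features.
image = features_to_grayscale([[0.0, 7.0], [1.0, 7.0], [2.0, 7.0], [3.0, 7.0]])
print(image.shape, image.dtype)
```

Fixing `lo` and `hi` from normal-condition data, rather than recomputing them per image, would keep pixel intensities comparable between healthy and faulty windows; whether the authors do so is not stated here.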

2. Background: Classification of Sensor Failures

2.1. Spark

2.2. Frozen

2.3. Bias

2.4. Excessive Noise

2.5. Saturation

- Spark failure: A sudden spike in sensor readings due to electromagnetic interference or transient power surges.

- Frozen failure: The sensor output remains constant despite actual variations, often caused by communication loss or hardware malfunction.

- Bias failure: A consistent deviation from the actual value, typically resulting from calibration errors.

- Noise failure: Random fluctuations in sensor readings due to external disturbances or aging components.

- Saturation failure: The sensor reaches its upper or lower limit and remains at that value, failing to capture further variations.
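The five failure types listed above can be emulated on a healthy signal to produce synthetic test data. The sketch below is one possible way to do this; the function name `inject_failure`, its parameters, and the default magnitudes are hypothetical and do not reproduce the authors' generation procedure.

```python
import numpy as np

def inject_failure(signal, kind, start, *, rng=None, bias=1.0,
                   noise_std=1.0, spark_amplitude=5.0, limit=None):
    """Return a copy of `signal` with a synthetic failure starting at `start`.

    All magnitudes are illustrative assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(signal, dtype=float)  # copy, the input is left untouched
    if kind == "spark":
        # Sudden spike on a single sample.
        x[start] += spark_amplitude
    elif kind == "frozen":
        # Output stays at the last valid reading.
        x[start:] = x[start]
    elif kind == "bias":
        # Constant offset from the true value.
        x[start:] += bias
    elif kind == "noise":
        # Additional zero-mean Gaussian fluctuations.
        x[start:] += rng.normal(0.0, noise_std, size=x[start:].shape)
    elif kind == "saturation":
        # Reading stuck at the sensor limit (default: observed maximum).
        x[start:] = x.max() if limit is None else limit
    else:
        raise ValueError(f"unknown failure kind: {kind!r}")
    return x

# Example: a sine wave that freezes halfway through the window.
t = np.linspace(0.0, 1.0, 100)
clean = np.sin(2 * np.pi * t)
frozen = inject_failure(clean, "frozen", start=50)
```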

3. Proposed Approach for Detecting Sensor Failures

3.1. Selection of the Time Window of Interest

3.2. Compute Features

- Mean value

- Variance

- Skewness

- Kurtosis

- Entropy, computed from the empirical probability density

- Pearson correlation for an event j, computed between a pair of sensor measurement time series
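The features listed above can be computed per time window as in the following sketch. It uses the standard textbook definitions; the population (1/N) normalisation, the non-excess kurtosis, the histogram-based entropy estimate with 16 bins, and the function names are assumptions that may differ from the authors' exact formulas.

```python
import numpy as np

def window_features(x, bins=16):
    """Mean, variance, skewness, kurtosis and entropy of one time window."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    var = x.var()                      # population variance (1/N), assumed
    std = np.sqrt(var)
    if std > 1e-12:
        z = (x - mean) / std
        skew = np.mean(z ** 3)         # third standardized moment
        kurt = np.mean(z ** 4)         # fourth standardized moment (not excess)
    else:
        skew, kurt = 0.0, 0.0          # degenerate window, e.g. frozen sensor
    # Empirical probability density estimated with a histogram (assumed).
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-np.sum(p * np.log(p)))
    return {"mean": mean, "variance": var, "skewness": skew,
            "kurtosis": kurt, "entropy": entropy}

def pearson(x, y):
    """Pearson correlation between two sensor windows of equal length."""
    xc = np.asarray(x, dtype=float) - np.mean(x)
    yc = np.asarray(y, dtype=float) - np.mean(y)
    denom = np.sqrt(np.sum(xc ** 2) * np.sum(yc ** 2))
    return float(np.sum(xc * yc) / denom) if denom > 0 else 0.0

# Example: features of a short ramp and its correlation with a scaled copy.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
print(window_features(x, bins=5))
print(pearson(x, 2 * x + 1))
```

Returning zero for skewness, kurtosis, and correlation on a constant window is a convenience choice so that frozen signals still yield finite pixel values.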

3.3. Grayscale Image Representation of the Features

3.4. Training and Validation of the CVAE Model

- Data preparation: Transformation of the sensor time series data into images based on statistical features. Data are partitioned into training, validation, and test datasets.

- Model architecture: An initial architecture is defined based on input data dimension, choices for the latent space dimension, and guidelines from previous implementations.

- Training the model: Model parameters are optimized with the Adam algorithm to minimize a suitable loss function, and the weights are updated from the computed loss throughout the training epochs. The CVAE loss function quantifies how well the model reconstructs the input images (reconstruction error) and how closely the latent distribution aligns with a prior distribution (Kullback–Leibler divergence).

- Evaluation: The model's performance is estimated on the test dataset, which is not seen during training. Based on the observed performance, the model architecture and training hyperparameters may be adjusted before a new training iteration.
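The two terms of the loss described in the training step can be written down explicitly. The sketch below assumes a squared-error reconstruction term, a diagonal Gaussian encoder, a standard normal prior, and a weighting factor `beta`; these are common choices for variational autoencoders and are not confirmed as the authors' exact formulation. NumPy is used only to show the arithmetic; an actual training loop would rely on a deep learning framework with automatic differentiation.

```python
import numpy as np

def cvae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Batch-averaged VAE loss: reconstruction error + beta * KL divergence.

    x, x_hat : arrays of shape (batch, ...) with input and reconstructed images
    mu, log_var : arrays of shape (batch, latent_dim) from the encoder
    """
    x = np.asarray(x, dtype=float)
    x_hat = np.asarray(x_hat, dtype=float)
    mu = np.asarray(mu, dtype=float)
    log_var = np.asarray(log_var, dtype=float)
    # Reconstruction error: sum of squared pixel differences per image.
    diff = (x - x_hat).reshape(len(x), -1)
    recon = np.sum(diff ** 2, axis=1)
    # Closed-form KL divergence between N(mu, exp(log_var)) and N(0, I).
    kl = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)
    return float(np.mean(recon + beta * kl)), float(np.mean(recon)), float(np.mean(kl))

# Example: two 4x4 images with a 2-dimensional latent space.
rng = np.random.default_rng(0)
x = rng.random((2, 4, 4))
total, recon, kl = cvae_loss(x, x * 0.9, mu=np.zeros((2, 2)), log_var=np.zeros((2, 2)))
```

After training on normal-condition data, the per-image reconstruction term (before averaging) is the quantity that would typically serve as the failure score.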

3.5. Detection of Sensor Failures

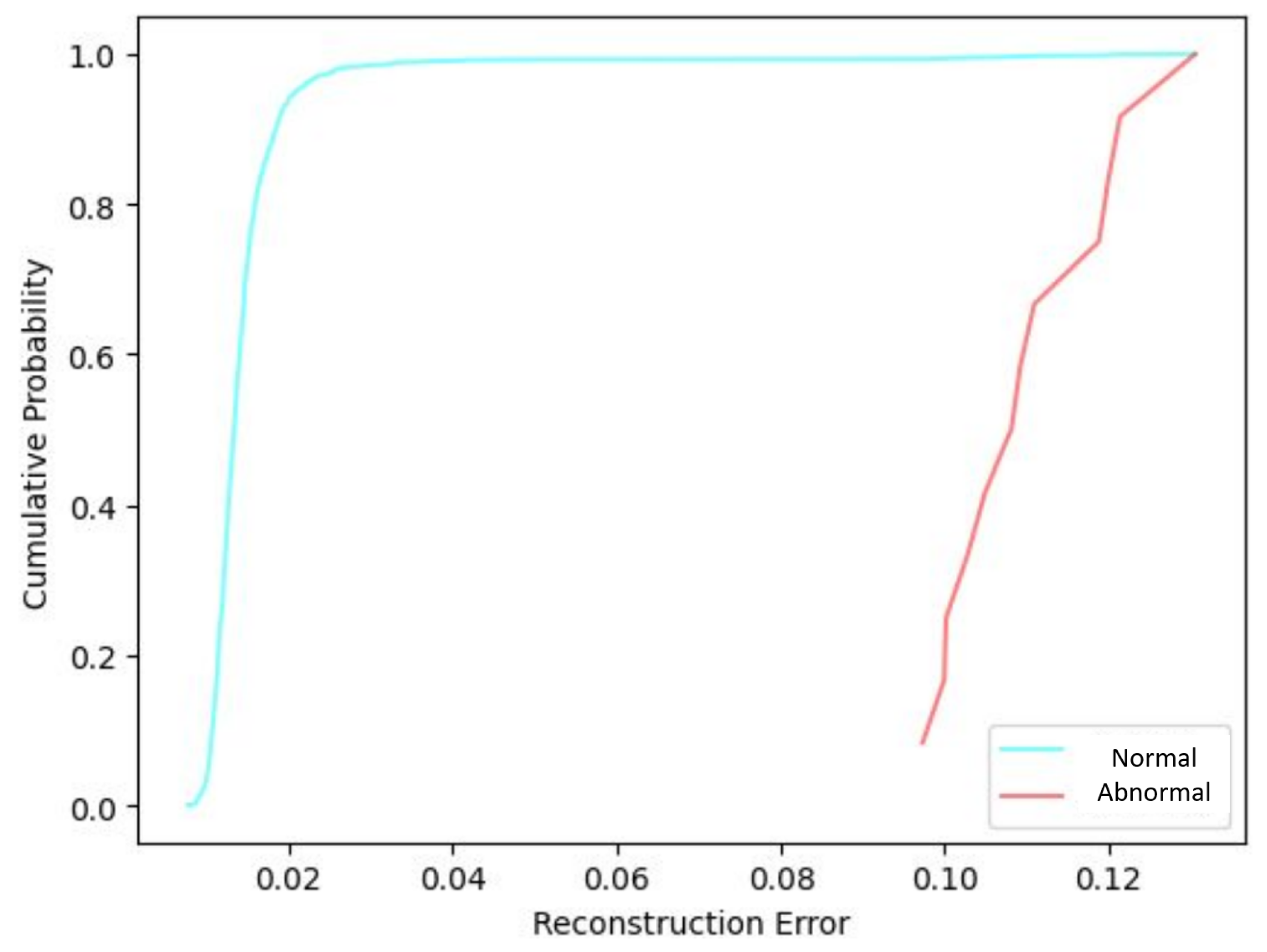

3.5.1. Reconstruction Error Method

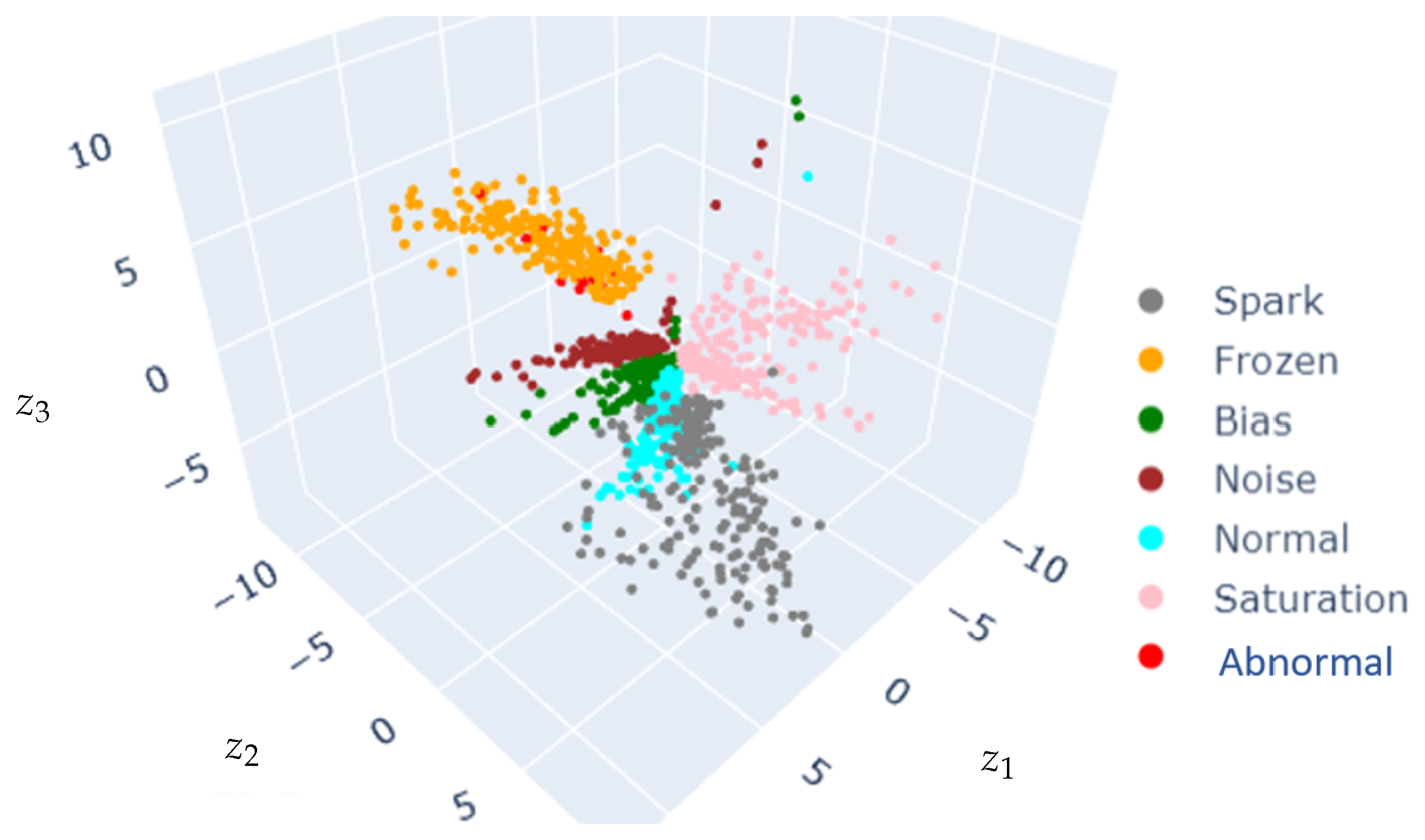

3.5.2. Latent Space-Based Detection

4. Results and Discussion

4.1. Feature-Based Images from Sensors Data

4.2. CVAE Architecture and Training

4.3. Reconstruction Error

4.4. CVAE Latent Space Projection and Distance Metric

4.5. Comparison with Competing Approaches

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ali, A.M.A.; Tawfik, E.Z.; Shaeb, M.; Mousbah, A.M.A. Sensor-to-Sensor Networking Concepts Based on Local Mobile Agent Platform. Int. J. Adv. Eng. Manag. (IJAEM) 2023, 5, 199–204.

2. Li, D.; Wang, Y.; Wang, J.; Wang, C.; Duan, Y. Recent advances in sensor fault diagnosis: A review. Sens. Actuators A Phys. 2020, 309, 111990.

3. Bogue, R. Sensors for condition monitoring: A review of technologies and applications. Sens. Rev. 2013, 33, 295–299.

4. Zhang, L.; Leach, M. Evaluate the impact of sensor accuracy on model performance in data-driven building fault detection and diagnostics using Monte Carlo simulation. Build. Simul. 2022, 15, 769–778.

5. Takai, S. A general framework for diagnosis of discrete event systems subject to sensor failures. Automatica 2021, 129, 109669.

6. Moreno Haro, L.M.; Tahan, A.; Agard, B. Aperçu des Méthodes de préDiction de Pannes; CIGI Qualita MOSIM: Trois-Rivières, QC, Canada, 2023.

7. Balaban, E.; Saxena, A.; Bansal, P.; Goebel, K.F.; Curran, S. Modeling, Detection, and Disambiguation of Sensor Faults for Aerospace Applications. IEEE Sens. J. 2009, 9, 1907–1917.

8. Chen, P.Y.; Yang, S.; McCann, J.A. Distributed real-time anomaly detection in networked industrial sensing systems. IEEE Trans. Ind. Electron. 2014, 62, 3832–3842.

9. Goswami, P.; Rai, R.N. Data-driven sensor selection for industrial gearbox fault diagnosis using principal component analysis. Meas. Sci. Technol. 2025, 36, 036111.

10. Swischuk, R.; Allaire, D. A machine learning approach to aircraft sensor error detection and correction. J. Comput. Inf. Sci. Eng. 2019, 19, 041009.

11. Ahmad, M.W.; Akram, M.U.; Mohsan, M.M.; Saghar, K.; Ahmad, R.; Butt, W.H. Transformer-based sensor failure prediction and classification framework for UAVs. Expert Syst. Appl. 2024, 248, 123415.

12. Abbas, M.; Chafouk, H.; Ardjoun, S.A.E.M. Fault diagnosis in wind turbine current sensors: Detecting single and multiple faults with the extended kalman filter bank approach. Sensors 2024, 24, 728.

13. Singh, A.; Demirbaga, U.; Aujla, G.S.; Jindal, A.; Sun, H.; Jiang, J. Scalable and Reliable Data Framework for Sensor-enabled Virtual Power Plant Digital Twin. IEEE J. Sel. Areas Sens. 2025, 2, 108–120.

14. Thiyagarajan, K.; Kodagoda, S.; Van Nguyen, L.; Ranasinghe, R. Sensor failure detection and faulty data accommodation approach for instrumented wastewater infrastructures. IEEE Access 2018, 6, 56562–56574.

15. Zhao, Z.; Sun, Y.G.; Zhang, J. Fault detection and diagnosis for sensor in an aero-engine system. In Proceedings of the 2016 Chinese Control and Decision Conference (CCDC), Yinchuan, China, 28–30 May 2016; Volume 1, pp. 2977–2982.

16. Zhang, K.; Du, K.; Ju, Y. Algorithm of Railway Turnout Fault Detection Based on PNN Neural Network. In Proceedings of the 2014 Seventh International Symposium on Computational Intelligence and Design, Hangzhou, China, 13–14 December 2014; Volume 1, pp. 544–547.

17. Ehlenbröker, J.F.; Mönks, U.; Lohweg, V. Sensor defect detection in multisensor information fusion. J. Sens. Sens. Syst. 2016, 5, 337–353.

18. Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.

19. Jan, S.U.; Saeed, U.; Koo, I. Machine Learning for Detecting Drift Fault of Sensors in Cyber-Physical Systems. In Proceedings of the 2020 17th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 14–18 January 2020; Volume 20, pp. 389–394.

20. Kim, S.G.; Chae, Y.H.; Seong, P.H. Signal Fault Identification In Nuclear Power Plants Based On Deep Neural Networks. Ann. DAAAM Proc. 2019, 30, 846–852.

21. de Silva, B.M.; Callaham, J.; Jonker, J.; Goebel, N.; Klemisch, J.; McDonald, D.; Hicks, N.; Nathan Kutz, J.; Brunton, S.L.; Aravkin, A.Y. Hybrid learning approach to sensor fault detection with flight test data. AIAA J. 2021, 59, 3490–3503.

22. Fong, K.; Lee, C.; Leung, M.; Sun, Y.; Zhu, G.; Baek, S.H.; Luo, X.; Lo, T.K.K.; Leung, H.S.Y. A hybrid multiple sensor fault detection, diagnosis and reconstruction algorithm for chiller plants. J. Build. Perform. Simul. 2023, 16, 588–608.

23. Chen, Y.; Gou, L.; Li, H.; Wang, J. Sensor Fault Diagnosis of Aero Engine Control System Based on Honey Badger Optimizer. IFAC-PapersOnLine 2022, 55, 228–233.

24. Reddy, G.N.K.; Manikandan, M.S.; Murty, N.N. On-device integrated PPG quality assessment and sensor disconnection/saturation detection system for IoT health monitoring. IEEE Trans. Instrum. Meas. 2020, 69, 6351–6361.

25. Zhang, J.; Huang, M.; Wan, N.; Deng, Z.; He, Z.; Luo, J. Missing measurement data recovery methods in structural health monitoring: The state, challenges and case study. Measurement 2024, 231, 114528.

26. Feng, J.; Hajizadeh, I.; Yu, X.; Rashid, M.; Samadi, S.; Sevil, M.; Hobbs, N.; Brandt, R.; Lazaro, C.; Maloney, Z.; et al. Multi-model sensor fault detection and data reconciliation: A case study with glucose concentration sensors for diabetes. AIChE J. 2019, 65, 629–639.

27. Shi, Y.; Kumar, T.S.; Knoblock, C.A. Constraint-based learning for sensor failure detection and adaptation. In Proceedings of the 2018 IEEE 30th International Conference on Tools with Artificial Intelligence (ICTAI), Volos, Greece, 5–7 November 2018; pp. 328–335.

28. Darvishi, H.; Ciuonzo, D.; Eide, E.R.; Rossi, P.S. Sensor-fault detection, isolation and accommodation for digital twins via modular data-driven architecture. IEEE Sens. J. 2020, 21, 4827–4838.

29. ElHady, N.E.; Provost, J. A systematic survey on sensor failure detection and fault-tolerance in ambient assisted living. Sensors 2018, 18, 1991.

30. Huangfu, Y.; Seddik, E.; Habibi, S.; Wassyng, A.; Tjong, J. Fault Detection and Diagnosis of Engine Spark Plugs Using Deep Learning Techniques. SAE Int. J. Engines 2022, 15, 515–526.

31. Li, C.; Li, Y. A Spike-Based Model of Neuronal Intrinsic Plasticity. IEEE Trans. Auton. Ment. Dev. 2013, 5, 62–73.

32. Zidi, S.; Moulahi, T.; Alaya, B. Fault Detection in Wireless Sensor Networks Through SVM Classifier. IEEE Sens. J. 2018, 18, 340–347.

33. Bordoni, F.; D’Amico, A. Noise in sensors. Sens. Actuators A Phys. 1990, 21, 17–24.

34. Dang, Q.K.; Suh, Y.S. Sensor saturation compensated smoothing algorithm for inertial sensor based motion tracking. Sensors 2014, 14, 8167–8188.

35. Caruso, G.; Galeani, S.; Menini, L. Active vibration control of an elastic plate using multiple piezoelectric sensors and actuators. Simul. Model. Pract. Theory 2003, 11, 403–419.

36. Li, W.; Liu, D.; Li, Y.; Hou, M.; Liu, J.; Zhao, Z.; Guo, A.; Zhao, H.; Deng, W. Fault diagnosis using variational autoencoder GAN and focal loss CNN under unbalanced data. Struct. Health Monit. 2024, 14759217241254121.

37. Alrubaie, S.A.H.; Hameed, A.H. Dynamic weights equations for converting grayscale image to RGB image. J. Univ. Babylon Pure Appl. Sci. 2018, 26, 122–129.

38. Oliveira-Filho, A.; Zemouri, R.; Cambron, P.; Tahan, A. Early Detection and Diagnosis of Wind Turbine Abnormal Conditions Using an Interpretable Supervised Variational Autoencoder Model. Energies 2023, 16, 4544.

39. Sun, J.; Wang, X.; Xiong, N.; Shao, J. Learning Sparse Representation With Variational Auto-Encoder for Anomaly Detection. IEEE Access 2018, 6, 33353–33361.

40. An, J.; Cho, S. Variational autoencoder based anomaly detection using reconstruction probability. Spec. Lect. IE 2015, 2, 1–18.

41. Kim, M.S.; Yun, J.P.; Lee, S.; Park, P. Unsupervised Anomaly detection of LM Guide Using Variational Autoencoder. Int. Symp. Adv. Top. Electr. Eng. (ATEE) 2019, 19, 10–15.

42. Marimont, S.N.; Tarroni, G. Anomaly Detection Through Latent Space Restoration Using Vector Quantized Variational Autoencoders. Int. Symp. Biomed. Imaging (ISBI) 2021, 18, 1764–1767.

43. Oliveira-Filho, A.; Zemouri, R.; Pelletier, F.; Tahan, A. System condition monitoring based on a standardized latent space and the Nataf transform. IEEE Access 2024, 12, 32637–32659.

44. Gayathri, R.; Sajjanhar, A.; Xiang, Y. Image-based feature representation for insider threat classification. Appl. Sci. 2020, 10, 4945.

45. Zhang, Y.; Xie, X.; Li, H.; Zhou, B. An unsupervised tunnel damage identification method based on convolutional variational auto-encoder and wavelet packet analysis. Sensors 2022, 22, 2412.

46. Zemouri, R.; Levesque, M.; Amyot, N.; Hudon, C.; Kokoko, O.; Tahan, S.A. Deep convolutional variational autoencoder as a 2d-visualization tool for partial discharge source classification in hydrogenerators. IEEE Access 2019, 8, 5438–5454.

47. Abid, A.; Kachouri, A.; Ben Fradj Guiloufi, A.; Mahfoudhi, A.; Nasri, N.; Abid, M. Centralized KNN anomaly detector for WSN. Int. Multi-Conf. Syst. Signals Devices 2015, 12, 51–54.

48. Cheng, R.C.; Chen, K.S. Ball bearing multiple failure diagnosis using feature-selected autoencoder model. Int. J. Adv. Manuf. Technol. 2022, 120, 4803–4819.

| Reference | Failure types covered (of six) | Domain | Method |
|---|---|---|---|
| Our proposition | 6 (no signal, saturation, bias, frozen, noise, spark) | Aeronautics | ML (CVAE) |
| [7] | 3 | Electrical | ML |
| [15] | 1 | Aeronautics | Statistics |
| [20] | 1 | Energy | ML |
| [16] | 3 | Electrical | ML |
| [17] | 1 | Manufacture | ML-Statistics |
| [26] | 2 | Health surveillance | ML-Statistics |
| [28] | 1 | Industry 4.0 | ML |
| [19] | 3 | CPS | ML |
| [21] | 2 | Aeronautics | ML |
| [23] | 4 | Aeronautics | ML |
| [22] | 1 | Energy | ML |
| [24] | 1 | Health monitoring | Statistics |
| [47] | 1 | IoT | ML |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Moreno Haro, L.M.; Oliveira-Filho, A.; Agard, B.; Tahan, A. Failure Detection in Sensors via Variational Autoencoders and Image-Based Feature Representation. Sensors 2025, 25, 2175. https://doi.org/10.3390/s25072175
