Enhanced Color Nighttime Light Remote Sensing Imagery Using Dual-Sampling Adjustment

Abstract

1. Introduction

2. Research Area and Data Processing

2.1. Research Area

2.2. Data Processing

- (1)

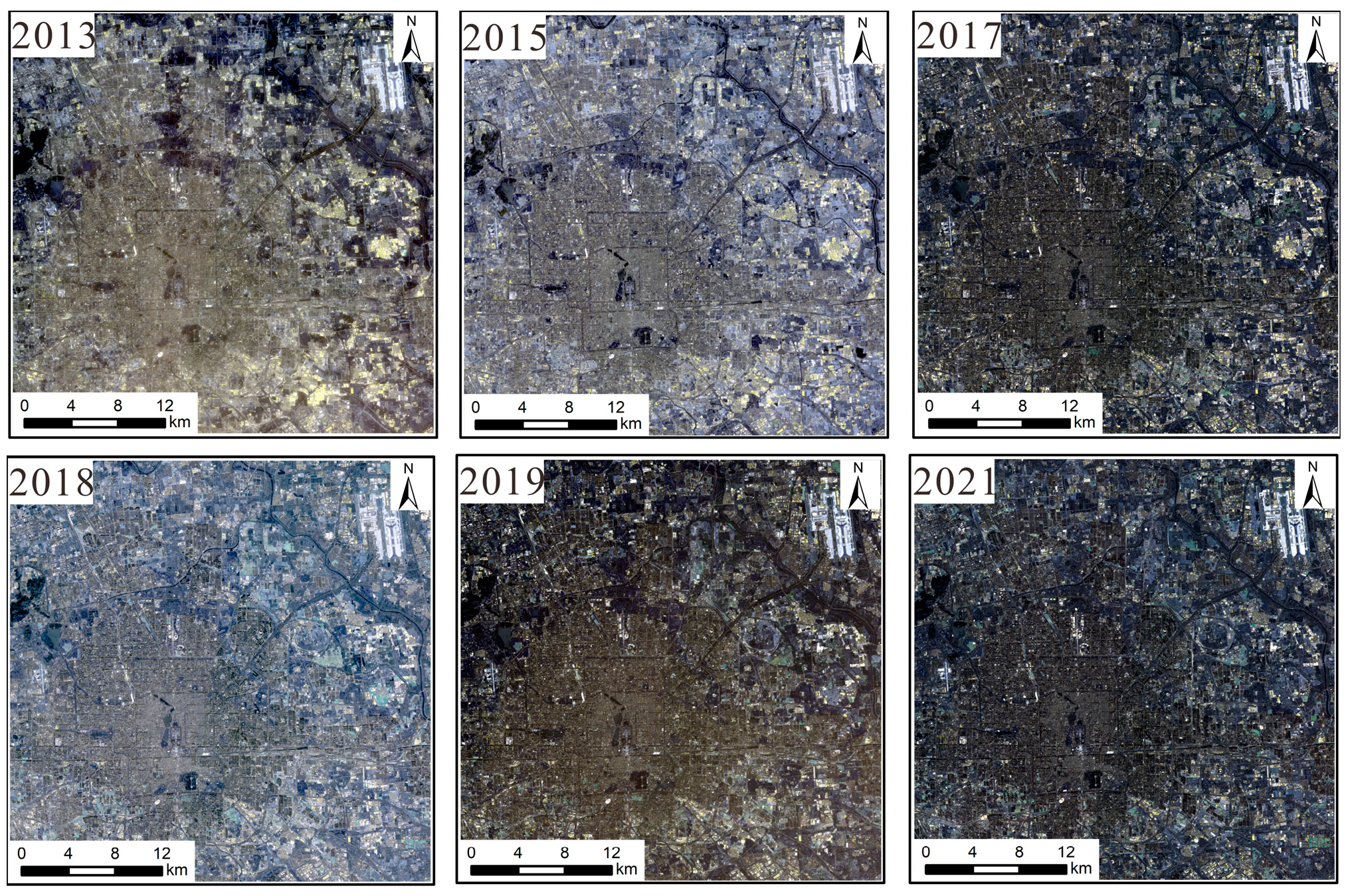

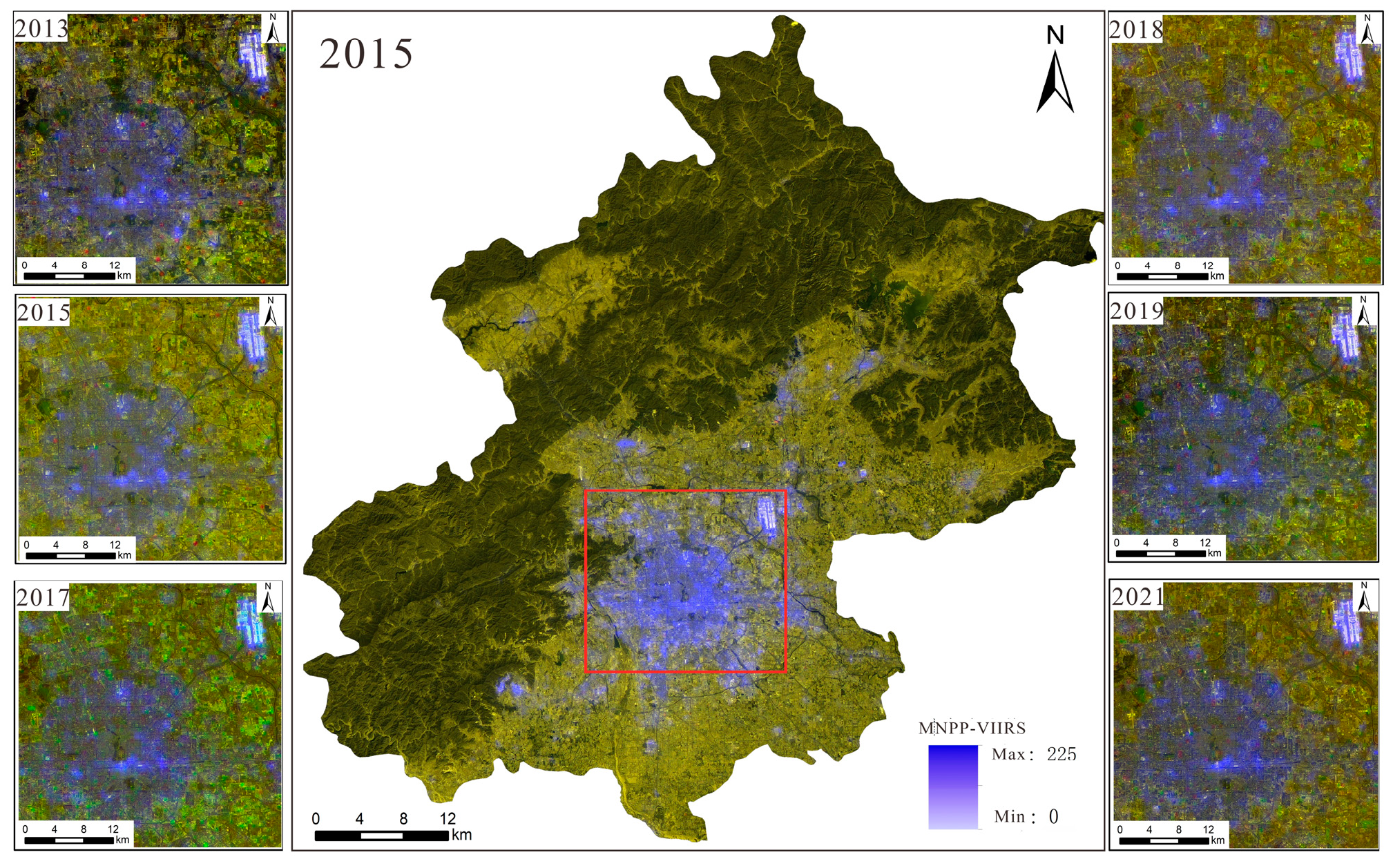

- NPP/VIIRS data: These data come from the long-term nighttime light dataset for China published by the Global Change Science Research Data Publishing System [23] and from the National Geophysical Data Center (NGDC) under the National Oceanic and Atmospheric Administration (NOAA) of the United States. Compared to DMSP/OLS nighttime light remote sensing, the cloud-free monthly data from NPP-VIIRS with 500 m spatial resolution do not have the problem of pixel value saturation. Moreover, the monthly data have completely eliminated the influence of moonlight, aurora borealis, and other stray light sources, and they effectively monitor the ground nighttime light situation. The steps of data processing were as follows: The Chinese administrative division vector data extracted by the mask were used to obtain nighttime light remote sensing images of Beijing during 2013–2021. The data were projected onto the WGS1984 geographic coordinate system and the Lambert Equal Area projection coordinate system. The processing of data for the six-year period was completed as shown in Figure 1.

- (2) Landsat 8 data: These data were sourced from the Geospatial Data Cloud. They are Landsat 8 OLI-TIRS images (OLI = Operational Land Imager; TIRS = Thermal Infrared Sensor) covering Beijing from 2013 to 2021; OLI covers the visible to short-wave infrared bands, and TIRS covers the thermal infrared bands. This study uses OLI data, which contain multispectral images with a resolution of 30 m and panchromatic images with a resolution of 15 m. Radiometric calibration removes the error caused by differences in sensor response, and atmospheric correction then removes atmospheric scattering and absorption effects to obtain the true reflectance of the ground surface. The panchromatic images are also radiometrically calibrated to correct the radiometric distortion caused by atmospheric effects and to preserve the clarity of spatial details. Pre-processing greatly improves the fidelity of the images and reduces color distortion during fusion. Finally, the images are mosaicked and clipped to the study region to obtain the multispectral and panchromatic remote sensing images for 2013–2021. The results are shown in Figures 2 and 3.
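The two pre-processing operations named in the list above (radiometric calibration and extraction by mask) can be illustrated with a minimal sketch. The paper does not state its calibration formula or software, so this sketch assumes the standard Landsat 8 Level-1 rescaling, in which top-of-atmosphere (TOA) reflectance is computed as (M × DN + A) / sin(sun elevation), with M and A read from the scene metadata (`REFLECTANCE_MULT_BAND_x`, `REFLECTANCE_ADD_BAND_x`, `SUN_ELEVATION`). The function names `toa_reflectance` and `extract_by_mask` and all sample values are hypothetical. TOA reflectance is not surface reflectance; the atmospheric correction step is a separate operation and is not shown.

```python
import math
from typing import List, Optional, Sequence


def toa_reflectance(dn: float, mult: float, add: float,
                    sun_elevation_deg: float) -> float:
    """Convert a Landsat 8 OLI digital number to TOA reflectance.

    Assumes the standard Level-1 rescaling with a sun-angle correction;
    mult, add, and sun_elevation_deg come from the scene metadata file.
    """
    if not 0.0 < sun_elevation_deg <= 90.0:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return (mult * dn + add) / math.sin(math.radians(sun_elevation_deg))


def extract_by_mask(raster: Sequence[Sequence[float]],
                    mask: Sequence[Sequence[bool]],
                    nodata: Optional[float] = None) -> List[List[Optional[float]]]:
    """Keep cells where mask is True and set every other cell to nodata.

    The mask stands in for a rasterized administrative boundary
    (for example, the Beijing boundary) on the same grid as the raster.
    """
    if len(raster) != len(mask) or any(
        len(row) != len(mrow) for row, mrow in zip(raster, mask)
    ):
        raise ValueError("raster and mask must have the same shape")
    return [
        [value if keep else nodata for value, keep in zip(row, mrow)]
        for row, mrow in zip(raster, mask)
    ]


# Illustrative values only; none of these numbers come from the paper.
rho = toa_reflectance(dn=10000, mult=2.0e-5, add=-0.1, sun_elevation_deg=30.0)

radiance = [[0.5, 1.2, 3.4],
            [0.1, 8.9, 2.0]]
inside_region = [[False, True, True],
                 [False, True, False]]
clipped = extract_by_mask(radiance, inside_region)
```

In practice these steps are usually carried out in a GIS or remote sensing package on georeferenced rasters; the plain-list version here only makes the per-pixel logic explicit.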

3. Dual-Sampling Adjustment to Generate Color Nighttime Light Remote Sensing Imagery

3.1. Dual-Sampling Adjustment Method

- (1) Down-sampling adjustment

- (2) GS transformation

- (3) High-pass filter enhancement

- (4)

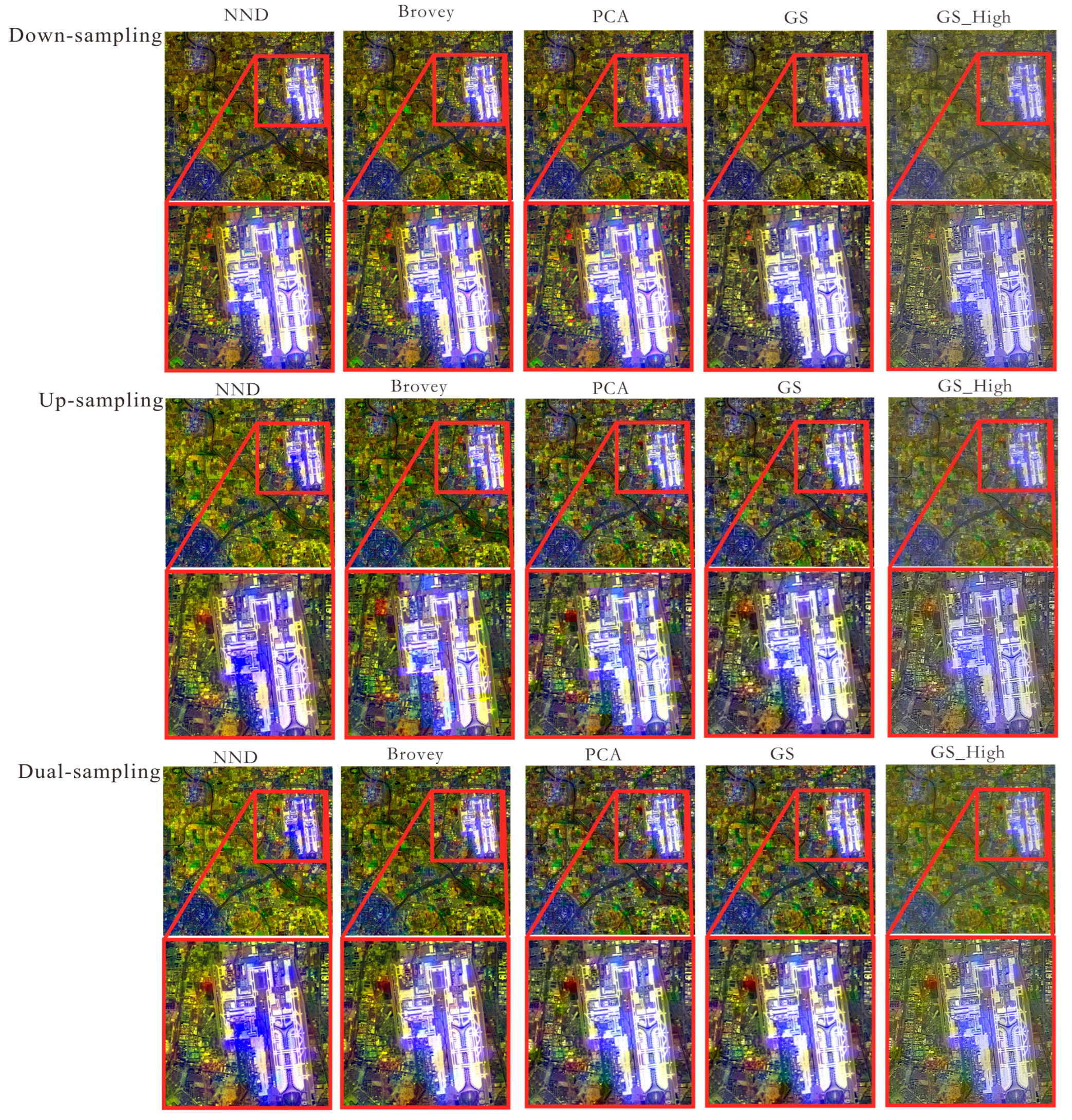

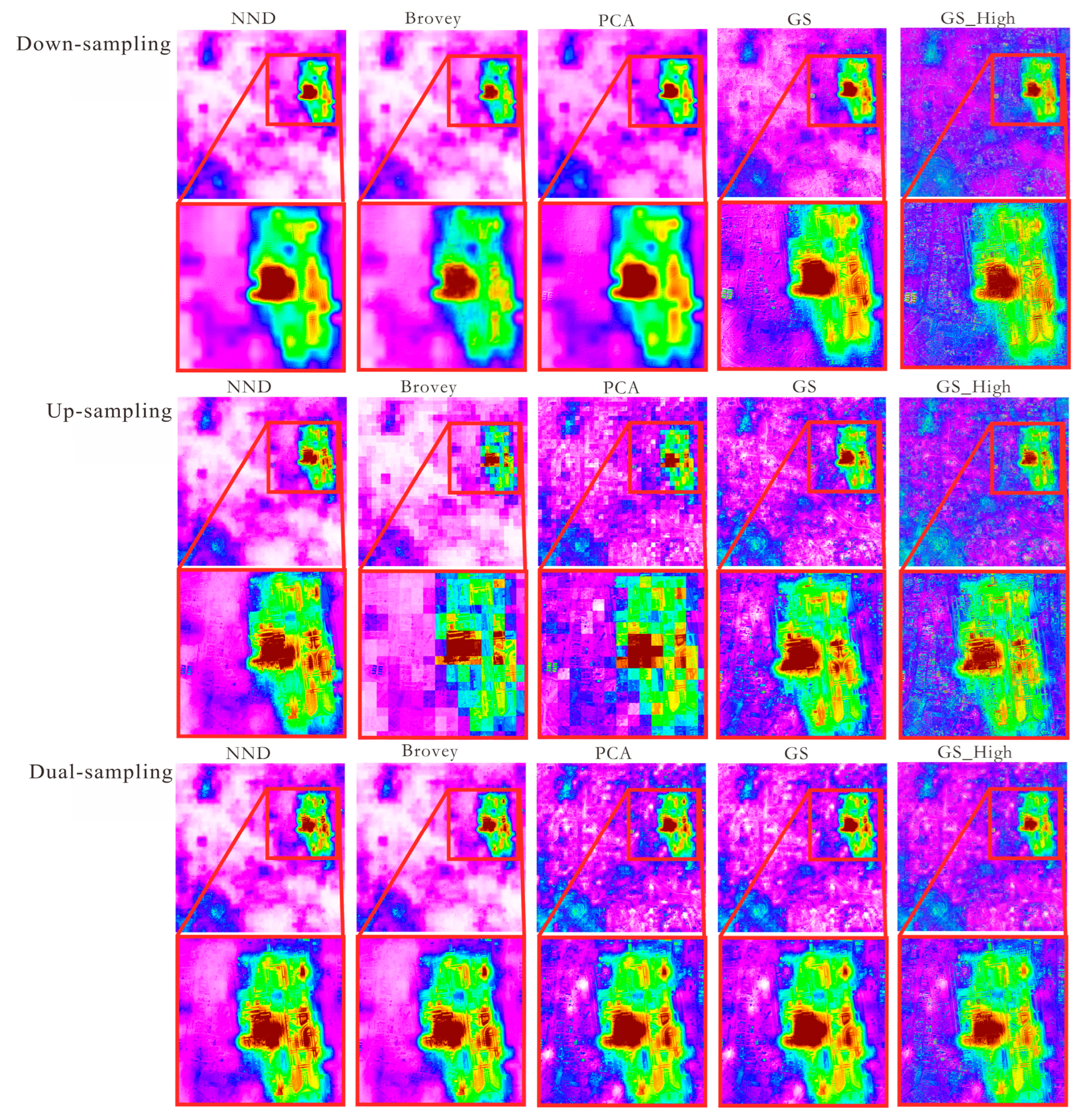

3.2. Sampling Comparison Method

- (1) Down-sampling multispectral image

- (2) Up-sampling adjustment

- (3) Dual-sampling multispectral image

3.3. Image Quality Evaluation

- (1) Subjective Evaluation

- (2) Objective Evaluation

4. Dual-Sampling Adjustment to Generate Color Nighttime Light Remote Sensing Imagery

4.1. Research Data Analysis

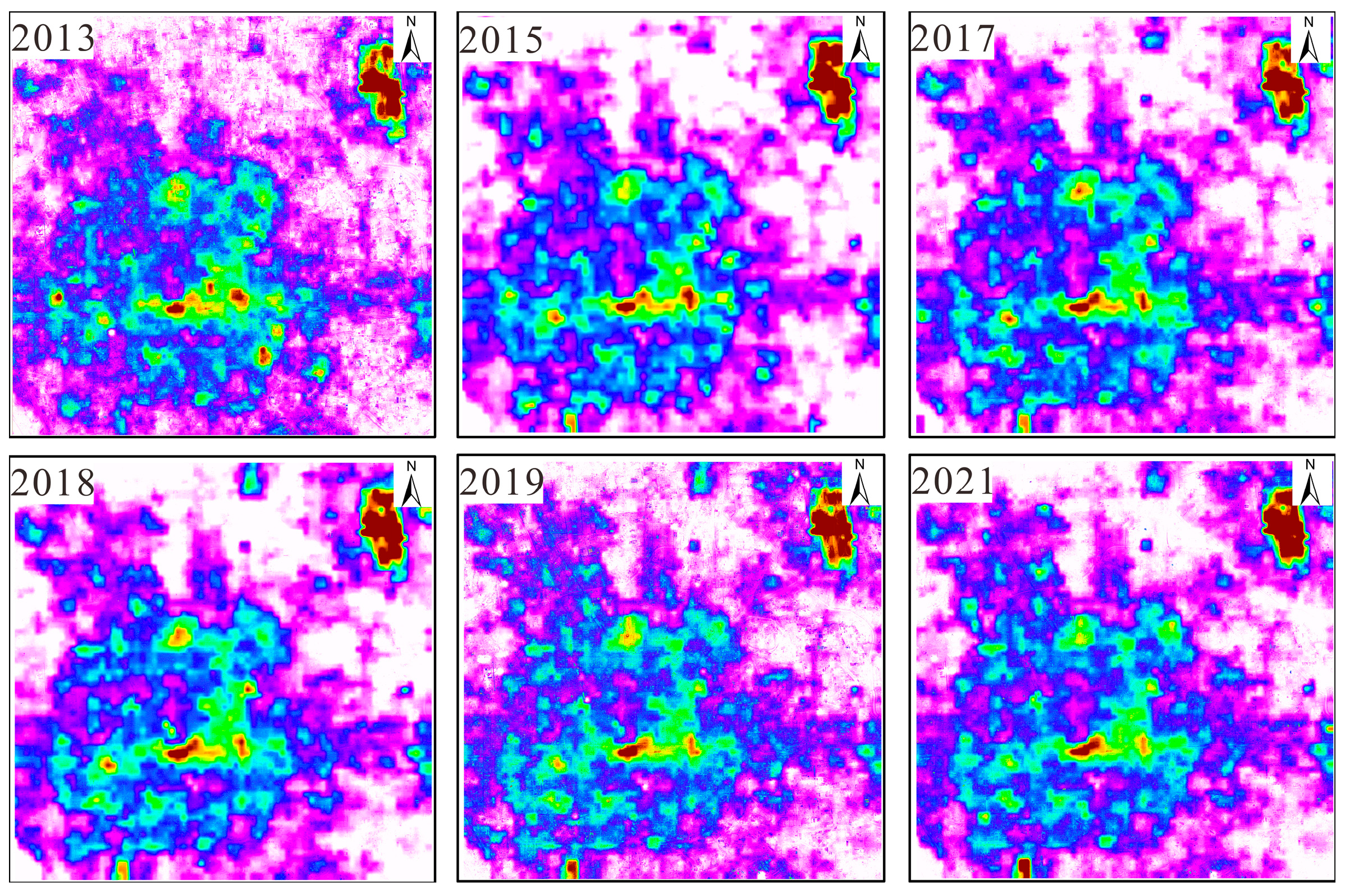

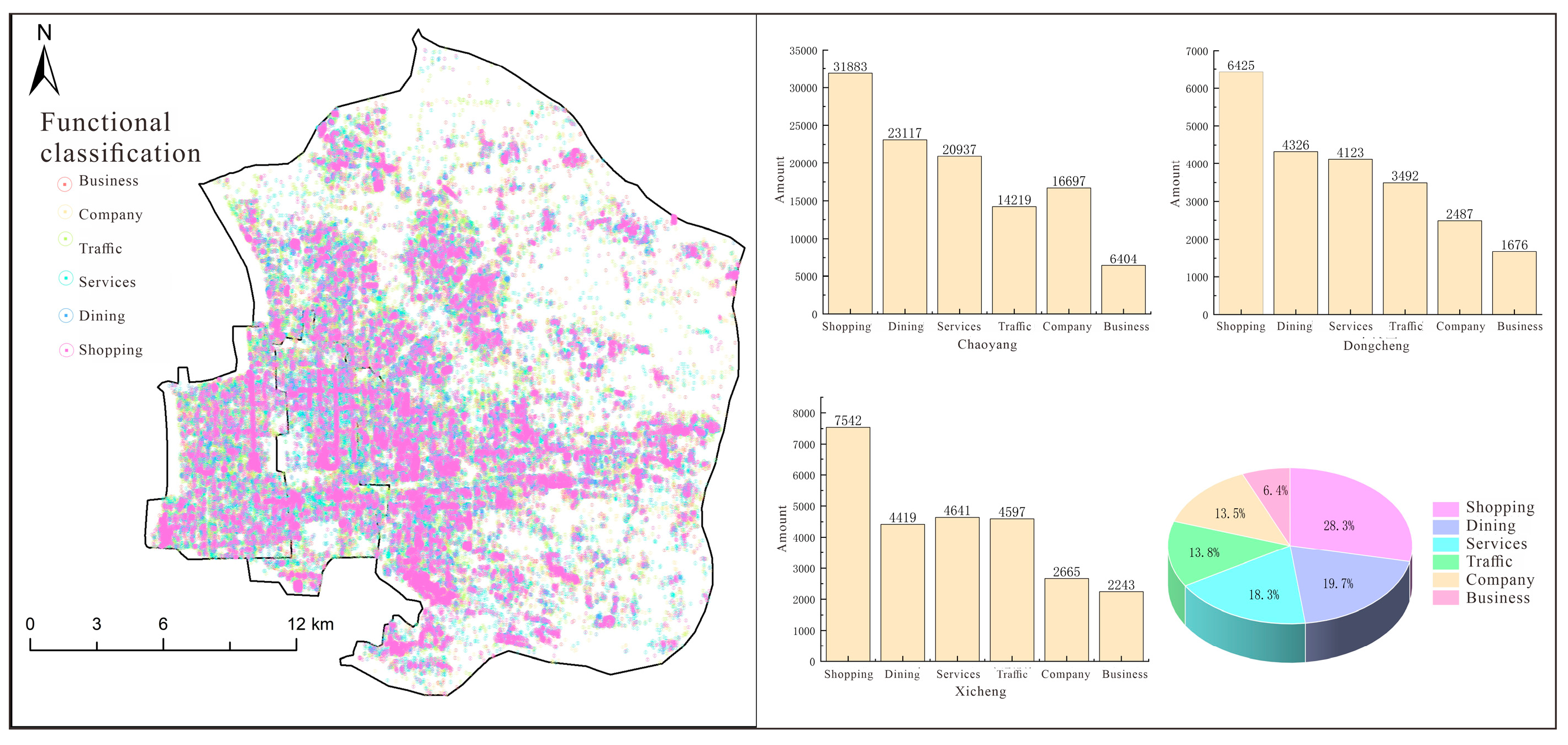

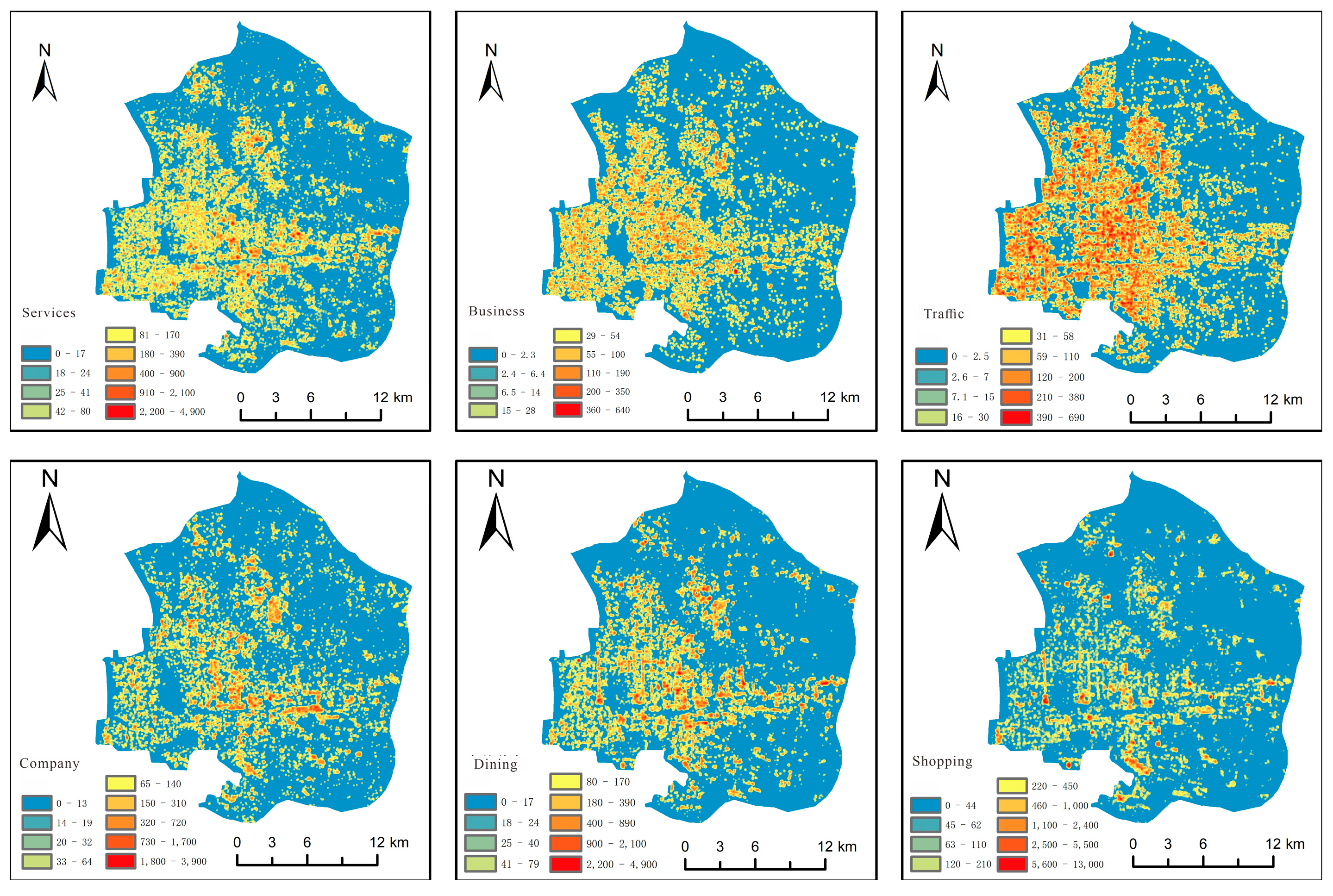

4.2. Kernel Density Analysis of POIs in Urban Functional Areas

4.3. Urban Functional Correlation Analysis Based on Color Light Values

5. Conclusions

- (1) The dual-sampling adjustment method generates color nighttime light imagery with a spatial resolution improved from 500 m to 15 m and spectral content expanded from a single band to three bands (red, green, blue). This approach retains the nighttime light distribution while incorporating daytime surface features, significantly improving the comprehensive performance of nighttime light remote sensing imagery.

- (2) Subjective and objective evaluations demonstrate that the dual-sampling adjustment image, particularly its third band, achieves the best performance in indicators such as MEAN, STD, EN, CC, and PSNR. These results confirm the method’s superiority in preserving spatial textures, enhancing information capacity, and maintaining spectral fidelity.

- (3) Urban functional type analysis reveals that the enhanced color nighttime light remote sensing imagery accurately captures urban spatial features. The brightness of color nighttime light exhibits the strongest correlation with businesses, followed by companies, providing novel data support for dynamic monitoring of urban functions.

6. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Combs, C.L.; Miller, S.D. A review of the far-reaching usage of low-light nighttime data. Remote Sens. 2023, 15, 623.

2. Zheng, Y.; Fan, M.; Cai, Y.; Fu, M.; Yang, K.; Wei, C. Spatiotemporal pattern evolution of carbon emissions at the city-county-town scale in Fujian Province based on DMSP/OLS and NPP/VIIRS nighttime light data. J. Clean. Prod. 2024, 442, 140958.

3. Yu, B.L.; Wang, C.X.; Gong, W.K.; Chen, Z.; Shi, K.; Wu, B.; Hong, Y.; Li, Q.; Wu, J. Nighttime light remote sensing and urban studies: Data, methods, applications, and prospects. Natl. Remote Sens. Bull. 2021, 25, 342–364.

4. Li, X.; Cao, H.R.; Gong, Y. Turkey-Syria Earthquake Assessment Using High-Resolution Night-time Light Images. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 1697–1705.

5. Ye, Y.; Tong, C.; Dong, B.; Huang, C.; Bao, H.; Deng, J. Alleviate light pollution by recognizing urban night-time light control area based on computer vision techniques and remote sensing imagery. Ecol. Indic. 2024, 158, 111591.

6. Lin, Y.; Gao, C.; Zhang, T.H. Analysis of Russia-Ukraine Conflicts Situation and Its Economic Impact Using Night-Time Light Remote Sensing. J. Tongji Univ. (Nat. Sci.) 2024, 52, 1975–1984.

7. Li, W.; Wu, M.; Niu, Z. Spatialization and Analysis of China’s GDP Based on NPP/VIIRS Data from 2013 to 2023. Appl. Sci. 2024, 14, 8599.

8. Yu, L.; Zheng, Y.; Jiang, J.Q. Development and Innovative Applications of Night Light Remote Sensing Satellites. Satell. Appl. 2024, 4, 46–53.

9. Zheng, Q.M.; Weng, Q.H.; Huang, L.Y.; Wang, K.; Deng, J.; Jiang, R.; Ye, Z.; Gan, M. A new source of multi-spectral high spatial resolution night-time light imagery—JL1-3B. Remote Sens. Environ. 2018, 215, 300–312.

10. Watson, C.S.; Elliott, J.R.; Córdova, M.; Menoscal, J.; Bonilla-Bedoya, S. Evaluating night-time light sources and correlation with socio-economic development using high-resolution multi-spectral Jilin-1 satellite imagery of Quito, Ecuador. Int. J. Remote Sens. 2023, 44, 2691–2716.

11. Guk, E.; Levin, N. Analyzing spatial variability in night-time lights using a high spatial resolution color Jilin-1 image–Jerusalem as a case study. ISPRS J. Photogramm. Remote Sens. 2020, 163, 121–136.

12. Yan, L.; Hu, Y.; Dou, C.; Li, X.-M. Radiometric Calibration of SDGSAT-1 Nighttime Light Payload. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1000715.

13. Guo, H.; Dou, C.; Chen, H.; Liu, J.; Fu, B.; Li, X.; Zou, Z.; Liang, D. SDGSAT-1: The world’s first scientific satellite for sustainable development goals. Sci. Bull. 2023, 68, 34–38.

14. Guo, B.; Hu, D.; Zheng, Q. Potentiality of SDGSAT-1 glimmer imagery to investigate the spatial variability in nighttime lights. Int. J. Appl. Earth Obs. Geoinf. 2023, 119, 103313.

15. Zhang, D.; Cheng, B.; Shi, L.; Gao, J.; Long, T.; Chen, B.; Wang, G. A destriping algorithm for SDGSAT-1 nighttime light images based on anomaly detection and spectral similarity restoration. Remote Sens. 2022, 14, 5544.

16. Li, S.T.; Li, C.Y.; Kang, X.D. Development status and future prospects of multi-source remote sensing image fusion. Natl. Remote Sens. Bull. 2021, 25, 148–166.

17. Wang, X.; Hua, Z.; Li, J. Multi-focus image fusion framework based on transformer and feedback mechanism. Ain Shams Eng. J. 2023, 14, 101978.

18. Vivone, G. Multispectral and hyperspectral image fusion in remote sensing: A survey. Inf. Fusion 2023, 89, 405–417.

19. Tang, Y.L.; Huang, D.S.; Chen, S.L.; Chen, P.M. SFIM Image Fusion Method Combining IHS and Adaptive Filtering. Comput. Mod. 2023, 9, 70.

20. Lu, Y.; Zhou, G.; Huang, M.; Huang, Y. Color Night Light Remote Sensing Images Generation Using Dual-Transformation. Sensors 2024, 24, 294.

21. Karim, S.; Tong, G.; Li, J.; Qadir, A.; Farooq, U.; Yu, Y. Current advances and future perspectives of image fusion: A comprehensive review. Inf. Fusion 2023, 90, 185–217.

22. Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

23. Zhong, X.Y.; Yan, Q.W.; Li, G.E. Development of a long time series nighttime light dataset for China (2000–2020). J. Glob. Change Data Discov. 2022, 6, 416–424.

24. Javan, F.D.; Samadzadegan, F.; Mehravar, S.; Toosi, A.; Khatami, R.; Stein, A. A review of image fusion techniques for pan-sharpening of high-resolution satellite imagery. ISPRS J. Photogramm. Remote Sens. 2021, 171, 101–117.

25. Zhang, S.; Han, Y.; Wang, H.; Hou, D. Gram-Schmidt Remote Sensing Image Fusion Algorithm Based on Matrix Elementary Transformation. J. Phys. Conf. Ser. 2022, 2410, 012013.

26. Sekrecka, A.; Kedzierski, M.; Wierzbicki, D. Pre-processing of panchromatic images to improve object detection in pansharpened images. Sensors 2019, 19, 5146.

27. Ablin, R.; Sulochana, C.; Prabin, G. An investigation in satellite images based on image enhancement techniques. Eur. J. Remote Sens. 2020, 53, 86–94.

28. Zhang, J.; Zhao, X. Using POI and multisource satellite datasets for mainland China’s population spatialization and spatiotemporal changes based on regional heterogeneity. Sci. Total Environ. 2024, 912, 169499.

29. Zhang, X.; Xie, Y.; Jiao, J. How to accurately assess the spatial distribution of energy CO2 emissions? Based on POI and NPP-VIIRS comparison. J. Clean. Prod. 2023, 402, 136656.

30. Wang, Y.; Li, Y.; Song, X.; Zou, X.; Xiao, J. Correlation Analysis between NPP-VIIRS Nighttime Light Data and POIs Data—A Comparison Study in Different Districts and Counties of Nanchang. IOP Conf. Ser. Earth Environ. Sci. 2021, 693, 012103.

| Evaluation Metric | Description |
|---|---|
| MEAN | Reflects the overall brightness level of the image, characterizing the distribution of sensitivity. |
| STD | Represents color contrast by quantifying pixel value dispersion; higher values indicate stronger contrast. |
| EN | Measures the richness and integrity of spectral information; higher entropy denotes greater informational diversity. |
| AG | Evaluates detail clarity, with higher gradients corresponding to sharper edges and textures. |
| CC | Assesses spectral consistency between the image and a reference; values approaching 1 indicate superior fidelity. |
| SD | Quantifies spectral distortion severity; lower values signify enhanced fidelity. |
| PSNR | Integrates spectral fidelity and detail preservation; higher PSNR reflects improved image quality. |
| SSIM | Holistically evaluates brightness, contrast, and texture in comparison to a reference image; values closer to 1 denote higher alignment. |
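As a rough illustration of how several of the tabulated metrics are commonly computed, the sketch below implements MEAN, STD, EN, CC, and PSNR for a single band flattened to a list of pixel values. The formulas are the textbook definitions (population standard deviation, Shannon entropy in bits, Pearson correlation, PSNR from mean squared error) and are an assumption; the paper's exact definitions, bit depth, and normalization are not given in this text. The function names and sample pixel values are hypothetical.

```python
import math
from collections import Counter
from typing import Sequence


def band_mean(pixels: Sequence[float]) -> float:
    """MEAN: average pixel value, a proxy for overall brightness."""
    return sum(pixels) / len(pixels)


def band_std(pixels: Sequence[float]) -> float:
    """STD: population standard deviation of pixel values."""
    mu = band_mean(pixels)
    return math.sqrt(sum((p - mu) ** 2 for p in pixels) / len(pixels))


def band_entropy(pixels: Sequence[float]) -> float:
    """EN: Shannon entropy (bits) of the pixel value histogram."""
    total = len(pixels)
    counts = Counter(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def correlation(fused: Sequence[float], reference: Sequence[float]) -> float:
    """CC: Pearson correlation between a fused band and a reference band."""
    mf, mr = band_mean(fused), band_mean(reference)
    cov = sum((f - mf) * (r - mr) for f, r in zip(fused, reference))
    var_f = sum((f - mf) ** 2 for f in fused)
    var_r = sum((r - mr) ** 2 for r in reference)
    return cov / math.sqrt(var_f * var_r)


def psnr(fused: Sequence[float], reference: Sequence[float],
         peak: float = 255.0) -> float:
    """PSNR in dB; peak=255 assumes 8-bit data (an assumption)."""
    mse = sum((f - r) ** 2 for f, r in zip(fused, reference)) / len(fused)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


# Illustrative pixel values only; not data from the paper.
fused_band = [10, 20, 30, 40]
reference_band = [12, 18, 33, 37]

metrics = {
    "MEAN": band_mean(fused_band),
    "STD": band_std(fused_band),
    "EN": band_entropy(fused_band),
    "CC": correlation(fused_band, reference_band),
    "PSNR": psnr(fused_band, reference_band),
}
```

Reference-based metrics (CC, PSNR) depend on which image is taken as the reference, so values are only comparable across methods when the same reference band is used.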


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Y.; Lu, Y.; Zhang, L.; Yin, M. Enhanced Color Nighttime Light Remote Sensing Imagery Using Dual-Sampling Adjustment. Sensors 2025, 25, 2002. https://doi.org/10.3390/s25072002
