Transforming Prediction into Decision: Leveraging Transformer-Long Short-Term Memory Networks and Automatic Control for Enhanced Water Treatment Efficiency and Sustainability

Abstract

1. Introduction

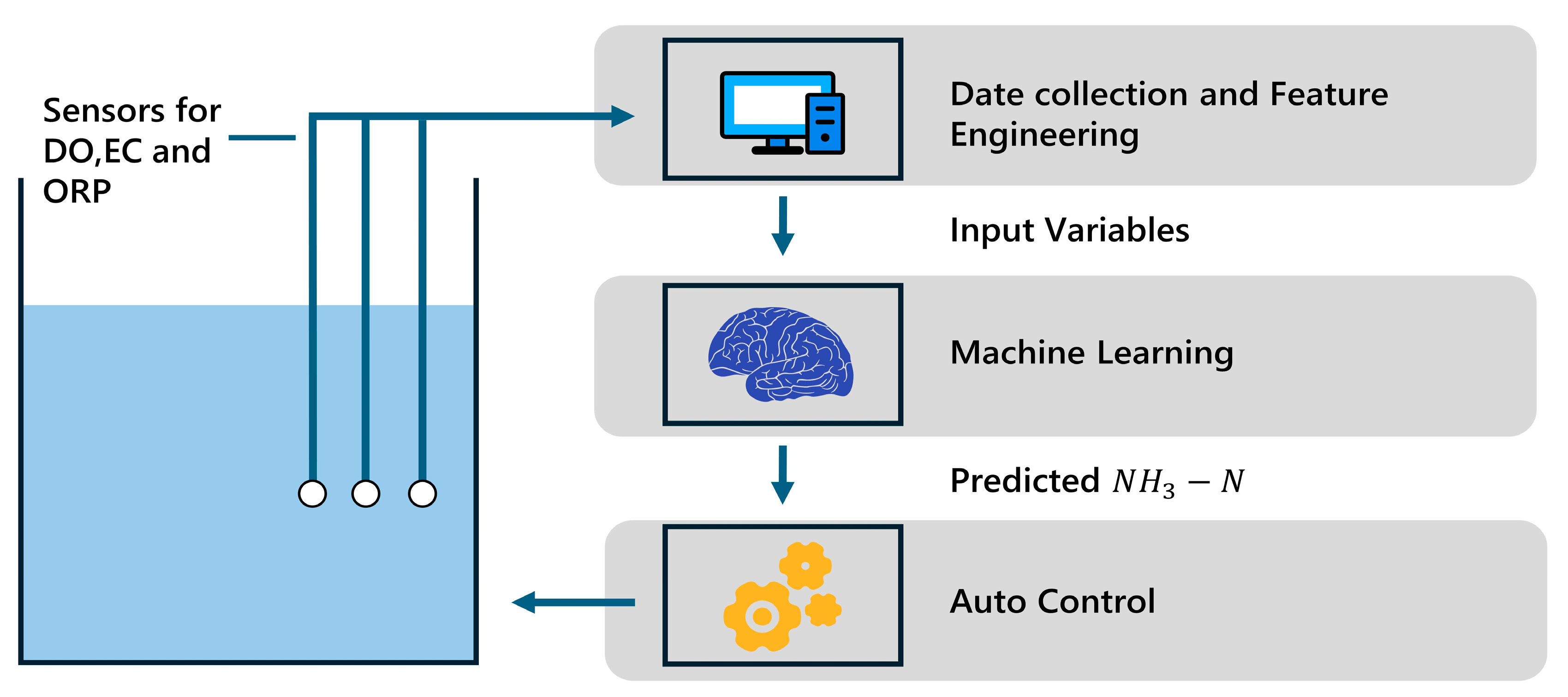

2. Methodology and Application

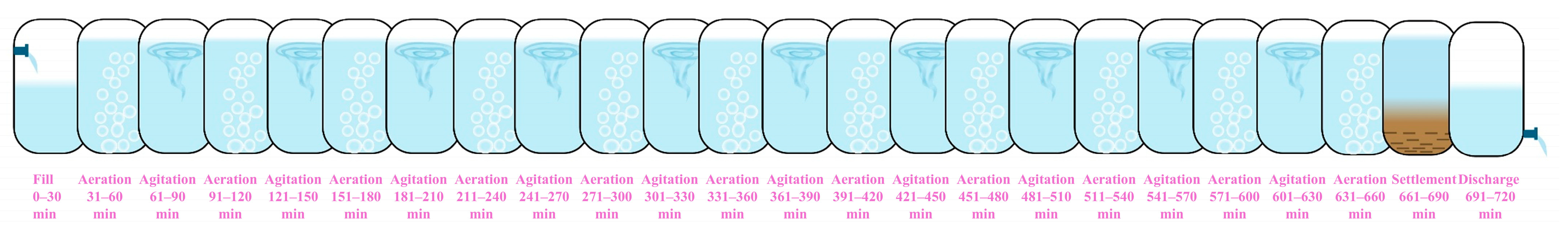

2.1. Structure of SBR

2.2. Control

2.3. Monitoring

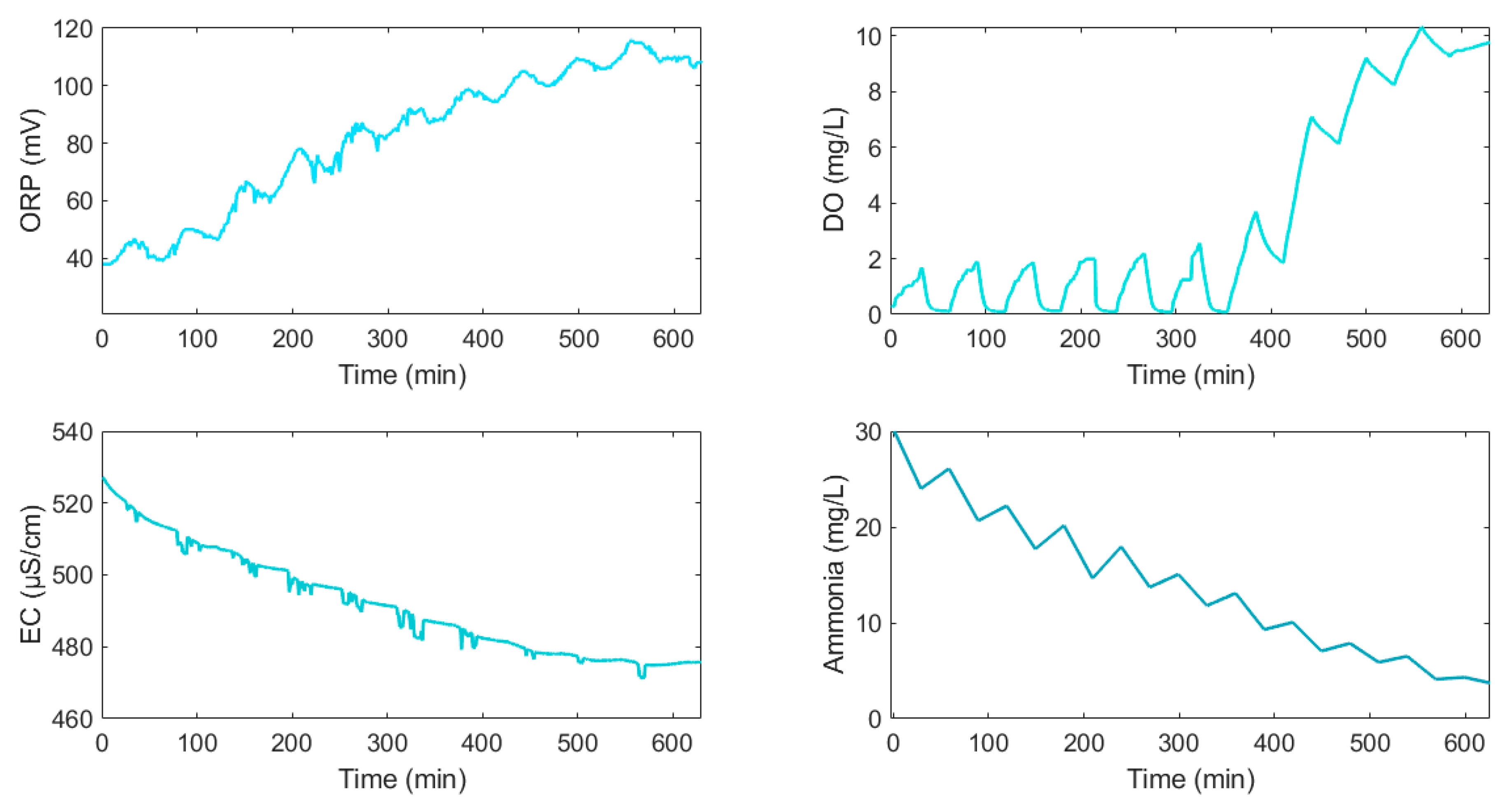

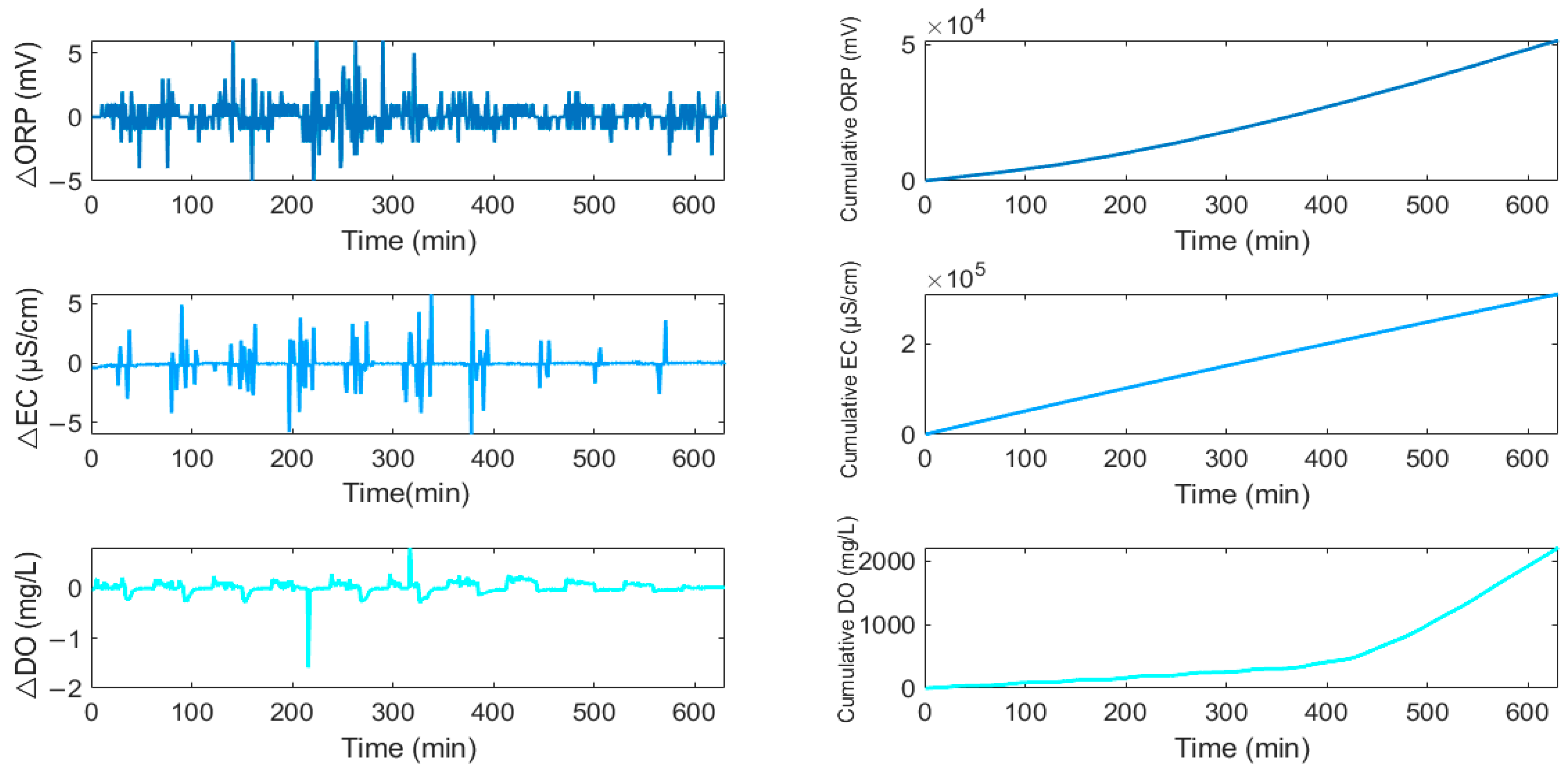

2.4. Assessed Input Variables

2.5. Model Training and Evaluation

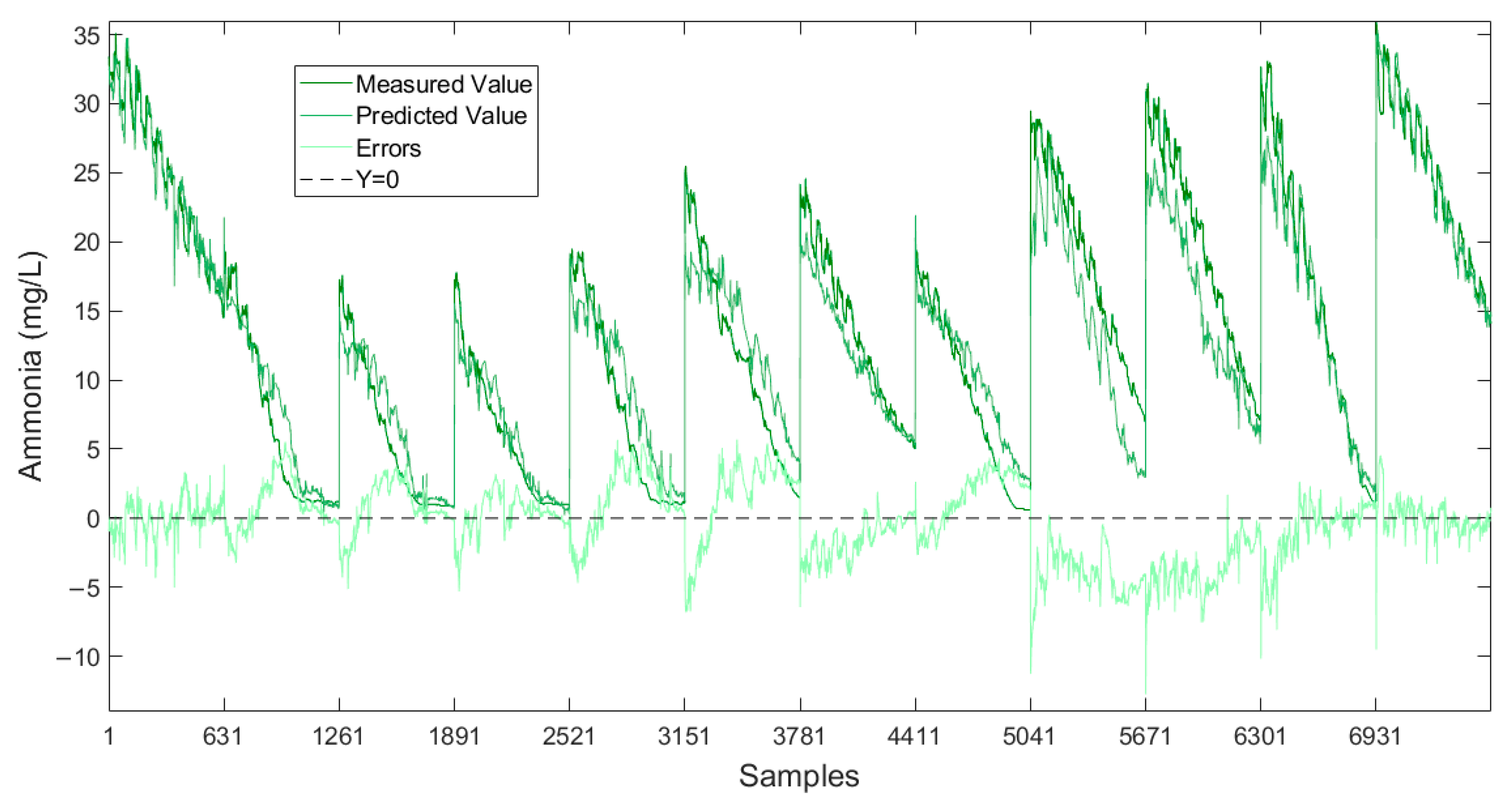

3. Results and Analysis

3.1. Comparison of Models

- (1)

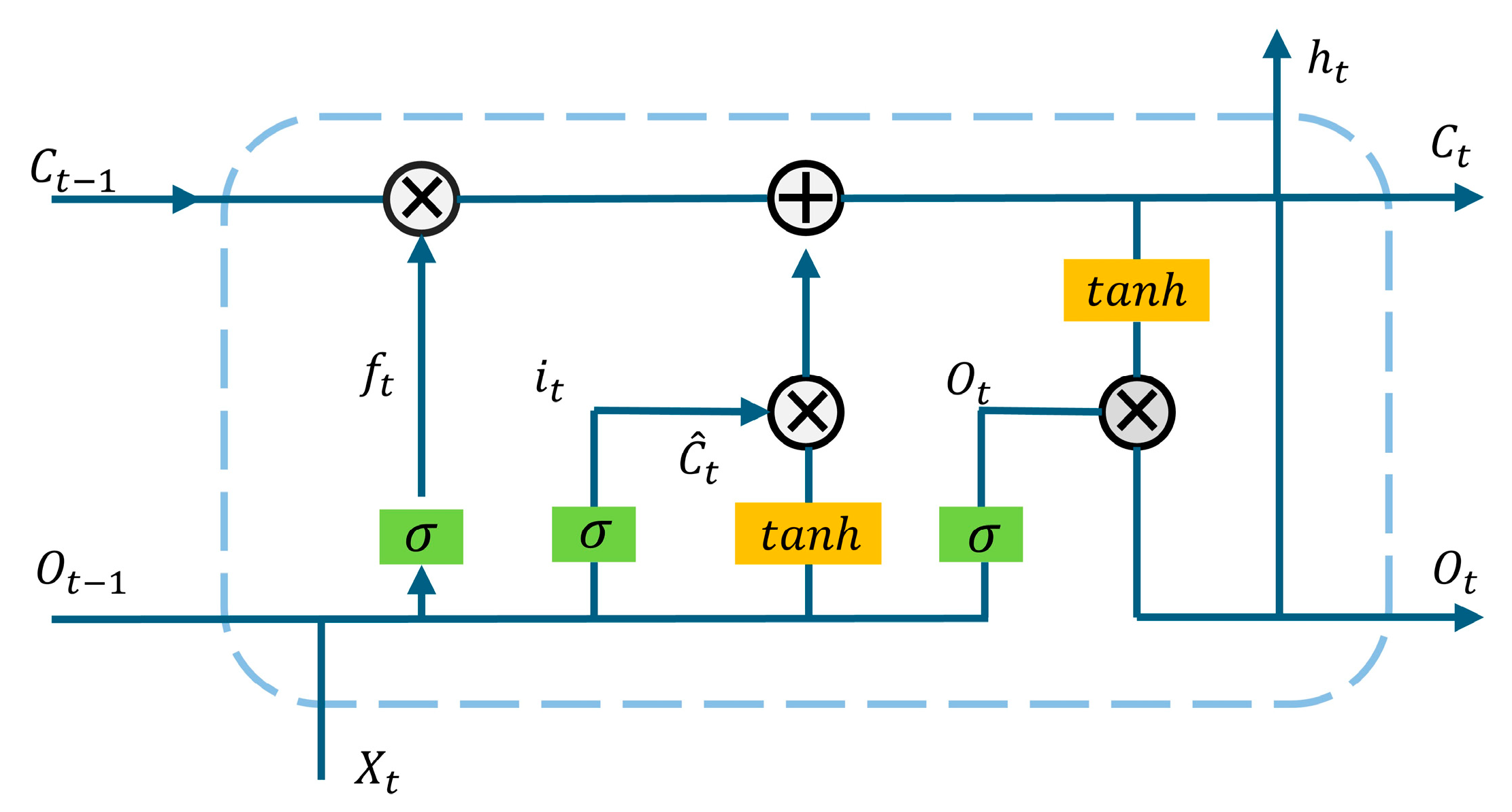

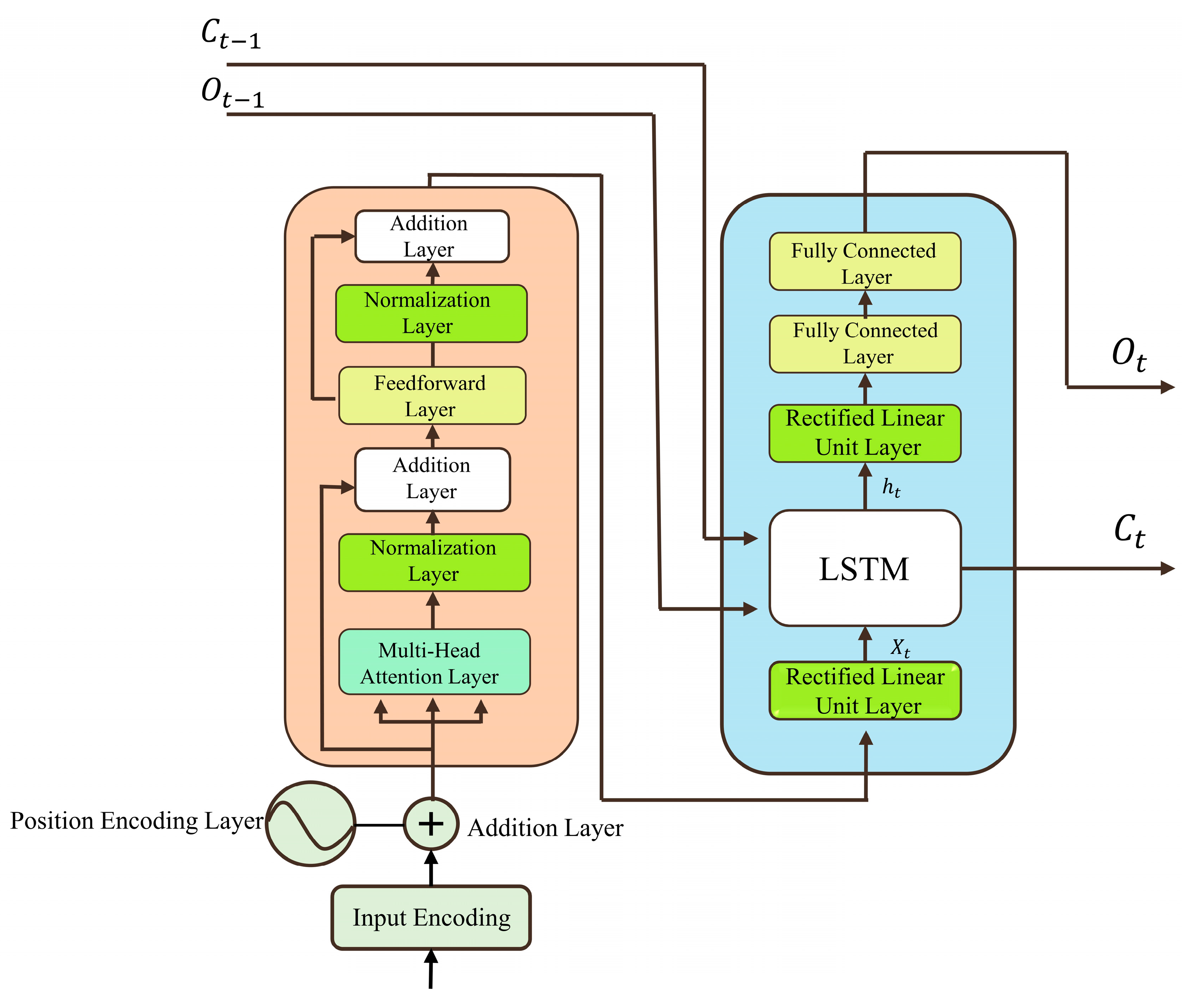

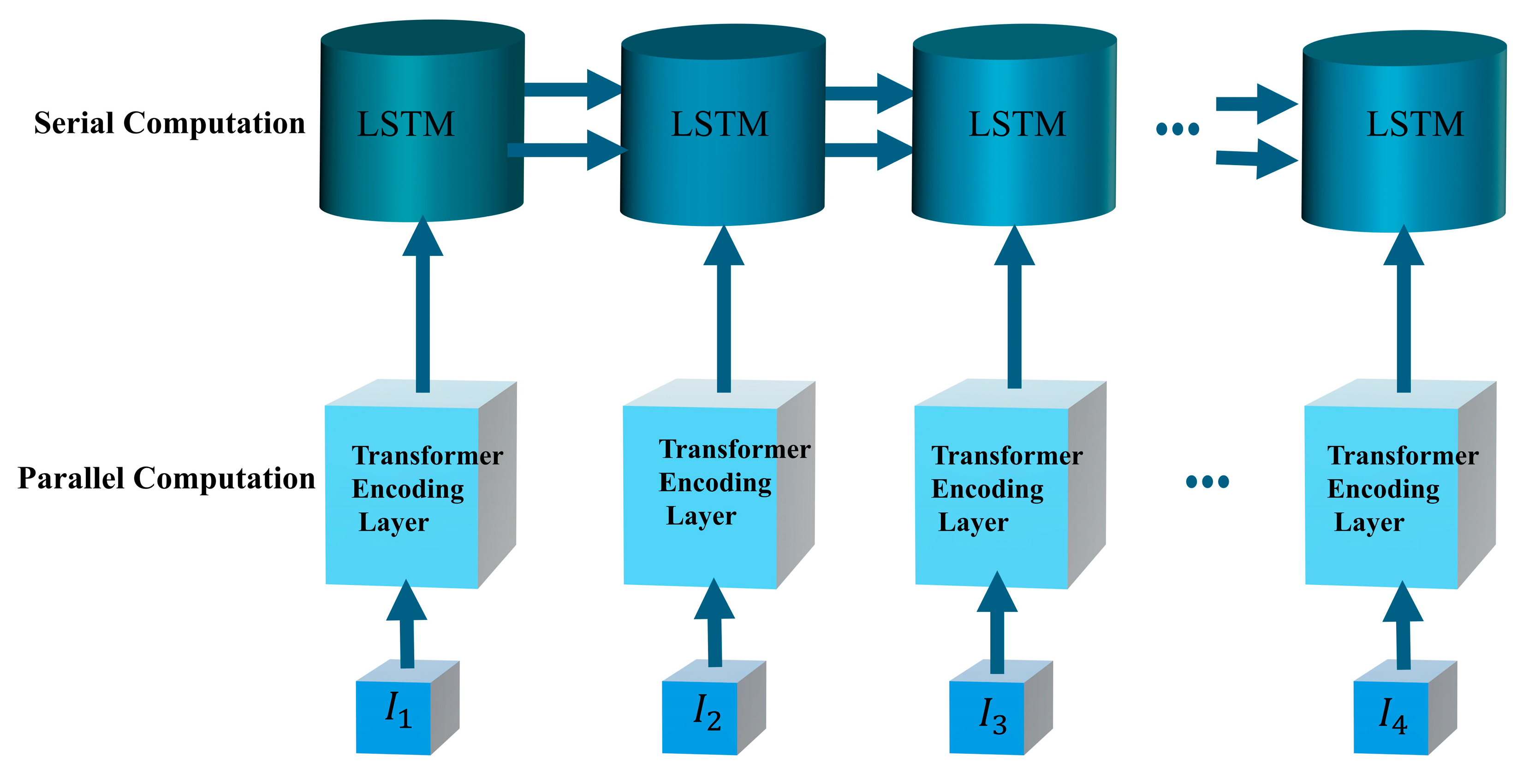

- Model Synergy: While the Transformer focuses on parallel data processing, attending to specific data parts to ensure feature retention and enhance prediction accuracy [31], LSTM handles sequential data processing, capturing overall data trends to ensure sufficient feature coverage and improve prediction precision. By combining these models, their complementary strengths synergistically collaborate, particularly beneficial for tasks requiring simultaneous consideration of sequence and context comprehension [32].

- (2) Model Fusion: Fusion provides a robust alternative for model integration. The predictions from the Transformer and the LSTM are combined through a fusion mechanism, such as weighted averaging or feature concatenation. This process draws on the strengths of both models and yields a more comprehensive representation of the data, which improves predictive accuracy [33]. Because fusion techniques are adaptable, they allow nuanced integration and can potentially outperform conventional ensemble methods [34].

- (3) Mitigating Overfitting: Combining the Transformer and LSTM models reduces the risk of overfitting by drawing on diverse, complementary sources of information. This improves model generalization and enables more accurate predictions on unseen data. By integrating global and sequential information, the stacked models achieve robust performance across various tasks and datasets.
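
The two fusion mechanisms named in item (2), weighted averaging and feature concatenation, can be sketched as follows. This is only a minimal illustration and not the authors' implementation: the function names `weighted_average_fusion` and `concat_fusion`, the weight `w`, and all sample values are hypothetical.

```python
from typing import List, Sequence


def weighted_average_fusion(
    pred_transformer: Sequence[float],
    pred_lstm: Sequence[float],
    w: float = 0.5,
) -> List[float]:
    """Blend two prediction series; w is the (assumed) weight on the Transformer."""
    if len(pred_transformer) != len(pred_lstm):
        raise ValueError("prediction series must have the same length")
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    return [w * a + (1.0 - w) * b for a, b in zip(pred_transformer, pred_lstm)]


def concat_fusion(
    feat_transformer: Sequence[float],
    feat_lstm: Sequence[float],
) -> List[float]:
    """Join the two feature vectors end to end for a downstream regressor."""
    return list(feat_transformer) + list(feat_lstm)


# Hypothetical predictions from each model for three time steps.
fused = weighted_average_fusion([10.0, 20.0, 30.0], [12.0, 18.0, 34.0], w=0.25)

# Hypothetical feature vectors of different lengths.
joined = concat_fusion([0.1, 0.2], [0.3, 0.4, 0.5])
```

In practice the weight (or the layer that consumes the concatenated features) would be tuned on validation data; the fixed `w=0.25` here only makes the arithmetic easy to follow.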

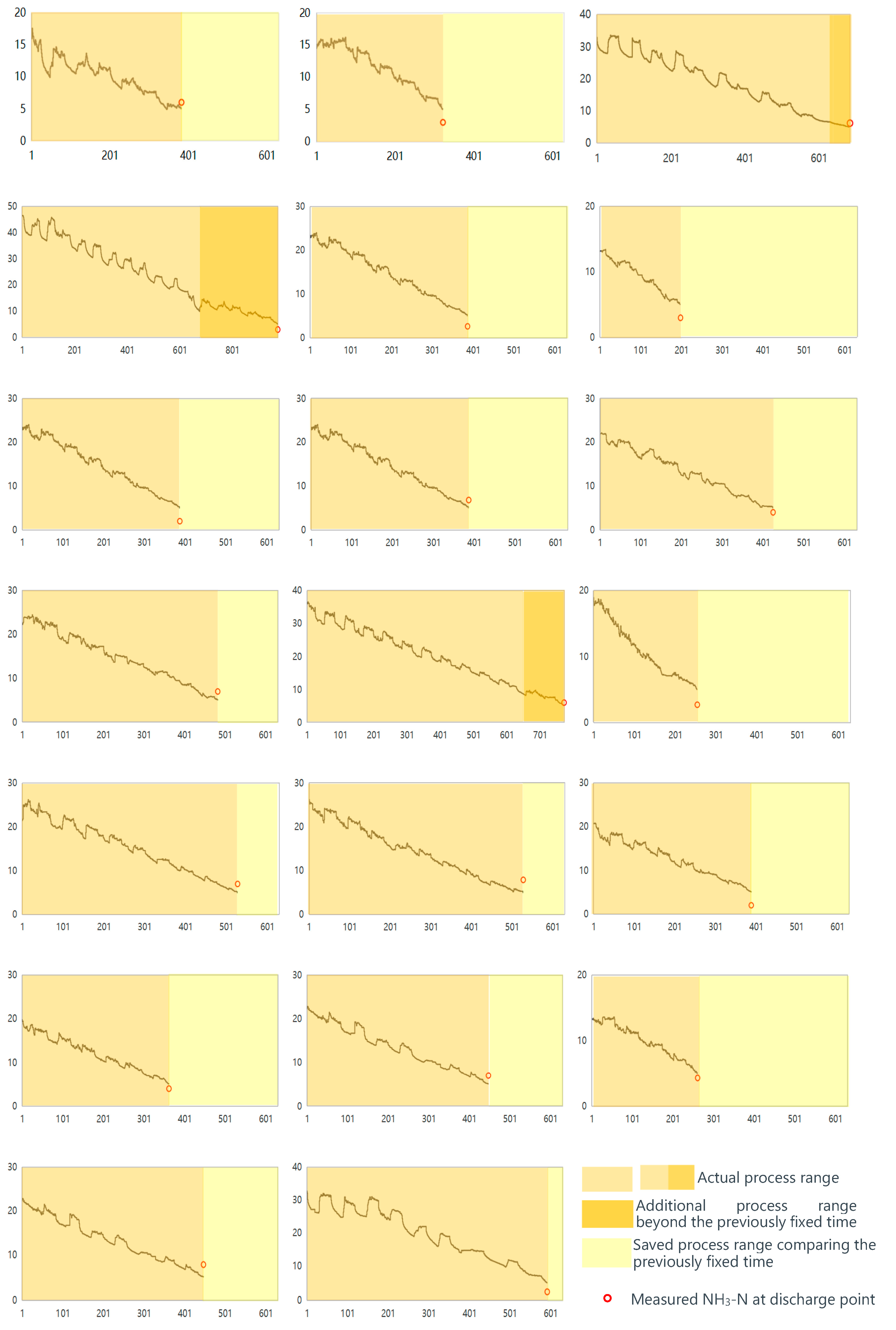

3.2. Processes Optimization by the Transformer-LSTM Network

3.3. Circuit Design for Automated SBR

- (1) Sensors

- (2) Data Acquisition Module

- (3) Transformer-LSTM Model

- (4) Control Algorithm

- (5) Actuators/Execution

- (6) Communication
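
One way the six components listed above could fit together in software is sketched below. It is a hedged illustration under stated assumptions, not the circuit or control logic of the paper: `Reading`, `control_step`, `run_cycle`, `toy_predict`, the threshold value, and the "stop when the predicted value falls to a threshold" rule are all hypothetical, and the 30-sample window simply reuses the maximum number of time steps reported for the model.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Reading:
    """One sample from the (1) sensors: EC, DO and ORP."""
    ec: float
    do: float
    orp: float


def control_step(
    window: Sequence[Reading],
    predict: Callable[[Sequence[Reading]], float],
    threshold: float,
) -> str:
    """(3)-(4): run the model on the acquired window and pick an action."""
    predicted = predict(window)
    return "stop_cycle" if predicted <= threshold else "continue"


def run_cycle(
    readings: Sequence[Reading],
    predict: Callable[[Sequence[Reading]], float],
    threshold: float,
    window_size: int = 30,
) -> List[str]:
    """(2) buffer readings, then (5) emit one actuator command per step."""
    buffer: List[Reading] = []
    commands: List[str] = []
    for reading in readings:
        buffer.append(reading)
        buffer = buffer[-window_size:]  # keep at most window_size samples
        command = control_step(buffer, predict, threshold)
        commands.append(command)  # (6) a real system would transmit this
        if command == "stop_cycle":
            break
    return commands


def toy_predict(window: Sequence[Reading]) -> float:
    """Stand-in for the Transformer-LSTM: a toy rule based on mean DO."""
    return 100.0 - 10.0 * sum(r.do for r in window) / len(window)


samples = [Reading(ec=800.0, do=d, orp=50.0) for d in (1.0, 3.0, 6.0, 8.0)]
commands = run_cycle(samples, toy_predict, threshold=60.0)
```

Passing the predictor in as a callable keeps the control logic independent of the model, so a trained network could replace `toy_predict` without changing the loop.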

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Circuit Diagram of Automatic Control of SBR

References

1. Zhao, W.; Meng, F. Efficiency Measurement and Influencing Factors Analysis of Green Technology Innovation in Industrial Enterprises Based on DEA-Tobit Model: A Case Study of Anhui Province. J. Educ. Humanit. Soc. Sci. 2023, 16, 256–266.

2. Rahaoui, K.; Khayyam, H.; Ve, Q.L.; Akbarzadeh, A.; Date, A. Renewable Thermal Energy Driven Desalination Process for a Sustainable Management of Reverse Osmosis Reject Water. Sustainability 2021, 13, 10860.

3. Duan, W.; Gunes, M.; Baldi, A.; Gich, M.; Fernández-Sánchez, C. Compact fluidic electrochemical sensor platform for on-line monitoring of chemical oxygen demand in urban wastewater. Chem. Eng. J. 2022, 449, 137837.

4. Cairone, S.; Hasan, S.W.; Choo, K.H.; Lekkas, D.F.; Fortunato, L.; Zorpas, A.A.; Korshin, G.; Zarra, T.; Belgiorno, V.; Naddeo, V. Revolutionizing wastewater treatment toward circular economy and carbon neutrality goals: Pioneering sustainable and efficient solutions for automation and advanced process control with smart and cutting-edge technologies. J. Water Process Eng. 2024, 63, 105486.

5. Li, W.; Li, Y.; Li, D.; Zhou, J. A Multivariable Probability Density-Based Auto-Reconstruction Bi-LSTM Soft Sensor for Predicting Effluent BOD in Wastewater Treatment Plants. Sensors 2024, 23, 7508.

6. Li, W.; Zhao, Y.; Zhu, Y.; Dong, Z.; Wang, F.; Huang, F. Research progress in water quality prediction based on deep learning technology: A review. Environ. Sci. Pollut. Res. Int. 2024, 31, 26415–26431.

7. Liu, T.; Zhang, H.; Wu, J.; Liu, W.; Fang, Y. Wastewater treatment process enhancement based on multi-objective optimization and interpretable machine learning. J. Environ. Manag. 2024, 364, 121430.

8. Chang, P.; Meng, F. Fault Detection of Urban Wastewater Treatment Process Based on Combination of Deep Information and Transformer Network. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 8124–8133.

9. Li, D.; Yang, C.; Li, Y. A multi-subsystem collaborative Bi-LSTM-based adaptive soft sensor for global prediction of ammonia-nitrogen concentration in wastewater treatment processes. Water Res. 2024, 254, 121347.

10. Zhang, Y.; Li, C.; Jiang, Y.; Sun, L.; Zhao, R.; Yan, K.; Wang, W. Accurate prediction of water quality in urban drainage network with integrated EMD-LSTM model. J. Clean. Prod. 2022, 354, 131724.

11. Wang, J.; Xue, B.; Wang, Y.; A, Y.; Wang, G.; Han, D. Identification of pollution source and prediction of water quality based on deep learning techniques. J. Contam. Hydrol. 2024, 261, 104287.

12. Ruan, J.; Cui, Y.; Song, Y.; Mao, Y. A novel RF-CEEMD-LSTM model for predicting water pollution. Sci. Rep. 2023, 13, 20901.

13. Bi, J.; Chen, Z.; Yuan, H.; Zhang, J. Accurate water quality prediction with attention-based bidirectional LSTM and encoder–decoder. Expert Syst. Appl. 2024, 238, 121807.

14. Yao, J.; Chen, S.; Ruan, X. Interpretable CEEMDAN-FE-LSTM-transformer hybrid model for predicting total phosphorus concentrations in surface water. J. Hydrol. 2024, 629, 130609.

15. Jaffari, Z.H.; Abbas, A.; Kim, C.M.; Shin, J.; Kwak, J.; Son, C.; Lee, Y.G.; Kim, S.; Chon, K.; Cho, K.H. Transformer-based deep learning models for adsorption capacity prediction of heavy metal ions toward biochar-based adsorbents. J. Hazard. Mater. 2023, 462, 132773.

16. Yu, M.; Masrur, A.; Blaszczak-Boxe, C. Predicting hourly PM2.5 concentrations in wildfire-prone areas using a SpatioTemporal Transformer model. Sci. Total Environ. 2023, 860, 160446.

17. Zhang, Z.; Zhang, S.; Zhao, X.; Chen, L.; Yao, J. Temporal Difference-Based Graph Transformer Networks for Air Quality PM2.5 Prediction: A Case Study in China. Front. Environ. Sci. 2022, 10, 924986.

18. Wu, T.; Gao, X.; An, F.; Sun, X.; An, H.; Su, Z.; Gupta, S.; Gao, J.; Kurths, J. Predicting multiple observations in complex systems through low-dimensional embeddings. Nat. Commun. 2024, 15, 2242.

19. Charlton-Perez, A.J.; Dacre, H.F.; Driscoll, S.; Gray, S.L.; Harvey, B.; Harvey, N.J.; Hunt, K.M.R.; Lee, R.W.; Swaminathan, R.; Vandaele, R.; et al. Do AI models produce better weather forecasts than physics-based models? A quantitative evaluation case study of Storm Ciarán. NPJ Clim. Atmos. Sci. 2024, 7, 93.

20. Zhang, W.; Zhao, J.; Quan, P.; Wang, J.; Meng, X.; Li, Q. Prediction of influent wastewater quality based on wavelet transform and residual LSTM. Appl. Soft Comput. 2023, 148, 110858.

21. Feng, Z.; Zhang, J.; Jiang, H.; Yao, X.; Qian, Y.; Zhang, H. Energy consumption prediction strategy for electric vehicle based on LSTM-transformer framework. Energy 2024, 302, 131780.

22. Cao, K.; Zhang, T.; Huang, J. Advanced hybrid LSTM-transformer architecture for real-time multi-task prediction in engineering systems. Sci. Rep. 2024, 14, 4890.

23. Korban, M.; Youngs, P.; Acton, S.T. A Semantic and Motion-Aware Spatiotemporal Transformer Network for Action Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 6055–6069.

24. Shayestegan, M.; Kohout, J.; Trnková, K.; Chovanec, M.; Mareš, J. Motion Tracking in Diagnosis: Gait Disorders Classification with a Dual-Head Attentional Transformer-LSTM. Int. J. Comput. Intell. Syst. 2023, 16, 98.

25. Sun, Y.; Lian, J.; Teng, Z.; Wei, Z.; Tang, Y.; Yang, L.; Gao, Y.; Wang, T.; Li, H.; Xu, M.; et al. COVID-19 diagnosis based on swin transformer model with demographic information fusion and enhanced multi-head attention mechanism. Expert Syst. Appl. 2024, 243, 122805.

26. Qiu, C.; Zhan, C.; Li, Q. Development and application of random forest regression soft sensor model for treating domestic wastewater in a sequencing batch reactor. Sci. Rep. 2023, 13, 9149.

27. Qiu, C.; Huang, F.Q.; Zhong, Y.J.; Wu, J.Z.; Li, Q.L.; Zhan, C.H.; Zhang, Y.F.; Wang, L. Comparative analysis and application of soft sensor models in domestic wastewater treatment for advancing sustainability. Environ. Technol. 2024, 1–22.

28. Wang, S.; Li, X.; Kou, X.; Zhang, J.; Zheng, S.; Wang, J.; Gong, J. Sequential Recommendation through Graph Neural Networks and Transformer Encoder with Degree Encoding. Algorithms 2021, 14, 263.

29. Zhang, Y.; Ragettli, S.; Molnar, P.; Fink, O.; Peleg, N. Generalization of an Encoder-Decoder LSTM model for flood prediction in ungauged catchments. J. Hydrol. 2022, 614, 128577.

30. Fox, S.; McDermott, J.; Doherty, E.; Cooney, R.; Clifford, E. Application of Neural Networks and Regression Modelling to Enable Environmental Regulatory Compliance and Energy Optimisation in a Sequencing Batch Reactor. Sustainability 2022, 14, 4098.

31. Hanif, M.; Mi, J. Harnessing AI for solar energy: Emergence of transformer models. Appl. Energy 2024, 369, 123541.

32. Huang, R.W.; Wang, Y.Q.; You, D.; Yang, W.H.; Deng, B.N.; Wang, F.; Zeng, Y.J.; Zhang, Y.Y.; Li, X. MOF and its derivative materials modified lithium–sulfur battery separator: A new means to improve performance. Rare Met. 2024, 43, 2418–2443.

33. Li, G.; Cui, Z.; Li, M.; Han, Y.; Li, T. Multi-attention fusion transformer for single-image super-resolution. Sci. Rep. 2024, 14, 10222.

34. Chai, J.; Pan, S.; Zhou, M.X.; Houck, K. Context-Based Multimodal Input Understanding in Conversational Systems. In Proceedings of the Fourth IEEE International Conference on Multimodal Interfaces, Pittsburgh, PA, USA, 16 October 2002.

35. Imen, S.; Croll, H.C.; McLellan, N.L.; Bartlett, M.; Lehman, G.; Jacangelo, J.G. Application of machine learning at wastewater treatment facilities: A review of the science, challenges, and barriers by level of implementation. Environ. Technol. Rev. 2023, 12, 493–516.

| References | Variables/Inputs | Targets/Outputs | Model Performance | Model |
|---|---|---|---|---|
| [8] | BOD, DO, NH3-N, etc. | Fault detection of urban wastewater treatment | Accuracy 99.7% | Transformer-LSTM |
| [9] | Readily biodegradable substrate, particulate inert organic matter, slowly biodegradable substrate, total suspended solids, flow rate, active autotrophic biomass, etc. | Ammonia-nitrogen concentration in wastewater treatment processes | RMSE 3.76, MAE 2.96, R² 80.94% | LSTM |
| [10] | Flow, velocity, liquid level, pH, conductivity | COD, BOD5, TP, TN, NH3-N in urban drainage | R² 0.961, 0.9384, 0.9575, 0.9441, 0.9502; RMSE 8.3112, 6.7795, 0.2691, 2.6239, 1.4894 | LSTM |
| [11] | COD, ammonium nitrogen, total nitrogen, total phosphorus | Future changes in water quality index | Prediction accuracy 85.85%, 47.15%, 85.66%, 89.07% | LSTM |
| [12] | DO, pH, and NH3-N | Water pollution trends | RMSE of the RF-CEEMD-LSTM model is reduced by 62.6%, 39.9%, and 15.5% compared with the LSTM, RF-LSTM, and CEEMD-LSTM models, respectively | LSTM |
| [13] | pH, TP | TN of a river in the Beijing–Tianjin–Hebei region of China | RMSE 0.2093, MAE 0.1552, R² 0.9552 | LSTM |
| [14] | Temperature, DO, EC, COD, TN | TP concentrations at the inlet of Taihu Lake, China | R² 0.37–0.87 | Transformer-LSTM |
| [15] | Adsorption conditions, pyrolysis conditions, elemental composition, biochar's physical properties | Heavy metal ions from wastewater | R² 0.98, RMSE 0.296, MAE 0.145 | Transformer |
| [16] | Meteorology, temporal trending variables, road traffic, wildfire perimeter, wildfire intensity | Hourly PM2.5 concentrations in wildfire-prone areas | RMSE 6.92 | Transformer |
| [17] | Dew point, temperature, pressure, combined wind direction, cumulated wind speed, cumulated hours of rain, cumulated hours of relative humidity | Hourly air PM2.5 | MAE 9 | Transformer |

| Input Variables | Description |
|---|---|
| EC | Raw electrical conductivity data |
| ΔEC | $\Delta EC = EC_i - EC_{i-1}$, the difference between the current and previous values |
| ECCum | Cumulative EC value, $EC_{Cum} = EC_1 + EC_2 + \dots + EC_i$ |
| DO | Raw dissolved oxygen data |
| ΔDO | $\Delta DO = DO_i - DO_{i-1}$, the difference between the current and previous values |
| DOCum | Cumulative DO value, $DO_{Cum} = DO_1 + DO_2 + \dots + DO_i$ |
| ORP | Raw oxidation-reduction potential (ORP) data |
| ΔORP | $\Delta ORP = ORP_i - ORP_{i-1}$, the difference between the current and previous values |
| ORPCum | Cumulative ORP value, $ORP_{Cum} = ORP_1 + ORP_2 + \dots + ORP_i$ |
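
The difference and cumulative inputs defined in the table above can be derived from a raw sensor series as in the following sketch. The function name `derive_features`, the sample readings, and the choice to set the first difference to 0.0 (the table does not say how the first sample is handled) are assumptions made for illustration.

```python
from itertools import accumulate
from typing import List, Sequence, Tuple


def derive_features(raw: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Return (raw, delta, cumulative) for each sample of one sensor signal.

    The first delta is set to 0.0 because no previous sample exists;
    this boundary choice is an assumption, not taken from the source.
    """
    deltas = [0.0] + [cur - prev for prev, cur in zip(raw, raw[1:])]
    cumulative = list(accumulate(raw))  # running sum X_1 + ... + X_i
    return list(zip(raw, deltas, cumulative))


# Hypothetical DO readings at four consecutive time steps.
do_features = derive_features([2.0, 2.5, 4.0, 3.5])
```

Applying the same function to the EC, DO, and ORP series gives the nine input variables listed in the table.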

| Model Component | Parameter | Setting | Description |
|---|---|---|---|
| Positional Encoding Layer | Dimension | 16 | Ensures the model can effectively learn the position information of the data. |
| Positional Encoding Layer | Maximum Time Steps | 30 | Handles the longest input sequence. |
| Transformer Encoder Layer | Number of Attention Heads | 4 | Enhances feature extraction capabilities. |
| Transformer Encoder Layer | Hidden Units per Layer | 32 | Ensures the model has sufficient expressiveness. |
| Transformer Encoder Layer | Number of Layers | 3 | Enhances model depth to capture complex patterns. |
| LSTM Decoder Layer | LSTM Units | 32 | Maintains consistency with the Transformer encoder complexity. |
| LSTM Decoder Layer | Dropout Rate | 0.2 | Mitigates overfitting. |
| Training Parameters | Optimizer | Adam | Uses the Adam optimizer. |
| Training Parameters | Learning Rate | 0.001 | Learning rate for the Adam optimizer. |
| Training Parameters | Loss Function | MSE | Based on Mean Squared Error (MSE). |
| Training Parameters | Training Epochs | 100 | Total number of training epochs. |
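
To make the positional encoding settings concrete, the sketch below builds a 30 × 16 encoding table. It assumes the standard sinusoidal scheme from the original Transformer architecture; the source only gives the dimension and the maximum number of time steps, so the encoding type and the function name `sinusoidal_encoding` are assumptions.

```python
import math
from typing import List

D_MODEL = 16    # "Dimension" from the table
MAX_STEPS = 30  # "Maximum Time Steps" from the table


def sinusoidal_encoding(max_steps: int, d_model: int) -> List[List[float]]:
    """Build a max_steps x d_model table of sine/cosine position codes."""
    table = []
    for pos in range(max_steps):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


# One row per time step; each row is added to that step's input embedding.
pe = sinusoidal_encoding(MAX_STEPS, D_MODEL)
```

A learned positional embedding of the same shape would be an equally plausible reading of the table.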

| Model | R² | RMSE | MAE | Hardware Requirements | Training Time (min) |
|---|---|---|---|---|---|
| Transformer-LSTM | 0.9255 | 2.6306 | 2.0430 | High-end GPU, 16 GB RAM | 8 |
| LSTM | 0.8433 | 4.9433 | 4.0442 | Standard CPU, 8 GB RAM | 5 |
| GRU | 0.8367 | 5.0476 | 4.2178 | Standard CPU, 8 GB RAM | 5 |
| Transformer | 0.7549 | 5.4464 | 4.5084 | High-end GPU, 16 GB RAM | 8 |
| RNN | 0.7667 | 5.8387 | 4.8763 | Standard CPU, 8 GB RAM | 5 |
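
For readers who want to reproduce this kind of comparison, the three metrics in the table can be computed as sketched below. The code uses the standard textbook definitions of R², RMSE, and MAE, which are assumed to match the ones used for the table; the function name `regression_metrics` and the sample values are hypothetical and unrelated to the reported results.

```python
import math
from typing import Dict, Sequence


def regression_metrics(
    y_true: Sequence[float], y_pred: Sequence[float]
) -> Dict[str, float]:
    """Compute R2, RMSE and MAE for paired observations and predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)           # residual sum of squares
    rmse = math.sqrt(ss_res / n)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return {"R2": r2, "RMSE": rmse, "MAE": mae}


# Hypothetical observed and predicted values.
metrics = regression_metrics([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 39.0])
```

RMSE and MAE carry the units of the predicted variable, whereas R² is dimensionless, which is why a model can rank differently on R² than on the error metrics (as the Transformer and RNN rows do).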

| Cycle No. | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 | 49 | 50 |
|---|---|---|---|---|---|---|---|---|---|---|
| Operation time by proposed methodology (min) | 393 | 312 | 662 | 994 | 390 | 198 | 388 | 382 | 420 | 484 |

| Cycle No. | 51 | 52 | 53 | 54 | 55 | 56 | 57 | 58 | 59 | 60 |
|---|---|---|---|---|---|---|---|---|---|---|
| Operation time by proposed methodology (min) | 790 | 254 | 525 | 513 | 386 | 365 | 448 | 266 | 430 | 593 |
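
The spread of the operation times above is easier to see with a few descriptive statistics. The following sketch only summarizes the 20 values from the table; the variable names are hypothetical, and the summary is not a result stated in the source.

```python
from statistics import mean

# Operation time (min) per cycle, copied from the table above.
operation_time_min = {
    41: 393, 42: 312, 43: 662, 44: 994, 45: 390,
    46: 198, 47: 388, 48: 382, 49: 420, 50: 484,
    51: 790, 52: 254, 53: 525, 54: 513, 55: 386,
    56: 365, 57: 448, 58: 266, 59: 430, 60: 593,
}

average = mean(operation_time_min.values())

# (cycle number, minutes) for the shortest and longest cycles.
shortest = min(operation_time_min.items(), key=lambda item: item[1])
longest = max(operation_time_min.items(), key=lambda item: item[1])
```

For these 20 cycles the mean is 459.65 min, with the shortest cycle at 198 min (cycle 46) and the longest at 994 min (cycle 44).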

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qiu, C.; Li, Q.; Jing, J.; Tan, N.; Wu, J.; Wang, M.; Li, Q. Transforming Prediction into Decision: Leveraging Transformer-Long Short-Term Memory Networks and Automatic Control for Enhanced Water Treatment Efficiency and Sustainability. Sensors 2025, 25, 1652. https://doi.org/10.3390/s25061652
