Optimization of Improved YOLOv8 for Precision Tomato Leaf Disease Detection in Sustainable Agriculture

Abstract

1. Introduction

The objectives of this study are to:

1. Develop an improved YOLOv8 model that incorporates grouped depthwise convolutions (GDC) and squeeze-and-excitation (SE) blocks to increase accuracy and computational efficiency in tomato leaf disease detection.

2. Integrate the optimized YOLOv8 model into a robotic spraying system for real-time, automated disease detection and pesticide application.

3. Evaluate the proposed model against existing models in terms of precision, recall, F1 score, and mean average precision (mAP).

4. Assess the system's practical feasibility for reducing chemical usage and improving resource efficiency in sustainable agriculture.

2. Data Acquisition

2.1. System Architecture and Operational Components

2.2. Hardware Limitations and Mitigation Strategies for Spraying Robots

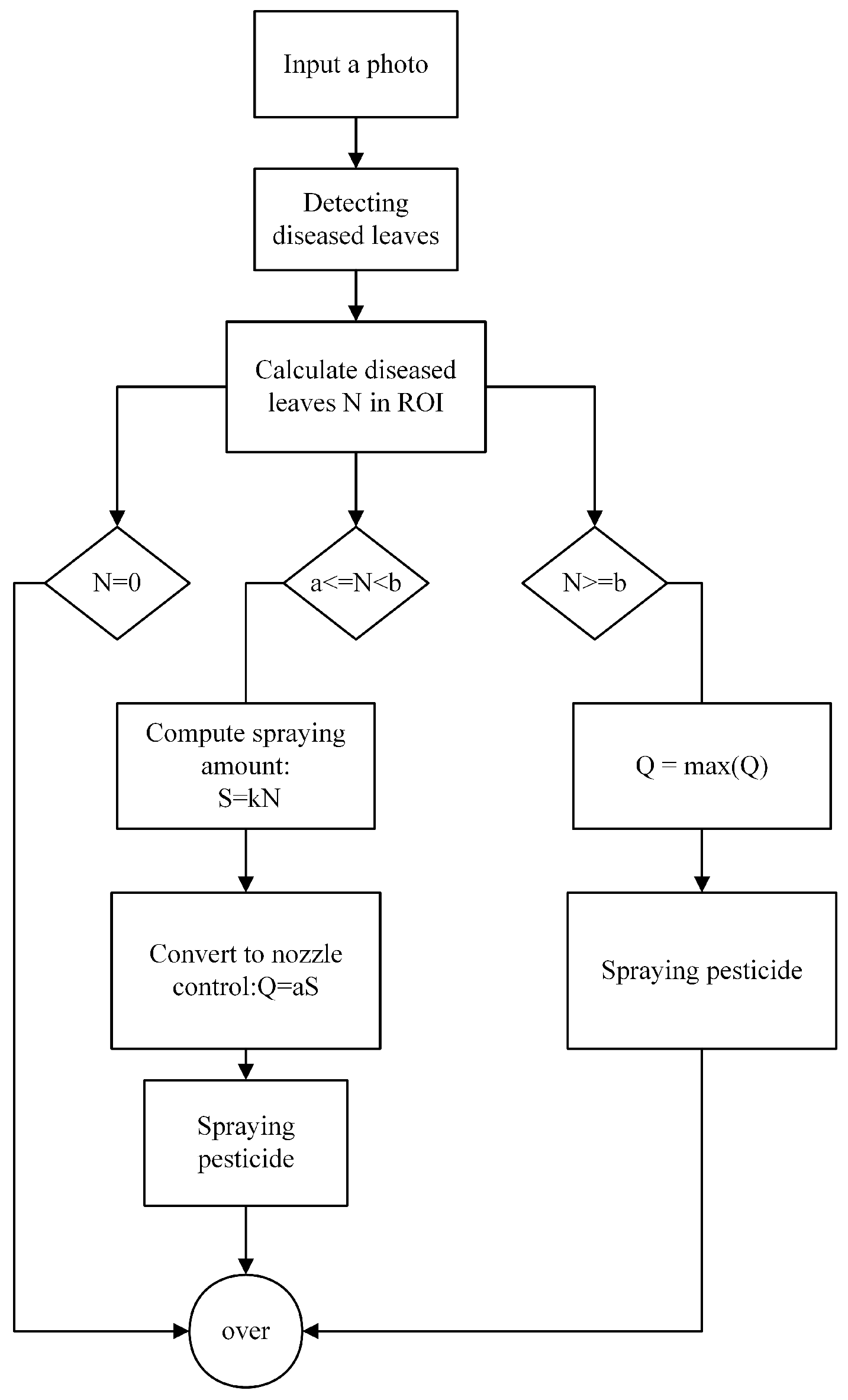

2.3. Intelligent Spraying System and Optimized Pesticide Spraying Process for Disease Detection

2.4. Data Collection and Classification

3. Methodology

3.1. Standard YOLOv8 Architecture and Functionality

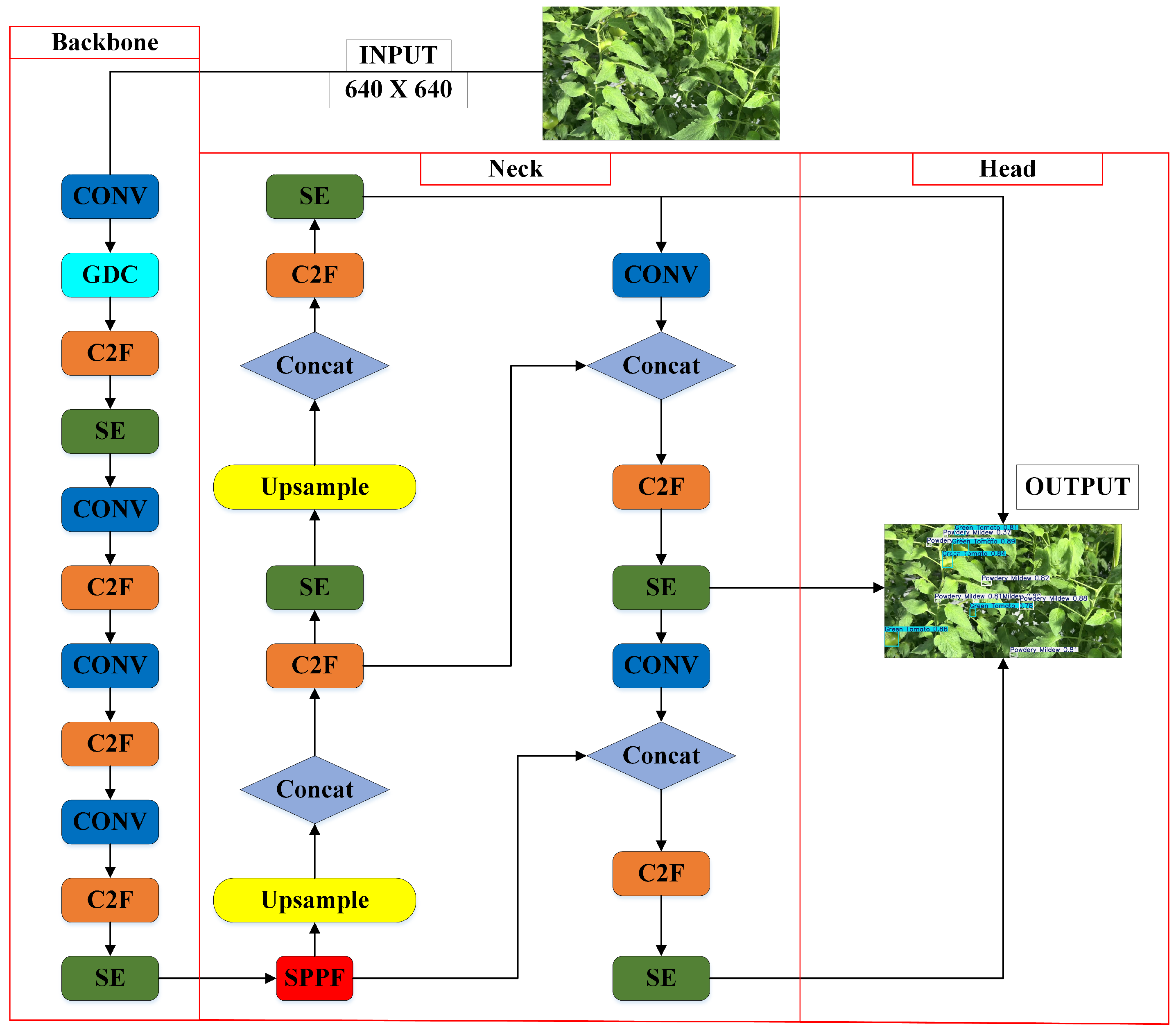

3.2. Improved YOLOv8 Design and Operational Workflow

3.3. Grouped Depthwise Convolution
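
A grouped convolution splits the C_in input channels into g groups and convolves each group only with its own filters, so a k × k layer needs k·k·(C_in/g)·C_out weights instead of k·k·C_in·C_out; the depthwise case sets g = C_in and is usually followed by a 1 × 1 pointwise convolution that mixes channels. The exact GDC configuration inside the improved YOLOv8 is not specified here, so the NumPy sketch below is a generic illustration under stated assumptions (stride 1, no padding, no bias). `grouped_conv2d` and `conv_params` are hypothetical helper names, not the authors' code.

```python
import numpy as np


def grouped_conv2d(x: np.ndarray, w: np.ndarray, groups: int) -> np.ndarray:
    """Naive grouped 2D convolution (cross-correlation), stride 1, no padding.

    x: input of shape (C_in, H, W)
    w: weights of shape (C_out, C_in // groups, k, k)
    """
    c_in, h, width = x.shape
    c_out, c_per_group, k, _ = w.shape
    assert c_in % groups == 0 and c_out % groups == 0
    assert c_per_group == c_in // groups
    out_per_group = c_out // groups
    out = np.zeros((c_out, h - k + 1, width - k + 1))
    for o in range(c_out):
        g = o // out_per_group                            # group this filter belongs to
        xs = x[g * c_per_group:(g + 1) * c_per_group]     # it only sees that group's channels
        for i in range(h - k + 1):
            for j in range(width - k + 1):
                out[o, i, j] = np.sum(xs[:, i:i + k, j:j + k] * w[o])
    return out


def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution with the given number of groups (bias ignored)."""
    return k * k * (c_in // groups) * c_out


# Weight comparison for a 64 -> 64 channel, 3 x 3 layer
standard = conv_params(64, 64, 3)                  # 36864
depthwise = conv_params(64, 64, 3, groups=64)      # 576
pointwise = conv_params(64, 64, 1)                 # 4096
print(standard, depthwise + pointwise)             # 36864 4672
```

Replacing the standard layer with a depthwise plus pointwise pair cuts its weights by roughly 7.9×. Depthwise layers tend to be memory-bound, so fewer weights do not automatically translate into lower measured latency. In PyTorch the same grouping is expressed with the `groups` argument of `nn.Conv2d`.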

3.4. SE_Block: Channel-Wise Attention Mechanism in Improved YOLOv8

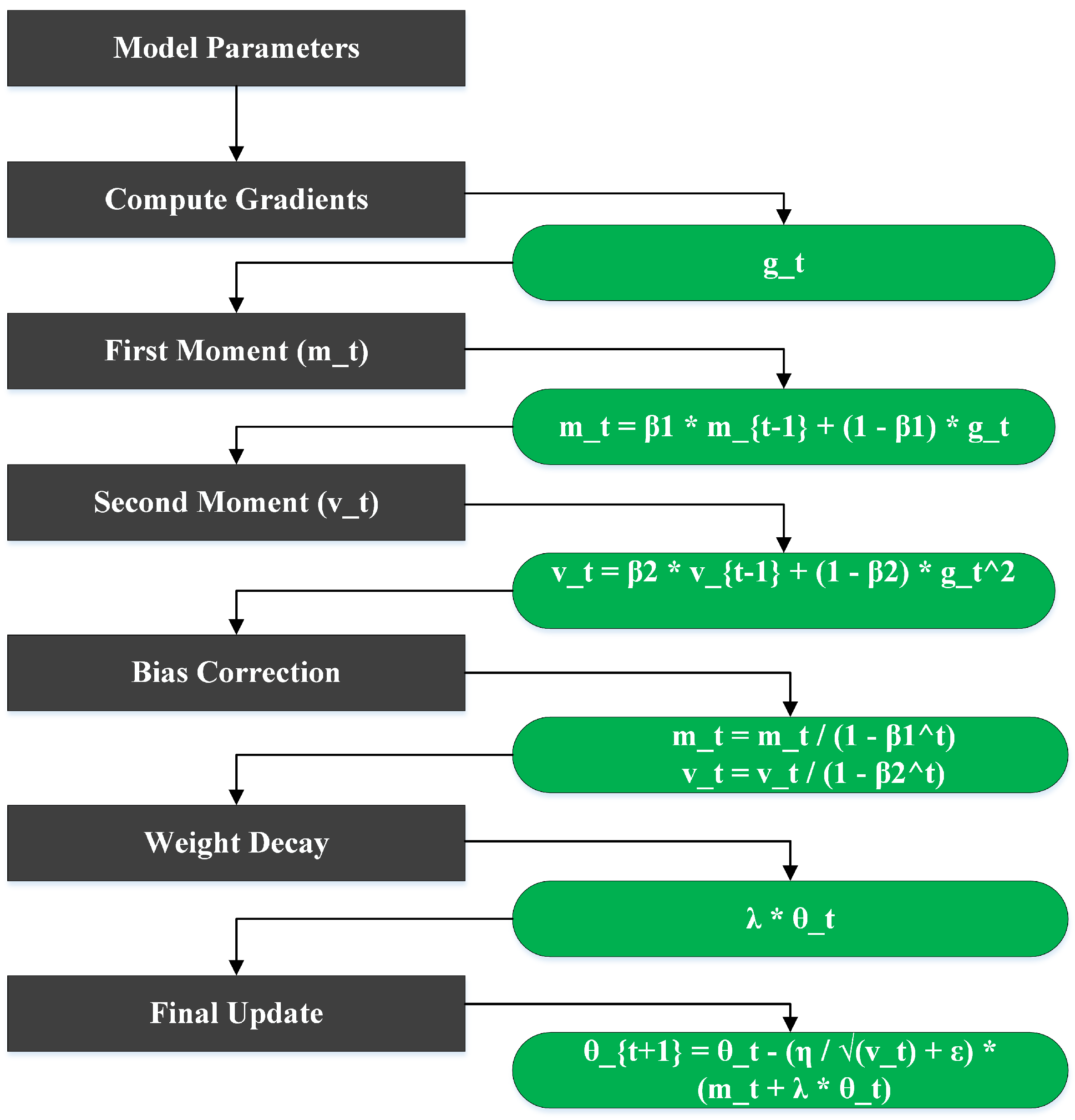
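
A squeeze-and-excitation block re-weights channels in three steps: global average pooling squeezes each channel to one scalar, a two-layer bottleneck (reduction ratio r) with ReLU and sigmoid turns those scalars into per-channel weights in (0, 1), and the input feature map is scaled channel by channel. The NumPy sketch below follows that standard formulation for a single feature map; the reduction ratio r = 4 and the random weights are illustrative assumptions, not values from the improved YOLOv8.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation on one feature map x of shape (C, H, W).

    w1: (C // r, C), b1: (C // r,)  -> bottleneck layer
    w2: (C, C // r), b2: (C,)       -> expansion layer
    Returns the re-weighted feature map and the channel weights.
    """
    z = x.mean(axis=(1, 2))             # squeeze: global average pool -> (C,)
    s = np.maximum(0.0, w1 @ z + b1)    # FC + ReLU -> (C // r,)
    a = sigmoid(w2 @ s + b2)            # FC + sigmoid -> (C,), each in (0, 1)
    return x * a[:, None, None], a      # excitation: scale each channel


C, r = 16, 4
rng = np.random.default_rng(0)
x = rng.standard_normal((C, 8, 8))
w1, b1 = rng.standard_normal((C // r, C)) * 0.1, np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)) * 0.1, np.zeros(C)
y, a = se_block(x, w1, b1, w2, b2)
print(y.shape, a.min() > 0, a.max() < 1)   # (16, 8, 8) True True
```

In PyTorch the same block is commonly written with `nn.AdaptiveAvgPool2d(1)`, two `nn.Linear` (or 1 × 1 `nn.Conv2d`) layers, `nn.ReLU`, and `nn.Sigmoid`.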

3.5. Parameter Updates with AdamW Optimizer
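
AdamW differs from Adam with L2 regularization by decoupling weight decay from the gradient-based step: the bias-corrected Adam update uses the loss gradient only, and the weights are shrunk separately by the factor (1 − ηλ). The NumPy sketch below writes one update in the form used by PyTorch's `torch.optim.AdamW`; the default hyperparameters shown are PyTorch's defaults and are not claimed to be the training settings of this study. `adamw_step` is a hypothetical helper name.

```python
import numpy as np


def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=1e-2):
    """One AdamW update with decoupled weight decay. t is the 1-based step count."""
    theta = theta * (1.0 - lr * weight_decay)        # decoupled weight decay
    m = beta1 * m + (1.0 - beta1) * grad             # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2        # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                   # bias corrections
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


# Toy problem: minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3)
theta, m, v = np.array([1.0]), np.zeros(1), np.zeros(1)
for t in range(1, 2001):
    theta, m, v = adamw_step(theta, 2.0 * (theta - 3.0), m, v, t, lr=0.05)
print(theta)
```

The loop moves θ from 1 toward the minimizer at 3. Because the decay term never passes through the moment estimates, a parameter with zero gradient is still shrunk by exactly (1 − ηλ) per step, which is the behavioral difference from Adam with an L2 penalty.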

4. Model Training and Variable Configuration

4.1. Research Setup and Model Training

4.2. Performance Metrics and Computational Evaluation
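
Precision, recall, and F1 follow from true-positive (TP), false-positive (FP), and false-negative (FN) counts; for mAP@0.5 a detection counts as a TP when its intersection over union (IoU) with a not-yet-matched ground-truth box of the same class is at least 0.5. The plain-Python helpers below are hypothetical names, and boxes are assumed to be (x1, y1, x2, y2) corner coordinates.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from detection counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def f1_from_pr(p, r):
    """Harmonic mean of precision and recall (same units in and out)."""
    return 2 * p * r / (p + r) if p + r else 0.0


print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))   # 0.1429 (overlap 1 / union 7)
print(round(f1_from_pr(83.5, 70.4), 2))             # 76.39
```

Applied to the YOLOv8 baseline (P = 83.5%, R = 70.4%), the harmonic mean reproduces the tabulated F1 of 76.39.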

4.3. Loss Calculation
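
In the standard Ultralytics YOLOv8 implementation, the detection loss is a weighted sum of a binary cross-entropy classification term, a CIoU box-regression term, and a distribution focal loss (DFL) term. To illustrate the box term only, the plain-Python sketch below computes 1 − CIoU for one predicted/target pair, with CIoU = IoU − ρ²/c² − αv, where ρ is the distance between box centers, c is the diagonal of the smallest enclosing box, v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))², and α = v/((1 − IoU) + v). It omits the DFL and classification terms and is not claimed to be the exact loss configuration of this study; `ciou_loss` is a hypothetical name.

```python
import math


def ciou_loss(pred, target, eps=1e-7):
    """CIoU loss (1 - CIoU) for boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Plain IoU
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    iou = inter / (pw * ph + tw * th - inter + eps)

    # Squared center distance over squared diagonal of the smallest enclosing box
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1 - (iou - rho2 / c2 - alpha * v)


print(round(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)), 6))   # 0.0 for a perfect match
```

Unlike 1 − IoU, which saturates at 1 for every non-overlapping pair, the distance term keeps increasing the loss as boxes move apart, so the regressor still receives a useful signal before the boxes overlap.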

5. Results and Discussion

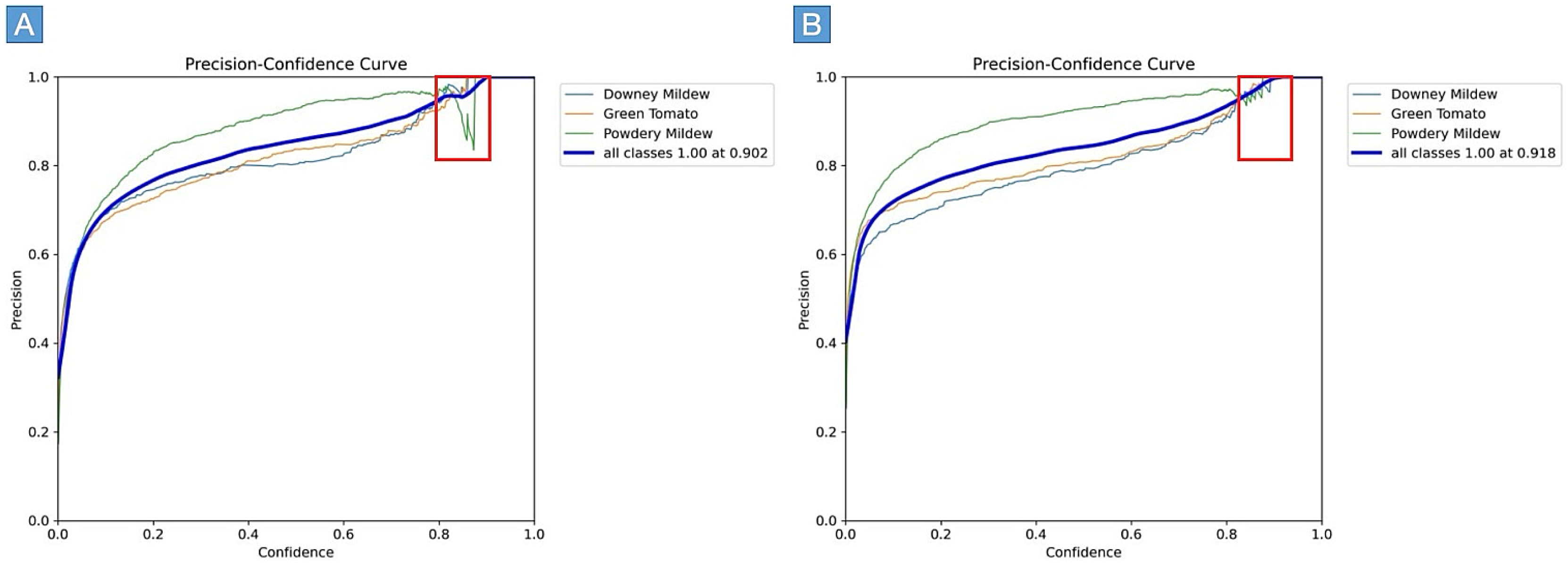

5.1. Comparative Analysis of Decomposition Experiment and Improved YOLOv8 Algorithm

5.2. Comparative Analysis of Detection Performance Utilizing Various Models

5.3. Performance Comparison of YOLOv8-Based Models for Tomato Disease Detection in Agricultural Robotics

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Stafford, J.V. Implementing precision agriculture in the 21st century. J. Agric. Eng. Res. 2000, 76, 267–275.

2. Shen, Y.; Zhu, H.; Liu, H.; Chen, Y.; Ozkan, E. Development of a Laser-Guided, Embedded-Computer-Controlled, Air-Assisted Precision Sprayer. Trans. ASABE 2017, 60, 1827–1838.

3. Zhang, F.; Chen, Z.; Ali, S.; Yang, N.; Fu, S.; Zhang, Y. Multi-class detection of cherry tomatoes using improved Yolov4-tiny model. Int. J. Agric. Biol. Eng. 2023, 16, 225–231.

4. Zhang, T.; Zhou, J.; Liu, W.; Yue, R.; Yao, M.; Shi, J.; Hu, R. Seedling-YOLO: High-Efficiency Target Detection Algorithm for Field Broccoli Seedling Transplanting Quality Based on YOLOv7-Tiny. Agronomy 2024, 14, 931.

5. Balasundram, S.K.; Golhani, K.; Shamshiri, R.R.; Vadamalai, G. Precision agriculture technologies for management of plant diseases. In Plant Disease Management Strategies for Sustainable Agriculture Through Traditional and Modern Approaches; Springer: Berlin/Heidelberg, Germany, 2020; pp. 259–278.

6. Lee, J.; Nazki, H.; Baek, J.; Hong, Y.; Lee, M. Artificial Intelligence Approach for Tomato Detection and Mass Estimation in Precision Agriculture. Sustainability 2020, 12, 9138.

7. Abbas, A.; Jain, S.; Gour, M.; Vankudothu, S. Tomato plant disease detection using transfer learning with C-GAN synthetic images. Comput. Electron. Agric. 2021, 187, 106279.

8. Hong, H.; Lin, J.; Huang, F. Tomato disease detection and classification by deep learning. In Proceedings of the 2020 International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Fuzhou, China, 12–14 June 2020; pp. 25–29.

9. Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Memon, N. Automatic visual recognition, detection and classification of weeds in cotton fields based on machine vision. Crop Prot. 2025, 187, 106966.

10. Magalhães, S.A.; Castro, L.; Moreira, G.; Dos Santos, F.N.; Cunha, M.; Dias, J.; Moreira, A.P. Evaluating the Single-Shot MultiBox Detector and YOLO Deep Learning Models for the Detection of Tomatoes in a Greenhouse. Sensors 2021, 21, 3569.

11. Rajasree, R.; Latha, C.B.C. Improved YOLO-X Model for Tomato Disease Severity Detection using Field Dataset. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 235–242.

12. Guo, J.; Yang, Y.; Lin, X.; Memon, M.S.; Liu, W.; Zhang, M.; Sun, E. Revolutionizing Agriculture: Real-Time Ripe Tomato Detection With the Enhanced Tomato-YOLOv7 System. IEEE Access 2023, 11, 133086–133098.

13. Liu, J.; Wang, X. Tomato diseases and pests detection based on improved Yolo V3 convolutional neural network. Front. Plant Sci. 2020, 11, 898.

14. Sun, J.; He, X.; Ge, X.; Wu, X.; Shen, J.; Song, Y. Detection of Key Organs in Tomato Based on Deep Migration Learning in a Complex Background. Agriculture 2018, 8, 196.

15. Ji, W.; Gao, X.; Xu, B.; Pan, Y.; Zhang, Z.; Zhao, D. Apple target recognition method in complex environment based on improved YOLOv4. J. Food Process Eng. 2021, 44, e13866.

16. Ji, W.; Pan, Y.; Xu, B.; Wang, J. A Real-Time Apple Targets Detection Method for Picking Robot Based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 856.

17. Khan, Z.; Liu, H.; Shen, Y.; Zeng, X. Deep learning improved YOLOv8 algorithm: Real-time precise instance segmentation of crown region orchard canopies in natural environment. Comput. Electron. Agric. 2024, 224, 109168.

18. Hossain, S.M.M.; Deb, K.; Dhar, P.K.; Koshiba, T. Plant Leaf Disease Recognition Using Depth-Wise Separable Convolution-Based Models. Symmetry 2021, 13, 511.

19. Gao, H.; Yang, Y.; Li, C.; Gao, L.; Zhang, B. Multiscale Residual Network With Mixed Depthwise Convolution for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 3396–3408.

20. Alqahtani, O.M. Efficient Deep Learning for Plant Disease Classification in Resource Constrained Environment. Ph.D. Dissertation, University of Georgia, Athens, GA, USA, 2024.

21. Guo, Z.; Geng, Y.; Wang, C.; Xue, Y.; Sun, D.; Lou, Z.; Quan, L. InstaCropNet: An efficient UNet-Based architecture for precise crop row detection in agricultural applications. Artif. Intell. Agric. 2024, 12, 85–96.

22. Sharma, A.; Jain, A.; Gupta, P.; Chowdary, V. Machine learning applications for precision agriculture: A comprehensive review. IEEE Access 2020, 9, 4843–4873.

23. Mbunge, E.; Simelane, S.; Fashoto, S.G.; Akinnuwesi, B.; Metfula, A.S. Application of deep learning and machine learning models to detect COVID-19 face masks: A review. Sustain. Oper. Comput. 2021, 2, 235–245.

24. Remya, S.; Sasikala, R. Performance evaluation of optimized and adaptive neuro-fuzzy inference system for predictive modeling in agriculture. Comput. Electr. Eng. 2020, 86, 106718.

25. Yuan, Y.; Yang, L.; Chang, K.; Huang, Y.; Yang, H.; Wang, J. DSCA-PSPNet: Dynamic spatial-channel attention pyramid scene parsing network for sugarcane field segmentation in satellite imagery. Front. Plant Sci. 2024, 14, 1324491.

26. Bhuyan, P.; Singh, P.K.; Das, S.K.; Kalla, A. SE_SPnet: Rice leaf disease prediction using stacked parallel convolutional neural network with squeeze-and-excitation. Expert Syst. 2023, 40, e13304.

27. Zayani, H.M.; Ammar, I.; Ghodhbani, R.; Maqbool, A.; Saidani, T.; Slimane, J.B.; Kachoukh, A.; Kouki, M.; Kallel, M.; Alsuwaylimi, A.A.; et al. Deep Learning for Tomato Disease Detection with YOLOv8. Eng. Technol. Appl. Sci. Res. 2024, 14, 13584–13591.

28. Abid, M.S.Z.; Mondal, R.K.; Islam, S.M.U.; Das, S.K.; Parvez, S.F.; Biswas, R. Bangladeshi crops leaf disease detection using YOLOv8. Eng. Technol. Appl. Sci. Res. 2024, 14, 13922–13928.

29. Wang, Y.; He, J. Improved Algorithm for Tomato Disease Detection Based on YOLOv8. In Proceedings of the 2024 IEEE 4th International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 24–26 May 2024.

30. Arjun, K.; Shine, L.; Deepak, S. Pest Detection and Disease Categorization in Tomato Crops using YOLOv8. Eng. Technol. Appl. Sci. Res. 2024, 14, 13584–13591.

31. Wang, X.; Liu, J. Vegetable Disease Detection Using an Improved YOLOv8 Algorithm in the Greenhouse Plant Environment. Sci. Rep. 2024, 14, 4261.

32. Himeur, M.; Hassam, A. Tomato Disease Detection and Recognition Using YOLOv8. J. Artif. Intell. 2025, 1, 45–60.

| Attribute | Details |
|---|---|
| Total images | 1500 images |
| Validation set | 320 images |
| Training set | 1180 images |
| Preprocessing applied | Auto-orientation (images automatically rotated to the correct alignment) |
| Augmentation applied | Brightness: between −50% and +50%; contrast: between −30% and +30%; saturation: between −30% and +30%; hue: up to 6% of pixels |
| Outputs per training example | 3 (augmented versions generated from each training image) |
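
The photometric augmentations listed in the dataset table can be approximated as random per-image jitter. The NumPy sketch below assumes RGB images stored as float arrays in [0, 1] with shape (H, W, 3) and uses simple multiplicative brightness, mean-centered contrast, and grayscale-blend saturation adjustments. The exact formulas of the tool used to prepare the dataset are not stated, so this is an approximation rather than a reproduction, and the hue setting is omitted. `jitter` is a hypothetical helper name.

```python
import numpy as np


def jitter(img, rng, brightness=0.5, contrast=0.3, saturation=0.3):
    """Randomly perturb brightness, contrast and saturation of an RGB image in [0, 1].

    Each factor is drawn uniformly from [-limit, +limit]; the default limits
    mirror the table (brightness +/-50 %, contrast and saturation +/-30 %).
    """
    b = rng.uniform(-brightness, brightness)
    c = rng.uniform(-contrast, contrast)
    s = rng.uniform(-saturation, saturation)

    out = img * (1.0 + b)                                        # brightness
    mean = out.mean()
    out = (out - mean) * (1.0 + c) + mean                        # contrast around the mean
    gray = out @ np.array([0.299, 0.587, 0.114])                 # luma approximation, (H, W)
    out = gray[..., None] + (out - gray[..., None]) * (1.0 + s)  # saturation
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(42)
image = rng.random((64, 64, 3))
augmented = jitter(image, rng)
print(augmented.shape, augmented.min() >= 0, augmented.max() <= 1)   # (64, 64, 3) True True
```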

| Network | P (%) | R (%) | mAP@0.5 (%) | F1 (%) | GFLOPs | Inference Time (ms) | Params (M) |
|---|---|---|---|---|---|---|---|
| YOLOv8+AdamW | 81.2 | 70.9 | 75.7 | 75.70 | 8.1 | 5.5 | 3.0 |
| YOLOv8+GDC+AdamW | 85.5 | 71.3 | 79.1 | 77.76 | 30.5 | 9.2 | 2.9 |
| YOLOv8+SE_Block+AdamW | 80.8 | 67.8 | 74.3 | 73.73 | 60.6 | 10.4 | 3.3 |
| YOLOv8+GDC+SE_Block+AdamW | 85.7 | 72.8 | 79.8 | 78.62 | 75.6 | 9.8 | 4.2 |
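
To relate the ablation results to the real-time requirement of the spraying robot, per-image inference time can be converted to throughput (1000 / t frames per second), and the accuracy gain can be set against the extra compute. The short calculation below uses only values from the ablation table above. It assumes the tabulated time is the full per-image latency; if pre- or post-processing and camera I/O are excluded, the achievable frame rate on the robot will be lower. `summarize` is a hypothetical helper name.

```python
# (name, mAP@0.5 in %, GFLOPs, inference time in ms), copied from the ablation table
rows = [
    ("YOLOv8+AdamW", 75.7, 8.1, 5.5),
    ("YOLOv8+GDC+AdamW", 79.1, 30.5, 9.2),
    ("YOLOv8+SE_Block+AdamW", 74.3, 60.6, 10.4),
    ("YOLOv8+GDC+SE_Block+AdamW", 79.8, 75.6, 9.8),
]


def summarize(rows):
    """Return (name, FPS, mAP gain in points, GFLOPs ratio) relative to the first row."""
    base_map, base_gflops = rows[0][1], rows[0][2]
    return [(name, 1000.0 / ms, map50 - base_map, gflops / base_gflops)
            for name, map50, gflops, ms in rows]


for name, fps, gain, ratio in summarize(rows):
    print(f"{name:27s} {fps:6.1f} FPS  mAP {gain:+.1f} pts  GFLOPs x{ratio:.1f}")
```

On the hardware used for these measurements, even the slowest configuration (10.4 ms, about 96 FPS) stays well above typical camera frame rates, while the full model's 4.1-point mAP@0.5 gain costs roughly 9.3× the GFLOPs of the AdamW baseline.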

| Category | Configuration |
|---|---|
| CPU | Intel(R) Xeon(R) Gold 6226R CPU @ 2.90 GHz |
| GPU | NVIDIA GeForce RTX 3090 |
| Operating system | Ubuntu 20.04.5 LTS |
| Framework | PyTorch 1.12 |
| Programming language | Python 3.8 |

| Network | P (%) | R (%) | mAP@0.5 (%) | F1 (%) | GFLOPs | Inference Time (ms) | Params (M) |
|---|---|---|---|---|---|---|---|
| YOLOv8 | 83.5 | 70.4 | 75.7 | 76.39 | 8.1 | 7.2 | 3.0 |
| AdamW | 81.2 | 70.9 | 75.7 | 75.70 | 8.1 | 5.5 | 3.0 |
| SE_Block | 86.3 | 71.3 | 78.8 | 78.09 | 60.6 | 10.4 | 3.3 |
| GDC | 88.3 | 70.8 | 79.6 | 78.50 | 30.5 | 9.2 | 2.9 |
| AdamW+SE_Block+GDC | 85.7 | 72.8 | 79.8 | 78.62 | 75.6 | 9.8 | 4.2 |

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | GFLOPs | Inference Time (ms) | F1 (%) |
|---|---|---|---|---|---|---|---|
| YOLOv11 | 83.5 | 71.7 | 76.4 | 45.9 | 6.3 | 7.0 | 77.1 |
| YOLOv11+AdamW+SE_Block+GDC | 85.5 | 71.6 | 77.6 | 49.6 | 38.7 | 31.8 | 77.9 |
| YOLOv9 | 83.8 | 72.5 | 78.2 | 50.5 | 27.1 | 8.0 | 77.7 |
| YOLOv9+AdamW+SE_Block+GDC | 84.7 | 72.9 | 78.4 | 46.4 | 59.7 | 19.2 | 78.3 |
| YOLOv8 | 83.5 | 70.4 | 75.7 | 44.2 | 8.1 | 24.2 | 76.4 |
| YOLOv7 | 44.9 | 35.8 | 33.4 | 12.2 | 103.2 | 2.7 | 39.8 |
| YOLOv7+AdamW+SE_Block+GDC | 65.8 | 54.3 | 61.7 | 29.9 | 59.3 | 13.5 | 59.5 |
| YOLOv5 | 81.2 | 71.4 | 76.4 | 44.9 | 7.1 | 8.2 | 75.9 |
| YOLOv5+AdamW+SE_Block+GDC | 85.1 | 71.0 | 78.0 | 48.1 | 44.5 | 23.7 | 77.5 |
| RetinaNet | 35.0 | 59.0 | 25.0 | - | - | - | 43.9 |
| ATSS | 51.0 | 50.0 | 32.0 | - | - | - | 50.5 |
| Ours | 85.7 | 72.8 | 79.8 | 51.6 | 75.6 | 9.8 | 78.6 |
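
The comparison table reports both mAP@0.5 and mAP@0.5:0.95. For each class, average precision (AP) is the area under the precision-recall curve obtained by ranking detections by confidence; mAP@0.5 averages AP over classes at an IoU threshold of 0.5, while the COCO-style mAP@0.5:0.95 additionally averages over the ten thresholds 0.50, 0.55, and so on up to 0.95, which is why it is lower. The NumPy sketch below computes all-point interpolated AP for one class from already-matched detections; matching at each IoU threshold is assumed to happen elsewhere, and `average_precision` is a hypothetical name. Frameworks differ in interpolation (COCO, for example, samples 101 recall points), so results can differ slightly from the reported values.

```python
import numpy as np


def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection
    is_tp:  1 if the detection matched a not-yet-matched ground-truth box
            at the chosen IoU threshold, else 0
    n_gt:   number of ground-truth boxes of this class
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / n_gt
    precision = cum_tp / (cum_tp + cum_fp)

    # Add sentinels, then make precision monotonically non-increasing
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]

    # Sum rectangle areas wherever recall changes
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


# Toy example: the same three detections judged at IoU 0.5 and at a stricter 0.75
ap50 = average_precision([0.9, 0.8, 0.7], [1, 0, 1], n_gt=2)
ap75 = average_precision([0.9, 0.8, 0.7], [1, 0, 0], n_gt=2)
print(round(ap50, 4), ap75)   # 0.8333 0.5

# mAP@0.5:0.95 averages per-threshold AP over these thresholds, then over classes
iou_thresholds = np.linspace(0.5, 0.95, 10)
```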

| Paper Author | Objective | Dataset Used | Performance Metrics | Integration into Robotic System |
|---|---|---|---|---|
| Hafedh Mahmoud Zayani et al. [27] | Tomato disease detection using YOLOv8 | Custom dataset of tomato diseases (150 images per class, 19 classes) | mAP = 98%, F1 score = 97% | No direct integration described; potential for robotic application in tomato disease management |
| Wang Yonggui and He Jing [29] | Improvement in tomato disease detection with YOLOv8 | Custom dataset for tomato diseases with 150 images per class | Improved mAP compared with original YOLOv8; exact metrics not specified | No direct integration described; potential for improvement in tomato farming operations |
| Arjun K et al. [30] | Pest detection and disease categorization in tomato crops using YOLOv8 | Custom dataset of tomato pests and diseases | mAP = 92.5%; precision and recall not provided | Potential integration in robotic systems for pest and disease identification in tomato crops |
| Md. Shahriar Zaman Abid et al. [28] | Bangladeshi crop leaf disease detection using YOLOv8 | Self-curated dataset of 2850 images (19 classes, 150 images per class) | mAP = 98%, F1 score = 97% | No specific robotic integration; applicable in field monitoring systems for disease management |
| Xuewei Wang and Jun Liu [31] | Vegetable disease detection using an improved YOLOv8 algorithm in greenhouse plant environment | Self-built vegetable disease dataset with 40,000 images from various vegetable types (tomato, cucumber, and eggplant) | mAP = 92.91%, FPS = 271.07, and precision = 92.72% | Designed for use in greenhouse environments with potential for integration into automated agricultural systems |
| Mohammed Himeur and Abdelouahab Hassam [32] | Tomato disease detection using YOLOv8 with improved architecture for better accuracy | Dataset with 3400+ images (2800 for training, 400 for validation) | Precision = 82.43%, recall = 82.62%, and mAP@0.5 = 84.8% | Suitable for real-time crop monitoring systems |
| Ours | Optimizing robotic spraying for sustainable agriculture with an improved YOLOv8 algorithm for pesticide application on diseased tomato leaves | 1500 tomato leaf images with 4 labels (Green Tomato, Downy Mildew, Powdery Mildew, and All) | Precision = 85.7%, recall = 72.8%, mAP@0.5 = 79.8%, mAP@0.5:0.95 = 51.6%, and F1 score = 78.6% | Deployed on Spraying Robot LPE-260 for real-time, automated pesticide application |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, Y.; Yang, Z.; Khan, Z.; Liu, H.; Chen, W.; Duan, S. Optimization of Improved YOLOv8 for Precision Tomato Leaf Disease Detection in Sustainable Agriculture. Sensors 2025, 25, 1398. https://doi.org/10.3390/s25051398
