In this section, the proposed method is compared with seven existing techniques: DCTE [47], UNTV [48], PCDE [49], PCFB [50], UDHTV [51], ZSRM [52], and WFAC [53]. Among them, UDHTV is a physical model-based enhancement method; UNTV, PCDE, PCFB, and ZSRM are non-physical model-based enhancement methods; and WFAC and DCTE are frequency-domain enhancement methods. Together, these seven algorithms cover the mainstream technical routes of physical modeling, non-physical constraints, and frequency-domain processing. Comparing their performance in color cast correction, dehazing, and detail enhancement highlights the overall advantages and applicable scenarios of the proposed method.

Three representative low-quality underwater image datasets are used in the experiments: UIEB [54], EUVP [55], and LSUI [56]. The UIEB and EUVP datasets were both built to support systematic perceptual evaluation of underwater image enhancement methods. UIEB contains 950 real underwater images collected from the Internet, while EUVP includes over 12,000 paired and 8000 unpaired underwater images, providing a rich set of test samples for evaluating enhancement algorithms. LSUI is a large-scale dataset of 5004 image pairs that covers diverse underwater scenes, provides carefully selected high-quality reference images, and was designed specifically for underwater image enhancement research.

4.1. Qualitative Assessment

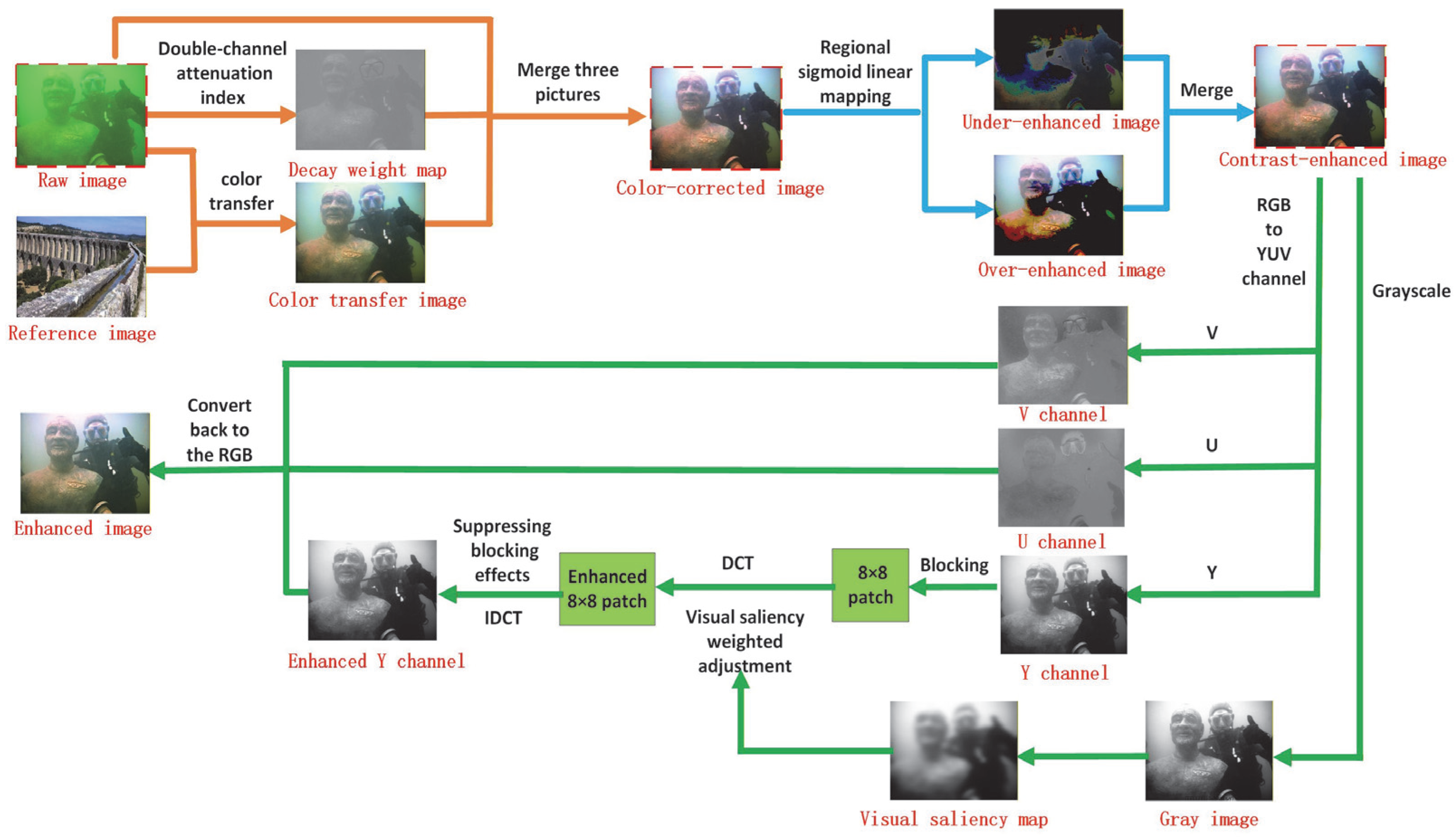

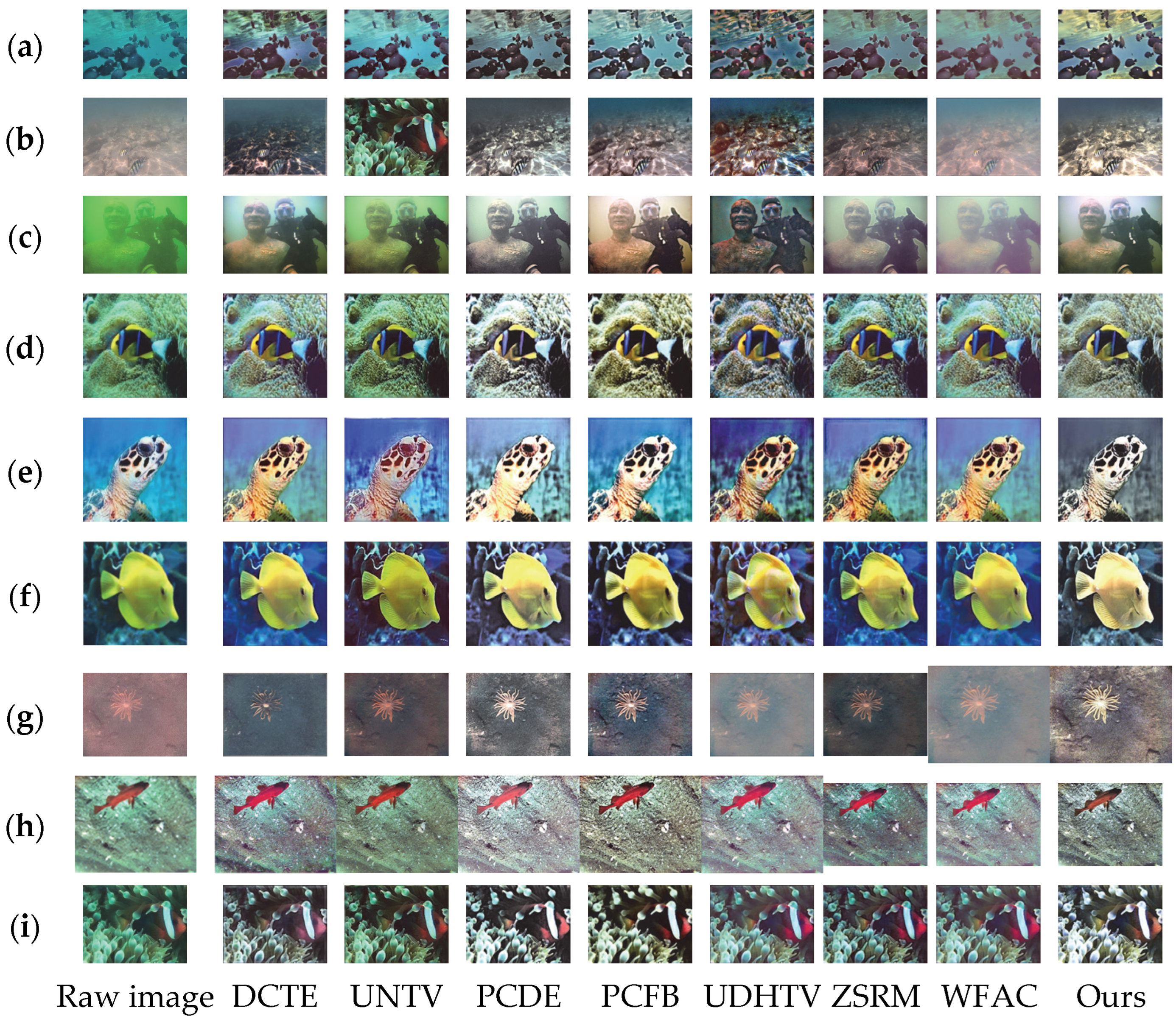

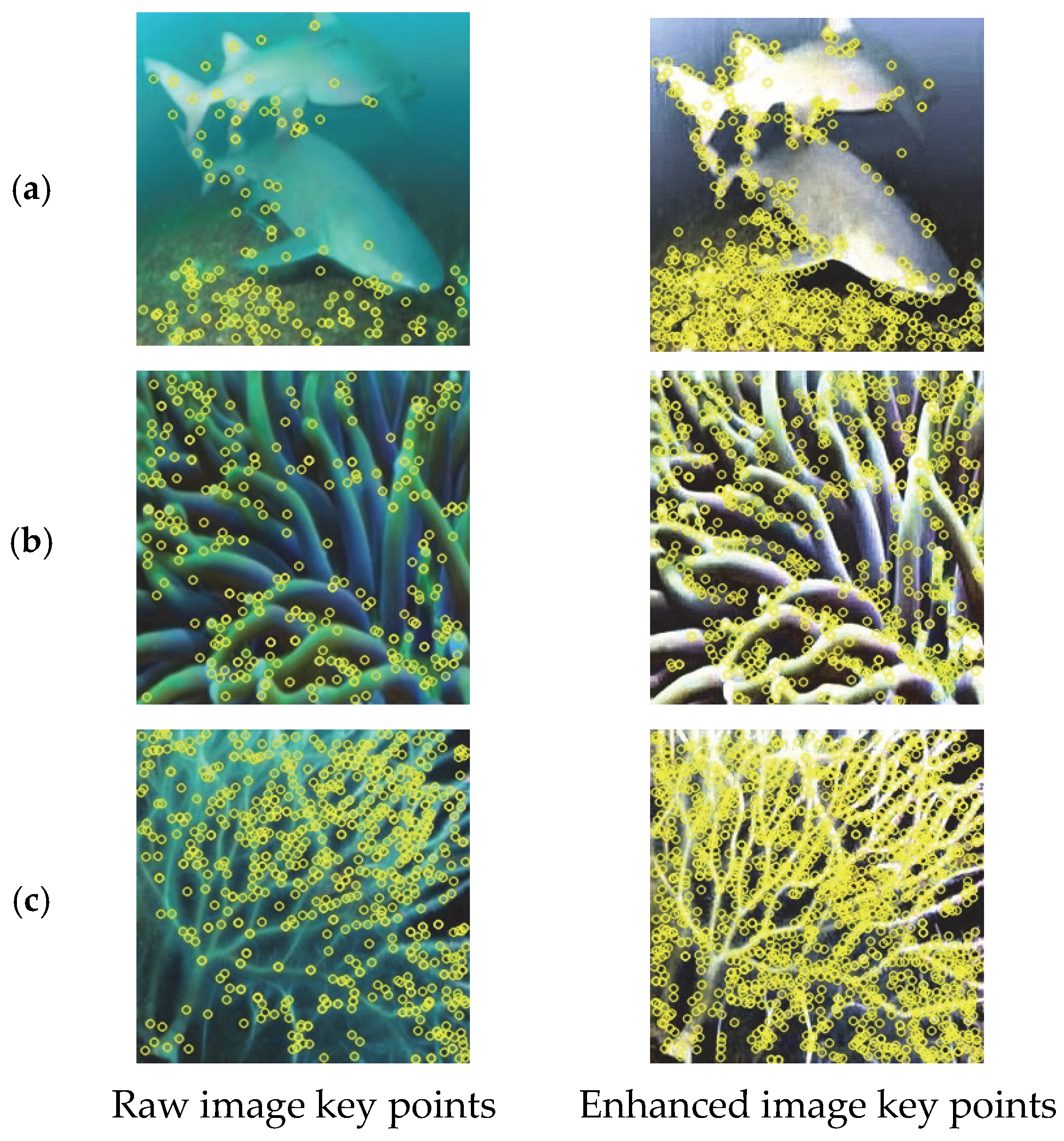

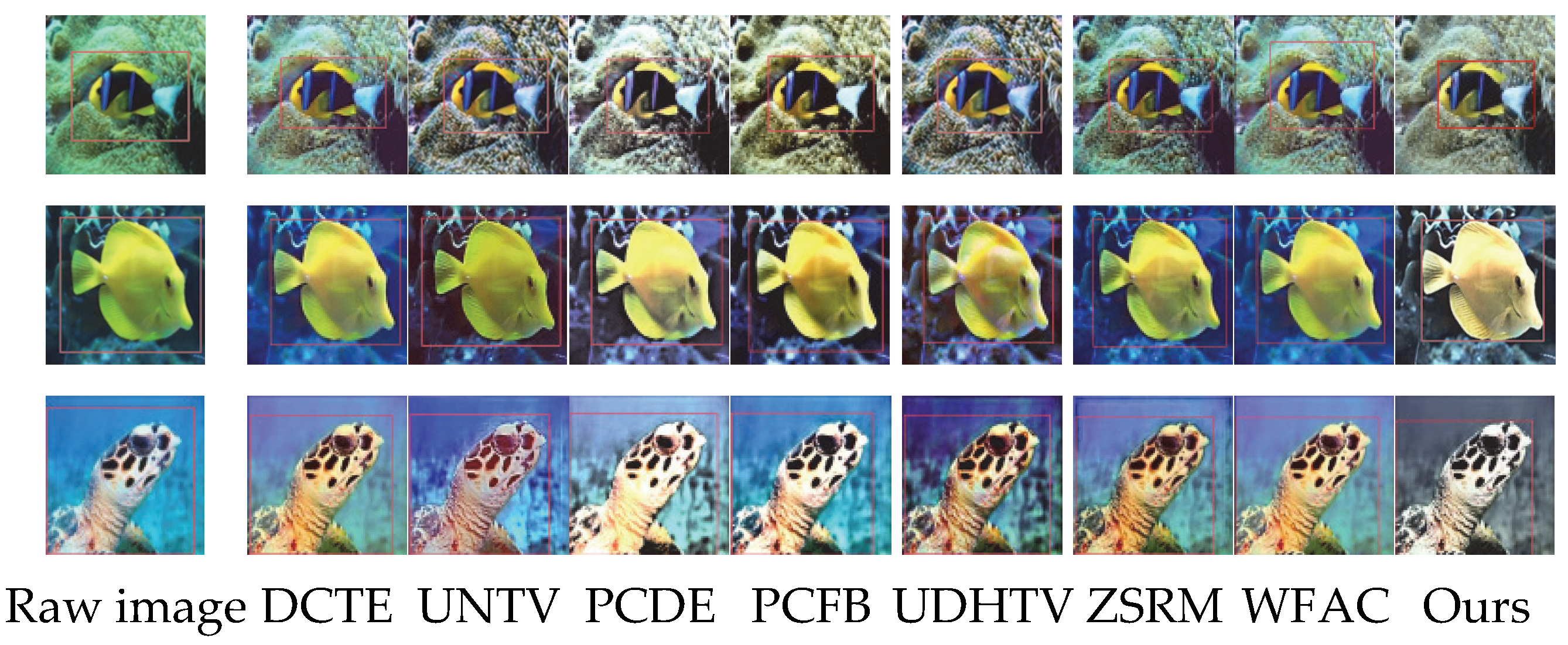

To visually verify the overall effect of the proposed underwater image enhancement method, this section reports qualitative comparisons on the UIEB, EUVP, and LSUI datasets against the mainstream methods DCTE, UNTV, PCDE, PCFB, UDHTV, ZSRM, and WFAC. The visual comparison evaluates the performance of the proposed method in color correction, contrast enhancement, and detail enhancement. The results are shown in Figure 2, which combines samples from the three datasets: (a)–(c) are from UIEB, (d)–(f) from EUVP, and (g)–(i) from LSUI, with three typical underwater images per dataset. Comparing samples from multiple datasets verifies the stability of the enhancement across different underwater scenarios.

As shown in Figure 2, DCTE delivers a flat enhancement with no standout dimension: colors remain dim and shallow, edges and textures gain little clarity, and complex patterns in LSUI look almost identical to the original. UNTV fails to remove color casts, leaving greenish or bluish hues, and keeps underwater creatures and reefs blurry; its low contrast leaves the entire image gray and lifeless. PCDE clearly overenhances: it over-brightens the scene, producing whitish "washed-out" areas that destroy natural underwater tones; aggressive sharpening makes texture edges ragged and noisy; and excessive contrast blows out highlights and crushes shadows, flattening depth. PCFB tinges regions yellow, yielding a dark, disharmonious palette; its weak detail recovery leaves edges soft, and its excessive contrast erases highlight information, breaks the layering, and produces harsh glare. UDHTV also overenhances noticeably. In color correction, some areas are oversaturated, and the blue tone of UIEB scenes is intensified to an unnatural degree. In contrast enhancement, local highlights are overexposed and the transition between dark and bright areas is harsh, which destroys the layering of the image. Although it provides some detail enhancement, the overenhancement causes texture details to be masked by noise, resulting in relatively low visual comfort. ZSRM offers moderate enhancement without an obvious color cast, but its mild contrast leaves the light–dark separation muddy; its detail gains are limited, complex textures remain blurred, and its edge sharpness falls short of the proposed DCT method. Finally, WFAC remains conservative and gives a hazy impression: blue-green tones are subdued, overall grayness is high, layering is weak, and its soft edges provide the lowest detail definition of all the methods.

The column for the proposed method in Figure 2 shows that it achieves the best overall performance across the three core criteria. In color correction, it effectively removes the color shift of underwater scenes without introducing color bias or oversaturation, producing colors close to the true scene colors. In contrast enhancement, it adaptively adjusts the gain of high-frequency coefficients, raising the overall contrast while avoiding local overexposure: details in dark areas are clearly visible and bright areas are not over-brightened, which markedly improves the layering and visual impact of the image. Its detail enhancement is particularly strong: the directional adjustment module specifically strengthens edges and textures, making edges sharper and details richer without amplifying noise, and thus balances detail clarity against overall naturalness.
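The exact form of the adaptive high-frequency gain is not given in this section, so the following NumPy sketch only illustrates the general idea under stated assumptions: an orthonormal 8×8 block DCT, a hypothetical gain that grows linearly with the frequency index u + v while the DC coefficient keeps gain 1, and a hypothetical activity weight σ/(σ + τ) that suppresses the boost in flat blocks, where amplification would mostly raise noise. The names `boost_high_frequencies`, `alpha`, and `tau` are illustrative rather than the authors' parameters, and the directional adjustment module is not modeled.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis matrix C, so that Y = C @ X @ C.T and X = C.T @ Y @ C."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # spatial index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # DC row
    return c


def boost_high_frequencies(channel: np.ndarray, block: int = 8,
                           alpha: float = 0.5, tau: float = 5.0) -> np.ndarray:
    """Hypothetical sketch: amplify the AC coefficients of each block of one channel.

    gain(u, v) = 1 + w * alpha * (u + v) / (2 * (block - 1)), so the DC term (0, 0)
    keeps gain 1 and w = sigma / (sigma + tau) depends on the block's standard deviation.
    """
    h, w = channel.shape
    if h % block or w % block:
        raise ValueError("image size must be a multiple of the block size")
    c = dct_matrix(block)
    u = np.arange(block)
    ramp = alpha * (u[:, None] + u[None, :]) / (2.0 * (block - 1))
    x = channel.astype(np.float64)
    out = np.empty_like(x)
    for r in range(0, h, block):
        for s in range(0, w, block):
            coeffs = c @ x[r:r + block, s:s + block] @ c.T
            ac = coeffs.copy()
            ac[0, 0] = 0.0
            # For an orthonormal DCT, ||AC||_2 / block equals the block's standard deviation.
            sigma = np.sqrt(np.sum(ac ** 2)) / block
            weight = sigma / (sigma + tau)  # flat blocks receive almost no boost
            out[r:r + block, s:s + block] = c.T @ (coeffs * (1.0 + weight * ramp)) @ c
    return np.clip(out, 0.0, 255.0)
```

Because the DC gain is fixed at 1, each block's mean brightness is preserved (apart from clipping at 0 and 255), so in this sketch the stage changes local detail and contrast without shifting the global tone.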

Comparing the results of the eight methods on the nine samples from the three datasets in Figure 2 leads to the following conclusions. UDHTV distorts images through overenhancement, resulting in low visual comfort. WFAC takes a conservative approach and underenhances, leaving images hazy and lacking detail. ZSRM achieves moderate enhancement but handles details poorly and has no outstanding strength in any dimension. DCTE produces a plain overall result with dark colors and limited detail improvement, so its performance is mediocre. UNTV provides average color correction with insufficient detail and contrast enhancement, leaving images grayish with weak visual impact. PCDE overenhances, producing overly bright, whitish images with amplified noise and damaged layering. PCFB suffers from insufficient detail, partially yellowish tones, and excessive contrast, leading to uncoordinated color tones and poor detail visibility. The proposed DCT algorithm achieves a better balance of color naturalness, contrast, and detail clarity, and its overall performance is clearly superior to that of the other algorithms.

4.2. Quantitative Evaluation

To quantitatively verify the performance of the method, seven metrics are computed on the UIEB, EUVP, and LSUI datasets: UIQM [57] (Underwater Image Quality Measure), SSIM [58] (Structural Similarity Index), PSNR [59] (Peak Signal-to-Noise Ratio), UCIQE [57] (Underwater Color Image Quality Evaluator), information entropy (IE) [60], average gradient (AG) [61], and standard deviation (SD) [62]. The quantitative results are summarized in Table 1, Table 2, and Table 3.
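IE, AG, SD, and PSNR have simple closed forms: IE = −Σ p_k log₂ p_k over the gray-level histogram, AG is the mean of √((Δx² + Δy²)/2), SD is the pixel standard deviation, and PSNR = 10 log₁₀(peak²/MSE). The NumPy sketch below uses these common textbook definitions on a single 8-bit grayscale channel. The authors' exact conventions (for example, how color images are reduced to one channel and how AG is normalized) are not stated in this section, so these functions are assumptions and their absolute values need not match Tables 1–3.

```python
import numpy as np


def information_entropy(gray: np.ndarray) -> float:
    """Shannon entropy in bits of the 256-bin histogram of an 8-bit image."""
    hist = np.bincount(gray.astype(np.uint8).ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute 0 * log 0 = 0
    return float(-(p * np.log2(p)).sum())


def average_gradient(gray: np.ndarray) -> float:
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences on a common grid."""
    g = gray.astype(np.float64)
    dx = g[:-1, 1:] - g[:-1, :-1]  # horizontal difference
    dy = g[1:, :-1] - g[:-1, :-1]  # vertical difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def standard_deviation(gray: np.ndarray) -> float:
    """Population standard deviation of pixel intensities (global contrast)."""
    return float(np.std(gray.astype(np.float64)))


def psnr(reference: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))
```

SSIM, UIQM, and UCIQE involve windowed statistics or color-space conversions and are usually taken from reference implementations rather than re-derived.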

From the UIEB results in Table 1, the DCT algorithm stands out on several key indicators. In overall quality, its UIQM reaches 4.5542, significantly higher than that of the other algorithms, and its UCIQE ranks first at 0.5100, highlighting its advantages in color balance and contrast. In detail enhancement, it achieves the highest information entropy (7.6433), an average gradient of 122.4165 that far exceeds the other algorithms, and a standard deviation of 67.5269 that leads by a large margin, indicating strong detail preservation, edge clarity, and contrast improvement. In structural consistency, the SSIM of DCT (0.8172) is slightly lower than that of PCFB (0.8270) and DCTE (0.8211) but remains at a good level, and its PSNR (31.3165) is close to that of most algorithms, placing it in the upper-middle range. In summary, although the DCT algorithm is not the best in SSIM and PSNR, it has clear advantages in UIQM, UCIQE, IE, AG, and SD, and its overall performance is stable, especially in color balance, detail preservation, and contrast improvement.
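Because UCIQE carries much of the color-balance argument, the following sketch shows its structure: a weighted sum of the standard deviation of chroma σ_c, the luminance contrast con_l, and the mean saturation μ_s, using the coefficients 0.4680, 0.2745, and 0.2576 commonly associated with this metric. The sRGB-to-CIELAB conversion uses the standard D65 matrix and white point. The scaling of L* and chroma by 1/100, the 1st–99th percentile range for con_l, and the saturation definition C/√(C² + L*²) are assumptions, since published UCIQE implementations differ on these points and the paper's choice is not stated here.

```python
import numpy as np

# sRGB (D65) to CIE XYZ matrix and D65 reference white.
_RGB_TO_XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                        [0.2126729, 0.7151522, 0.0721750],
                        [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.00000, 1.08883])


def srgb_to_lab(rgb: np.ndarray):
    """Convert an 8-bit sRGB image (H x W x 3) to CIELAB L*, a*, b* arrays."""
    c = rgb.astype(np.float64) / 255.0
    linear = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    xyz = (linear @ _RGB_TO_XYZ.T) / _WHITE_D65  # normalized by the white point
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3.0 * delta ** 2) + 4.0 / 29.0)
    lightness = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return lightness, a, b


def uciqe(rgb: np.ndarray, c1: float = 0.4680, c2: float = 0.2745, c3: float = 0.2576) -> float:
    """Sketch of UCIQE = c1 * sigma_c + c2 * con_l + c3 * mu_s (normalizations assumed)."""
    lightness, a, b = srgb_to_lab(rgb)
    chroma = np.hypot(a, b)
    sigma_c = np.std(chroma / 100.0)  # spread of chroma
    p_lo, p_hi = np.percentile(lightness / 100.0, [1.0, 99.0])
    con_l = p_hi - p_lo  # luminance contrast between darkest and brightest 1%
    denom = np.hypot(chroma, lightness)
    saturation = np.divide(chroma, denom, out=np.zeros_like(chroma), where=denom > 0)
    mu_s = np.mean(saturation)
    return float(c1 * sigma_c + c2 * con_l + c3 * mu_s)
```

A uniform gray image scores close to zero under this sketch (no chroma spread, no luminance range, almost no saturation), which illustrates why UCIQE rewards images with both balanced color and a wide tonal range.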

The EUVP results in Table 2 also show clear advantages for the DCT algorithm. In overall quality, its UIQM reaches 4.9359, significantly higher than that of the other algorithms, and its UCIQE of 0.4915 is second only to ZSRM (0.4942), still reflecting good color balance and contrast. In detail enhancement, it achieves the highest information entropy (7.7333), an average gradient of 146.1495 that far exceeds the other algorithms (WFAC ranks second at 125.6689), and a standard deviation of 71.7036 that leads by a large margin, indicating strong detail preservation, edge clarity, and contrast improvement. In structural consistency, the SSIM of DCT (0.8093) is slightly lower than that of DCTE (0.8148) and UDHTV (0.8129) but higher than that of most algorithms, such as UNTV (0.7748) and ZSRM (0.7340), and its PSNR (31.4466) is close to those of DCTE (31.4721), UNTV (31.4732), and other algorithms, placing it in the upper-middle range. In summary, although the DCT algorithm is not the best in SSIM and PSNR, it has clear advantages in UIQM, information entropy, average gradient, and standard deviation, with stable overall performance, especially in color balance, detail preservation, and contrast improvement.

The LSUI results in Table 3 further validate the advantages of the DCT algorithm. In overall quality, its UIQM reaches 4.5252, significantly higher than that of the other algorithms (WFAC ranks second at 3.9052), and its UCIQE ranks first at 0.4883, far exceeding the others and highlighting its advantages in color balance and contrast. In detail enhancement, its information entropy (7.6418) is second only to that of WFAC (7.7409), its average gradient (146.0433) far surpasses the other algorithms, and its standard deviation (69.2745) leads by a large margin, indicating strong detail preservation, edge clarity, and contrast improvement. In structural consistency, the SSIM of DCT (0.7783) is slightly lower than that of UNTV (0.8056) and UDHTV (0.7998) but higher than that of ZSRM (0.7720) and WFAC (0.6870), remaining at a reasonable level, and its PSNR (31.3688) is close to those of ZSRM (31.4130), UDHTV (31.3572), and other algorithms, placing it in the upper-middle range. In summary, although the DCT algorithm is not the best in SSIM and PSNR, it has clear advantages in UIQM, UCIQE, average gradient, and standard deviation, with stable overall performance, especially in color balance, detail preservation, and contrast improvement.

Across the three benchmark datasets, the proposed DCT method achieves the highest UIQM, AG, and SD scores and ranks first or second in UCIQE and IE, reflecting the best global visual quality, detail fidelity, and contrast enhancement. Its edge-sharpening and texture-preserving capabilities directly address the core degradations of underwater imaging. Among the competitors, DCTE, PCFB, UDHTV, UNTV, and ZSRM each attain slightly higher SSIM or PSNR on at least one dataset, and WFAC and ZSRM occasionally surpass it on isolated metrics (IE on LSUI and UCIQE on EUVP, respectively); UNTV, PCDE, and PCFB exhibit intermediate overall performance. Overall, none of the alternative algorithms matches the comprehensive enhancement delivered by the DCT framework.

4.3. Ablation Experiment

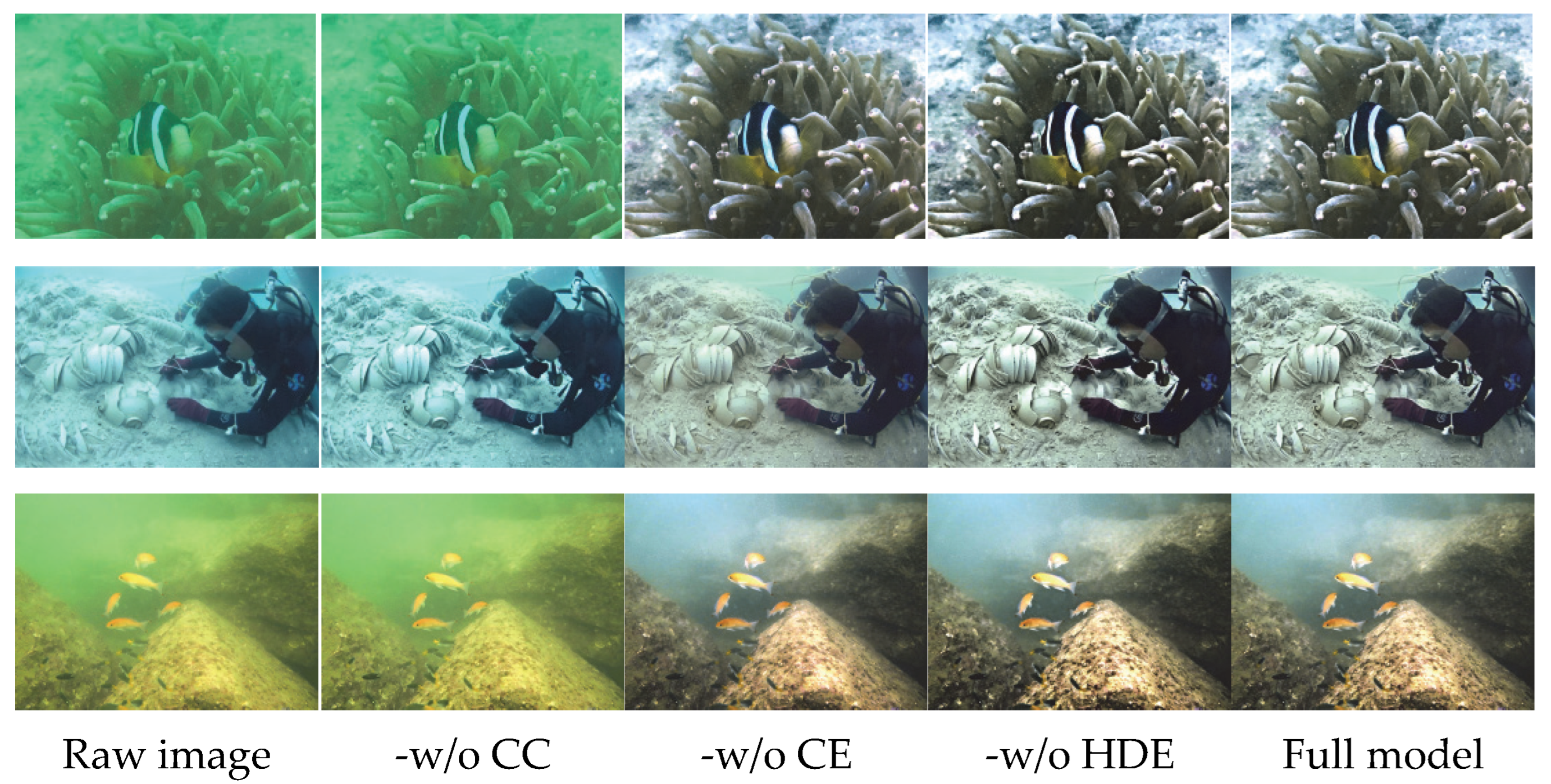

To evaluate the contribution of each constituent module (color correction, contrast enhancement, and DCT-based detail refinement), systematic ablation studies were conducted on the UIEB dataset. Removing one component at a time and comparing the resulting performance reveals both the standalone efficacy of each module and their synergy in improving underwater image quality.

For the ablation experiment, representative samples were selected from the UIEB dataset, and four comparison scenarios were set up to isolate the influence of each component: the original image; the method without color correction (-w/o CC), which removes the color correction module but retains contrast enhancement and DCT detail enhancement; the method without contrast enhancement (-w/o CE), which removes the contrast enhancement module but retains color correction and DCT detail enhancement; and the method without DCT detail enhancement (-w/o HDE), which removes the DCT detail enhancement module but retains color correction and contrast enhancement. The evaluation combines qualitative and quantitative analysis. Qualitative results are presented as visual comparisons in Figure 3 to show the differences among the scenarios. For the quantitative assessment, UIQM, SSIM, PSNR, UCIQE, IE, AG, and SD are computed, and the average scores of each scenario on the UIEB dataset are summarized in Table 4, where bold values indicate the best result for each metric.
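The ablation settings can be expressed as one pipeline with switchable stages. The sketch below is hypothetical: this section does not specify the color correction, contrast enhancement, or detail enhancement algorithms, so gray-world white balance, a per-channel 1st–99th percentile linear stretch, and a whole-image DCT gain ramp stand in for them, and the stage order (CC, then CE, then HDE) is an assumption. The function names and the `VARIANTS` table are illustrative only.

```python
import numpy as np


def gray_world(rgb: np.ndarray) -> np.ndarray:
    """Stand-in color correction: scale each channel so its mean matches the mean of all channels."""
    means = rgb.reshape(-1, 3).mean(axis=0)
    return np.clip(rgb * (means.mean() / np.maximum(means, 1e-6)), 0.0, 255.0)


def percentile_stretch(rgb: np.ndarray, lo: float = 1.0, hi: float = 99.0) -> np.ndarray:
    """Stand-in contrast enhancement: map each channel's [p_lo, p_hi] range linearly to [0, 255]."""
    out = np.empty(rgb.shape, dtype=np.float64)
    for ch in range(3):
        p_lo, p_hi = np.percentile(rgb[..., ch], [lo, hi])
        out[..., ch] = (rgb[..., ch] - p_lo) * 255.0 / max(p_hi - p_lo, 1e-6)
    return np.clip(out, 0.0, 255.0)


def _dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis matrix (rows are frequencies)."""
    k, i = np.arange(n)[:, None], np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def dct_detail(rgb: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Stand-in detail enhancement: whole-image DCT per channel with a frequency-ramp gain."""
    h, w = rgb.shape[:2]
    ch_mat, cw_mat = _dct_matrix(h), _dct_matrix(w)
    u = np.arange(h)[:, None] / max(h - 1, 1)
    v = np.arange(w)[None, :] / max(w - 1, 1)
    gain = 1.0 + alpha * (u + v) / 2.0  # 1 at DC, 1 + alpha at the highest frequency
    out = np.empty(rgb.shape, dtype=np.float64)
    for ch in range(3):
        coeffs = ch_mat @ rgb[..., ch] @ cw_mat.T
        out[..., ch] = ch_mat.T @ (coeffs * gain) @ cw_mat
    return np.clip(out, 0.0, 255.0)


STAGES = (("CC", gray_world), ("CE", percentile_stretch), ("HDE", dct_detail))
VARIANTS = {"full": (), "-w/o CC": ("CC",), "-w/o CE": ("CE",), "-w/o HDE": ("HDE",)}


def enhance(rgb: np.ndarray, disabled=()) -> np.ndarray:
    """Run the enabled stages in order, skipping any stage named in `disabled`."""
    out = rgb.astype(np.float64)
    for name, stage in STAGES:
        if name not in disabled:
            out = stage(out)
    return out
```

For example, `{name: enhance(img, disabled) for name, disabled in VARIANTS.items()}` produces one output per setting, and each output can then be scored with the same metric functions used for the full method.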

Figure 3 shows original underwater images from the UIEB dataset together with the results of the complete method and the three ablation scenarios. Visual inspection clearly reveals the function of each module. Without color correction (-w/o CC), the image retains moderate contrast and coarse details but suffers from a pronounced blue-green cast caused by wavelength-selective attenuation; the foreground and background show poor chromatic coherence, giving an unnaturally rigid appearance. This confirms that the color correction module is essential for neutralizing the underwater color shift and rebalancing channel intensities. When contrast enhancement is disabled (-w/o CE), the colors are restored to a natural palette, but the dynamic range collapses: dark-region details are submerged, bright-region highlights are compressed, and the entire image appears veiled and grayish. Even though the detail enhancement module is active, the low-contrast baseline prevents texture information from being perceived, underscoring the role of contrast enhancement in expanding the dynamic range. Without DCT detail enhancement (-w/o HDE), color fidelity and global contrast remain satisfactory, but object boundaries and fine textures are blurred and the sense of depth is reduced; in complex regions, high-frequency details are clearly lost. This demonstrates that the DCT module, by boosting high-frequency coefficients in a visually adaptive manner, compensates for the local detail loss that contrast enhancement alone cannot restore.

Table 4 presents the average quantitative results of the complete method and the three ablation scenarios on the UIEB dataset. The full algorithm outperforms all degraded variants, corroborating the synergistic value of color correction, contrast enhancement, and DCT-based detail refinement. Removing color correction (-w/o CC) reduces UIQM to 2.7586 and UCIQE to 0.4100, confirming that this module is indispensable for natural color recovery and overall perceptual quality. Removing contrast enhancement (-w/o CE) lowers PSNR to 22.28 dB; although the average gradient remains relatively high (101.11), the drop in luminance fidelity and global quality underscores the module's role in strengthening image layering. Removing DCT detail enhancement (-w/o HDE) lowers the average gradient to 91.79 and the information entropy to 7.583, revealing a clear loss of texture sharpness and fine detail. The complete algorithm, which integrates all three modules, attains UIQM = 4.5542, PSNR = 31.32 dB, UCIQE = 0.5100, and AG = 122.42, delivering the best color naturalness, contrast layering, and detail sharpness.
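The Table 4 values quoted above can be turned into relative changes with a few lines of arithmetic. This sketch uses only the numbers stated in the preceding paragraph. It also converts the 9.04 dB PSNR drop of -w/o CE into a mean-squared-error ratio via PSNR = 10 log₁₀(peak²/MSE): a drop of Δ dB multiplies the MSE by 10^(Δ/10), so removing contrast enhancement raises the MSE by roughly a factor of eight.

```python
FULL = {"UIQM": 4.5542, "UCIQE": 0.5100, "AG": 122.42}
ABLATED = {
    "-w/o CC": {"UIQM": 2.7586, "UCIQE": 0.4100},
    "-w/o CE": {"AG": 101.11},
    "-w/o HDE": {"AG": 91.79},
}
PSNR_FULL, PSNR_WO_CE = 31.32, 22.28  # dB, from Table 4


def relative_drop(full_value: float, ablated_value: float) -> float:
    """Percentage decrease of a metric relative to the full method."""
    return 100.0 * (full_value - ablated_value) / full_value


def mse_ratio_from_psnr_drop(delta_db: float) -> float:
    """Since PSNR = 10*log10(peak^2 / MSE), a PSNR drop of delta_db scales MSE by 10**(delta_db/10)."""
    return 10.0 ** (delta_db / 10.0)


for variant, scores in ABLATED.items():
    for metric, value in scores.items():
        print(f"{variant:9s} {metric:5s} {relative_drop(FULL[metric], value):6.2f}% lower")

delta = PSNR_FULL - PSNR_WO_CE
print(f"-w/o CE: PSNR {delta:.2f} dB lower, MSE about {mse_ratio_from_psnr_drop(delta):.2f}x higher")
```

With these inputs the drops are about 39.4% (UIQM) and 19.6% (UCIQE) for -w/o CC, 17.4% (AG) for -w/o CE, and 25.0% (AG) for -w/o HDE.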