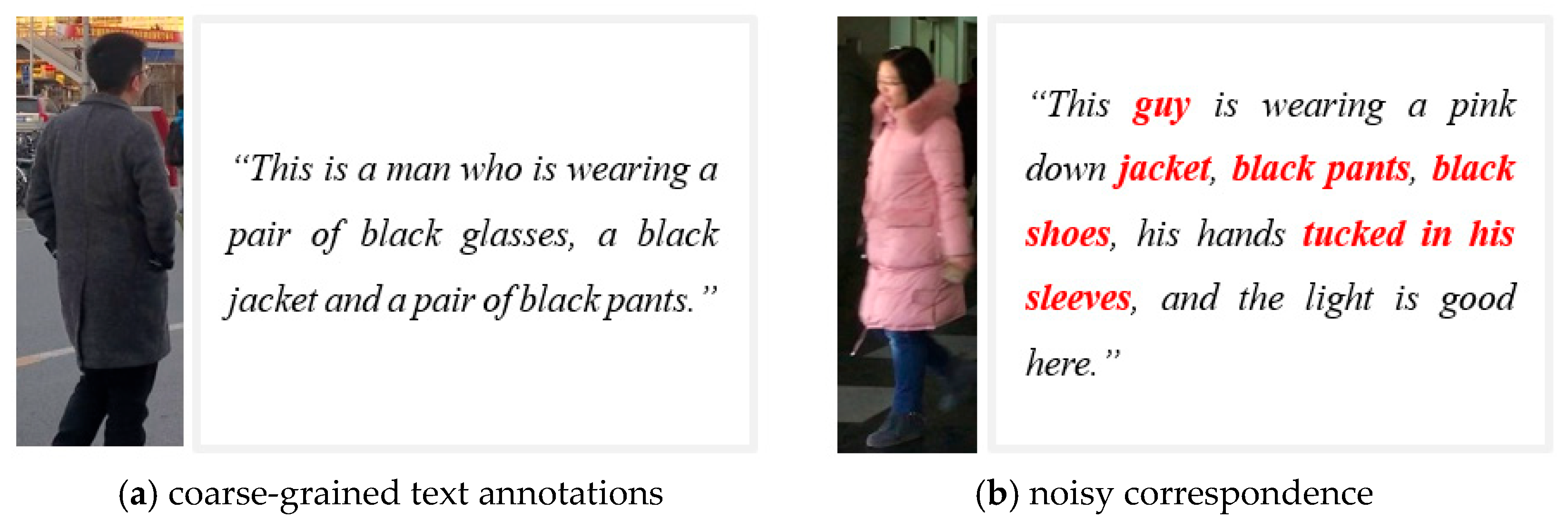

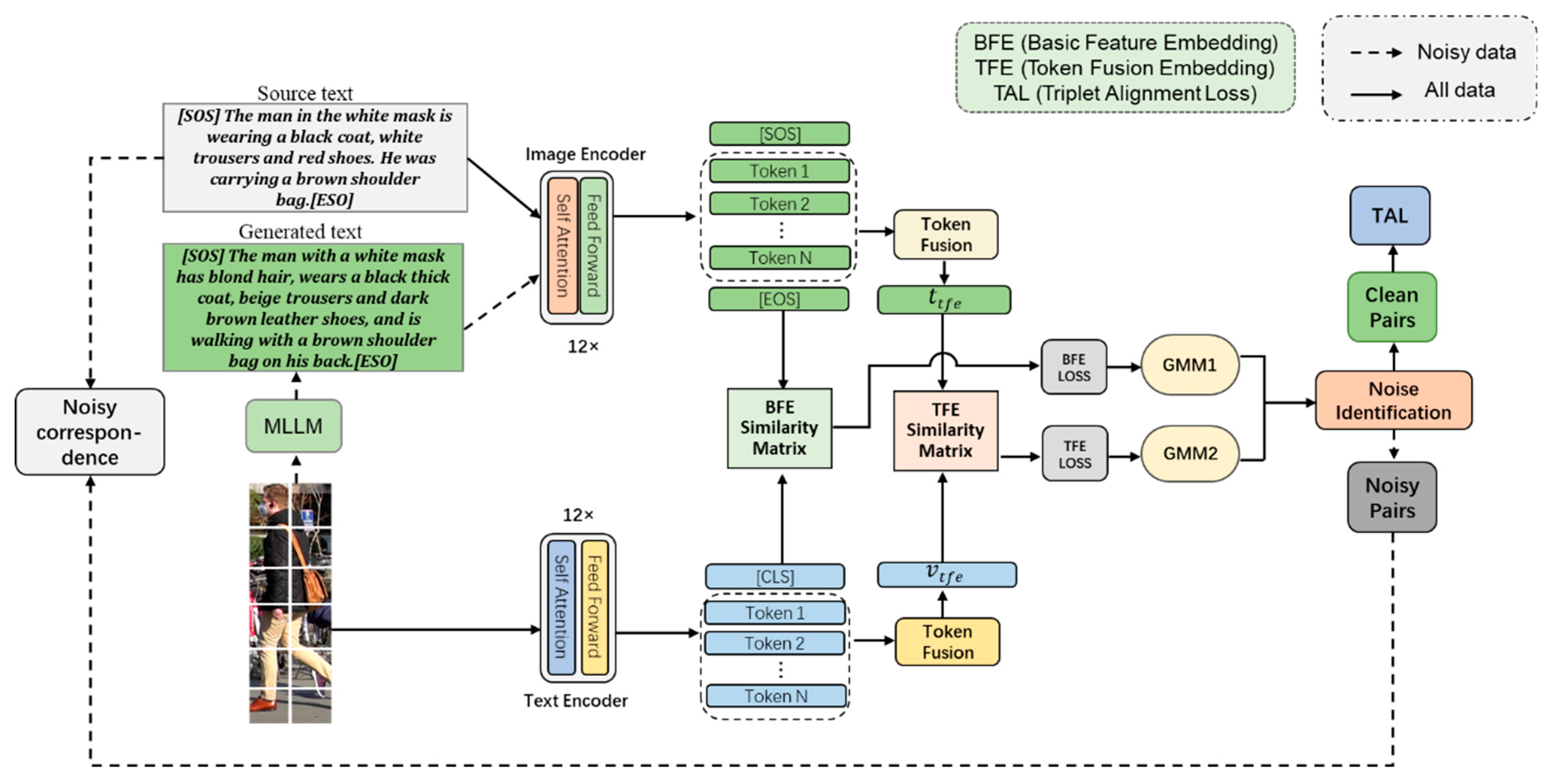

In this section, we present our proposed framework. To achieve robust fine-grained cross-modal alignment, particularly under noisy conditions, our framework employs three key components: (1) feature representations, comprising basic feature embedding (BFE) and token fusion embedding (TFE) features; (2) adaptive pseudo-text augmentation, which first identifies noisy image–text pairs using a Gaussian mixture model (GMM) so that subsequent processing focuses on cleaner data, and then generates high-quality pseudo-texts for the noisy images via a multimodal large language model (MLLM), recovering valuable information from samples that would otherwise be discarded; and (3) cross-modal alignment, optimized with the triplet alignment loss (TAL), which stabilizes training by relaxing the reliance on the hardest negative samples. These components work together to improve robustness to noise. The overall framework of our method is illustrated in Figure 2; we detail each part next.

3.1. Feature Representations

In this subsection, we use the visual and text encoders of the pre-trained CLIP model to obtain token representations and implement cross-modal interactions through two token fusion modules.

Image encoder: We adopt the pre-trained ViT of CLIP to obtain the image embedding. Because the image resolution of the text-to-image person re-identification datasets differs from that of the WIT images on which CLIP was pre-trained, the positional embeddings of the pre-trained ViT cannot be loaded directly. In this paper, we therefore resize the positional embeddings to the new resolution using the bilinear 2D interpolation adopted in TransReID [5].
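The resizing step can be illustrated with a minimal pure-Python sketch of bilinear 2D interpolation over a grid of positional embeddings. It assumes that the [CLS] embedding is copied unchanged and only the patch grid is resized, and it uses align-corners sampling for simplicity; library routines such as `torch.nn.functional.interpolate` with `mode="bilinear"` may follow a different corner convention. The function name `resize_pos_embed` and the grid sizes mentioned below are illustrative assumptions, not details taken from TransReID.

```python
from typing import List, Tuple

Vector = List[float]


def resize_pos_embed(pos_embed: List[Vector], old_hw: Tuple[int, int],
                     new_hw: Tuple[int, int]) -> List[Vector]:
    """Bilinearly resize ViT positional embeddings (align-corners sampling).

    pos_embed[0] is the [CLS] embedding; pos_embed[1:] is a row-major grid of
    old_h * old_w patch embeddings, each a D-dimensional vector.
    """
    (old_h, old_w), (new_h, new_w) = old_hw, new_hw
    cls, grid = pos_embed[0], pos_embed[1:]
    assert len(grid) == old_h * old_w, "grid size does not match old_hw"
    dim = len(cls)

    def src(i: int, new_n: int, old_n: int) -> Tuple[int, int, float]:
        # Map output index i to a source coordinate; return both neighbours and the weight.
        pos = 0.0 if new_n == 1 else i * (old_n - 1) / (new_n - 1)
        lo = int(pos)
        return lo, min(lo + 1, old_n - 1), pos - lo

    resized = []
    for i in range(new_h):
        y0, y1, dy = src(i, new_h, old_h)
        for j in range(new_w):
            x0, x1, dx = src(j, new_w, old_w)
            a, b = grid[y0 * old_w + x0], grid[y0 * old_w + x1]
            c, d = grid[y1 * old_w + x0], grid[y1 * old_w + x1]
            resized.append([(1 - dy) * ((1 - dx) * a[k] + dx * b[k])
                            + dy * ((1 - dx) * c[k] + dx * d[k]) for k in range(dim)])
    # The [CLS] embedding has no spatial position, so it is copied unchanged.
    return [list(cls)] + resized
```

For example, the 14 × 14 grid of a 224 × 224 input with 16 × 16 patches would be resized for hypothetical 384 × 128 person images with `resize_pos_embed(pos_embed, (14, 14), (24, 8))`, giving 1 + 192 embeddings.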

Given an input image $I \in \mathbb{R}^{H \times W \times C}$, where $H$, $W$, and $C$ denote the height, width, and number of channels of the image, respectively, we divide the image into $N = HW/P^2$ non-overlapping patches of $P \times P = 16 \times 16$ pixels: $\{x_p^i \mid i = 1, 2, \dots, N\}$. The $N$ patches are then projected through a linear projection layer to obtain $N$ $D$-dimensional patch embeddings, and a learnable [CLS] embedding $x_{cls}$ is prepended to the sequence. To learn the relative positional relationships between the patches, a positional embedding $E_{pos} \in \mathbb{R}^{(N+1) \times D}$ is added to the sequence, which can be expressed as follows:

$$z_0 = [x_{cls}; E x_p^1; E x_p^2; \dots; E x_p^N] + E_{pos},$$

where $x_p^i$ is a flattened patch and $E$ denotes the linear projection layer that maps each patch to a $D$-dimensional vector. The resulting embedding sequence is then fed into the ViT. After $L$ transformer blocks, the output embedding of the [CLS] token, $z_L^{cls}$, is mapped into the joint image–text embedding space by a linear projection, and the resulting vector $f^{v}_{cls}$ is used as the global feature representation of the image.
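The patch embedding and positional encoding above can be traced on a toy input with the sketch below. It is illustrative only: real ViT implementations realize $E$ as a strided convolution over tensors, and the helper names `patchify` and `embed_patches`, as well as the 4 × 4 single-channel image used to exercise them, are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def patchify(image: List[List[List[float]]], p: int) -> Matrix:
    """Split an H x W x C image into N = HW / p^2 flattened p x p patches (row-major)."""
    h, w = len(image), len(image[0])
    assert h % p == 0 and w % p == 0, "H and W must be divisible by the patch size"
    patches = []
    for top in range(0, h, p):
        for left in range(0, w, p):
            # Flatten pixels row by row with channels innermost: length p * p * C.
            patches.append([ch for r in range(top, top + p)
                            for c in range(left, left + p)
                            for ch in image[r][c]])
    return patches


def embed_patches(patches: Matrix, proj: Matrix, cls_token: List[float],
                  pos_embed: Matrix) -> Matrix:
    """z_0 = [x_cls; E x_p^1; ...; E x_p^N] + E_pos, with E given as a D x (p*p*C) matrix."""
    tokens = [list(cls_token)] + [
        [sum(e * x for e, x in zip(row, patch)) for row in proj] for patch in patches
    ]
    assert len(pos_embed) == len(tokens), "E_pos must have N + 1 rows"
    return [[t + q for t, q in zip(tok, pos)] for tok, pos in zip(tokens, pos_embed)]
```

With $P = 16$, a hypothetical 384 × 128 × 3 input would give $N = 24 \times 8 = 192$ patches, each flattened to $16 \cdot 16 \cdot 3 = 768$ values, so $z_0$ would have 193 rows.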

Text encoder: For the input text T, we directly use the CLIP text encoder to extract the textual representation. Following IRRA [7], we first tokenize T into a token sequence using lower-cased byte pair encoding (BPE) with a vocabulary size of 49,152. The [SOS] and [EOS] embeddings are inserted at the beginning and end of the sequence to mark the start and end of the sentence, respectively, and the maximum sequence length is set to 77 for computational efficiency. To learn the relative positions of the words in the sentence, a positional embedding $E^{t}_{pos}$ is also added to the sequence of word embeddings. After the projection transformation, the input sequence can be represented as:

$$W = [w_{sos}; w_1; w_2; \dots; w_n; w_{eos}],$$

where $w_k$ is the embedding of the $k$th token and $n$ is the number of tokens in the description. The final text-specific embedding input to the transformer can be expressed as:

$$z^{t}_0 = W + E^{t}_{pos}.$$
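The construction of the text input sequence can be sketched as follows. The sketch takes already-computed BPE ids as input (BPE itself is not shown), and the special-token ids are assumptions matching the OpenAI CLIP tokenizer, where `<|startoftext|>` and `<|endoftext|>` map to 49406 and 49407; zero padding and keeping [EOS] as the last token after truncation are likewise assumptions of this sketch.

```python
from typing import List, Tuple

# Assumed special-token ids (OpenAI CLIP tokenizer convention).
SOS_ID = 49406
EOS_ID = 49407
CONTEXT_LENGTH = 77  # maximum text sequence length used in this section


def build_text_input(bpe_ids: List[int],
                     context_length: int = CONTEXT_LENGTH) -> Tuple[List[int], int]:
    """Wrap BPE ids with [SOS]/[EOS], truncate to context_length, and zero-pad.

    Returns the padded id sequence and the index of the [EOS] token, whose
    output embedding serves as the global text feature.
    """
    tokens = [SOS_ID] + list(bpe_ids) + [EOS_ID]
    if len(tokens) > context_length:
        # Truncate but keep [EOS] as the final token.
        tokens = tokens[:context_length]
        tokens[-1] = EOS_ID
    eos_index = len(tokens) - 1
    padded = tokens + [0] * (context_length - len(tokens))
    return padded, eos_index
```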

Similarly, at the last layer of the transformer, the output text embeddings are projected into the joint image–text embedding space, and the vector $f^{t}_{eos}$ at the [EOS] position is regarded as the global text feature representation. To compute the similarity between an image–text pair (I, T), we directly use the global [CLS] and [EOS] features of the image and text, $f^{v}_{cls}$ and $f^{t}_{eos}$, and obtain the basic feature embedding (BFE) similarity as their cosine similarity, i.e.,

$$S^{bfe}(I, T) = \frac{f^{v}_{cls} \cdot f^{t}_{eos}}{\lVert f^{v}_{cls} \rVert_2 \, \lVert f^{t}_{eos} \rVert_2}.$$
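A minimal sketch of the BFE similarity follows, assuming the projected token outputs of each encoder are available as lists of vectors, with the image [CLS] output at index 0 and the text global feature read at the [EOS] position. The function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def bfe_similarity(image_tokens: List[Vector], text_tokens: List[Vector],
                   eos_index: int) -> float:
    """S_bfe: cosine similarity of the image [CLS] output and the text [EOS] output."""
    return cosine_similarity(image_tokens[0], text_tokens[eos_index])
```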

Token fusion: To capture the correspondence between modalities more precisely, we introduce local features for a finer-grained interaction between the two modalities. The global features ([CLS] and [EOS]) are obtained by a weighted aggregation of all local features, and the aggregation weights reflect the correlation between the global feature and each local feature. The most informative tokens can therefore be used to learn more discriminative embeddings and, in turn, more representative global features. Following the previous approach [37], we select the local features with the highest correlation scores for fusion; these scores can be extracted directly from the self-attention weights of the last Transformer layer. After selecting a proportion $R$ of the local token features (the top-$K$ tokens) according to these weights, we apply a feature transformation to obtain more expressive representations. The transformation uses the same embedding module as the residual block [17]:

$$f^{m}_{tfe} = \mathrm{L2Norm}\Big(\mathrm{MaxPool}\big(\mathrm{MLP}_m(X^{s}_m) + \mathrm{FC}_m(X^{s}_m)\big)\Big), \quad m \in \{v, t\},$$

where $X^{s}_m$ denotes the top-$K$ most informative local features selected for modality $m$, and $f^{v}_{tfe}$ and $f^{t}_{tfe}$ are the image and text features after L2-normalization. Finally, we compute the cosine similarity $S^{tfe}$ between $f^{v}_{tfe}$ and $f^{t}_{tfe}$, which is used together with the global feature similarity $S^{bfe}$ to evaluate the cross-modal matching degree during both training and inference.
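The token fusion step can be sketched as follows: rank local tokens by their last-layer attention to the global token, keep the top $K$, pass each through a residual embedding (an MLP branch plus a linear shortcut), max-pool over the selected tokens, and L2-normalize. This is a sketch under assumptions: $K = \max(1, \lfloor R \cdot n \rfloor)$ for $n$ local tokens, max-pooling as the aggregation operator, and bias-free layers built by the hypothetical helpers `linear` and `make_mlp`; it is not the authors' implementation.

```python
import math
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]


def linear(weights: List[Vector]) -> Layer:
    """Bias-free linear layer given as weight rows (hypothetical helper)."""
    return lambda x: [sum(w * v for w, v in zip(row, x)) for row in weights]


def make_mlp(w1: List[Vector], w2: List[Vector]) -> Layer:
    """Two-layer MLP with a ReLU in between (hypothetical helper)."""
    hidden, out = linear(w1), linear(w2)
    return lambda x: out([max(0.0, h) for h in hidden(x)])


def select_top_k(tokens: List[Vector], attn: List[float], ratio: float) -> List[Vector]:
    """Keep the K = max(1, floor(ratio * n)) tokens with the largest attention scores."""
    k = max(1, int(ratio * len(tokens)))
    order = sorted(range(len(tokens)), key=lambda i: attn[i], reverse=True)
    return [tokens[i] for i in order[:k]]


def token_fusion_embedding(tokens: List[Vector], attn: List[float], ratio: float,
                           fc: Layer, mlp: Layer) -> Vector:
    """Residual embedding (MLP branch + FC shortcut) per token, max-pool, L2-normalize."""
    selected = select_top_k(tokens, attn, ratio)
    embedded = [[m + f for m, f in zip(mlp(x), fc(x))] for x in selected]
    pooled = [max(column) for column in zip(*embedded)]  # element-wise max over tokens
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0  # guard against a zero vector
    return [v / norm for v in pooled]
```

Because both the image and text outputs of `token_fusion_embedding` are unit vectors, $S^{tfe}$ reduces to their dot product.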

3.2. Pseudo-Text Augmentations

3.2.1. Noise Identification

Noisy pairs are identified separately in the two similarity branches: BFE and token fusion embedding (TFE). TFE captures fine-grained correspondences between local visual patches and textual tokens: it selects the top-K informative tokens from the image and text token sequences according to the last-layer self-attention correlation scores, applies a residual embedding block to each selected token, and aggregates them via L2-normalized fusion. Because deep neural networks tend to fit clean samples faster than noisy ones, clean pairs generally yield lower loss values than noisy pairs [37,43]. We therefore fit two two-component Gaussian mixture models (GMMs), one per branch, to the per-sample losses computed by BFE and TFE, modeling the image–text pairs as a mixture of a clean distribution and a noisy distribution: the Gaussian component with the lower mean is assigned to the clean set and the other to the noisy set.

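For the $i$th sample pair, we define its per-sample loss as $\ell_i = \mathcal{L}_{tal}(I_i, T_i)$ ($\mathcal{L}_{tal}$ is defined in Equation (10)). The per-sample losses are fed into the GMM, which is optimized with the Expectation–Maximization (EM) algorithm [37]; we then compute the posterior probability

$$p(k \mid \ell_i) = \frac{p(k)\, p(\ell_i \mid k)}{p(\ell_i)}, \quad k \in \{c, n\},$$

where $p(k \mid \ell_i)$ denotes the probability that the $i$th pair belongs to the clean ($k = c$) or noisy ($k = n$) component, and we write $w_i = p(c \mid \ell_i)$. Given a threshold $\delta$, we divide the dataset $\mathcal{D}$ of $M$ image–text pairs into a clean subset $\mathcal{D}_c$ and a noisy subset $\mathcal{D}_n$:

$$\mathcal{D}_c = \{(I_i, T_i) \mid w_i > \delta\}, \qquad \mathcal{D}_n = \{(I_i, T_i) \mid w_i \le \delta\}, \qquad i = 1, \dots, M.$$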
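The noise identification step can be sketched with a small expectation–maximization loop for a one-dimensional, two-component GMM over per-sample losses, followed by thresholding the clean posterior $w_i$. The initialization at the minimum and maximum loss, the variance regularizer `reg`, and the default threshold `delta = 0.5` are assumptions of this sketch, since the section does not state them; in practice a library estimator such as scikit-learn's `GaussianMixture` would typically be used instead.

```python
import math
from typing import List, Set, Tuple


def _responsibilities(x: float, weights: List[float], means: List[float],
                      variances: List[float]) -> List[float]:
    """Posterior p(k | x) for each of the two components, computed in log space."""
    logs = [math.log(weights[k]) - 0.5 * math.log(2 * math.pi * variances[k])
            - 0.5 * (x - means[k]) ** 2 / variances[k] for k in range(2)]
    top = max(logs)
    exps = [math.exp(v - top) for v in logs]
    total = sum(exps)
    return [e / total for e in exps]


def fit_gmm_clean_posterior(losses: List[float], iters: int = 100,
                            reg: float = 1e-6) -> List[float]:
    """Fit a two-component 1-D GMM to per-sample losses with EM.

    Returns w_i = p(clean | loss_i), where "clean" is the lower-mean component.
    """
    n = len(losses)
    mu = sum(losses) / n
    var0 = sum((l - mu) ** 2 for l in losses) / n + reg
    weights, means, variances = [0.5, 0.5], [min(losses), max(losses)], [var0, var0]
    for _ in range(iters):
        # E-step: responsibilities under the current parameters.
        resp = [_responsibilities(l, weights, means, variances) for l in losses]
        # M-step: re-estimate mixing weights, means, and variances.
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            weights[k] = nk / n
            means[k] = sum(r[k] * l for r, l in zip(resp, losses)) / nk
            variances[k] = sum(r[k] * (l - means[k]) ** 2 for r, l in zip(resp, losses)) / nk + reg
    clean = 0 if means[0] <= means[1] else 1
    # A final E-step with the fitted parameters gives the posteriors.
    return [_responsibilities(l, weights, means, variances)[clean] for l in losses]


def split_by_threshold(posteriors: List[float], delta: float = 0.5) -> Tuple[Set[int], Set[int]]:
    """D_c = {i | w_i > delta}, D_n = {i | w_i <= delta}, as sets of pair indices."""
    clean = {i for i, w in enumerate(posteriors) if w > delta}
    return clean, set(range(len(posteriors))) - clean
```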

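We denote the noisy subsets obtained from the two GMMs (fitted on the BFE and TFE losses) as $\mathcal{D}_n^{b}$ and $\mathcal{D}_n^{t}$, respectively. The final partition of the full set $\mathcal{D}$ of $M$ pairs yields a noisy subset $\mathcal{D}_n$ and a clean subset $\mathcal{D}_c$:

$$\mathcal{D}_n = \mathcal{D}_n^{b} \cap \mathcal{D}_n^{t}, \qquad \mathcal{D}_c = \mathcal{D} \setminus \left(\mathcal{D}_n^{b} \cup \mathcal{D}_n^{t}\right).$$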
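Combining the two GMM partitions can be sketched with set operations on pair indices. This assumes the reading that the final noisy set contains pairs flagged as noisy by both GMMs, the final clean set contains pairs flagged by neither, and the remaining pairs (flagged by exactly one GMM) form the ambiguous set; `consensus_split` is a hypothetical helper.

```python
from typing import Set, Tuple


def consensus_split(num_pairs: int, noisy_bfe: Set[int],
                    noisy_tfe: Set[int]) -> Tuple[Set[int], Set[int], Set[int]]:
    """Combine the BFE and TFE partitions into clean, noisy, and ambiguous index sets."""
    everything = set(range(num_pairs))
    noisy = noisy_bfe & noisy_tfe                 # flagged noisy by both GMMs
    clean = everything - (noisy_bfe | noisy_tfe)  # flagged noisy by neither GMM
    ambiguous = everything - clean - noisy        # flagged by exactly one GMM
    return clean, noisy, ambiguous
```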

The remaining data, i.e., $\mathcal{D} \setminus (\mathcal{D}_c \cup \mathcal{D}_n)$, cannot be assigned confidently to either set. Rather than randomly classifying these ambiguous samples as noisy or clean, we adopt a soft-label weighting scheme based on their GMM posterior probabilities. Each sample $i$ contributes to the loss as

$$\mathcal{L}_i = w_i \, \ell_i,$$

where $w_i$ is the posterior probability that the $i$th pair is clean and $\ell_i$ is its per-sample TAL. Samples whose posterior lies in the range [0.4, 0.6] are temporarily excluded from gradient updates, forming an uncertainty zone that prevents unstable supervision. This modification improves training robustness and avoids reintroducing label noise into the optimization process.
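The soft weighting of ambiguous samples can be sketched as below. The uncertainty zone [0.4, 0.6] is taken from the text; summing (rather than averaging) the weighted losses and the helper name `weighted_loss` are assumptions. In an autograd framework, skipping a sample in this way removes its gradient contribution for that step.

```python
from typing import List, Tuple


def weighted_loss(losses: List[float], clean_posteriors: List[float],
                  zone: Tuple[float, float] = (0.4, 0.6)) -> float:
    """Sum of w_i * loss_i, skipping samples whose posterior w_i lies in the uncertainty zone."""
    low, high = zone
    total = 0.0
    for loss, w in zip(losses, clean_posteriors):
        if low <= w <= high:
            continue  # excluded from this update: too uncertain to supervise
        total += w * loss
    return total
```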

3.2.2. Pseudo-Text Generation

To replace manual annotations with high-quality textual descriptions, we use an MLLM to generate descriptions automatically. Specifically, we provide descriptions from the CUHK-PEDES, ICFG-PEDES, and RSTPReid datasets to GPT-3.5 [44] so that it captures their sentence patterns (i.e., description templates). After multiple rounds of dialog, ChatGPT generated 35 templates. For each image, we randomly select one of these templates and insert it into a static instruction to obtain the following dynamic prompt:

“Generate a description about the overall appearance of the person, including clothing, shoes, hairstyle, gender, and belongings, in a style similar to the template: ‘{template}’. If some requirements in the template are not visible, you can ignore them. Do not imagine any contents that are not in the image.”
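Building the dynamic prompt amounts to sampling one template and substituting it into the static instruction, as in the sketch below. The two templates in the usage check are hypothetical stand-ins for the 35 GPT-generated templates, which are not listed in this section, and `build_dynamic_prompt` is an illustrative name.

```python
import random
from typing import List, Optional

# Static instruction from this section; "{template}" is filled in per image.
STATIC_INSTRUCTION = (
    "Generate a description about the overall appearance of the person, including "
    "clothing, shoes, hairstyle, gender, and belongings, in a style similar to the "
    "template: ‘{template}’. If some requirements in the template are not visible, "
    "you can ignore them. Do not imagine any contents that are not in the image."
)


def build_dynamic_prompt(templates: List[str], rng: Optional[random.Random] = None) -> str:
    """Pick one description template at random and insert it into the static instruction."""
    rng = rng or random.Random()
    return STATIC_INSTRUCTION.format(template=rng.choice(templates))
```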

The publicly available Qwen-VL [42] supports a broad range of capabilities, and its lightweight, convenient deployment makes it a suitable choice. We therefore use Qwen-VL for pseudo-text generation, as illustrated in Figure 3.

Once training approaches convergence, the images from the image–text pairs classified as noisy in the preceding training epoch are automatically fed into the MLLM, which generates captions using the dynamic prompts. These captions replace the original noisy texts in the dataset, and training then continues on this refined dataset.

Decoding and hyperparameters: We used the following decoding hyperparameters unless otherwise noted: temperature = 0.3, top_p = 0.95, max_length = 64 tokens, and num_return_sequences = 5 (i.e., generate 5 candidate captions per image). The random seed for generation was fixed at 42 to improve reproducibility.

Post-generation filtering and selection: To reduce hallucination and improve semantic alignment, we apply a two-stage selection procedure. (1) Heuristic filtering removes candidates that contain tokens strongly contradicting the image (e.g., explicit object labels known to be absent from the dataset) or that use phrases such as “not visible”, which indicate model uncertainty. (2) CLIP reranking computes the CLIP cosine similarity between the image and each remaining candidate, and the caption with the highest similarity is selected as the pseudo-text replacement. If all candidates are filtered out, we fall back to the candidate with the highest CLIP score among all generated candidates. This selection strategy helps ensure that the chosen pseudo-text both matches the image semantically and follows the dataset-style templates.
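The two-stage selection can be sketched as follows. The CLIP scorer is abstracted as a callable `image_text_score(caption)` that is assumed to return the image–caption cosine similarity for the current image, and the banned-phrase list stands in for the heuristic filter; both are hypothetical interfaces, not a specific library API.

```python
from typing import Callable, List, Sequence


def select_pseudo_text(candidates: Sequence[str], image_text_score: Callable[[str], float],
                       banned_phrases: Sequence[str]) -> str:
    """Heuristic filtering, then CLIP-style reranking with a fallback to all candidates."""
    def passes(caption: str) -> bool:
        text = caption.lower()
        return not any(phrase.lower() in text for phrase in banned_phrases)

    kept = [c for c in candidates if passes(c)]
    # Fallback: if every candidate was filtered out, rerank the full candidate set.
    pool: List[str] = kept if kept else list(candidates)
    return max(pool, key=image_text_score)
```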

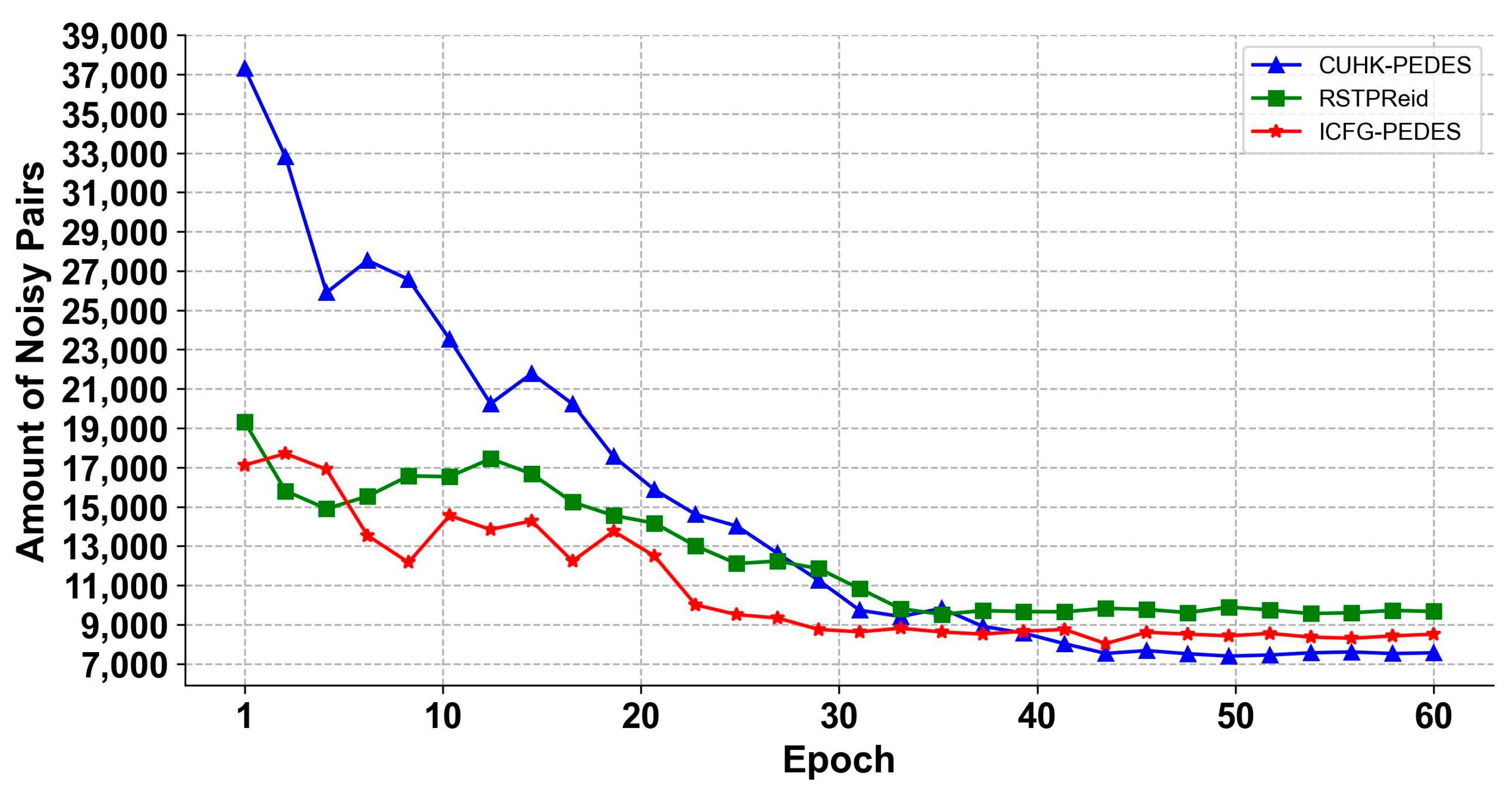

When generation occurs: Pseudo-texts are generated once the noise-identification step stabilizes. In our experiments, noise identification is observed to stabilize at around the 40th training epoch, so pseudo-text generation is executed at epoch 41, and the generated pseudo-texts replace the noisy captions for all subsequent training epochs.
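The one-shot generation schedule can be sketched as a check performed at the start of each epoch. The constant `GENERATION_EPOCH = 41` follows the text, while `generate(pair_id)` is a hypothetical wrapper around MLLM decoding and the filtering and reranking steps.

```python
from typing import Callable, Dict, Set

GENERATION_EPOCH = 41  # noise identification stabilizes around epoch 40


def maybe_replace_captions(epoch: int, captions: Dict[int, str], noisy_ids: Set[int],
                           generate: Callable[[int], str]) -> int:
    """At the generation epoch, overwrite captions of pairs flagged noisy in the previous epoch.

    Returns the number of captions replaced (0 on every other epoch).
    """
    if epoch != GENERATION_EPOCH:
        return 0
    for pair_id in noisy_ids:
        captions[pair_id] = generate(pair_id)  # hypothetical MLLM + filtering/reranking call
    return len(noisy_ids)
```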

3.2.3. Cross-Modal Alignment

Loss functions that perform well and are commonly used in text-to-image matching include the InfoNCE loss [45], the triplet ranking loss (TRL) [46], and the image–text similarity distribution matching (SDM) loss [7]. However, these losses face challenges in handling the hardest negative samples and in selecting appropriate similarity margins, particularly for text-to-image person re-identification under noisy correspondences. In such scenarios, focusing excessively on the hardest negatives may lead to local minima or even model collapse. In contrast, the triplet alignment loss (TAL) proposed by RDE [37] reduces the risk of the optimization being dominated by the hardest negatives.

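By introducing a temperature coefficient $\tau$, TAL considers all positive and negative pairs: it relaxes the similarity learning from the hardest negative to all negatives by replacing the hardest-negative similarity with a smooth upper bound, the temperature-scaled log-sum-exp of all negative similarities, in which each negative is weighted according to its similarity. This makes training more stable and comprehensive. We therefore use TAL to optimize the model. TAL is defined as:

$$\mathcal{L}_{tal}(I_i, T_i) = \left[m - S(I_i, T_i^{+}) + \tau \log \sum_{j} \exp\left(\frac{S(I_i, T_j^{-})}{\tau}\right)\right]_+ + \left[m - S(T_i, I_i^{+}) + \tau \log \sum_{j} \exp\left(\frac{S(T_i, I_j^{-})}{\tau}\right)\right]_+,$$

where $[x]_+ = \max(x, 0)$, $S(\cdot, \cdot)$ is the cosine similarity computed with either the BFE or the TFE features, $m$ denotes the margin between positive and negative similarities, $I_i$ and $T_i$ denote the image and text of the $i$th pair in a batch, and $\hat{T}_h$, $T_j^{-}$, and $T_i^{+}$ are the hardest negative, a negative, and the positive text of the anchor $I_i$, respectively ($I_j^{-}$ and $I_i^{+}$ are defined analogously for the anchor $T_i$). The log-sum-exp term upper-bounds the hardest-negative similarity, $S(I_i, \hat{T}_h) = \max_j S(I_i, T_j^{-}) \le \tau \log \sum_{j} \exp\left(S(I_i, T_j^{-})/\tau\right)$, so each term of TAL upper-bounds the corresponding hardest-negative triplet ranking loss.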
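A per-sample TAL over a batch similarity matrix can be sketched in plain Python as below, with `sim[i][j]` $= S(I_i, T_j)$ and identity labels `pids` used to decide which pairs count as negatives. Assumptions: only the paired sample is treated as the positive (variants that aggregate over all same-identity pairs are possible), same-identity non-paired samples are excluded from the negatives, and the `margin` and `tau` values in the usage check are illustrative rather than the settings used in this work.

```python
import math
from typing import List, Sequence


def _log_sum_exp(values: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(v)))."""
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))


def triplet_alignment_loss(sim: List[List[float]], pids: Sequence[int],
                           margin: float, tau: float) -> List[float]:
    """Per-sample TAL for a batch, where sim[i][j] = S(I_i, T_j).

    In both directions, the hardest-negative similarity is replaced by its smooth
    upper bound tau * log(sum_j exp(S_neg_j / tau)) over all negatives.
    """
    n = len(sim)
    losses = []
    for i in range(n):
        negatives = [j for j in range(n) if pids[j] != pids[i]]
        if not negatives:
            losses.append(0.0)  # no negatives in the batch: nothing to contrast against
            continue
        pos = sim[i][i]  # similarity of the matched pair (I_i, T_i)
        i2t = margin - pos + tau * _log_sum_exp([sim[i][j] / tau for j in negatives])
        t2i = margin - pos + tau * _log_sum_exp([sim[j][i] / tau for j in negatives])
        losses.append(max(0.0, i2t) + max(0.0, t2i))
    return losses
```

With a single negative, the log-sum-exp term equals that negative's similarity, so the loss reduces to the hardest-negative triplet ranking loss; as $\tau \to 0$ it approaches the hardest negative in general, while a larger $\tau$ spreads the gradient over more negatives.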