Jar-RetinexNet: A System for Non-Uniform Low-Light Enhancement of Hot Water Heater Tank Inner Walls

Abstract

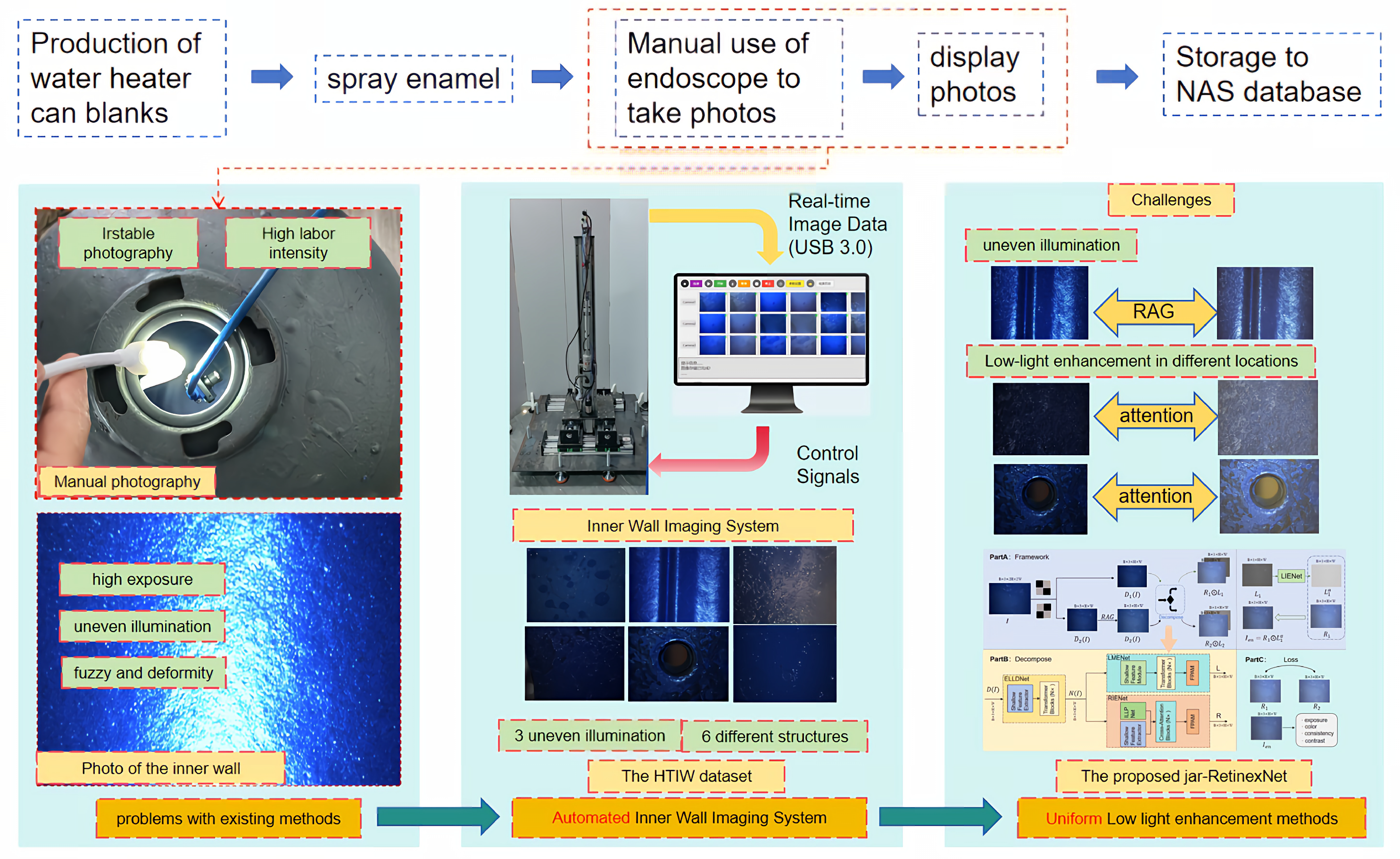

1. Introduction

- How can the adverse effects of non-uniform illumination be eliminated? Non-uniform lighting not only severely degrades the visual quality of images but also significantly impairs the accuracy of downstream task algorithms, such as defect detection.

- How can adaptive enhancement be performed for different illumination regions? The lighting conditions vary dramatically across different areas within the water heater tank. Without an adaptive approach, enhancement results are highly susceptible to localized over-exposure (over-enhancement) or loss of detail (under-enhancement).

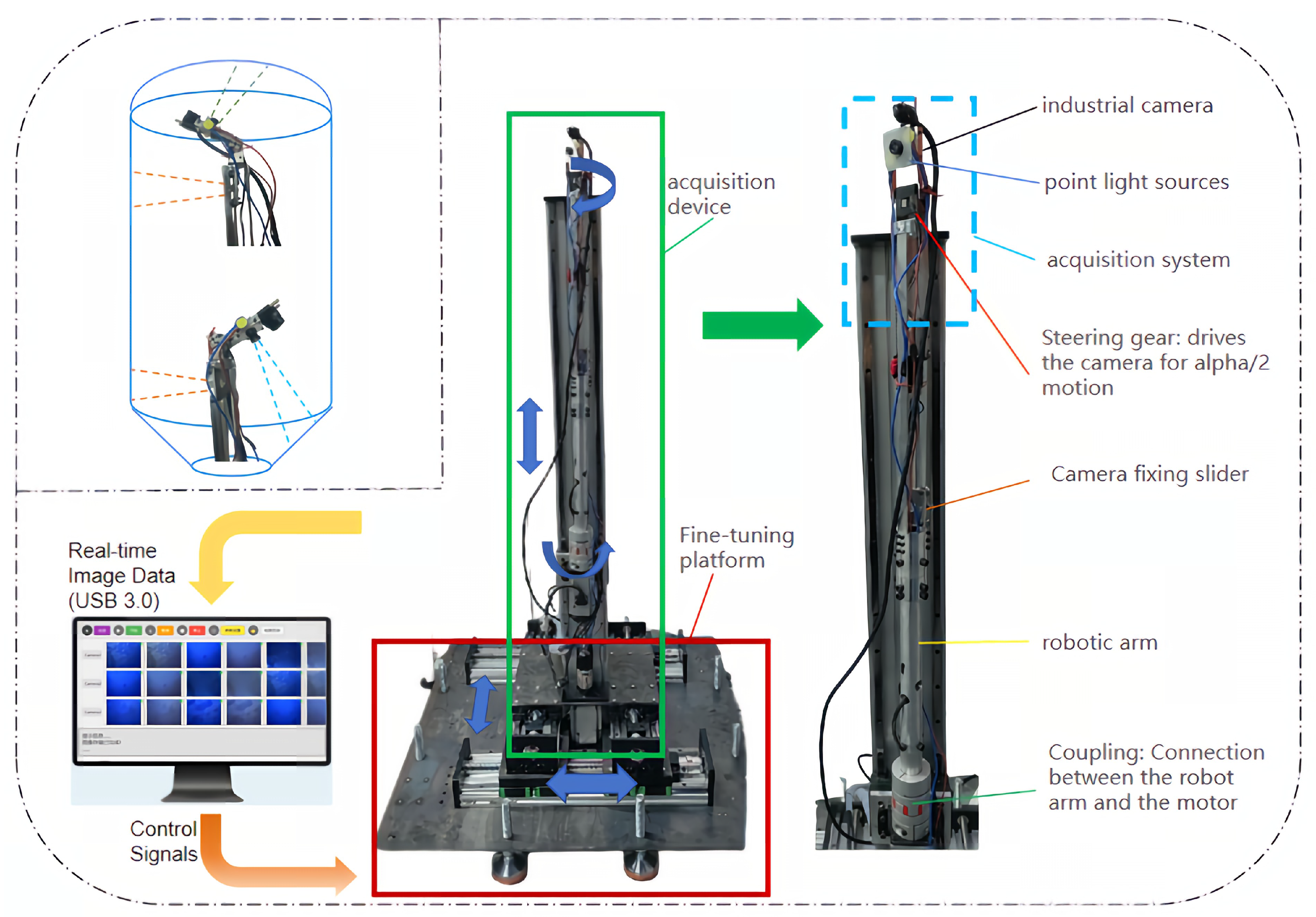

- We propose a novel hardware system for image acquisition inside water heater tanks, consisting of the IAR robot and IVES software (version 1.0). This system achieves efficient, full-coverage image acquisition through an aperture of approximately 50 mm, with an inspection time of 3 min per unit.

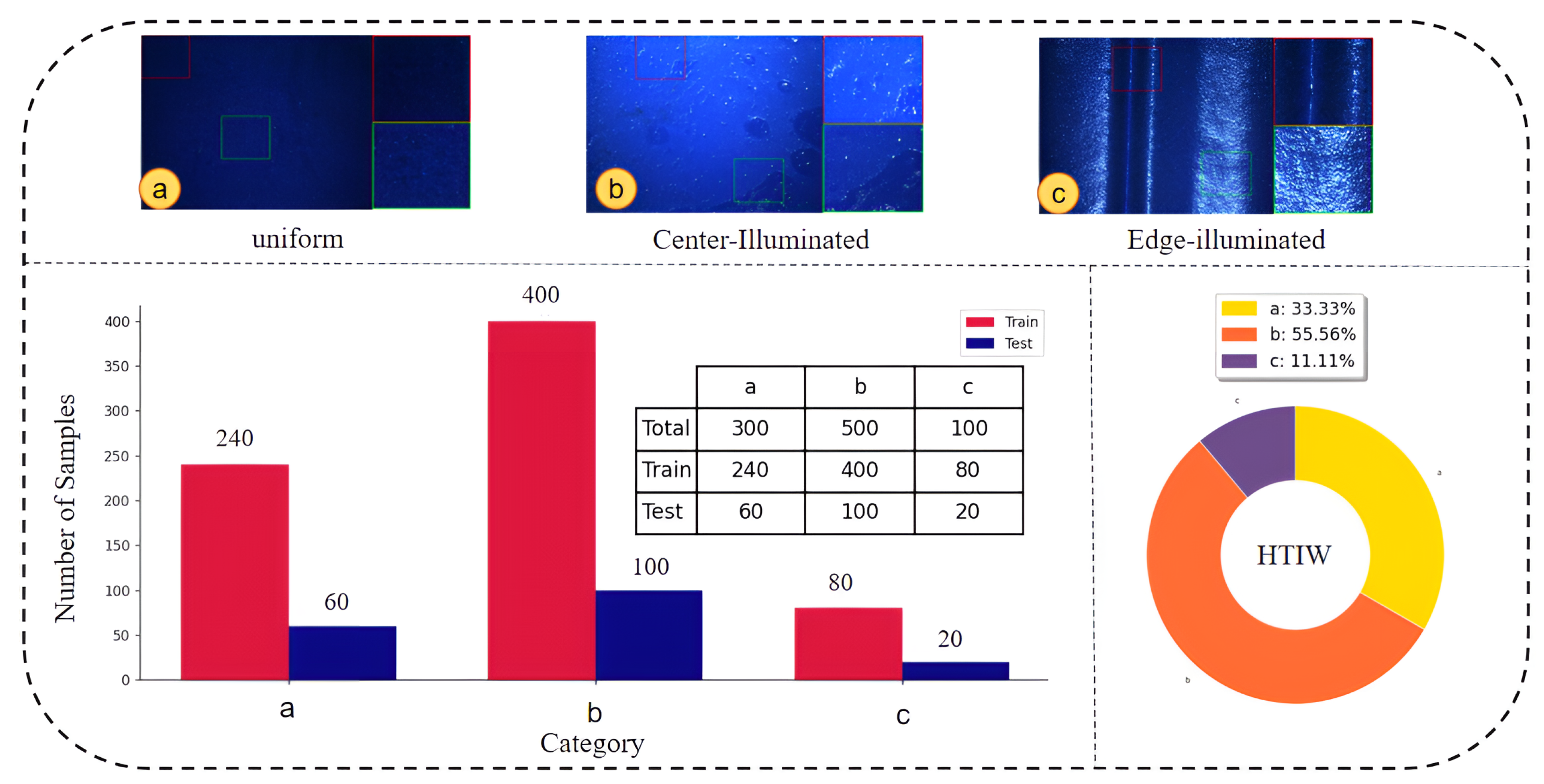

- To the best of our knowledge, we introduce the first public dataset for VE in ESWHs, named the HTIW dataset. It comprises 900 images covering 3 types of non-uniform illumination and 6 representative regions.

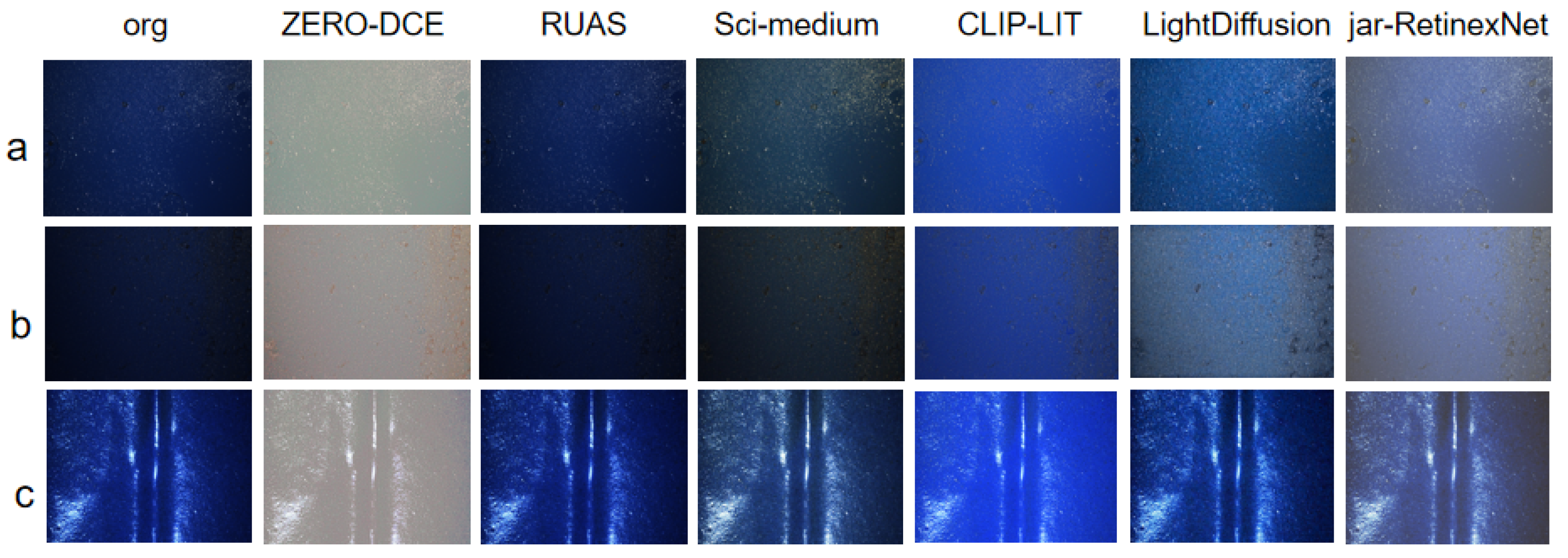

- We propose an effective non-uniform low-light enhancement network, jar-RetinexNet. In a comparison against five state-of-the-art methods, jar-RetinexNet demonstrates superior performance on multiple no-reference metrics and in visual quality.

2. Related Works

2.1. NUI Enhancement in Industrial Production

2.2. Image Acquisition (Systems) for VE in ESWHs

3. Proposed System and Dataset

3.1. Low-Light Image Enhancement System

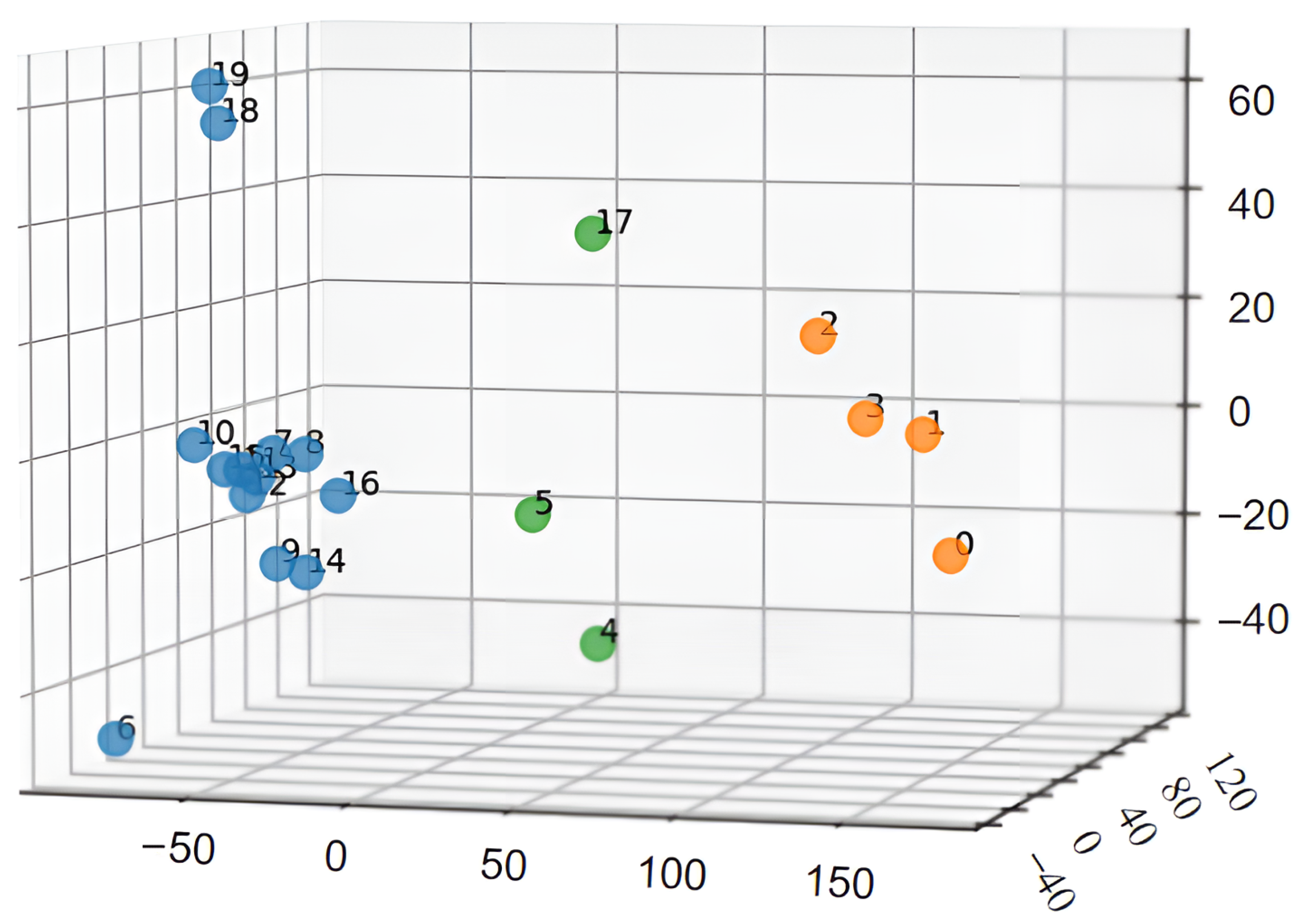

3.2. Dataset Construction Process

- Uniform Low-Light Distribution. Primarily found at the top and bottom regions of the tank (indices 0–3).

- Bright Center, Dark Edges. Mainly appears on the side walls and around small aperture areas of the tank (indices 6–16, 18–19).

- Dark Center, Bright Edges. Predominantly located in the weld seam areas (indices 4, 5, 17).
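The index ranges listed above can be captured in a small lookup table. The following is a minimal sketch, assuming the indices identify acquisition positions inside the tank; the constant and function names are hypothetical and do not come from the released dataset.

```python
# Hypothetical labels for the three illumination types described above.
UNIFORM_LOW_LIGHT = "uniform_low_light"
BRIGHT_CENTER = "bright_center_dark_edges"
DARK_CENTER = "dark_center_bright_edges"

# Build the index -> illumination type table from the ranges in the text.
ILLUMINATION_BY_INDEX = {}
for i in range(0, 4):                      # indices 0-3: top and bottom regions
    ILLUMINATION_BY_INDEX[i] = UNIFORM_LOW_LIGHT
for i in list(range(6, 17)) + [18, 19]:    # indices 6-16, 18-19: side walls, small apertures
    ILLUMINATION_BY_INDEX[i] = BRIGHT_CENTER
for i in (4, 5, 17):                       # indices 4, 5, 17: weld seam areas
    ILLUMINATION_BY_INDEX[i] = DARK_CENTER


def illumination_type(index: int) -> str:
    """Return the illumination type for a position index, or raise ValueError."""
    try:
        return ILLUMINATION_BY_INDEX[index]
    except KeyError:
        raise ValueError(f"unknown position index: {index}") from None
```

Taken together, the three ranges cover indices 0 to 19 without overlap, so every index maps to exactly one illumination type.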

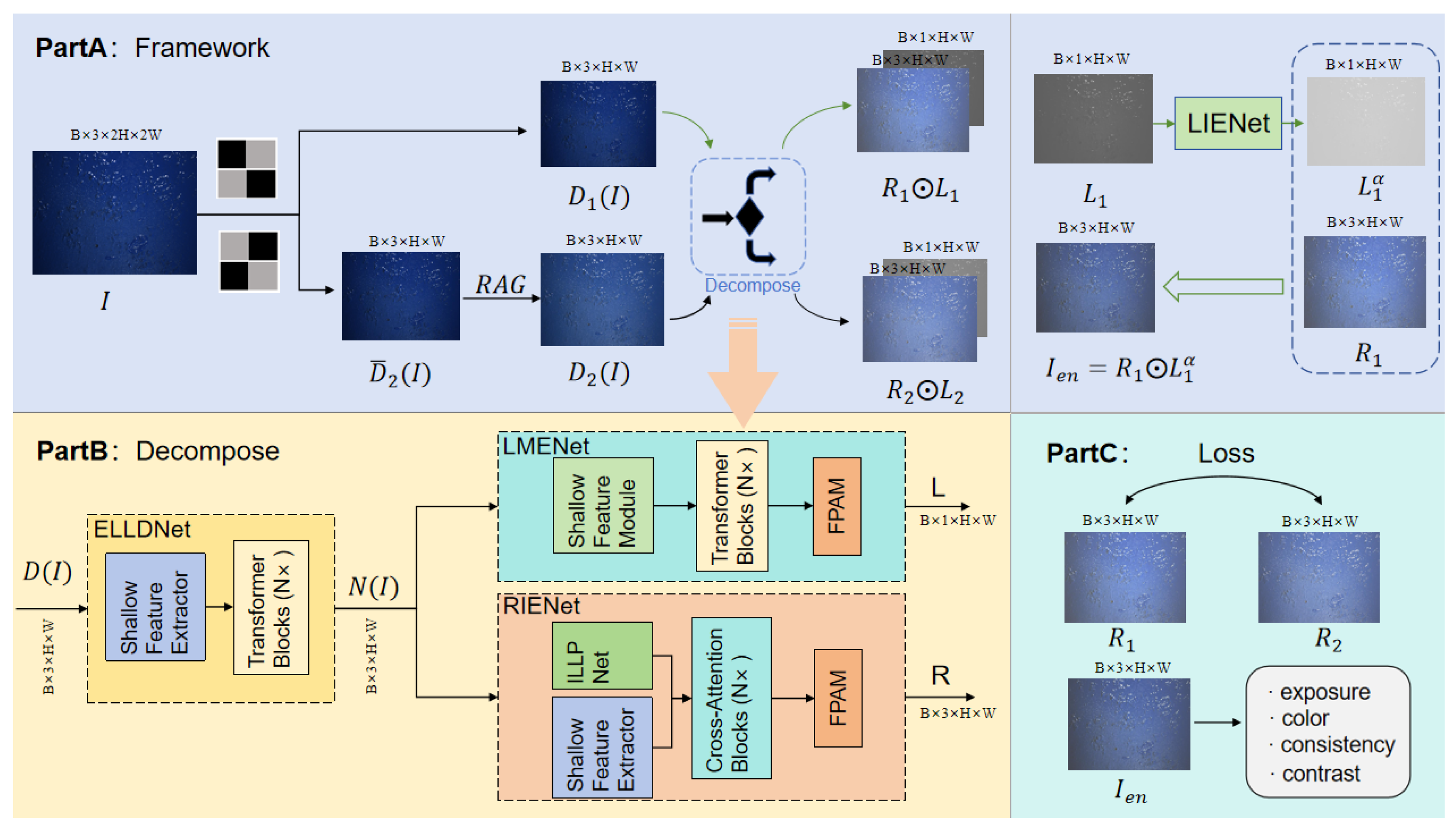

4. Proposed Method

4.1. Overview

4.2. Random Affine Generation Module

4.3. Decomposition Module

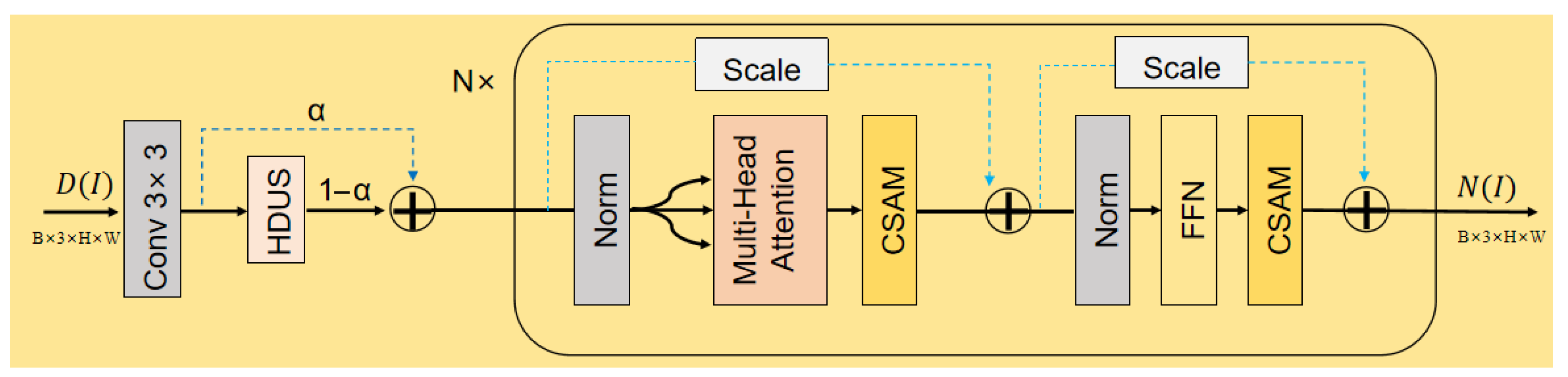

4.3.1. ELLDNet

- Multi-Head Self-Attention (MHSA) Layer: Layer Normalization (LN) is applied within this layer, which captures long-range dependencies among features.

- Feed-Forward Network (FFN) Layer: The FFN layer, consisting of two linear transformations and a non-linear activation, performs point-wise feature transformation.

- CSAM: To enhance the network’s feature representation, we embed a CSAM after both the MHSA and FFN layers. Our industrial images contain both large-scale, low-frequency illumination properties (best captured by channel attention) and fine-grained, high-frequency textures/defects (best captured by spatial attention). A standard sequential-only design (e.g., CAM then SAM) risks suppressing one feature type while refining the other. Our CSAM therefore adopts a parallel, cascaded structure that adaptively recalibrates features by fusing two attention branches. For an input feature, the first branch sequentially applies a Channel Attention Module (CAM) and a Spatial Attention Module (SAM), while the second branch applies only the SAM to the original input. The final output is a weighted sum of these two branches, with the balance controlled by a learnable parameter. CAM learns and applies weights to different channels, while SAM learns and applies weights to different spatial locations. This guides the network to focus on information-rich channels and regions, thereby enhancing feature discriminability.
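The two-branch fusion described for CSAM can be illustrated with a dependency-free sketch. Several assumptions are made here: the feature is a nested list of shape C×H×W, CAM and SAM are replaced by parameter-free sigmoid gates (the actual modules contain learned layers), and the weighted sum takes the form `alpha * branch_1 + (1 - alpha) * branch_2`, which is only one plausible reading of "a weighted sum controlled by a learnable parameter". The name `alpha` is hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat):
    """Stand-in for CAM: scale each channel by a sigmoid gate of its global mean."""
    out = []
    for channel in feat:
        n = sum(len(row) for row in channel)
        gate = sigmoid(sum(sum(row) for row in channel) / n)
        out.append([[v * gate for v in row] for row in channel])
    return out


def spatial_attention(feat):
    """Stand-in for SAM: scale each location by a sigmoid gate of its cross-channel mean."""
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    gate = [[sigmoid(sum(feat[k][i][j] for k in range(c)) / c) for j in range(w)]
            for i in range(h)]
    return [[[feat[k][i][j] * gate[i][j] for j in range(w)] for i in range(h)]
            for k in range(c)]


def csam(feat, alpha=0.5):
    """Fuse the sequential branch (CAM then SAM) with the SAM-only branch."""
    sequential = spatial_attention(channel_attention(feat))  # branch 1
    sam_only = spatial_attention(feat)                       # branch 2
    # Assumed fusion rule: convex combination controlled by alpha.
    return [[[alpha * a + (1.0 - alpha) * b for a, b in zip(row_a, row_b)]
             for row_a, row_b in zip(ch_a, ch_b)]
            for ch_a, ch_b in zip(sequential, sam_only)]
```

With `alpha = 1.0` the sketch reduces to the purely sequential design, and with `alpha = 0.0` it reduces to spatial attention alone, which makes the role of the balancing parameter easy to inspect.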

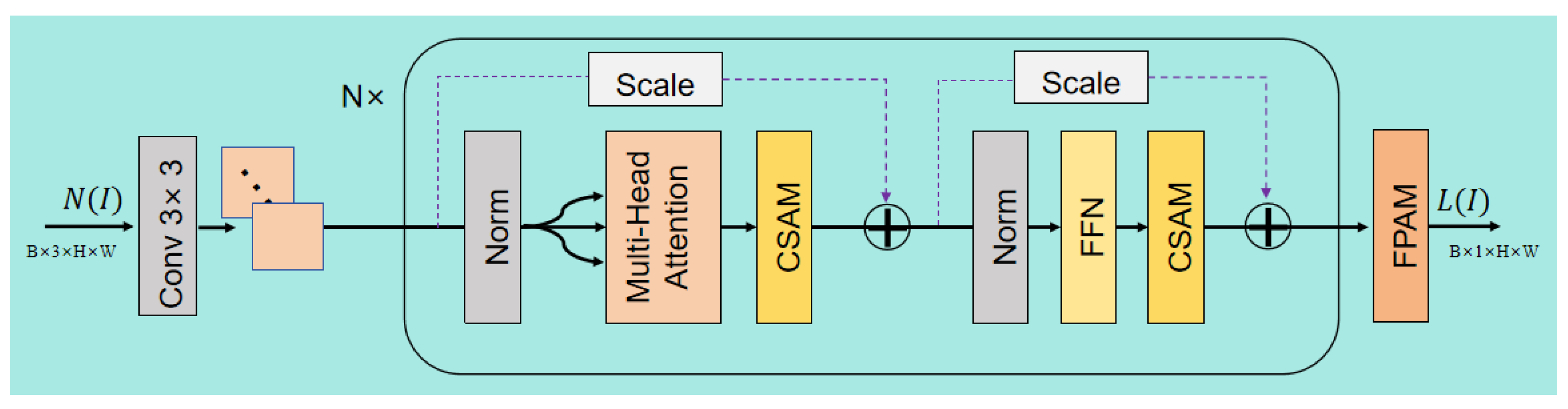

4.3.2. LMENet

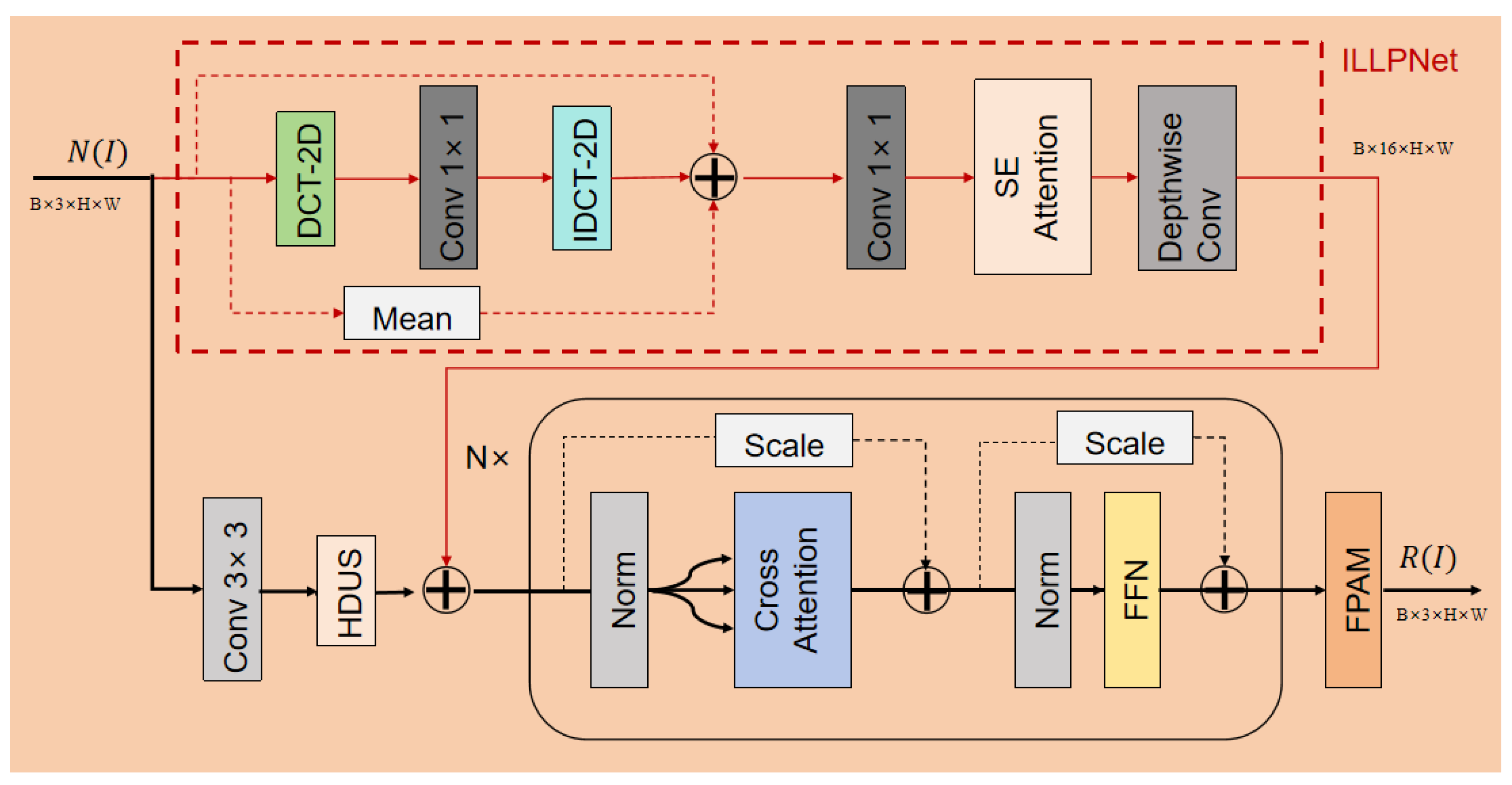

4.3.3. RIENet

- Query (Q): The fused feature is used as the Query. This asks the following question: “For this mixed feature, what illumination information does it contain?”

- Key (K) & Value (V): The illumination prior is used as both the Key and the Value. This provides the following answer: “This is the illumination information you should look for and subtract.”
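The Query/Key/Value roles above correspond to a cross-attention operation. The sketch below is a simplified illustration, assuming single-head scaled dot-product attention without learned projections, with tokens stored as lists of floats. The final subtraction is a literal reading of "look for and subtract" and is an assumption; the function names are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over all key/value tokens."""
    d = len(keys[0])
    dim_v = len(values[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)])
    return out


def remove_illumination(fused, illum_prior):
    """Query = fused feature; Key = Value = illumination prior.

    The attended illumination is subtracted from the fused feature
    (assumed residual form, not confirmed by the text).
    """
    attended = cross_attention(fused, illum_prior, illum_prior)
    return [[f - a for f, a in zip(f_tok, a_tok)] for f_tok, a_tok in zip(fused, attended)]
```

Because the same tensor serves as Key and Value, each fused token retrieves a convex combination of illumination-prior tokens, weighted by how strongly it matches them.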

4.3.4. LIENet

4.4. Loss Function

4.4.1. Retinex Decomposition Loss

- Reflectance Consistency Loss: This term constrains the reflectance maps recovered from two sub-images with different degradations to be consistent, based on the prior that an object’s reflectance is illumination-invariant. Its definition also includes a cross-scale regularization term, weighted by a regularization coefficient, to enhance generalization.

- Illumination and Reconstruction Loss: This term comprises a reconstruction loss to ensure fidelity and a smoothness loss to enforce the gradual nature of natural illumination.
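The three ingredients named above can be sketched under the standard Retinex model, in which an image is the element-wise product of reflectance and illumination. The code below is an illustrative sketch only: it assumes single-channel images stored as nested lists, L1 distances, and a first-order gradient penalty for smoothness. The paper's exact formulations, the cross-scale regularization term, and the loss weights are not reproduced here.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized 2D maps."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for x, y in zip(row_a, row_b):
            total += abs(x - y)
            count += 1
    return total / count


def reflectance_consistency_loss(refl_1, refl_2):
    """Reflectance recovered from two degraded views should agree."""
    return mean_abs_diff(refl_1, refl_2)


def reconstruction_loss(image, reflectance, illumination):
    """Retinex fidelity: reflectance * illumination should reproduce the image."""
    recon = [[r * l for r, l in zip(r_row, l_row)]
             for r_row, l_row in zip(reflectance, illumination)]
    return mean_abs_diff(recon, image)


def smoothness_loss(illumination):
    """Mean absolute horizontal and vertical gradient of the illumination map."""
    h, w = len(illumination), len(illumination[0])
    total, count = 0.0, 0
    for i in range(h):
        for j in range(w):
            if j + 1 < w:
                total += abs(illumination[i][j + 1] - illumination[i][j])
                count += 1
            if i + 1 < h:
                total += abs(illumination[i + 1][j] - illumination[i][j])
                count += 1
    return total / count if count else 0.0
```

A perfectly flat illumination map gives zero smoothness loss, and a decomposition that multiplies back to the input gives zero reconstruction loss, so the two terms pull the illumination towards a smooth map that still explains the image.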

4.4.2. Self-Supervised Enhancement Loss

- Spatial Consistency Loss: This loss preserves the image’s structure and content by keeping the image gradients consistent before and after enhancement.

- Enhancement Effect Loss: This loss balances two aspects of enhancement: an exposure loss that drives local brightness towards a well-exposed level, and a color constancy loss that prevents color casts.
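The exposure and color constancy terms can be illustrated with the formulations popularized by Zero-DCE, which this sketch borrows as an assumption; the paper's own definitions and weights may differ. Images are assumed to be nested lists of `(r, g, b)` tuples in [0, 1]. The target level of 0.6 follows the Zero-DCE setting, while the small default patch size and the weights `w_exp` and `w_col` are hypothetical.

```python
def exposure_loss(image, patch=2, target=0.6):
    """Mean distance between each patch's average intensity and a well-exposed level."""
    h, w = len(image), len(image[0])
    total, count = 0.0, 0
    for i in range(0, h - patch + 1, patch):        # non-overlapping patches
        for j in range(0, w - patch + 1, patch):
            gray = [sum(image[y][x]) / 3.0
                    for y in range(i, i + patch) for x in range(j, j + patch)]
            total += abs(sum(gray) / len(gray) - target)
            count += 1
    if count == 0:
        raise ValueError("image is smaller than one patch")
    return total / count


def color_constancy_loss(image):
    """Sum of squared differences between the global means of the color channels."""
    pixels = [px for row in image for px in row]
    r, g, b = (sum(px[c] for px in pixels) / len(pixels) for c in range(3))
    return (r - g) ** 2 + (r - b) ** 2 + (g - b) ** 2


def enhancement_effect_loss(image, w_exp=1.0, w_col=1.0):
    """Weighted sum of the two terms; the weights are placeholders."""
    return w_exp * exposure_loss(image, patch=2) + w_col * color_constancy_loss(image)
```

A uniformly gray image at the target level yields a loss near zero for both terms, whereas a dark or strongly tinted image is penalized by the exposure or color constancy term, respectively.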

5. Experiments

5.1. Implementation Details

5.2. Evaluation Metrics

- Artifact and Distortion Suppression: The primary metric is BRISQUE (Blind/Referenceless Image Spatial Quality Evaluator) [16]. In an industrial context, an enhancement algorithm must not introduce new distortions (e.g., noise amplification, unnatural edges, or blur) that could be mistaken for defects or could obscure them. BRISQUE excels at quantifying such artifacts by evaluating natural scene statistics. A lower score indicates a cleaner, higher-quality image with fewer distortions.

- Structural Clarity and Naturalness: The second metric is CLIPIQA [28]. While BRISQUE assesses low-level artifacts, CLIPIQA, which is based on a large-scale vision-language model (CLIP), evaluates the overall semantic quality and naturalness. For our task, a “good” enhancement must restore the structural clarity and texture of the enamel surface, making potential defects easily discernible. A low CLIPIQA score signifies that the enhanced image is not only bright but also semantically coherent and structurally clear, which is crucial for subsequent human or automated inspection.
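For context on how BRISQUE measures natural scene statistics: it operates on mean-subtracted contrast-normalized (MSCN) coefficients, which are obtained by subtracting a local mean from each pixel and dividing by a local standard deviation plus a stabilizing constant. The sketch below computes these coefficients for a grayscale image stored as a nested list. As a simplification, it uses a uniform box window rather than the Gaussian-weighted window of the original BRISQUE formulation, and it stops before the distribution fitting and regression stages.

```python
import math


def mscn(image, radius=1, c=1.0):
    """Compute MSCN coefficients with a (2*radius+1)^2 box window clipped at borders."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [image[y][x]
                      for y in range(max(0, i - radius), min(h, i + radius + 1))
                      for x in range(max(0, j - radius), min(w, j + radius + 1))]
            mu = sum(window) / len(window)                           # local mean
            var = sum((v - mu) ** 2 for v in window) / len(window)   # local variance
            out[i][j] = (image[i][j] - mu) / (math.sqrt(var) + c)
    return out
```

Enhancement artifacts such as amplified noise or unnatural edges change the distribution of these coefficients, which is what the BRISQUE score ultimately reflects.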

5.3. Comparative Experiments

5.3.1. Quantitative Results

5.3.2. Qualitative Results

5.4. Ablation Study

- Importance of RAG: When the RAG is removed (i.e., the row indicated by × RAG), both the BRISQUE and CLIPIQA scores rise, meaning that quality on both no-reference metrics degrades. This indicates that the RAG is crucial in guiding the model to learn natural, smooth, and consistent illumination distributions, improving the network’s adaptability and generalization to non-uniform illumination scenes.

- Effectiveness of FPAM and CSAM Attention Mechanisms: As can be seen from Table 2, adding FPAM, CSAM, or both lowers the BRISQUE and CLIPIQA scores, indicating a marked improvement in image quality. This demonstrates that the FPAM and CSAM attention mechanisms play an important role in improving the structural fidelity and perceptual quality of enhanced images. They promote the extraction and representation of useful features, which is especially important for the precise separation of the reflectance and illumination layers.

5.5. Hyperparameter Sensitivity Analysis

5.6. Downstream Task Validation: Defect Detection

5.7. Practical Application

5.8. Limitations Analysis and Further Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mordor Intelligence. Global Residential Water Heater Market. Size, Share, & Forecast (2024–2029). Market Report, Mordor Intelligence. 2024. Available online: https://www.mordorintelligence.com/industry-reports/global-residential-water-heaters-market (accessed on 18 November 2025).

- Wang, M.; Guo, K.; Wei, Y.; Chen, J.; Cao, C.; Tong, Z. Failure analysis of cracking in the thin-walled pressure vessel of electric water heater. Eng. Fail. Anal. 2023, 143, 106913.

- Mattei, N.; Benedetti, L.; Rossi, S. Corrosion Behavior of Porcelain Enamels in Water Tank Storage. Coatings 2025, 15, 934.

- Colvalkar, A.; Nagesh, P.; Sachin, S.; Patle, B.K. A comprehensive review on pipe inspection robots. Int. J. Mech. Eng. 2021, 10, 51–66.

- Land, E.H.; McCann, J.J. Lightness and retinex theory. J. Opt. Soc. Am. 1971, 61, 1–11.

- Shen, L.; Yue, Z.; Feng, F.; Chen, Q.; Liu, S.; Ma, J. Msr-net: Low-light image enhancement using deep convolutional network. arXiv 2017, arXiv:1711.02488.

- Park, S.; Yu, S.; Kim, M.; Park, K.; Paik, J. Dual autoencoder network for retinex-based low-light image enhancement. IEEE Access 2018, 6, 22084–22093.

- Liang, J.; Xu, Y.; Quan, Y.; Wang, J.; Ling, H.; Ji, H. Deep bilateral retinex for low-light image enhancement. arXiv 2020, arXiv:2007.02018.

- Jobson, D.J.; Rahman, Z.u.; Woodell, G.A. A multiscale retinex for color image enhancement. IEEE Trans. Image Process. 1997, 6, 969–985.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; Romeny, B.t.H.; Zimmerman, J.B. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

- Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910.

- Xu, X.; Wang, R.; Fu, C.W.; Jia, J. SNR-aware low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17714–17724.

- Wang, T.; Zhang, K.; Shen, T.; Luo, W.; Stenger, B.; Lu, T. Ultra-high-definition low-light image enhancement: A benchmark and transformer-based method. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2654–2662.

- Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 12504–12513.

- Bai, J.; Yin, Y.; He, Q.; Li, Y.; Zhang, X. Retinexmamba: Retinex-based mamba for low-light image enhancement. In Proceedings of the International Conference on Neural Information Processing, Okinawa, Japan, 20–24 November 2025; pp. 427–442.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

- Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. Enlightengan: Deep light enhancement without paired supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

- Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1780–1789.

- Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5637–5646.

- Wen, Y.; Xu, P.; Li, Z.; ATO, W.X. An illumination-guided dual attention vision transformer for low-light image enhancement. Pattern Recognit. 2025, 158, 111033.

- Wang, W.; Yin, B.; Li, L.; Li, L.; Liu, H. A Low Light Image Enhancement Method Based on Dehazing Physical Model. Comput. Model. Eng. Sci. (CMES) 2025, 143, 1595–1616.

- Hu, R.; Luo, T.; Jiang, G.; Chen, Y.; Xu, H.; Liu, L.; He, Z. DiffDark: Multi-prior integration driven diffusion model for low-light image enhancement. Pattern Recognit. 2025, 168, 111814.

- Zhang, M.; Yin, J.; Zeng, P.; Shen, Y.; Lu, S.; Wang, X. TSCnet: A text-driven semantic-level controllable framework for customized low-light image enhancement. Neurocomputing 2025, 625, 129509.

- Zhou, H.; Dong, W.; Liu, X.; Zhang, Y.; Zhai, G.; Chen, J. Low-light image enhancement via generative perceptual priors. Proc. AAAI Conf. Artif. Intell. 2025, 39, 10752–10760.

- Czimmermann, T.; Ciuti, G.; Milazzo, M.; Chiurazzi, M.; Roccella, S.; Oddo, C.M.; Dario, P. Visual-based defect detection and classification approaches for industrial applications—A survey. Sensors 2020, 20, 1459.

- Tabernik, D.; Šela, S.; Skvarč, J.; Skočaj, D. Segmentation-based deep-learning approach for surface-defect detection. J. Intell. Manuf. 2020, 31, 759–776.

- Huaqiu, L.; Hu, X.; Wang, H. Interpretable Unsupervised Joint Denoising and Enhancement for Real-World low-light Scenarios. In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025.

- Zhang, X.; Xu, Z.; Tang, H.; Gu, C.; Chen, W.; Zhu, S.; Guan, X. Enlighten-Your-Voice: When Multimodal Meets Zero-shot Low-light Image Enhancement. arXiv 2023, arXiv:2312.10109.

- Liang, Z.; Li, C.; Zhou, S.; Feng, R.; Loy, C.C. Iterative prompt learning for unsupervised backlit image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 8094–8103.

- Liu, R.; Ma, L.; Zhang, J.; Fan, X.; Luo, Z. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10561–10570.

- Jiang, H.; Luo, A.; Liu, X.; Han, S.; Liu, S. Lightendiffusion: Unsupervised low-light image enhancement with latent-retinex diffusion models. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 161–179.

| Method | Params (M) | FLOPs (G) | AIT (ms) | BRISQUE ↓ | CLIPIQA ↓ |
|---|---|---|---|---|---|
| RUAS | 0.01 | 18.2 | 4.87 | 32.8689 | 0.3609 |
| ZERO-DCE | 0.08 | 1.5 | 0.67 | 26.4096 | 0.3293 |
| Sci-medium | 0.01 | 271.98 | 6.63 | 29.2346 | 0.3839 |
| Clip-LIT | 0.27 | 432.92 | 111.49 | 34.0603 | 0.4393 |
| LightDiffusion | 25.41 | 2800.77 | 372.25 | 42.2949 | 0.4115 |
| Ours | 0.31 | 968.192 | 216.59 | 25.4457 ± 0.90 | 0.3160 ± 0.04 |

| RAG | FPAM | CSAM | BRISQUE ↓ | CLIPIQA ↓ |
|---|---|---|---|---|
| × | × | × | 39.4295 | 0.4299 |
| × | ✓ | × | 39.2021 | 0.3645 |
| ✓ | ✓ | × | 26.6651 | 0.3268 |
| × | ✓ | ✓ | 25.4706 | 0.3260 |
| ✓ | ✓ | ✓ | 25.4457 | 0.3160 |

| RAG Intensity | Description | BRISQUE ↓ | CLIPIQA ↓ |
|---|---|---|---|
| None | RAG module removed | 39.4295 | 0.4299 |
| High | Extreme Blur + High Noise | 40.9128 | 0.4358 |
| Low | Color Jitter + Gamma | 35.1543 | 0.3781 |
| Medium (Ours) | Low + Blur + Noise | 31.4362 | 0.3460 |
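The degradation levels in the table above can be illustrated with a small sketch that produces two differently degraded views of the same image, as needed for the reflectance consistency constraint. It is based only on the degradation names listed in the table; the internal design of the RAG module is not reproduced. Images are assumed to be nested lists of `(r, g, b)` tuples in [0, 1], and all parameter ranges and function names are hypothetical.

```python
import random


def clamp01(v):
    return min(1.0, max(0.0, v))


def degrade(image, rng, blur=True, noise_sigma=0.02):
    """Apply gamma + color jitter ("Low"), then optional blur and noise ("Medium")."""
    h, w = len(image), len(image[0])
    gamma = rng.uniform(0.7, 1.5)                        # random gamma curve
    gains = [rng.uniform(0.9, 1.1) for _ in range(3)]    # per-channel color jitter
    out = [[tuple(clamp01((px[c] ** gamma) * gains[c]) for c in range(3)) for px in row]
           for row in image]
    if blur:  # 3x3 box blur with the window clipped at the borders
        blurred = []
        for i in range(h):
            row = []
            for j in range(w):
                win = [out[y][x]
                       for y in range(max(0, i - 1), min(h, i + 2))
                       for x in range(max(0, j - 1), min(w, j + 2))]
                row.append(tuple(sum(p[c] for p in win) / len(win) for c in range(3)))
            blurred.append(row)
        out = blurred
    if noise_sigma > 0:  # additive Gaussian noise
        out = [[tuple(clamp01(v + rng.gauss(0.0, noise_sigma)) for v in px) for px in row]
               for row in out]
    return out


def make_pair(image, seed=0):
    """Return two independently degraded views of the same image."""
    rng = random.Random(seed)
    return degrade(image, rng), degrade(image, rng)
```

Calling `degrade(image, rng, blur=False, noise_sigma=0.0)` corresponds to the "Low" setting, while the defaults correspond to the "Medium" setting in this sketch.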

| Training Data | mAP@0.5 (%) |
|---|---|
| Original Low-Light Images | 45.2 |
| jar-RetinexNet Enhanced (Ours) | 72.8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, W.; Guo, L.; Cao, J.; Wu, W. Jar-RetinexNet: A System for Non-Uniform Low-Light Enhancement of Hot Water Heater Tank Inner Walls. Sensors 2025, 25, 7121. https://doi.org/10.3390/s25237121