1. Introduction

In recent years, Deep Learning (DL) has transformed healthcare applications, including disease prediction, drug discovery, and medical image analysis [1]. These applications typically rely on supervised learning algorithms that can learn complex representations when trained on large labeled datasets. Consequently, the performance of DL models is closely linked to the quality of the training labels, which are often assumed to represent the absolute ground truth [2]. However, in many scenarios, obtaining such ground truth is hardly feasible because the labeling process is costly and time-consuming; moreover, in some cases, the label corresponds to a subjective assessment [3]. This challenge is particularly acute in the medical domain, where specialists are scarce and their time is highly valuable [4]. To address this bottleneck and improve scalability, many initiatives rely on data labeled via crowdsourcing by multiple annotators with varying levels of expertise. The main idea is to distribute the annotation effort among different labelers (e.g., physicians), reducing the burden on experts [5]. Nevertheless, differences in expertise, annotation criteria, and data interpretation can introduce label variability and noise, which can degrade model performance if not handled correctly [6,7]. As a result, with crowdsourced labels, each instance in the training set is associated with a set of labels from multiple annotators, each corresponding to a noisy version of the hidden gold standard [8]. Accordingly, it is necessary to develop methods for dealing with labels from multiple annotators, known in the literature as Learning from Crowds (LFC) [9]. LFC methods can be divided into two families: label aggregation and training DL models under supervision from all labelers [8].

In particular, label aggregation follows a two-stage training procedure: the first step estimates a single label, assumed to approximate the ground truth, which is then used to train a standard DL algorithm [10]. The most naive method is the so-called majority voting (MV); however, MV assumes annotators with homogeneous performance, which is unrealistic given that annotators have different levels of expertise [11]. Thus, more elaborate approaches have been proposed to estimate the annotators' performance while producing the ground truth estimate. For example, the early work in [12] treats the ground truth as a latent variable and uses an Expectation-Maximization (EM) algorithm to jointly estimate the annotators' performance and the hidden ground truth. Similarly, in [13], the authors propose a weighted MV that accounts for heterogeneous annotator performance. Despite their simplicity, most label aggregation methods ignore the input features, missing crucial details [14].

In contrast, a more integrated alternative is to adopt end-to-end architectures that train DL models directly from the multi-annotator labels. The basic idea is to modify typical DL models, for example by adding Crowd Layers [15], to jointly estimate the supervised learning algorithm and the annotators' behavior. Several works have shown that this second approach improves performance, as input features provide valuable information for inferring the true labels [16]. The success of end-to-end models relies on a proper encoding of the annotators' performance, which, in classification settings, is usually measured in terms of accuracy [17], sensitivity and specificity [11], or the confusion matrix [2,18]. Nevertheless, a restriction commonly found in the above approaches is the assumption that each annotator's performance is homogeneous across the input space. This does not hold in real scenarios, because labels depend not only on the labelers' expertise but also on the features observed in the raw data [11]. The model proposed in [19] is the first attempt to relax this assumption: the annotations provided by each source are modeled by a Bernoulli distribution whose probability parameter, which reflects that annotator's performance, is a function of the input space. This seminal work has inspired more elaborate models that employ multi-output learning (MOL) concepts to model the annotators' parameters as functions of the input features [20]. Unlike standard multi-output learning problems, where each output corresponds to a task, here each annotator is modeled as a separate function whose parameters depend on the input, capturing heterogeneous behavior [4].

While recent models have extended the standard multi-output framework to jointly estimate both the ground truth and annotator reliability as functions of the input, and even to capture correlations among annotators, they face important scalability limitations. These architectures use dedicated outputs for each annotator, which allows them to model inter-labeler dependencies but yields a number of parameters and computations that grows linearly (or worse) with the number of annotators. This can become prohibitive in large-scale crowdsourcing settings, where the pool of annotators is large [5,21].

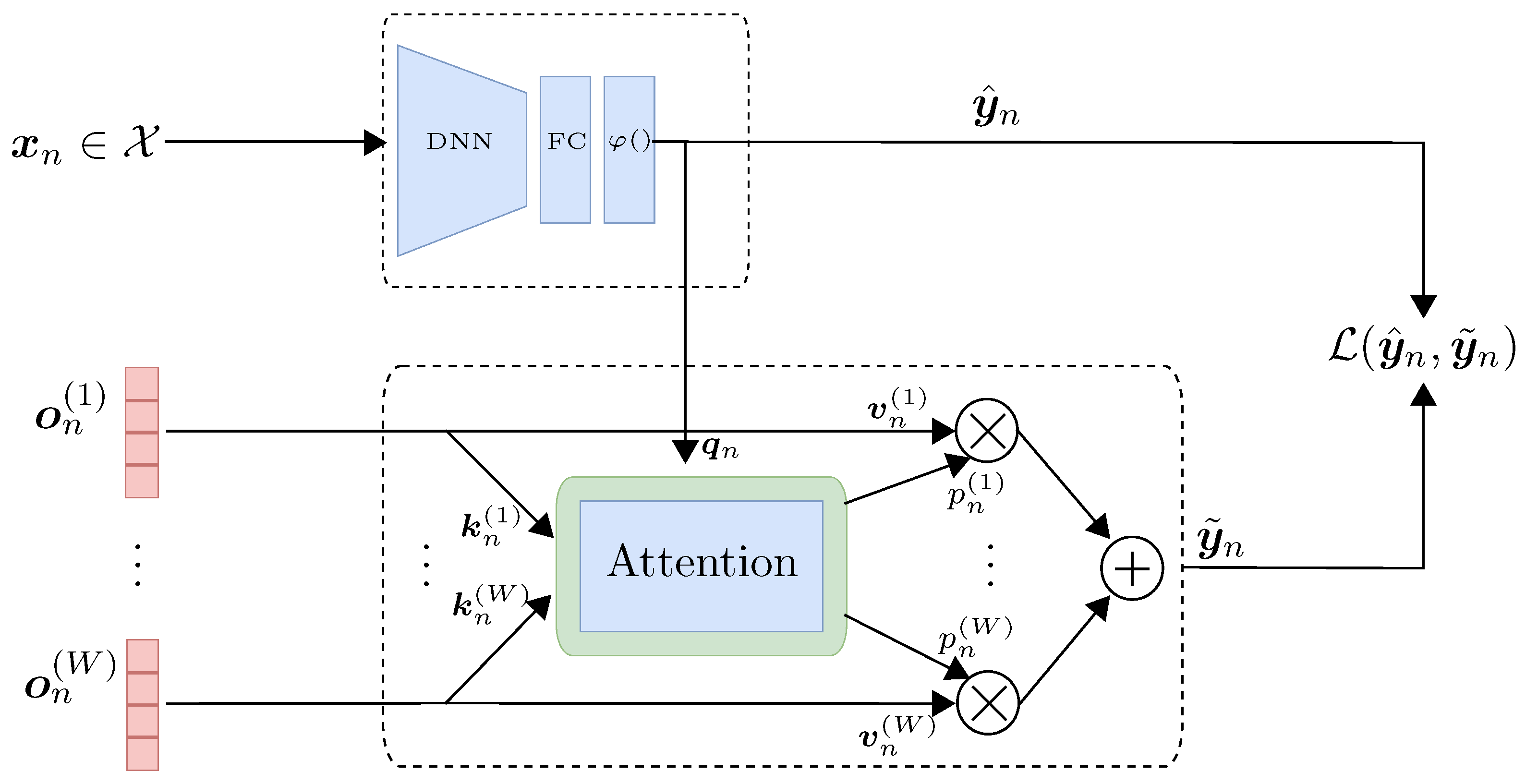

To address the scalability and robustness challenges described above, we introduce CrowdAttention, a novel end-to-end deep learning framework designed to learn from noisy labels provided by multiple annotators. The architecture comprises two main components: a classification network that produces the final prediction, and a crowd network that estimates the latent ground truth through a cross-attention mechanism over the annotators' responses. The classification network maps the input features to a distribution over class labels. In parallel, this predicted distribution is used as the query in a cross-attention module, while the one-hot encoded responses from multiple annotators serve as keys and values. Crucially, the attention mechanism assigns instance-dependent weights that explicitly represent the reliability of each annotator, thereby producing a pseudo-label that reflects a reliability-weighted consensus rather than a simple majority vote. The model is trained by minimizing a cross-entropy loss between the classification network's prediction and the pseudo-label produced by the crowd network, enabling joint optimization of both components. This training strategy allows the model to benefit from various labelers while avoiding the need for manually aggregated labels or architectural replication for each annotator.
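The pseudo-labeling step described above can be sketched in a few lines of NumPy. This is a minimal, forward-only sketch under stated assumptions, not the authors' implementation: we assume no learned query/key/value projections, the usual $1/\sqrt{K}$ scaling of scaled dot-product attention, a boolean mask for missing annotations, and at least one annotation per instance; the function names are hypothetical. Because the keys are one-hot, the dot product between the query and annotator $r$'s key reduces to the predicted probability of the label that annotator $r$ gave.

```python
import numpy as np


def crowd_attention_pseudo_labels(pred, annotations, mask):
    """Reliability-weighted pseudo-labels via dot-product cross-attention (sketch).

    pred:        (N, K) predicted class distribution, used as the query.
    annotations: (N, R) integer labels; entries where mask is False are ignored.
    mask:        (N, R) boolean, True where annotator r labeled instance n.
                 Assumes every instance has at least one annotation.
    """
    num_classes = pred.shape[1]
    # Keys and values: one-hot annotator responses, shape (N, R, K).
    onehot = np.eye(num_classes)[np.where(mask, annotations, 0)]
    onehot = onehot * mask[..., None]
    # Query-key dot product = predicted probability of each annotator's label,
    # scaled as in standard scaled dot-product attention (an assumption here).
    scores = np.einsum("nk,nrk->nr", pred, onehot) / np.sqrt(num_classes)
    scores = np.where(mask, scores, -np.inf)  # missing annotators get zero weight
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    # Pseudo-label: attention-weighted average of the one-hot values.
    pseudo = np.einsum("nr,nrk->nk", weights, onehot)
    return pseudo, weights


def cross_entropy(target, pred, eps=1e-12):
    """Mean cross-entropy between pseudo-label targets and predictions."""
    return float(-(target * np.log(pred + eps)).sum(axis=1).mean())


pred = np.array([[0.9, 0.1, 0.0], [0.2, 0.7, 0.1]])
annotations = np.array([[0, 0, 1], [1, 2, 1]])
mask = np.array([[True, True, True], [True, True, False]])
pseudo, weights = crowd_attention_pseudo_labels(pred, annotations, mask)
loss = cross_entropy(pseudo, pred)
```

In this sketch the crowd side has no annotator-specific parameters: the number of annotators $R$ only changes tensor shapes. Whether gradients should flow through the pseudo-label or it should be treated as a fixed target is a design choice this sketch does not settle.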

In summary, our main contributions are as follows:

We propose CrowdAttention, a scalable and parameter-efficient framework that models the reliability of multiple annotators as a function of the input space through a cross-attention mechanism.

In contrast to previous methods [4,20,22], which rely on dedicated output branches for each annotator, our approach employs a unified attention-based design that substantially reduces the number of trainable parameters while enabling end-to-end training directly on datasets annotated by multiple annotators.

We conduct comprehensive evaluations on synthetic and real-world datasets, demonstrating that CrowdAttention achieves superior classification performance and robustness under noisy and heterogeneous supervision, all while preserving architectural simplicity and a significantly lower parameter footprint.

The agenda is as follows: Section 2 presents the related work and paper contributions. Section 3 describes the details of our proposal. Sections 4 and 5 present the results and discussion. Finally, Section 6 presents the concluding remarks.

2. Related Work

Typical supervised learning algorithms operate under the assumption that each training instance is associated with a single, reliable ground-truth label, which allows models to learn the relationship between inputs and outputs [11]. However, in many real-world applications, such as medical diagnosis or speech assessment, labels are often provided by multiple annotators whose judgments can diverge due to differences in expertise, bias, or task ambiguity [23]. This multi-annotator scenario results in label collections that are frequently noisy and, in many cases, incompatible with traditional supervised approaches. Rather than relying on a single source of truth, learning in these contexts requires explicitly modeling the annotators' behavior to extract meaningful supervision from heterogeneous annotations.

The field of learning from crowds has emerged to address the supervision problem when data are annotated by multiple, potentially unreliable, contributors. Applications range from medical image analysis [24] and speech quality assessment [25] to natural language processing [26], where multiple annotators provide inconsistent labels. In this field, we identify two main approaches. The first, termed label aggregation, estimates a soft label for each training sample, which is then used to train a learning algorithm. Conversely, the second direction jointly estimates the latent ground truth and the parameters of a machine learning algorithm. This family of methods exploits the interaction between annotator reliability and predictive performance, allowing the classifier and the label inference process to inform each other [14].

2.1. Label Aggregation

The simplest method to combine labels from multiple annotators is majority voting (MV) [27], which selects the most frequent label as an estimate of the hidden ground truth. However, MV assumes that all annotators perform at a similar level, which often does not reflect reality: in real-world applications, expertise, bias, and reliability can vary significantly among labelers. To address this, several variants have been proposed. For example, Iterative Weighted Majority Voting (IWMV) [28] starts by applying MV to obtain initial aggregated labels. These labels are then used to estimate the reliability of each annotator, and the reliability estimates serve as weights in subsequent rounds of aggregation, so that the method iteratively updates both the annotator weights and the aggregated labels until convergence. Some extensions exploit the confidence of the majority class; for instance, ref. [29] presents four soft-MV schemes that integrate certainty information to generate more informative consensus labels. In addition, ref. [30] uses differential evolution to assess the quality of annotators based on multiple sets of noisy labels. Finally, the Label Augmented and Weighted Majority Voting (LAWMV) [31] method leverages K-nearest neighbors to enrich each instance's label set and applies weighted majority voting based on distance and label similarity.
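The iterative reweighting loop of IWMV can be illustrated with the following NumPy sketch. It is a simplified variant, not the exact algorithm of [28]: here each annotator's weight is simply the fraction of its labels that agree with the current consensus (the original method uses its own weighting rule), vote ties are broken toward the lowest class index, and all function names are hypothetical.

```python
import numpy as np


def weighted_vote(labels, mask, weights, num_classes):
    """Weighted majority vote; labels and mask are (N, R), weights is (R,)."""
    votes = np.zeros((labels.shape[0], num_classes))
    n_idx, r_idx = np.nonzero(mask)
    # Add each observed annotation's weight to the corresponding class bin.
    np.add.at(votes, (n_idx, labels[n_idx, r_idx]), weights[r_idx])
    return votes.argmax(axis=1)  # ties resolved toward the lowest class index


def iterative_weighted_majority_vote(labels, mask, num_classes, max_iters=20):
    num_annotators = labels.shape[1]
    # Round 0: plain majority voting (all annotators weighted equally).
    weights = np.ones(num_annotators)
    consensus = weighted_vote(labels, mask, weights, num_classes)
    for _ in range(max_iters):
        # Reliability estimate: agreement rate with the current consensus.
        agree = (labels == consensus[:, None]) & mask
        weights = agree.sum(axis=0) / np.maximum(mask.sum(axis=0), 1)
        new_consensus = weighted_vote(labels, mask, weights, num_classes)
        if np.array_equal(new_consensus, consensus):
            break  # converged: the aggregated labels no longer change
        consensus = new_consensus
    return consensus, weights


# Toy data: rows are instances, columns are three annotators, binary labels.
labels = np.array([[0, 1, 1], [1, 1, 0], [0, 0, 1], [1, 1, 0], [0, 0, 1], [1, 1, 1]])
mask = np.ones_like(labels, dtype=bool)
consensus, weights = iterative_weighted_majority_vote(labels, mask, num_classes=2)
```

In this toy example, the third annotator disagrees with the consensus most often and therefore receives the smallest weight.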

Beyond heuristic refinements of MV, probabilistic aggregation models have been developed. The seminal work in [12] jointly estimates the latent ground truth and each annotator's confusion matrix using an expectation–maximization algorithm, allowing the model to weight annotations according to their estimated reliability. Subsequent extensions, such as the Generative model of Labels, Abilities, and Difficulties (GLAD) [32], further incorporate item difficulty and annotator expertise, capturing the intuition that some annotators are more accurate on specific tasks and that certain items are intrinsically harder to label. Similarly, in [33], the authors generate multiple clusters based on the information from multiple annotators; each cluster is then assigned to a specific class to solve a multi-class classification problem.
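To make the EM idea concrete, the sketch below implements Dawid–Skene-style updates in NumPy, which we assume are representative of the approach in [12]: the E-step computes the posterior over each instance's true class from the current confusion matrices, and the M-step re-estimates the class priors and row-normalized $K \times K$ confusion matrices. It is illustrative only; it assumes every annotator labels every instance and adds a small smoothing constant `eps`, and these choices, like the function name, are ours.

```python
import numpy as np


def dawid_skene(labels, num_classes, num_iters=50, eps=1e-6):
    """EM for latent true labels and per-annotator K x K confusion matrices.

    labels: (N, R) integer array; assumes every annotator labels every instance.
    Returns posteriors (N, K) over the true class and confusions (R, K, K), where
    confusions[r, k, l] approximates p(annotator r says l | true class k).
    """
    onehot = np.eye(num_classes)[labels]  # (N, R, K)
    post = onehot.sum(axis=1)  # initialize with a soft majority vote
    post /= post.sum(axis=1, keepdims=True)
    for _ in range(num_iters):
        # M-step: class priors and row-normalized confusion matrices.
        prior = post.mean(axis=0)
        conf = np.einsum("nk,nrl->rkl", post, onehot) + eps
        conf /= conf.sum(axis=2, keepdims=True)
        # E-step: log posterior over the true class given all annotations.
        log_post = np.log(prior + eps) + np.einsum("nrl,rkl->nk", onehot, np.log(conf))
        log_post -= log_post.max(axis=1, keepdims=True)
        post = np.exp(log_post)
        post /= post.sum(axis=1, keepdims=True)
    return post, conf


# Toy data: three accurate annotators and one that systematically shifts the class.
truth = np.arange(60) % 3
labels = np.stack([truth, truth, truth, (truth + 1) % 3], axis=1)
post, conf = dawid_skene(labels, num_classes=3)
```

Unlike MV, the estimated confusion matrix of the fourth annotator captures its systematic shift, so its labels remain informative rather than being treated as pure noise.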

2.2. End-to-End Algorithms

Despite their simplicity, most label aggregation methods ignore the input features, missing crucial details for inferring the ground truth [14]. Alternatively, end-to-end architectures learn DL models directly from the multi-labeler data. The basic idea is to modify the last layer of a DL model to include an additional Crowd Layer [15] that encodes the annotators' performance. Thereby, the classifier and the Crowd Layer can be estimated simultaneously via backpropagation [15]. Following this idea, several works, such as [14,34,35], propose probabilistic models in which each labeler is represented by a $K \times K$ confusion matrix, where $K$ is the number of classes. These matrices are fed by the output of a base model, which represents an estimate of the hidden ground truth. Therefore, the labels of each annotator can be seen as a modification of the ground truth with varying levels of reliability and bias [14].
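The confusion-matrix noise model shared by these methods can be sketched as follows: each annotator's label distribution is obtained by multiplying the base model's class probabilities by that annotator's row-stochastic matrix, and training maximizes the likelihood of the observed annotations. This NumPy version is forward-only and illustrative; in the cited works the matrices are learned jointly with the classifier by backpropagation, and their exact parameterization and regularization are not reproduced here. The function names are hypothetical.

```python
import numpy as np


def annotator_label_probs(class_probs, confusions):
    """p(y_r = l | x) = sum_k p(z = k | x) * Pi_r[k, l], returned with shape (N, R, K).

    class_probs: (N, K) output of the base classifier (estimate of the hidden truth).
    confusions:  (R, K, K) row-stochastic matrices, Pi_r[k, l] = p(y_r = l | z = k).
    """
    return np.einsum("nk,rkl->nrl", class_probs, confusions)


def crowd_nll(class_probs, confusions, labels, mask, eps=1e-12):
    """Mean negative log-likelihood over the observed annotations only."""
    probs = annotator_label_probs(class_probs, confusions)
    n_idx, r_idx = np.nonzero(mask)
    observed = probs[n_idx, r_idx, labels[n_idx, r_idx]]
    return float(-np.log(observed + eps).mean())


class_probs = np.array([[0.8, 0.1, 0.1], [0.1, 0.1, 0.8]])
# Annotator 0 is perfect (identity); annotator 1 answers uniformly at random.
confusions = np.stack([np.eye(3), np.full((3, 3), 1.0 / 3.0)])
labels = np.array([[0, 0], [2, 1]])
mask = np.ones_like(labels, dtype=bool)
probs = annotator_label_probs(class_probs, confusions)
nll = crowd_nll(class_probs, confusions, labels, mask)
num_annotator_params = confusions.size  # R * K * K entries
```

The last line makes the parameter cost explicit: the confusion matrices alone contribute $R K^2$ entries.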

However, these methods typically assume that each annotator's transition matrix is instance-independent, which is unrealistic in practical scenarios. To relax this assumption, ref. [18] estimates annotator- and instance-dependent transition matrices using deep neural networks combined with knowledge transfer strategies. In this framework, knowledge about the mixed noise patterns of all annotators is first extracted and then transferred to individual workers, while additional refinement is achieved by transferring information from neighboring annotators to improve the estimation of each annotator's transition matrix. On the other hand, recent works such as [20,22], categorized as Chained Neural Networks (Chained-NN), employ a framework based on Chained Gaussian Processes [36] to model annotator performance as a function of the input features. The central idea is to design a neural network with $K + R$ outputs, where $K$ outputs correspond to the underlying classification task and each of the remaining $R$ outputs (one per annotator) captures annotator-specific reliability. In this way, the model simultaneously learns the predictive function and the input-dependent reliability of each labeler, thereby relaxing the assumption of homogeneous annotator performance. Despite their improved flexibility, these approaches model the annotators' performance using a parametric network. For example, transition matrix-based algorithms require $R K^2$ parameters for $R$ annotators, which can lead to learning inefficiencies for a large number of annotators or classes [5].
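The $K + R$ output layout of Chained-NN models can be illustrated as below: the first $K$ logits pass through a softmax to give class probabilities, and the remaining $R$ logits pass through a sigmoid to give input-dependent reliabilities $\lambda_r(x) \in (0, 1)$. To connect reliability with the observed labels, the sketch assumes a simple mixture in which a reliable annotator reports the true class and an unreliable one answers uniformly at random; this likelihood is our illustrative assumption, and the likelihoods derived from chained GPs in [20,22] may differ. The function names are hypothetical.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def chained_head(logits, num_classes):
    """Split (N, K + R) logits into class probabilities (N, K) and reliabilities (N, R)."""
    class_probs = softmax(logits[:, :num_classes])
    reliability = 1.0 / (1.0 + np.exp(-logits[:, num_classes:]))
    return class_probs, reliability


def annotator_likelihood(class_probs, reliability, labels):
    """Assumed mixture: p(y_r | x) = lam_r(x) p(z = y_r | x) + (1 - lam_r(x)) / K."""
    num_classes = class_probs.shape[1]
    p_true = np.take_along_axis(class_probs, labels, axis=1)  # (N, R)
    return reliability * p_true + (1.0 - reliability) / num_classes


K, R = 3, 2
logits = np.array([[10.0, 0.0, 0.0, 20.0, -20.0]])  # one instance, K + R outputs
class_probs, reliability = chained_head(logits, K)
lik = annotator_likelihood(class_probs, reliability, np.array([[0, 0]]))
```

Here the first annotator is nearly fully reliable for this input, so its likelihood follows the classifier, while the second is nearly unreliable and its likelihood collapses to $1/K$.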

2.3. Main Contribution

According to the above, we identify that most existing approaches share a common limitation: they model annotator performance using parametric forms that either assume homogeneity or require large parameter spaces to capture heterogeneity. This motivates the need for alternative formulations that can efficiently represent annotator variability, adapt to input-dependent reliability, and scale to realistic multi-annotator scenarios.

We propose CrowdAttention, an end-to-end framework that integrates attention mechanisms to model annotators’ reliability as a function of the input features. The architecture comprises two main components: a classification network that produces the final prediction, and a crowd network that estimates the latent ground truth through a cross-attention mechanism over the annotators’ responses. The classification network maps the input features to a distribution over class labels. In parallel, this predicted distribution is used as the query in a cross-attention module, while the one-hot encoded responses from multiple annotators serve as keys and values. The attention mechanism dynamically weighs each annotator’s contribution, producing a pseudo-label that reflects the annotators’ consensus weighted by instance-specific relevance.

In contrast to confusion-matrix-based approaches [12,14,32], which require $R K^2$ parameters to capture annotator variability, CrowdAttention avoids explicit parameterization of each annotator's noise process. Instead, annotator reliability is modeled in a non-parametric manner through a dot-product attention mechanism learned jointly with the classification network. This results in a compact representation that adapts flexibly across annotators and instances without introducing large parameter overheads. Furthermore, unlike Chained approaches [20,22], which also account for annotator reliability as a function of the inputs but remain computationally demanding, CrowdAttention embeds a lightweight attention mechanism within modern deep architectures, ensuring both scalability to large crowdsourcing datasets and robustness to heterogeneous supervision.

Our CrowdAttention also shares similarities with the work in [5] (UnionNet), in that both approaches aim to improve training efficiency by eliminating the need for multiple annotator-specific transition matrices. Instead of requiring $R K^2$ parameters, UnionNet adopts a more compact representation that aggregates annotator information within an end-to-end framework. However, unlike UnionNet, which concatenates all annotators' labels into a single union and relies on a parametric transition matrix, CrowdAttention employs a non-parametric dot-product attention mechanism that models the labelers as a function of the input space.

Table 1 summarizes the key insights from our proposal alongside state-of-the-art methods.
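The scaling argument above can be made concrete with a back-of-the-envelope count of annotator-specific parameters. The sketch compares one $K \times K$ matrix per annotator ($R K^2$ in total), a chained-style head that adds $R$ output units on top of a shared hidden layer of hypothetical width `hidden_dim` (a lower bound for designs that allocate a separate sub-network per annotator), and a dot-product attention over one-hot responses without learned projections, which adds no per-annotator parameters. The latter is our simplifying assumption about the attention module; any shared projections would add a fixed cost that does not depend on $R$. The counts ignore the shared classification backbone; $K = 10$ and `hidden_dim = 128` are hypothetical, and only $R = 57$ (the LabelMe annotator pool used in Section 5.3) comes from this paper.

```python
def annotator_specific_params(num_annotators, num_classes, hidden_dim):
    """Illustrative count of parameters that grow with the number of annotators R."""
    return {
        # One K x K transition (confusion) matrix per annotator: R * K^2.
        "confusion_matrices": num_annotators * num_classes ** 2,
        # R extra output units (weights + bias) on a shared hidden layer.
        "chained_head": num_annotators * (hidden_dim + 1),
        # Dot-product attention without learned projections: none per annotator.
        "dot_product_attention": 0,
    }


counts = annotator_specific_params(num_annotators=57, num_classes=10, hidden_dim=128)
```

Doubling the annotator pool doubles the first two counts, while the last stays at zero.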

5. Results and Discussion

5.1. Fully-Synthetic Datasets Results

We first conducted a controlled experiment to evaluate the capability of our CrowdAttention to solve classification tasks while simultaneously estimating annotator performance as a function of the input features. For this purpose, we used the fully synthetic dataset described in Section 4.1. We simulated annotations from five labelers with varying levels of expertise, resulting in average accuracies (%) of .

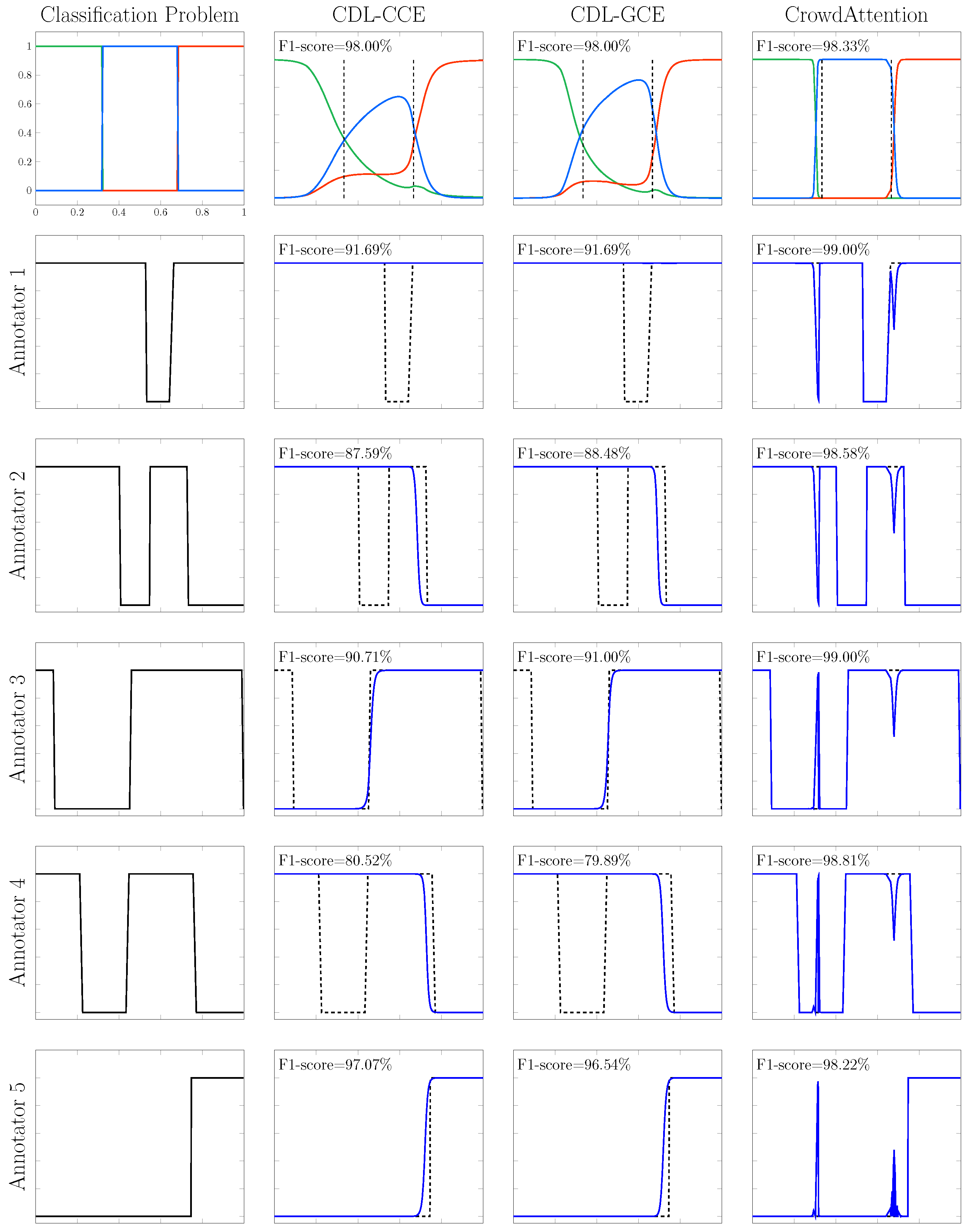

Over 10 runs with different initializations, our method achieves an F1-score of . This demonstrates the competitiveness of our approach, considering that the theoretical upper bound, DNN-GOLD (a deep neural network trained directly on the true, gold-standard labels), achieves . Remarkably, unlike DNN-GOLD, our method does not have access to the gold-standard labels, relying solely on the noisy annotations provided by multiple annotators with unknown levels of expertise.

The strong performance of our model highlights its ability to capture annotator reliability through the attention mechanism, enabling accurate label estimation and robust final predictions. To support this claim empirically, Figure 2 illustrates the predicted class probabilities alongside the instance-dependent reliability scores inferred by the cross-attention module. These scores directly represent the trust assigned to each annotator and thus provide a transparent interpretation of how pseudo-labels are formed. We compare our model's output against CDL-CCE and CDL-GCE, which are state-of-the-art methods for modeling annotator reliability. We note that the results in Figure 2 correspond to the best-performing run out of the 10 runs.

As shown in Figure 2, CrowdAttention achieves superior classification performance compared to the baseline methods. Specifically, our model attains the highest overall F1-score of , outperforming CDL-CCE and CDL-GCE, which both reach . A qualitative inspection further supports this result: the class probability estimates produced by CrowdAttention align more closely with the actual decision boundaries defined in the synthetic setup (see the first column of Figure 2), resulting in sharper and more accurate predictions. A key factor behind this improvement is the model's ability to infer annotator reliability as a function of the input. As illustrated in the last row of Figure 2, CrowdAttention recovers a diverse set of reliability patterns, including sharp transitions (annotators 1 and 3), non-monotonic behaviors (annotators 2 and 4), and sparse reliability signals (annotator 5). In contrast, CDL-CCE and CDL-GCE produce overly smoothed estimates that fail to capture important local variations in reliability. To quantify this capability, we compute the average -score between the estimated and true annotator reliability functions. CrowdAttention achieves a score of , substantially outperforming CDL-CCE () and CDL-GCE (), further confirming its effectiveness in modeling complex annotator behaviors.

On the other hand, the annotator reliability functions estimated by CrowdAttention exhibit sharp fluctuations near class transition regions, as seen in the last row of Figure 2. To explain this behavior, recall that our model estimates the actual label from the crowdsourced data using a cross-attention mechanism, where reliability scores are computed via the dot product between the model's predicted class distribution (query) and each annotator's one-hot label (keys and values). The ground truth estimate therefore relies heavily on the model's predictions, which can be inaccurate in regions near decision boundaries, causing errors in the attention weights assigned to each annotator [44].
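A small numerical example helps explain these fluctuations. With one-hot keys, each annotator's attention score equals the predicted probability of the label that annotator gave, so when the prediction is confident the dissenting annotator is almost ignored, whereas near the boundary the scores become nearly equal and a small change in the prediction can reverse the ranking. The sketch assumes a binary task, three annotators, and a hypothetical sharpening factor `scale = 5.0` applied before the softmax; none of these values come from the experiments.

```python
import numpy as np


def attention_weights(pred, annotator_labels, scale=1.0):
    """Softmax over annotators of <pred, onehot(label)>, which equals pred[label]."""
    scores = pred[annotator_labels] * scale
    w = np.exp(scores - scores.max())
    return w / w.sum()


labels = np.array([0, 0, 1])  # two annotators say class 0, one says class 1
w_confident = attention_weights(np.array([0.95, 0.05]), labels, scale=5.0)
w_boundary = attention_weights(np.array([0.52, 0.48]), labels, scale=5.0)
w_flipped = attention_weights(np.array([0.48, 0.52]), labels, scale=5.0)
# w_confident ≈ [0.497, 0.497, 0.0055]: the dissenting annotator is nearly ignored.
# w_boundary  ≈ [0.355, 0.355, 0.290]: the weights become almost uniform.
# w_flipped: a 0.04 shift in the prediction makes the dissenter the most trusted.
```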

5.2. Real-World Datasets Results

Up to this point, we have presented empirical evidence that our approach provides a suitable representation of annotator behavior, resulting in competitive classification performance compared to state-of-the-art models. This outcome is a consequence of modeling the annotators' performance as a function of the input features, which allows the model to adapt to the heterogeneity inherent in real-world labelers [22]. However, the experimental setup considered so far is based on synthetic labels, which may introduce biases derived from the simulation procedure itself. To address this limitation and further validate the generalization capability of our method, we now turn to fully real-world datasets. These datasets present a more challenging scenario in which both the input samples and the annotations originate from real-world applications, including clinical ones.

Table 3 reports the performance of all methods in terms of the F1-score; for multiclass settings, we report the macro-averaged F1-score. First, regarding the Voice dataset, we observe that for scales G and R, all the approaches considered achieve comparable performance. We attribute this outcome to the high-quality annotations provided by the labelers, as studied in [45]. Notably, for scale G, the theoretical lower bound (DNN-MV) slightly outperforms DFLC-MW, consistent with the observation that the annotators exhibit reliable behavior. In contrast, scale B presents a more challenging scenario. Here, our CrowdAttention obtains the highest performance, which is a remarkable outcome given the greater uncertainty introduced by the annotators, as perceptual scale B is known to be difficult to assess, even for trained specialists.
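Because several of these datasets are imbalanced, the macro average matters: it is the unweighted mean of the per-class F1-scores, so minority classes count as much as majority ones. A minimal NumPy sketch, using $F_1 = 2TP / (2TP + FP + FN)$ per class and assigning 0 to a class with no predictions and no true instances (a convention we chose); scikit-learn's `f1_score` with `average="macro"` provides an equivalent computation in the usual case.

```python
import numpy as np


def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    scores = []
    for k in range(num_classes):
        tp = np.sum((y_pred == k) & (y_true == k))
        fp = np.sum((y_pred == k) & (y_true != k))
        fn = np.sum((y_pred != k) & (y_true == k))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom > 0 else 0.0)
    return float(np.mean(scores))


score = macro_f1([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
# Per-class F1: 0.5, 0.8, 0.667 -> macro F1 ≈ 0.656
```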

Next, we turn to the results obtained on the Histology dataset, which poses an even more demanding classification task due to its highly imbalanced label distribution (Tumor: 37,260; Stroma: 27,668; Immune Infiltrate: 10,315). In terms of overall performance, CrowdAttention outperforms all competing methods and achieves an F1-score of 75.06, coming closest to the theoretical upper bound provided by DNN-GOLD (75.57). Beyond the global result, a per-class analysis reveals further advantages of our approach. Specifically, CrowdAttention achieves the best F1-score on the Tumor class, outperforming all other models, including CDL-CCE and CDL-GCE, which are its most direct competitors since they also model annotator reliability as a function of the input features. Our proposal also performs competitively on the minority classes (Stroma and Immune Infiltrate). These results empirically demonstrate the ability of our method to handle multiple labelers even in the presence of imbalanced classes.

Next, Table 4 presents the results for the LabelMe and Music real-world datasets. CrowdAttention again demonstrates strong generalization across diverse modalities. On the LabelMe dataset, which contains natural images annotated through crowdsourcing, our framework achieved an F1-score of 82.19, outperforming all competing baselines and approaching the upper bound set by DNN-GOLD (89.12). This result highlights the attention mechanism's ability to weigh annotator reliability effectively, even in subjective visual tasks. Note that, owing to memory constraints, the CDL-based baselines (CDL-CCE and CDL-GCE) were evaluated using only the top 40 labelers with the most labeled instances. Similarly, for the Music Genre Classification dataset, CrowdAttention reached an F1-score of 69.61, again surpassing classical aggregation methods (DNN-MV and DNN-DS) and crowd-layer approaches, while narrowing the gap with DNN-GOLD (75.42). These findings indicate that the proposed model not only adapts to complex biomedical tasks but also remains robust in other domains where annotator disagreement and subjectivity are common. Nonetheless, the remaining gap with respect to the upper bound indicates that future refinements, such as modeling systematic biases or incorporating richer annotator priors, may further improve performance.

Finally, we conducted a non-parametric Friedman test to evaluate the statistical significance of the results. The null hypothesis assumes equivalent performance among all multi-labeler algorithms [46], excluding the upper bound DNN-GOLD. Using a significance level of , we obtained a chi-square value of  with a p-value of . Since the p-value is below the significance level, we reject the null hypothesis and conclude that there are statistically significant differences among the evaluated algorithms.
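For readers who want to reproduce this kind of test, the sketch below computes the Friedman statistic $\chi^2_F = \frac{12}{N k (k+1)} \sum_j R_j^2 - 3N(k+1)$, where the rows are the $N$ datasets (or scales) acting as blocks, the columns are the $k$ algorithms, and $R_j$ is the rank sum of algorithm $j$; the p-value comes from a $\chi^2$ distribution with $k - 1$ degrees of freedom. It omits the tie correction (SciPy's `scipy.stats.friedmanchisquare` includes one, and the two agree when there are no ties), and the score matrix is a toy example, not the values from Tables 3 and 4.

```python
import numpy as np
from scipy.stats import chi2, rankdata


def friedman_test(scores):
    """Friedman test without tie correction; scores is (N blocks, k algorithms)."""
    n, k = scores.shape
    ranks = np.apply_along_axis(rankdata, 1, scores)  # rank algorithms within each block
    rank_sums = ranks.sum(axis=0)
    stat = 12.0 / (n * k * (k + 1)) * np.sum(rank_sums ** 2) - 3.0 * n * (k + 1)
    return float(stat), float(chi2.sf(stat, df=k - 1))


toy_scores = np.array([[0.60, 0.70, 0.80],
                       [0.50, 0.55, 0.90],
                       [0.70, 0.75, 0.80],
                       [0.40, 0.60, 0.65]])
stat, p_value = friedman_test(toy_scores)  # stat = 8.0, p_value ≈ 0.018
reject_null = p_value < 0.05  # 0.05 is an illustrative significance level
```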

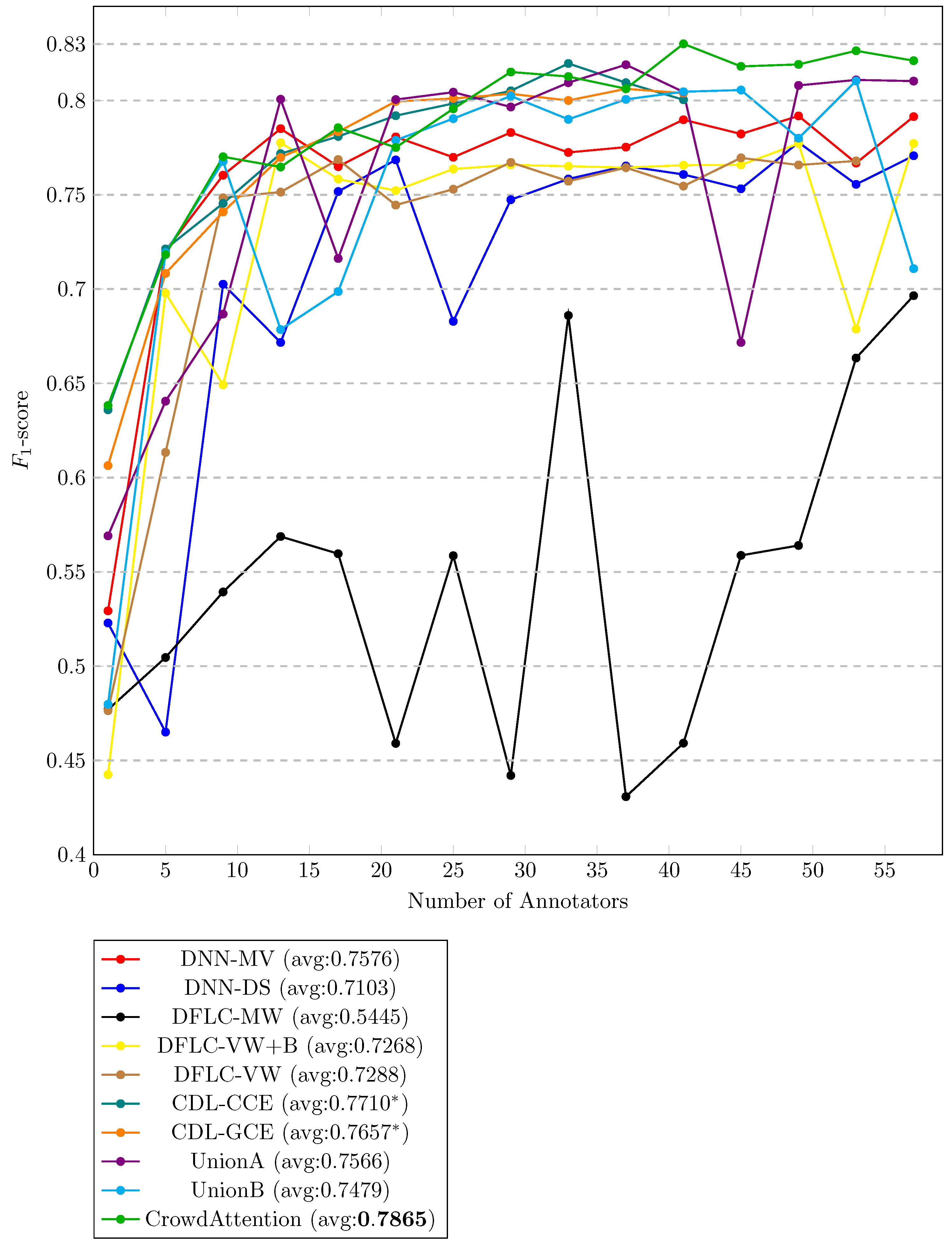

5.3. Varying the Number of the Annotators

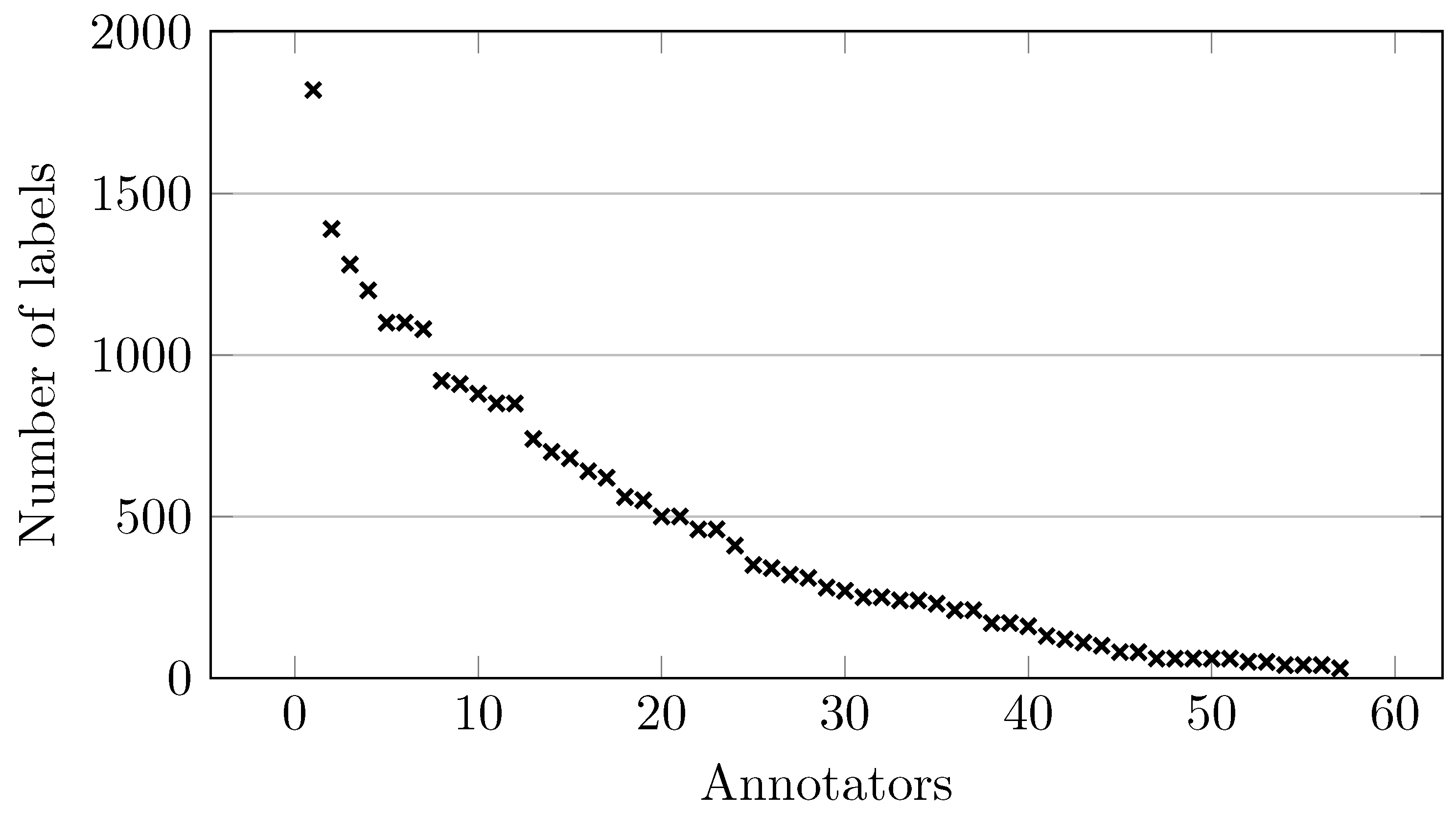

As a final experiment, we evaluate the impact of the number of annotators on the performance of the multi-labeler classifiers. Specifically, we use the LabelMe dataset, which comprises 57 real annotators; we selected this dataset because it provides the largest number of labelers. The annotators were sorted in descending order according to the number of instances they labeled. Figure 3 shows the number of samples labeled by each annotator.

Figure 3 reveals a strong sparsity in the number of annotations per worker. Only a small group of annotators provided a large number of labels; in fact, fewer than 10 workers labeled more than 1000 samples (approximately 10% of the whole dataset). This distribution indicates that while a few annotators dominate the dataset in terms of volume, most provide only sparse information. Such an imbalance makes the learning problem more challenging, since the classifier must rely on a small fraction of highly active annotators while still exploiting the sparse signals from the long tail of less active ones.

To evaluate the effect of progressively increasing the number of annotators, we adopted a cumulative scheme with a step size of four instead of incorporating annotators one by one. In the first iteration, the system was trained and evaluated using only the labels from the most prolific annotator. In the second iteration, the first five annotators were included; in the third, the first nine; and so forth, until all 57 annotators were aggregated.
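The cumulative schedule can be written down explicitly. The sketch below sorts annotators by how many instances they labeled and returns the index sets used at each step; with 57 annotators and a step of four, it produces subsets of size 1, 5, 9, and so on up to 57 (15 steps). The annotation counts in the usage line are hypothetical placeholders, not the real LabelMe counts, and the function name is ours.

```python
import numpy as np


def cumulative_annotator_subsets(labels_per_annotator, step=4):
    """Annotator index sets for a cumulative schedule, most prolific annotators first."""
    counts = np.asarray(labels_per_annotator)
    order = np.argsort(-counts, kind="stable")  # descending by number of labels
    n = len(order)
    sizes = list(range(1, n + 1, step))  # 1, 1 + step, 1 + 2 * step, ...
    if sizes[-1] != n:
        sizes.append(n)  # always finish with the full annotator pool
    return [order[:m] for m in sizes]


hypothetical_counts = np.arange(57, 0, -1)  # placeholder counts, not LabelMe's
subsets = cumulative_annotator_subsets(hypothetical_counts)
sizes = [len(s) for s in subsets]
```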

Figure 4 shows the classifiers' performance in terms of F1-score as a function of the number of annotators. First, we notice that most approaches improve as more annotators are aggregated, with the largest gains occurring in the initial increments (e.g., from 1 to 9 annotators). Beyond this point, performance tends to saturate, reflecting diminishing marginal benefits from additional annotators. This outcome is expected, since the first annotators were those who contributed the largest number of labels.

We also observe that methods based on label aggregation (DNN-MV and DNN-DS) quickly stabilize at a moderate level of performance. In fact, the simplest aggregation strategy achieves better results than the DFLC variants. On the other hand, the Union methods [5] consistently outperform the previous approaches; however, their progression is unstable, with considerable drops in performance at certain points.

In contrast, the CDL-based approaches (CDL-CCE and CDL-GCE) exhibit more stable behavior across different numbers of annotators. Both methods maintain a relatively smooth progression without abrupt drops and consistently achieve competitive performance. We attribute such stability to the fact that these approaches are designed to model the annotators’ performance as a function of the input space, which makes them more robust to variations in the number of annotators and the level of label sparsity. However, a key limitation of CDL methods is that they allocate a separate network for each annotator, which makes them inherently non-scalable. In practice, this design imposes heavy memory requirements, to the point that it was only feasible to run experiments with up to 41 annotators.

Finally, CrowdAttention achieves the best overall results, surpassing all baselines while maintaining a stable progression. Similar to CDL approaches, it models annotator performance as a function of the input instances; however, in contrast to CDL, CrowdAttention relies on a dot-product attention mechanism. This design enables the model to scale efficiently with the number of annotators, avoiding the memory limitations of CDL methods while continuing to benefit from the contributions of additional annotators. As a result, CrowdAttention achieves both higher and more consistent performance across the entire range.

5.4. Limitations

Although our CrowdAttention approach demonstrates competitive performance across synthetic and real-world datasets, several limitations remain. First, in the synthetic experiments, we observed sharp fluctuations in the estimated annotator reliability functions near class transition regions (see Figure 2). This behavior arises because the cross-attention module relies on the model's predicted class distribution to compute reliability weights, making the approach sensitive to misclassifications close to decision boundaries. Second, while CrowdAttention avoids the heavy parameterization of annotator-specific confusion matrices, it does not explicitly capture structured error patterns such as systematic biases or class-dependent confusions, which were evident in challenging cases of the Voice dataset, particularly for the "Breathiness" (B) dimension (see Table 3), where annotators displayed high disagreement and our model's advantage over the baselines was more modest. Third, on the histopathology dataset, although CrowdAttention achieved the highest overall F1-score among the competing methods (75.06 vs. 75.57 for the upper bound DNN-GOLD), its performance on minority classes such as Stroma and Immune Infiltrate remained affected by the strong class imbalance, highlighting reduced robustness under skewed distributions (see Table 3). Finally, while our attention-based aggregation is more scalable than annotator-specific models, effective training still requires careful tuning of the classification backbone to avoid overfitting to noisy supervision, especially when the number of annotators is limited.