AI Video Analysis in Parkinson’s Disease: A Systematic Review of the Most Accurate Computer Vision Tools for Diagnosis, Symptom Monitoring, and Therapy Management

Abstract

Highlights

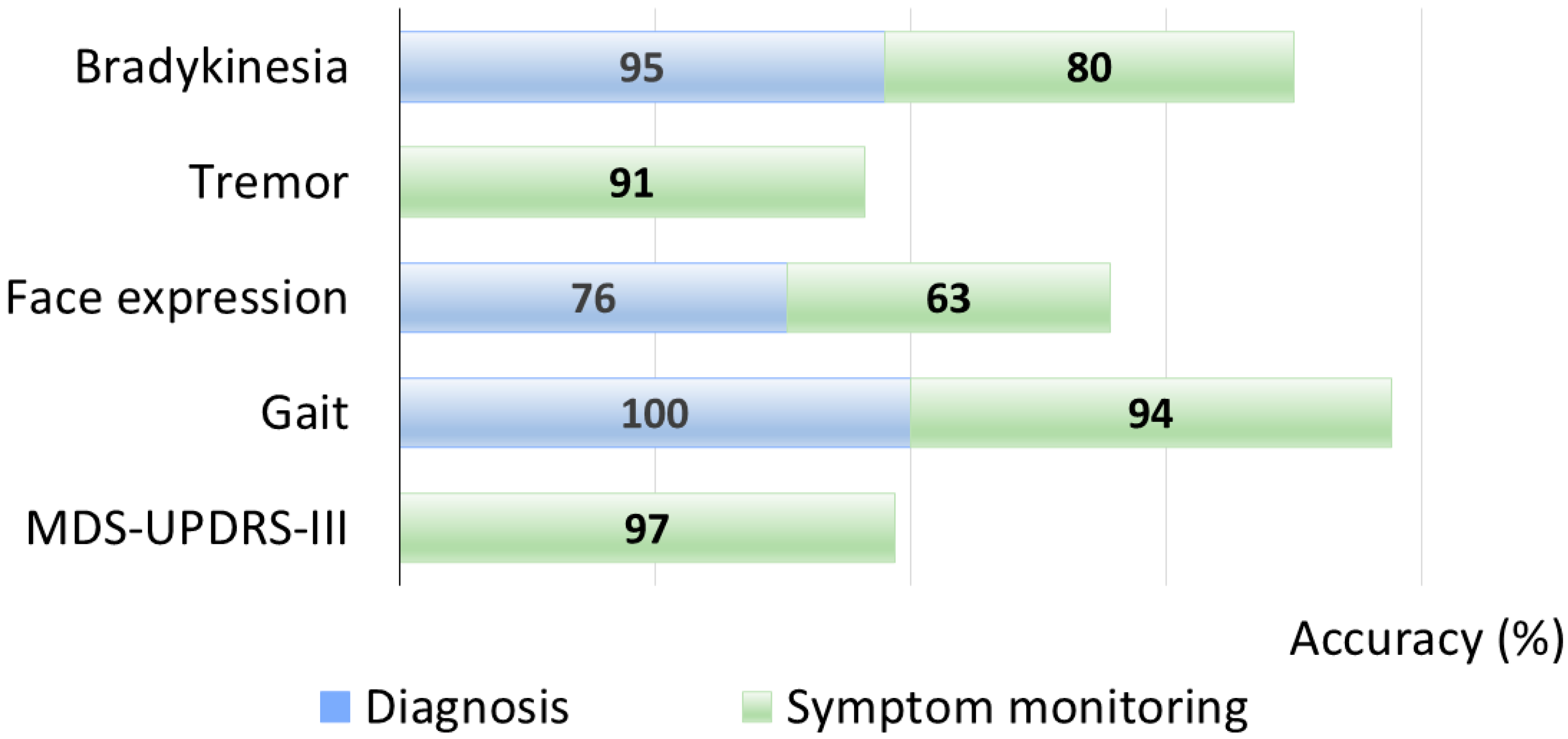

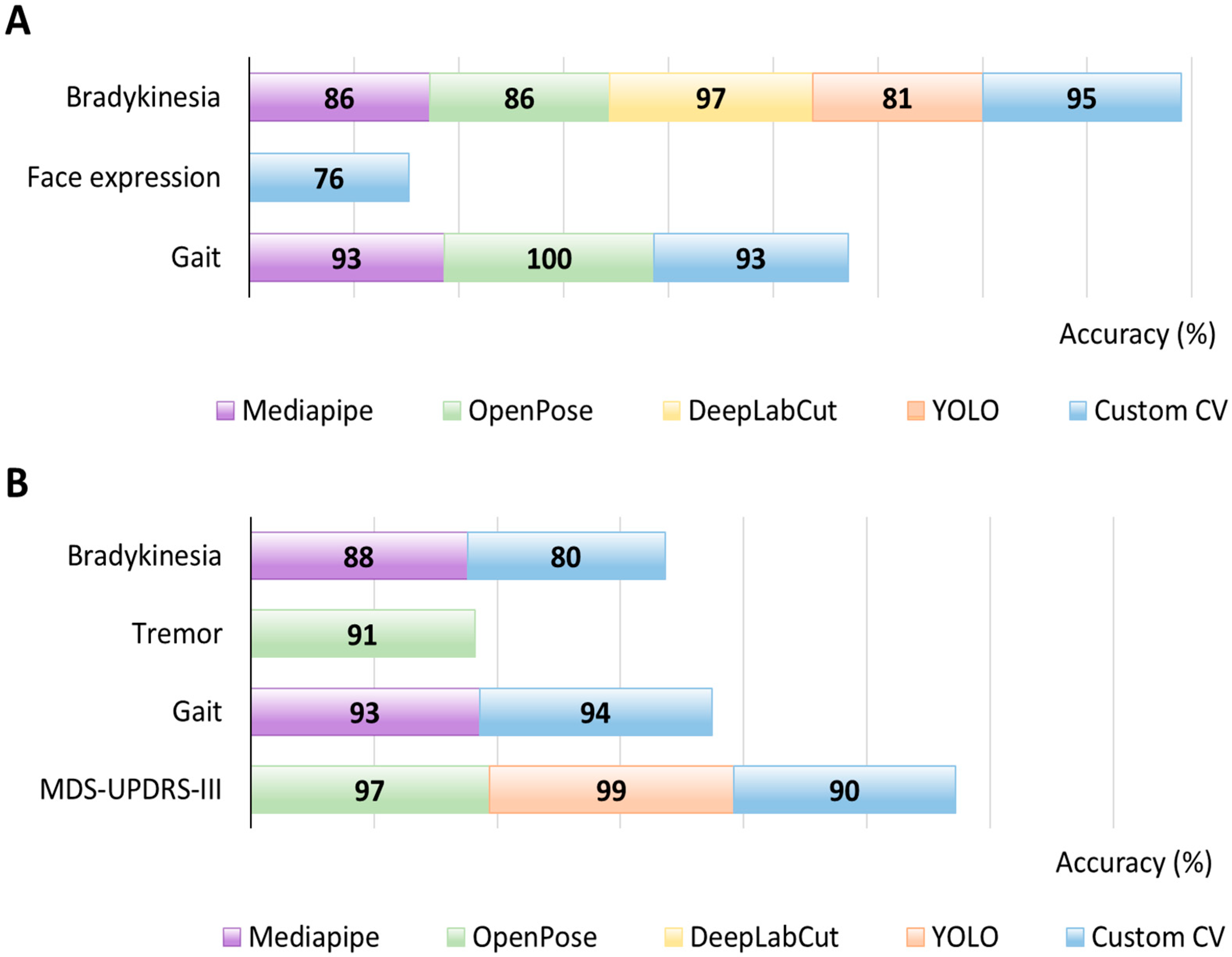

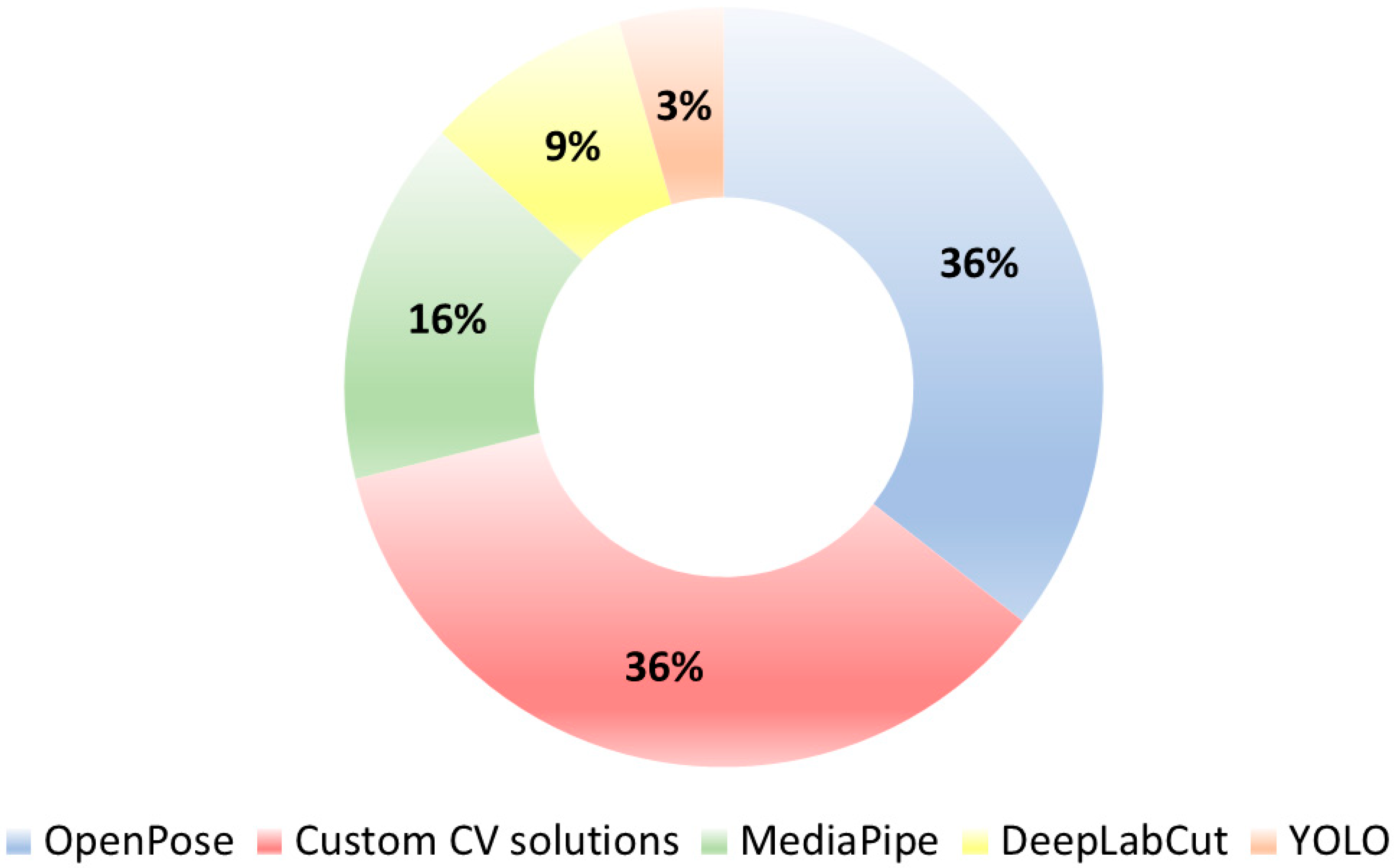

- Across 45 eligible studies, gait was the most investigated task (followed by bradykinesia), and OpenPose and custom pipelines were the most commonly used pose-estimation tools.

- Computer Vision pipelines achieved diagnostic discrimination and severity tracking that aligned with clinician ratings, supporting feasibility for both remote and in-clinic assessment.

- Computer Vision provides objective, scalable, and non-invasive quantification of PD motor signs to complement routine examination and enable telemonitoring workflows.

- Real-world translation will require standardized video acquisition/processing and external validation across multiple settings and populations.


1. Introduction

- Neurodegeneration: evidence of loss of dopaminergic neurons on neuroimaging;

- Genetic risk.

1.1. Computer Vision

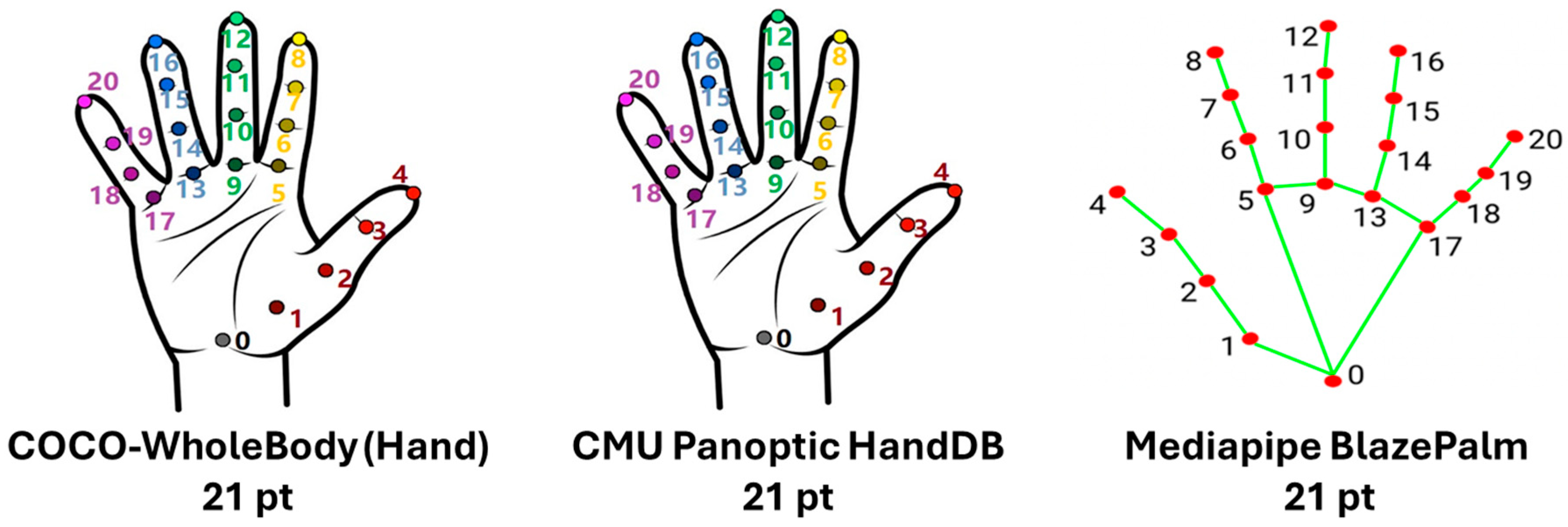

1.2. Human Pose Estimation: Datasets and Frameworks

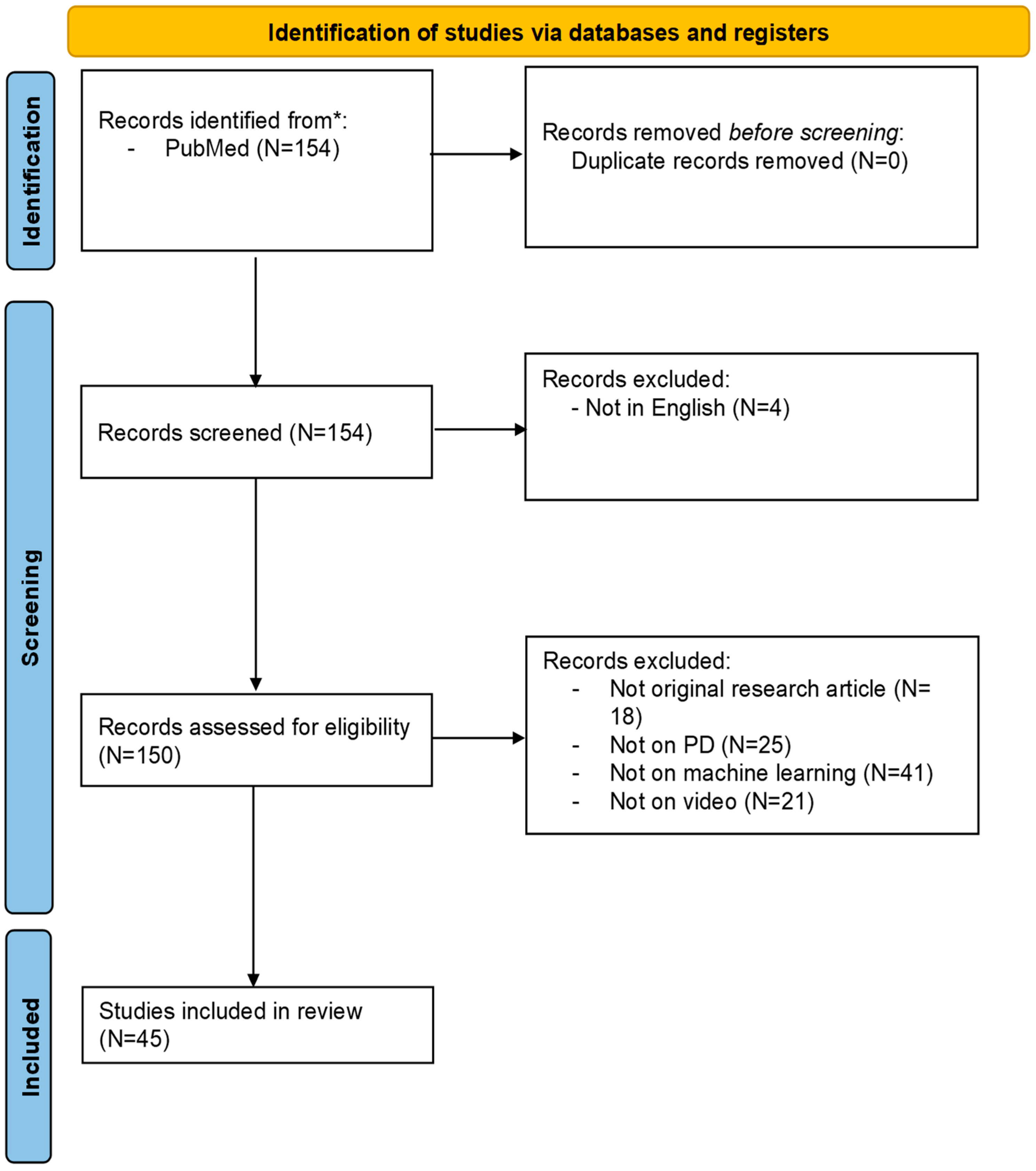

2. Materials and Methods

2.1. Scope and Target Domain

2.2. Search Strategy

2.3. Eligibility Criteria

- In studies on PD, what is the performance of markerless CV-based technologies compared to traditional clinical methods for disease diagnosis?

- In studies on PD, what is the performance of markerless CV-based technologies compared to traditional clinical methods for motor symptom monitoring and severity assessment?

- Population (P): Patients with PD, diagnosed according to standard clinical criteria, including both early- and late-stage individuals, as well as subgroups with varying motor symptom profiles;

- Intervention (I): Application of markerless CV technologies for motor assessment, including pose estimation algorithms, video-based tracking, and ML-driven motion analysis for the evaluation of motor symptoms;

- Comparison (C): Comparison with traditional methods of clinical assessment, expert rater evaluation, or alternative quantitative tools such as wearable sensors or marker-based motion capture systems;

- Outcome (O): Objective measures of diagnostic or assessment performance, including but not limited to classification accuracy, sensitivity, specificity, correlation with clinical scores, Area Under the Receiver Operating Characteristic Curve (AUROC), regression coefficients, or treatment response quantification.
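The classification outcomes listed under Outcome (O) reduce to counts over a confusion matrix, and AUROC can be read as the probability that a randomly chosen PD participant receives a higher classifier score than a randomly chosen control. The following is a minimal Python sketch of those definitions, assuming binary labels (1 = PD, 0 = control) and one continuous score per participant; the function names and the toy data are illustrative and are not taken from any reviewed study.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN) for binary labels where 1 = PD and 0 = control."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def diagnostic_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict[str, float]:
    """Accuracy, sensitivity, specificity and precision from hard predictions.
    Assumes both classes are present and at least one positive prediction."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # recall, true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "precision": tp / (tp + fp),
    }


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC via the Mann-Whitney formulation: fraction of (PD, control)
    pairs in which the PD score is higher; ties count as one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example: 3 PD participants followed by 3 controls (values are made up).
labels = [1, 1, 1, 0, 0, 0]
predictions = [1, 1, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6]
print(diagnostic_metrics(labels, predictions))
print(auroc(labels, scores))  # 8 of 9 (PD, control) pairs are ranked correctly
```

The pairwise AUROC loop is quadratic in sample size, which is fine for cohorts of the size reported here; larger datasets would normally use a rank-based or library implementation.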

2.4. Data Extraction and Synthesis

- Study characteristics: Participant demographics, sample size, and clinical settings;

- Motor tasks assessed: Specific movements or tests;

- CV methodologies: Type of camera used, specific pose estimation algorithms or software employed, and ML models utilized;

- Performance metrics: Reported accuracy, sensitivity, specificity, AUROC, and correlation with established clinical scales.

- (1) Diagnosis, if the study focused on distinguishing individuals with PD from healthy controls or other movement disorders based on a single assessment;

- (2) Symptom monitoring, if the study involved longitudinal assessments across multiple time points or visits, typically conducted in remote or real-world settings;

- (3) Therapy management, if the aim was to differentiate motor states (e.g., ON vs. OFF vs. dyskinesia) or to assess the effects of specific treatments.

2.5. Quality Assessment

2.6. Reporting Framework and Presentation of Results

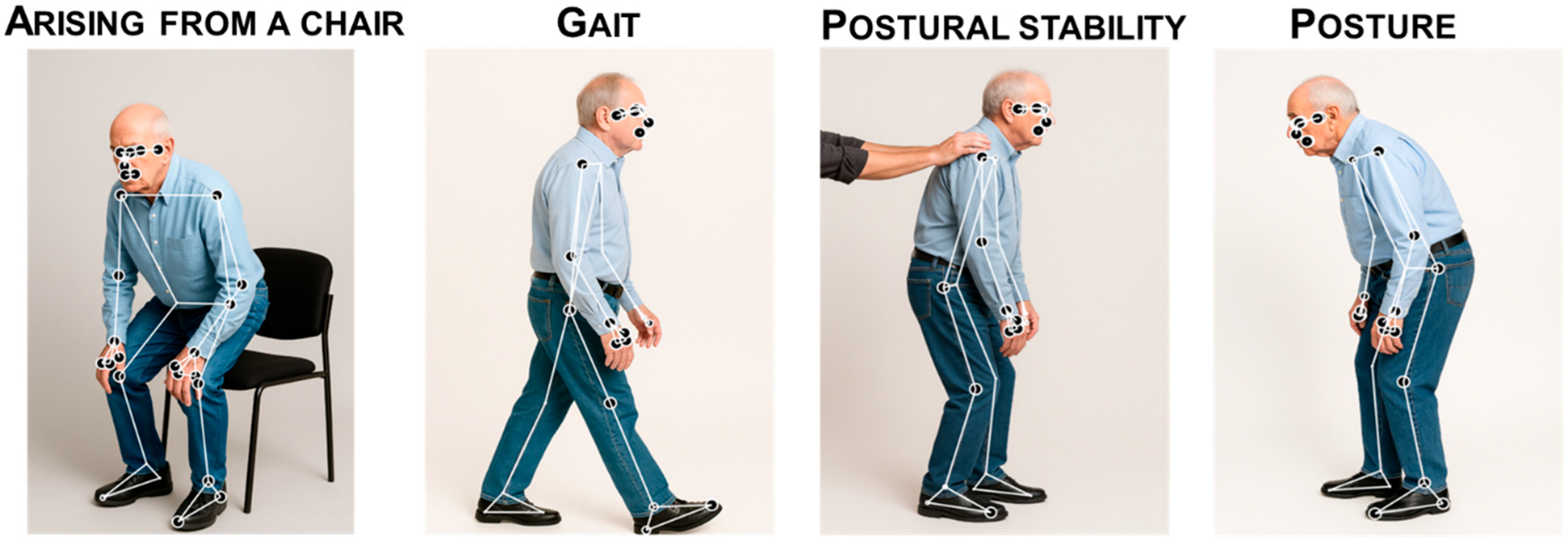

- Bradykinesia

- Tremor

- Rigidity

- Axial symptoms

  - Facial expressions

  - Gait, posture, and balance

- MDS-UPDRS-III total score

- Diagnosis

- Symptom monitoring

- Motor fluctuations

- Levodopa-induced dyskinesias (LIDs)

- Therapy response

3. Results

3.1. Study Selection

3.2. Bradykinesia

3.2.1. Diagnosis

3.2.2. Symptom Monitoring

| Aim | Ref. | Patients | Controls | Device | Pose Estimation | Performance | Technology Comparison |
|---|---|---|---|---|---|---|---|
| Diagnosis | [86] | 40 | 37 | Smartphone (1920 × 1080 px, 60 fps) | Victor Dibia Handtracking, preprocessing in OpenCV | Test accuracy 0.69, precision 0.73, recall 0.76, AUROC 0.76 | NA |
| | [87] | 48 | 11 | Intel RealSense SR300 depth camera (640 × 480, ~30 fps, Intel Corporation, Santa Clara, CA, USA) | YOLOv3 | 5-fold CV accuracy 81.2% for 0–4 severity classification | NA |
| | [88] | 13 | 6 | Single fixed camera video (10 s, 25 fps, 352 × 288) | OpenCV Haar face detector + motion-template gradient | Classification accuracy 88% (10-fold CV); PD vs. HC 95%; head-to-head vs. marker-based: mean peak difference ≈ 3.31 px | Marker-based (HSV) system |
| | [89] | 31 | 49 | Standard clinic video camera | DeepLabCut | AUC 0.968; sensitivity 91%; specificity 97% | NA |
| | [92] | 82 | 61 | Consumer video camera Sony HDR-CX470 (30 fps, 1280 × 720, Sony Corporation, Tokyo, Japan) | OpenPose | AUC 0.91, accuracy 0.86, sensitivity 0.94, specificity 0.75; after propensity matching: AUC 0.85, accuracy 0.83, sensitivity 0.88, specificity 0.78 | NA |
| | [93] | 31 | 26 | Standard camera (1920 × 1080, 30/60 fps) | MediaPipe | Accuracy: | NA |
| Symptom monitoring | [90] | 66 | 24 | Standard RGB camera on tripod at 30 fps | MediaPipe | HC vs. PD: AUC-PR 0.97, F1 = 0.91 (accuracy: HC 85%/PD 88%) | NA |
| | [91] | 20 | 15 | Smartphone (iPhone SE, 60 fps, 1080p, Apple Inc., Cupertino, CA, USA) | Hand detector CNN (MobileNetV2 SSD, TensorFlow) + GrabCut | UPDRS-FT > 1 (SVM-R): accuracy 0.80, sensitivity 0.86, specificity 0.74 (LOO-CV); PD diagnosis (NB): accuracy 0.64 (LOO-CV) | NA |
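Most pipelines in the bradykinesia table share one step: a pose estimator returns fingertip coordinates per frame, and finger-tapping features are derived from the thumb-index distance over time. The sketch below illustrates that step under stated assumptions: it takes already-extracted 2D thumb-tip and index-tip trajectories (for example, MediaPipe Hands landmarks 4 and 8), uses a crude local-maximum peak detector without smoothing, and treats `ref_length` as a hypothetical normalisation length. The feature set (tap count, frequency, amplitude slope as a proxy for decrement, interval variability) is illustrative, not the feature set of any specific study.

```python
import numpy as np


def tapping_features(thumb: np.ndarray, index: np.ndarray, fps: float,
                     ref_length: float = 1.0) -> dict[str, float]:
    """Illustrative finger-tapping features from 2D keypoint trajectories.

    thumb, index: arrays of shape (n_frames, 2) with pixel coordinates.
    ref_length: hypothetical normalisation length (e.g. a hand-size estimate
    in pixels) so amplitudes are comparable across camera distances.
    Requires at least two detected taps.
    """
    # Thumb-index distance per frame is the raw tapping signal.
    dist = np.linalg.norm(thumb - index, axis=1) / ref_length
    # Finger openings: frames higher than the previous frame and not exceeded by the next.
    inner = dist[1:-1]
    peaks = np.flatnonzero((inner > dist[:-2]) & (inner >= dist[2:])) + 1
    amplitudes = dist[peaks]
    intervals = np.diff(peaks) / fps  # seconds between successive openings
    # Slope of opening amplitude across taps; negative values suggest decrement.
    slope = np.polyfit(np.arange(len(amplitudes)), amplitudes, 1)[0]
    return {
        "n_taps": float(len(peaks)),
        "frequency_hz": float(1.0 / intervals.mean()),
        "mean_amplitude": float(amplitudes.mean()),
        "amplitude_slope": float(slope),
        "interval_cv": float(intervals.std() / intervals.mean()),
    }


# Synthetic check: 10 s at 60 fps, 2 Hz tapping whose amplitude shrinks over time.
fps = 60.0
t = np.arange(600) / fps
opening = 20 + 100 * (1 - 0.05 * t) * (1 - np.cos(2 * np.pi * 2.0 * t)) / 2
thumb = np.zeros((600, 2))
index = np.column_stack([opening, np.zeros(600)])
print(tapping_features(thumb, index, fps))
```

Real keypoint traces are noisy, so a practical pipeline would typically smooth the distance signal and use a peak detector with minimum height and spacing constraints before computing these features.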

3.3. Tremor

3.3.1. Diagnosis

3.3.2. Symptom Monitoring

| Aim | Ref. | Patients | Controls | Device | Pose Estimation | Performance | Technology Comparison |
|---|---|---|---|---|---|---|---|
| Diagnosis | [94] | 13 | 11 | Smartphone (1080p, 60 fps) | EVM | OR 2.67 (95% CI 1.39–5.17; p < 0.003) | NA |
| Symptom monitoring | [96] | 15 (9 PD, 5 ET, 1 FT) | - | Smartphone (1080p, 60 fps) + hand accelerometer (3.84 kHz) | Optical flow | MAE 0.10 Hz; Bland–Altman bias −0.01 Hz, 95% LoA −0.38 to +0.35 Hz; 97% within ±0.5 Hz | Accelerometer |
| | [95] | 130 | - | Single Sony camera (1920 × 1280, 30 fps, Sony Corporation, Tokyo, Japan) | OpenPose | Accuracy: | NA |
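The tremor studies above estimate tremor frequency from video and compare it with an accelerometer, reporting agreement in hertz. A common way to obtain the frequency from a keypoint or optical-flow displacement trace is to take the largest peak of its amplitude spectrum. The sketch below assumes a single displacement signal sampled at the camera frame rate and restricts the search to a 3–12 Hz band; both the band and the Hann window are assumptions chosen for illustration, not parameters reported by the reviewed studies. The agreement helper mirrors the MAE, bias, and "within ±0.5 Hz" figures reported in the table.

```python
import numpy as np


def dominant_frequency(displacement: np.ndarray, fs: float,
                       band: tuple[float, float] = (3.0, 12.0)) -> float:
    """Frequency (Hz) of the largest amplitude-spectrum peak inside `band`.

    displacement: 1D keypoint or optical-flow trace sampled at frame rate fs.
    The band must lie below the Nyquist limit fs / 2.
    """
    x = np.asarray(displacement, dtype=float)
    x = x - x.mean()                   # remove the static (DC) position
    x = x * np.hanning(len(x))         # taper to limit spectral leakage
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(freqs[in_band][np.argmax(spectrum[in_band])])


def frequency_agreement(video_hz, reference_hz, tolerance_hz: float = 0.5) -> dict[str, float]:
    """MAE, mean bias, and share of recordings within a tolerance of the reference."""
    diff = np.asarray(video_hz, dtype=float) - np.asarray(reference_hz, dtype=float)
    return {
        "mae_hz": float(np.abs(diff).mean()),
        "bias_hz": float(diff.mean()),
        "within_tolerance": float(np.mean(np.abs(diff) <= tolerance_hz)),
    }


# Synthetic 10 s trace at 60 fps: a 5 Hz oscillation around a fixed position.
fs = 60.0
t = np.arange(600) / fs
trace = 240.0 + 3.0 * np.sin(2 * np.pi * 5.0 * t)
print(dominant_frequency(trace, fs))
```

Frequency resolution is the reciprocal of the recording length (0.1 Hz for 10 s here), and the frame rate caps the measurable range at half the sampling rate, which is one reason the reviewed smartphone studies record at 60 fps.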

3.4. Rigidity

3.5. Axial Symptoms

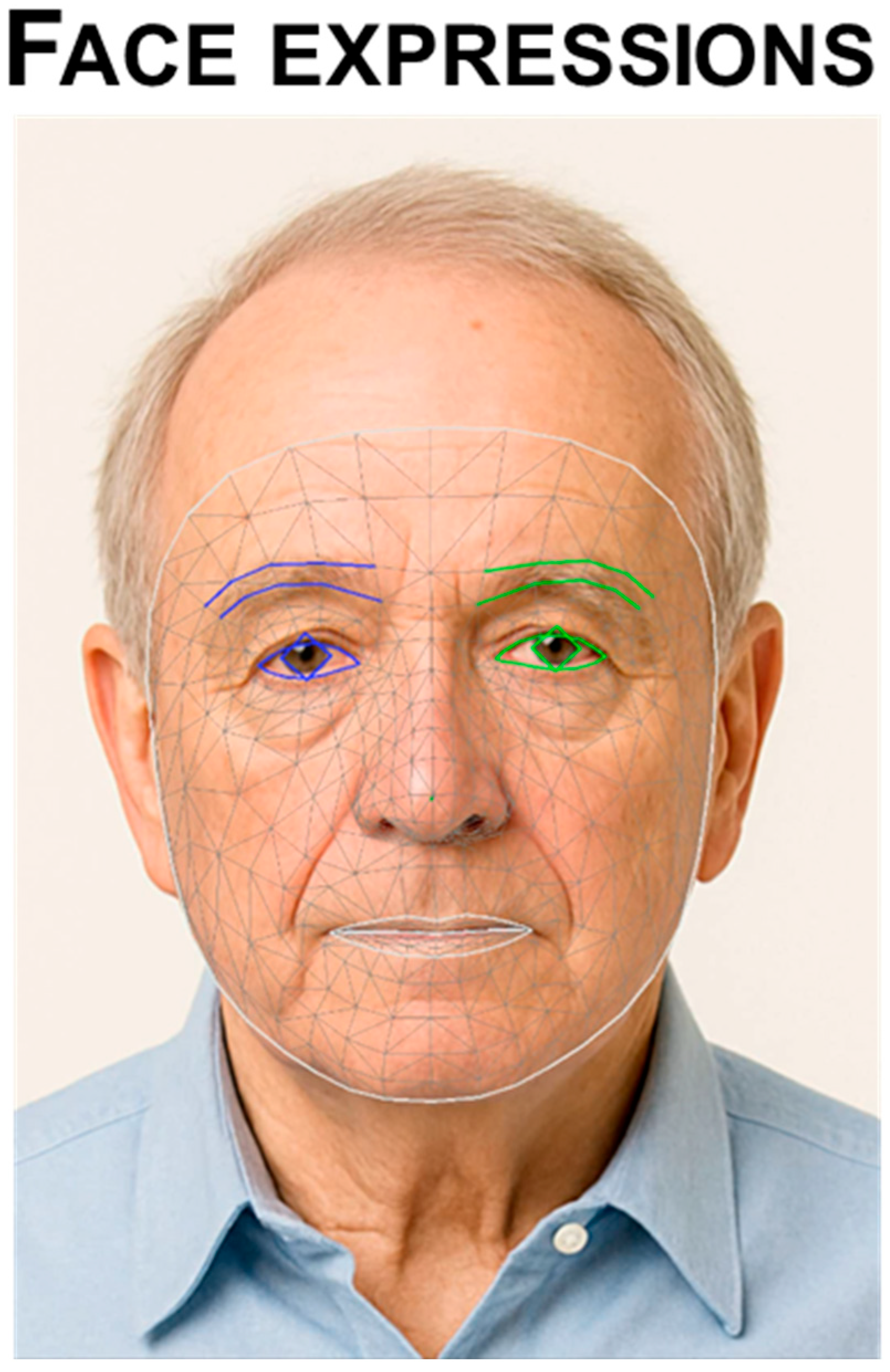

3.5.1. Facial Expressions

- Diagnosis

3.5.2. Gait, Balance and Posture

- Diagnosis

- Symptom monitoring

| Aim | Ref. | Patients | Controls | Device | Pose Estimation | Performance | Technology Comparison |
|---|---|---|---|---|---|---|---|
| Diagnosis | [86] | 40 | 37 | Smartphone (1920 × 1080 px, 60 fps) | Victor Dibia Handtracking, preprocessing in OpenCV | Test accuracy 0.69, precision 0.73, recall 0.76, AUROC 0.76 | NA |
| | [108] | 20 | 37 | KinaTrax (KinaTrax Inc., Boca Raton, FL, USA), processed in Visual3D | KinaTrax HumanVersion3 | Speed ICC 0.995, stride length ICC 0.992, swing time ICC ≈ 0.910; Bland–Altman speed bias 0.000 ± 0.020 m/s, LoA −0.039 to 0.038 m/s | Marker-based optoelectronic system (SMART-DX, BTS) |
| | [110] | 13 | 13 | Standard RGB camera (~520 × 520, 60 fps) | Dense optical flow | Early/late fusion (kinematic + deep): accuracy 1.00, sensitivity 1.00, specificity 1.00 (leave-one-patient-out); best single modality: gait-DF (MobileNetV2 4th layer) accuracy 0.961, eye-KF accuracy 0.923 | NA |
| | [100] | 68 | 48 | Smartphone videos (1080p, 30 fps) | OpenPose | AUC 0.91, sensitivity 84.6%, specificity 89.6%; agreement vs. plantar-pressure insoles (\|Δ\| = 0.056 s stance, 0.031 s swing, 0.037 s cycle) and vs. 3D motion capture for spatial/upper-limb metrics (\|Δ\| = 1.076 cm step length, 0.032 m/s speed, 4.21° arm-swing angle, 9.06°/s arm-swing velocity) | Plantar-pressure insoles; 3D motion capture |
| | [101] | 14 | 16 | RGB camera | OpenPose | Classification accuracy up to 99.4%, AUC 0.999–1 | NA |
| | [102] | 34 | 36 | RGB camera | OpenPose | Accuracy 95.8%, sensitivity 94.1%, specificity 97.2% | NA |
| | [119] | 34 | 25 | Single RGB camera (30 fps) synchronized with VICON motion capture (Vicon Ltd., Oxford, UK, 100 Hz) and AMTI force plates (AMTI Optima HPS, Watertown, MA, USA, 1 kHz) | HRNet + HoT-Transformer (3D reconstruction) + GLA-GCN (spatiotemporal modeling) | | VICON (3D motion capture) |
| | [106] | 119 (PD + HC) | 119 (PD + HC) | Everio GZ-HD40 camera for PD; standard video camera from CASIA dataset for controls | OpenPose | Cadence estimation in controls: good agreement between frontal and lateral views (R² = 0.754, RMSE = 7.24 steps/min, MAE = 6.05 steps/min); ROC AUC 0.980 for kNN in pre/post-DBS comparison | NA |
| | [105] | 30 | 20 | Monocular clinic videos, ~30 fps | SPIN; SORT tracking; ablation with OpenPose (2D) | Macro-F1 0.83, AUC 0.90, precision 0.86, balanced accuracy 81% | NA |
| | [109] | 12 | 21 | Dual AMTI force plates (AMTI Optima HPS, Watertown, MA, USA) + markerless system: 12 Qualisys cameras (Qualisys, Göteborg, Sweden) | Theia3D v2021.2.0.1675 | Bland–Altman: mean bias ~0; typical 95% limits about −0.02 to 0.03 m; BF₀₁ = 7.4 | Force plates (AMTI) |
| | [107] | 1 PD source video for training + 1 early-PD video for external check | 1 normal-gait video (treadmill) | RGB video camera | OpenPose | Multiclass stage classification accuracy: Gradient Boosting 0.99, KNN 0.97, SVM 0.96; class-wise AUCs ~1.0; overall F1 0.94–0.97 | NA |
| Symptom monitoring | [114] | 36 | - | Microsoft Kinect v2 depth camera (RGB + depth, 30 Hz, Microsoft Corporation, Redmond, WA, USA) | Kinect SDK skeletal tracking | Sub-task segmentation accuracy 94.2%, MAE in sub-task duration < 0.3 s | NA |
| | [118] | 28 | - | Smartphone camera (iPhone 6s, Apple Inc., Cupertino, CA, USA) | OpenPose | ICC > 0.95 for both AFA and DHA; mean bias ≤ 3°; vertical reference bias mean 0.91° | Manual labeling (stickers) |
| | [115] | 25 | - | Webcam (Logitech C920, 480 × 640 @ 30 Hz, Logitech International S.A., Lausanne, Switzerland) + Zeno walkway (120 Hz, ProtoKinetics, Havertown, PA, USA) in clinic | OpenPose, AlphaPose, Detectron (2D); ROMP (3D) | 2D video features show moderate-to-strong positive correlations with Zeno for steps, cadence, and step-width mean/CV | Zeno instrumented walkway (PKMAS) |
| | [103] | 24 | - | 8-camera OptiTrack mocap (240 Hz, NaturalPoint Inc., Corvallis, OR, USA) + 2 smartphone cameras (Galaxy A Quantum, 30 Hz, Samsung Electronics, Samsung Digital City, Suwon, South Korea) | MediaPipe | Temporal MAE typically 0.00–0.02 s; treadmill step length MAE 0.01 m, speed MAE 0.03 m/s; overground (lateral) step length MAE 0.09 m (fwd)/0.07 m (back), speed MAE 0.17/0.13 m/s | 3D motion capture (OptiTrack/Motive) |
| | [112] | 729 videos | - | Smartphone/tablet recordings (KELVIN-PD™ app, Machine Medicine Technologies, London, UK) | OpenPose | Balanced accuracy 50%; Spearman ρ = 0.52 with clinician ratings; step-frequency r = 0.80 vs. manual counts | NA |
| | [113] | 456 videos from 19 patients | - | Single RGB camera, 25 fps, 352 × 288 px | HOG human detector | Accuracy 70.83%; AUC 0.81; feature mean ranks significantly separated severity levels (Kruskal–Wallis, p < 0.05) | NA |
| | [111] | 9 | - | Monocular RGB camera (Panasonic HC-V720M-T, 720 × 480, 30 fps, Panasonic Corporation, Kadoma, Japan) | LightTrack, MediaPipe | Model II (5-fold CV): accuracy 93.2%, precision 97.9%, recall 88.8%, specificity 97.9%; ICC 0.75–0.94 | NA |
| | [116] | 55 | - | Smartphone | OpenPose BODY25 and AutoPosturePD | ICC vs. image width R = 0.46, p = 0.026; lCC vs. subject “cover factor” width R = 0.51, p = 0.0084, height R = −0.43, p = 0.027, area R = 0.43, p = 0.03; lCC error vs. image height R = −0.46, p = 0.019, cover width R = −0.43, p = 0.028, cover area R = −0.44, p = 0.024 | NA |
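Several validation studies in the gait table report agreement with a reference system as Bland–Altman bias with 95% limits of agreement (bias ± 1.96 SD of the paired differences) and as an intraclass correlation coefficient. The sketch below computes both for paired measurements such as gait speed from video and from a marker-based system. It assumes the ICC(2,1) form (two-way random effects, absolute agreement, single measurement, after Shrout and Fleiss); the ICC form used by each study is not given here, so this choice is an assumption, and the example speeds are hypothetical.

```python
import numpy as np


def bland_altman(video, reference) -> dict[str, float]:
    """Mean bias and 95% limits of agreement (bias ± 1.96 SD of differences)."""
    diff = np.asarray(video, dtype=float) - np.asarray(reference, dtype=float)
    bias = diff.mean()
    sd = diff.std(ddof=1)              # sample SD of the paired differences
    return {
        "bias": float(bias),
        "loa_low": float(bias - 1.96 * sd),
        "loa_high": float(bias + 1.96 * sd),
        "mae": float(np.abs(diff).mean()),
    }


def icc_2_1(ratings) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    ratings: array of shape (n_subjects, k_methods), e.g. one column per system.
    """
    y = np.asarray(ratings, dtype=float)
    n, k = y.shape
    grand = y.mean()
    row_means = y.mean(axis=1)         # per-subject means
    col_means = y.mean(axis=0)         # per-method means
    ms_rows = k * np.sum((row_means - grand) ** 2) / (n - 1)
    ms_cols = n * np.sum((col_means - grand) ** 2) / (k - 1)
    residual = y - row_means[:, None] - col_means[None, :] + grand
    ms_err = np.sum(residual ** 2) / ((n - 1) * (k - 1))
    return float((ms_rows - ms_err) /
                 (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n))


# Hypothetical gait speeds (m/s) for five participants from two systems.
video_speed = [0.82, 1.05, 0.91, 1.20, 0.76]
mocap_speed = [0.80, 1.07, 0.90, 1.18, 0.79]
print(bland_altman(video_speed, mocap_speed))
print(icc_2_1(np.column_stack([video_speed, mocap_speed])))
```

Because ICC(2,1) penalises systematic offsets between methods, a video system with a constant bias can show a low ICC even when its values rank participants identically; Bland–Altman bias makes that offset explicit, which is why the two are usually reported together.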

3.6. MDS-UPDRS-III

Symptom Monitoring

3.7. Video Analysis Algorithms for PD Diagnosis: A Categorized Overview

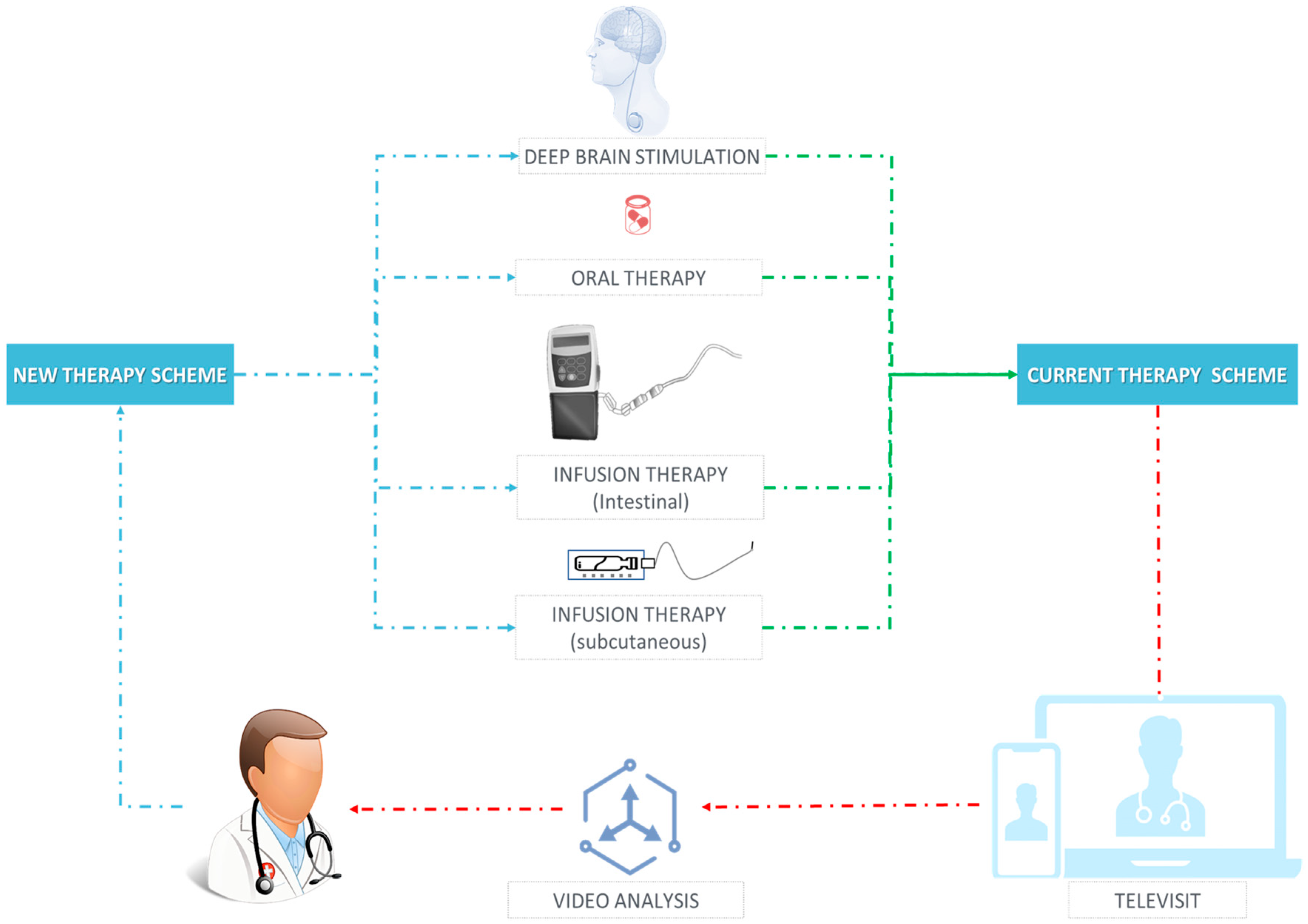

3.8. Therapeutic Management

3.8.1. Motor Fluctuations

3.8.2. LIDs

3.8.3. Therapy Response

3.9. Quality Appraisal

4. Discussion

4.1. Limitations of the Evidence and Research Gaps

4.2. From Bench to Bedside: Choosing CV Tools for Routine Care

4.3. Opportunities and Challenges

4.4. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kalia, L.V.; Lang, A.E. Parkinson’s disease. Lancet 2015, 386, 896–912.

- Postuma, R.B.; Berg, D.; Stern, M.; Poewe, W.; Olanow, C.W.; Oertel, W.; Obeso, J.; Marek, K.; Litvan, I.; Lang, A.E.; et al. MDS clinical diagnostic criteria for Parkinson’s disease. Mov. Disord. 2015, 30, 1591–1601.

- Poewe, W.; Seppi, K.; Tanner, C.M.; Halliday, G.M.; Brundin, P.; Volkmann, J.; Schrag, A.-E.; Lang, A.E. Parkinson disease. Nat. Rev. Dis. Primers 2017, 3, 1–21.

- di Biase, L.; Pecoraro, P.M.; Di Lazzaro, V. Validating the Accuracy of Parkinson’s Disease Clinical Diagnosis: A UK Brain Bank Case–Control Study. Ann. Neurol. 2025, 97, 1110–1121.

- Rizzo, G.; Copetti, M.; Arcuti, S.; Martino, D.; Fontana, A.; Logroscino, G. Accuracy of clinical diagnosis of Parkinson disease: A systematic review and meta-analysis. Neurology 2016, 86, 566–576.

- di Biase, L.; Pecoraro, P.M.; Carbone, S.P.; Alessi, F.; Di Lazzaro, V. Smoking Exposure and Parkinson’s Disease: A UK Brain Bank pathology-validated Case-Control Study. Park. Relat. Disord. 2024, 125, 107022.

- di Biase, L.; Pecoraro, P.M.; Carbone, S.P.; Di Lazzaro, V. The role of uric acid in Parkinson’s disease: A UK brain bank pathology-validated case–control study. Neurol. Sci. 2025, 46, 3117–3126.

- Marsili, L.; Rizzo, G.; Colosimo, C. Diagnostic criteria for Parkinson’s disease: From James Parkinson to the concept of prodromal disease. Front. Neurol. 2018, 9, 156.

- Simuni, T.; Chahine, L.M.; Poston, K.; Brumm, M.; Buracchio, T.; Campbell, M.; Chowdhury, S.; Coffey, C.; Concha-Marambio, L.; Dam, T.; et al. A biological definition of neuronal α-synuclein disease: Towards an integrated staging system for research. Lancet Neurol. 2024, 23, 178–190.

- Höglinger, G.U.; Adler, C.H.; Berg, D.; Klein, C.; Outeiro, T.F.; Poewe, W.; Postuma, R.; Stoessl, A.J.; Lang, A.E. A biological classification of Parkinson’s disease: The SynNeurGe research diagnostic criteria. Lancet Neurol. 2024, 23, 191–204.

- Williams, S.; Wong, D.; Alty, J.E.; Relton, S.D. Parkinsonian hand or clinician’s eye? Finger tap Bradykinesia interrater reliability for 21 movement disorder experts. J. Park. Dis. 2023, 13, 525–536.

- Evers, L.J.; Krijthe, J.H.; Meinders, M.J.; Bloem, B.R.; Heskes, T.M. Measuring Parkinson’s disease over time: The real-world within-subject reliability of the MDS-UPDRS. Mov. Disord. 2019, 34, 1480–1487.

- Bhatia, K.P.; Bain, P.; Bajaj, N.; Elble, R.J.; Hallett, M.; Louis, E.D.; Raethjen, J.; Stamelou, M.; Testa, C.M.; Deuschl, G.; et al. Consensus Statement on the classification of tremors. From the task force on tremor of the International Parkinson and Movement Disorder Society. Mov. Disord. 2018, 33, 75–87.

- Di Biase, L.; Brittain, J.S.; Shah, S.A.; Pedrosa, D.J.; Cagnan, H.; Mathy, A.; Chen, C.C.; Martín-Rodríguez, J.F.; Mir, P.; Timmerman, L.; et al. Tremor stability index: A new tool for differential diagnosis in tremor syndromes. Brain 2017, 140, 1977–1986.

- Di Pino, G.; Formica, D.; Melgari, J.-M.; Taffoni, F.; Salomone, G.; di Biase, L.; Caimo, E.; Vernieri, F.; Guglielmelli, E. Neurophysiological bases of tremors and accelerometric parameters analysis. In Proceedings of the 2012 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 1820–1825.

- Erro, R.; Pilotto, A.; Magistrelli, L.; Olivola, E.; Nicoletti, A.; Di Fonzo, A.; Dallocchio, C.; Di Biasio, F.; Bologna, M.; Tessitore, A.; et al. A Bayesian approach to Essential Tremor plus: A preliminary analysis of the TITAN cohort. Park. Relat. Disord. 2022, 103, 73–76.

- Erro, R.; Lazzeri, G.; Terranova, C.; Paparella, G.; Gigante, A.F.; De Micco, R.; Magistrelli, L.; Di Biasio, F.; Valentino, F.; Moschella, V.; et al. Comparing Essential Tremor with and without Soft Dystonic Signs and Tremor Combined with Dystonia: The TITAN Study. Mov. Disord. Clin. Pract. 2024, 1, 645–654.

- Erro, R.; Pilotto, A.; Esposito, M.; Olivola, E.; Nicoletti, A.; Lazzeri, G.; Magistrelli, L.; Dallocchio, C.; Marchese, R.; Bologna, M.; et al. The Italian tremor Network (TITAN): Rationale, design and preliminary findings. Neurol. Sci. 2022, 43, 5369–5376.

- di Biase, L.; Brittain, J.-S.; Brown, P.; Shah, S.A. Methods and System for Characterising Tremors. WO Patent 2018134579A1, 26 July 2018.

- di Biase, L.; Di Santo, A.; Caminiti, M.L.; Pecoraro, P.M.; Di Lazzaro, V. Classification of dystonia. Life 2022, 12, 206.

- di Biase, L.; Di Santo, A.; Caminiti, M.L.; Pecoraro, P.M.; Carbone, S.P.; Di Lazzaro, V. Dystonia diagnosis: Clinical neurophysiology and genetics. J. Clin. Med. 2022, 11, 4184.

- Espay, A.J.; Schwarzschild, M.A.; Tanner, C.M.; Fernandez, H.H.; Simon, D.K.; Leverenz, J.B.; Merola, A.; Chen-Plotkin, A.; Brundin, P.; Kauffman, M.A.; et al. Biomarker-driven phenotyping in Parkinson’s disease: A translational missing link in disease-modifying clinical trials. Mov. Disord. 2017, 32, 319–324.

- di Biase, L. Clinical Management of Movement Disorders. J. Clin. Med. 2023, 13, 43.

- di Biase, L.; Tinkhauser, G.; Martin Moraud, E.; Caminiti, M.L.; Pecoraro, P.M.; Di Lazzaro, V. Adaptive, personalized closed-loop therapy for Parkinson’s disease: Biochemical, neurophysiological, and wearable sensing systems. Expert Rev. Neurother. 2021, 21, 1371–1388.

- Ciarrocchi, D.; Pecoraro, P.M.; Zompanti, A.; Pennazza, G.; Santonico, M.; di Biase, L. Biochemical Sensors for Personalized Therapy in Parkinson’s Disease: Where We Stand. J. Clin. Med. 2024, 13, 7458.

- Kubota, K.J.; Chen, J.A.; Little, M.A. Machine learning for large-scale wearable sensor data in Parkinson’s disease: Concepts, promises, pitfalls, and futures. Mov. Disord. 2016, 31, 1314–1326.

- Sánchez-Ferro, Á.; Elshehabi, M.; Godinho, C.; Salkovic, D.; Hobert, M.A.; Domingos, J.; van Uem, J.M.T.; Ferreira, J.J.; Maetzler, W. New methods for the assessment of Parkinson’s disease (2005 to 2015): A systematic review. Mov. Disord. 2016, 31, 1283–1292.

- di Biase, L.; Pecoraro, P.M.; Pecoraro, G.; Shah, S.A.; Di Lazzaro, V. Machine learning and wearable sensors for automated Parkinson’s disease diagnosis aid: A systematic review. J. Neurol. 2024, 271, 6452–6470.

- Deuschl, G.; Krack, P.; Lauk, M.; Timmer, J. Clinical neurophysiology of tremor. J. Clin. Neurophysiol. 1996, 13, 110–121.

- Stamatakis, J.; Ambroise, J.; Crémers, J.; Sharei, H.; Delvaux, V.; Macq, B.; Garraux, G. Finger tapping clinimetric score prediction in Parkinson’s disease using low-cost accelerometers. Comput. Intell. Neurosci. 2013, 2013, 717853.

- Endo, T.; Okuno, R.; Yokoe, M.; Akazawa, K.; Sakoda, S. A novel method for systematic analysis of rigidity in Parkinson’s disease. Mov. Disord. Off. J. Mov. Disord. Soc. 2009, 24, 2218–2224.

- Kwon, Y.; Park, S.-H.; Kim, J.-W.; Ho, Y.; Jeon, H.-M.; Bang, M.-J.; Koh, S.-B.; Kim, J.-H.; Eom, G.-M. Quantitative evaluation of parkinsonian rigidity during intra-operative deep brain stimulation. Bio-Med. Mater. Eng. 2014, 24, 2273–2281.

- Raiano, L.; di Pino, G.; di Biase, L.; Tombini, M.; Tagliamonte, N.L.; Formica, D. PDMeter: A wrist wearable device for an at-home assessment of the Parkinson’s disease rigidity. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1325–1333.

- Schlachetzki, J.C.M.; Barth, J.; Marxreiter, F.; Gossler, J.; Kohl, Z.; Reinfelder, S.; Gassner, H.; Aminian, K.; Eskofier, B.M.; Winkler, J.; et al. Wearable sensors objectively measure gait parameters in Parkinson’s disease. PLoS ONE 2017, 12, e0183989.

- Suppa, A.; Kita, A.; Leodori, G.; Zampogna, A.; Nicolini, E.; Lorenzi, P.; Rao, R.; Irrera, F. L-DOPA and freezing of gait in Parkinson’s disease: Objective assessment through a wearable wireless system. Front. Neurol. 2017, 8, 406.

- Tosi, J.; Summa, S.; Taffoni, F.; di Biase, L.; Marano, M.; Rizzo, A.C.; Tombini, M.; Schena, E.; Formica, D.; Di Pino, G. Feature Extraction in Sit-to-Stand Task Using M-IMU Sensors and Evaluatiton in Parkinson’s Disease. In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–6.

- di Biase, L.; Di Santo, A.; Caminiti, M.L.; De Liso, A.; Shah, S.A.; Ricci, L.; Di Lazzaro, V. Gait analysis in Parkinson’s disease: An overview of the most accurate markers for diagnosis and symptoms monitoring. Sensors 2020, 20, 3529.

- di Biase, L.; Raiano, L.; Caminiti, M.L.; Pecoraro, P.M.; Di Lazzaro, V. Parkinson’s Disease Wearable Gait Analysis: Kinematic and Dynamic Markers for Diagnosis. Sensors 2022, 22, 8773.

- di Biase, L.; Ricci, L.; Caminiti, M.L.; Pecoraro, P.M.; Carbone, S.P.; Di Lazzaro, V. Quantitative High Density EEG Brain Connectivity Evaluation in Parkinson’s Disease: The Phase Locking Value (PLV). J. Clin. Med. 2023, 12, 1450.

- di Biase, L.; Pecoraro, P.M.; Pecoraro, G.; Caminiti, M.L.; Di Lazzaro, V. Markerless radio frequency indoor monitoring for telemedicine: Gait analysis, indoor positioning, fall detection, tremor analysis, vital signs and sleep monitoring. Sensors 2022, 22, 8486.

- Little, S.; Pogosyan, A.; Neal, S.; Zavala, B.; Zrinzo, L.; Hariz, M.; Foltynie, T.; Limousin, P.; Ashkan, K.; FitzGerald, J.; et al. Adaptive deep brain stimulation in advanced Parkinson disease. Ann. Neurol. 2013, 74, 449–457.

- Di Biase, L.; Falato, E.; Di Lazzaro, V. Transcranial focused ultrasound (tFUS) and transcranial unfocused ultrasound (tUS) neuromodulation: From theoretical principles to stimulation practices. Front. Neurol. 2019, 10, 549.

- di Biase, L.; Falato, E.; Caminiti, M.L.; Pecoraro, P.M.; Narducci, F.; Di Lazzaro, V. Focused ultrasound (FUS) for chronic pain management: Approved and potential applications. Neurol. Res. Int. 2021, 2021, 8438498.

- di Biase, L.; Munhoz, R.P. Deep brain stimulation for the treatment of hyperkinetic movement disorders. Expert Rev. Neurother. 2016, 16, 1067–1078.

- di Biase, L.; Piano, C.; Bove, F.; Ricci, L.; Caminiti, M.L.; Stefani, A.; Viselli, F.; Modugno, N.; Cerroni, R.; Calabresi, P.; et al. Intraoperative local field potential beta power and three-dimensional neuroimaging mapping predict long-term clinical response to deep brain stimulation in Parkinson disease: A retrospective study. Neuromodulation Technol. Neural Interface 2023, 26, 1724–1732.

- di Biase, L.; Fasano, A. Low-frequency deep brain stimulation for Parkinson’s disease: Great expectation or false hope? Mov. Disord. 2016, 31, 962–967.

- Sandoe, C.; Krishna, V.; Basha, D.; Sammartino, F.; Tatsch, J.; Picillo, M.; di Biase, L.; Poon, Y.-Y.; Hamani, C.; Reddy, D.; et al. Predictors of deep brain stimulation outcome in tremor patients. Brain Stimul. 2018, 11, 592–599.

- d’Angelis, O.; Di Biase, L.; Vollero, L.; Merone, M. IoT architecture for continuous long term monitoring: Parkinson’s Disease case study. Internet Things 2022, 20, 100614.

- di Biase, L.; Raiano, L.; Caminiti, M.L.; Pecoraro, P.M.; Di Lazzaro, V. Artificial intelligence in Parkinson’s disease—Symptoms identification and monitoring. In Augmenting Neurological Disorder Prediction and Rehabilitation Using Artificial Intelligence; Elsevier: Amsterdam, The Netherlands, 2022; pp. 35–52.

- di Biase, L.; Bonura, A.; Pecoraro, P.M.; Caminiti, M.L.; Di Lazzaro, V. Artificial Intelligence in Stroke Imaging. In Machine Learning and Deep Learning in Neuroimaging Data Analysis; CRC Press: Boca Raton, FL, USA, 2024; pp. 25–42.

- di Biase, L.; Bonura, A.; Caminiti, M.L.; Pecoraro, P.M.; Di Lazzaro, V. Neurophysiology tools to lower the stroke onset to treatment time during the golden hour: Microwaves, bioelectrical impedance and near infrared spectroscopy. Ann. Med. 2022, 54, 2646–2659.

- di Biase, L.; Bonura, A.; Pecoraro, P.M.; Carbone, S.P.; Di Lazzaro, V. Unlocking the Potential of Stroke Blood Biomarkers: Early Diagnosis, Ischemic vs. Haemorrhagic Differentiation and Haemorrhagic Transformation Risk: A Comprehensive Review. Int. J. Mol. Sci. 2023, 24, 11545.

- Di Biase, L. Method and Device for the Objective Characterization of Symptoms of Parkinson’s Disease. US Patent App. 18/025,069, 14 September 2023.

- di Biase, L.; Pecoraro, P.M.; Carbone, S.P.; Caminiti, M.L.; Di Lazzaro, V. Levodopa-Induced Dyskinesias in Parkinson’s Disease: An Overview on Pathophysiology, Clinical Manifestations, Therapy Management Strategies and Future Directions. J. Clin. Med. 2023, 12, 4427.

- Di Biase, L. Method for the management of oral therapy in parkinson’s disease. U.S. Patent Application 18/025,092, 28 September 2023.

- Pfister, F.M.J.; Um, T.T.; Pichler, D.C.; Goschenhofer, J.; Abedinpour, K.; Lang, M.; Endo, S.; Ceballos-Baumann, A.O.; Hirche, S.; Bischl, B.; et al. High-resolution motor state detection in Parkinson’s disease using convolutional neural networks. Sci. Rep. 2020, 10, 5860.

- Espay, A.J.; Bonato, P.; Nahab, F.B.; Maetzler, W.; Dean, J.M.; Klucken, J.; Eskofier, B.M.; Merola, A.; Horak, F.; Lang, A.E.; et al. Technology in Parkinson’s disease: Challenges and opportunities. Mov. Disord. 2016, 31, 1272–1282.

- Pecoraro, P.M.; Marsili, L.; Espay, A.J.; Bologna, M.; di Biase, L. Computer Vision Technologies in Movement Disorders: A Systematic Review. Mov. Disord. Clin. Pract. 2025, 12, 1229–1243.

- Lin, T.; Maire, M.; Belongie, S.; Hays, J.; Zitnick, C. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D human pose estimation: New benchmark and state of the art analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693.

- Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339.

- Joo, H.; Liu, H.; Tan, L.; Gui, L.; Nabbe, B.; Matthews, I.; Kanade, T.; Nobuhara, S.; Sheikh, Y. Panoptic Studio: A massively multiview system for social motion capture. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3334–3342.

- Wu, J.; Zheng, H.; Zhao, B.; Li, Y.; Yan, B.; Liang, R.; Wang, W.; Zhou, S.; Lin, G.; Fu, Y.; et al. AI Challenger: A large-scale dataset for going deeper in image understanding. arXiv 2017, arXiv:1711.06475.

- Li, J.; Wang, C.; Zhu, H.; Mao, Y.; Fang, H.-S.; Lu, C. CrowdPose: Efficient crowded scenes pose estimation and a new benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10863–10872.

- Andriluka, M.; Iqbal, U.; Insafutdinov, E.; Pishchulin, L.; Milan, A.; Gall, J.; Schiele, B. PoseTrack: A benchmark for human pose estimation and tracking. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 5167–5176.

- Güler, R.A.; Neverova, N.; Kokkinos, I. DensePose: Dense human pose estimation in the wild. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7297–7306.

- Jin, S.; Xu, L.; Xu, J.; Wang, C.; Liu, W.; Qian, C.; Ouyang, W.; Luo, P. Whole-Body Human Pose Estimation in the Wild; Springer: Berlin/Heidelberg, Germany, 2020; pp. 196–214.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

- Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A convolutional network for real-time 6-DOF camera relocalization. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 2938–2946.

- Fang, H.-S.; Xie, S.; Tai, Y.-W.; Lu, C. RMPE: Regional multi-person pose estimation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2334–2343.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5693–5703.

- Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.-L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172.

- Bazarevsky, V.; Grishchenko, I.; Raveendran, K.; Zhu, T.; Zhang, F.; Grundmann, M. BlazePose: On-device real-time body pose tracking. arXiv 2020, arXiv:2006.10204.

- Xu, H.; Bazavan, E.G.; Zanfir, A.; Freeman, W.T.; Sukthankar, R.; Sminchisescu, C. GHUM & GHUML: Generative 3D human shape and articulated pose models. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6184–6193.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 2018, 21, 1281–1289.

- Groos, D.; Ramampiaro, H.; Ihlen, E.A. EfficientPose: Scalable single-person pose estimation. Appl. Intell. 2021, 51, 2518–2533.

- TensorFlow Hub. MoveNet: Ultra Fast and Accurate Pose Detection Model. TensorFlow Hub, 2024. Available online: https://www.tensorflow.org/hub/tutorials/movenet (accessed on 1 August 2025).

- Lam, W.W.; Tang, Y.M.; Fong, K.N. A systematic review of the applications of markerless motion capture (MMC) technology for clinical measurement in rehabilitation. J. Neuroeng. Rehabil. 2023, 20, 57. [Google Scholar] [CrossRef]

- Sharma, V.; Gupta, M.; Kumar, A.; Mishra, D. Video processing using deep learning techniques: A systematic literature review. IEEE Access 2021, 9, 139489–139507. [Google Scholar] [CrossRef]

- An, S.; Li, Y.; Ogras, U. mri: Multi-modal 3d human pose estimation dataset using mmwave, rgb-d, and inertial sensors. Adv. Neural Inf. Process. Syst. 2022, 35, 27414–27426. [Google Scholar]

- Stenum, J.; Rossi, C.; Roemmich, R.T. Two-dimensional video-based analysis of human gait using pose estimation. PLoS Comput. Biol. 2021, 17, e1008935.

- Ceriola, L.; Taborri, J.; Donati, M.; Rossi, S.; Patanè, F.; Mileti, I. Comparative analysis of markerless motion capture systems for measuring human kinematics. IEEE Sens. J. 2024, 24, 28135–28144.

- Tang, W.; van Ooijen, P.M.; Sival, D.A.; Maurits, N.M. Automatic two-dimensional & three-dimensional video analysis with deep learning for movement disorders: A systematic review. Artif. Intell. Med. 2024, 156, 102952.

- Friedrich, M.U.; Relton, S.; Wong, D.; Alty, J. Computer Vision in Clinical Neurology: A Review. JAMA Neurol. 2025, 8, 407–415.

- Yang, J.; Williams, S.; Hogg, D.C.; Alty, J.E.; Relton, S.D. Deep learning of Parkinson’s movement from video, without human-defined measures. J. Neurol. Sci. 2024, 463, 123089.

- Guo, Z.; Zeng, W.; Yu, T.; Xu, Y.; Xiao, Y.; Cao, X.; Cao, Z. Vision-based finger tapping test in patients with Parkinson’s disease via spatial-temporal 3D hand pose estimation. IEEE J. Biomed. Health Inform. 2022, 26, 3848–3859.

- Khan, T.; Nyholm, D.; Westin, J.; Dougherty, M. A computer vision framework for finger-tapping evaluation in Parkinson’s disease. Artif. Intell. Med. 2014, 60, 27–40.

- Heye, K.; Li, R.; Bai, Q.; George, R.J.S.; Rudd, K.; Huang, G.; Meinders, M.J.; Bloem, B.R.; Alty, J.E. Validation of computer vision technology for analyzing bradykinesia in outpatient clinic videos of people with Parkinson’s disease. J. Neurol. Sci. 2024, 466, 123271.

- Guarín, D.L.; Wong, J.K.; McFarland, N.R.; Ramirez-Zamora, A. Characterizing disease progression in Parkinson’s disease from videos of the finger tapping test. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2293–2301.

- Williams, S.; Relton, S.D.; Fang, H.; Alty, J.; Qahwaji, R.; Graham, C.D.; Wong, D.C. Supervised classification of bradykinesia in Parkinson’s disease from smartphone videos. Artif. Intell. Med. 2020, 110, 101966.

- Eguchi, K.; Yaguchi, H.; Uwatoko, H.; Iida, Y.; Hamada, S.; Honma, S.; Takei, A.; Moriwaka, F.; Yabe, I. Feasibility of differentiating gait in Parkinson’s disease and spinocerebellar degeneration using a pose estimation algorithm in two-dimensional video. J. Neurol. Sci. 2024, 464, 123158.

- Guarín, D.L.; Wong, J.K.; McFarland, N.R.; Ramirez-Zamora, A.; Vaillancourt, D.E. What the trained eye cannot see: Quantitative kinematics and machine learning detect movement deficits in early-stage Parkinson’s disease from videos. Park. Relat. Disord. 2024, 127, 107104.

- Williams, S.; Fang, H.; Relton, S.D.; Graham, C.D.; Alty, J.E. Seeing the unseen: Could Eulerian video magnification aid clinician detection of subclinical Parkinson’s tremor? J. Clin. Neurosci. 2020, 81, 101–104.

- Liu, W.; Lin, X.; Chen, X.; Wang, Q.; Wang, X.; Yang, B.; Cai, N.; Chen, R.; Chen, G.; Lin, Y. Vision-based estimation of MDS-UPDRS scores for quantifying Parkinson’s disease tremor severity. Med. Image Anal. 2023, 85, 102754.

- Williams, S.; Fang, H.; Relton, S.D.; Wong, D.C.; Alam, T.; Alty, J.E. Accuracy of smartphone video for contactless measurement of hand tremor frequency. Mov. Disord. Clin. Pract. 2021, 8, 69–75.

- Di Biase, L.; Summa, S.; Tosi, J.; Taffoni, F.; Marano, M.; Cascio Rizzo, A.; Vecchio, F.; Formica, D.; Di Lazzaro, V.; Di Pino, G.; et al. Quantitative analysis of bradykinesia and rigidity in Parkinson’s disease. Front. Neurol. 2018, 9, 121.

- Jin, B.; Qu, Y.; Zhang, L.; Gao, Z. Diagnosing Parkinson disease through facial expression recognition: Video analysis. J. Med. Internet Res. 2020, 22, e18697.

- Abrami, A.; Gunzler, S.; Kilbane, C.; Ostrand, R.; Ho, B.; Cecchi, G. Automated computer vision assessment of hypomimia in Parkinson disease: Proof-of-principle pilot study. J. Med. Internet Res. 2021, 23, e21037.

- Liu, P.; Yu, N.; Yang, Y.; Yu, Y.; Sun, X.; Yu, H.; Han, J.; Wu, J. Quantitative assessment of gait characteristics in patients with Parkinson’s disease using 2D video. Park. Relat. Disord. 2022, 101, 49–56.

- Portilla, J.; Rangel, E.; Guayacán, L.; Martínez, F. A Volumetric Deep Architecture to Discriminate Parkinsonian Patterns from Intermediate Pose Representations. Int. J. Psychol. Res. 2024, 17, 84–90.

- De Lim, M.; Connie, T.; Goh, M.K.O.; Saedon, N.I. Model-Based Feature Extraction and Classification for Parkinson Disease Screening Using Gait Analysis: Development and Validation Study. JMIR Aging 2025, 8, e65629.

- Kim, J.; Kim, R.; Byun, K.; Kang, N.; Park, K. Assessment of temporospatial and kinematic gait parameters using human pose estimation in patients with Parkinson’s disease: A comparison between near-frontal and lateral views. PLoS ONE 2025, 20, e0317933.

- Cao, X.; Xue, Y.; Chen, J.; Chen, X.; Ma, Y.; Hu, C.; Ma, H.; Ma, H. Video based shuffling step detection for parkinsonian patients using 3D convolution. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 641–649.

- Lu, M.; Poston, K.; Pfefferbaum, A.; Sullivan, E.V.; Fei-Fei, L.; Pohl, K.M.; Niebles, J.C.; Adeli, E. Vision-based estimation of MDS-UPDRS gait scores for assessing Parkinson’s disease motor severity. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; pp. 637–647.

- Sato, K.; Nagashima, Y.; Mano, T.; Iwata, A.; Toda, T. Quantifying normal and parkinsonian gait features from home movies: Practical application of a deep learning–based 2D pose estimator. PLoS ONE 2019, 14, e0223549.

- Chavez, J.M.; Tang, W. A vision-based system for stage classification of parkinsonian gait using machine learning and synthetic data. Sensors 2022, 22, 4463.

- Ripic, Z.; Signorile, J.F.; Best, T.M.; Jacobs, K.A.; Nienhuis, M.; Whitelaw, C.; Moenning, C.; Eltoukhy, M. Validity of artificial intelligence-based markerless motion capture system for clinical gait analysis: Spatiotemporal results in healthy adults and adults with Parkinson’s disease. J. Biomech. 2023, 155, 111645.

- Simonet, A.; Fourcade, P.; Loete, F.; Delafontaine, A.; Yiou, E. Evaluation of the margin of stability during gait initiation in young healthy adults, elderly healthy adults and patients with Parkinson’s disease: A comparison of force plate and markerless motion capture systems. Sensors 2024, 24, 3322.

- Archila, J.; Manzanera, A.; Martínez, F. A multimodal Parkinson quantification by fusing eye and gait motion patterns, using covariance descriptors, from non-invasive computer vision. Comput. Methods Programs Biomed. 2022, 215, 106607.

- Kondo, Y.; Bando, K.; Suzuki, I.; Miyazaki, Y.; Nishida, D.; Hara, T.; Kadone, H.; Suzuki, K. Video-based detection of freezing of gait in daily clinical practice in patients with parkinsonism. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2250–2260.

- Rupprechter, S.; Morinan, G.; Peng, Y.; Foltynie, T.; Sibley, K.; Weil, R.S.; Leyland, L.-A.; Baig, F.; Morgante, F.; Gilron, R.; et al. A clinically interpretable computer-vision based method for quantifying gait in Parkinson’s disease. Sensors 2021, 21, 5437.

- Khan, T.; Zeeshan, A.; Dougherty, M. A novel method for automatic classification of Parkinson gait severity using front-view video analysis. Technol. Health Care 2021, 29, 643–653.

- Li, T.; Chen, J.; Hu, C.; Ma, Y.; Wu, Z.; Wan, W.; Huang, Y.; Jia, F.; Gong, C.; Wan, S.; et al. Automatic timed up-and-go sub-task segmentation for Parkinson’s disease patients using video-based activity classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2189–2199.

- Sabo, A.; Gorodetsky, C.; Fasano, A.; Iaboni, A.; Taati, B. Concurrent validity of Zeno instrumented walkway and video-based gait features in adults with Parkinson’s disease. IEEE J. Transl. Eng. Health Med. 2022, 10, 1–11.

- Aldegheri, S.; Artusi, C.A.; Camozzi, S.; Di Marco, R.; Geroin, C.; Imbalzano, G.; Lopiano, L.; Tinazzi, M.; Bombieri, N. Camera- and viewpoint-agnostic evaluation of axial postural abnormalities in people with Parkinson’s disease through augmented human pose estimation. Sensors 2023, 23, 3193.

- Ma, L.-Y.; Shi, W.-K.; Chen, C.; Wang, Z.; Wang, X.-M.; Jin, J.-N.; Chen, L.; Ren, K.; Chen, Z.-L.; Ling, Y.; et al. Remote scoring models of rigidity and postural stability of Parkinson’s disease based on indirect motions and a low-cost RGB algorithm. Front. Aging Neurosci. 2023, 15, 1034376.

- Shin, J.H.; Woo, K.A.; Lee, C.Y.; Jeon, S.H.; Kim, H.-J.; Jeon, B. Automatic measurement of postural abnormalities with a pose estimation algorithm in Parkinson’s disease. J. Mov. Disord. 2022, 15, 140.

- He, R.; You, Z.; Zhou, Y.; Chen, G.; Diao, Y.; Jiang, X.; Ning, Y.; Zhao, G.; Liu, Y. A novel multi-level 3D pose estimation framework for gait detection of Parkinson’s disease using monocular video. Front. Bioeng. Biotechnol. 2024, 12, 1520831.

- Morinan, G.; Dushin, Y.; Sarapata, G.; Rupprechter, S.; Peng, Y.; Girges, C.; Salazar, M.; Milabo, C.; Sibley, K.; Foltynie, T.; et al. Computer vision quantification of whole-body Parkinsonian bradykinesia using a large multi-site population. npj Park. Dis. 2023, 9, 10.

- Park, K.W.; Lee, E.-J.; Lee, J.S.; Jeong, J.; Choi, N.; Jo, S.; Jung, M.; Do, J.Y.; Kang, D.-W.; Lee, J.-G.; et al. Machine learning–based automatic rating for cardinal symptoms of Parkinson disease. Neurology 2021, 96, e1761–e1769.

- Sarapata, G.; Dushin, Y.; Morinan, G.; Ong, J.; Budhdeo, S.; Kainz, B.; O’Keeffe, J. Video-based activity recognition for automated motor assessment of Parkinson’s disease. IEEE J. Biomed. Health Inform. 2023, 27, 5032–5041.

- Mifsud, J.; Embry, K.R.; Macaluso, R.; Lonini, L.; Cotton, R.J.; Simuni, T.; Jayaraman, A. Detecting the symptoms of Parkinson’s disease with non-standard video. J. Neuroeng. Rehabil. 2024, 21, 72.

- Liu, Y.; Chen, J.; Hu, C.; Ma, Y.; Ge, D.; Miao, S.; Xue, Y.; Li, L. Vision-based method for automatic quantification of parkinsonian bradykinesia. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1952–1961.

- Xu, J.; Xu, X.; Guo, X.; Li, Z.; Dong, B.; Qi, C.; Yang, C.; Zhou, D.; Wang, J.; Song, L.; et al. Improving reliability of movement assessment in Parkinson’s disease using computer vision-based automated severity estimation. J. Park. Dis. 2025, 15, 349–360.

- Chen, S.W.; Lin, S.H.; Liao, L.D.; Lai, H.-Y.; Pei, Y.-C.; Kuo, T.-S.; Lin, C.-T.; Chang, J.-Y.; Chen, Y.-Y.; Lo, Y.-C.; et al. Quantification and recognition of parkinsonian gait from monocular video imaging using kernel-based principal component analysis. Biomed. Eng. Online 2011, 10, 99.

- Morgan, C.; Masullo, A.; Mirmehdi, M.; Isotalus, H.K.; Jovan, F.; McConville, R.; Tonkin, E.L.; Whone, A.; Craddock, I. Automated real-world video analysis of sit-to-stand transitions predicts Parkinson’s disease severity. Digit. Biomark. 2023, 7, 92–103.

- Li, M.H.; Mestre, T.A.; Fox, S.H.; Taati, B. Automated assessment of levodopa-induced dyskinesia: Evaluating the responsiveness of video-based features. Park. Relat. Disord. 2018, 53, 42–45.

- Li, M.H.; Mestre, T.A.; Fox, S.H.; Taati, B. Vision-based assessment of parkinsonism and levodopa-induced dyskinesia with pose estimation. J. Neuroeng. Rehabil. 2018, 15, 97.

- Sabo, A.; Iaboni, A.; Taati, B.; Fasano, A.; Gorodetsky, C. Evaluating the ability of a predictive vision-based machine learning model to measure changes in gait in response to medication and DBS within individuals with Parkinson’s disease. Biomed. Eng. Online 2023, 22, 120.

- Shin, J.H.; Yu, R.; Ong, J.N.; Lee, C.Y.; Jeon, S.H.; Park, H.; Kim, H.-J.; Lee, J.; Jeon, B. Quantitative gait analysis using a pose-estimation algorithm with a single 2D-video of Parkinson’s disease patients. J. Park. Dis. 2021, 11, 1271–1283.

- Deng, D.; Ostrem, J.L.; Nguyen, V.; Cummins, D.D.; Sun, J.; Pathak, A.; Little, S.; Abbasi-Asl, R. Interpretable video-based tracking and quantification of parkinsonism clinical motor states. npj Park. Dis. 2024, 10, 122.

- Güney, G.; Jansen, T.S.; Dill, S.; Schulz, J.B.; Dafotakis, M.; Antink, C.H.; Braczynski, A.K. Video-based hand movement analysis of Parkinson patients before and after medication using high-frame-rate videos and MediaPipe. Sensors 2022, 22, 7992.

- Jansen, T.S.; Güney, G.; Ganse, B.; Monje, M.H.G.; Schulz, J.B.; Dafotakis, M.; Antink, C.H.; Braczynski, A.K. Video-based analysis of the blink reflex in Parkinson’s disease patients. Biomed. Eng. Online 2024, 23, 43.

- Baker, S.; Tekriwal, A.; Felsen, G.; Christensen, E.; Hirt, L.; Ojemann, S.G.; Kramer, D.R.; Kern, D.S.; Thompson, J.A. Automatic extraction of upper-limb kinematic activity using deep learning-based markerless tracking during deep brain stimulation implantation for Parkinson’s disease: A proof of concept study. PLoS ONE 2022, 17, e0275490.

- Espay, A.J. Your After-Visit Summary—May 29, 2042. Lancet Neurol. 2022, 21, 412–413.

- Tosin, M.H.; Sanchez-Ferro, A.; Wu, R.M.; de Oliveira, B.G.; Leite, M.A.A.; Suárez, P.R.; Goetz, C.G.; Martinez-Martin, P.; Stebbins, G.T.; Mestre, T.A. In-Home Remote Assessment of the MDS-UPDRS Part III: Multi-Cultural Development and Validation of a Guide for Patients. Mov. Disord. Clin. Pract. 2024, 11, 1576–1581.

- Jha, A.; Espay, A.J.; Lees, A.J. Digital biomarkers in Parkinson’s disease: Missing the forest for the trees? Mov. Disord. Clin. Pract. 2023, 10 (Suppl. S2), S68.

| Dataset | Year | Size (Images/Frames) | Persons/Poses | Keypoints | 2D/3D | Notes |
|---|---|---|---|---|---|---|
| COCO Keypoints | 2014 | 200k+ images | 250k persons | 17 | 2D | Most widely used benchmark |
| MPII Human Pose | 2014 | 25k images | 40k persons | 14–16 | 2D | Rich activity diversity |
| Human3.6M | 2014 | 3.6M frames | 11 subjects | 32 (3D joints) | 3D | Motion-capture accuracy |
| CMU Panoptic Studio | 2015 | Multi-view recordings (65+ HD cameras) | Thousands | Full body, hands, face, feet | 2D and 3D | Enabled OpenPose full-body estimation |
| AI Challenger | 2017 | 300k images | 700k persons | 14 | 2D | Large scale |
| CrowdPose | 2018 | 20k images | 80k persons | 14 | 2D | Focus on occlusions |
| PoseTrack | 2018 | 23k frames (video) | 150k poses | 15–17 | 2D | Tracking across time |
| COCO DensePose | 2018 | Subset of COCO | 50k persons | Dense surface | 2D→3D | Dense mapping |
| COCO WholeBody | 2020 | - | - | 133 (17 body, 6 feet, 68 face, 42 hands) | 2D | Whole-body keypoint annotations; extension of COCO Keypoints |
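
The COCO WholeBody row splits its 133 keypoints into 17 body, 6 feet, 68 face, and 42 hand points (21 per hand). The minimal Python sketch below checks that these counts add up and derives contiguous index ranges per group, a step commonly needed before computing region-specific features (for example, hand-only features for finger tapping). The storage order used here (body, feet, face, left hand, right hand) and the group names are assumptions for illustration; confirm the order against the annotation files or toolkit actually in use.

```python
# Keypoint group sizes for COCO WholeBody, taken from the dataset table.
# ASSUMPTION: groups are stored contiguously in this order; the group names
# are illustrative labels, not identifiers from the official annotation files.
WHOLEBODY_GROUPS = [
    ("body", 17),
    ("feet", 6),
    ("face", 68),
    ("left_hand", 21),
    ("right_hand", 21),
]


def group_index_ranges(groups):
    """Return {group_name: range(start, stop)} for contiguously stored keypoints."""
    ranges = {}
    start = 0
    for name, size in groups:
        ranges[name] = range(start, start + size)
        start += size
    return ranges


ranges = group_index_ranges(WHOLEBODY_GROUPS)
total = sum(size for _, size in WHOLEBODY_GROUPS)
assert total == 133  # consistent with the 133 keypoints listed in the table

for name, r in ranges.items():
    print(f"{name:>10}: indices {r.start}-{r.stop - 1} ({len(r)} points)")
```

Slicing a per-frame keypoint array with `ranges["left_hand"]`, for instance, would then isolate one hand, provided the ordering assumption holds.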

| Framework | Year | Origin/Developer | Main Training Dataset(s) | Body Landmarks (B) | Hand Landmarks (H) | Face Landmarks (F) | 2D/3D | Notes |
|---|---|---|---|---|---|---|---|---|
| OpenPose | 2017 | CMU (Pittsburgh, PA, USA) | COCO, MPII, CMU Panoptic | 15, 18, 25 | 21 | 70 | 2D (3D with multi-view) | First real-time multi-person framework |
| PoseNet | 2017 | Google AI (Mountain View, CA, USA) | COCO | 17 | - | - | 2D | Real-time in-browser (TensorFlow.js); single-person |
| AlphaPose | 2017 | SenseTime (1900 Hongmei Road, Xuhui District, Shanghai, China) | COCO, MPII | 17 | - | - | 2D | Top-down, high-accuracy multi-person |
| HRNet | 2019 | Microsoft (Redmond, WA, USA) | COCO, MPII | 17 | - | - | 2D | Maintains high-resolution features |
| MediaPipe/BlazePose | 2019–2020 | Google (Mountain View, CA, USA) | COCO + internal + GHUM | 33 | 21 | 478 | 3D (x, y, z) | Real-time, lightweight, GHUM-based |
| YOLO-Pose | 2022 | Ultralytics (Frederick, MD, USA) | COCO Keypoints | 17 | - | - | 2D (3D experimental) | Extension of YOLO detectors |
| DeepLabCut | 2018 | Mathis Lab (Swiss Federal Institute of Technology, Lausanne (EPFL), Switzerland) | User-defined | Flexible (user-defined) | Flexible (user-defined) | Flexible (user-defined) | 2D and 3D | Customizable, used in neuroscience |
| EfficientPose | 2020 | Megvii (27 Jiancheng Middle Road, Haidian District, Beijing, China) | COCO | 17 | - | - | 2D | Efficiency-focused |
| MoveNet | 2021 | Google AI (1600 Amphitheatre Pkwy, Mountain View, CA, USA) | COCO | 17 | - | - | 2D | Lightning (low-latency) and Thunder (higher-accuracy) variants |
| DensePose | 2018 | Facebook AI (1 Hacker Way, Menlo Park, CA, USA) | COCO DensePose | Dense surface mapping | Dense surface mapping | Dense surface mapping | 2D → 3D * | Pixel-to-surface mapping |

| Aim | Ref. | Patients | Controls | Device | Pose Estimation | Performance | Technology Comparison |
|---|---|---|---|---|---|---|---|
| Diagnosis | [98] | 33 | 31 | Canon 700D on tripod (Canon Inc, Tokyo, Japan) | Face++ API | SVM: F1 0.99, precision 0.99, recall 0.99; LR/RF: F1 0.98; DT: F1 0.93; LSTM: precision 0.86, recall 0.66, F1 0.75 | NA |
| | [99] | | Training: 1595 control subjects (YouTube Faces DB, 3425 videos). Test set: 27 controls (54 total) | Microsoft Kinect camera at 30 fps (Microsoft Corporation, Redmond, WA, USA) | Deep convolutional neural network (VGG-style) | | NA |
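
Because F1 is the harmonic mean of precision (P) and recall (R), F1 = 2PR/(P + R), the values reported for [98] can be checked for internal consistency. The short Python sketch below recomputes F1 from the tabulated SVM and LSTM precision and recall; it only checks the rounded published figures against each other and does not reproduce the study's analysis. The 0.01 tolerance is an assumption chosen to absorb two-decimal rounding.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values as reported in the table for [98] (rounded to two decimals).
reported = {
    "SVM": {"precision": 0.99, "recall": 0.99, "f1": 0.99},
    "LSTM": {"precision": 0.86, "recall": 0.66, "f1": 0.75},
}

checks = {}
for model, m in reported.items():
    recomputed = f1_score(m["precision"], m["recall"])
    # Tolerance absorbs rounding of the published two-decimal values.
    checks[model] = abs(recomputed - m["f1"]) < 0.01
    print(f"{model}: recomputed F1 = {recomputed:.3f}, reported {m['f1']}, consistent={checks[model]}")
```

For the LSTM, 2 × 0.86 × 0.66 / 1.52 ≈ 0.747, which rounds to the reported 0.75. The large gap between its precision and recall pulls F1 below their arithmetic mean (0.76).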

| Aim | Ref. | Patients | Controls | Device | Pose Estimation | Performance | Technology Comparison |
|---|---|---|---|---|---|---|---|
| Symptom monitoring | [123] | 28 | - | Handheld smartphone videos (30 fps) | PIXIE | | NA |
| | [122] | 7310 videos from 1170 PD patients | - | Consumer mobile devices via KELVIN™ platform (Machine Medicine Technologies, London, UK) | OpenPose | Accuracy 96.51% | NA |
| | [124] | 60 | - | Single RGB camera (25 fps) | MobileNetV2 + DUC + DSNT backbone | Overall 5-class accuracy 89.7% | NA |
| | [125] | 128 | - | Android tablet (1080p, 30 fps; Google, Mountain View, CA, USA) | YOLO | Accuracy 69.6% (item range: gait 78.1%, face 60.9%); accuracy 98.8%; MAE 0.32 vs. clinician consensus (lower than the inter-rater MAE of 0.65) | NA |
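
Study [125] summarizes agreement as mean absolute error (MAE) against clinician consensus and compares it with the MAE between human raters. The Python sketch below shows how both quantities are computed for ordinal MDS-UPDRS item scores (0–4). All score arrays are hypothetical placeholders, not data from any reviewed study, and the consensus is simply given as an adjudicated list (an assumption; studies may derive consensus differently).

```python
def mae(a, b):
    """Mean absolute error between two equal-length, non-empty score sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("score sequences must be non-empty and of equal length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# HYPOTHETICAL MDS-UPDRS item scores (0-4), for illustration only.
consensus = [1, 1, 2, 2, 3, 1]  # adjudicated clinician consensus
model = [1, 2, 2, 2, 3, 0]      # automated ratings
rater_1 = [0, 1, 2, 3, 3, 1]    # individual clinician ratings
rater_2 = [1, 1, 1, 2, 4, 0]

model_mae = mae(model, consensus)
inter_rater_mae = mae(rater_1, rater_2)
print(f"model vs. consensus MAE = {model_mae:.2f}")
print(f"inter-rater MAE = {inter_rater_mae:.2f}")
# A model MAE below the inter-rater MAE means the model deviates from consensus
# less, on average, than two clinicians deviate from each other.
```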

| Ref. | Input/Representation | Model Family | Pose Estimation Software | Task | Performance |
|---|---|---|---|---|---|
| [106] | 2D keypoints | Feature-based + classical ML | OpenPose | Gait | AUC 0.98 |
| [87] | | Detector + features/ML | YOLOv3 | Bradykinesia | Accuracy 81.2% |
| [100] | | Feature-based + ML | OpenPose | Gait | AUC 0.91 |
| [107] | | Feature-based + ML | OpenPose | Gait | AUC 0.96–0.99 |
| [89] | | Feature-based + ML | DeepLabCut | Bradykinesia | AUC 0.968 |
| [92] | | Sequence model on skeleton | OpenPose | Gait | AUC 0.91; accuracy 0.86 |
| [93] | | Feature-based + ML | MediaPipe | Bradykinesia | Accuracy 75–86% |
| [101] | | End-to-end CNN/Deep | OpenPose | Gait | Accuracy 99.4% |
| [102] | | Feature-based + ML | OpenPose | Gait | Accuracy 95.8% |
| [88] | | Feature-based + ML | OpenCV Haar face detector + motion-template gradient | Bradykinesia | Accuracy 95% |
| [108,119] | 3D pose | Feature-based + ML | HRNet + HoT-Transformer + GLA-GCN | Gait | Accuracy 93.3% |
| [98] | | Feature-based + ML | Face++ API | Facial expressions | F1 0.99; precision 0.99; recall 0.99 |
| [99] | | End-to-end CNN/Deep | Deep convolutional neural network | Facial expressions | AUC 0.71 |
| [110] | | End-to-end CNN/Deep | Dense optical flow | Gait | Accuracy 100% |
| [94] | Silhouette/Optical-flow/Motion | No ML | EVM (Eulerian video magnification) | Tremor | OR 2.67 (95% CI 1.39–5.17) |
| [105] | | Feature-based + ML | SPIN; SORT tracking; ablation with OpenPose | Gait | Accuracy 81% |
| [86] | Raw video | End-to-end CNN/Deep | Victor Dibia Handtracking, preprocessing in OpenCV | Bradykinesia | AUC 0.76 |
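
Most diagnostic entries in the table above are summarized as AUC. The ROC AUC equals the probability that a randomly chosen patient receives a higher classifier score than a randomly chosen control, with ties counted as one half; this is the Mann–Whitney U statistic divided by the number of patient–control pairs. The dependency-free Python sketch below implements that pairwise definition. The scores are hypothetical classifier outputs, not study data, and the O(n·m) loop is chosen for clarity rather than speed.

```python
def roc_auc(pos_scores, neg_scores):
    """AUC = P(score_pos > score_neg) + 0.5 * P(tie), over all pos/neg pairs.

    Equivalent to the Mann-Whitney U statistic divided by n_pos * n_neg.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties contribute half a concordant pair
    return wins / (len(pos_scores) * len(neg_scores))


# HYPOTHETICAL classifier outputs (estimated probability of PD).
patients = [0.91, 0.80, 0.75, 0.62, 0.40]
controls = [0.70, 0.35, 0.30, 0.20, 0.10]
print(f"AUC = {roc_auc(patients, controls):.2f}")
```

Unlike accuracy, this value does not depend on a decision threshold, which is one reason AUC is often preferred when comparing studies that tuned thresholds differently.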

| Aim | Ref. | Patients | Controls | Device | Pose Estimation | Performance | Technology Comparison |
|---|---|---|---|---|---|---|---|
| Motor fluctuations | [89] | 31 | - | Standard clinic video camera | DeepLabCut | ON vs. OFF state classification: accuracy 65%, sensitivity 65%, specificity 65% | NA |
| | [127] | 12 | 12 | Wall-mounted RGB video cameras in an instrumented home (640 × 480 @ 30 fps) | OpenPose | | NA |
| LIDs | [128] | 9 | - | Consumer video camera (480 × 640 or 540 × 960, 30 fps) | Convolutional Pose Machines (OpenCV 2.4.9) | Best onset AUC 0.822 (UDysRS = 0.826); best remission AUC 0.958 (UDysRS = 0.802) | NA |
| | [129] | 36 | - | Consumer-grade RGB camera (30 fps) | Convolutional Pose Machines (OpenCV 2.4.9) | Pearson r = 0.74–0.83 | NA |
| Therapy response | [135] | 5 | - | 2 × FLIR Blackfly USB 3.0 (FLIR Systems, Wilsonville, OR, USA) | DeepLabCut v2.2b6 | DLC tracking > 95% (reprojection error 0.016–0.041 px); automatic epoch extraction 80%; SVM overall accuracy 85.7% | NA |
| | [134] | 11 | 12 | Consumer cameras, ≤ 180 fps (Lumix GH5, Panasonic Corporation, Kadoma, Japan; GoPro Hero7, GoPro, San Mateo, CA, USA) | MediaPipe | | NA |
| | [133] | 11 | | High-frame-rate RGB cameras (≤ 180 fps) | MediaPipe | | Accelerometer |
| | [126] | 12 | 12 | Panasonic PV-GS400 CCD camera, 320 × 240, 15 fps (Panasonic Corporation, Kadoma, Japan) | Binary silhouettes → kernel PCA features | Three-class gait recognition accuracy 80.51% (non-PD vs. drug-OFF vs. drug-ON) using KPCA features | GAITRite® (instrumented walkway) |
| | [130] | 13 | - | Tripod-mounted RGB camera, 640 × 480 @ 30 Hz; 6-m walk | Detectron, AlphaPose, OpenPose | Macro-F1 0.22, balanced accuracy 29.5%. Model-predicted MDS-UPDRS gait scores were lower ON vs. OFF (p = 0.017, Cohen’s d = 0.50); ON–OFF change magnitude correlated with clinicians (Kendall’s τ_b = 0.396, p = 0.010). | NA |
| | [132] | 31 | - | Single-view consumer smartphone camera | MediaPipe | Best AUC 0.79 (LR; combined body + hand), accuracy ≈ 72% (SVM/LR; combined); body-only AUC up to 0.76; hand-only AUC ~0.69 | NA |
| | [131] | 48 | 15 | Single 2D camcorder (1080p, 30 fps), GAITRite pressure mat (CIR Systems Inc, Franklin, NJ, USA) | OpenPose | | GAITRite® (instrumented walkway, CIR Systems Inc, Franklin, NJ, USA) |
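
The entries above mix several confusion-matrix metrics: sensitivity and specificity for binary ON/OFF classification ([89]), and macro-F1 and balanced accuracy for multi-class MDS-UPDRS gait scores ([130]). Balanced accuracy is the unweighted mean of per-class recall, and macro-F1 is the unweighted mean of per-class F1. Both therefore give rare score classes the same weight as common ones, unlike plain accuracy. The Python sketch below computes all of them from a confusion matrix. Both matrices are hypothetical, and treating OFF as the positive class is an assumption.

```python
def per_class_metrics(cm):
    """Return a list of (recall, precision) pairs, one per class.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    k = len(cm)
    metrics = []
    for c in range(k):
        tp = cm[c][c]
        actual = sum(cm[c])                          # row sum: truly class c
        predicted = sum(cm[r][c] for r in range(k))  # column sum: predicted as c
        recall = tp / actual if actual else 0.0
        precision = tp / predicted if predicted else 0.0
        metrics.append((recall, precision))
    return metrics


def summarize(cm):
    """Return (accuracy, balanced accuracy, macro-F1) for a confusion matrix."""
    metrics = per_class_metrics(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(len(cm))) / total
    # Balanced accuracy: unweighted mean of per-class recall.
    balanced_accuracy = sum(r for r, _ in metrics) / len(metrics)
    # Macro-F1: unweighted mean of per-class F1 (0 when precision + recall is 0).
    f1s = [2 * p * r / (p + r) if (p + r) else 0.0 for r, p in metrics]
    macro_f1 = sum(f1s) / len(f1s)
    return accuracy, balanced_accuracy, macro_f1


# HYPOTHETICAL binary matrix; rows/columns ordered (OFF, ON).
# With OFF as the positive class (an assumption), recall of OFF is the
# sensitivity and recall of ON is the specificity.
binary = [[15, 5],
          [8, 12]]
(sensitivity, _), (specificity, _) = per_class_metrics(binary)
print(f"sensitivity={sensitivity:.2f}, specificity={specificity:.2f}")

# HYPOTHETICAL imbalanced 3-class gait-score matrix (scores 0, 1, 2).
multi = [[50, 5, 0],
         [10, 5, 5],
         [2, 3, 0]]
acc, bal_acc, macro_f1 = summarize(multi)
# In this example, plain accuracy is driven by the majority class (score 0),
# while balanced accuracy and macro-F1 expose the poor minority-class results.
print(f"accuracy={acc:.2f}, balanced accuracy={bal_acc:.2f}, macro-F1={macro_f1:.2f}")
```

This weighting helps explain how a multi-class model can show a low balanced accuracy or macro-F1 even when its raw accuracy looks acceptable.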

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


di Biase, L.; Pecoraro, P.M.; Bugamelli, F. AI Video Analysis in Parkinson’s Disease: A Systematic Review of the Most Accurate Computer Vision Tools for Diagnosis, Symptom Monitoring, and Therapy Management. Sensors 2025, 25, 6373. https://doi.org/10.3390/s25206373
