Abstract

Aerial manipulation is becoming increasingly important for practical applications of unmanned aerial vehicles (UAVs), such as picking, transporting, and placing objects over wide areas. In this paper, we develop an aerial manipulation system consisting of a UAV, two onboard cameras, and a multi-fingered robotic hand with proximity sensors. To achieve self-contained autonomous navigation to a targeted object, onboard tracking and depth cameras are used to detect the object and to guide the UAV to it, even in a Global Positioning System (GPS)-denied environment. The robotic hand can stably grasp an object using its proximity sensors if the object lies within a position error tolerance (a circle with a radius of 50 mm) from the center of the hand. Therefore, for a successful grasp, the position error of the hand (i.e., of the UAV) while hovering over the targeted object must be smaller than this tolerance. To meet this requirement, we developed an object detection algorithm that localizes the target accurately by combining information from both cameras. In addition, the camera mounting orientation and the UAV attitude sampling rate were determined experimentally, and we confirmed that these choices reduced the UAV position error to within the grasping tolerance of the robotic hand. Finally, aerial manipulation experiments using the developed system demonstrated successful grasping of the targeted object.

1. Introduction

Aerial manipulation, combining unmanned aerial vehicle (UAV) technology and robotic manipulators engaging in physical interactions while in flight, holds the potential to revolutionize logistics, transportation, facility management, and various other industries. A wide range of research areas have been developed for aerial manipulation, including object recognition, self-localization, map generation and navigation, and manipulator and mechanism design [1,2].

One of the significant challenges in aerial manipulation is that a UAV, which has a floating reference frame, is subject to external disturbances such as natural wind and ground effects. Oscillations of the UAV body induce position errors between the hand and the object to be grasped, leading to grasp failure or to damage to the object from unexpected collisions with the fingers. In recent years, a growing number of aerial grasping hands have used flexible fingers to mitigate the impact of such collisions [3,4,5]. In theory, these hands tolerate UAV positioning errors within their opening width; in practice, however, even a slight position error makes unexpected finger collisions during the grasping motion hard to avoid, and such collisions could displace or damage the object.

Our research group has developed a multi-fingered robotic hand with proximity sensors to achieve stable grasping in aerial manipulation despite such position errors [6]. The optical proximity sensors attached to the palm and fingertips of the hand detect the nearby object surface without contact. Aerial manipulation by a UAV hovering near a targeted object was successfully performed using flight positioning and grasping controls based on these proximity sensors. However, because that system required the UAV's self-location for flight control, a ground-based motion capture system was employed, and object recognition was not considered. The next challenges are therefore object detection, encompassing object identification and localization, and flight positioning that guides the UAV from a wide area into the grasping range of the robotic hand without relying on an external system.

The Global Positioning System (GPS), a representative positioning technology for UAV flight control, is used in many studies [7,8] and in drone products by DJI (Shenzhen, China) and Parrot (Paris, France), two leading manufacturers in the drone market. For GPS-based flight positioning, Real-Time Kinematic GPS (RTK-GPS), which incorporates correction information from ground base stations to improve accuracy, is often used and enables positioning flights with an accuracy of approximately 1–5 cm [9,10]. However, GPS cannot be used indoors, in caves, or in forests, where its signals cannot reliably reach. For indoor UAV localization, a vision-based motion capture system, which tracks the UAV with cameras installed in the environment, is often employed [11,12]. Motion capture typically provides highly accurate location data, but it confines the UAV's flight area to the detection range and is expensive to introduce.

Research is being actively conducted into self-localization and environmental mapping using onboard sensors, such as Light Detection And Ranging (LiDAR) or vision sensors, for unmanned vehicles in GPS-denied environments [13,14,15]. LiDAR measures the distance to an object and determines its shape from the time difference between emitting a laser beam and receiving its reflection; it is often used together with a pre-built map to estimate self-location. In autonomous aerial manipulation, cameras are useful beyond positioning: they can also detect and identify objects. Several studies [16,17,18,19] have used single or multiple vision sensors for Visual–Inertial Odometry (VIO) and Simultaneous Localization and Mapping (SLAM) on drones. VIO estimates a motion trajectory by fusing data from one or more cameras and an Inertial Measurement Unit (IMU), which typically includes an accelerometer and a gyroscope. These efforts allowed drones to create detailed maps and achieve precise localization or obstacle avoidance in complex environments; however, they primarily focused on navigation and mapping. Li et al. [20] evaluated the accuracy of drone flight based on VIO, fusing RGB-D visual odometry from an onboard Kinect camera with IMU-based motion estimation, and reported a maximum indoor flight position deviation of approximately 8 cm. Gerwen et al. [14] surveyed positioning studies that fuse multiple sensors, such as visual, inertial, and ultrasonic sensors, and summarized their positioning accuracy; they reported that the positioning accuracy of flights based solely on onboard sensors was, at best, 8 cm. Ubellacker et al. [5] developed an aerial manipulation system, similar to ours, that uses two cameras for object detection and localization and is equipped with a soft gripper. Its object localization error and flight positioning error at different flight speeds were found to be at least 4 cm and 2 cm, respectively. However, with such a system, unexpected collisions between a finger and the object during grasping cannot be avoided. Although aerial manipulation techniques have been studied extensively [21,22,23], few studies examine in depth camera-based object detection and localization for aerial manipulation, or localization for UAV positioning. This highlights the need for further research on integrating these sensors with control strategies that ensure precise and stable UAV operation during active manipulation.

In this study, we developed an aerial manipulation system that does not rely on an offboard tracking system, achieving self-contained autonomous aerial manipulation in a GPS-denied environment. The system integrates two types of onboard cameras and a multi-fingered robotic hand with proximity sensors attached directly to the bottom of the UAV. The cameras are used both to capture the target object and to stabilize the flight up to the proximal graspable area. The main contributions of this paper are as follows:

- An experimental evaluation of an improved object detection and localization algorithm that integrates information from two cameras and is robust to the position and attitude uncertainties inherent to UAVs.

- Experimental verification of the feasibility of indoor aerial manipulation based on the integrated use of onboard cameras and proximity sensors.

Using a proximity sensor-based robotic hand for aerial manipulation is an original technology pioneered by our research group. To our knowledge, this paper is the first attempt to tightly integrate cameras and proximity sensors, which have different detection ranges, for UAV aerial manipulation. Leveraging the strengths of both, we propose a series of aerial manipulation scenarios, covering accurate object location detection, flight positioning, and grasping that corrects flight errors in the proximal region, and demonstrate their feasibility with the developed aerial manipulation system.

The remainder of the paper is organized as follows. Section 2 describes the aerial manipulation scheme by the UAV using cameras and proximity sensors. Section 3 presents the configuration of the aerial manipulation system used in this study. Section 4 explains object detection and localization based on integrated two-camera information. Section 5 describes experiments to determine the onboard camera configuration for precise positioning flight. Section 6 presents the results and evaluation of the aerial manipulation experiment, including grasping based on robotic hand proximity sensor feedback. Section 7 presents the conclusions.

2. Aerial Manipulation Scenario

The UAV system developed in this paper features proximity sensors attached to the robotic hand. This eliminates two problems that affect most conventional camera-only systems: occlusion of the object by the hand, and the target object leaving the field of view at close range. This study assumes aerial manipulation in indoor environments under general lighting conditions.

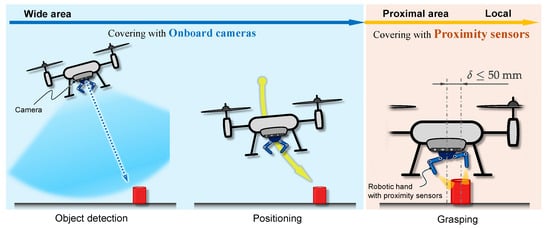

Taking advantage of the different detection ranges of cameras and proximity sensors, we adopted the aerial manipulation flow shown in Figure 1. A target object specified by the operator is extracted from the camera images on the flying UAV, and its 3D position is obtained using the cameras. Then, to approach the targeted object, the reference position of the UAV is set directly above the object at a certain height, and the UAV is navigated using the onboard camera. Once the robotic hand position converges to a graspable range around the object, the proximity sensors inside the hand are activated so that the hand pre-grasps the object without contact and then transitions to a grasping posture, providing stable grasping without knocking over or damaging the object. Finally, the object is transported to the desired place, again using the camera.

Figure 1.

Aerial manipulation scheme adopted for the developed UAV system equipped with a multi-fingered robotic hand with proximity sensors.

To determine the reliable grasping range of the robotic hand, we conducted a preliminary experiment using the same robotic hand as in this study, shown in Figure 2. The reliable grasping range is determined by the range of motion of the fingers and the detection range of the proximity sensors. The maximum opening width of the hand is 160 mm (80 mm on either side of the hand center). We verified how well a single finger under fingertip proximity sensor-based pre-grasp control could follow a target plane oscillating in the normal direction of the fingerpad, with an amplitude from 10 mm to 50 mm and a frequency from Hz to Hz. Under pre-grasp control, the fingertip follows the movement of the facing surface while directly facing it and keeping a fixed distance. The experimental results demonstrate that, with the pre-grasp target distance set to 30 mm, the finger followed the oscillating plane in all tested conditions while maintaining that distance and without colliding with the surface. Based on this result, we set the reliable grasping range to 80 mm − 30 mm = 50 mm from the center axis of the robotic hand. Therefore, the UAV must meet the following critical conditions for the robotic hand to grasp an object successfully:

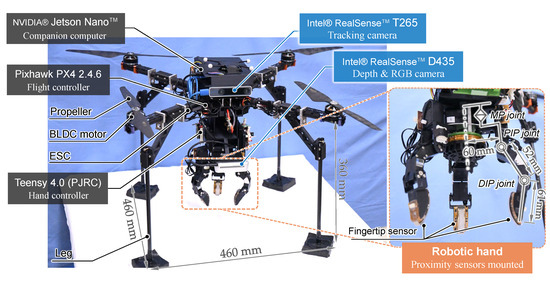

Figure 2.

Overview of the developed UAV and multi-fingered robotic hand.

- Positioning: The UAV must be positioned within a 50 mm radius around the object so that the robotic hand can grasp it reliably using proximity sensor information. In other words, accurate flight positioning is required.

- Stability: The UAV must maintain a stable hover to minimize the movement of the robotic hand while it is positioned around the object. In other words, high positioning precision is required.

- Time allocation: The UAV must remain steady long enough for the robotic hand to complete the grasping action.
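The grasping-range arithmetic and the positioning condition can be expressed as a small check. This is a minimal sketch under stated assumptions: the function names are hypothetical, and testing every logged hover sample against the radius is one illustrative way to apply the condition, not the authors' evaluation procedure.

```python
import math


def reliable_grasp_radius(max_opening_mm: float, pregrasp_distance_mm: float) -> float:
    """Radius around the hand axis within which grasping is reliable.

    Half the maximum opening width minus the pre-grasp target distance,
    i.e. 160 / 2 - 30 = 50 mm for the hand described in the text.
    """
    return max_opening_mm / 2.0 - pregrasp_distance_mm


def within_grasp_range(hand_xy, object_xy, radius_mm: float) -> bool:
    """True if every hand position (x, y in mm) lies within radius_mm of the object."""
    ox, oy = object_xy
    return all(math.hypot(x - ox, y - oy) <= radius_mm for x, y in hand_xy)


radius = reliable_grasp_radius(160.0, 30.0)
# Hypothetical hover samples (mm) around an object placed at the origin
samples = [(12.0, -8.0), (30.0, 25.0), (-20.0, 41.0)]
print(radius, within_grasp_range(samples, (0.0, 0.0), radius))
```

Checking every sample corresponds to the stricter "Stability" reading of the conditions; a statistical radius such as 2DRMS is a looser alternative.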

In this paper, we validated the UAV flight and aerial manipulation, specifically focusing on object detection and localization and flight positioning for approaching the object based on camera information.

3. System Configuration and Procedure for Aerial Manipulation

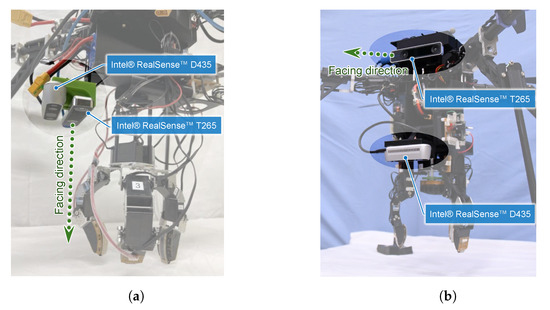

Figure 2 shows the overall configuration of our aerial manipulation system, which is equipped with two types of cameras and a multi-fingered robotic hand with proximity sensors. The total weight of the system is , including the robot hand of .

3.1. UAV Platform

The UAV is built on a Tarot IRON MAN 650 four-axis frame; that is, it is configured as a quadrotor. Each axis is equipped with a brushless DC motor and an electronic speed controller (ESC). The UAV has carbon fiber pipe legs for safe landing. Lithium polymer batteries for the electronic components, such as the computer, cameras, and robotic hand, were mounted on the platform. Although the platform has space for a motor battery, the motors were powered by an offboard power supply so that the experimental results would not be affected by battery voltage drops.

3.2. Multi-Fingered Robotic Hand Using Proximity Sensors

The right picture in Figure 2 shows an overview of the multi-fingered robotic hand for aerial manipulation, developed based on our previous study [6]. Each finger has two flexion joints and one pivot joint, giving a total of nine degrees of freedom across the three fingers. A proximity sensor is attached to each of the three fingertips. The sensor is a Net-Structured Proximity Sensor (NSPS) consisting of an analog network circuit of infrared photoreflector elements, each containing a light-emitting diode and a phototransistor in a single package, arranged in an grid. The amount of reflected infrared light received by each phototransistor varies with the distance and inclination between the finger and a nearby object, and the total current and current distribution of the NSPS change accordingly [24]. Using this principle, the multi-fingered hand can determine an appropriate grasping method without contacting the object.

The optical NSPS features a high-speed response, obtaining relative distance and orientation information within 1 ms. Additionally, since there are no obstructions between the sensors inside the hand and the object, it avoids the occlusion problem that often troubles cameras. On the other hand, its detection range is relatively narrow. Thus, in a series of aerial manipulations, the UAV system must rely on cameras to detect the target object from a wide area and fly close to it until the object is within the detection range of the hand's proximity sensors.

3.3. Cameras

The onboard cameras in our UAV system must provide two functions: (1) detecting the targeted object from a distance and localizing its relative position, and (2) self-localization for navigation.

For object detection, we adopted the Intel® RealSense™ D435 camera (Intel Corporation, Santa Clara, CA, USA) (hereafter, D435) [25], which offers a wide measurement range. This sensor is equipped with an RGB camera and two infrared imagers, and it obtains real-time depth images by active stereo with an IR projector. The camera can detect 3D positions at a high frame rate, making it less likely to lose sight of the targeted object even when the UAV sways due to external disturbances.

As an alternative to a motion capture system fixed in the environment, we adopted the Intel® RealSense™ T265 camera (Intel Corporation, Santa Clara, CA, USA) (hereafter, T265) [26], which can be mounted on a UAV for self-localization. The T265 is equipped with a stereo pair of fisheye cameras, an IMU, and a built-in processor. It uses VIO to provide an accurate real-time estimate of the camera's position and motion without markers. This data fusion supports high-frequency updates, up to 200 Hz for the gyroscope, ensuring precise and responsive tracking in various environments.

3.4. System

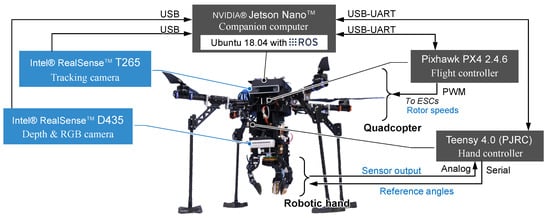

The system architecture is illustrated in Figure 3. This study centers on UAV control and object detection, which involves advanced image processing. To handle these computationally intensive tasks, an NVIDIA® Jetson Nano (NVIDIA, Santa Clara, CA, USA) is employed as a companion computer, providing the processing power and efficiency needed for practical image analysis and real-time control. The two cameras are connected to the computer via USB. The companion computer runs the Robot Operating System (ROS), which manages the peripheral devices. The flight controller, Pixhawk PX4 2.4.6, is connected to the companion computer via UART and handles the UAV's position and orientation control. Flight commands and the current attitude are exchanged through the MAVROS package using the Micro Air Vehicle Link (MAVLink) communication protocol. The companion computer is operated remotely from a Windows ground-station computer.

Figure 3.

System architecture diagram of the aerial manipulation system.

Proximity sensor data acquisition and angle control of the finger joint servo motors are handled by a hand controller running on a microcomputer, independently of the companion computer. The hand controller and the companion computer are connected via UART, over which the output values of the proximity sensors are streamed.

3.5. Experimental Environment

All flight experiments were conducted in a 3 m cubic cage installed in a sufficiently large room. The cage mesh is not detected by the two cameras and therefore does not affect the system's positioning or object detection accuracy. The following factors may directly affect the performance evaluation of the developed system, which is intended for indoor aerial manipulation.

- Environmental factors: External conditions, particularly lighting, can affect camera accuracy. The camera used for object detection must be robust against light reflections to ensure precise measurements.

- Ground effect: Most aerial manipulation tasks involve picking up an object placed on the ground, so the UAV is exposed to the ground effect and may lose stability while hovering over the object.

3.6. Procedure for Aerial Manipulation

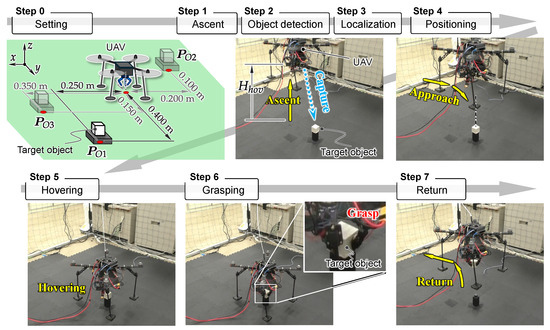

The aerial manipulation procedure, shown in Figure 4, is as follows.

Figure 4.

Aerial manipulation procedure.

- Ascent: Ascend the UAV to a height of from the ground.

- Object detection: Identify the target object by using a D435 camera.

- Localization: Determine the targeted object's position relative to the UAV once, using the D435 and T265 while hovering.

- Positioning: Navigate the UAV based on the self-localization using the T265 camera fused with the flight controller to align itself directly above the targeted object while maintaining a height of .

- Hovering: Descend the UAV slowly until a height of just above the object, then hover precisely over it for a time period .

- Grasping: Grasp the object with the robotic hand using its proximity sensors while hovering.

- Return: After grasping, return to its initial starting position while carrying the object.
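The procedure is strictly sequential, so one way to organize it in software is a simple phase sequencer. This is a hypothetical sketch: the phase names mirror the list above, and `step_done` stands in for whatever completion test each step would use (for example, position convergence or a grasp-complete signal from the hand controller), which the text does not specify.

```python
from enum import Enum, auto


class Phase(Enum):
    ASCENT = auto()
    OBJECT_DETECTION = auto()
    LOCALIZATION = auto()
    POSITIONING = auto()
    HOVERING = auto()
    GRASPING = auto()
    RETURN = auto()
    DONE = auto()


ORDER = list(Phase)  # members in definition order, i.e. the order of the procedure


def next_phase(phase: Phase, step_done: bool) -> Phase:
    """Advance one phase when the current step reports completion; otherwise stay."""
    if phase is Phase.DONE or not step_done:
        return phase
    return ORDER[ORDER.index(phase) + 1]


# Hypothetical completion flags, one per control cycle; positioning needs two cycles here
phase = Phase.ASCENT
for done in [True, True, True, False, True, True, True, True]:
    phase = next_phase(phase, done)
print(phase)
```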

In the UAV flight maneuvers, such as positioning and hovering, the UAV position and orientation are controlled using a PID controller within the flight controller to reach the target coordinates based on self-localization using the T265 camera.
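As an illustration of the PID law itself, the sketch below drives a 1-D point mass whose commanded acceleration is the PID output. It is not PX4's implementation, which is organized as cascaded position, velocity, attitude, and rate loops; the gains, time step, and point-mass model are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Discrete PID law: u = kp*e + ki*integral(e) + kd*de/dt."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_axis(setpoint: float, t_end: float = 20.0, dt: float = 0.01) -> float:
    """Drive a 1-D point mass (PID output = commanded acceleration) toward a setpoint."""
    pid = PID(kp=4.0, ki=2.0, kd=4.0)  # illustrative gains, not tuned for any real UAV
    pos, vel = 0.0, 0.0
    for _ in range(int(t_end / dt)):
        accel = pid.update(setpoint - pos, dt)
        vel += accel * dt  # semi-implicit Euler integration
        pos += vel * dt
    return pos


print(f"final position: {simulate_axis(1.0):.4f} m")
```

The derivative term acts as damping, and the integral term removes steady-state offsets that a constant disturbance (for example, an unmodelled ground effect) would otherwise leave.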

4. Object Detection and Localization Using Onboard Cameras

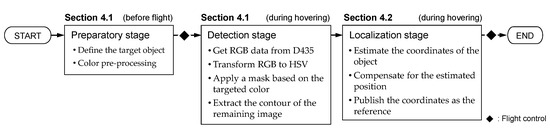

To achieve autonomous aerial manipulation, we developed a robust object detection algorithm that accounts for imperfections in the UAV's motion and provides accurate coordinates of the target object. Figure 5 outlines the steps of the object detection and localization algorithm using both the D435 and T265 cameras.

Figure 5.

Schematic diagram of the object detection and localization algorithm.

For object detection, we assume that the color of the object is known. The D435 camera, mounted below the UAV body and facing the ground, was used to detect and locate the target object. The OpenCV library was used for image processing on the companion computer.

4.1. Target Object Detection Using D435

Object detection based on the average HSV (Hue, Saturation, Value) value is effective in detecting a wide range of similarly colored objects. However, it may also detect objects of colors other than the target color, leading to false positives.

Improving the detection accuracy and success rate is essential, because detecting the wrong object can be dangerous during aerial manipulation. To mitigate these issues, we added a pre-processing stage to the object detection algorithm. In this stage, before the flight, the user manually determines the HSV values of different objects (of different colors) and stores them in a file, so that detection is tailored to the exact color of the targeted object. At the beginning of the flight, a mask based on the HSV value of the targeted object selected by the user is applied to the image. Then, contours are extracted, and a bounding box is drawn around the largest contour.
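The masking and largest-region steps above can be sketched as follows. The text uses OpenCV (for example, `cv2.inRange` followed by `cv2.findContours` and `cv2.boundingRect`); to keep the sketch dependency-light, it substitutes a NumPy threshold and a breadth-first search for the largest connected region, which approximates "largest contour" by pixel count. The stored HSV ranges and object names are hypothetical.

```python
from collections import deque

import numpy as np

# Hypothetical pre-processing output: HSV ranges stored per object before the flight
# (OpenCV convention: H in [0, 179], S and V in [0, 255]). A hue range whose lower
# bound exceeds its upper bound wraps around 0, which is typical for red.
STORED_HSV = {
    "red_cylinder": ((170, 120, 70), (10, 255, 255)),
    "orange_box": ((11, 120, 70), (25, 255, 255)),
}


def hsv_mask(hsv: np.ndarray, lo, hi) -> np.ndarray:
    """Boolean mask of pixels whose (H, S, V) lies inside [lo, hi]."""
    h, s, v = hsv[..., 0], hsv[..., 1], hsv[..., 2]
    if lo[0] <= hi[0]:
        h_ok = (h >= lo[0]) & (h <= hi[0])
    else:  # wrap-around hue interval
        h_ok = (h >= lo[0]) | (h <= hi[0])
    return h_ok & (s >= lo[1]) & (s <= hi[1]) & (v >= lo[2]) & (v <= hi[2])


def largest_blob_bbox(mask: np.ndarray):
    """(x, y, w, h) of the largest 4-connected region in mask, or None if empty."""
    rows, cols = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    best_size, best_box = 0, None
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue = deque([(r0, c0)])
        size, rmin, rmax, cmin, cmax = 0, r0, r0, c0, c0
        while queue:  # breadth-first flood fill of one region
            r, c = queue.popleft()
            size += 1
            rmin, rmax = min(rmin, r), max(rmax, r)
            cmin, cmax = min(cmin, c), max(cmax, c)
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if 0 <= nr < rows and 0 <= nc < cols and mask[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        if size > best_size:
            best_size = size
            best_box = (int(cmin), int(rmin), int(cmax - cmin + 1), int(rmax - rmin + 1))
    return best_box


# Synthetic 6x8 HSV image: a 3x3 red patch and a 2x2 orange patch on a black background
img = np.zeros((6, 8, 3), dtype=np.uint8)
img[1:4, 1:4] = (175, 200, 200)
img[4:6, 6:8] = (15, 200, 200)
print(largest_blob_bbox(hsv_mask(img, *STORED_HSV["red_cylinder"])))  # (1, 1, 3, 3)
```

Selecting the stored range by object name is what keeps the orange patch out of the red mask, mirroring the role of the pre-processing step.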

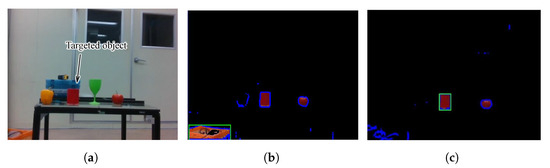

Figure 6 presents the output of the algorithm with and without the pre-processing step. In this experiment, the intended target was the red cylinder on the table. With the pre-processing step, the algorithm detected the object of the intended color even in an environment with similarly colored objects, whereas without it, the algorithm mistakenly identified the orange box. This process allows for more reliable and accurate detection of the intended object, even in complex environments with multiple colored objects and varying lighting conditions.

Figure 6.

Object detection output including the color pre-processing stage: (a) the RGB output, (b) the RGB output after applying a mask without pre-processing step, (c) the RGB output after applying a mask with pre-processing step. The green boxes in (b,c) represent the bounding box and indicate detected objects by the algorithm.

4.2. Targeted Object Localization Using D435 and T265 Cameras

4.2.1. Method

A typical classical object localization method for RGB-D cameras is the intrinsic parameter-based method (hereafter, the IP method). The IP method uses the camera's intrinsic parameters, namely the focal lengths $f_x$ and $f_y$ and the principal point $(c_x, c_y)$, to map 2D pixel coordinates directly to 3D real-world coordinates. When the depth camera is fixed, the targeted object coordinates $(x, y, z)$ are obtained by normalizing the pixel coordinates and scaling them by the detected depth $d$, which represents the distance between the camera and the object:

$$x = \frac{(u - c_x)\,d}{f_x}, \qquad y = \frac{(v - c_y)\,d}{f_y}, \qquad z = d$$

where $(u, v)$ represents the horizontal and vertical pixel coordinates in the image.
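A minimal sketch of the IP-method back-projection, ignoring lens distortion. The `Intrinsics` container and the numeric values in the example are hypothetical. With the RealSense SDK, `pyrealsense2.rs2_deproject_pixel_to_point(intrinsics, [u, v], depth)` performs the equivalent mapping and also accounts for the stream's distortion model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length along x (pixels)
    fy: float  # focal length along y (pixels)
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)


def deproject(k: Intrinsics, u: float, v: float, depth: float):
    """Map pixel (u, v) with depth d to camera-frame (x, y, z) with the pinhole model."""
    x = (u - k.cx) / k.fx * depth
    y = (v - k.cy) / k.fy * depth
    return x, y, depth


# Hypothetical intrinsics for a 640x480 stream
k = Intrinsics(fx=615.0, fy=615.0, cx=320.0, cy=240.0)
print(deproject(k, 443.0, 240.0, 1.0))  # a pixel 123 px right of centre at 1 m depth
```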

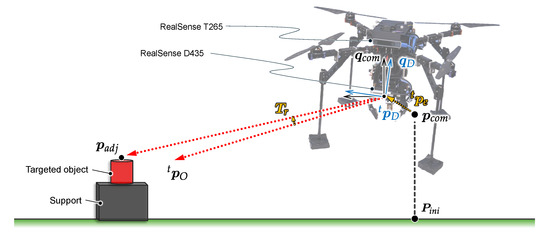

To improve localization accuracy despite the inherent tilting and drifting of the UAV, caused by external factors such as the ground effect or internal factors such as motor vibrations, we used real-time position and attitude data from the T265 camera describing the UAV's motion. Figure 7 illustrates the object localization compensation method. Here, the coordinates of the object relative to the UAV are obtained, and this offset is added to the initial hovering position to form the flight command.

Figure 7.

Compensation for object localization based on UAV position and orientation (represented on a 2D plane for clarity).

The orientation of the UAV is defined using a quaternion $q$, expressed as Equation (4):

$$q = q_w + q_x i + q_y j + q_z k \tag{4}$$

where $q_x$, $q_y$, and $q_z$ are the components of the imaginary vector, $q_w$ is the scalar component, and $i$, $j$, and $k$ are the imaginary units. The relative quaternion $q_r$, which represents the rotation difference between the commanded quaternion $q_c$ and the UAV's actual orientation $q$, is expressed as Equation (5).

where the operation symbol ⊗ denotes the quaternion (Hamilton) product. From the relative quaternion, we can determine the rotation matrix $R$ associated with the current orientation.
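The quaternion operations above can be sketched as follows. This sketch assumes quaternions stored as (w, x, y, z) and the ordering $q_r = q_c^{-1} \otimes q$; the exact ordering and frame convention of Equation (5) may differ, so treat both as assumptions.

```python
import numpy as np


def quat_mul(p: np.ndarray, q: np.ndarray) -> np.ndarray:
    """Hamilton product p ⊗ q, quaternions stored as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])


def quat_conj(q: np.ndarray) -> np.ndarray:
    """Conjugate; equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return np.array([w, -x, -y, -z])


def relative_quat(q_cmd: np.ndarray, q_act: np.ndarray) -> np.ndarray:
    """Rotation from commanded to actual attitude (assumed order: q_cmd^-1 ⊗ q_act)."""
    return quat_mul(quat_conj(q_cmd), q_act)


def quat_to_rot(q: np.ndarray) -> np.ndarray:
    """3x3 rotation matrix of a quaternion (w, x, y, z), normalized first."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


# Example: level command, actual attitude yawed by 90 degrees about z
q_cmd = np.array([1.0, 0.0, 0.0, 0.0])
q_act = np.array([np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)])
R = quat_to_rot(relative_quat(q_cmd, q_act))
print(np.round(R @ np.array([1.0, 0.0, 0.0]), 6))
```

Note that ⊗ here is not the Kronecker product: the Hamilton product of two quaternions is again a four-component quaternion.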

To convert the object coordinates from the D435 camera coordinate system to the T265 camera coordinate system, a transformation matrix $T$ is introduced, as expressed in Equation (7),

where

$\mathbf{p}_{\mathrm{D435}}$ represents the coordinates of the targeted object determined from the D435 image, and $\mathbf{p}_{\mathrm{T265}}$ represents the same point in the T265 coordinate system.

By applying the rotation matrix $R$ and introducing the translational position error $\Delta\mathbf{p}$, which accounts for the UAV's positional deviation from the commanded position, we adjust the object's coordinates using Equation (9).
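Because Equations (7)–(9) are not reproduced here, the following is only one plausible reading of the text, not the authors' formula: it assumes a rigid homogeneous D435-to-T265 transform, and that Equation (9) rotates the transformed point by the attitude-error rotation and adds the translational error. The function names and mounting values are hypothetical.

```python
import numpy as np


def homogeneous(R_mount: np.ndarray, t_mount) -> np.ndarray:
    """4x4 rigid transform from a 3x3 rotation and a translation (m)."""
    T = np.eye(4)
    T[:3, :3] = R_mount
    T[:3, 3] = t_mount
    return T


def compensate(p_d435, T_d435_to_t265: np.ndarray, R_rel: np.ndarray, delta_p) -> np.ndarray:
    """Hypothetical compensation: p' = R_rel @ (T @ [p, 1])[:3] + delta_p."""
    p_h = np.append(np.asarray(p_d435, dtype=float), 1.0)   # homogeneous coordinates
    p_t265 = (T_d435_to_t265 @ p_h)[:3]                       # D435 frame -> T265 frame
    return R_rel @ p_t265 + np.asarray(delta_p, dtype=float)  # attitude + position correction


# Hypothetical mounting: axes aligned, D435 origin 0.10 m from the T265 along x
T = homogeneous(np.eye(3), [0.10, 0.0, 0.0])
print(compensate([0.50, 0.20, 1.00], T, np.eye(3), [0.02, -0.01, 0.0]))
```

In a real setup, the mounting rotation and offset would come from the physical camera placement or an extrinsic calibration.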

4.2.2. Experimental Evaluation

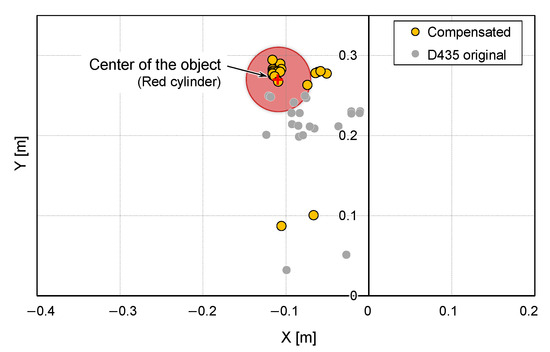

To verify object localization using the D435 and T265 cameras, we conducted an object detection experiment with a hovering UAV. A red cylinder was used as the target object. The UAV hovered at the flight command coordinates of [m], while the targeted object was placed some distance away from the UAV; in this experiment, [m] from the initial position. The target object was identified using the D435 at the hovering position, and its position relative to the UAV was calculated using the proposed algorithm. To evaluate the object localization accuracy, the localization was computed repeatedly during 10 s of hovering.

The object localization results are shown in Figure 8. During hovering, the T265 camera reported a positional error of up to 37 mm in the x-coordinate and up to mm in the y-coordinate. The raw output from the D435 camera was scattered and inaccurate, largely because the camera is sensitive to the UAV's attitude variations during flight. Applying the proposed method compensated the detected coordinates so that they aligned closely with the actual position. Although the algorithm does not eliminate outliers, which can arise from poor depth measurement due to imperfect camera calibration or reflections, it achieved localization accurate enough for our purposes even when the UAV was not perfectly stable.

Figure 8.

Targeted object position estimation before and after compensation.

5. UAV Positioning Using Onboard T265 Camera

The T265 camera has been employed in various research scenarios, such as the localization of terrestrial mobile robots [27,28] and state estimation for aerial [29,30] and underwater vehicles [31]. Visual SLAM with this product is based on image features in the video frames [32]. Therefore, the mounting orientation of the camera on the robot base is expected to affect the accuracy of self-localization; however, the two mounting configurations, down-facing and front-facing, have not been directly compared in the context of aerial manipulation. Understanding which configuration yields more precise and stable flight control is crucial for enhancing our system.

5.1. Determination of Camera Orientation

The features of each configuration of the T265 camera in terms of its use for aerial manipulation can be described as follows.

- Down-facing configuration:

  - Enhances the UAV's ability to locate and maintain its position over a target object, especially in applications requiring precise hovering above the object.

  - Can work in tandem with the D435 for SLAM, providing detailed environmental mapping and precise localization by leveraging both cameras' data streams [33].

  - May have difficulty extracting sufficient features from the floor to estimate its position.

  - May suffer from occlusion because the robotic hand is within the field of view.

- Front-facing configuration:

  - Estimates its position effectively owing to ample changes in scenery and environmental features.

  - Is less likely to have its field of view obstructed by the UAV's body or components.

  - Has difficulty capturing the targeted object in its field of view once the UAV is above it.

Given these considerations, we examined which configuration provides better positional accuracy and flight stability for our UAV during aerial manipulation tasks.

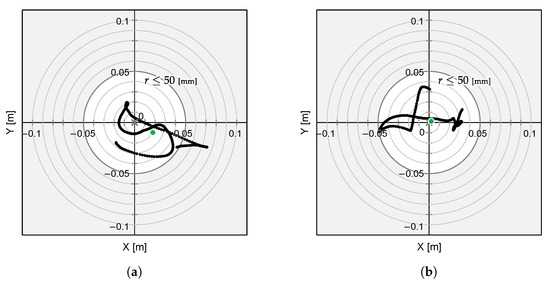

We conducted a series of flight experiments covering object approach and homing (all steps except grasping) with the T265 camera mounted in two orientations, down-facing and front-facing, as shown in Figure 9. The ascent height was set to m, the grasping altitude was set to m, and the hovering period was set to s. The attitude sampling rate of the T265 camera was set to the default of 30 Hz.

Figure 9.

Camera configurations: (a) down-facing configuration, (b) front-facing configuration.

To interpret the experimental results, we employed two metrics: the Mean Distance Error (MDE) and twice the Distance Root Mean Square (2DRMS). The MDE is the distance between the reference coordinates and the mean of the actual position coordinates; the two-dimensional (2D) MDE is defined by Equation (10):

$$\mathrm{MDE} = \sqrt{\bar{e}_x^{2} + \bar{e}_y^{2}} \tag{10}$$

where

$$\bar{e}_a = \frac{1}{N}\sum_{i=1}^{N}\left(a_i - a_{\mathrm{ref},i}\right) \tag{11}$$

is the mean error of the a coordinate (a is replaced by x or y), N is the sample size, and $a_i$ and $a_{\mathrm{ref},i}$ are the observed and reference locations, respectively, for the ith sample. MDE serves as a metric of UAV positioning accuracy: a smaller MDE indicates higher positioning accuracy.

The 2DRMS measures the dispersion of the estimated positions around their mean and is calculated using Equation (12):

$$2\mathrm{DRMS} = 2\sqrt{\sigma_x^{2} + \sigma_y^{2}} \tag{12}$$

where $\sigma_a$ is the standard deviation of the a-axis (a is replaced by x or y) displacement, calculated using Equation (13):

$$\sigma_a = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(a_i - \bar{a}\right)^{2}} \tag{13}$$

where $\bar{a}$ is the sample mean. 2DRMS represents the radius within which approximately 95% of all positional errors fall, whereas DRMS (half of 2DRMS) represents the radius that contains approximately 68% of them; the exact fractions depend on the ratio of $\sigma_x$ to $\sigma_y$. 2DRMS therefore indicates the precision of the UAV's positioning flight. These statistical measures are commonly used in fields such as ballistics and GPS-based navigation to quantify positional accuracy and error [34], which makes them valuable for assessing the reliability and precision of positioning systems.
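Both metrics can be computed from a logged hover trajectory as follows. This sketch assumes an N × 2 array of x, y positions in metres and the population standard deviation (`ddof=0`) for σ; the sample log is made up for illustration.

```python
import numpy as np


def mde(xy: np.ndarray, ref_xy) -> float:
    """Mean Distance Error: distance from the reference point to the mean position."""
    mean_err = xy.mean(axis=0) - np.asarray(ref_xy, dtype=float)  # (mean e_x, mean e_y)
    return float(np.hypot(mean_err[0], mean_err[1]))


def drms(xy: np.ndarray) -> float:
    """Distance RMS about the sample mean: sqrt(sigma_x^2 + sigma_y^2)."""
    sigma = xy.std(axis=0)  # population standard deviation (ddof=0) assumed
    return float(np.sqrt(np.sum(sigma ** 2)))


def two_drms(xy: np.ndarray) -> float:
    """2DRMS = 2 * DRMS."""
    return 2.0 * drms(xy)


# Hypothetical hover log in metres around a reference at the origin
log = np.array([[0.01, 0.02], [0.03, 0.00], [0.02, 0.01], [0.02, 0.01]])
print(mde(log, (0.0, 0.0)), two_drms(log))
print("within 50 mm:", two_drms(log) < 0.050)
```

MDE captures a constant bias (accuracy), while 2DRMS ignores the reference entirely and captures scatter (precision), which is why the two can disagree for the same flight.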

Flights with both camera configurations were completed successfully. The position coordinates of the flying UAV are depicted in Figure 10. The 2D planar MDE, , and 2DRMS, evaluated during 7 s of hovering directly over the object localized using the cameras, are summarized in Table 1.

Figure 10.

Flight experiment result using (a) the down-facing configuration and (b) the front-facing configuration. The grey cross markers indicate the object’s position and the green dots represent the mean position of the UAV’s flight path.

Table 1.

Down-facing and front-facing approach performance comparison.

The results indicate that the front-facing configuration substantially reduces the positioning errors, suggesting that it provides a more accurate positioning solution for our UAV system. In the down-facing configuration, visual odometry relies solely on floor features, which limits the accuracy of the UAV's positioning. The front-facing configuration, in contrast, allows more accurate localization because more features in the environment are visible and available. With its lower positional errors, the front-facing camera orientation improves flight accuracy during aerial manipulation tasks. However, even the front-facing configuration yields a 2DRMS exceeding 50 mm, which means that the flight stability is still insufficient and the robotic hand's requirement is not yet fulfilled.
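To express the 50 mm requirement in terms of per-axis scatter, assume, for illustration only, that the hovering errors are isotropic ($\sigma_x = \sigma_y = \sigma$). Applying the 2DRMS definition then bounds the admissible standard deviation:

$$
2\mathrm{DRMS} = 2\sqrt{\sigma_x^{2} + \sigma_y^{2}} = 2\sqrt{2}\,\sigma \le 50\ \text{mm}
\quad\Longrightarrow\quad
\sigma \le \frac{50}{2\sqrt{2}}\ \text{mm} \approx 17.7\ \text{mm}.
$$

Because the 2DRMS circle is centered on the mean position rather than on the object, a more conservative check under the same assumption is $\mathrm{MDE} + 2\mathrm{DRMS} \le 50$ mm. This condition guarantees that the 2DRMS circle lies entirely inside the 50 mm graspable circle.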

5.2. Determination of the Attitude Sampling Rate

To reduce the 2DRMS further, we conducted multiple flight experiments to determine the attitude sampling rate of the T265 camera, which may affect flight precision. The T265 camera was mounted in the front-facing orientation, and the attitude sampling rate was set to 30, 80, 150, and 200 Hz. The experiments followed the same procedures described above.

Table 2 summarizes the 2DRMS measured while the UAV hovered over the targeted object. The results indicate that increasing the attitude sampling rate notably reduces the 2DRMS. A higher sampling rate allows the UAV's estimated position to be updated more quickly and precisely, which enhances the overall stability and accuracy of the system. As a result, the system can better respond to dynamic changes and correct deviations in real time, leading to a significant reduction in the 2DRMS. With an attitude sampling rate of 200 Hz, we finally obtained a more accurate and stable system, with a 2DRMS of mm, which meets the requirement given in Section 2.

Table 2.

Evolution of the 2DRMS with the T265 attitude sampling rate.

Based on these results, we decided to mount the T265 camera in the front-facing orientation and set the attitude sampling rate to 200 Hz.

6. Experiments on Aerial Manipulation

Through the flight experiments, we verified the feasibility of aerial manipulation using our UAV system. More precisely, we evaluated whether the developed UAV system, including the object detection algorithm and positioning flight based on onboard cameras, is sufficient to achieve our main goal: reliable and precise aerial manipulation.

6.1. Experimental Method

A white cube with 60 mm sides and a mass of kg was used as the target object, as it ensured reliable detection and grasping by the hand's proximity sensors. The ascent height was set to m, and the hovering period was set to s.

To evaluate the precision of the UAV positioning and the object detection algorithm more effectively, we prepared three object placement positions, [m], [m], and [m], relative to the UAV's initial position, as illustrated in Step 0 of Figure 4. We conducted six aerial manipulation experiments across the three object placement positions.

6.2. Results

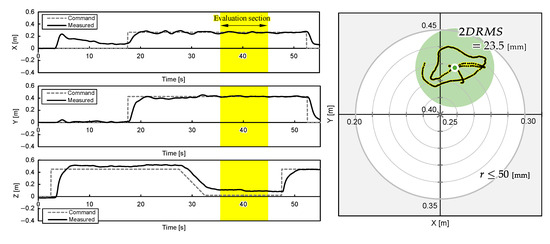

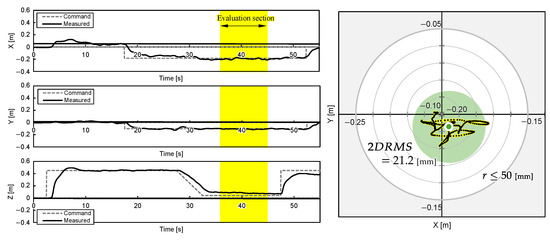

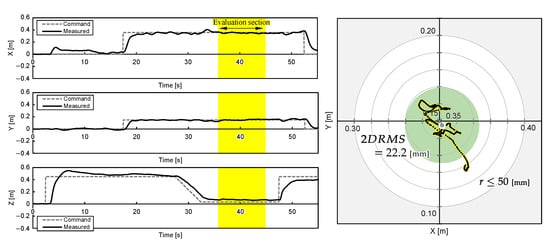

One experimental result for each object position is shown in Figure 11, Figure 12, and Figure 13, respectively. In the right graphs, the grey cross markers indicate the object's position, the green dots represent the mean position of the UAV's flight path, and the green-shaded region indicates the 2DRMS circle. The snapshots in Figure 4 show a series of aerial manipulations performed in this experiment. In all experiments, regardless of object placement, the aerial manipulation system grasped the object, confirming the feasibility of aerial manipulation with the developed system. These results also show that the UAV hovered accurately around the object position determined by the D435 and T265 onboard cameras and that its hovering was relatively stable.

Figure 11.

UAV attitudes during aerial manipulation Experiment 1: object at [m].

Figure 12.

UAV attitudes during aerial manipulation Experiment 2: object at [m].

Figure 13.

UAV attitudes during aerial manipulation Experiment 3: object at [m].

Table 3 summarizes the 2D planar MDE, DRMS, and 2DRMS during the 10 s hovering period (shaded yellow in Figure 11, Figure 12, and Figure 13), together with the time taken for the flight height to stabilize after the hovering command was sent. The average MDEs for Experiments 2 and 3 were sufficiently small compared with the graspable range of the robotic hand, and the positioning accuracy achieved mm. The evaluated DRMS and 2DRMS indicate a 68% probability that the UAV stays within a circle of radius mm and a 95% probability that it stays within a circle of radius mm. Figure 11, Figure 12, and Figure 13 show that, during the grasping stage of all three trials, the center of the robotic hand remained within a 50 mm radius of the object, which is the graspable range of the robotic hand. Overall, the results suggest that our UAV system can realize aerial manipulation by staying within the graspable range of the robotic hand throughout the grasping period; the requirement was therefore fulfilled with the proposed methods.

However, as shown in Table 3, the MDE in Experiment 1 is relatively large. One possible cause is drift of the T265's built-in IMU during localization, which leads to inaccurate self-localization. This positional error is nearly consistent with the results of related studies using similar systems [5], suggesting that the VIO method needs further improvement. On the other hand, the experimental results also show that the positional error of the developed aerial manipulation system is smaller than that reported in other existing studies using visual sensors [14,20].
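Two further quantities reported above can be extracted from flight logs: the time for the flight height to stabilize after the hovering command, and whether the hand center (which coincides with the UAV's horizontal position) stays inside the 50 mm graspable radius. The sketch below is one possible way to compute them. The exact stabilization criterion behind Table 3 is not stated here, so the tolerance `band` is a hypothetical parameter, and both function names are illustrative. Positions are assumed to be in metres.

```python
import math
from typing import Optional, Sequence, Tuple


def settling_time(t: Sequence[float], z: Sequence[float], z_target: float,
                  band: float, t_cmd: float) -> Optional[float]:
    """Time from the hover command until the altitude z enters the band
    |z - z_target| <= band and never leaves it again.

    `band` is a hypothetical tolerance (not taken from the paper).
    Returns None if the altitude is outside the band at the last sample.
    """
    settled_since: Optional[float] = None
    for ti, zi in zip(t, z):
        if ti < t_cmd:
            continue  # ignore samples before the hovering command
        if abs(zi - z_target) <= band:
            if settled_since is None:
                settled_since = ti  # start of the current in-band run
        else:
            settled_since = None  # left the band, so reset
    return None if settled_since is None else settled_since - t_cmd


def fraction_within_grasp_range(hand_xy: Sequence[Tuple[float, float]],
                                object_xy: Tuple[float, float],
                                radius: float = 0.05) -> float:
    """Fraction of hand-center samples within `radius` (default 50 mm) of the object."""
    if not hand_xy:
        raise ValueError("no samples")
    ox, oy = object_xy
    inside = sum(1 for x, y in hand_xy if math.hypot(x - ox, y - oy) <= radius)
    return inside / len(hand_xy)
```

Requiring the altitude to remain in the band until the end of the log, rather than merely touching it once, avoids reporting an overly short settling time when the UAV overshoots and re-enters the band.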

Table 3.

Aerial manipulation performance evaluation.

7. Conclusions

In this study, we developed a UAV system for autonomous aerial manipulation that uses onboard cameras for object localization and UAV positioning, together with a multi-fingered robotic hand with proximity sensors for stable grasping. We developed an object detection algorithm that combines information from the onboard cameras and is robust to the inherent instability caused by external disturbances, thereby improving the accuracy of object localization despite the imperfect movement of the UAV. We also improved flight accuracy by determining the orientation of the camera used for visual odometry and its attitude sampling rate. The aerial manipulation experiments using the developed UAV system demonstrated that the UAV stayed within a radius of 50 mm, the robotic hand's requirement, with a probability of 95%.

In future work, we aim to apply the system to more complex aerial manipulation tasks, including grasping a moving object.

Author Contributions

Conceptualization, R.S., E.M.B., C.S.R. and A.M.; methodology, E.M.B. and C.S.R.; software, E.M.B., C.S.R. and T.W.; validation, E.M.B. and T.W.; formal analysis, E.M.B.; investigation, E.M.B., C.S.R. and T.W.; resources, R.S. and A.M.; data curation, R.S., E.M.B. and A.M.; writing – original draft preparation, R.S. and E.M.B.; writing – review and editing, C.S.R., T.W. and A.M.; visualization, R.S. and E.M.B.; supervision, A.M.; project administration, A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ladig, R.; Paul, H.; Miyazaki, R.; Shimonomura, K. Aerial Manipulation Using Multirotor UAV: A Review from the Aspect of Operating Space and Force. J. Robot. Mechatron. 2021, 33, 196–204.

2. Ollero, A.; Tognon, M.; Suarez, A.; Lee, D.; Franchi, A. Past, Present and Future of Aerial Robotic Manipulators. IEEE Trans. Robot. 2022, 38, 626–645.

3. Fishman, J.; Ubellacker, S.; Hughes, N.; Carlone, L. Dynamic Grasping with a “Soft” Drone: From Theory to Practice. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4214–4221.

4. Cheung, H.C.; Chang, C.W.; Jiang, B.; Wen, C.Y.; Chu, H.K. A Modular Pneumatic Soft Gripper Design for Aerial Grasping and Landing. In Proceedings of the 2024 IEEE 7th International Conference on Soft Robotics (RoboSoft), San Diego, CA, USA, 14–17 April 2024; pp. 82–88.

5. Ubellacker, S.; Ray, A.; Bern, J.M.; Strader, J.; Carlone, L. High-speed Aerial Grasping Using a Soft Drone With Onboard Perception. npj Robot. 2024, 2, 5.

6. Wada, T.; Inoue, Y.; Sasaki, K.; Sato, R.; Ming, A. Development of a Multifingered Hand With Proximity Sensors for Aerial Manipulation. IEEE Sens. J. 2024, 24, 35664–35672.

7. Suzuki, T.; Takahashi, Y.; Amano, Y. Precise UAV position and attitude estimation by multiple GNSS receivers for 3D mapping. In Proceedings of the 29th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2016), Portland, OR, USA, 12–16 September 2016; pp. 1455–1464.

8. Wang, Y.; Wei, G.; Guan, Q.; Liu, Y. A Novel Positioning System of UAV Based on IMA-GPS Three-Layer Data Fusion. IEEE Access 2020, 8, 158449–158458.

9. Rieke, M.; Foerster, T.; Geipel, J.; Prinz, T. High-precision Positioning and Real-time Data Processing of UAV-systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 38, 119–124.

10. Abdelfatah, R.; Moawad, A.; Alshaer, N.; Ismail, T. UAV Tracking System Using Integrated Sensor Fusion with RTK-GPS. In Proceedings of the 2021 International Mobile, Intelligent and Ubiquitous Computing Conference (MIUCC), Cairo, Egypt, 26–27 May 2021; pp. 352–356.

11. Wang, H.; Chen, C.; He, Y.; Sun, S.; Li, L.; Xu, Y.; Yang, B. Easy Rocap: A Low-Cost and Easy-to-Use Motion Capture System for Drones. Drones 2024, 8, 137.

12. Choutri, K.; Lagha, M.; Meshoul, S.; Shaiba, H.; Chegrani, A.; Yahiaoui, M. Vision-Based UAV Detection and Localization to Indoor Positioning System. Sensors 2024, 24, 4121.

13. Jang, J.T.; Youn, W. Autonomous Indoor Proximity Flight of a Quadcopter Drone Using a Directional 3D Lidar and VIO Sensor. In Proceedings of the 2022 22nd International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 27 November–1 December 2022; pp. 1981–1983.

14. Gerwen, J.V.V.; Geebelen, K.; Wan, J.; Joseph, W.; Hoebeke, J.; De Poorter, E. Indoor Drone Positioning: Accuracy and Cost Trade-Off for Sensor Fusion. IEEE Trans. Veh. Technol. 2022, 71, 961–974.

15. Sandamini, C.; Maduranga, M.W.P.; Tilwari, V.; Yahaya, J.; Qamar, F.; Nguyen, Q.N.; Ibrahim, S.R.A. A Review of Indoor Positioning Systems for UAV Localization with Machine Learning Algorithms. Electronics 2023, 12, 1533.

16. Yongtian, W.; Jie, T.Y.; Gimin, S.; Kenny, L.B.Y.; Srigrarom, S. Autonomous Customized Quadrotor With Vision-Aided Navigation For Indoor Flight Challenges. In Proceedings of the 2022 8th International Conference on Mechatronics and Robotics Engineering (ICMRE), Munich, Germany, 10–12 February 2022; pp. 33–37.

17. Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

18. Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges. Drones 2023, 7, 89.

19. Zhuang, L.; Zhong, X.; Xu, L.; Tian, C.; Yu, W. Visual SLAM for Unmanned Aerial Vehicles: Localization and Perception. Sensors 2024, 24, 2980.

20. Li, D.; Li, Q.; Cheng, N.; Wu, Q.; Song, J.; Tang, L. Combined RGBD-inertial based state estimation for MAV in GPS-denied indoor environments. In Proceedings of the 2013 9th Asian Control Conference (ASCC), Istanbul, Turkey, 23–26 June 2013; pp. 1–8.

21. Li, G.; Liu, X.; Loianno, G. RotorTM: A Flexible Simulator for Aerial Transportation and Manipulation. IEEE Trans. Robot. 2024, 40, 831–850.

22. Paul, H.; Ono, K.; Ladig, R.; Shimonomura, K. A Multirotor Platform Employing a Three-Axis Vertical Articulated Robotic Arm for Aerial Manipulation Tasks. In Proceedings of the 2018 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Auckland, New Zealand, 9–12 July 2018; pp. 478–485.

23. Zhang, G.; He, Y.; Dai, B.; Gu, F.; Yang, L.; Han, J.; Liu, G. Aerial Grasping of an Object in the Strong Wind: Robust Control of an Aerial Manipulator. Appl. Sci. 2019, 9, 2230.

24. Koyama, K.; Shimojo, M.; Ming, A.; Ishikawa, M. Integrated control of a multiple-degree-of-freedom hand and arm using a reactive architecture based on high-speed proximity sensing. Int. J. Robot. Res. 2019, 38, 1717–1750.

25. Intel® RealSense™. Depth Camera D435. Available online: https://www.intelrealsense.com/depth-camera-d435/ (accessed on 13 November 2024).

26. Intel. Intel® RealSense™ Tracking Camera T265. Available online: https://www.intel.com/content/www/us/en/products/sku/192742/intel-realsense-tracking-camera-t265/specifications.html (accessed on 13 November 2024).

27. Park, S.Y.; Lee, U.G.; Baek, S.H. Visual Odometry of a Low-Profile Pallet Robot Based on Ortho-Rectified Ground Plane Image from Fisheye Camera. Appl. Sci. 2023, 13, 9095.

28. Bayer, J.; Faigl, J. On Autonomous Spatial Exploration with Small Hexapod Walking Robot using Tracking Camera Intel RealSense T265. In Proceedings of the 2019 European Conference on Mobile Robots (ECMR), Prague, Czech Republic, 4–6 September 2019; pp. 1–6.

29. Wang, X.; Ma, L.; Wang, D.; Su, B. Autonomous UAV-Based Position and 3D Reconstruction of Structured Indoor Scene. In Proceedings of the 2021 China Automation Congress (CAC), Beijing, China, 22–24 October 2021; pp. 3914–3919.

30. Marchisotti, D.; Zappa, E. Feasibility of Drone-Based Modal Analysis Using ToF-Grayscale and Tracking Cameras. IEEE Trans. Instrum. Meas. 2023, 72, 5016210.

31. Xu, Z.; Haroutunian, M.; Murphy, A.J.; Neasham, J.; Norman, R. An Integrated Visual Odometry System for Underwater Vehicles. IEEE J. Ocean. Eng. 2021, 46, 848–863.

32. Grunnet-Jepsen, A.; Harville, M.; Fulkerson, B.; Piro, D.; Brook, S.; Radford, J. Introduction to Intel® RealSense™ Visual SLAM and the T265 Tracking Camera. Available online: https://dev.intelrealsense.com/docs/intel-realsensetm-visual-slam-and-the-t265-tracking-camera (accessed on 13 November 2024).

33. Tsykunov, E.; Ilin, V.; Perminov, S.; Fedoseev, A.; Zainulina, E. Coupling of localization and depth data for mapping using Intel RealSense T265 and D435i cameras. arXiv 2020, arXiv:2004.00269.

34. Specht, M. Determination of Navigation System Positioning Accuracy Using the Reliability Method Based on Real Measurements. Remote Sens. 2021, 13, 4424.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).