Cooperative Jamming and Relay Selection for Covert Communications Based on Reinforcement Learning

Abstract

1. Introduction

1.1. Background

1.2. Motivation and Contributions

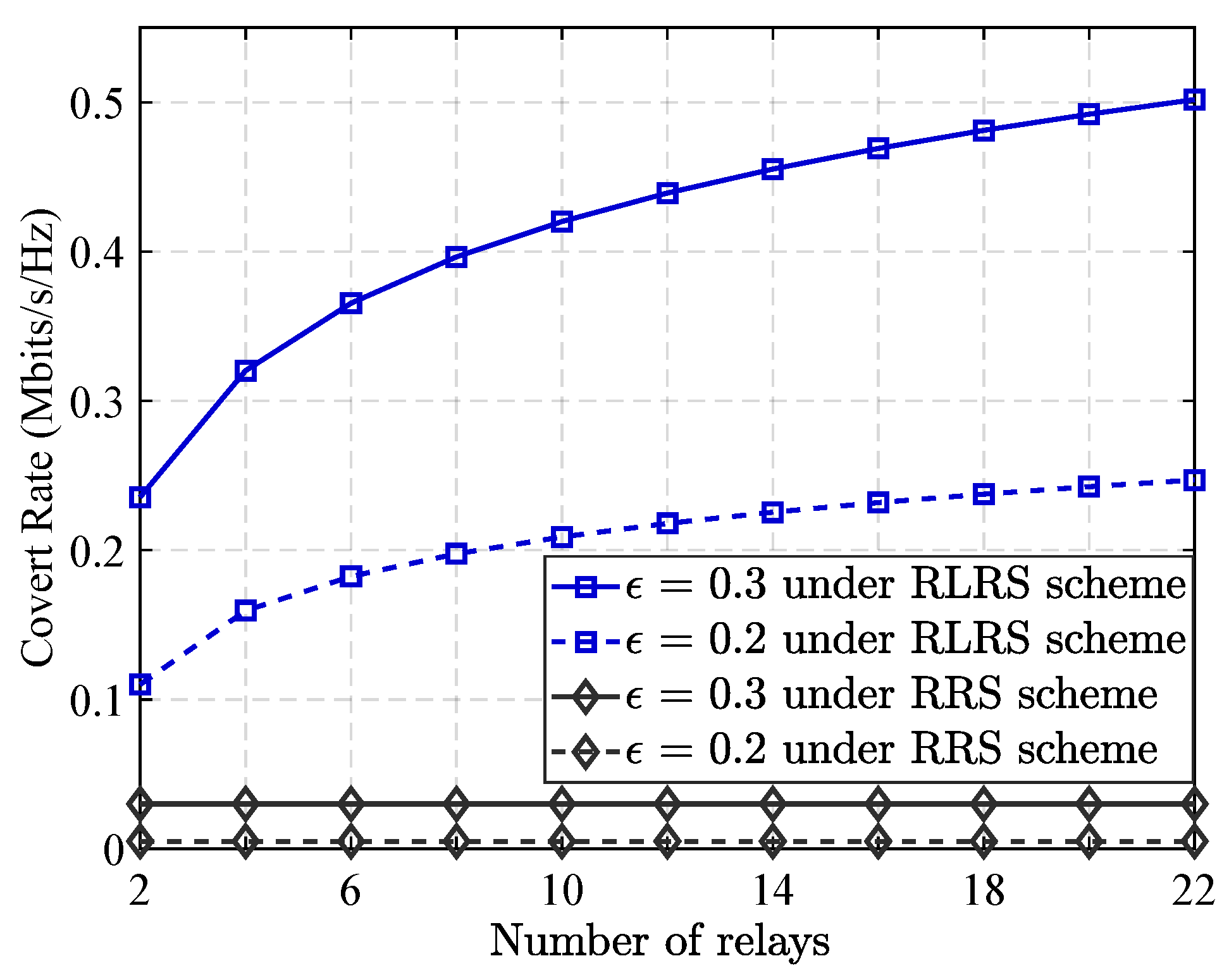

- We propose a reinforcement learning-based relay selection (RLRS) scheme that dynamically chooses which relays forward the signal and which act as cooperative jammers, so that performance adapts as network conditions change. Unlike random relay selection (RRS), which ignores channel conditions and warden activity, RLRS uses a multi-agent deep reinforcement learning algorithm to make context-aware decisions. Jointly optimizing signal forwarding and jamming maximizes the covert rate while keeping the detection error probability at the colluding wardens high.

- We develop a Markov game model that casts relay–jammer interactions as a multi-objective optimization problem over network state information, including channel fading, path loss, and warden collusion intensity. The state space incorporates historical channel data and reflection matrices, and the action space covers relay and jammer selection, balancing communication quality against covertness. This framework lets distributed relays learn optimal strategies through trial-and-error interaction and adapt to varying collusion intensities in (m; M) scenarios, where wardens use equal gain combining for detection.

- Extensive simulations show that RLRS outperforms RRS in maximum covert rate, transmission outage probability, and robustness to network variations. The covert rate under RLRS increases steadily with the number of relays, whereas RRS gains nothing from additional relays because it selects at random. RLRS also lowers the outage probability through spatial diversity and cooperative jamming, outperforming RRS even under high AWGN, and it identifies jamming power levels that disrupt detection while avoiding self-interference. These findings support the effectiveness of RL-based approaches for covert communications and offer insights for future physical layer security designs.
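The contrast between random and channel-aware selection drawn in the contributions above can be illustrated with a toy Monte Carlo sketch. This is not the RLRS scheme: it swaps the learned policy for a simple best-channel rule, and it assumes i.i.d. Rayleigh fading (exponentially distributed channel power gains with unit mean), which this section does not specify. The function names, trial count, and seed are hypothetical.

```python
import random


def mean_selected_gain(num_relays, pick_best, trials=20000, seed=0):
    """Average channel power gain of the selected relay over many fading draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # Assumption: i.i.d. Rayleigh fading, so power gains follow Exp(1).
        gains = [rng.expovariate(1.0) for _ in range(num_relays)]
        # Random selection ignores the gains; best-channel selection uses them.
        total += max(gains) if pick_best else rng.choice(gains)
    return total / trials


def harmonic(n):
    """Expected maximum of n i.i.d. Exp(1) variables (the n-th harmonic number)."""
    return sum(1.0 / k for k in range(1, n + 1))


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        rand_gain = mean_selected_gain(n, pick_best=False)
        best_gain = mean_selected_gain(n, pick_best=True)
        print(f"N={n}: random={rand_gain:.2f}, best={best_gain:.2f}, H_N={harmonic(n):.2f}")
```

Under these assumptions the randomly selected relay's mean gain stays near 1 regardless of how many relays are available, while the best-channel gain grows with the harmonic number of the relay count. This matches the qualitative claim that random selection does not benefit from additional relays.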

1.3. Related Work

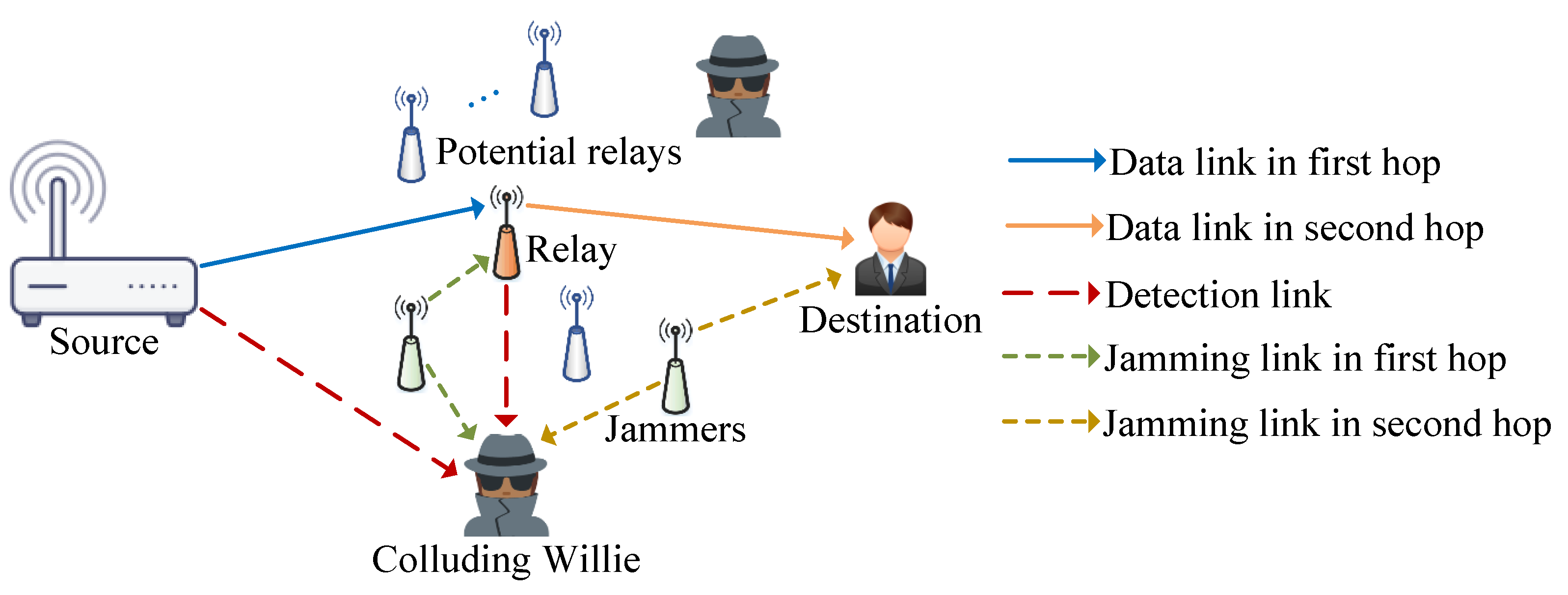

2. System Model

2.1. Signal Transmission Model

2.2. Channel Model

2.3. Relay Selection Scheme

2.4. Performance Metrics

3. Covert Performance Under RRS Scheme

3.1. Detection at Colluding Willies

3.2. Covert Rate Modeling

3.3. Covert Rate Optimization

4. Covert Performance Under RLRS Scheme

4.1. Covert Rate Optimization

4.2. Multiobjective Optimization Based on Markov Game

4.3. Beamforming Optimization and Relay Selection Based on MADDPG

Algorithm 1. MADDPG algorithm.
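The body of Algorithm 1 is not reproduced here, so the following is only a sketch of one ingredient implied by the simulation parameters: the soft target-network update, using the listed soft update rate of 0.001 for both actor and critic. The flat parameter lists and the function name `soft_update` are hypothetical stand-ins for real network weights.

```python
TAU = 0.001  # soft update rate for actor and critic targets (simulation parameters)


def soft_update(target_params, online_params, tau=TAU):
    """Return target parameters moved a fraction tau toward the online parameters."""
    if len(target_params) != len(online_params):
        raise ValueError("parameter lists must have the same length")
    return [(1.0 - tau) * t + tau * o for t, o in zip(target_params, online_params)]


if __name__ == "__main__":
    target = [0.0, 1.0]   # hypothetical target-network weights
    online = [1.0, -1.0]  # hypothetical online-network weights
    for _ in range(1000):
        target = soft_update(target, online)
    print(target)
```

With a rate this small the target networks track the online networks slowly: against fixed online weights, the remaining gap shrinks by a factor of (1 − 0.001) per update, so roughly 37% of the initial gap is left after 1000 updates.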

5. Simulation Results

5.1. Simulation Setup

5.2. Performance Analysis of the Proposed RLRS Scheme

6. Discussion and Future Work

6.1. Real-World Channel Complexity and Model Adaptability

6.2. Computational Complexity for Resource-Constrained Devices

6.3. Adaptability to Dynamic Adversarial Strategies

6.4. Resilience Against Insider Threats

6.5. Scalability to Multi-User Scenarios

6.6. Limitations of Simulation and Path to Physical Implementation

6.7. Computational Complexity Analysis

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Simulation Parameter | Value |
|---|---|
| Maximum transmit power for the source and jammers | 20 dBm |
| Noise power spectral density | −174 dBm/Hz |
| Channel bandwidth B | 1 MHz |
| Path loss exponent | 3.0 |
| RL discount factor | 0.99 |
| MADDPG mini-batch size | 128 |
| Actor network soft update rate | 0.001 |
| Critic network soft update rate | 0.001 |
| Covertness requirement threshold | 0.1 |
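As a quick check on how the table entries combine, the noise power over the channel bandwidth follows from the noise power spectral density and B, and the power budget can be converted to linear units. The sketch below assumes no receiver noise figure, which the table does not list; the function names are hypothetical.

```python
import math

NOISE_PSD_DBM_PER_HZ = -174.0  # noise power spectral density (table)
BANDWIDTH_HZ = 1e6             # channel bandwidth B = 1 MHz (table)
MAX_TX_POWER_DBM = 20.0        # maximum transmit power (table)


def noise_power_dbm(psd_dbm_per_hz, bandwidth_hz):
    """Noise power in dBm: PSD in dBm/Hz plus 10*log10 of the bandwidth in Hz."""
    return psd_dbm_per_hz + 10.0 * math.log10(bandwidth_hz)


def dbm_to_watts(power_dbm):
    """Convert a power level from dBm to watts."""
    return 10.0 ** ((power_dbm - 30.0) / 10.0)


if __name__ == "__main__":
    n_dbm = noise_power_dbm(NOISE_PSD_DBM_PER_HZ, BANDWIDTH_HZ)
    print(f"Noise power over B: {n_dbm:.1f} dBm")
    print(f"Max transmit power: {dbm_to_watts(MAX_TX_POWER_DBM) * 1e3:.0f} mW")
    # Transmit power relative to the noise floor, before any path loss or fading.
    print(f"Transmit-to-noise ratio: {MAX_TX_POWER_DBM - n_dbm:.1f} dB")
```

With these values the noise power is about −114 dBm (−174 dBm/Hz plus 60 dB for 1 MHz), and the 20 dBm power limit corresponds to 100 mW.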

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qian, J.; Li, H.; Zhu, P.; Zhou, A.; Liu, S.; Wang, F. Cooperative Jamming and Relay Selection for Covert Communications Based on Reinforcement Learning. Sensors 2025, 25, 6218. https://doi.org/10.3390/s25196218