Hardware, Algorithms, and Applications of the Neuromorphic Vision Sensor: A Review

Abstract

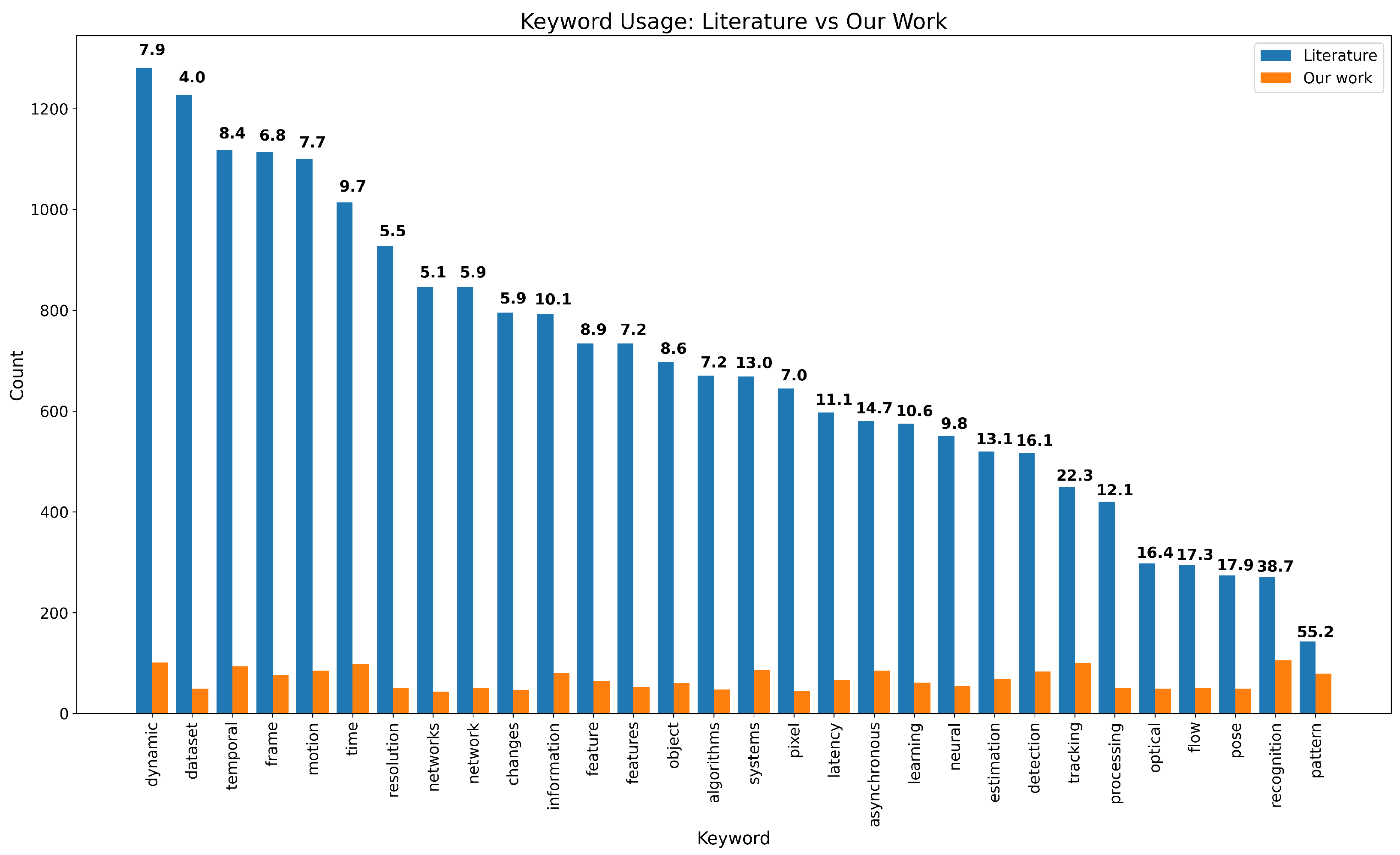

1. Introduction

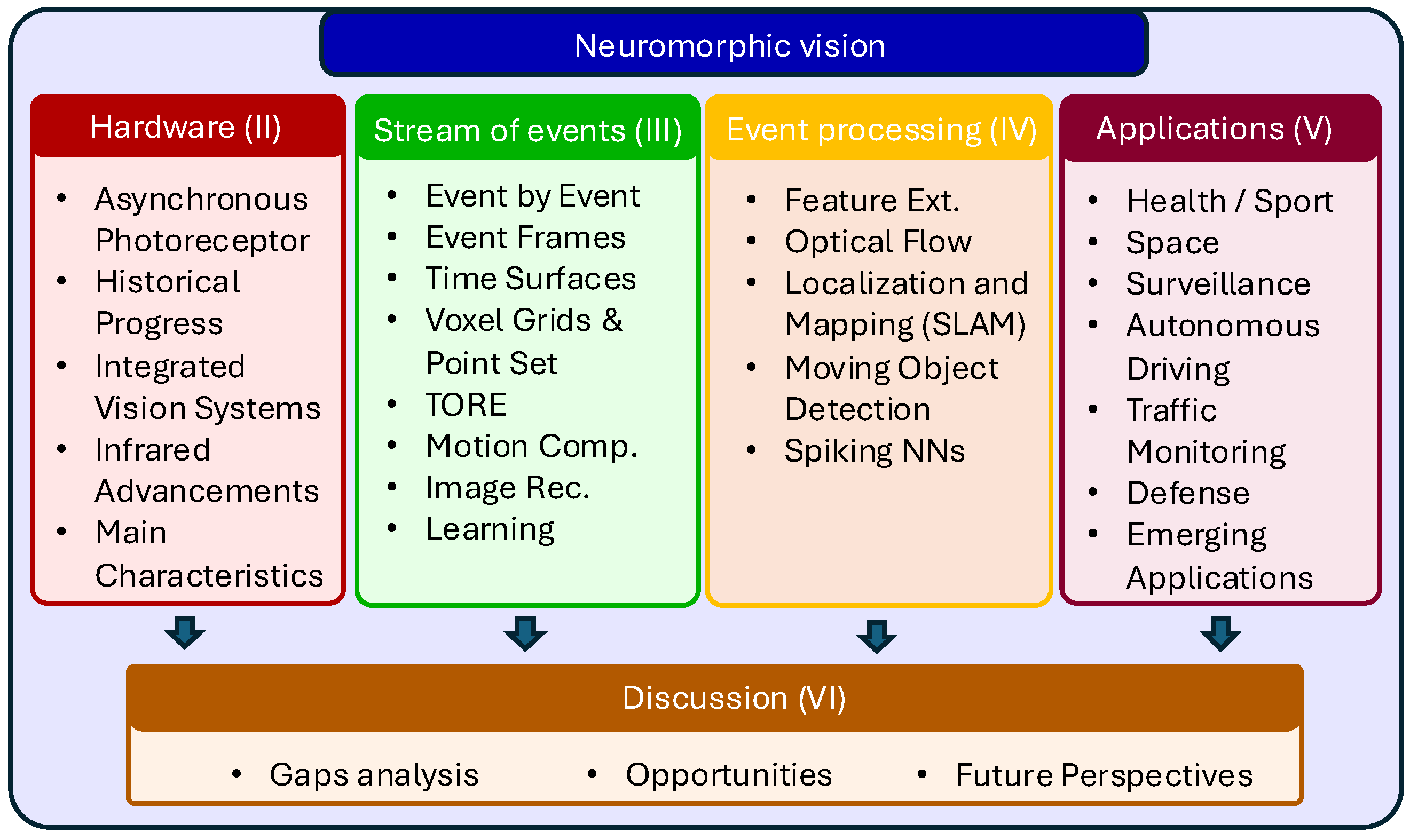

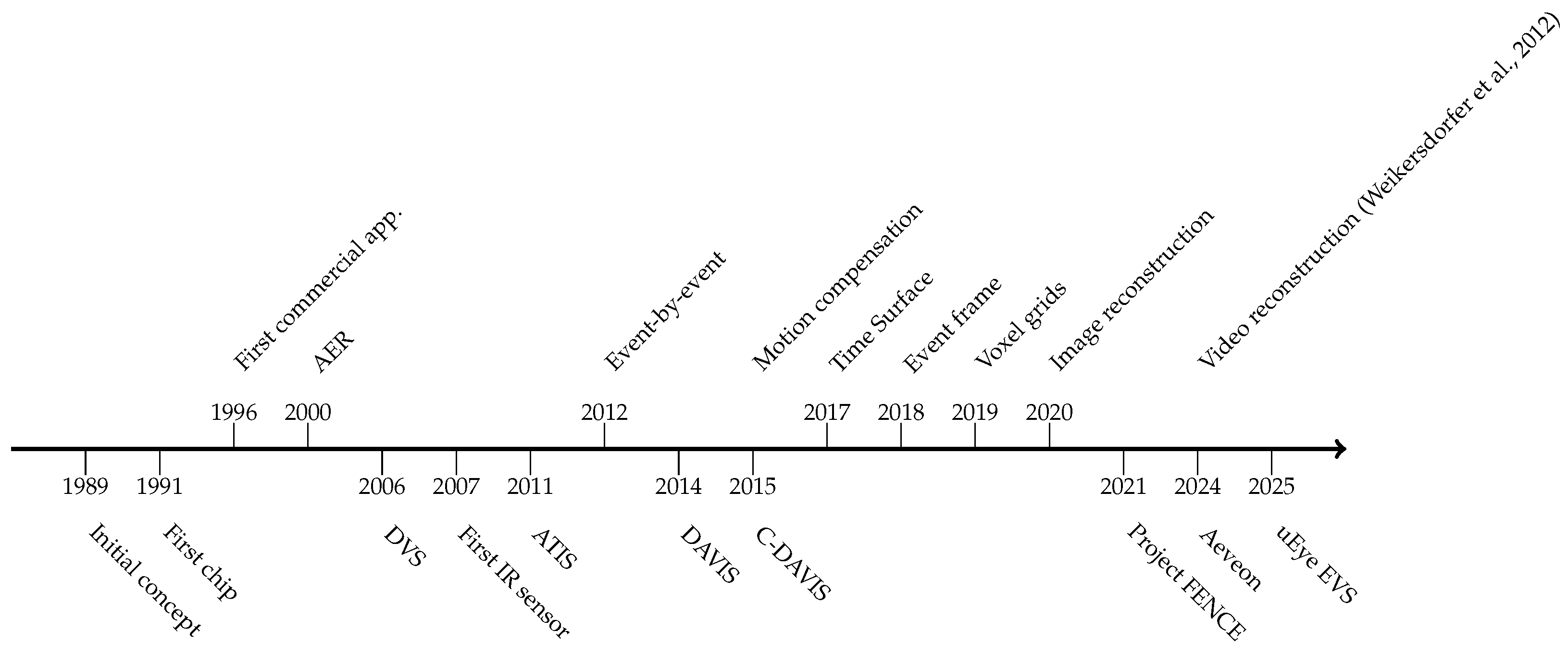

- Neuromorphic Cameras’ Hardware Evolution: We present a timeline of the evolution of neuromorphic vision sensor technology, revealing the chronological progress of the hardware, how it differs from standard vision systems, and why these differences matter (Section 2).

- Application Focus: We discuss key application case studies demonstrating how the unique properties of neuromorphic cameras impact real-world solutions (Section 5).

- Gaps, Limitations, and Future Opportunities: We analyze the key challenges hindering the adoption of neuromorphic vision sensors, from hardware constraints to algorithmic gaps and real-world application barriers, while highlighting the opportunities unlocked by this radical shift in visual sensing modality (Section 6).

2. The Neuromorphic Vision Sensor

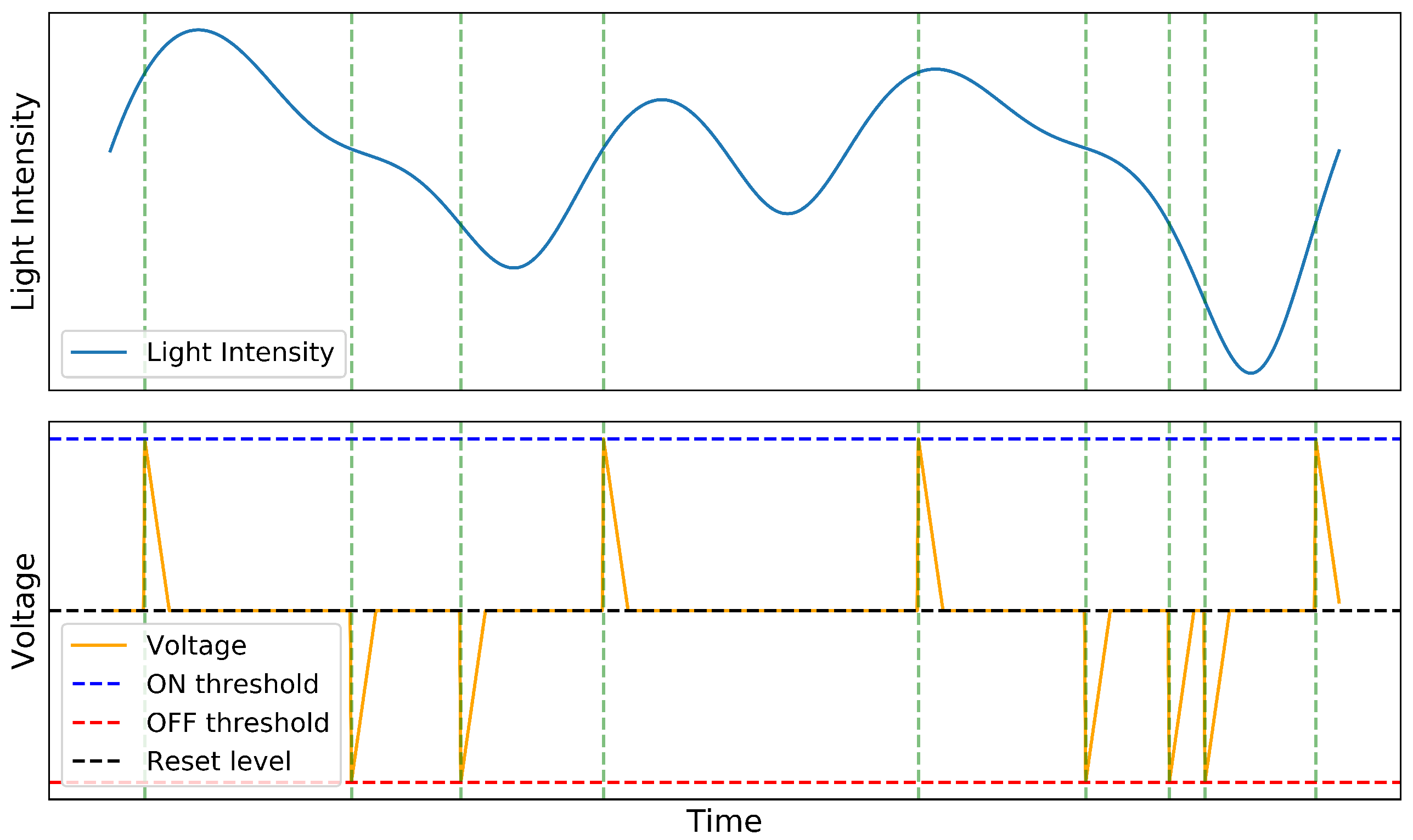

2.1. The Neuromorphic Camera’s Asynchronous Photoreceptor

2.2. Progress in Visible-Light Event-Camera Models

2.3. Toward Fully Integrated Neuromorphic Vision Systems

2.4. Development of the Infrared Neuromorphic Vision Sensor

2.5. Main Characteristics of Event Cameras

- High temporal resolution: NCs can capture fast-moving objects and resolve the evolution of their motion in greater detail, without interpolating between frames. Changes in light intensity are detected by analog circuits with a high-speed response; a digital readout with a 1 MHz clock then timestamps each event with microsecond resolution [3].

- Low latency: NCs respond quickly to changes in the environment. Unlike traditional cameras, NCs have no shutter, so there is no exposure time to wait for before brightness-change events are transmitted. As a result, latency typically ranges from tens to hundreds of microseconds under laboratory conditions to a few milliseconds under real conditions [3].

- High dynamic range: NCs can capture bright and dark scenes without losing detail. This is particularly beneficial under sudden changes in illumination, which can cause overexposure, and in low-light environments, where the scene may appear too dark. The property stems from the logarithmic response of the photoreceptors and from the independent operation of each pixel. Whereas the dynamic range of standard frame-based sensors is limited to about 60 dB because all pixels share the same integration time (dictated by the shutter), event cameras can exceed 120 dB.

- Low power: NCs consume much less energy than traditional cameras, which makes them suitable for low-power devices and applications. Each pixel is activated independently, only by the illumination changes it detects, and the analog circuitry is very efficient. As a result, an NC’s power demand can be as low as a few milliwatts.

- Sparsity: NCs provide data only when the scene changes, which reduces the amount of data that needs to be processed. As a result, event cameras may output up to 100× less data than traditional cameras of similar resolution. To fully exploit this technology, NCs must be coupled with chips that process the events with algorithms, such as spiking neural networks, designed to preserve both the low power budget and the intrinsically asynchronous nature of the events. SynSense, for example, develops neuromorphic chips such as the DYNAP-CNN for ultra-low-power applications (e.g., IoT devices); such a processor can be integrated on the same chip as the DVS pixel array, as demonstrated by the Speck system-on-chip.
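To make the figures quoted in this list concrete, the following minimal Python sketch converts a dynamic range in dB into an intensity ratio (assuming the 20·log10 convention commonly used for image sensors), derives the timestamp granularity of a 1 MHz readout clock, and simulates an idealized single pixel that emits an event whenever its log intensity moves by a fixed contrast threshold. The function names, the threshold of 0.2, and the sample format are illustrative assumptions and are not taken from any specific sensor or library.

```python
import math


def dynamic_range_ratio(db):
    """Convert a dynamic range in dB to a max/min intensity ratio.

    Assumes the 20*log10 convention commonly used for image sensors.
    """
    return 10 ** (db / 20)


def timestamp_resolution_us(clock_hz):
    """Timestamp granularity, in microseconds, of a readout clocked at clock_hz."""
    return 1e6 / clock_hz


def pixel_events(samples, threshold=0.2):
    """Idealized single-pixel event generation (hypothetical model).

    samples: list of (t_us, intensity) pairs with intensity > 0.
    An event (t_us, polarity) is emitted each time the log intensity has
    moved by at least `threshold` from the reference level, which is then
    shifted by one threshold step in the direction of the change.
    """
    events = []
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)
    for t_us, intensity in samples[1:]:
        delta = math.log(intensity) - ref
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t_us, polarity))
            ref += polarity * threshold
            delta = math.log(intensity) - ref
    return events


# Example usage with hypothetical intensity samples (time in microseconds).
samples = [(0, 100.0), (10, 100.0), (20, 150.0), (30, 150.0), (40, 90.0)]
events = pixel_events(samples)
print(dynamic_range_ratio(60), dynamic_range_ratio(120))
print(timestamp_resolution_us(1e6))
print(events)
```

Under these assumptions, 60 dB corresponds to an intensity ratio of 10^3 and 120 dB to 10^6. The simulated pixel emits nothing while its intensity stays constant, which is the origin of the sparsity discussed in this list.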

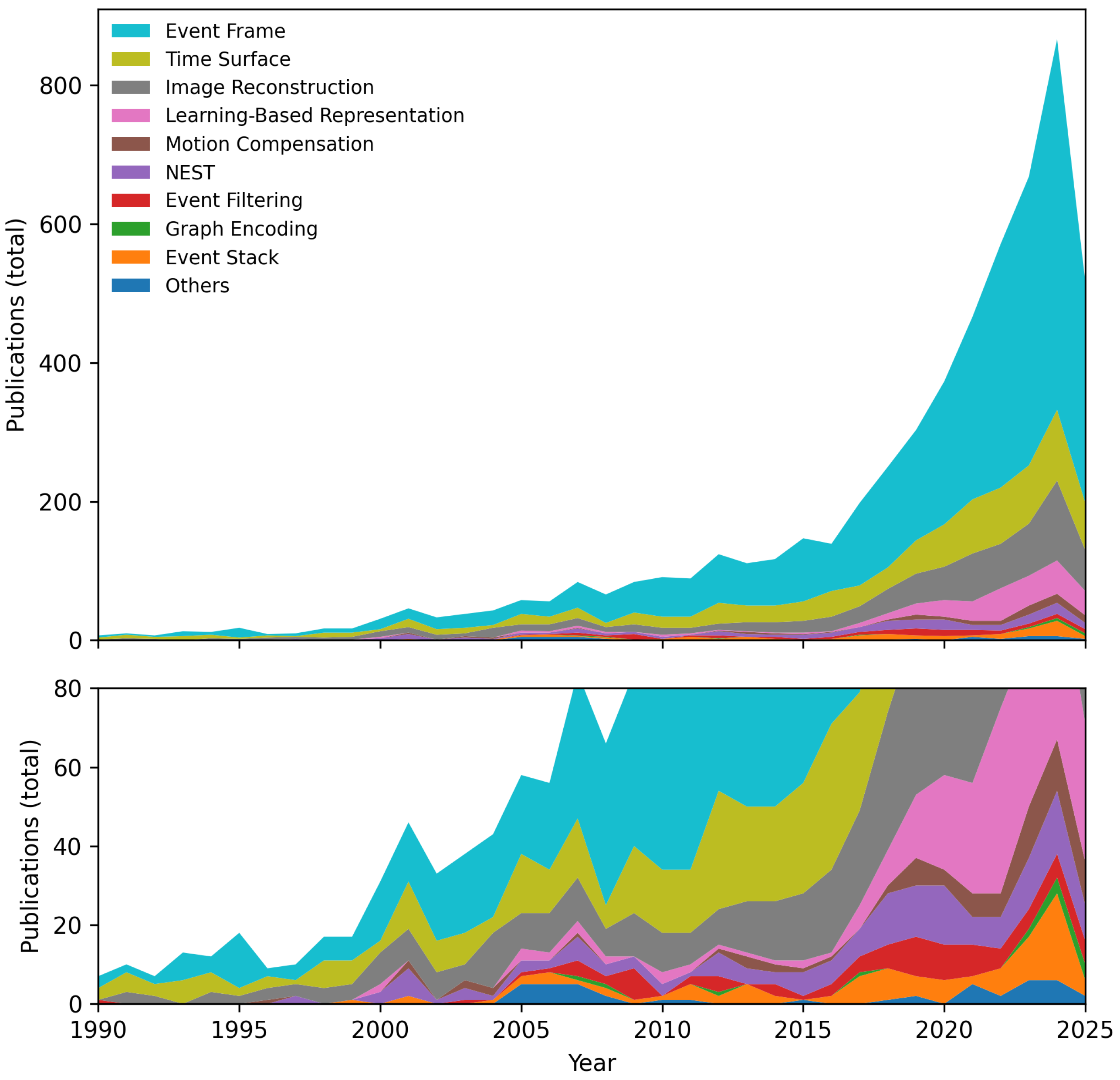

3. Working with Stream of Events

3.1. Event-by-Event Processing

3.2. Event Frame

3.3. Temporal Binary Representation

3.4. Time Surface and Surface of Active Events (TS/SAE/SITS)

3.5. Voxel Grids and Point Sets

3.6. TORE Volumes

3.7. Graph/Event-Cloud Encodings

3.8. Motion Compensation

3.9. Image Reconstruction

- Spike-based image reconstruction [85] accumulates spike data over time and exploits the spatial and temporal patterns of the spikes to recreate an image that closely approximates the original scene.

- Adaptive filtering [86] removes noise and artifacts from the data captured by event cameras. Because these cameras capture data asynchronously and at high temporal resolution, the stream often contains substantial noise that interferes with image reconstruction. Adaptive filtering techniques combine statistical analysis and machine learning algorithms to suppress this noise and improve the quality of the reconstructed image.

- Compressed sensing [87] reconstructs high-quality images from a relatively small number of measurements by exploiting the sparsity of the underlying signal.

- Deep learning [88] methods train a neural network on a large dataset of visual data and then use it to reconstruct images from the sparse data captured by NCs. These methods have shown promising results in improving the quality of the reconstructed images.
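As a point of reference for the methods listed above, the simplest form of reconstruction by accumulation can be sketched in a few lines of Python. This is a naive direct-integration baseline and not the method of any cited work: it assumes a known, constant contrast threshold, an unknown initial image replaced by a uniform value, and a hypothetical event layout `(x, y, t_us, polarity)`. Noise is integrated along with the signal, which is one reason the filtering and learning-based approaches are needed in practice.

```python
import math


def integrate_events(events, width, height, contrast=0.2, base_log_intensity=0.0):
    """Naive reconstruction by direct integration of events.

    events: iterable of (x, y, t_us, polarity) tuples, polarity in {+1, -1}.
    Each event shifts the log intensity of its pixel by polarity * contrast.
    Returns a height x width nested list of linear intensities.
    """
    log_img = [[base_log_intensity] * width for _ in range(height)]
    for x, y, _t_us, polarity in events:
        log_img[y][x] += polarity * contrast
    # Map the accumulated log intensities back to the linear domain.
    return [[math.exp(value) for value in row] for row in log_img]


# Example usage on a hypothetical 2 x 1 sensor.
demo_events = [(0, 0, 10, 1), (0, 0, 20, 1), (1, 0, 30, -1)]
image = integrate_events(demo_events, width=2, height=1)
print(image)
```

Pixels that never fire keep the assumed base intensity, so the result is only defined up to the unknown initial image.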

3.10. Learning-Based Representations

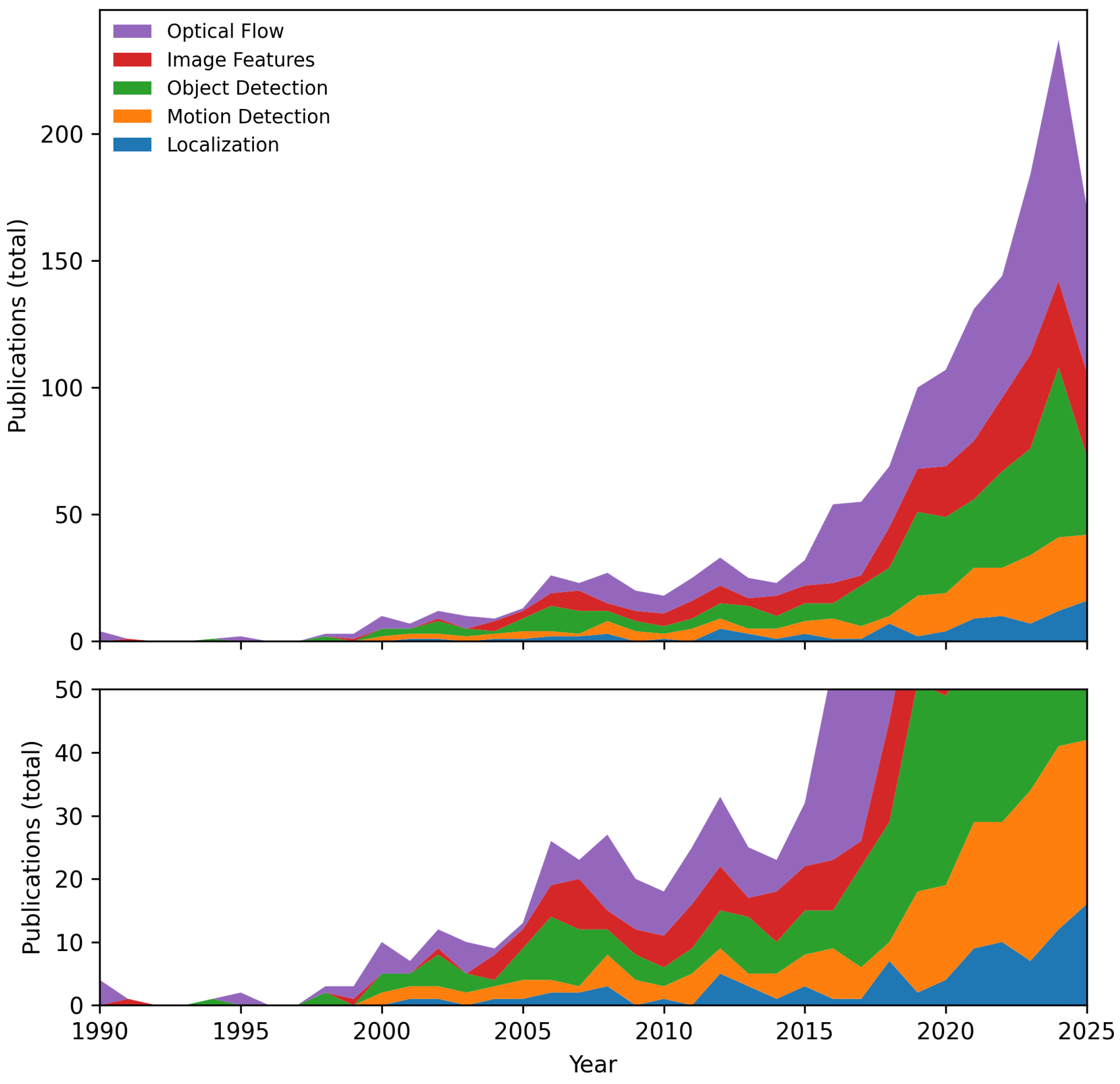

4. Event Stream Processing Algorithms

4.1. Extraction and Tracking of Image Features

4.2. Optical Flow

4.3. Camera Localization and Mapping

4.4. Moving Object Detection

4.5. Spiking Neural Networks for Event-Based Processing

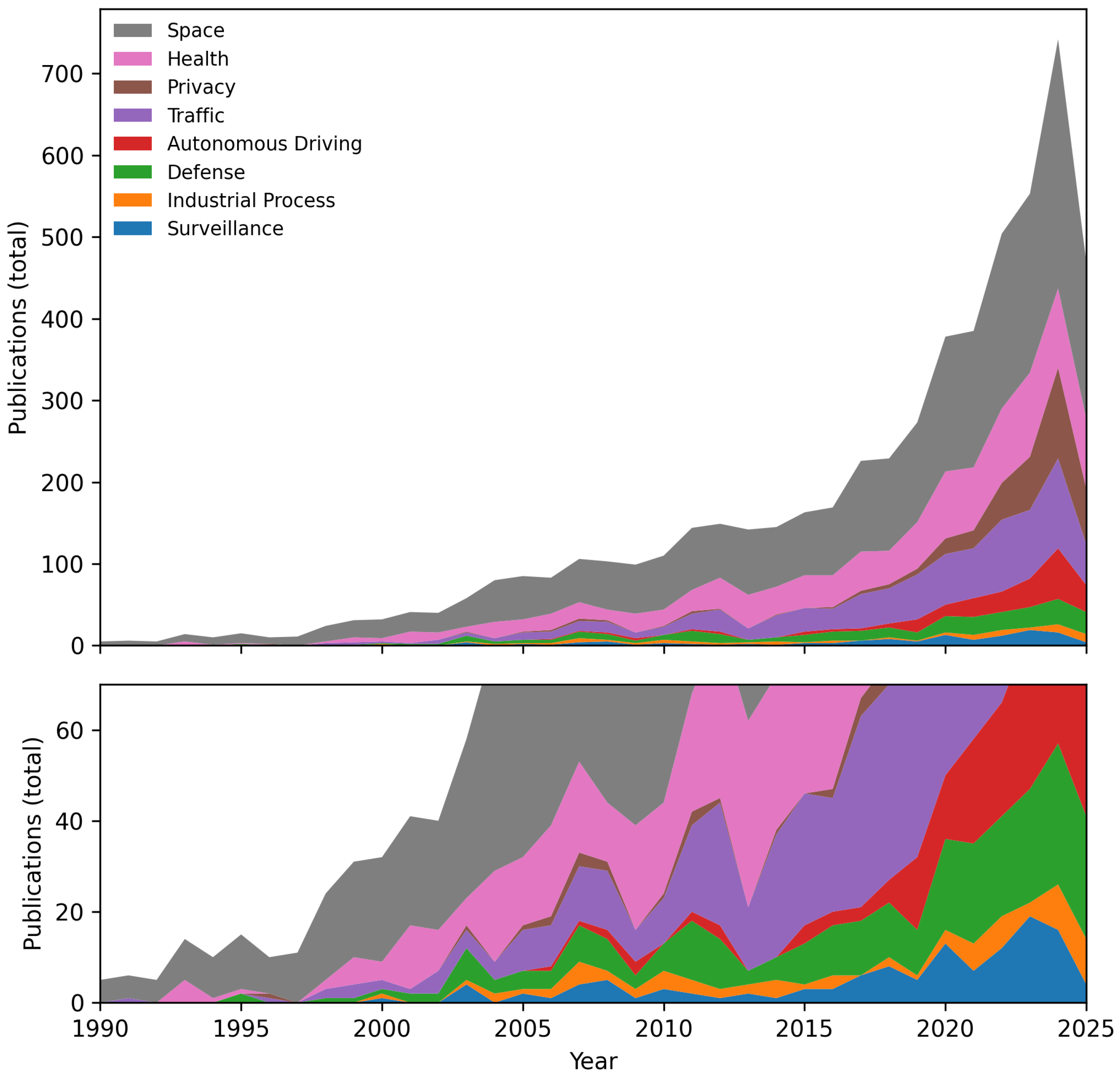

5. Applications

5.1. Health and Sport-Activity Monitoring

5.2. Industrial Process Monitoring and Agriculture

5.3. Space Sector

5.4. Surveillance and Search and Rescue

5.5. Autonomous Driving

5.6. Traffic Monitoring

5.7. Defense

5.8. Other Emerging Applications

6. Discussion

6.1. Gap Analysis

6.2. Opportunities and Future Directions

6.3. Perspectives on Neuromorphic Computing

7. Summary

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Posch, C.; Serrano-Gotarredona, T.; Linares-Barranco, B.; Delbruck, T. Retinomorphic Event-Based Vision Sensors: Bioinspired Cameras with Spiking Output. Proc. IEEE 2014, 102, 1470–1484.

- Ceccarelli, A.; Secci, F. RGB Cameras Failures and Their Effects in Autonomous Driving Applications. IEEE Trans. Dependable Secur. Comput. 2023, 20, 2731–2745.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

- Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128 × 128 120 dB 15 μs Latency Asynchronous Temporal Contrast Vision Sensor. IEEE J. Solid-State Circuits 2008, 43, 566–576.

- Boahen, K. Retinomorphic vision systems. In Proceedings of the Fifth International Conference on Microelectronics for Neural Networks, Lausanne, Switzerland, 12–14 February 1996. MNNFS-96.

- Kudithipudi, D.; Schuman, C.; Vineyard, C.M.; Pandit, T.; Merkel, C.; Kubendran, R.; Aimone, J.B.; Orchard, G.; Mayr, C.; Benosman, R.; et al. Neuromorphic computing at scale. Nature 2025, 637, 801–812.

- Ghosh, S.; Gallego, G. Event-Based Stereo Depth Estimation: A Survey. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE: Piscataway, NJ, USA, 2025; pp. 1–20.

- Adra, M.; Melcarne, S.; Mirabet-Herranz, N.; Dugelay, J.L. Event-Based Solutions for Human-centered Applications: A Comprehensive Review. arXiv 2025, arXiv:2502.18490.

- AliAkbarpour, H.; Moori, A.; Khorramdel, J.; Blasch, E.; Tahri, O. Emerging Trends and Applications of Neuromorphic Dynamic Vision Sensors: A Survey. IEEE Sens. Rev. 2024, 1, 14–63.

- Shariff, W.; Dilmaghani, M.S.; Kielty, P.; Moustafa, M.; Lemley, J.; Corcoran, P. Event Cameras in Automotive Sensing: A Review. IEEE Access 2024, 12, 51275–51306.

- Cazzato, D.; Bono, F. An Application-Driven Survey on Event-Based Neuromorphic Computer Vision. Information 2024, 15, 472.

- Chakravarthi, B.; Verma, A.A.; Daniilidis, K.; Fermuller, C.; Yang, Y. Recent event camera innovations: A survey. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2024; pp. 342–376.

- Tenzin, S.; Rassau, A.; Chai, D. Application of event cameras and neuromorphic computing to VSLAM: A survey. Biomimetics 2024, 9, 444.

- Becattini, F.; Berlincioni, L.; Cultrera, L.; Del Bimbo, A. Neuromorphic Face Analysis: A Survey. arXiv 2024, arXiv:2402.11631.

- Zheng, X.; Liu, Y.; Lu, Y.; Hua, T.; Pan, T.; Zhang, W.; Tao, D.; Wang, L. Deep learning for event-based vision: A comprehensive survey and benchmarks. arXiv 2023, arXiv:2302.08890.

- Huang, K.; Zhang, S.; Zhang, J.; Tao, D. Event-based simultaneous localization and mapping: A comprehensive survey. arXiv 2023, arXiv:2304.09793.

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2022, 2, 10–19.

- Shi, C.; Song, N.; Li, W.; Li, Y.; Wei, B.; Liu, H.; Jin, J. A Review of Event-Based Indoor Positioning and Navigation. In Proceedings of the WiP Twelfth International Conference on Indoor Positioning and Indoor Navigation, CEUR Workshop Proceedings, Beijing, China, 5–7 September 2022.

- Furmonas, J.; Liobe, J.; Barzdenas, V. Analytical Review of Event-Based Camera Depth Estimation Methods and Systems. Sensors 2022, 22, 1201.

- Cho, S.W.; Jo, C.; Kim, Y.H.; Park, S.K. Progress of Materials and Devices for Neuromorphic Vision Sensors. Nano-Micro Lett. 2022, 14, 203.

- Liao, F.; Zhou, F.; Chai, Y. Neuromorphic vision sensors: Principle, progress and perspectives. J. Semicond. 2021, 42, 013105.

- Steffen, L.; Reichard, D.; Weinland, J.; Kaiser, J.; Roennau, A.; Dillmann, R. Neuromorphic Stereo Vision: A Survey of Bio-Inspired Sensors and Algorithms. Front. Neurorobot. 2019, 13, 28.

- Lakshmi, A.; Chakraborty, A.; Thakur, C.S. Neuromorphic vision: From sensors to event-based algorithms. WIREs Data Min. Knowl. Discov. 2019, 9, e1310.

- Vanarse, A.; Osseiran, A.; Rassau, A. A Review of Current Neuromorphic Approaches for Vision, Auditory, and Olfactory Sensors. Front. Neurosci. 2016, 10, 115.

- Mead, C. Analog VLSI and Neural Systems; Addison-Wesley: Boston, MA, USA, 1989; p. 371.

- Mahowald, M.; Douglas, R. A silicon neuron. Nature 1991, 354, 515–518.

- Mahowald, M.A.; Mead, C. The Silicon Retina. Sci. Am. 1991, 264, 76–82.

- Mahowald, M. VLSI Analogs of Neuronal Visual Processing: A Synthesis of Form and Function. Ph.D. Thesis, California Institute of Technology, Pasadena, CA, USA, 1992.

- Arreguit, X.; Schaik, F.V.; Bauduin, F.; Bidiville, M.; Raeber, E. A CMOS motion detector system for pointing devices. In Proceedings of the 1996 IEEE International Solid-State Circuits Conference, Digest of Technical Papers, ISSCC, San Francisco, CA, USA, 10 February 1996; IEEE: Piscataway, NJ, USA, 1996.

- Boahen, K. Point-to-point connectivity between neuromorphic chips using address events. IEEE Trans. Circuits Syst. II Analog Digit. Signal Process. 2000, 47, 416–434.

- Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128 × 128 120 dB 30 mW asynchronous vision sensor that responds to relative intensity change. In Proceedings of the 2006 IEEE International Solid State Circuits Conference-Digest of Technical Papers, San Francisco, CA, USA, 6–9 February 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 2060–2069.

- Lazzaro, J.; Wawrzynek, J. A Multi-Sender Asynchronous Extension to the AER Protocol. In Proceedings of the Sixteenth Conference on Advanced Research in VLSI, Chapel Hill, NC, USA, 27–29 March 1995; pp. 158–169.

- Weikersdorfer, D.; Conradt, J. Event-Based Particle Filtering For Robot Self-localization. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 866–870.

- Posch, C.; Matolin, D.; Wohlgenannt, R. A QVGA 143 dB Dynamic Range Frame-Free PWM Image Sensor with Lossless Pixel-Level Video Compression and Time-Domain CDS. IEEE J. Solid-State Circuits 2011, 46, 259–275.

- Brandli, C.; Berner, R.; Yang, M.; Liu, S.C.; Delbruck, T. A 240 × 180 130 dB 3 μs Latency Global Shutter Spatiotemporal Vision Sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

- Li, C.; Brandli, C.; Berner, R.; Liu, H.; Yang, M.; Liu, S.C.; Delbruck, T. Design of an RGBW color VGA rolling and global shutter dynamic and active-pixel vision sensor. In Proceedings of the 2015 IEEE International Symposium on Circuits and Systems (ISCAS), Lisbon, Portugal, 24–27 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 718–721.

- Son, B.; Suh, Y.; Kim, S.; Jung, H.; Kim, J.S.; Shin, C.; Park, K.; Lee, K.; Park, J.; Woo, J.; et al. A 640 × 480 dynamic vision sensor with a 9 μm pixel and 300 Meps address-event representation. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017.

- Chen, S.; Tang, W.; Zhang, X.; Culurciello, E. A 64 × 64 Pixels UWB Wireless Temporal-Difference Digital Image Sensor. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2012, 20, 2232–2240.

- Chen, S.; Guo, M. Live Demonstration: CeleX-V: A 1M Pixel Multi-Mode Event-Based Sensor. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019.

- IDS Imaging Development Systems GmbH; Prophesee. IDS Launches New Industrial Camera Series Featuring Prophesee Event-Based Metavision® Sensing and Processing Technologies. 2025. Available online: https://www.prophesee.ai/2025/03/05/ids-launches-new-industrial-camera-series-featuring-prophesee-event-based-metavision-sensing-and-processing-technologies/ (accessed on 28 March 2025).

- Hands on with Android XR and Google’s AI-Powered Smart Glasses. 2024. Available online: https://www.wired.com/story/google-android-xr-demo-smart-glasses-mixed-reality-headset-project-moohan (accessed on 28 March 2025).

- SynSense. Speck™: Event-Driven Neuromorphic Vision SoC. 2022. Available online: https://www.synsense.ai/products/speck-2/ (accessed on 28 March 2025).

- Yao, M.; Richter, O.; Zhao, G.; Qiao, N.; Xing, Y.; Wang, D.; Hu, T.; Fang, W.; Demirci, T.; De Marchi, M.; et al. Spike-based dynamic computing with asynchronous sensing-computing neuromorphic chip. Nat. Commun. 2024, 15, 4464.

- Yang, Z.; Wang, T.; Lin, Y.; Chen, Y.; Zeng, H.; Pei, J.; Wang, J.; Liu, X.; Zhou, Y.; Zhang, J.; et al. A vision chip with complementary pathways for open-world sensing. Nature 2024, 629, 1027–1033.

- Boettiger, J.P. A Comparative Evaluation of the Detection and Tracking Capability Between Novel Event-Based and Conventional Frame-Based Sensors. Master’s Thesis, Air Force Institute of Technology, Wright-Patterson Air Force Base, OH, USA, 2020.

- Posch, C.; Matolin, D.; Wohlgenannt, R. A Two-Stage Capacitive-Feedback Differencing Amplifier for Temporal Contrast IR Sensors. In Proceedings of the 2007 14th IEEE International Conference on Electronics, Circuits and Systems, Marrakech, Morocco, 11–14 December 2007; IEEE: Piscataway, NJ, USA, 2007.

- Posch, C.; Matolin, D.; Wohlgenannt, R.; Maier, T.; Litzenberger, M. A Microbolometer Asynchronous Dynamic Vision Sensor for LWIR. IEEE Sens. J. 2009, 9, 654–664.

- Jakobson, C.; Fraenkel, R.; Ben-Ari, N.; Dobromislin, R.; Shiloah, N.; Argov, T.; Freiman, W.; Zohar, G.; Langof, L.; Ofer, O.; et al. Event-Based SWIR Sensor. In Proceedings of the Infrared Technology and Applications XLVIII, May 2022; Fulop, G.F., Kimata, M., Zheng, L., Andresen, B.F., Miller, J.L., Kim, Y.H., Eds.; SPIE: Bellingham, WA, USA, 2022.

- DARPA. DARPA Announces Research Teams to Develop Intelligent Event-Based Imagers. 2021. Available online: https://www.darpa.mil/news/2021/intelligent-event-based-imagers (accessed on 7 June 2023).

- Cazzato, D.; Renaldi, G.; Bono, F. A Systematic Parametric Campaign to Benchmark Event Cameras in Computer Vision Tasks. Electronics 2025, 14, 2603.

- Alevi, D.; Stimberg, M.; Sprekeler, H.; Obermayer, K.; Augustin, M. Brian2CUDA: Flexible and Efficient Simulation of Spiking Neural Network Models on GPUs. Front. Neuroinform. 2022, 16, 883700.

- Lee, J.H.; Delbruck, T.; Pfeiffer, M. Training Deep Spiking Neural Networks Using Backpropagation. Front. Neurosci. 2016, 10, 508.

- Innocenti, S.U.; Becattini, F.; Pernici, F.; Bimbo, A.D. Temporal Binary Representation for Event-Based Action Recognition. arXiv 2020, arXiv:2010.08946.

- Liu, M.; Delbrück, T. Adaptive Time-Slice Block-Matching Optical Flow Algorithm for Dynamic Vision Sensors. In Proceedings of the British Machine Vision Conference, BMVC, Newcastle, UK, 3–6 September 2018.

- Maqueda, A.I.; Loquercio, A.; Gallego, G.; Garcia, N.; Scaramuzza, D. Event-Based Vision Meets Deep Learning on Steering Prediction for Self-Driving Cars. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018.

- Lagorce, X.; Orchard, G.; Galluppi, F.; Shi, B.E.; Benosman, R.B. HOTS: A Hierarchy of Event-Based Time-Surfaces for Pattern Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1346–1359.

- Sironi, A.; Brambilla, M.; Bourdis, N.; Lagorce, X.; Benosman, R. HATS: Histograms of Averaged Time Surfaces for Robust Event-Based Object Classification. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018.

- Manderscheid, J.; Sironi, A.; Bourdis, N.; Migliore, D.; Lepetit, V. Speed Invariant Time Surface for Learning to Detect Corner Points with Event-Based Cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Zhu, A.Z.; Yuan, L.; Chaney, K.; Daniilidis, K. Unsupervised Event-Based Learning of Optical Flow, Depth, and Egomotion. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019.

- Cannici, M.; Ciccone, M.; Romanoni, A.; Matteucci, M. A differentiable recurrent surface for asynchronous event-based data. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 136–152.

- Gehrig, D.; Loquercio, A.; Derpanis, K.; Scaramuzza, D. End-to-End Learning of Representations for Asynchronous Event-Based Data. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5632–5642.

- Baldwin, R.W.; Liu, R.; Almatrafi, M.; Asari, V.; Hirakawa, K. Time-Ordered Recent Event (TORE) Volumes for Event Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 2519–2532.

- Deng, Y.; Chen, H.; Liu, H.; Li, Y. A Voxel Graph CNN for Object Classification with Event Cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1172–1181.

- Schaefer, S.; Gehrig, D.; Scaramuzza, D. AEGNN: Asynchronous Event-Based Graph Neural Networks. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12361–12371.

- Teng, M.; Zhou, C.; Lou, H.; Shi, B. NEST: Neural Event Stack for Event-Based Image Enhancement. In Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2022; pp. 660–676.

- Zubić, N.; Gehrig, D.; Gehrig, M.; Scaramuzza, D. From chaos comes order: Ordering event representations for object recognition and detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 12846–12856.

- Gu, D.; Li, J.; Zhu, L. Learning Adaptive Parameter Representation for Event-Based Video Reconstruction. IEEE Signal Process. Lett. 2024, 31, 1950–1954.

- Censi, A.; Scaramuzza, D. Low-latency event-based visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 703–710.

- Gallego, G.; Rebecq, H.; Scaramuzza, D. A Unifying Contrast Maximization Framework for Event Cameras, with Applications to Motion, Depth, and Optical Flow Estimation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3867–3876.

- Kim, H.; Handa, A.; Benosman, R.; Ieng, S.H.; Davison, A. Simultaneous Mosaicing and Tracking with an Event Camera. In Proceedings of the British Machine Vision Conference 2014, Nottingham, UK, 1–5 September 2014; pp. 26.1–26.12.

- Tumblin, J.; Agrawal, A.; Raskar, R. Why I Want a Gradient Camera. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 103–110.

- Scheerlinck, C.; Barnes, N.; Mahony, R. Continuous-time intensity estimation using event cameras. In Proceedings of the Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 308–324.

- Scheerlinck, C.; Barnes, N.; Mahony, R. Asynchronous spatial image convolutions for event cameras. IEEE Robot. Autom. Lett. 2019, 4, 816–822.

- Orchard, G.; Meyer, C.; Etienne-Cummings, R.; Posch, C.; Thakor, N.; Benosman, R. HFirst: A Temporal Approach to Object Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2028–2040.

- Granlund, G.H. In search of a general picture processing operator. Comput. Graph. Image Process. 1978, 8, 155–173.

- Shrestha, S.B.; Orchard, G. SLAYER: Spike Layer Error Reassignment in Time. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

- Davies, M.; Srinivasa, N.; Lin, T.H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99.

- Kogler, J.; Sulzbachner, C.; Kubinger, W. Bio-inspired Stereo Vision System with Silicon Retina Imagers. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; pp. 174–183.

- Benosman, R.; Clercq, C.; Lagorce, X.; Ieng, S.-H.; Bartolozzi, C. Event-Based Visual Flow. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 407–417.

- Gallego, G.; Gehrig, M.; Scaramuzza, D. Focus Is All You Need: Loss Functions for Event-Based Vision. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 12272–12281.

- Gehrig, D.; Rebecq, H.; Gallego, G.; Scaramuzza, D. EKLT: Asynchronous Photometric Feature Tracking Using Events and Frames. Int. J. Comput. Vis. 2020, 128, 601–618.

- Rebecq, H.; Horstschaefer, T.; Scaramuzza, D. Real-Time Visual-Inertial Odometry for Event Cameras Using Keyframe-Based Nonlinear Optimization. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017; p. 16.

- Zhu, A.Z.; Atanasov, N.; Daniilidis, K. Event-Based Visual Inertial Odometry. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5816–5824.

- Mitrokhin, A.; Fermüller, C.; Parameshwara, C.; Aloimonos, Y. Event-Based Moving Object Detection and Tracking. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–9.

- Zhu, L.; Li, J.; Wang, X.; Huang, T.; Tian, Y. NeuSpike-Net: High Speed Video Reconstruction via Bio-inspired Neuromorphic Cameras. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021.

- Wang, Z.W.; Duan, P.; Cossairt, O.; Katsaggelos, A.; Huang, T.; Shi, B. Joint Filtering of Intensity Images and Neuromorphic Events for High-Resolution Noise-Robust Imaging. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020.

- Duan, P.; Wang, Z.; Shi, B.; Cossairt, O.; Huang, T.; Katsaggelos, A. Guided Event Filtering: Synergy between Intensity Images and Neuromorphic Events for High Performance Imaging. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8261–8275.

- Han, J.; Zhou, C.; Duan, P.; Tang, Y.; Xu, C.; Xu, C.; Huang, T.; Shi, B. Neuromorphic Camera Guided High Dynamic Range Imaging. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020.

- Annamalai, L.; Chakraborty, A.; Thakur, C.S. EvAn: Neuromorphic Event-Based Sparse Anomaly Detection. Front. Neurosci. 2021, 15, 699003.

- Vemprala, S.; Mian, S.; Kapoor, A. Representation learning for event-based visuomotor policies. Adv. Neural Inf. Process. Syst. 2021, 34, 4712–4724.

- Guo, H.; Peng, S.; Yan, Y.; Mou, L.; Shen, Y.; Bao, H.; Zhou, X. Compact neural volumetric video representations with dynamic codebooks. Adv. Neural Inf. Process. Syst. 2023, 36, 75884–75895.

- Wang, Z.; Wang, Z.; Li, H.; Qin, L.; Jiang, R.; Ma, D.; Tang, H. EAS-SNN: End-to-End Adaptive Sampling and Representation for Event-based Detection with Recurrent Spiking Neural Networks. arXiv 2024, arXiv:2403.12574.

- Bavle, H.; Sanchez-Lopez, J.L.; Cimarelli, C.; Tourani, A.; Voos, H. From SLAM to Situational Awareness: Challenges and Survey. Sensors 2023, 23, 4849.

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

- Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. In Computer Vision – ECCV 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. Lect. Notes Comput. Sci. 2006, 3951, 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2564–2571.

- Vasco, V.; Glover, A.; Bartolozzi, C. Fast Event-Based Harris Corner Detection Exploiting the Advantages of Event-Driven Cameras. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE: Piscataway, NJ, USA, 2016.

- Mueggler, E.; Bartolozzi, C.; Scaramuzza, D. Fast Event-based Corner Detection. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017; p. 33.

- Clady, X.; Ieng, S.H.; Benosman, R. Asynchronous event-based corner detection and matching. Neural Netw. 2015, 66, 91–106.

- Alzugaray, I.; Chli, M. Asynchronous Corner Detection and Tracking for Event Cameras in Real Time. IEEE Robot. Autom. Lett. 2018, 3, 3177–3184.

- Alzugaray, I.; Chli, M. ACE: An Efficient Asynchronous Corner Tracker for Event Cameras. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; IEEE: Piscataway, NJ, USA, 2018.

- Li, R.; Shi, D.; Zhang, Y.; Li, K.; Li, R. FA-Harris: A Fast and Asynchronous Corner Detector for Event Cameras. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019.

- Li, R.; Shi, D.; Zhang, Y.; Li, R.; Wang, M. Asynchronous event feature generation and tracking based on gradient descriptor for event cameras. Int. J. Adv. Robot. Syst. 2021, 18, 1–13.

- Ramesh, B.; Yang, H.; Orchard, G.; Le Thi, N.A.; Zhang, S.; Xiang, C. DART: Distribution Aware Retinal Transform for Event-Based Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2767–2780.

- Huang, Z.; Sun, L.; Zhao, C.; Li, S.; Su, S. EventPoint: Self-Supervised Interest Point Detection and Description for Event-based Camera. arXiv 2022, arXiv:2109.00210.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018.

- Chiberre, P.; Perot, E.; Sironi, A.; Lepetit, V. Detecting Stable Keypoints from Events through Image Gradient Prediction. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition CVPR-94, Seattle, WA, USA, 21–23 June 1994; IEEE Computer Society Press: Piscataway, NJ, USA, 1994. [Google Scholar] [CrossRef]

- Besl, P.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Ni, Z.; Bolopion, A.; Agnus, J.; Benosman, R.; Regnier, S. Asynchronous Event-Based Visual Shape Tracking for Stable Haptic Feedback in Microrobotics. IEEE Trans. Robot. 2012, 28, 1081–1089. [Google Scholar] [CrossRef]

- Tedaldi, D.; Gallego, G.; Mueggler, E.; Scaramuzza, D. Feature detection and tracking with the dynamic and active-pixel vision sensor (DAVIS). In Proceedings of the 2016 Second International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 13–15 June 2016; pp. 1–7. [Google Scholar] [CrossRef]

- Kueng, B.; Mueggler, E.; Gallego, G.; Scaramuzza, D. Low-Latency Visual Odometry Using Event-Based Feature Tracks. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 16–23. [Google Scholar] [CrossRef]

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698. [Google Scholar] [CrossRef]

- Ni, Z.; Ieng, S.H.; Posch, C.; Régnier, S.; Benosman, R. Visual tracking using neuromorphic asynchronous event-based cameras. Neural Comput. 2015, 27, 925–953. [Google Scholar] [CrossRef]

- Lagorce, X.; Ieng, S.H.; Clady, X.; Pfeiffer, M.; Benosman, R.B. Spatiotemporal features for asynchronous event-based data. Front. Neurosci. 2015, 9, 46. [Google Scholar] [CrossRef]

- Glover, A.; Bartolozzi, C. Robust Visual Tracking with a Freely-Moving Event Camera. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3769–3776. [Google Scholar] [CrossRef]

- Glover, A.; Bartolozzi, C. Event-Driven Ball Detection and Gaze Fixation in Clutter. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE: Piscataway, NJ, USA, 2016. [Google Scholar] [CrossRef]

- Zhu, A.Z.; Atanasov, N.; Daniilidis, K. Event-Based Feature Tracking with Probabilistic Data Association. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4465–4470. [Google Scholar] [CrossRef]

- Alzugaray, I.; Chli, M. Haste: Multi-Hypothesis Asynchronous Speeded-Up Tracking of Events. In Proceedings of the 31st British Machine Vision Virtual Conference (BMVC 2020), Virtual Event, UK, 7–10 September 2020; ETH Zurich, Institute of Robotics and Intelligent Systems: Zurich, Switzerland, 2020; p. 744. [Google Scholar]

- Alzugaray, I.; Chli, M. Asynchronous Multi-Hypothesis Tracking of Features with Event Cameras. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Québec City, QC, Canada, 16–19 September 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Hu, S.; Kim, Y.; Lim, H.; Lee, A.J.; Myung, H. eCDT: Event Clustering for Simultaneous Feature Detection and Tracking. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Messikommer, N.; Fang, C.; Gehrig, M.; Scaramuzza, D. Data-driven Feature Tracking for Event Cameras. arXiv 2022, arXiv:2211.12826. [Google Scholar] [CrossRef]

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203. [Google Scholar] [CrossRef]

- Barron, J.L.; Fleet, D.J.; Beauchemin, S.S. Performance of optical flow techniques. Int. J. Comput. Vis. 1994, 12, 43–77. [Google Scholar] [CrossRef]

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981. [Google Scholar]

- Benosman, R.; Ieng, S.H.; Clercq, C.; Bartolozzi, C.; Srinivasan, M. Asynchronous frameless event-based optical flow. Neural Netw. 2012, 27, 32–37. [Google Scholar] [CrossRef] [PubMed]

- Brosch, T.; Tschechne, S.; Neumann, H. On event-based optical flow detection. Front. Neurosci. 2015, 9, 137. [Google Scholar] [CrossRef]

- Orchard, G.; Benosman, R.; Etienne-Cummings, R.; Thakor, N.V. A spiking neural network architecture for visual motion estimation. In Proceedings of the 2013 IEEE Biomedical Circuits and Systems Conference (BioCAS), Rotterdam, The Netherlands, 31 October–2 November 2013; IEEE: Piscataway, NJ, USA, 2013. [Google Scholar] [CrossRef]

- Valois, R.L.D.; Cottaris, N.P.; Mahon, L.E.; Elfar, S.D.; Wilson, J. Spatial and temporal receptive fields of geniculate and cortical cells and directional selectivity. Vis. Res. 2000, 40, 3685–3702. [Google Scholar] [CrossRef]

- Rueckauer, B.; Delbruck, T. Evaluation of Event-Based Algorithms for Optical Flow with Ground-Truth from Inertial Measurement Sensor. Front. Neurosci. 2016, 10, 176. [Google Scholar] [CrossRef]

- Paredes-Valles, F.; Scheper, K.Y.W.; de Croon, G.C.H.E. Unsupervised Learning of a Hierarchical Spiking Neural Network for Optical Flow Estimation: From Events to Global Motion Perception. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2051–2064. [Google Scholar] [CrossRef]

- Lee, C.; Kosta, A.K.; Zhu, A.Z.; Chaney, K.; Daniilidis, K.; Roy, K. Spike-FlowNet: Event-Based Optical Flow Estimation with Energy-Efficient Hybrid Neural Networks. In Computer Vision—ECCV 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 366–382. [Google Scholar] [CrossRef]

- Paredes-Valles, F.; de Croon, G.C.H.E. Back to Event Basics: Self-Supervised Learning of Image Reconstruction for Event Cameras via Photometric Constancy. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Zhang, Y.; Lv, H.; Zhao, Y.; Feng, Y.; Liu, H.; Bi, G. Event-Based Optical Flow Estimation with Spatio-Temporal Backpropagation Trained Spiking Neural Network. Micromachines 2023, 14, 203. [Google Scholar] [CrossRef]

- Parameshwara, C.M.; Li, S.; Fermuller, C.; Sanket, N.J.; Evanusa, M.S.; Aloimonos, Y. SpikeMS: Deep Spiking Neural Network for Motion Segmentation. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Bardow, P.; Davison, A.J.; Leutenegger, S. Simultaneous Optical Flow and Intensity Estimation from an Event Camera. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016. [Google Scholar] [CrossRef]

- Liu, M.; Delbruck, T. Block-matching optical flow for dynamic vision sensors: Algorithm and FPGA implementation. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar] [CrossRef]

- Liu, M.; Delbruck, T. EDFLOW: Event Driven Optical Flow Camera with Keypoint Detection and Adaptive Block Matching. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5776–5789. [Google Scholar] [CrossRef]

- Zhu, A.; Yuan, L.; Chaney, K.; Daniilidis, K. EV-FlowNet: Self-Supervised Optical Flow Estimation for Event-based Cameras. In Proceedings of the Robotics: Science and Systems XIV, Robotics: Science and Systems Foundation, Pittsburgh, PA, USA, 26 June 2018. [Google Scholar] [CrossRef]

- Ye, C.; Zhu, A.Z.; Daniilidis, K. Unsupervised Learning of Dense Optical Flow, Depth and Egomotion with Event-Based Sensors. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 574–581. [Google Scholar]

- Sun, H.; Dao, M.Q.; Fremont, V. 3D-FlowNet: Event-based Optical Flow Estimation with 3D Representation. arXiv 2022, arXiv:2201.12265. [Google Scholar] [CrossRef]

- Gehrig, M.; Millhausler, M.; Gehrig, D.; Scaramuzza, D. E-RAFT: Dense Optical Flow from Event Cameras. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Ding, Z.; Zhao, R.; Zhang, J.; Gao, T.; Xiong, R.; Yu, Z.; Huang, T. Spatio-Temporal Recurrent Networks for Event-Based Optical Flow Estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 525–533. [Google Scholar]

- Tian, Y.; Andrade-Cetto, J. Event Transformer FlowNet for optical flow estimation. In Proceedings of the 33rd British Machine Vision Conference 2022, BMVC 2022, London, UK, 21–24 November 2022; BMVA Press: Durham, UK, 2022. [Google Scholar]

- Li, Y.; Huang, Z.; Chen, S.; Shi, X.; Li, H.; Bao, H.; Cui, Z.; Zhang, G. Blinkflow: A dataset to push the limits of event-based optical flow estimation. arXiv 2023, arXiv:2303.07716. [Google Scholar]

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16. [Google Scholar] [CrossRef]

- Tsintotas, K.A.; Bampis, L.; Gasteratos, A. The Revisiting Problem in Simultaneous Localization and Mapping: A Survey on Visual Loop Closure Detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19929–19953. [Google Scholar] [CrossRef]

- Kabiri, M.; Cimarelli, C.; Bavle, H.; Sanchez-Lopez, J.L.; Voos, H. Graph-Based vs. Error State Kalman Filter-Based Fusion of 5G and Inertial Data for MAV Indoor Pose Estimation. J. Intell. Robot. Syst. 2024, 110, 87. [Google Scholar] [CrossRef]

- Gallego, G.; Scaramuzza, D. Accurate Angular Velocity Estimation with an Event Camera. IEEE Robot. Autom. Lett. 2017, 2, 632–639. [Google Scholar] [CrossRef]

- Reinbacher, C.; Munda, G.; Pock, T. Real-time panoramic tracking for event cameras. In Proceedings of the 2017 IEEE International Conference on Computational Photography (ICCP), Stanford, CA, USA, 12–14 May 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar] [CrossRef]

- Rebecq, H.; Horstschaefer, T.; Gallego, G.; Scaramuzza, D. EVO: A Geometric Approach to Event-Based 6-DOF Parallel Tracking and Mapping in Real Time. IEEE Robot. Autom. Lett. 2017, 2, 593–600. [Google Scholar] [CrossRef]

- Weikersdorfer, D.; Hoffmann, R.; Conradt, J. Simultaneous Localization and Mapping for Event-Based Vision Systems. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; pp. 133–142. [Google Scholar] [CrossRef]

- Weikersdorfer, D.; Adrian, D.B.; Cremers, D.; Conradt, J. Event-Based 3D SLAM with a Depth-Augmented Dynamic Vision Sensor. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; IEEE: Piscataway, NJ, USA, 2014. [Google Scholar] [CrossRef]

- Kim, H.; Leutenegger, S.; Davison, A.J. Real-Time 3D Reconstruction and 6-DoF Tracking with an Event Camera. In Computer Vision – ECCV 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 349–364. [Google Scholar] [CrossRef]

- Gallego, G.; Lund, J.E.; Mueggler, E.; Rebecq, H.; Delbruck, T.; Scaramuzza, D. Event-Based, 6-DOF Camera Tracking from Photometric Depth Maps. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2402–2412. [Google Scholar] [CrossRef]

- Rebecq, H.; Gallego, G.; Mueggler, E.; Scaramuzza, D. EMVS: Event-Based Multi-View Stereo—3D Reconstruction with an Event Camera in Real-Time. Int. J. Comput. Vis. 2018, 126, 1394–1414. [Google Scholar] [CrossRef]

- Vidal, A.R.; Rebecq, H.; Horstschaefer, T.; Scaramuzza, D. Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High-Speed Scenarios. IEEE Robot. Autom. Lett. 2018, 3, 994–1001. [Google Scholar] [CrossRef]

- Chen, P.; Guan, W.; Lu, P. Esvio: Event-Based Stereo Visual Inertial Odometry. IEEE Robot. Autom. Lett. 2023, 8, 3661–3668. [Google Scholar] [CrossRef]

- Zhou, Y.; Gallego, G.; Shen, S. Event-Based Stereo Visual Odometry. IEEE Trans. Robot. 2021, 37, 1433–1450. [Google Scholar] [CrossRef]

- Liu, Z.; Shi, D.; Li, R.; Yang, S. ESVIO: Event-Based Stereo Visual-Inertial Odometry. Sensors 2023, 23, 1998. [Google Scholar] [CrossRef] [PubMed]

- Zuo, Y.F.; Yang, J.; Chen, J.; Wang, X.; Wang, Y.; Kneip, L. DEVO: Depth-Event Camera Visual Odometry in Challenging Conditions. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Hidalgo-Carrio, J.; Gehrig, D.; Scaramuzza, D. Learning Monocular Dense Depth from Events. In Proceedings of the 2020 International Conference on 3D Vision (3DV), Fukuoka, Japan, 25–28 November 2020; IEEE: Piscataway, NJ, USA, 2020. [Google Scholar] [CrossRef]

- Zhu, J.; Gehrig, D.; Scaramuzza, D. Self-Supervised Event-based Monocular Depth Estimation using Cross-Modal Consistency. arXiv 2024, arXiv:2401.07218. [Google Scholar]

- Jin, Y.; Yu, L.; Li, G.; Fei, S. A 6-DOFs event-based camera relocalization system by CNN-LSTM and image denoising. Expert Syst. Appl. 2021, 170, 114535. [Google Scholar] [CrossRef]

- Hu, L.; Song, X.; Wang, Y.; Liang, P. 6-DoF Pose Relocalization for Event Cameras with Entropy Frame and Attention Networks. In Proceedings of the ACM Symposium on Virtual Reality, Visualization and Interaction (VRCAI), Guangzhou, China, 27–29 December 2022. [Google Scholar]

- Ren, H.; Zhou, S.; Taylor, C.J.; Daniilidis, K. A Simple and Effective Point-based Network for Event Camera 6-DOFs Pose Relocalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024. [Google Scholar]

- Hagenaars, J.; van Gemert, J.C.; Gehrig, D.; Scaramuzza, D. Self-Supervised Learning of Event-Based Optical Flow with Spiking Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021. [Google Scholar]

- Zhu, A.Z.; Thakur, D.; Ozaslan, T.; Pfrommer, B.; Kumar, V.; Daniilidis, K. The Multivehicle Stereo Event Camera Dataset: An Event Camera Dataset for 3D Perception. IEEE Robot. Autom. Lett. 2018, 3, 2032–2039. [Google Scholar] [CrossRef]

- Gehrig, M.; Aarents, W.; Gehrig, D.; Scaramuzza, D. DSEC: A Stereo Event Camera Dataset for Driving Scenarios. IEEE Robot. Autom. Lett. 2021, 6, 4947–4954. [Google Scholar] [CrossRef]

- Mueggler, E.; Rebecq, H.; Gallego, G.; Delbruck, T.; Scaramuzza, D. The Event-Camera Dataset and Simulator: Event-based Data for Pose Estimation, Visual Odometry, and SLAM. Int. J. Robot. Res. 2017, 36, 142–149. [Google Scholar] [CrossRef]

- Li, Z.; Chen, Y.; Xie, Y.; Liu, Y.; Yu, L.; Li, J.; Tang, J. M3ED: A Multi-Modal Multi-Motion Event Detection Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 13624–13634. [Google Scholar]

- Litzenberger, M.; Posch, C.; Bauer, D.; Belbachir, A.; Schon, P.; Kohn, B.; Garn, H. Embedded Vision System for Real-Time Object Tracking using an Asynchronous Transient Vision Sensor. In Proceedings of the 2006 IEEE 12th Digital Signal Processing Workshop & 4th IEEE Signal Processing Education Workshop, Napa, CA, USA, 24–27 September 2006; IEEE: Piscataway, NJ, USA, 2006. [Google Scholar] [CrossRef]

- Vasco, V.; Glover, A.; Mueggler, E.; Scaramuzza, D.; Natale, L.; Bartolozzi, C. Independent motion detection with event-driven cameras. In Proceedings of the 2017 18th International Conference on Advanced Robotics (ICAR), Hong Kong, China, 10–12 July 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar] [CrossRef]

- Stoffregen, T.; Gallego, G.; Drummond, T.; Kleeman, L.; Scaramuzza, D. Event-Based Motion Segmentation by Motion Compensation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Cannici, M.; Ciccone, M.; Romanoni, A.; Matteucci, M. Asynchronous Convolutional Networks for Object Detection in Neuromorphic Cameras. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1656–1665. [Google Scholar] [CrossRef]

- Liang, Z.; Cao, H.; Yang, C.; Zhang, Z.; Chen, G. Global-local Feature Aggregation for Event-based Object Detection on EventKITTI. In Proceedings of the 2022 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Bedford, UK, 20–22 September 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Mitrokhin, A.; Ye, C.; Fermüller, C.; Aloimonos, Y.; Delbruck, T. EV-IMO: Motion Segmentation Dataset and Learning Pipeline for Event Cameras. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 6105–6112. [Google Scholar] [CrossRef]

- Burner, L.; Mitrokhin, A.; Fermüller, C.; Aloimonos, Y. EVIMO2: An Event Camera Dataset for Motion Segmentation, Optical Flow, Structure from Motion, and Visual Inertial Odometry in Indoor Scenes with Monocular or Stereo Algorithms. arXiv 2022, arXiv:2205.03467. [Google Scholar] [CrossRef]

- Gehrig, M.; Scaramuzza, D. Recurrent Vision Transformers for Object Detection with Event Cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023. [Google Scholar]

- Zhou, Z.; Wu, Z.; Paudel, D.P.; Boutteau, R.; Yang, F.; Van Gool, L.; Timofte, R.; Ginhac, D. Event-free moving object segmentation from moving ego vehicle. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 8960–8965. [Google Scholar]

- Georgoulis, S.; Ren, W.; Bochicchio, A.; Eckert, D.; Li, Y.; Gawel, A. Out of the room: Generalizing event-based dynamic motion segmentation for complex scenes. In Proceedings of the 2024 International Conference on 3D Vision (3DV), Davos, Switzerland, 18–21 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 442–452. [Google Scholar]

- Wang, Z.; Guo, J.; Daniilidis, K. Un-evmoseg: Unsupervised event-based independent motion segmentation. arXiv 2023, arXiv:2312.00114. [Google Scholar]

- Wu, Z.; Gehrig, M.; Lyu, Q.; Liu, X.; Gilitschenski, I. Leod: Label-efficient object detection for event cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16933–16943. [Google Scholar]

- Snyder, S.; Thompson, H.; Kaiser, M.A.A.; Schwartz, G.; Jaiswal, A.; Parsa, M. Object motion sensitivity: A bio-inspired solution to the ego-motion problem for event-based cameras. arXiv 2023, arXiv:2303.14114. [Google Scholar]

- Clerico, V.; Snyder, S.; Lohia, A.; Abdullah-Al Kaiser, M.; Schwartz, G.; Jaiswal, A.; Parsa, M. Retina-Inspired Object Motion Segmentation for Event-Cameras. In Proceedings of the 2025 Neuro Inspired Computational Elements (NICE), Heidelberg, Germany, 24–26 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6. [Google Scholar]

- Zhou, H.; Shi, Z.; Dong, H.; Peng, S.; Chang, Y.; Yan, L. JSTR: Joint spatio-temporal reasoning for event-based moving object detection. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 10650–10656. [Google Scholar]

- Zhou, Z.; Wu, Z.; Boutteau, R.; Yang, F.; Demonceaux, C.; Ginhac, D. RGB-Event Fusion for Moving Object Detection in Autonomous Driving. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 7808–7815. [Google Scholar] [CrossRef]

- Lu, D.; Kong, L.; Lee, G.H.; Chane, C.S.; Ooi, W.T. FlexEvent: Towards Flexible Event-Frame Object Detection at Varying Operational Frequencies. arXiv 2024, arXiv:2412.06708. [Google Scholar]

- Fang, W.; Yu, Z.; Chen, Y.; Huang, T.; Masquelier, T.; Tian, Y. Deep residual learning in spiking neural networks. Adv. Neural Inf. Process. Syst. 2021, 34, 21056–21069. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar] [CrossRef]

- Yao, M.; Qiu, X.; Hu, T.; Hu, J.; Chou, Y.; Tian, K.; Liao, J.; Leng, L.; Xu, B.; Li, G. Scaling spike-driven transformer with efficient spike firing approximation training. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2973–2990. [Google Scholar] [CrossRef]

- Guo, Y.; Liu, X.; Chen, Y.; Peng, W.; Zhang, Y.; Ma, Z. Spiking Transformer: Introducing Accurate Addition-Only Spiking Self-Attention for Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Denver, CO, USA, 3–7 June 2025; pp. 24398–24408. [Google Scholar]

- Xu, Q.; Deng, J.; Shen, J.; Chen, B.; Tang, H.; Pan, G. Hybrid Spiking Vision Transformer for Object Detection with Event Cameras. In Proceedings of the International Conference on Machine Learning (ICML), Vancouver, BC, Canada, 13–19 July 2025. [Google Scholar]

- Lee, D.; Li, Y.; Kim, Y.; Xiao, S.; Panda, P. Spiking Transformer with Spatial-Temporal Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 11–15 June 2025; pp. 13948–13958. [Google Scholar]

- Zhao, L.; Huang, Z.; Ding, J.; Yu, Z. TTFSFormer: A TTFS-based Lossless Conversion of Spiking Transformer. In Proceedings of the International Conference on Machine Learning (ICML), Vancouver, BC, Canada, 13–19 July 2025. [Google Scholar]

- Lei, Z.; Yao, M.; Hu, J.; Luo, X.; Lu, Y.; Xu, B.; Li, G. Spike2former: Efficient Spiking Transformer for High-performance Image Segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 1364–1372. [Google Scholar]

- Wang, Y.; Zhang, Y.; Xiong, R.; Zhao, J.; Zhang, J.; Fan, X.; Huang, T. Spk2SRImgNet: Super-Resolve Dynamic Scene from Spike Stream via Motion Aligned Collaborative Filtering. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 11416–11426. [Google Scholar]

- Qian, Y.; Ye, S.; Wang, C.; Cai, X.; Qian, J.; Wu, J. UCF-Crime-DVS: A Novel Event-Based Dataset for Video Anomaly Detection with Spiking Neural Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 6577–6585. [Google Scholar]

- Amir, A.; Taba, B.; Berg, D.; Melano, T.; McKinstry, J.; di Nolfo, C.; Nayak, T.; Andreopoulos, A.; Garreau, G.; Mendoza, M.; et al. A Low Power, Fully Event-Based Gesture Recognition System. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Orchard, G.; Jayawant, A.; Cohen, G.K.; Thakor, N. Converting static image datasets to spiking neuromorphic datasets using saccades. Front. Neurosci. 2015, 9, 437. [Google Scholar] [CrossRef]

- Li, H.; Liu, H.; Ji, X.; Li, G.; Shi, L. Cifar10-dvs: An event-stream dataset for object classification. Front. Neurosci. 2017, 11, 244131. [Google Scholar] [CrossRef]

- Sun, S.; Cioffi, G.; de Visser, C.; Scaramuzza, D. Autonomous Quadrotor Flight Despite Rotor Failure with Onboard Vision Sensors: Frames vs. Events. IEEE Robot. Autom. Lett. 2021, 6, 580–587. [Google Scholar] [CrossRef]

- Chen, G.; Cao, H.; Conradt, J.; Tang, H.; Rohrbein, F.; Knoll, A. Event-based neuromorphic vision for autonomous driving: A paradigm shift for bio-inspired visual sensing and perception. IEEE Signal Process. Mag. 2020, 37, 34–49. [Google Scholar] [CrossRef]

- Litzenberger, M.; Kohn, B.; Belbachir, A.; Donath, N.; Gritsch, G.; Garn, H.; Posch, C.; Schraml, S. Estimation of Vehicle Speed Based on Asynchronous Data from a Silicon Retina Optical Sensor. In Proceedings of the 2006 IEEE Intelligent Transportation Systems Conference, Toronto, ON, Canada, 17–20 September 2006; IEEE: Piscataway, NJ, USA, 2006. [Google Scholar] [CrossRef]

- Dietsche, A.; Cioffi, G.; Hidalgo-Carrio, J.; Scaramuzza, D. PowerLine Tracking with Event Cameras. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021. [Google Scholar]

- Chin, T.J.; Bagchi, S.; Eriksson, A.; van Schaik, A. Star Tracking Using an Event Camera. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Cohen, G.; Afshar, S.; Morreale, B.; Bessell, T.; Wabnitz, A.; Rutten, M.; van Schaik, A. Event-based Sensing for Space Situational Awareness. J. Astronaut. Sci. 2019, 66, 125–141. [Google Scholar] [CrossRef]

- Afshar, S.; Nicholson, A.P.; van Schaik, A.; Cohen, G. Event-Based Object Detection and Tracking for Space Situational Awareness. IEEE Sensors J. 2020, 20, 15117–15132. [Google Scholar] [CrossRef]

- Angelopoulos, A.N.; Martel, J.N.; Kohli, A.P.; Conradt, J.; Wetzstein, G. Event-Based Near-Eye Gaze Tracking Beyond 10,000 Hz. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2577–2586. [Google Scholar] [CrossRef]

- Colonnier, F.; Della Vedova, L.; Orchard, G. ESPEE: Event-Based Sensor Pose Estimation Using an Extended Kalman Filter. Sensors 2021, 21, 7840. [Google Scholar] [CrossRef]

- Zou, S.; Guo, C.; Zuo, X.; Wang, S.; Wang, P.; Hu, X.; Chen, S.; Gong, M.; Cheng, L. EventHPE: Event-based 3D Human Pose and Shape Estimation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10976–10985. [Google Scholar] [CrossRef]

- Scarpellini, G.; Morerio, P.; Bue, A.D. Lifting Monocular Events to 3D Human Poses. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Shao, Z.; Zhou, W.; Wang, W.; Yang, J.; Li, Y. A Temporal Densely Connected Recurrent Network for Event-based Human Pose Estimation. Pattern Recognit. 2024, 147, 110048. [Google Scholar] [CrossRef]

- Zhang, Z.; Chai, K.; Yu, H.; Majaj, R.; Walsh, F.; Wang, E.; Mahbub, U.; Siegelmann, H.; Kim, D.; Rahman, T. Neuromorphic high-frequency 3D dancing pose estimation in dynamic environment. Neurocomputing 2023, 547, 126388. [Google Scholar] [CrossRef]

- Calabrese, E.; Taverni, G.; Awai Easthope, C.; Skriabine, S.; Corradi, F.; Longinotti, L.; Eng, K.; Delbruck, T. DHP19: Dynamic Vision Sensor 3D Human Pose Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Moeys, D.P.; Corradi, F.; Li, C.; Bamford, S.A.; Longinotti, L.; Voigt, F.F.; Berry, S.; Taverni, G.; Helmchen, F.; Delbruck, T. A Sensitive Dynamic and Active Pixel Vision Sensor for Color or Neural Imaging Applications. IEEE Trans. Biomed. Circuits Syst. 2017, 12, 123–136. [Google Scholar] [CrossRef] [PubMed]

- Choi, C.; Lee, G.J.; Chang, S.; Kim, D.H. Inspiration from Visual Ecology for Advancing Multifunctional Robotic Vision Systems: Bio-inspired Electronic Eyes and Neuromorphic Image Sensors. Adv. Mater. 2024, 36, e2412252. [Google Scholar] [CrossRef] [PubMed]

- Tulyakov, S.; Gehrig, D.; Georgoulis, S.; Erbach, J.; Gehrig, M.; Li, Y.; Scaramuzza, D. Time Lens: Event-based Video Frame Interpolation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar] [CrossRef]

- Tulyakov, S.; Bochicchio, A.; Gehrig, D.; Georgoulis, S.; Li, Y.; Scaramuzza, D. Time Lens++: Event-based Frame Interpolation with Parametric Nonlinear Flow and Multi-Scale Fusion. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar] [CrossRef]

- Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine Vision Systems in Precision Agriculture for Crop Farming. J. Imaging 2019, 5, 89. [Google Scholar] [CrossRef]

- Bialik, K.; Kowalczyk, M.; Blachut, K.; Kryjak, T. Fast-moving object counting with an event camera. arXiv 2022, arXiv:2212.08384. [Google Scholar] [CrossRef]

- Dold, P.M.; Nadkarni, P.; Boley, M.; Schorb, V.; Wu, L.; Steinberg, F.; Burggräf, P.; Mikut, R. Event-based vision in laser welding: An approach for process monitoring. J. Laser Appl. 2025, 37, 012040. [Google Scholar] [CrossRef]

- Baldini, S.; Bernardini, R.; Fusiello, A.; Gardonio, P.; Rinaldo, R. Measuring Vibrations with Event Cameras. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, 48, 9–16. [Google Scholar] [CrossRef]

- Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. Events-to-Video: Bringing Modern Computer Vision to Event Cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. High Speed and High Dynamic Range Video with an Event Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1964–1980.

- Bacon, J.G., Jr. Satellite Tracking with Neuromorphic Cameras for Space Domain Awareness. Master’s Thesis, Department of Aeronautics and Astronautics, Cambridge, MA, USA, 2021.

- Żołnowski, M.; Reszelewski, R.; Moeys, D.P.; Delbrück, T.; Kamiński, K. Observational evaluation of event cameras performance in optical space surveillance. In Proceedings of the NEO and Debris Detection Conference, Darmstadt, Germany, 22–24 January 2019.

- Jolley, A.; Cohen, G.; Lambert, A. Use of neuromorphic sensors for satellite material characterisation. In Proceedings of the Imaging and Applied Optics Congress, Munich, Germany, 24–27 June 2019.

- Jawaid, M.; Elms, E.; Latif, Y.; Chin, T.J. Towards Bridging the Space Domain Gap for Satellite Pose Estimation using Event Sensing. arXiv 2022, arXiv:2209.11945.

- Mahlknecht, F.; Gehrig, D.; Nash, J.; Rockenbauer, F.M.; Morrell, B.; Delaune, J.; Scaramuzza, D. Exploring Event Camera-Based Odometry for Planetary Robots. IEEE Robot. Autom. Lett. 2022, 7, 8651–8658.

- McHarg, M.G.; Balthazor, R.L.; McReynolds, B.J.; Howe, D.H.; Maloney, C.J.; O’Keefe, D.; Bam, R.; Wilson, G.; Karki, P.; Marcireau, A.; et al. Falcon Neuro: An event-based sensor on the International Space Station. Opt. Eng. 2022, 61, 085105.

- Hinz, G.; Chen, G.; Aafaque, M.; Röhrbein, F.; Conradt, J.; Bing, Z.; Qu, Z.; Stechele, W.; Knoll, A. Online Multi-object Tracking-by-Clustering for Intelligent Transportation System with Neuromorphic Vision Sensor. In KI 2017: Advances in Artificial Intelligence; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 142–154.

- Ganan, F.; Sanchez-Diaz, J.; Tapia, R.; de Dios, J.M.; Ollero, A. Efficient Event-based Intrusion Monitoring using Probabilistic Distributions. In Proceedings of the 2022 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Sevilla, Spain, 8–10 November 2022; IEEE: Piscataway, NJ, USA, 2022.

- de Dios, J.M.; Eguiluz, A.G.; Rodriguez-Gomez, J.; Tapia, R.; Ollero, A. Towards UAS Surveillance Using Event Cameras. In Proceedings of the 2020 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Abu Dhabi, United Arab Emirates, 4–6 November 2020; IEEE: Piscataway, NJ, USA, 2020.

- Rodriguez-Gomez, J.; Eguiluz, A.G.; Martinez-de Dios, J.; Ollero, A. Asynchronous Event-Based Clustering and Tracking for Intrusion Monitoring in UAS. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 8518–8524.

- Pérez-Cutiño, M.; Eguíluz, A.G.; de Dios, J.M.; Ollero, A. Event-Based Human Intrusion Detection in UAS Using Deep Learning. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 91–100.

- Falanga, D.; Kim, S.; Scaramuzza, D. How Fast Is Too Fast? The Role of Perception Latency in High-Speed Sense and Avoid. IEEE Robot. Autom. Lett. 2019, 4, 1884–1891.

- Loquercio, A.; Kaufmann, E.; Ranftl, R.; Müller, M.; Koltun, V.; Scaramuzza, D. Learning high-speed flight in the wild. Sci. Robot. 2021, 6, eabg5810.

- Wzorek, P.; Kryjak, T. Traffic Sign Detection with Event Cameras and DCNN. In Proceedings of the 2022 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 21–22 September 2022; IEEE: Piscataway, NJ, USA, 2022.

- Yang, C.; Liu, P.; Chen, G.; Liu, Z.; Wu, Y.; Knoll, A. Event-based Driver Distraction Detection and Action Recognition. In Proceedings of the 2022 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Bedford, UK, 20–22 September 2022; IEEE: Piscataway, NJ, USA, 2022.

- Hu, Y.; Liu, S.C.; Delbruck, T. v2e: From Video Frames to Realistic DVS Events. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021.

- Shair, Z.E.; Rawashdeh, S. High-Temporal-Resolution Event-Based Vehicle Detection and Tracking. Opt. Eng. 2022, 62, 031209.

- Belbachir, A.; Schraml, S.; Brandle, N. Real-Time Classification of Pedestrians and Cyclists for Intelligent Counting of Non-Motorized Traffic. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010.

- Kirkland, P.; Di Caterina, G.; Soraghan, J.; Matich, G. Neuromorphic technologies for defence and security. In Proceedings of the Emerging Imaging and Sensing Technologies for Security and Defence V; and Advanced Manufacturing Technologies for Micro-and Nanosystems in Security and Defence III; SPIE: Bellingham, WA, USA, 2020; Volume 11540, pp. 113–130.

- Stewart, T.; Drouin, M.A.; Gagne, G.; Godin, G. Drone Virtual Fence Using a Neuromorphic Camera. In Proceedings of the International Conference on Neuromorphic Systems; ACM: New York, NY, USA, 2021; pp. 1–9.

- Boehrer, N.; Kuijf, H.J.; Dijk, J. Laser Warning and Pointed Optics Detection Using an Event Camera. In Proceedings of the Electro-Optical and Infrared Systems: Technology and Applications XXI, Edinburgh, UK, 16–19 September 2024; SPIE: Bellingham, WA, USA, 2024; Volume 13200, pp. 314–326.

- Eisele, C.; Seiffer, D.; Sucher, E.; Sjöqvist, L.; Henriksson, M.; Lavigne, C.; Domel, R.; Déliot, P.; Dijk, J.; Kuijf, H.; et al. DEBELA: Investigations on Potential Detect-before-Launch Technologies. In Proceedings of the Electro-Optical and Infrared Systems: Technology and Applications XXI, Edinburgh, UK, 16–19 September 2024; SPIE: Bellingham, WA, USA, 2024; Volume 13200, pp. 300–313.

- Kim, E.; Yarnall, J.; Shah, P.; Kenyon, G.T. A neuromorphic sparse coding defense to adversarial images. In Proceedings of the International Conference on Neuromorphic Systems; ACM: New York, NY, USA, 2019; pp. 1–8.

- Cha, J.H.; Abbott, A.L.; Szu, H.H.; Willey, J.; Landa, J.; Krapels, K.A. Neuromorphic implementation of a software-defined camera that can see through fire and smoke in real-time. In Proceedings of the Independent Component Analyses, Compressive Sampling, Wavelets, Neural Net, Biosystems, and Nanoengineering XII; SPIE: Bellingham, WA, USA, 2014; Volume 9118, pp. 25–34.

- Moustafa, M.; Lemley, J.; Corcoran, P. Contactless Cardiac Pulse Monitoring Using Event Cameras. arXiv 2025, arXiv:2505.09529.

- Kim, J.; Kim, Y.M.; Wu, Y.; Zahreddine, R.; Welge, W.A.; Krishnan, G.; Ma, S.; Wang, J. Privacy-preserving visual localization with event cameras. arXiv 2022, arXiv:2212.03177.

- Habara, T.; Sato, T.; Awano, H. Zero-Aware Regularization for Energy-Efficient Inference on Akida Neuromorphic Processor. In Proceedings of the 2025 IEEE International Symposium on Circuits and Systems (ISCAS), London, UK, 25–28 May 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–5.

- Kiselev, I.; Neil, D.; Liu, S.C. Event-driven deep neural network hardware system for sensor fusion. In Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS), Montréal, QC, Canada, 22–25 May 2016; IEEE: Piscataway, NJ, USA, 2016.

- O’Connor, P.; Neil, D.; Liu, S.C.; Delbruck, T.; Pfeiffer, M. Real-time classification and sensor fusion with a spiking deep belief network. Front. Neurosci. 2013, 7, 178.

- Lv, W.; Xu, S.; Zhao, Y.; Wang, G.; Wei, J.; Cui, C.; Du, Y.; Dang, Q.; Liu, Y. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2023, arXiv:2304.08069.

- Sabater, A.; Montesano, L.; Murillo, A.C. Event Transformer. A Sparse-Aware Solution for Efficient Event Data Processing. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 18–24 June 2022.

- Kingdon, G.; Coker, R. The eyes have it: Improving mill availability through visual technology. In Proceedings of the International Semi-Autogenous Grinding and High Pressure Grinding Roll Technology, Vancouver, BC, Canada, 20–23 September 2015; Volume 3, pp. 1–15.

| Reference | Year | Focus Topic | Key Highlights |
|---|---|---|---|
| This survey | 2025 | Comprehensive Overview (Hardware, Algorithms, Applications) | Provides a structured and up-to-date survey of neuromorphic vision, covering the evolution of hardware, key developments in event-based image processing, practical application case studies, and current limitations. Highlights challenges and opportunities for broader adoption across domains. |
| Kudithipudi et al. [6] | 2025 | Neuromorphic Computing at Scale | Proposes a forward-looking framework for scaling neuromorphic systems from lab prototypes to real-world deployments. Offers high-level guidance on architecture, tools, ecosystem needs, and application potential. It is especially relevant for stakeholders aiming at system-level integration. |
| Ghosh and Gallego [7] | 2025 | Event-based Stereo Depth Estimation | Extensive survey of stereo depth estimation with event cameras, encompassing both model-based and deep learning techniques. Compares instantaneous vs. long-term methods, highlights the role of neuromorphic hardware and SNNs for stereo, benchmarks methods and datasets, and proposes future directions for real-world deployment of event-based depth estimation. |
| Adra et al. [8] | 2025 | Human-Centered Event-Based Applications | Provides the first comprehensive survey unifying event-based vision applications for body and face analysis. Discusses challenges, opportunities, and less explored topics such as event compression and simulation frameworks. |
| AliAkbarpour et al. [9] | 2024 | Emerging Trends and Applications | Reviews a wide spectrum of emerging event-based processing techniques and niche applications (e.g., space situational awareness (SSA), rolling-shutter (RS) correction, super-resolution (SR), robotics). Focuses on technical breadth and dataset/tool support, with limited emphasis on foundational hardware or unified algorithmic frameworks. |
| Shariff et al. [10] | 2024 | Automotive Sensing (In-Cabin and Out-of-Cabin) | Presents a comprehensive review of event cameras for automotive sensing, covering both in-cabin (driver/passenger monitoring) and out-of-cabin (object detection, SLAM, obstacle avoidance) sensing. Details hardware architecture, data processing, datasets, noise filtering, sensor fusion, and transformer-based approaches. |
| Cazzato and Bono [11] | 2024 | Application-Driven Event-Based Vision | Reviews event-based neuromorphic vision sensors from an application perspective. Categorizes computer vision problems by field and discusses each application area’s key challenges, major achievements, and unique characteristics. |
| Chakravarthi et al. [12] | 2024 | Event Camera Innovations | Traces the evolution of event cameras, comparing them with traditional sensors. Reviews technological milestones, major camera models, datasets, and simulators while consolidating research resources for further innovation. |
| Tenzin et al. [13] | 2024 | Event-Based VSLAM and Neuromorphic Computing | Surveys the integration of event cameras and neuromorphic processors into VSLAM systems. Discusses feature extraction, motion estimation, and map reconstruction while highlighting energy efficiency, robustness, and real-time performance improvements. |
| Becattini et al. [14] | 2024 | Face Analysis | Examines novel applications such as expression and emotion recognition, face detection, identity verification, and gaze tracking for AR/VR, areas not previously covered by event-camera surveys. The paper emphasizes the significant gap in standardized datasets and benchmarks, stressing the importance of using real data over simulations. |
| Zheng et al. [15] | 2023 | Deep Learning Approaches | Extensively surveys deep learning approaches for event-based vision, focusing on advancements in data representation and processing techniques. It systematically categorizes and evaluates methods across multiple computer vision topics. The paper discusses the unique advantages of event cameras, particularly under challenging conditions, and suggests future directions for integrating deep learning to exploit these benefits further. |
| Huang et al. [16] | 2023 | Self-Localization and Mapping | Discusses various event-based vSLAM methods, including feature-based, direct, motion-compensation, and deep learning approaches. Evaluates these methods on different benchmarks, highlighting their relative properties and advantages. It then analyzes in depth the challenges inherent to the sensors and to the SLAM task, and outlines future research directions. |
| Schuman et al. [17] | 2022 | Neuromorphic Algorithmic Directions | Highlights algorithmic opportunities for neuromorphic computing, covering SNN training methods, non-ML models, and application potential. Emphasis is placed on open challenges and co-design needs rather than exhaustive architectural or vision-specific coverage. |
| Shi et al. [18] | 2022 | Motion and Depth Estimation for Indoor Positioning | Reviews notable techniques for ego-motion estimation, tracking, and depth estimation utilizing event-based sensing. It then suggests further research directions for real-world indoor positioning applications. |
| Furmonas et al. [19] | 2022 | Depth Estimation Techniques | Discusses various depth estimation approaches, including monocular and stereo methods, detailing the strengths and challenges of each. It advocates integrating these sensors with neuromorphic computing platforms to enhance depth perception accuracy and processing efficiency. |
| Cho et al. [20] | 2022 | Material Innovations and Computing Paradigms | Highlights the evolution from traditional designs to innovative in-sensor and near-sensor computing that optimizes processing speed and energy efficiency. It addresses the challenge of complex manufacturing processes, suggesting directions for future research and application in flexible electronics. |
| Liao et al. [21] | 2021 | Technologies and Biological Principles | Reviews advancements in neuromorphic vision sensors, contrasting silicon-based CMOS technologies such as DVS, DAVIS, and ATIS with emerging technologies in analogical devices. |
| Gallego et al. [3] | 2020 | Sensor Working Principle and Vision Algorithms | Thoroughly reviews the advancements in event-based vision, emphasizing its unique properties. The survey spans various vision tasks, including feature detection, optical flow, and object recognition, and discusses innovative processing techniques. It also outlines significant challenges and future opportunities in this rapidly evolving field. |
| Steffen et al. [22] | 2019 | Stereo Vision and Sensor Principles | Performs a comparative analysis of event-based sensors, focusing on technologies such as DVS, DAVIS, and ATIS. It reviews the biological principles underlying depth perception and explores the approaches to stereoscopy using event-based sensors. |