Motor Imagery Acquisition Paradigms: In the Search to Improve Classification Accuracy

Abstract

1. Introduction

2. Materials and Methods

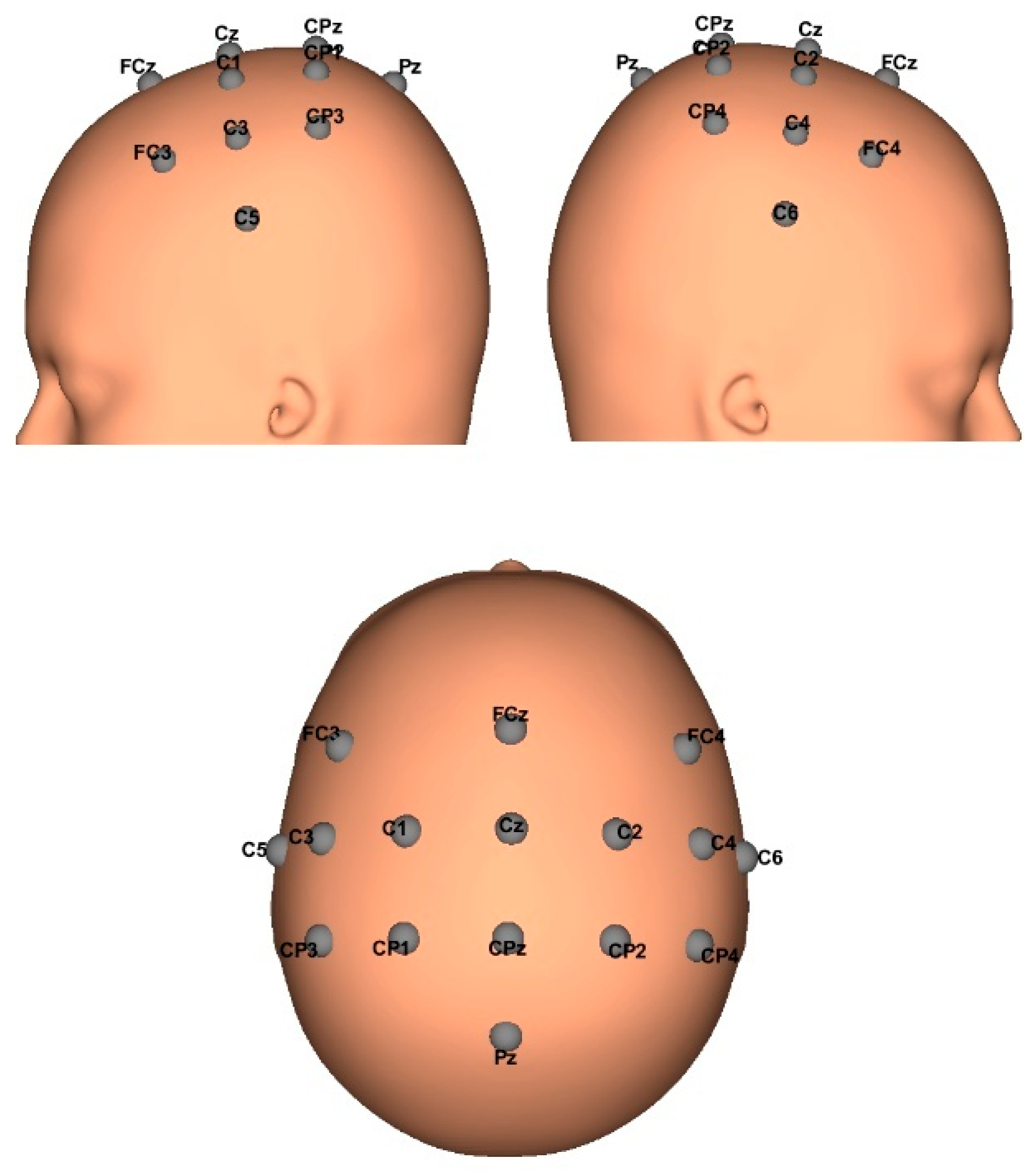

2.1. EEG Biosignals Acquisition

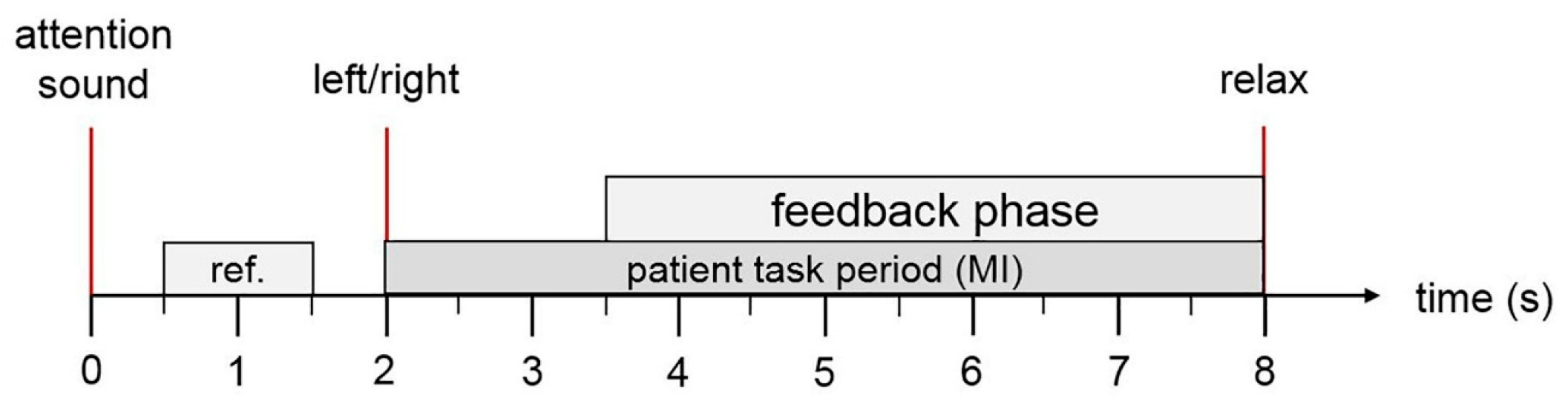

Experimental Paradigms

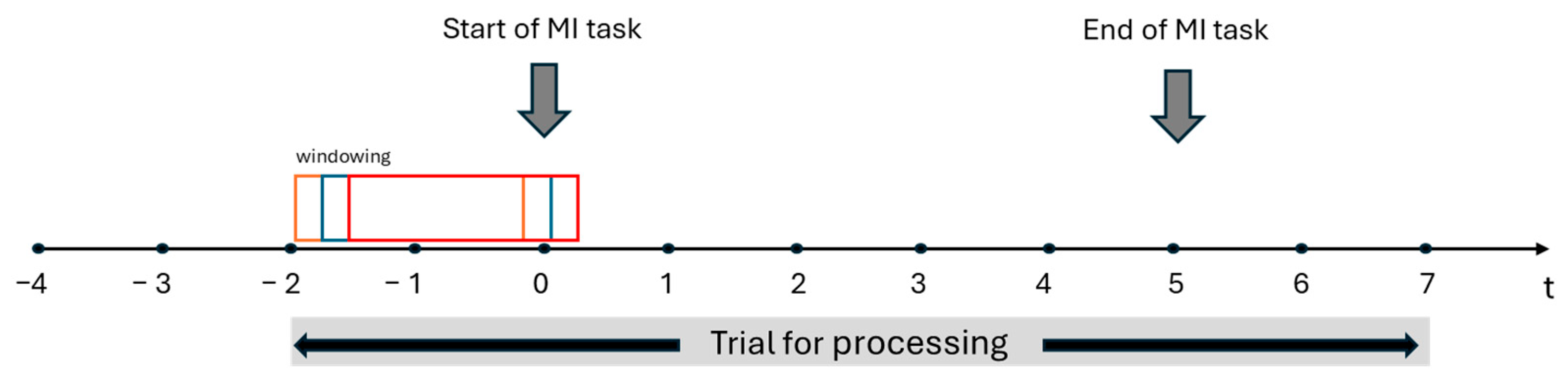

2.2. EEG Biosignals Processing

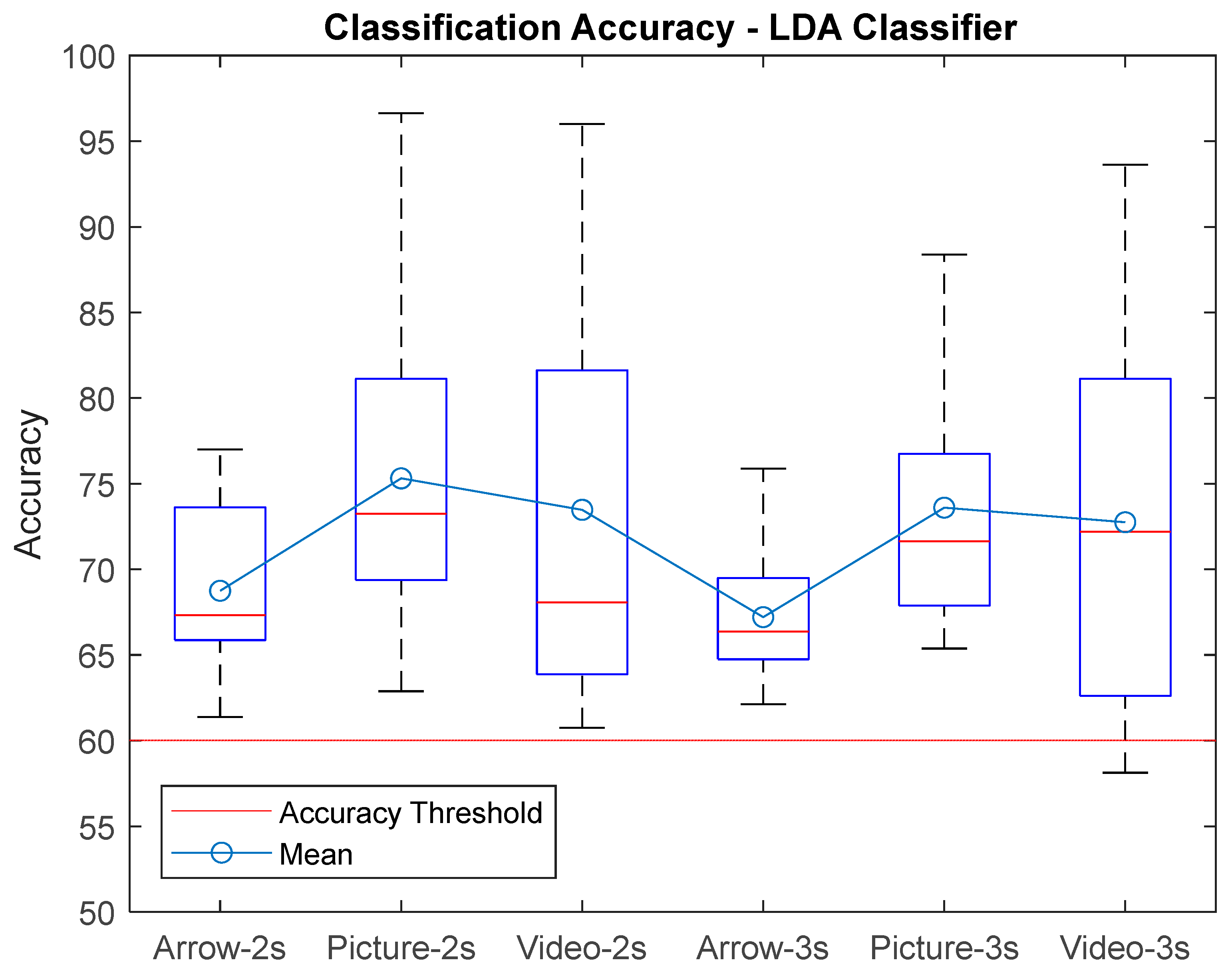

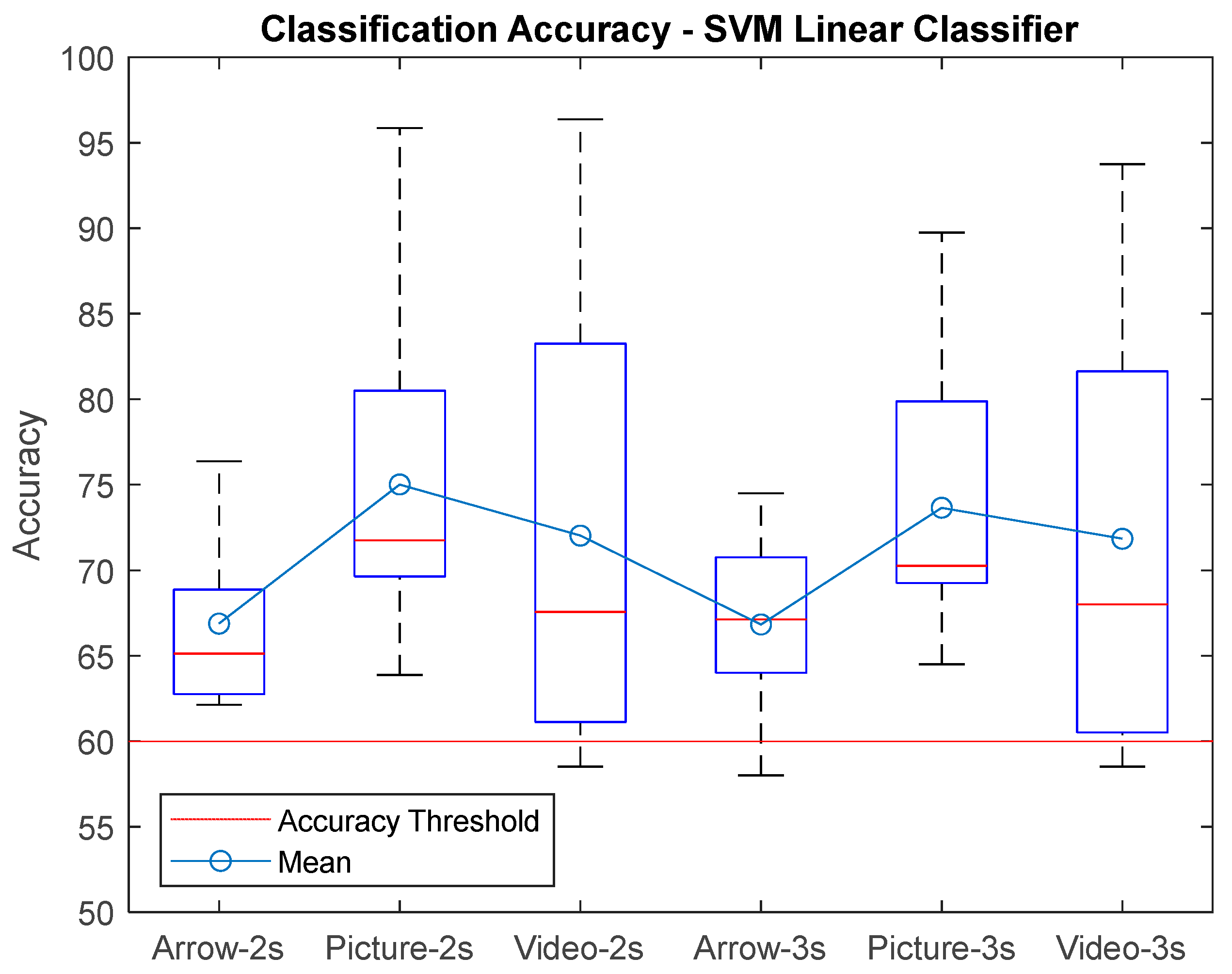

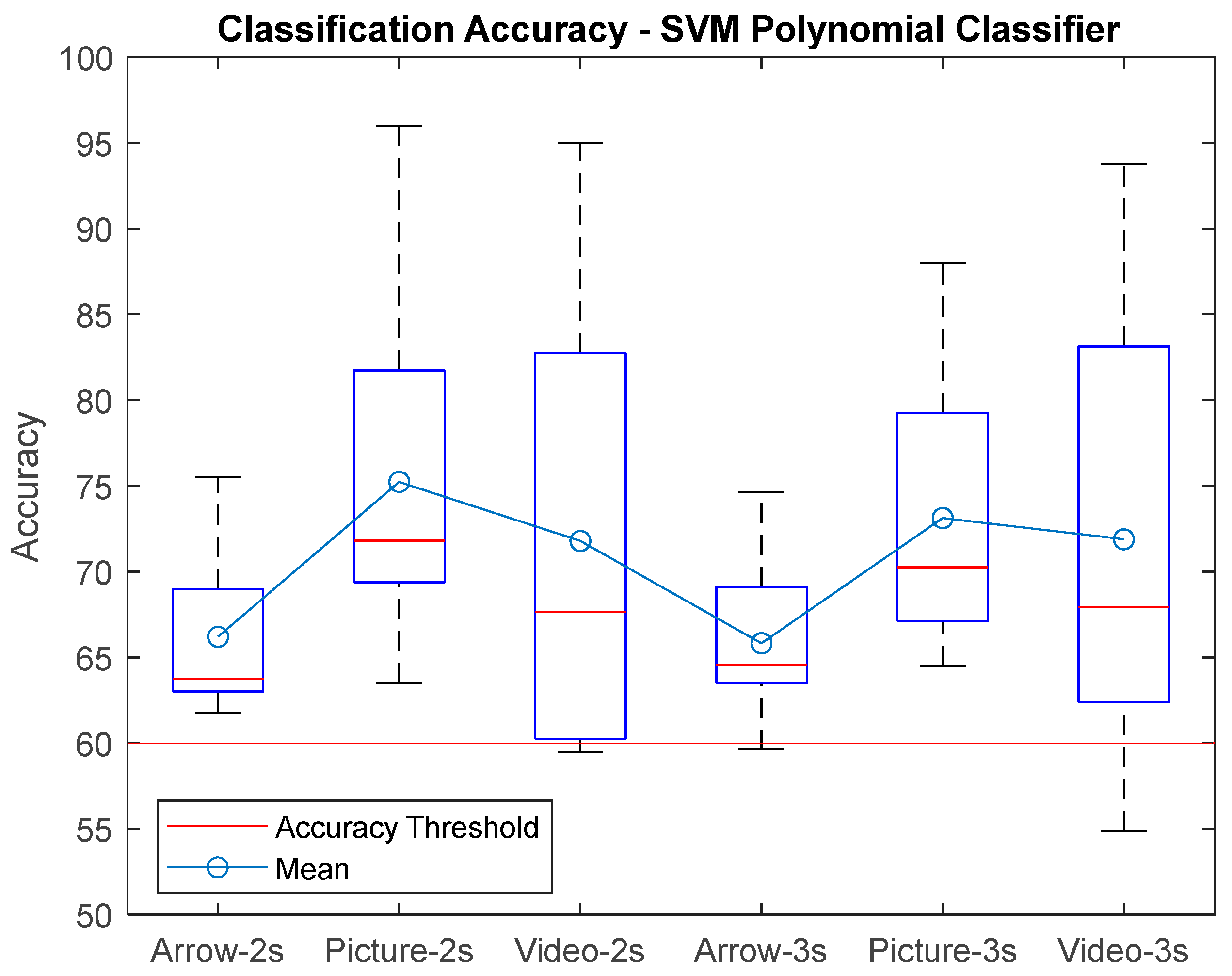

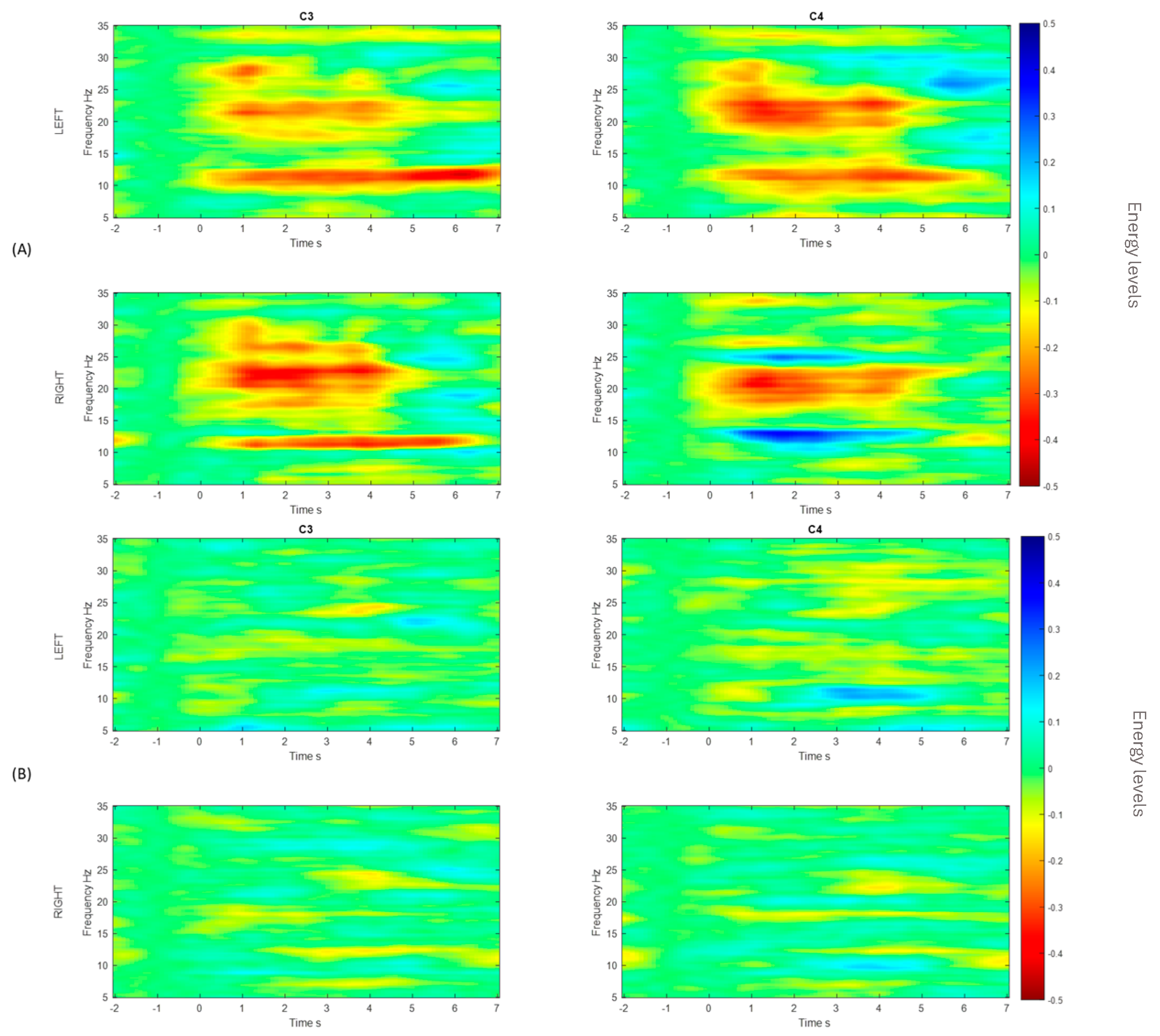

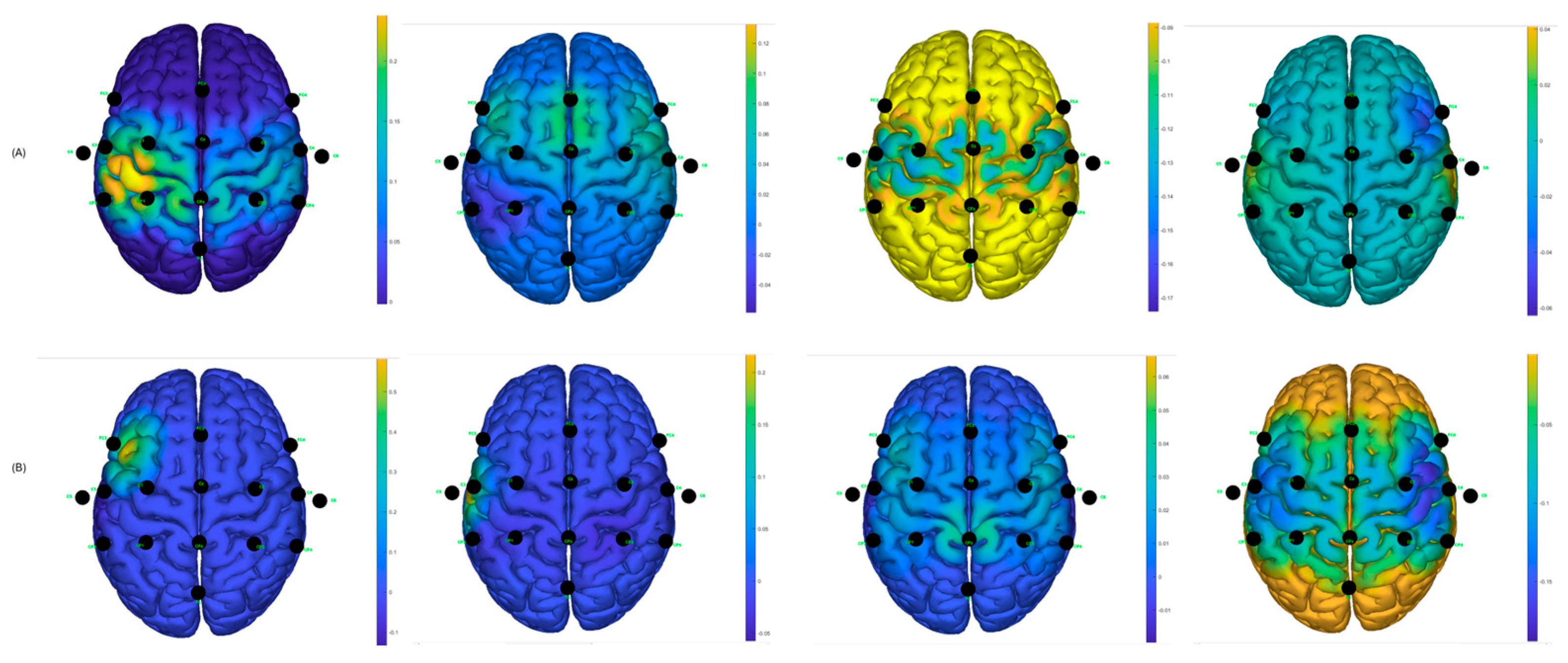

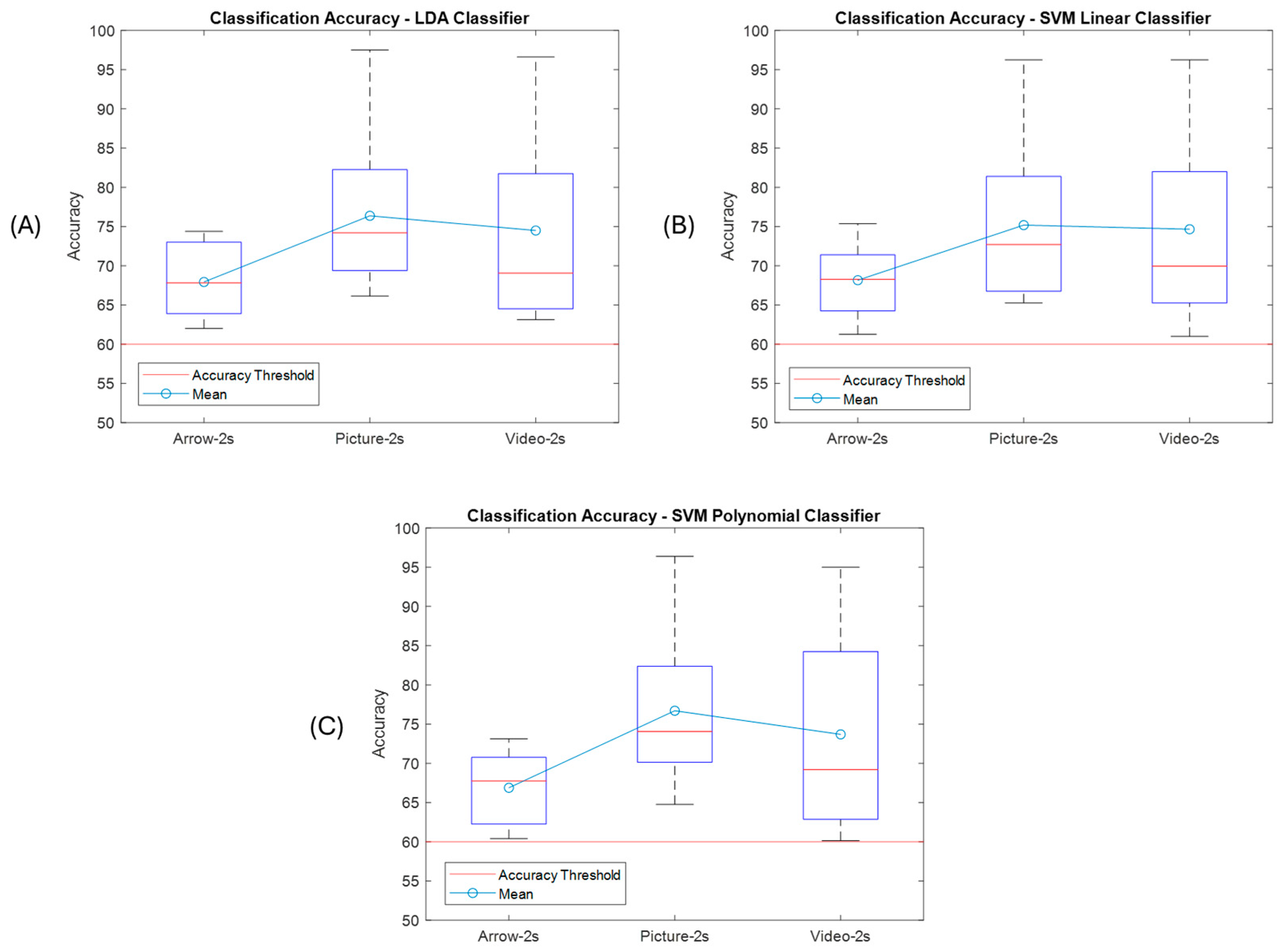

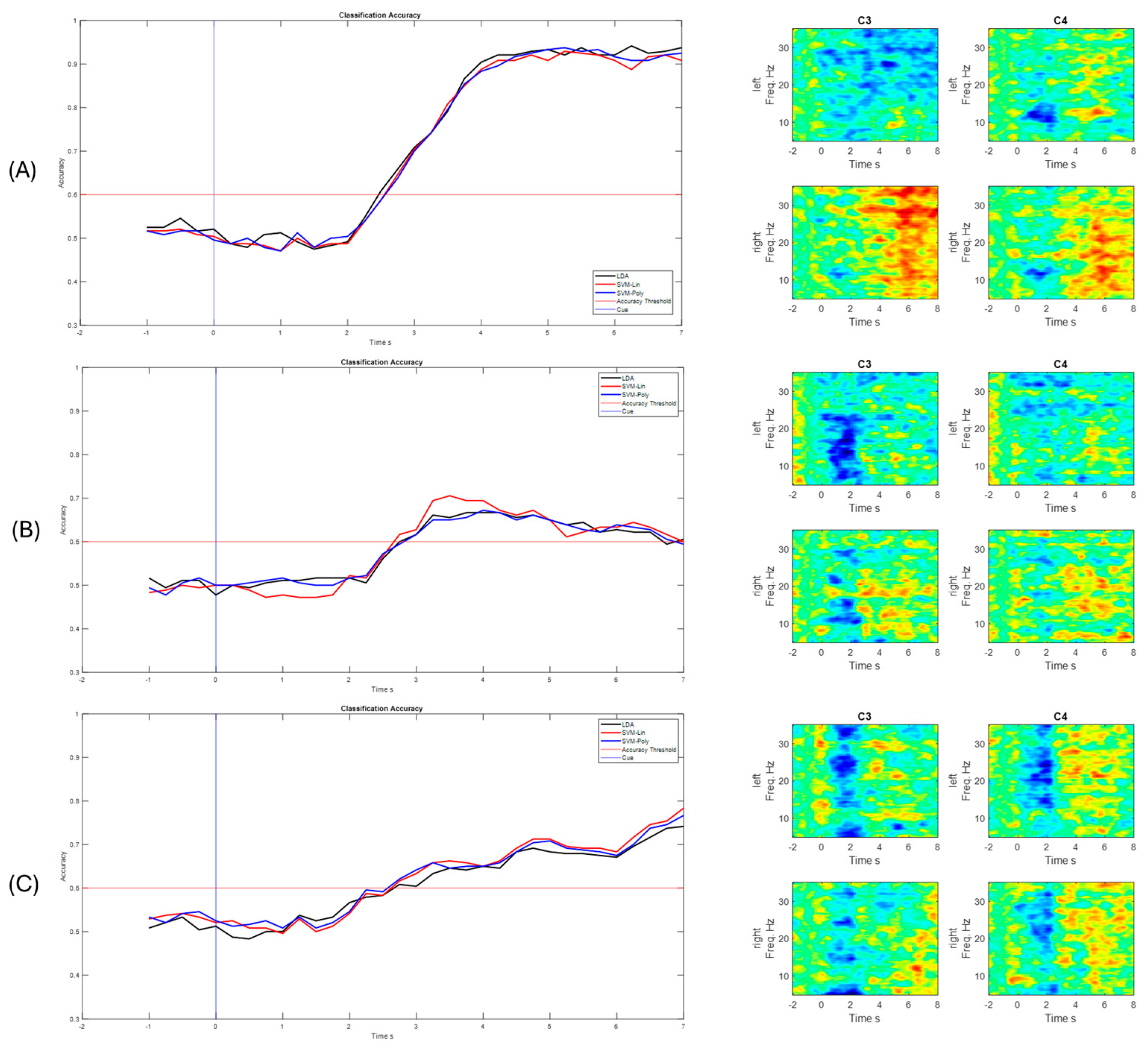

3. Results

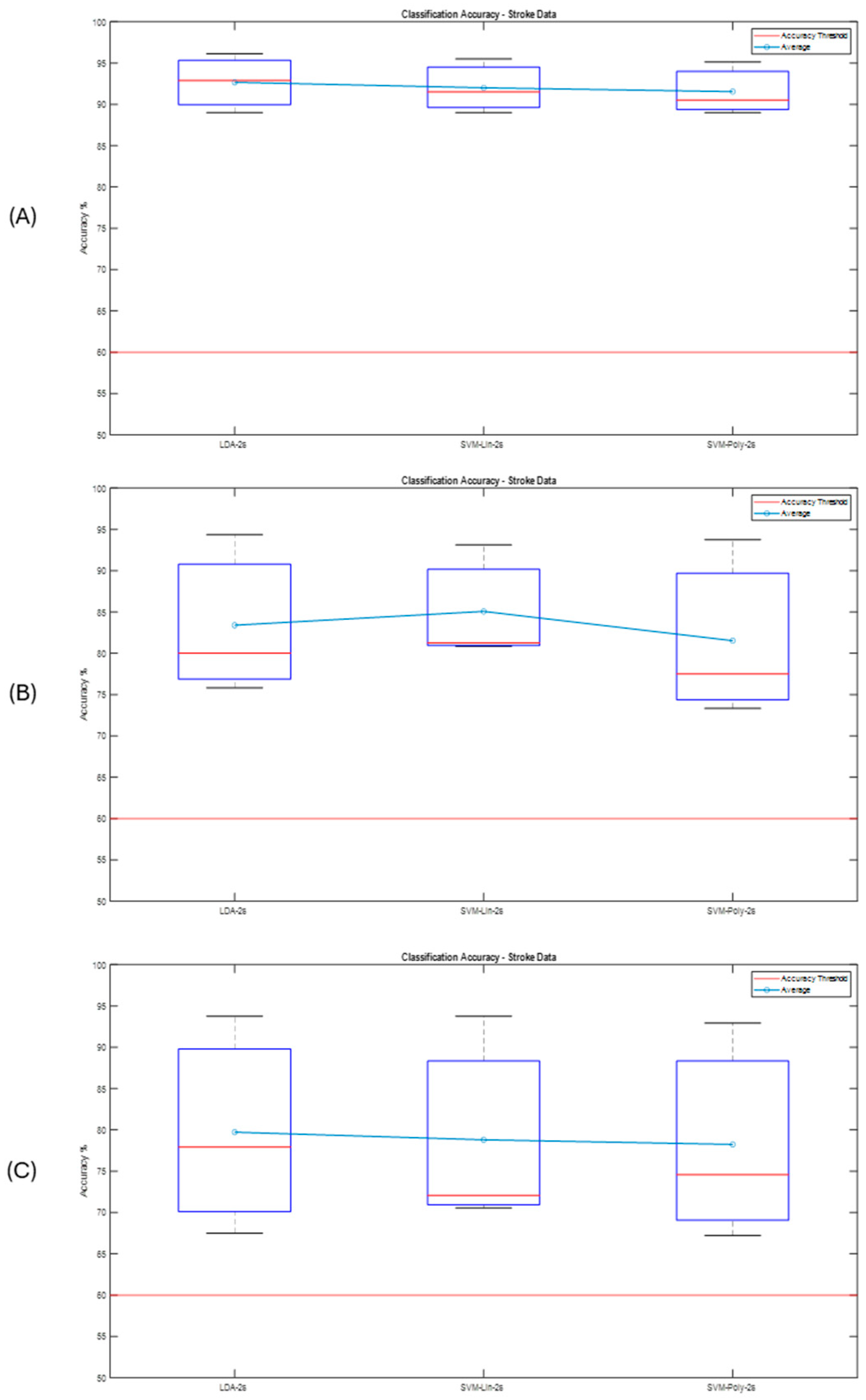

Post-Stroke Data

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Participants | Condition |
|---|---|
| S1 | Hand: Left side affected |
| S2 | Hand: Left side affected |
| S3 | Hand: Right side affected |

Motor imagery accuracy

| Paradigm | Arrow | Arrow | Arrow | Arrow | Arrow | Arrow | Picture | Picture | Picture | Picture | Picture | Picture | Video | Video | Video | Video | Video | Video |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Window | T = 2 s | T = 2 s | T = 2 s | T = 3 s | T = 3 s | T = 3 s | T = 2 s | T = 2 s | T = 2 s | T = 3 s | T = 3 s | T = 3 s | T = 2 s | T = 2 s | T = 2 s | T = 3 s | T = 3 s | T = 3 s |
| Classifier | LDA | SVML | SVMP | LDA | SVML | SVMP | LDA | SVML | SVMP | LDA | SVML | SVMP | LDA | SVML | SVMP | LDA | SVML | SVMP |
| Subject | | | | | | | | | | | | | | | | | | |
| S1 | 73.62 | 75.25 | 72.25 | 75.87 | 74.5 | 74.62 | 81.12 | 80.5 | 81.75 | 76.75 | 79.87 | 79.25 | 81.63 | 83.25 | 82.75 | 81.13 | 81.63 | 83.13 |
| S2 | 75.87 | 62.75 | 64.25 | 63.12 | 65.12 | 63.5 | 62.88 | 63.87 | 66.87 | 65.38 | 64.5 | 64.5 | 65.5 | 63.5 | 59.5 | 62.62 | 63.5 | 62.25 |
| S3 | 65.87 | 65.63 | 63 | 62.12 | 61.5 | 63.5 | 69.37 | 69.63 | 69.38 | 70.87 | 69.87 | 71.63 | 70.63 | 71.88 | 70.5 | 72.25 | 72.5 | 69.5 |
| S4 | 66.75 | 64.62 | 63 | 64.75 | 64 | 63.75 | 67.87 | 66.75 | 63.5 | 65.63 | 66 | 66.37 | 63.88 | 62.62 | 60.25 | 59.63 | 59.88 | 54.87 |
| S5 | 67.87 | 67.88 | 67.88 | 68 | 69.12 | 69.12 | 73.13 | 74.25 | 71.88 | 67.88 | 70.63 | 67.13 | 60.75 | 58.5 | 60.13 | 58.13 | 58.5 | 62.38 |
| S6 | 64.62 | 63 | 61.75 | 65.37 | 64.12 | 60.5 | 73.75 | 71.37 | 71.37 | 71.63 | 71.88 | 70.38 | 63.88 | 60.5 | 66.63 | 63.88 | 60.5 | 62.62 |
| S7 | 68.37 | 68.87 | 69 | 67.37 | 69.12 | 67.12 | 83.13 | 84.38 | 86.5 | 86.12 | 84.88 | 84.38 | 90.5 | 90.88 | 92.5 | 87 | 87.63 | 87.38 |
| S8 | 61.38 | 62.13 | 62.13 | 65.37 | 58 | 59.62 | 71.88 | 72.13 | 71.75 | 71.62 | 69.25 | 70.12 | 60.75 | 61.12 | 62 | 72.12 | 61.12 | 66.38 |
| S9 | 66.13 | 62.25 | 63.25 | 70.63 | 72 | 65.38 | 73.37 | 71.37 | 73.38 | 71.75 | 69.87 | 69.5 | 81.25 | 71.62 | 68.63 | 77.13 | 79.38 | 76.63 |
| S10 | 77 | 76.37 | 75.5 | 69.5 | 70.75 | 71.12 | 96.62 | 95.87 | 96.38 | 88.38 | 89.75 | 88 | 96 | 96.38 | 95 | 93.62 | 93.75 | 93.75 |
| AVG ± STD | 68.75 ± 5.09 | 66.88 ± 5.24 | 66.20 ± 4.75 | 67.21 ± 4.05 | 66.83 ± 5.12 | 65.83 ± 4.71 | 75.31 ± 9.52 | 75.01 ± 9.48 | 75.28 ± 9.91 | 73.60 ± 7.94 | 73.65 ± 8.34 | 73.13 ± 7.98 | 73.48 ± 12.93 | 72.02 ± 13.62 | 71.79 ± 13.51 | 72.75 ± 12 | 71.84 ± 12.98 | 71.89 ± 12.74 |
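
The AVG ± STD row can be checked directly from the per-subject values. The sketch below does this for one column (Arrow, T = 2 s, LDA), with the decimal commas written as points. The variable names are illustrative, and the use of the sample standard deviation (n − 1 denominator) is an assumption: it reproduces the tabulated 68,75 ± 5,09 to within about 0.01, whereas the population form gives a noticeably smaller spread.

```python
from statistics import mean, stdev

# Arrow paradigm, T = 2 s, LDA column for S1..S10, transcribed from the table.
arrow_lda_2s = [73.62, 75.87, 65.87, 66.75, 67.87, 64.62, 68.37, 61.38, 66.13, 77.0]

avg = mean(arrow_lda_2s)
std = stdev(arrow_lda_2s)  # sample standard deviation (assumed convention)

print(f"{avg:.2f} ± {std:.2f}")
```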

Motor imagery accuracy

| Paradigm | Arrow | Arrow | Arrow | Picture | Picture | Picture | Video | Video | Video |
|---|---|---|---|---|---|---|---|---|---|
| Window | T = 2 s | T = 2 s | T = 2 s | T = 2 s | T = 2 s | T = 2 s | T = 2 s | T = 2 s | T = 2 s |
| Classifier | LDA | SVML | SVMP | LDA | SVML | SVMP | LDA | SVML | SVMP |
| Subject | | | | | | | | | |
| S1 | 73 | 75.37 | 70.75 | 82.25 | 81.38 | 82.38 | 81.75 | 82 | 84.25 |
| S2 | 62.87 | 63.25 | 62.38 | 68.5 | 66.75 | 70.13 | 68 | 69.38 | 60.13 |
| S3 | 67 | 66.75 | 66.13 | 69.38 | 65.25 | 64.75 | 70.12 | 70.5 | 68.38 |
| S4 | 63.88 | 64.25 | 60.38 | 66.13 | 66.62 | 66.5 | 64.25 | 65.25 | 61.63 |
| S5 | 73 | 71.38 | 73.13 | 74.88 | 74.88 | 74.37 | 63.13 | 61 | 63.38 |
| S6 | 65 | 65.63 | 61.88 | 73.5 | 71 | 71.25 | 64.5 | 66 | 70 |
| S7 | 68.63 | 69.75 | 70 | 84 | 84.25 | 89.13 | 91.38 | 92.13 | 93.5 |
| S8 | 62 | 61.25 | 62.25 | 72.63 | 71.63 | 73.75 | 64.87 | 65.25 | 62.87 |
| S9 | 69.5 | 69.75 | 69.38 | 74.88 | 73.75 | 77.5 | 80.25 | 78.75 | 77.75 |
| S10 | 74.37 | 74.13 | 72.5 | 97.5 | 96.25 | 96.38 | 96.62 | 96.25 | 95 |
| AVG ± STD | 67.93 ± 4.50 | 68.15 ± 4.69 | 66.88 ± 4.84 | 76.37 ± 9.31 | 75.18 ± 9.65 | 76.61 ± 10.04 | 74.48 ± 12.24 | 74.65 ± 12.13 | 73.69 ± 13.20 |

| Classifier | Paradigms | p_ANOVA (T = 2 s) | Wilcoxon (T = 2 s) | p_Bonferroni-corrected (T = 2 s) | p_ANOVA (T = 3 s) | Wilcoxon (T = 3 s) | p_Bonferroni-corrected (T = 3 s) |
|---|---|---|---|---|---|---|---|
| LDA | Arrow vs. Picture | 0.0412 | 0.0488 | 0.1235 | 0.0201 | 0.0039 | 0.0602 |
| LDA | Arrow vs. Video | 0.2110 | 0.3750 | 0.6331 | 0.1315 | 0.1602 | 0.3946 |
| SVM Linear | Arrow vs. Picture | 0.0016 | 0.0020 | 0.0048 | 0.0130 | 0.0195 | 0.0390 |
| SVM Linear | Arrow vs. Video | 0.1398 | 0.3223 | 0.4195 | 0.1669 | 0.2324 | 0.5008 |
| SVM Polynomial | Arrow vs. Picture | 0.0013 | 0.0020 | 0.0040 | 0.0059 | 0.0059 | 0.0177 |
| SVM Polynomial | Arrow vs. Video | 0.1161 | 0.1602 | 0.3483 | 0.0971 | 0.1309 | 0.2912 |
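
The Wilcoxon column can be reproduced from the per-subject accuracies. The sketch below is a minimal pure-Python version of a two-sided exact Wilcoxon signed-rank test, applied to the SVM Linear, Arrow vs. Picture comparison at T = 2 s; the accuracies are transcribed from the first accuracy table with decimal commas written as points. The function names are illustrative, and the use of the exact (enumerated) form of the test is an assumption, since the source does not state which variant was used. The Bonferroni helper uses m = 3 comparisons, which is also inferred rather than stated: the tabulated corrected values are consistent with three times p_ANOVA (for example 0,0016 × 3 = 0,0048).

```python
from itertools import product

def wilcoxon_exact(x, y):
    """Two-sided exact Wilcoxon signed-rank test for paired samples."""
    # Work in hundredths so that ties are detected with integer arithmetic.
    diffs = [round(b * 100) - round(a * 100) for a, b in zip(x, y)]
    diffs = [d for d in diffs if d != 0]  # zero differences are dropped
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign mid-ranks to tied absolute differences
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    # Enumerate all 2**n sign patterns to get the exact null distribution.
    hits = sum(
        1
        for signs in product((0, 1), repeat=n)
        if sum(r for r, s in zip(ranks, signs) if s) <= w
    )
    return w, min(1.0, 2 * hits / 2 ** n)

def bonferroni(p, m):
    """Bonferroni correction for m comparisons, capped at 1."""
    return min(1.0, p * m)

# SVM Linear accuracies at T = 2 s for S1..S10.
arrow_svml_2s = [75.25, 62.75, 65.63, 64.62, 67.88, 63.0, 68.87, 62.13, 62.25, 76.37]
picture_svml_2s = [80.5, 63.87, 69.63, 66.75, 74.25, 71.37, 84.38, 72.13, 71.37, 95.87]

w, p = wilcoxon_exact(arrow_svml_2s, picture_svml_2s)
print(f"W = {w}, p = {p:.4f}")
print(f"Bonferroni (m = 3, assumed) of p_ANOVA 0.0016: {bonferroni(0.0016, 3):.4f}")
```

All ten subjects score higher with the Picture paradigm in this comparison, so the smaller rank sum is zero and the exact p-value is 2/1024, which rounds to the tabulated 0,0020.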

Window: T = 2 s

| Classifier | Paradigms | p_ANOVA | Wilcoxon | p_Bonferroni-corrected |
|---|---|---|---|---|
| LDA | Arrow vs. Picture | 0.0032 | 0.0020 | 0.0096 |
| LDA | Arrow vs. Video | 0.0699 | 0.0600 | 0.2096 |
| SVM Linear | Arrow vs. Picture | 0.0104 | 0.0039 | 0.0312 |
| SVM Linear | Arrow vs. Video | 0.0660 | 0.0400 | 0.1980 |
| SVM Polynomial | Arrow vs. Picture | 0.0026 | 0.0059 | 0.0077 |
| SVM Polynomial | Arrow vs. Video | 0.0737 | 0.0800 | 0.2212 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Reyes, D.; Sieghartsleitner, S.; Loaiza, H.; Guger, C. Motor Imagery Acquisition Paradigms: In the Search to Improve Classification Accuracy. Sensors 2025, 25, 6204. https://doi.org/10.3390/s25196204