Sensor Input Type and Location Influence Outdoor Running Terrain Classification via Deep Learning Approaches

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

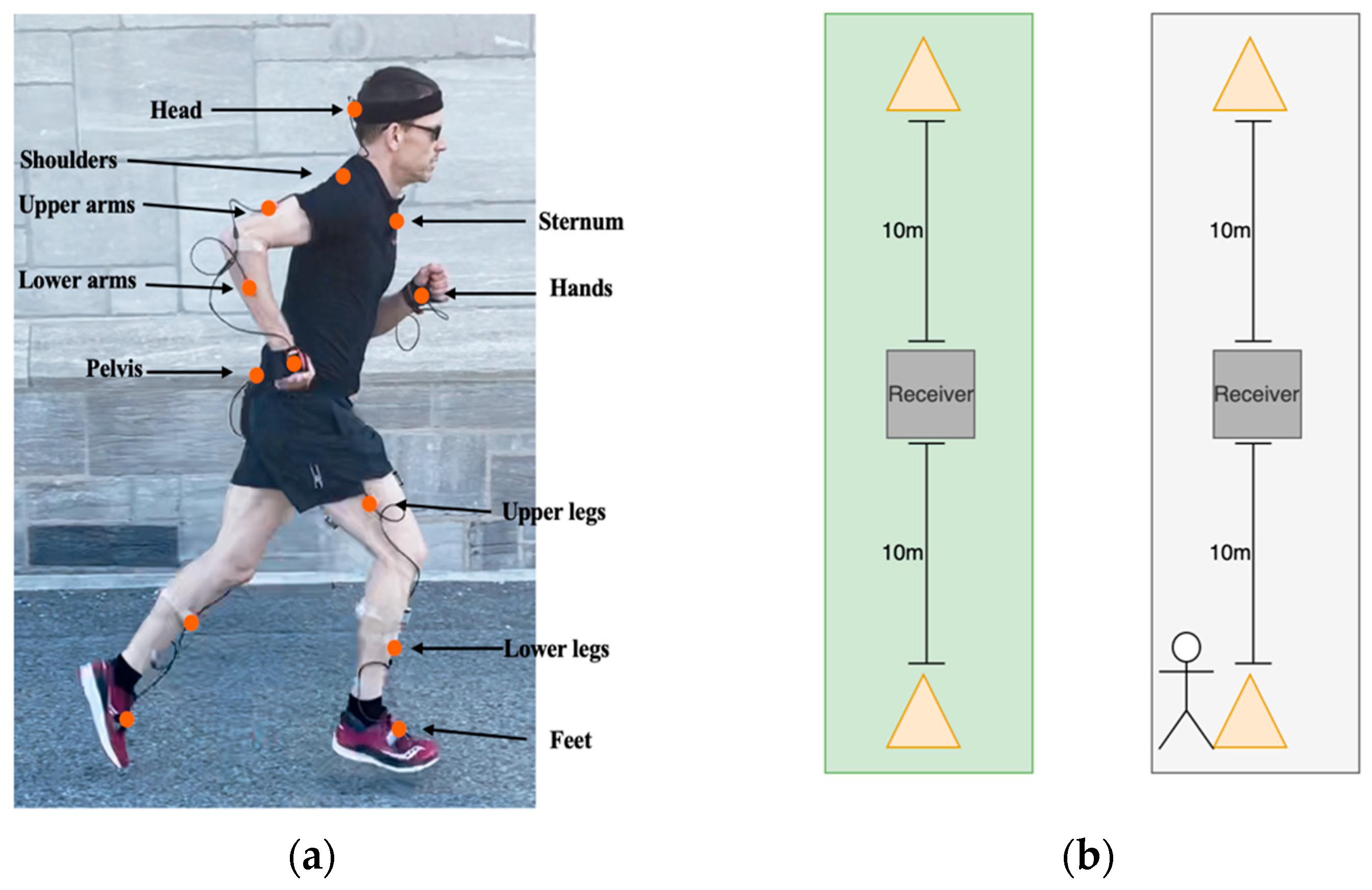

2.2. Data Collection

2.3. Initial Data Processing and Separation

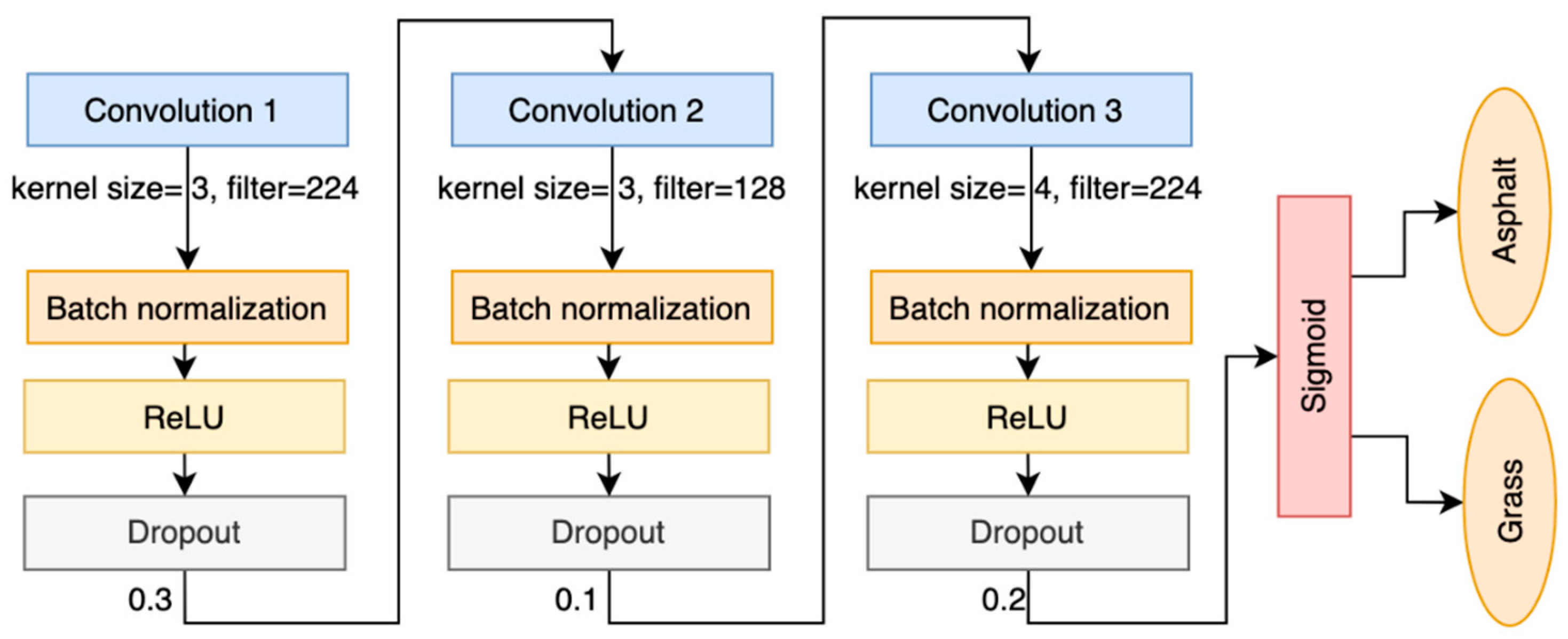

2.4. CNN Model Architecture

2.5. Train, Validation, and Test Split

2.6. Model Performance Analysis

3. Results

3.1. Effect of Preprocessing Steps and Sensor Combination

3.2. Effect of Network Optimization

4. Discussion

4.1. Summary

4.2. Preprocessing

4.3. Sensor Location and Count

4.4. Splitting Approaches

4.5. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | convolutional neural networks |
| IMU | inertial measurement unit |
| AUC | area under the curve |
| ReLUs | rectified linear units |


| Number of Triaxial Sensors | Combinations |
|---|---|
| 12 | Full body (head, left and right shoulder, left and right upper arm, left and right hands, pelvis, left and right lower leg, and left and right feet) |
| 5 | Lower body (pelvis, left and right lower leg, and left and right feet) |
| 1 | Pelvis |
| 2 | Feet (left and right feet) |
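
As a rough illustration of how the sensor combinations in the table above translate into model input width, the sketch below counts input channels per combination. It assumes each triaxial sensor contributes three channels (x, y, z) of a single signal type; the segment labels, the `SENSOR_SETS` dictionary, and the `channel_names` helper are hypothetical names chosen for this example, not identifiers from the study.

```python
# Sensor subsets taken from the table above; segment labels are illustrative.
SENSOR_SETS = {
    "full_body": [
        "head", "left_shoulder", "right_shoulder",
        "left_upper_arm", "right_upper_arm",
        "left_hand", "right_hand", "pelvis",
        "left_lower_leg", "right_lower_leg",
        "left_foot", "right_foot",
    ],
    "lower_body": [
        "pelvis", "left_lower_leg", "right_lower_leg",
        "left_foot", "right_foot",
    ],
    "pelvis": ["pelvis"],
    "feet": ["left_foot", "right_foot"],
}

# Assumption: one signal type at a time, three axes per triaxial sensor.
AXES = ("x", "y", "z")


def channel_names(sensor_set, signal="acc"):
    """Return one channel label per (segment, axis) pair for a sensor set."""
    return [
        f"{segment}_{signal}_{axis}"
        for segment in SENSOR_SETS[sensor_set]
        for axis in AXES
    ]


def channel_count(sensor_set):
    """Number of input channels the classifier would receive."""
    return len(channel_names(sensor_set))


if __name__ == "__main__":
    for name in SENSOR_SETS:
        print(name, len(SENSOR_SETS[name]), "sensors,", channel_count(name), "channels")
```

Under these assumptions, the full-body set yields 36 channels and the pelvis-only set yields 3, which gives a sense of how much the input shrinks when fewer sensors are used.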

| Hyperparameters | Options |
|---|---|
| Epochs | Using callback for early stop (patience 50) |
| Batch size | 50, 100, 200, and 300 |
| Optimization function | Adam, RMSprop, and SGD |
| Learning rate | From 0.0001 to 0.01 |

| Model architecture | Options |
|---|---|
| Number of convolutional layers | From 1 to 4 |
| Filter number | From 32 to 256 (step 32) |
| Kernel size | From 3 to 5 (step 1) |
| Dropouts | From 0 to 0.5 (step 0.1) |
| Regularization | L1, L2, and L1_L2 |
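
The options listed above can be written down as a search space and sampled. The following is a minimal sketch, not the authors' tuning procedure: the search strategy is not specified here, so random sampling is an assumption, as is drawing the learning rate log-uniformly over its range. The names `SEARCH_SPACE` and `sample_config` are hypothetical.

```python
import math
import random

# Discrete options transcribed from the hyperparameter and architecture tables.
SEARCH_SPACE = {
    "batch_size": [50, 100, 200, 300],
    "optimizer": ["adam", "rmsprop", "sgd"],
    "n_conv_layers": [1, 2, 3, 4],
    "filters": list(range(32, 257, 32)),               # 32, 64, ..., 256
    "kernel_size": [3, 4, 5],
    "dropout": [round(0.1 * i, 1) for i in range(6)],  # 0.0, 0.1, ..., 0.5
    "regularizer": ["l1", "l2", "l1_l2"],
}

LEARNING_RATE_RANGE = (1e-4, 1e-2)  # "From 0.0001 to 0.01"
EARLY_STOPPING_PATIENCE = 50        # epochs are governed by early stopping


def sample_config(rng):
    """Draw one candidate configuration from the search space.

    Assumption: uniform choice over discrete options and log-uniform
    sampling of the learning rate; the source does not state the strategy.
    """
    config = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
    low, high = LEARNING_RATE_RANGE
    config["learning_rate"] = 10 ** rng.uniform(math.log10(low), math.log10(high))
    config["early_stopping_patience"] = EARLY_STOPPING_PATIENCE
    return config


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(sample_config(rng))
```

Each sampled dictionary could then be passed to whatever model-building function is in use; a grid or Bayesian search over the same space would be an equally plausible reading of the tables.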

| Sensor Signal Type | Surfaces | Precision | Recall | F1-Score | Accuracy (%) |
|---|---|---|---|---|---|
| 3D Acceleration | Grass | 0.97 | 0.95 | 0.95 | 95.71 |
| | Asphalt | 0.97 | 0.92 | 0.94 | |
| 3D Angular velocity | Grass | 0.94 | 0.88 | 0.91 | 91.91 |
| | Asphalt | 0.89 | 0.94 | 0.91 | |

| Sensor Signal Type | Split Protocol | Accuracy (%) |
|---|---|---|
| Acceleration | Leave-n-subject-out | 82.32 |
| | Subject-dependent | 95.71 |
| Angular velocity | Leave-n-subject-out | 57.58 |
| | Subject-dependent | 91.91 |
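
The gap between the two split protocols in the table above comes from whether a test subject's data can also appear in training. The sketch below contrasts the two ideas on window indices labeled by subject. It is a minimal illustration under stated assumptions: the function names, the number of held-out subjects, the test fraction, and the seed are hypothetical and are not taken from the study.

```python
import random


def leave_n_subjects_out(subject_ids, n_test, seed=0):
    """Hold out n whole subjects so no test subject appears in training.

    subject_ids: one subject label per data window.
    Returns (train_indices, test_indices).
    """
    subjects = sorted(set(subject_ids))
    test_subjects = set(random.Random(seed).sample(subjects, n_test))
    train_idx = [i for i, s in enumerate(subject_ids) if s not in test_subjects]
    test_idx = [i for i, s in enumerate(subject_ids) if s in test_subjects]
    return train_idx, test_idx


def subject_dependent(subject_ids, test_fraction=0.2, seed=0):
    """Shuffle windows regardless of subject, so the same subject can
    contribute windows to both the training and the test set."""
    indices = list(range(len(subject_ids)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(indices) * test_fraction))
    return sorted(indices[n_test:]), sorted(indices[:n_test])


if __name__ == "__main__":
    # Hypothetical data: 10 subjects with 20 windows each.
    subject_ids = [s for s in range(10) for _ in range(20)]

    train, test = leave_n_subjects_out(subject_ids, n_test=2)
    print("held-out subjects:", sorted({subject_ids[i] for i in test}))

    train, test = subject_dependent(subject_ids)
    shared = {subject_ids[i] for i in train} & {subject_ids[i] for i in test}
    print("subjects present in both sets:", len(shared))
```

Because the subject-dependent split lets the model see each runner's gait during training, its accuracy is expected to be higher than under leave-n-subject-out, which is consistent with the values reported in the table.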

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thibault, G.; Dixon, P.C.; Pearsall, D.J. Sensor Input Type and Location Influence Outdoor Running Terrain Classification via Deep Learning Approaches. Sensors 2025, 25, 6203. https://doi.org/10.3390/s25196203
