Task Offloading and Resource Allocation Strategy in Non-Terrestrial Networks for Continuous Distributed Task Scenarios

Abstract

1. Introduction

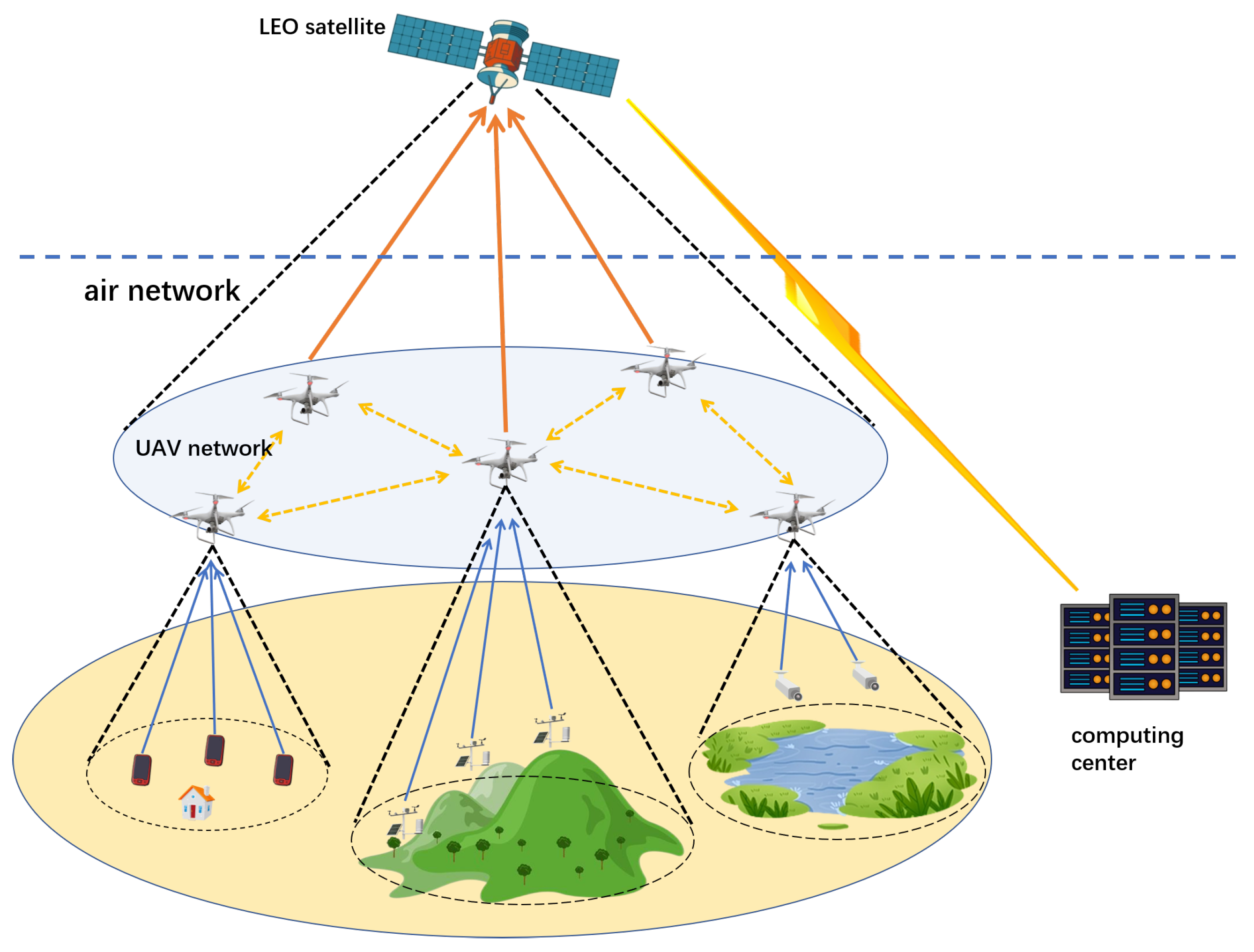

2. System Model

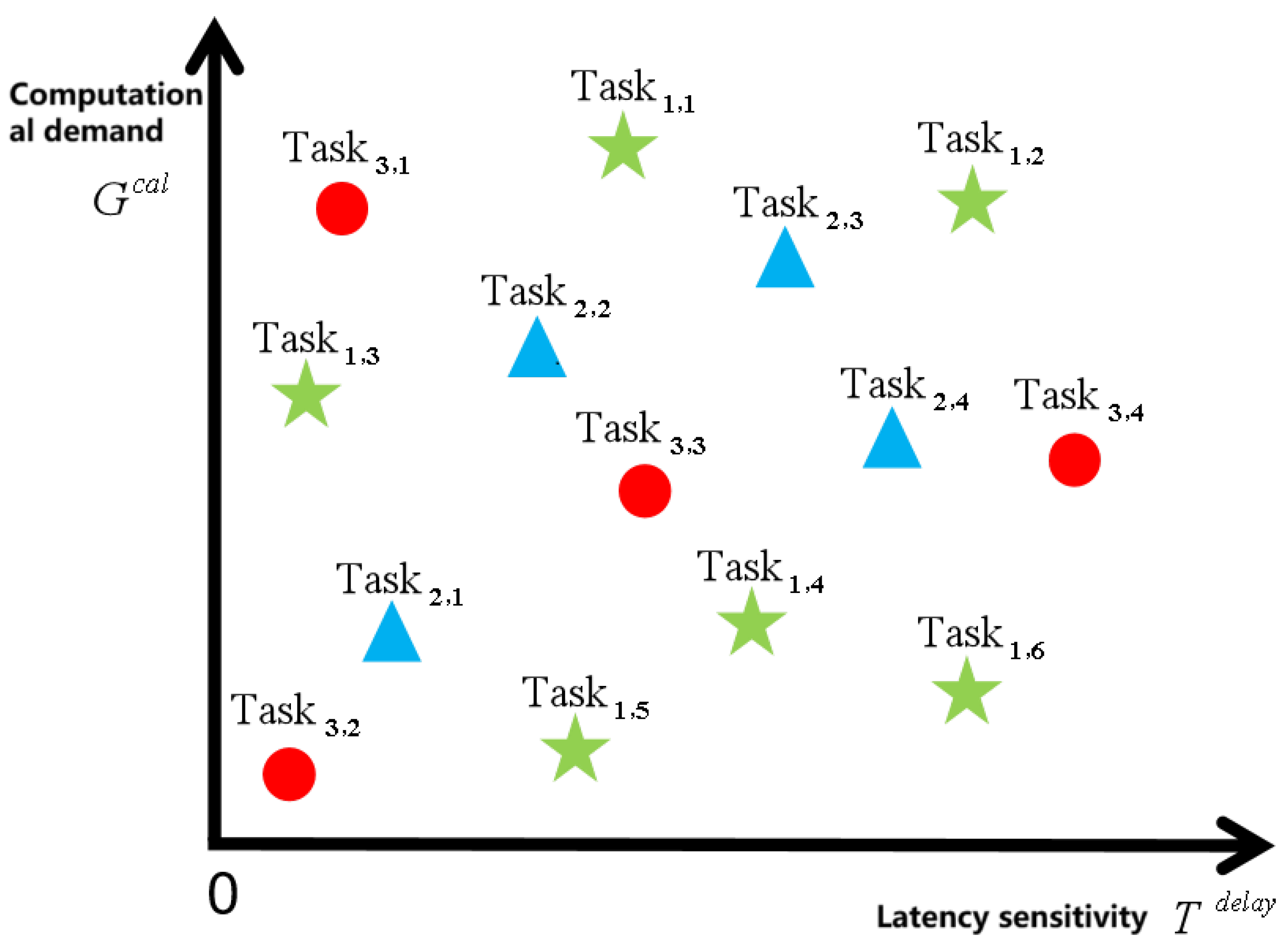

2.1. Continuous Task Model

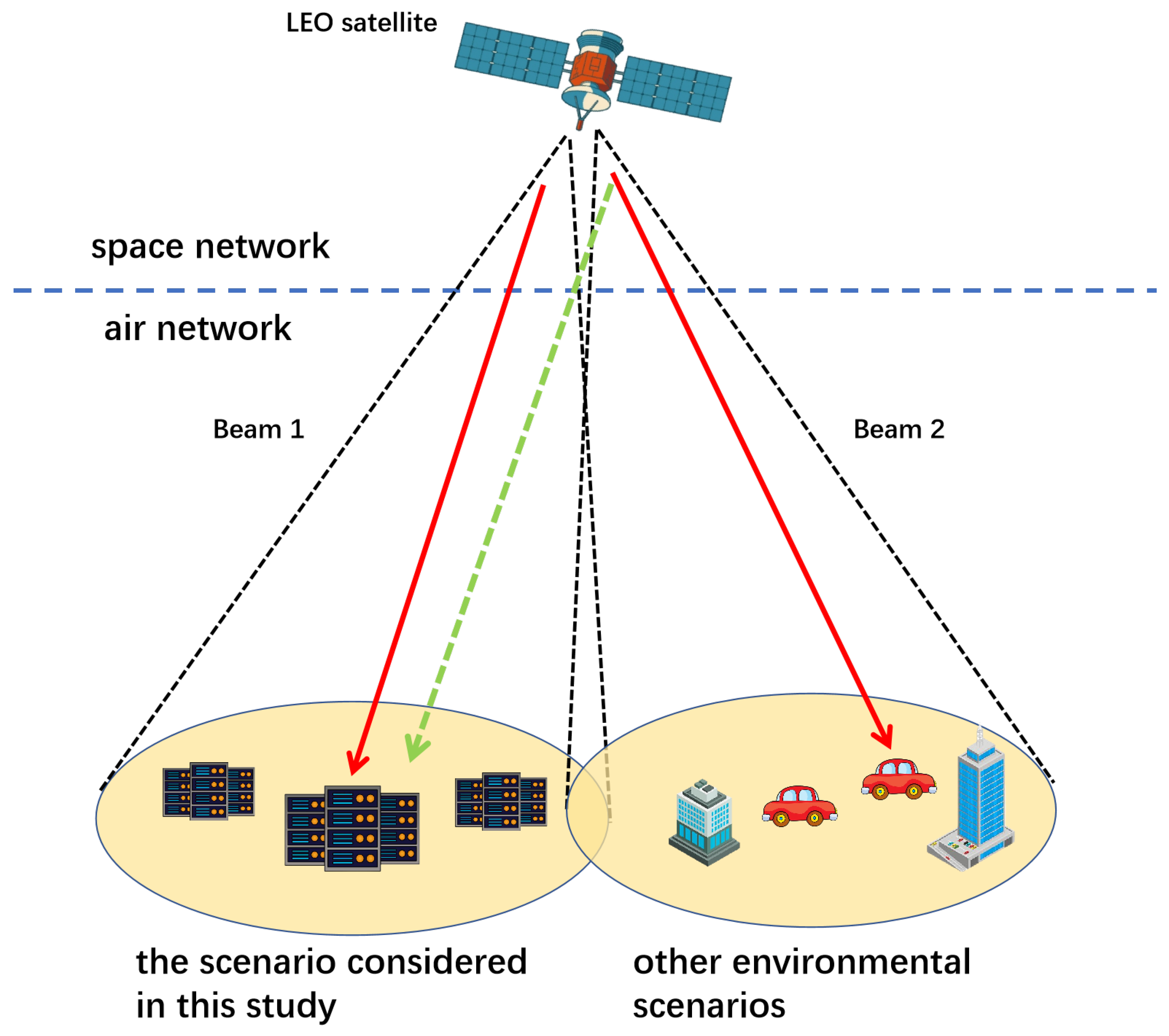

2.2. Communication Model

2.2.1. Ground-to-Air Link

2.2.2. Air-to-Air Link

2.2.3. Air-to-Space Link

2.2.4. Space-to-Ground Link

2.3. Computational Model

2.3.1. UAV Computing Node

2.3.2. Ground Computing Center

2.3.3. LEO Satellite Node

2.4. Load Balancing and Energy Control Model

3. Task Optimization Model for Non-Terrestrial Networks with Resource Coordination

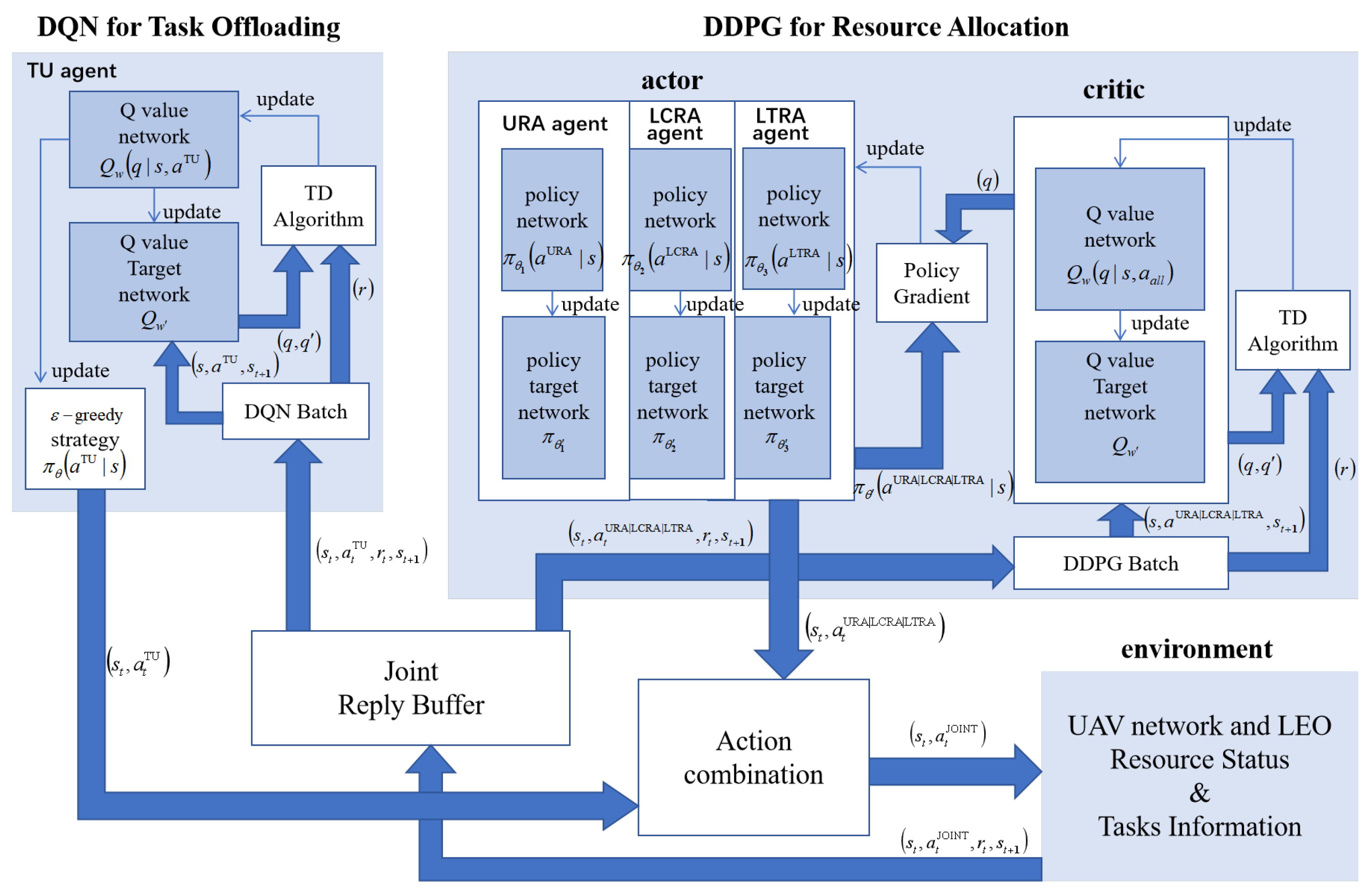

4. Algorithmic Solution

4.1. Multi-Agent Deep Reinforcement Learning

4.2. Design of the Reward Function

4.3. Two-Layer Multi-Type-Agent Deep Reinforcement Learning Algorithm

4.3.1. DQN Network

4.3.2. DDPG Network

4.3.3. Training Method and Execution Flow

| Algorithm 1 Two-layer Multi-agent Deep Reinforcement Learning for Task Offloading and Resource Allocation (TMDRL). |
|---|
| 1: for each episode do |
| 2: Generate tasks; initialize parameters |
| 3: Initialize the state space S and the partial observation spaces O |
| 4: Initialize the exploration factor and the action noise |
| 5: for each task in order of arrival do |
| 6: The TO agent on the UAV selects an offloading action |
| 7: if the offloading action selects the UAV then ▹ Offload to UAV |
| 8: The URA agent selects a computing resource action |
| 9: else if the offloading action selects the LEO satellite then ▹ Offload to LEO for computation |
| 10: The LCRA agent on the LEO satellite selects an action |
| 11: else if the offloading action selects the GCC then ▹ Offload to GCC via LEO |
| 12: The LTRA agent on the LEO satellite selects an action |
| 13: end if |
| 14: Merge the selected actions into a joint action |
| 15: Execute the joint action and observe the next state |
| 16: end for |
| 17: Obtain rewards for all state-action pairs after all tasks are executed |
| 18: Store the experiences in the joint replay buffer |
| 19: Sample a batch of L experiences from the replay buffer |
| 20: for round = 1 to L do ▹ DQN update thread |
| 21: Compute the DQN Q-network loss via the TD error |
| 22: Update the DQN Q-network parameters |
| 23: if round mod step = 0 then |
| 24: Update the target Q-network parameters |
| 25: end if |
| 26: end for |
| 27: for round = 1 to L do ▹ DDPG update thread |
| 28: Compute the DDPG critic network loss via the TD error |
| 29: Update the DDPG critic network parameters |
| 30: if round mod step = 0 then |
| 31: Update the target critic network parameters |
| 32: end if |
| 33: if round mod frequency = 0 then |
| 34: Compute the policy gradient for the actor network |
| 35: Update the DDPG actor network parameters |
| 36: if round mod (frequency·step) = 0 then |
| 37: Update the target actor network parameters |
| 38: end if |
| 39: end if |
| 40: end for |
| 41: end for |
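The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch of the two-layer dispatch and of the update schedule only; it does not implement the DQN or DDPG networks. The `Offload` encoding, the `SECOND_LAYER` mapping, and the functions `dqn_schedule`, `ddpg_schedule`, and `count_updates` are hypothetical names, and the sketch assumes that the round conditions in lines 23, 30, 33, and 36 are divisibility tests on the round counter.

```python
from enum import Enum


class Offload(Enum):
    """Hypothetical encoding of the first-layer TO agent's decision."""
    UAV = 0          # compute on the UAV
    LEO = 1          # compute on the LEO satellite
    GCC_VIA_LEO = 2  # relay via the LEO satellite to the ground computing center


# Second-layer agent that acts after each first-layer decision (lines 7-13).
SECOND_LAYER = {
    Offload.UAV: "URA",
    Offload.LEO: "LCRA",
    Offload.GCC_VIA_LEO: "LTRA",
}


def dqn_schedule(rnd: int, step: int) -> dict:
    """DQN updates that fire in a given round (lines 21-25), assuming mod tests."""
    return {"q_net": True, "target_q_net": rnd % step == 0}


def ddpg_schedule(rnd: int, step: int, frequency: int) -> dict:
    """DDPG updates that fire in a given round (lines 28-38), assuming mod tests."""
    actor = rnd % frequency == 0  # delayed actor update
    return {
        "critic": True,
        "target_critic": rnd % step == 0,
        "actor": actor,
        # The target-actor test is nested inside the actor branch.
        "target_actor": actor and rnd % (frequency * step) == 0,
    }


def count_updates(L: int, step: int, frequency: int) -> dict:
    """Count how often each network is updated over rounds 1..L."""
    totals: dict = {}
    for rnd in range(1, L + 1):
        for sched in (dqn_schedule(rnd, step), ddpg_schedule(rnd, step, frequency)):
            for name, fired in sched.items():
                totals[name] = totals.get(name, 0) + int(fired)
    return totals


if __name__ == "__main__":
    print(SECOND_LAYER[Offload.LEO])
    print(count_updates(L=12, step=2, frequency=3))
```

With L = 12, step = 2, and frequency = 3, the Q-network and critic are updated every round, the target Q-network and target critic every second round, the actor every third round, and the target actor only in rounds 6 and 12. Nesting the target-actor test inside the actor branch therefore delays it more than the target-critic update.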

5. Simulation Results and Analysis

5.1. Parameter Settings and Benchmark Algorithms

5.1.1. Algorithms for Task Offloading and Resource Allocation Using Deterministic Methods

5.1.2. Algorithms with a Single-Layer Architecture and Fewer Types of Agents

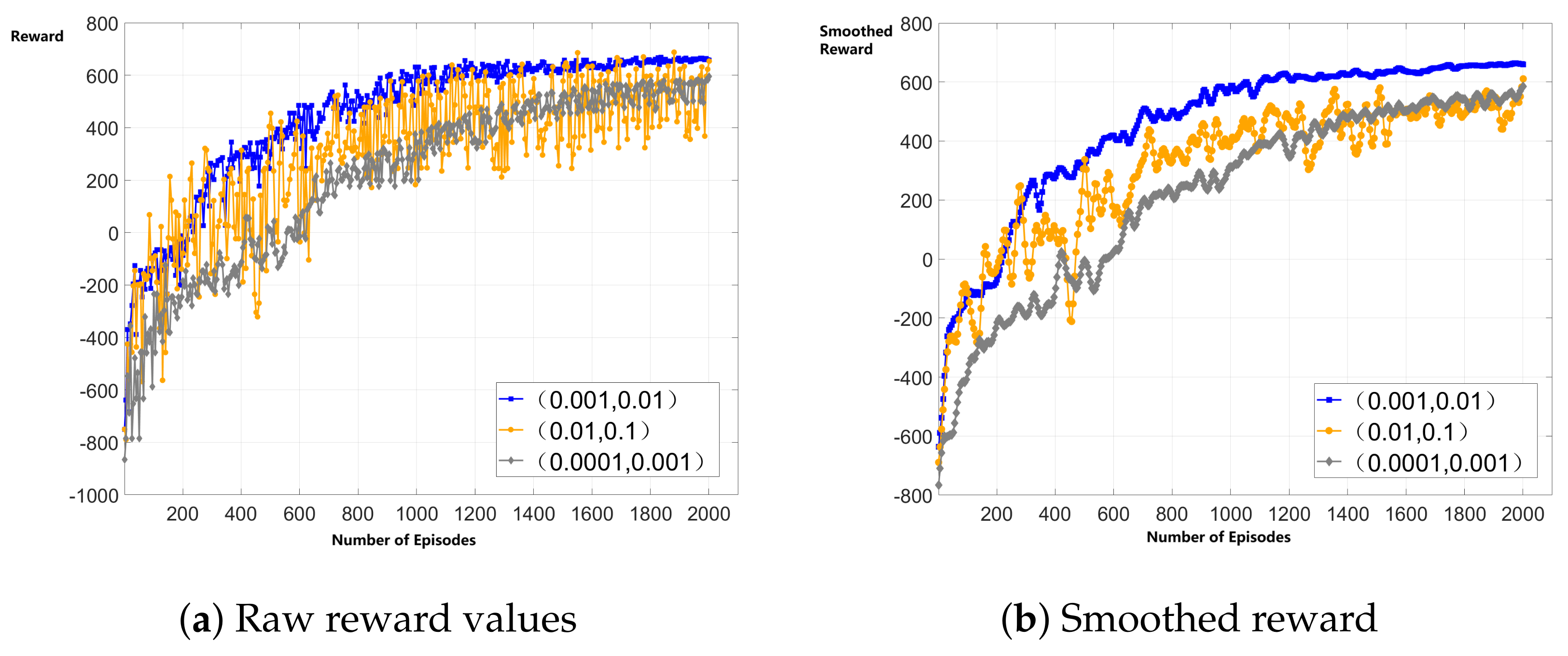

5.2. Feasibility Analysis

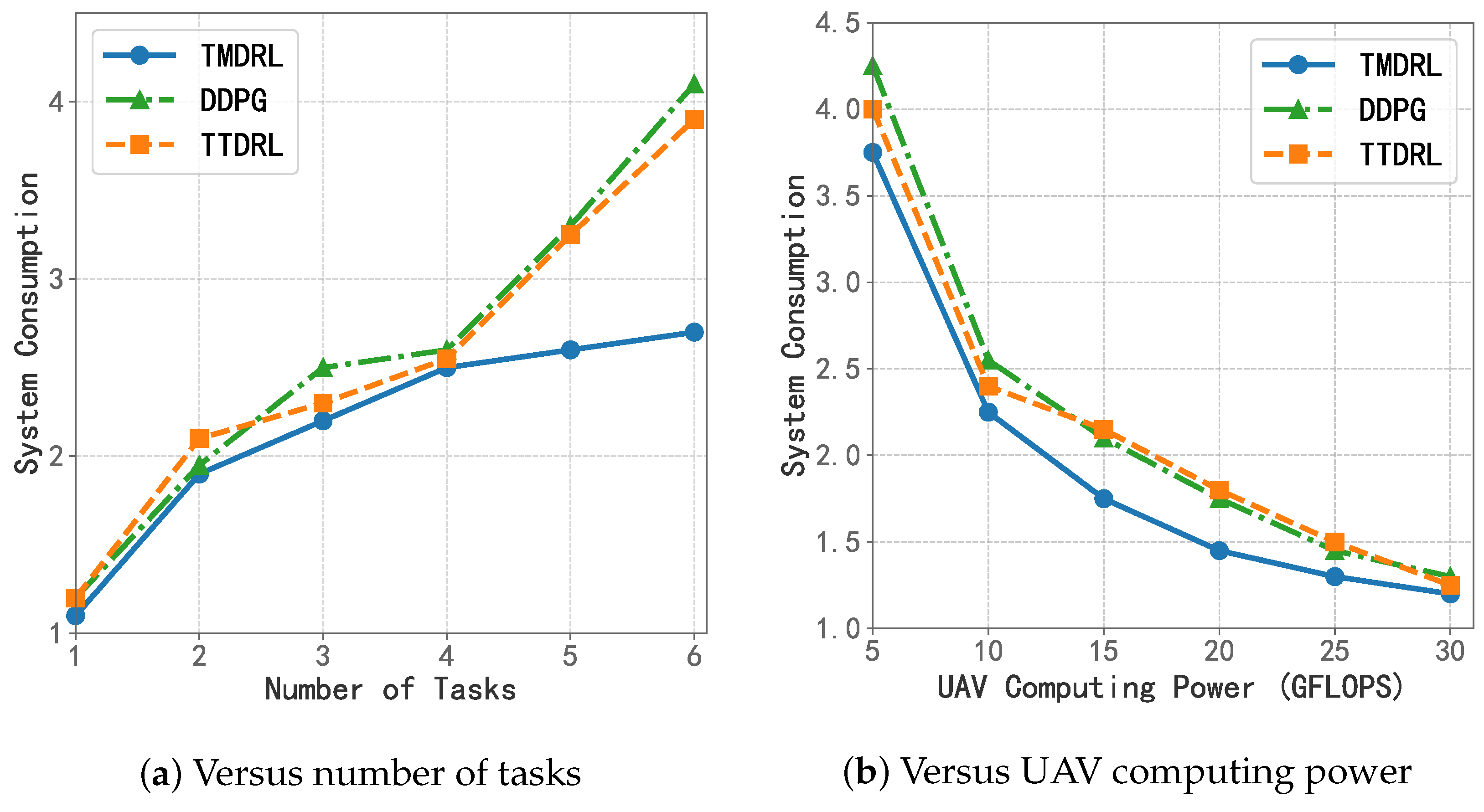

5.3. System Consumption

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Link Type | Channel Condition | Losses | Link Formula |
|---|---|---|---|
| G2A | Probabilistic line-of-sight channel | Path loss, shadowing effect | Equations (2)–(4) |
| A2A | Line-of-sight channel | Path loss | |
| A2S | Line-of-sight channel | Path loss | |
| S2G | Line-of-sight channel | Path loss, multibeam interference | Equations (10)–(12) |
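The link table names the loss components but not their formulas. As a minimal sketch, assuming free-space path loss for the line-of-sight links and the widely used sigmoid LoS-probability model for the G2A link, the per-link loss can be estimated as below. The urban constants a = 9.61 and b = 0.16, the 1 dB and 20 dB LoS/NLoS excess losses, the 2 GHz carrier, the reading of H0 = 400 m and H1 = 800 km as UAV and LEO altitudes, and all function names are assumptions, not the paper's Equations (2)–(4); shadowing and multibeam interference are omitted.

```python
import math

C = 299_792_458.0  # speed of light (m/s)


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss (dB) of a line-of-sight link."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / C)


def p_los(elevation_deg: float, a: float = 9.61, b: float = 0.16) -> float:
    """Sigmoid LoS probability versus elevation angle (assumed urban a, b)."""
    return 1.0 / (1.0 + a * math.exp(-b * (elevation_deg - a)))


def g2a_mean_loss_db(horizontal_m: float, height_m: float, freq_hz: float,
                     eta_los_db: float = 1.0, eta_nlos_db: float = 20.0) -> float:
    """Expected G2A loss: FSPL plus LoS/NLoS excess losses weighted by P_LoS."""
    distance = math.hypot(horizontal_m, height_m)
    elevation = math.degrees(math.atan2(height_m, horizontal_m))
    p = p_los(elevation)
    return fspl_db(distance, freq_hz) + p * eta_los_db + (1.0 - p) * eta_nlos_db


if __name__ == "__main__":
    f = 2e9  # hypothetical 2 GHz carrier frequency
    # G2A: ground user 1 km from the UAV's ground projection, UAV at 400 m
    print(round(g2a_mean_loss_db(1000.0, 400.0, f), 1))
    # A2S: zenith geometry between a 400 m UAV and an 800 km LEO satellite
    print(round(fspl_db(800e3 - 400.0, f), 1))
```

Because the LoS probability rises with elevation, a ground user close to the UAV's ground projection sees less excess loss than a distant user at the same UAV altitude, which is the behavior a probabilistic LoS channel is meant to capture.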

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| N | 5 | | 1.5 W |
| I | 1∼6 | | 3 W |
| H0 | 400 m | | dBm |
| H1 | 800 km | | 30 dB |
| | 2 km × 2 km | | [10, 200] Mbit |
| | [1, 100] GFLOPS | | 1000 Mbit |
| | [10, 10,000] ms | K | 3 |
| | [5, 30] GFLOPS | | 10 dB |
| | 500 GFLOPS | | 30% |
| | 5 MHz | | 1200 W/m² |
| | 5 MHz | M | 1 m² |
| | 100 MHz | | 0.8 kWh |
| | 100 MHz | L | 100 |
| | 0.2 W | | 0.5 W |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qi, Y.; Du, Y.; Guo, Y.; Hao, J. Task Offloading and Resource Allocation Strategy in Non-Terrestrial Networks for Continuous Distributed Task Scenarios. Sensors 2025, 25, 6195. https://doi.org/10.3390/s25196195
