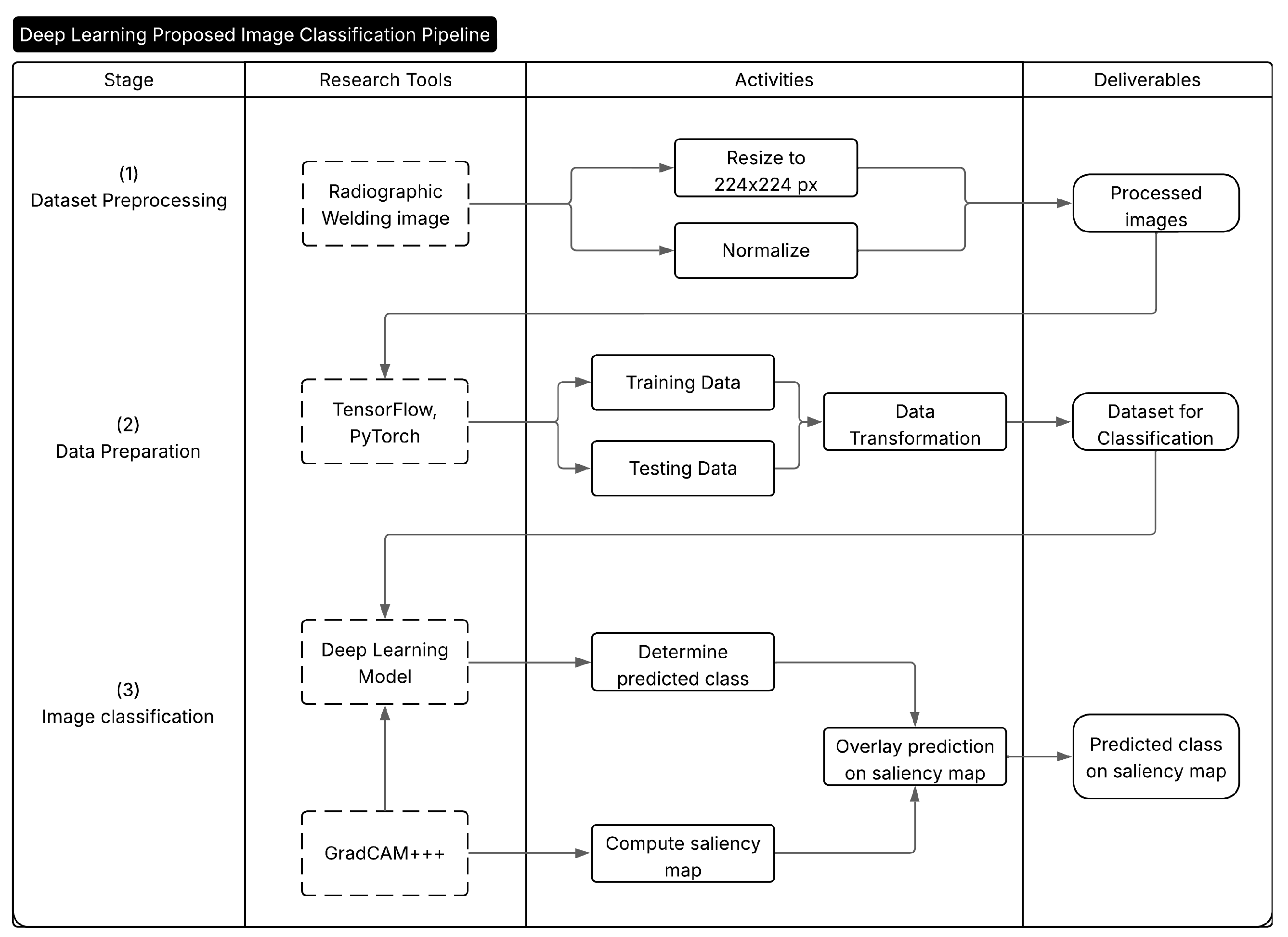

4.1. RIAWELC Dataset

The experiments in this study were conducted using a labeled dataset of weld radiographic images compiled and annotated in prior works [30,31]. The images were acquired with industry-standard X-ray equipment in a real, undisclosed manufacturing environment. Although the original publication does not report the specific equipment or its acquisition parameters, the images reflect real industrial inspection scenarios aligned with the weld-inspection standards discussed below, which supports the dataset's suitability as a benchmark for image classification.

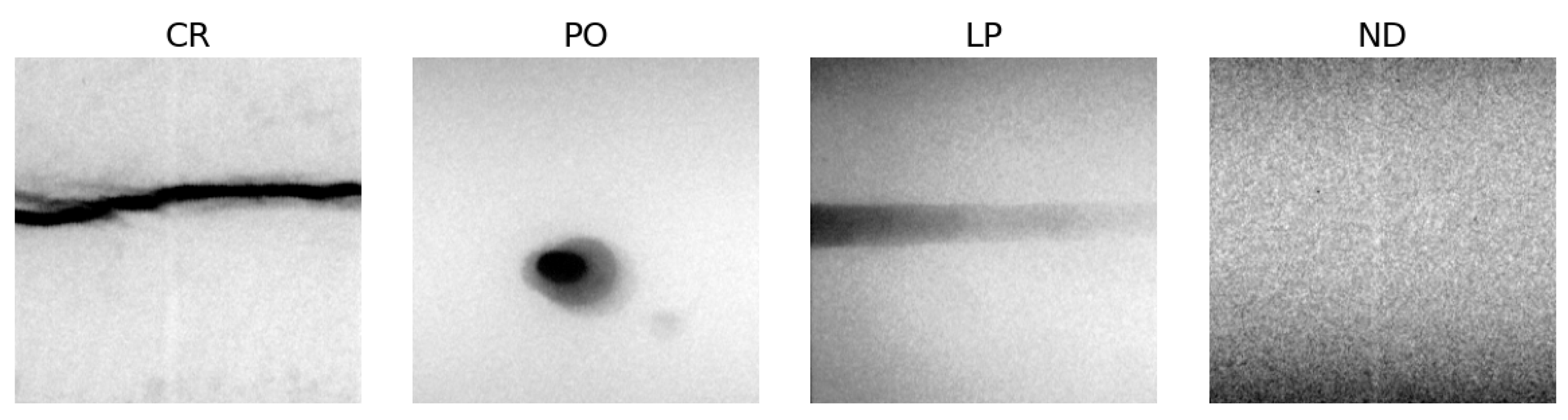

The dataset comprises 24,407 grayscale radiographic images, each assigned to one of four categories: three defect types, namely cracking (CR), porosity (PO), and lack of penetration (LP), and a no-defect (ND) category. These classes represent common issues encountered in weld inspection, and detecting them is critical for ensuring structural integrity in industrial manufacturing. Each sample corresponds to a cropped region of interest, standardized to 224 × 224 pixels, extracted from a larger weld radiograph. The annotations were performed by domain experts and reflect real-world variations in defect shape, contrast, and noise, making the dataset a representative benchmark for defect classification. Furthermore, these classes are consistent with the categories of discontinuities defined in major international standards for weld inspection. For example, the AWS D1.1 [1] Structural Welding Code explicitly lists cracks, porosity, and incomplete penetration as rejectable discontinuities due to their impact on weld integrity. Similarly, ISO 17636-1 [32] provides guidelines for radiographic testing techniques, while ISO 10675-1 [33] defines acceptance levels for such discontinuities. This alignment, summarized in Table 2, reinforces the dataset's relevance for industrial applications.

The dataset was initially stratified by class into training (65%), validation (25%), and testing (10%) subsets. Table 3 summarizes the class distribution across these splits, and a representative example from each class is shown in Figure 3.
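A class-stratified 65/25/10 partition of this kind can be sketched in NumPy as follows. This is an illustration under assumptions, not the original authors' code: the per-class shuffling, the rounding rule, the seed, and the function name `stratified_three_way_split` are hypothetical, and the toy label counts below are not the RIAWELC class counts (those are given in Table 3).

```python
import numpy as np

def stratified_three_way_split(labels, fractions=(0.65, 0.25, 0.10), seed=0):
    """Split sample indices into train/val/test, class by class.

    Each class is shuffled independently and cut according to `fractions`,
    so every subset keeps (approximately) the original class proportions.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    splits = ([], [], [])
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = int(round(fractions[0] * len(idx)))
        n_val = int(round(fractions[1] * len(idx)))
        splits[0].extend(idx[:n_train])
        splits[1].extend(idx[n_train:n_train + n_val])
        splits[2].extend(idx[n_train + n_val:])  # remainder goes to test
    return tuple(np.array(s, dtype=int) for s in splits)

# Hypothetical label vector for the four classes; counts are illustrative only.
labels = np.repeat(["CR", "PO", "LP", "ND"], [400, 300, 200, 100])
train_idx, val_idx, test_idx = stratified_three_way_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))  # 650 250 100
```

Because each class is cut separately, rounding happens per class, so subset sizes on a real dataset can differ by a few samples from a single global 65/25/10 cut.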

CNNs such as the proposed WeldVGG are particularly well suited to this dataset because the grayscale radiographs encode weld defects as localized edge- and texture-based patterns (e.g., crack tips, pore clusters, root gaps), which CNNs learn effectively through small receptive fields and hierarchical feature stacking. Crucially, the dataset's scale (24,407 annotated tiles across CR/PO/LP/ND) provides sufficient supervised signal for training compact or moderate-capacity CNNs, and prior experiments on the same corpus [30,31] have already demonstrated high test accuracy with a lightweight model, indicating that the defect classes are separable in a CNN feature space. These properties make CNNs a natural and empirically validated choice for this benchmark.

4.2. GDXray Weld Subset (Series W0003)

To evaluate cross-dataset generalization, we employed the weld subset of the GDXray database [34]. Although the welding category of GDXray includes four distinct series (W0001–W0004), only Series W0003 was used in this study, as it provides digitized radiographs from a round-robin test conducted by BAM in accordance with ISO 17636-1, together with the accompanying annotation file.

Series W0003 contains 68 radiographs in total, of which 38 include detailed defect annotations in the accompanying Excel metadata. Each annotated radiograph was manually partitioned into 1-cm-wide segments (248 pixels at the native resolution), which were subsequently resized to 224 × 224 pixels, following the same convention as the RIAWELC dataset. Each crop was then manually labeled according to the defect type reported in the annotation file.
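The partition above was performed manually; the NumPy sketch below only automates the same geometry to make it concrete. It rests on assumptions not stated in the text: the weld runs horizontally, each 248-pixel segment spans the full image height, a trailing partial segment is dropped, and nearest-neighbour interpolation is used for the resize (the interpolation actually used is not reported). `segment_radiograph` and `resize_nearest` are hypothetical helpers.

```python
import numpy as np

SEGMENT_WIDTH_PX = 248   # 1 cm at the native W0003 resolution (from the text)
TARGET_SIZE = 224        # RIAWELC crop size

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize via index arithmetic (illustrative only)."""
    h, w = img.shape
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    return img[rows[:, None], cols[None, :]]

def segment_radiograph(img, seg_w=SEGMENT_WIDTH_PX, size=TARGET_SIZE):
    """Cut a radiograph into consecutive full-height strips of width seg_w
    along the (assumed horizontal) weld axis and resize each to size x size.
    A trailing strip narrower than seg_w is dropped."""
    n_segments = img.shape[1] // seg_w
    return [resize_nearest(img[:, k * seg_w:(k + 1) * seg_w], size, size)
            for k in range(n_segments)]

# Hypothetical 8-bit radiograph: 300 px tall, 1000 px long.
radiograph = np.random.default_rng(0).integers(0, 256, size=(300, 1000), dtype=np.uint8)
crops = segment_radiograph(radiograph)
print(len(crops), crops[0].shape)  # 4 (224, 224)
```

Resizing a full-height strip directly to 224 × 224 changes its aspect ratio unless the strip height is close to 248 pixels; a real pipeline might crop vertically around the weld bead first.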

For consistency with the RIAWELC setup, only the four common categories were considered: cracking (CR), porosity (PO), lack of penetration (LP), and no defect (ND). Crops containing more than one defect type were discarded to avoid label ambiguity. After this filtering step, a balanced evaluation subset of 120 images (30 per class) was constructed; the distribution of defect types is shown in Table 4. This curated subset ensures compatibility with the RIAWELC experimental setup while preserving the intrinsic variability of GDXray radiographs, providing a more realistic test of cross-dataset generalization. Sample labeled images are shown in Figure 4.
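The filtering and balancing step can be illustrated with a short sketch, assuming each crop's annotations are available as a set of class codes (with defect-free crops labeled `{"ND"}`) and that the 30 crops per class are drawn at random. The text does not say how the 30 crops were chosen, so the random sampling, the seed, and the names `build_balanced_subset` and `crop_labels` are hypothetical.

```python
import random
from collections import defaultdict

CLASSES = ("CR", "PO", "LP", "ND")

def build_balanced_subset(crop_labels, per_class=30, seed=0):
    """crop_labels: dict crop_id -> set of class codes present in that crop.
    Crops with more (or fewer) than one label are discarded, then
    `per_class` single-label crops are sampled for each class."""
    by_class = defaultdict(list)
    for crop_id, labels in crop_labels.items():
        if len(labels) != 1:          # ambiguous multi-defect (or unlabeled) crop
            continue
        (label,) = labels
        if label in CLASSES:
            by_class[label].append(crop_id)
    rng = random.Random(seed)
    subset = {}
    for cls in CLASSES:
        if len(by_class[cls]) < per_class:
            raise ValueError(f"only {len(by_class[cls])} single-label crops for {cls}")
        for crop_id in rng.sample(sorted(by_class[cls]), per_class):
            subset[crop_id] = cls
    return subset

# Hypothetical annotations: 40 single-label crops per class plus 10 multi-defect crops.
crop_labels = {f"{c}_{i}": {c} for c in CLASSES for i in range(40)}
crop_labels.update({f"mixed_{i}": {"CR", "PO"} for i in range(10)})
subset = build_balanced_subset(crop_labels)
print(len(subset))  # 120
```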

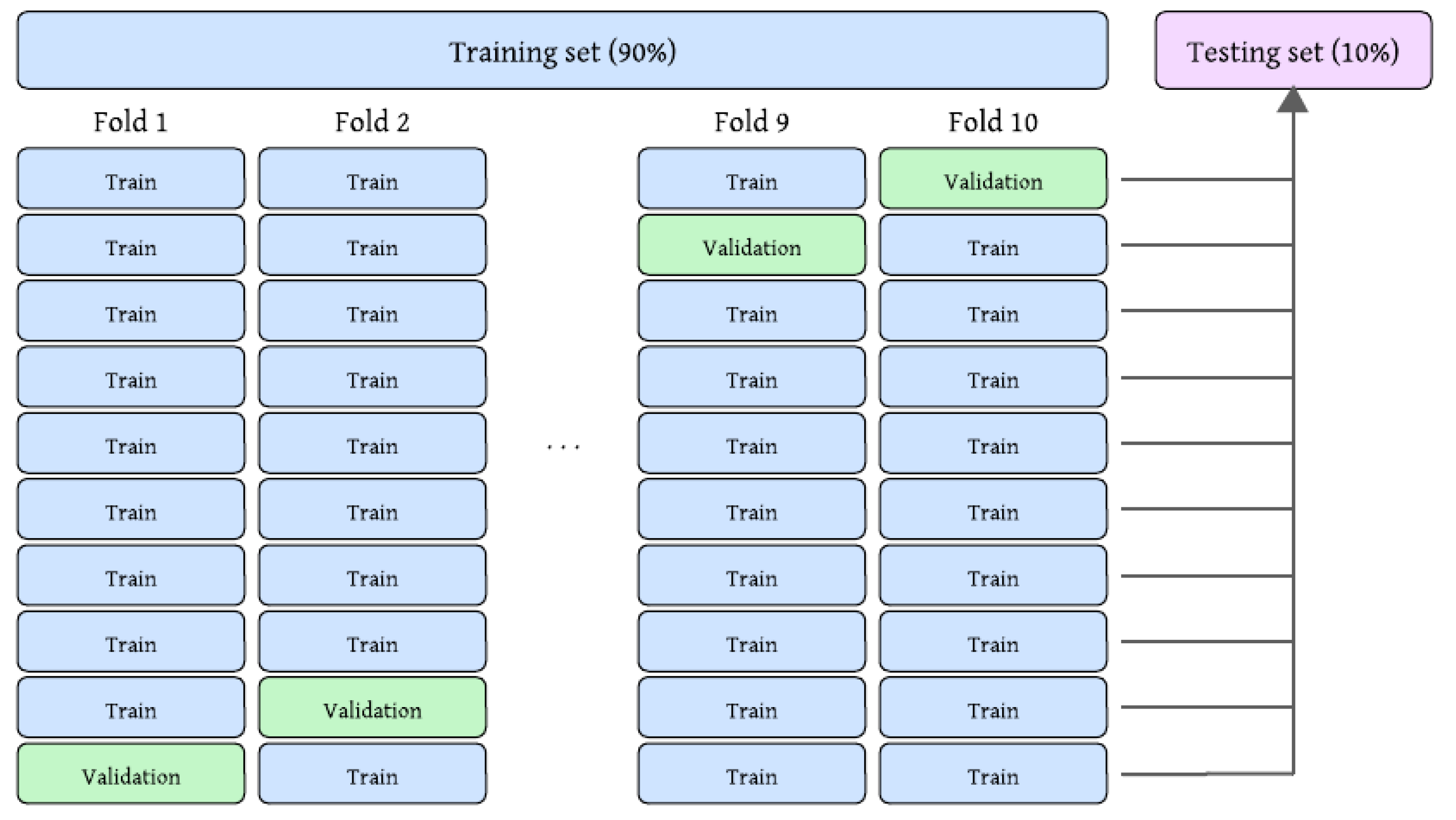

4.3. Experimental Setup

All in-domain experiments were conducted on the RIAWELC dataset described in Section 4.1. The original training and validation splits were merged into a combined set of 21,964 images, preserving the class proportions. A stratified 10-fold cross-validation protocol was applied to this set for model training and validation, so that each image was used once for validation and nine times for training. A fixed holdout test set of 2443 samples (10% of the total data) was reserved for final evaluation, as depicted in Figure 5.
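The cross-validation protocol on the merged pool can be sketched with scikit-learn's `StratifiedKFold`. The shuffling flag, the random seed, and the synthetic label vector are assumptions for illustration; the holdout test set is kept entirely outside this loop.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Hypothetical labels for the merged train+validation pool (class codes 0-3).
rng = np.random.default_rng(0)
y_pool = rng.integers(0, 4, size=1000)
X_pool = np.arange(len(y_pool)).reshape(-1, 1)  # placeholder features; only indices matter

skf = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)
val_count = np.zeros(len(y_pool), dtype=int)
for train_idx, val_idx in skf.split(X_pool, y_pool):
    val_count[val_idx] += 1
    # A fresh model would be trained on train_idx here, with its checkpoint
    # selected on val_idx; each of the 10 resulting models is later scored
    # on the same fixed holdout test set.

# Every pooled sample is validated exactly once (and used for training 9 times).
print(val_count.min(), val_count.max())  # 1 1
```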

The primary architecture investigated is WeldVGG, a VGG-inspired convolutional neural network (CNN) implemented from scratch in PyTorch (see Section 3.4). To provide a modern and computationally efficient baseline, MobileNetV3 [29] was also included for comparison. MobileNetV3 combines depthwise separable convolutions, inverted residual blocks, and squeeze-and-excitation attention to achieve high accuracy with substantially fewer parameters than WeldVGG, making it suitable for deployment in resource-constrained environments. For both CNNs, input images were resized to 224 × 224 pixels and normalized prior to training.

Additionally, four traditional classifiers, namely Decision Tree, Random Forest, K-Nearest Neighbors (KNN), and Support Vector Classifier (SVC), were implemented using Scikit-learn. For these models, images were resized to a smaller resolution to reduce computational cost and feature dimensionality. The resized images were flattened into one-dimensional vectors, standardized, and compressed via PCA to 100 components prior to training. Hyperparameter tuning was performed via GridSearchCV with 5-fold cross-validation on the training folds. The explored parameter ranges are provided in Table A1, and the optimal configurations found for each classifier are summarized in Table A2 (Appendix A).
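The flatten, standardize, PCA, and grid-search chain described above maps naturally onto a scikit-learn `Pipeline`. The sketch below is an assumption-laden illustration: the 32 × 32 input size is a placeholder (the actual downscaled resolution is not given here), Random Forest stands in for any of the four classifiers, the parameter grid is hypothetical (the explored ranges are in Table A1), and the data are random.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical stand-in data: 200 downscaled 32x32 grayscale crops, 4 classes.
rng = np.random.default_rng(0)
images = rng.random((200, 32, 32))
y = rng.integers(0, 4, size=200)
X = images.reshape(len(images), -1)   # flatten each image into a 1-D vector

pipe = Pipeline([
    ("scale", StandardScaler()),       # standardize each pixel feature
    ("pca", PCA(n_components=100)),    # compress to 100 components
    ("clf", RandomForestClassifier(random_state=0)),
])
param_grid = {"clf__n_estimators": [50, 100], "clf__max_depth": [None, 10]}  # hypothetical grid
search = GridSearchCV(pipe, param_grid, cv=5, scoring="f1_macro")
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Placing the scaler and PCA inside the pipeline means both are refitted on each inner training split, so no statistics from the validation split leak into the preprocessing; `scoring="f1_macro"` matches the F1-based selection criterion used for the classical models.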

Both CNN architectures were trained for 30 epochs per fold using the Adam optimizer, cross-entropy loss, and a batch size of 32. Model weights were saved at the epoch with the highest validation F1-score. Traditional classifiers were likewise selected based on their best F1-score during tuning. A concise overview of all experimental settings is provided in Table 5.

All experiments were conducted in Kaggle's hosted environment. For training the CNN architectures, the runtime was configured with two NVIDIA T4 GPUs, 16 virtual CPUs, and 30 GB of RAM. Traditional machine learning models were executed on CPU resources.

4.4. Evaluation Metrics

The classification performance of the proposed and baseline models was evaluated using standard metrics: accuracy, precision ($P$), recall ($R$), and F1-score ($F1$), the harmonic mean of precision and recall. These metrics are computed from the confusion matrix, based on the number of correctly and incorrectly predicted class labels across all test samples. Precision is defined as the proportion of correctly predicted positive samples among all samples predicted as positive for a given class, while recall quantifies the proportion of actual positive samples that were correctly identified. The F1-score, which balances both measures, is computed as follows:

$$F1 = \frac{2 \cdot P \cdot R}{P + R}$$

Additionally, overall accuracy is reported, defined as the ratio of total correct predictions to the total number of samples:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$, $TN$, $FP$, and $FN$ refer to true positives, true negatives, false positives, and false negatives, respectively.

For multi-class classification, all metrics were computed using macro-averaging, which calculates the unweighted mean of each metric across all classes. This approach treats all classes equally, regardless of their frequency, and is particularly appropriate in the presence of class imbalance. For robust test evaluation, we trained 10 models using stratified 10-fold cross-validation on the training set. Each model was selected based on its best validation F1-score within its fold. All 10 trained models were then independently evaluated on the same holdout test set (10% of data). Final metrics are reported as mean ± standard deviation over these 10 evaluations.
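Macro-averaging can be made concrete by computing the per-class quantities directly from a multi-class confusion matrix. The NumPy sketch below uses a hypothetical 4 × 4 matrix (not one of the reported results) and a hypothetical helper `macro_metrics`; the row-normalized matrix at the end is the form used in normalized confusion-matrix plots.

```python
import numpy as np

def macro_metrics(cm):
    """cm[i, j] = number of samples of true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class k but belonging elsewhere
    fn = cm.sum(axis=1) - tp   # belonging to class k but predicted elsewhere
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    # Macro-averaging: unweighted mean of the per-class values.
    return {"accuracy": float(accuracy), "precision": float(precision.mean()),
            "recall": float(recall.mean()), "f1": float(f1.mean())}

# Hypothetical matrix: one sample of class 0 misclassified as class 1.
cm = np.array([[9, 1, 0, 0],
               [0, 10, 0, 0],
               [0, 0, 10, 0],
               [0, 0, 0, 10]])
m = macro_metrics(cm)
print({k: round(v, 4) for k, v in m.items()})
# {'accuracy': 0.975, 'precision': 0.9773, 'recall': 0.975, 'f1': 0.9749}

cm_norm = cm / cm.sum(axis=1, keepdims=True)   # each row sums to 1 (per-class recall on the diagonal)
```

A single error lowers the recall of the true class and the precision of the predicted class, which is why macro precision and macro recall differ here even though only one sample is misclassified.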

To quantitatively evaluate the interpretability of the Grad-CAM++ visualizations, we adopted the ROAD (Remove And Debias) metric [35], a class-sensitive approach designed to measure the faithfulness of saliency maps. ROAD assesses the impact of progressively removing the most or least relevant pixels, as indicated by the saliency map, and records the resulting change in the model's confidence for the predicted class. Specifically, we employ the ROADCombined variant, which aggregates the confidence changes across multiple removal thresholds (e.g., 20%, 40%, 60%, 80%), yielding a single scalar score per sample. This variant was implemented using the pytorch-grad-cam library [36]. The ROAD score is defined as follows:

$$\mathrm{ROAD}(x) = \frac{1}{|\mathcal{P}|} \sum_{p \in \mathcal{P}} \frac{\Delta^{(p)}_{\mathrm{LeRF}}(x) - \Delta^{(p)}_{\mathrm{MoRF}}(x)}{2}, \qquad \Delta^{(p)}(x) = f_c\big(\tilde{x}^{(p)}\big) - f_c(x)$$

where $\mathcal{P}$ is the set of removal percentiles, $f_c(\cdot)$ is the model's score for the predicted class $c$, and $\tilde{x}^{(p)}$ is the image with $p\%$ of its pixels removed and imputed, in most-relevant-first (MoRF) or least-relevant-first (LeRF) order. Higher positive values indicate that the most relevant pixels, as identified by the saliency map, have a strong influence on the model's confidence, thereby validating the quality of the visual explanation. Conversely, near-zero or negative values suggest weak localization or misleading attribution.
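The aggregation step of ROADCombined can be sketched in a few lines, assuming the per-percentile class scores after MoRF and LeRF removal are already available. The pixel removal and imputation themselves are not shown, the function name `road_combined` is hypothetical, and the confidences below are invented for illustration. The combination follows the (LeRF change minus MoRF change) / 2 form, averaged over percentiles.

```python
import numpy as np

def road_combined(conf_orig, conf_morf, conf_lerf):
    """Combine per-percentile ROAD confidences into one score.

    conf_orig: model score for the target class on the unperturbed image.
    conf_morf / conf_lerf: scores after removing (and imputing) the p% most /
    least relevant pixels, one entry per percentile p.
    """
    delta_morf = np.asarray(conf_morf, dtype=float) - conf_orig  # usually negative
    delta_lerf = np.asarray(conf_lerf, dtype=float) - conf_orig  # ideally near zero
    return float(np.mean((delta_lerf - delta_morf) / 2))

# Hypothetical scores at the percentiles 20, 40, 60, 80.
good = road_combined(0.95, conf_morf=[0.60, 0.40, 0.30, 0.25],
                     conf_lerf=[0.94, 0.92, 0.90, 0.85])
bad = road_combined(0.95, conf_morf=[0.94, 0.92, 0.90, 0.88],
                    conf_lerf=[0.70, 0.60, 0.50, 0.40])
print(round(good, 4), round(bad, 4))  # 0.2575 -0.18
```

Algebraically, the unperturbed score cancels out, so the metric reduces to the mean gap between LeRF and MoRF confidences. A negative score (as in `bad`) means that removing the pixels the map calls least relevant hurts the prediction more than removing those it calls most relevant.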

4.5. Results

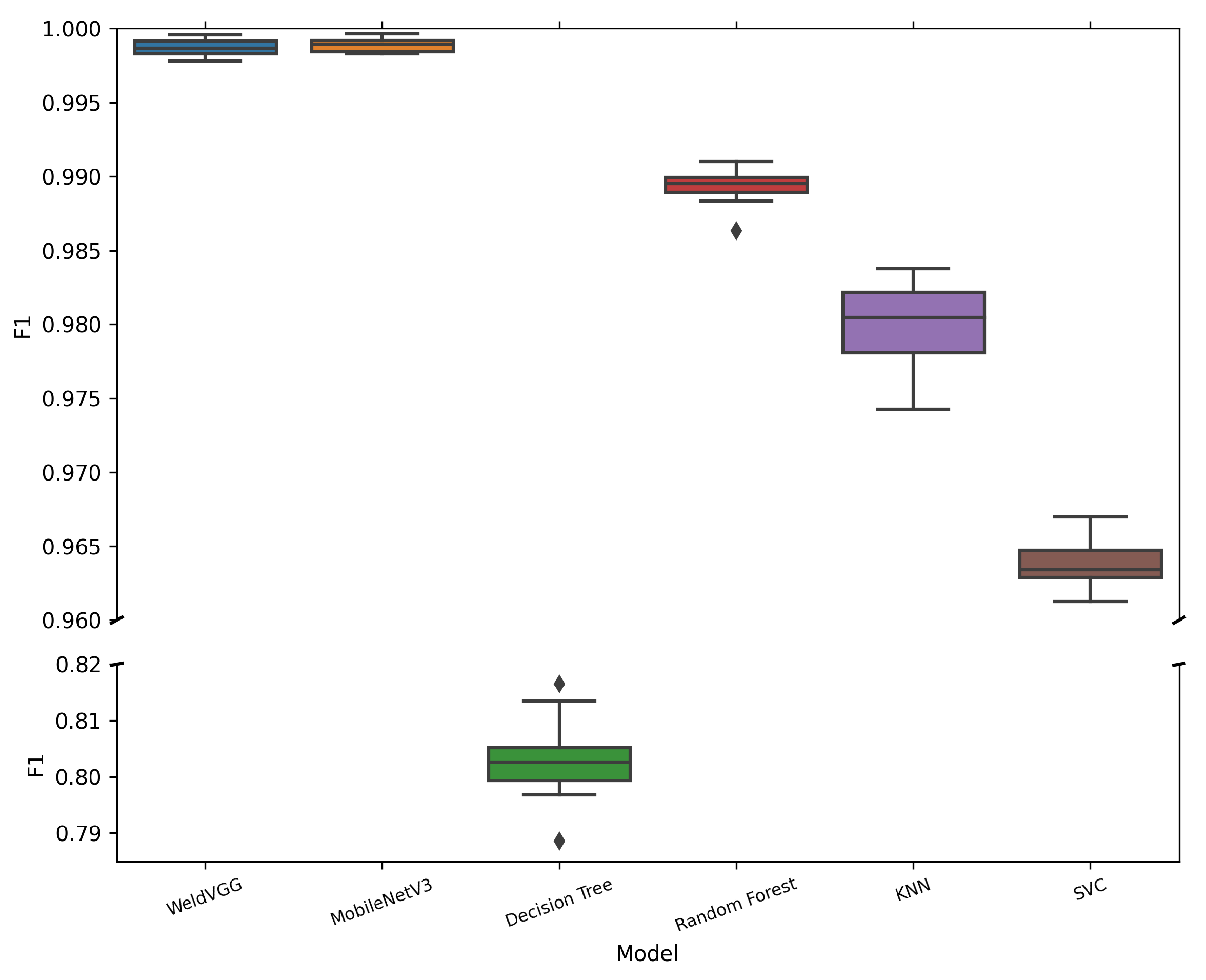

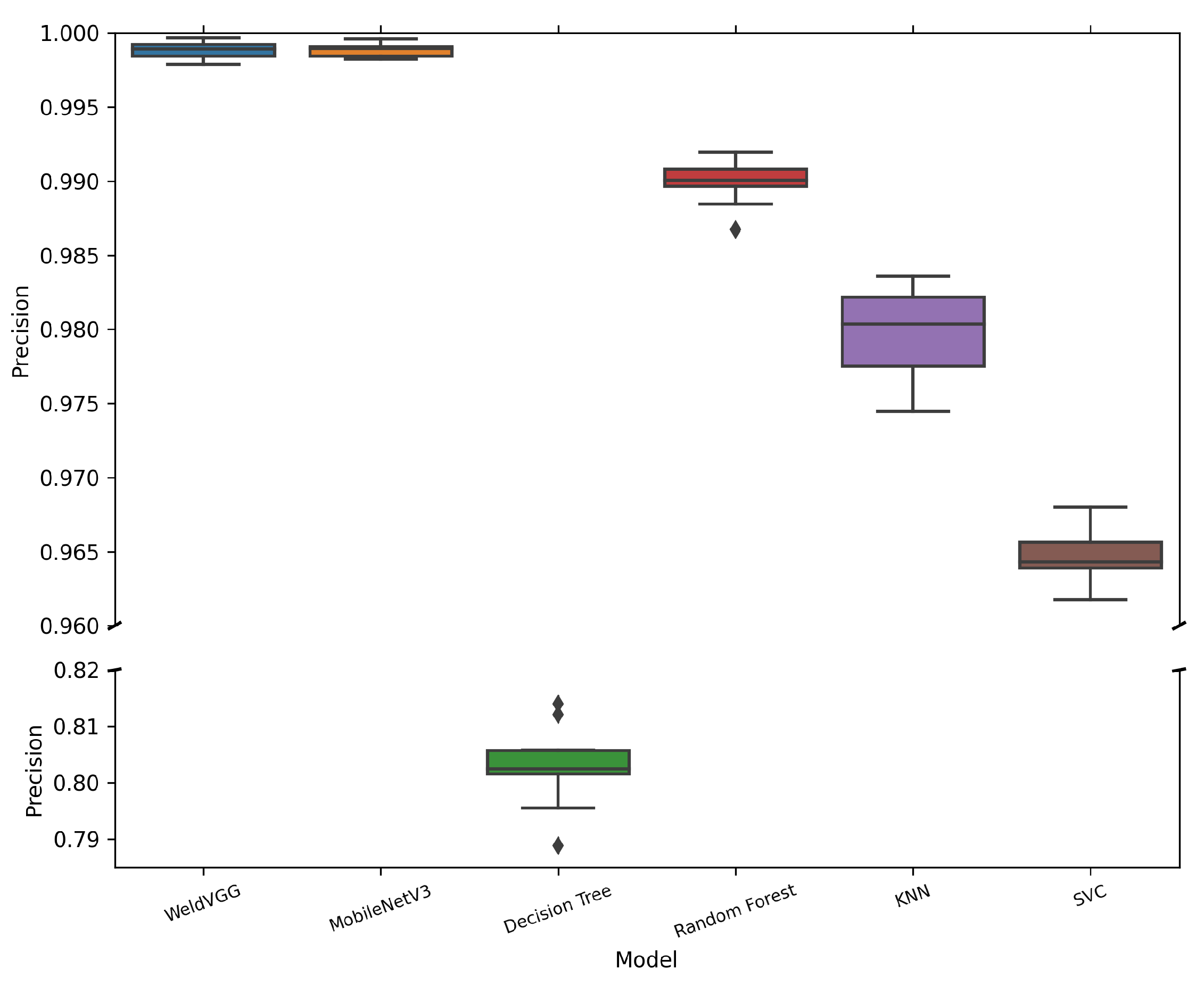

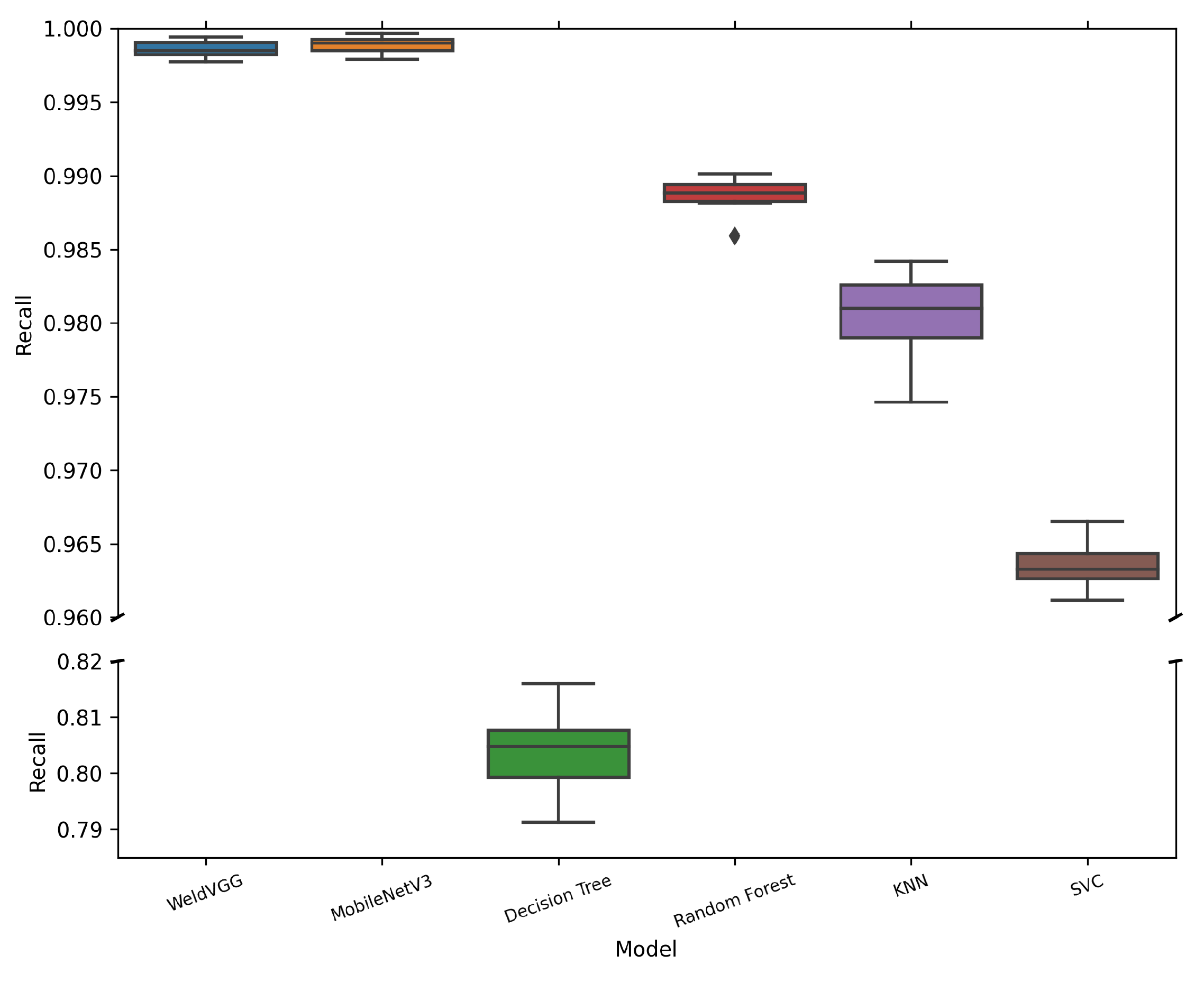

Table 6 summarizes the mean and standard deviation of macro-averaged metrics across 10 cross-validation folds for all evaluated models. Both deep learning models, MobileNetV3 and WeldVGG, achieved near-perfect classification results, substantially outperforming traditional machine learning baselines. MobileNetV3 slightly surpassed WeldVGG across all metrics, confirming the effectiveness of modern lightweight architectures when applied to weld radiographs. Nevertheless, WeldVGG remained highly competitive, with marginal differences that were within statistical variation. In contrast, the shallow baselines exhibited a clear performance gap, with Random Forest providing the strongest results among them, followed by KNN, SVC, and Decision Tree. The boxplots in Figure 6, Figure 7, Figure 8, and Figure 9 provide further insight into the stability and variability of these outcomes across folds.

Figure 6 illustrates the distribution of macro-averaged Accuracy values across the evaluated models. Both WeldVGG and MobileNetV3 achieved near-perfect performance across all folds, with median accuracies of 0.999 and extremely narrow interquartile ranges (IQR < 0.002). The absence of significant outliers indicates highly stable predictions, underscoring the ability of deep CNN architectures to consistently extract robust spatial features from weld radiographs.

Among the shallow baselines, Random Forest delivered the strongest results, though with slightly greater dispersion across folds, likely due to the fixed, low-dimensional feature space generated through PCA compression. KNN exhibited lower accuracy and wider variability, reflecting its sensitivity to data distribution shifts across folds. The SVC model showed even lower accuracy, with several folds producing outliers that suggest instability in decision boundaries. Finally, Decision Tree attained the lowest accuracy overall, with broad dispersion and multiple outliers, confirming its limited suitability for such complex visual classification tasks.

Figure 7 presents the distribution of macro-averaged F1-scores across all models, offering a deeper view into class-level consistency by balancing both precision and recall. Both WeldVGG and MobileNetV3 achieved near-perfect and highly stable F1-scores across all folds, confirming that their strong accuracy was not simply driven by majority class predictions but reflected consistent performance across all defect categories. The narrow interquartile ranges and absence of significant outliers demonstrate that both deep CNNs generalize reliably across folds.

Among the traditional baselines, Random Forest maintained the highest F1 performance, though with slightly wider dispersion and occasional outliers, suggesting occasional difficulties with minority class samples. KNN followed with competitive but more variable results, reflecting sensitivity to fold composition and intra-class variation. The SVC model exhibited lower overall F1-scores with a tighter distribution, indicating consistent but limited capacity to capture defect-specific patterns. Finally, Decision Tree showed the lowest and most unstable F1 performance, underscoring its inadequacy for complex weld radiograph classification.

Figure 8 shows that MobileNetV3 and WeldVGG consistently achieved the highest precision, with median values near 0.999 and extremely narrow interquartile ranges. This indicates that both CNNs are highly effective at minimizing false positives across defect categories. MobileNetV3 displayed slightly lower variability compared to WeldVGG, suggesting even more consistent decision boundaries. Such reliability is particularly important in industrial inspection, where false alarms can trigger unnecessary weld rework and production delays. Random Forest, while generally precise, exhibited occasional drops in precision, possibly linked to the inherent information loss caused by PCA compression or the model’s sensitivity to fold-specific distributions.

Finally, as shown in Figure 9, both WeldVGG and MobileNetV3 achieved near-perfect recall with negligible variability, reliably capturing true defect instances across all classes and folds. This high sensitivity is critical in weld inspection, as missed defects (false negatives) directly compromise structural integrity. The narrow interquartile ranges and absence of outliers highlight the robustness of both CNNs in consistently identifying defective regions. Among the baselines, Random Forest attained the strongest recall, though with occasional drops that suggest susceptibility to class imbalance and feature compression. KNN also performed reasonably well but exhibited broader variability, indicating dependence on data distribution across folds. SVC displayed lower overall recall, reflecting its tendency to miss defect cases. Decision Tree again showed the weakest recall, with both low scores and high variability, underscoring its inability to generalize for reliable defect detection.

Overall, the boxplot analysis shows that the depth and representational power of CNN architectures, particularly WeldVGG and MobileNetV3, translate into both higher average scores and greater stability across folds. This consistency is crucial for industrial deployment, where minimizing variability is as important as achieving high accuracy.

Table 7 highlights the best-performing fold for each model based on macro-averaged F1-score. Both WeldVGG and MobileNetV3 achieved near-perfect classification (F1 = 0.9996), confirming their robustness and reliability. Among the traditional baselines, Random Forest was the strongest, but the gap to the CNN models remained substantial.

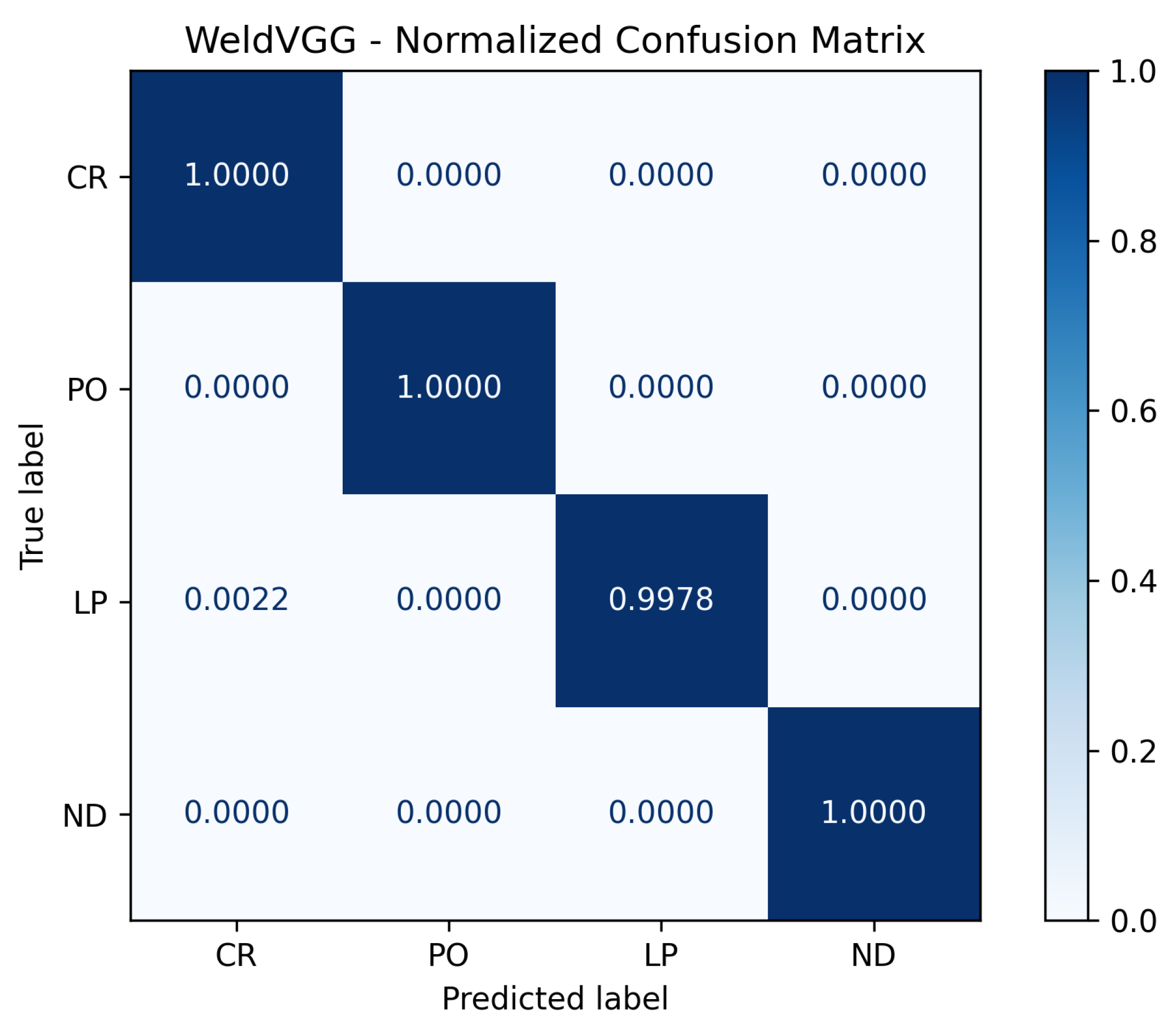

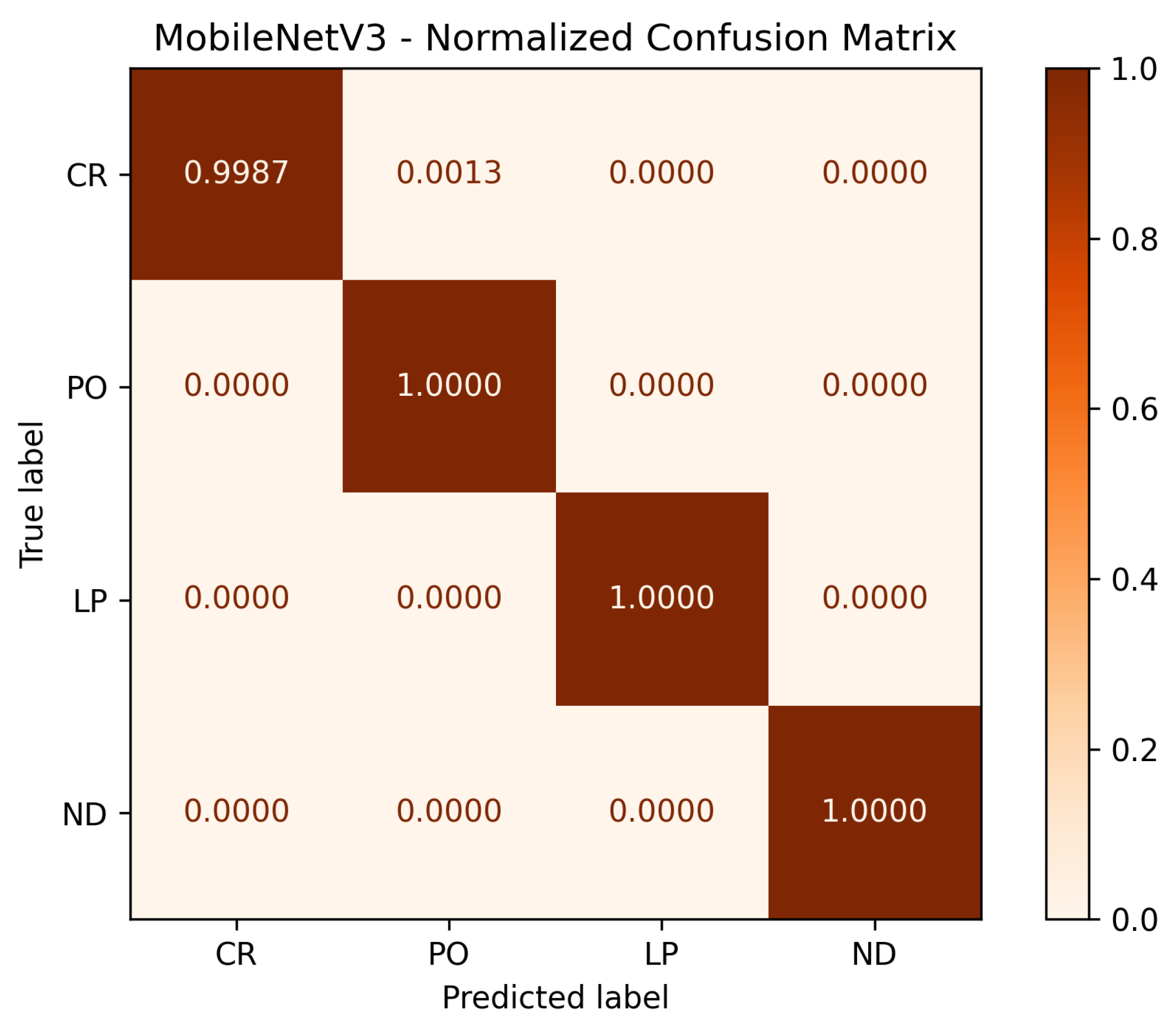

To further analyze per-class performance, Figure 10 and Figure 11 display the normalized confusion matrices corresponding to the best-performing fold for the WeldVGG and MobileNetV3 models, respectively. Both CNNs achieved flawless recognition of the no defect (ND) and porosity (PO) classes, underscoring their ability to reliably distinguish these categories. WeldVGG showed a single instance of confusion between lack of penetration (LP) and cracking (CR), while MobileNetV3 produced one minor misclassification between CR and PO. WeldVGG's error is plausibly explained by the visual similarity of lack of penetration and cracks in radiographs, where both can appear as thin, elongated dark indications. Overall, both models demonstrated near-perfect per-class discrimination, each committing a single error on a different pair of classes. These results confirm the robustness of both architectures in accurately detecting weld defects at the class level.

Although both CNNs achieved near-perfect performance on the RIAWELC dataset, these results may not directly translate to unconstrained industrial environments. RIAWELC, while realistic and expert-annotated, is composed of cropped regions of interest with clearly delineated defect patterns. Such conditions inherently reduce background noise and imaging variability, making the classification task more tractable than real-world inspections. To address these limitations, the following sections first examine computational complexity and statistical robustness, before turning, in Section 4.9, to a dedicated cross-dataset evaluation on GDXray to assess generalization.

4.7. Statistical Validation of CNN Performance

To assess whether the observed performance differences between classifiers were statistically meaningful, pairwise comparisons were conducted using the Wilcoxon signed-rank test [37] over four macro-averaged metrics: accuracy, F1-score, precision, and recall. Each comparison used a one-sided test, evaluating whether each convolutional architecture (WeldVGG or MobileNetV3) significantly outperformed the baseline models across the 10 cross-validation folds.

Table 10 summarizes the direction of the performance differences, their consistency across all metrics, and whether statistical significance was achieved at the chosen significance level. The p-values for each metric and comparison are provided in Table 11 for transparency and detailed inspection.

These results confirm that both CNN architectures (WeldVGG and MobileNetV3) deliver statistically significant improvements over the shallow baselines, consistently across all evaluation metrics. However, the pairwise comparison between the two deep models did not reach statistical significance in either direction, despite MobileNetV3 showing slightly higher average scores. This suggests that, while the deeper WeldVGG achieves near-perfect performance, a compact architecture such as MobileNetV3 can achieve comparable results at reduced computational cost, offering a competitive lightweight alternative for industrial deployment.
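A one-sided paired Wilcoxon signed-rank test over fold-wise scores can be run with SciPy as sketched below. The per-fold macro-F1 values are invented placeholders, not the values in Table 11. One property worth keeping in mind: with 10 paired folds there are only 2^10 = 1024 sign patterns, so the smallest one-sided p-value the exact test can produce is 1/1024 ≈ 0.00098, reached when every difference favors the same model.

```python
import numpy as np
from scipy.stats import wilcoxon

# Hypothetical per-fold macro-F1 scores (10 folds, paired by fold); illustrative only.
cnn = np.array([0.998, 0.999, 0.997, 0.999, 1.000, 0.998, 0.999, 0.997, 0.998, 0.999])
baseline = np.array([0.910, 0.925, 0.903, 0.918, 0.941, 0.907, 0.921, 0.899, 0.932, 0.915])

# One-sided alternative: the paired differences (cnn - baseline) are shifted above zero.
res = wilcoxon(cnn, baseline, alternative="greater")
print(res.statistic, res.pvalue)
```

By default (`zero_method="wilcox"`), folds on which two models score identically are dropped before ranking, which reduces the effective sample size. This can matter when comparing two near-perfect CNNs that tie on several folds.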

4.9. Generalization to External Datasets (GDXray)

Although the proposed architecture achieves near-perfect performance on RIAWELC, such results may not directly translate to unconstrained industrial environments. RIAWELC consists of cropped regions of interest with clearly delineated defect patterns, which reduces background noise and standardizes image conditions. In real-world inspections, however, radiographs often vary substantially in quality, acquisition parameters, and defect appearance. It is therefore essential to evaluate whether the proposed models generalize beyond the dataset on which they were trained.

To this end, we conducted cross-dataset experiments using the weld subset of the publicly available GDXray database [34]. Two scenarios were considered:

Zero-shot evaluation: Models trained exclusively on RIAWELC were directly tested on GDXray without fine-tuning. This setting simulates deployment to novel inspection environments without access to retraining data.

Few-shot adaptation: A small number of labeled GDXray images (5 or 10 per class, in two separate settings) were used for fine-tuning, after which the models were evaluated on the remaining GDXray samples. This scenario reflects practical situations in which only a limited annotation budget is available for adapting to a new factory.
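With the balanced 120-image GDXray subset (30 per class), drawing k images per class for fine-tuning leaves 30 − k per class for evaluation: 100 images in the 5-shot setting and 80 in the 10-shot setting. The sketch below shows such a split, assuming random per-class sampling with a fixed seed; the crop identifiers and the helper `few_shot_split` are hypothetical, and the text does not state how the fine-tuning images were selected.

```python
import random

def few_shot_split(samples_by_class, k, seed=0):
    """Draw k labeled samples per class for fine-tuning (support set);
    the remaining samples form the evaluation (query) set."""
    rng = random.Random(seed)
    support, query = [], []
    for cls, ids in sorted(samples_by_class.items()):
        chosen = set(rng.sample(ids, k))
        support += [(i, cls) for i in ids if i in chosen]
        query += [(i, cls) for i in ids if i not in chosen]
    return support, query

# Balanced GDXray subset as described: 30 crops per class (ids hypothetical).
gdx = {c: [f"{c}_{i:02d}" for i in range(30)] for c in ("CR", "PO", "LP", "ND")}
for k in (5, 10):
    support, query = few_shot_split(gdx, k)
    print(k, len(support), len(query))
# 5 20 100
# 10 40 80
```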

Table 13 summarizes the mean accuracy, macro-F1, macro-Precision and macro-Recall performance across the 10 trained models per scenario.

The results confirm the anticipated domain shift: when transferred directly, both CNNs exhibited a substantial performance drop relative to their near-perfect RIAWELC scores, with macro-F1 decreasing to approximately 64%. This gap highlights the distributional differences between curated datasets and heterogeneous industrial radiographs. Because the in-domain scores were obtained on a held-out test set, the drop suggests that they reflect the homogeneity of RIAWELC rather than simple overfitting. Nevertheless, the zero-shot results remain well above chance level (25% for four balanced classes), indicating that both models retain transferable representations.

In the few-shot setting, the benefits of limited supervision are evident. With as few as five labeled samples per class, WeldVGG improved by more than 10 percentage points in both accuracy and macro-F1, while MobileNetV3 achieved more modest gains. With ten samples per class, WeldVGG reached 83.5% accuracy and 83.4% macro-F1, substantially narrowing the gap to in-domain performance. These results suggest that, in this setting, the deeper WeldVGG exploits limited supervision for domain adaptation more effectively than the lightweight MobileNetV3, whose transfer performance plateaus at a lower level.

Overall, these findings support the robustness of the proposed VGG-style CNN: despite a severe domain shift, it adapts effectively with minimal annotation effort. This suggests that, in realistic factory deployments, the cost of re-annotation can be kept low while recovering much of the performance lost to domain shift. At the same time, the persistent zero-shot performance drop underscores the need for future research on domain generalization and synthetic augmentation strategies [39] to ensure consistent weld defect detection under highly variable field conditions.

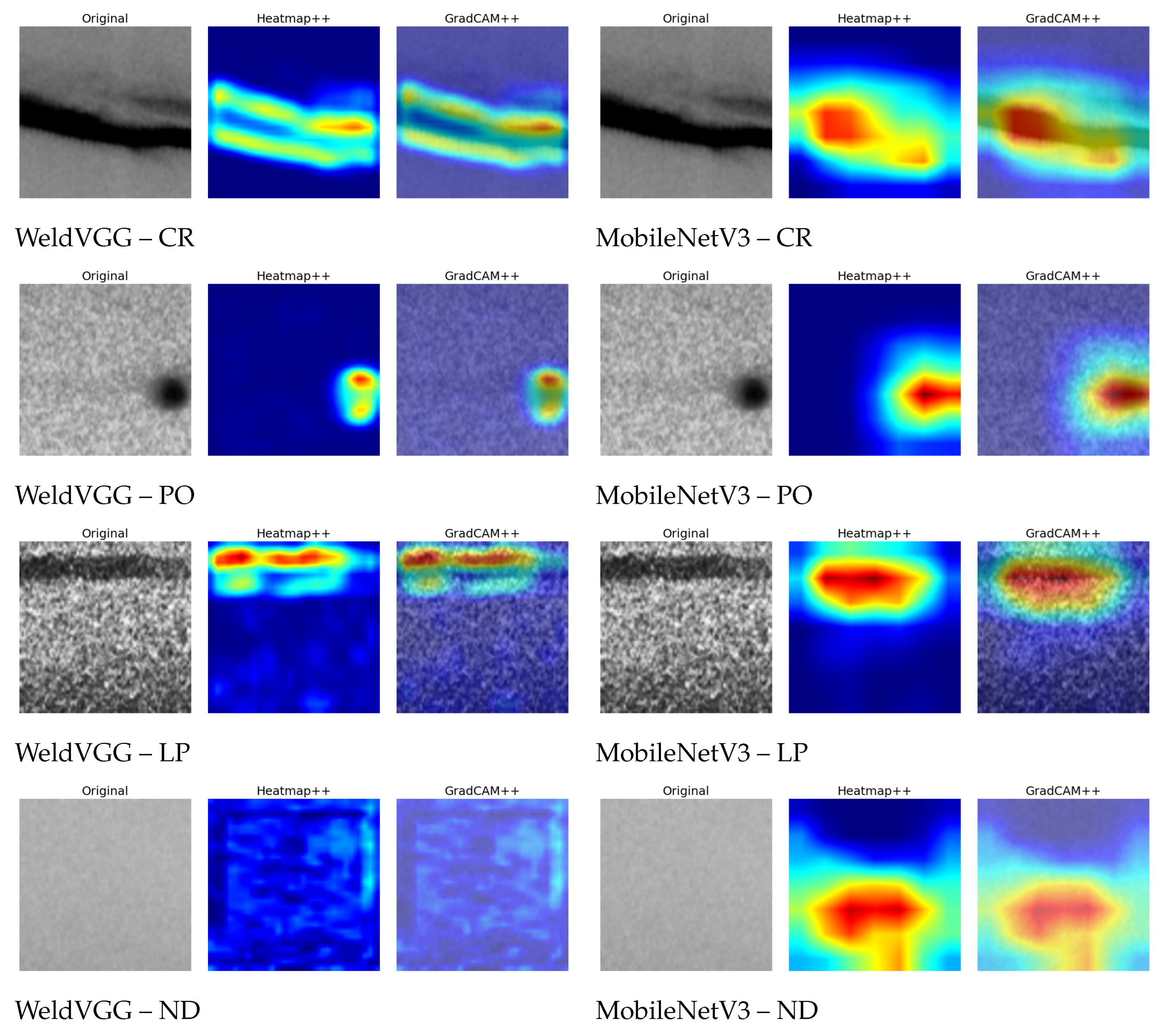

4.10. Interpretability Analysis via Grad-CAM++

Figure 12 presents a qualitative comparison of Grad-CAM++ visualizations for representative samples from each class, as generated by the WeldVGG and MobileNetV3 models. Visually, both models tend to focus on similar discriminative regions within each defect type, indicating that they have learned to attend to class-relevant patterns in the radiographs. However, MobileNetV3 consistently produces more intense heatmaps, especially for the cracking (CR), porosity (PO), and lack of penetration (LP) classes. This is reflected in the prevalence of red regions in its saliency maps, indicating higher attribution scores in those areas. In contrast, WeldVGG exhibits less sharply defined heatmaps, with comparatively weaker activations and broader, more diffuse attention.

An interesting distinction is observed for the ND class, where WeldVGG's Grad-CAM++ map is nearly empty, indicating the absence of dominant localized features, which is consistent with the homogeneous, low-texture nature of ND samples. MobileNetV3, however, displays residual activations and mild noise across the image, suggesting less confident or less focused reasoning in the absence of defect-specific features. This distinction highlights the ability of the proposed WeldVGG to suppress spurious responses in negative (ND) samples and reserve saliency for truly discriminative cues. In other words, WeldVGG's explanations are more consistent with treating the ND class as the absence of defects.
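Grad-CAM++ builds each heatmap as a ReLU of a weighted sum of the last convolutional feature maps, with per-map weights derived from the positive gradients of the class score. The NumPy sketch below follows the closed-form weighting from the Grad-CAM++ formulation, which, to our reading, matches the `GradCAMPlusPlus` class in pytorch-grad-cam. The function name `gradcam_pp`, the random feature maps, and the shapes are hypothetical. The sketch also shows one possible mechanism behind an empty ND map: if no gradient is positive, every weight is zero and the ReLU returns an all-zero map.

```python
import numpy as np

def gradcam_pp(activations, grads, eps=1e-7):
    """activations, grads: arrays of shape (K, H, W) for one image and one
    target class (feature maps A^k and dY^c/dA^k). Returns an (H, W) map."""
    g2 = grads ** 2
    g3 = g2 * grads
    sum_a = activations.sum(axis=(1, 2), keepdims=True)        # sum over pixels of A^k
    alpha = g2 / (2 * g2 + sum_a * g3 + eps)                   # pixel-wise coefficients
    alpha = np.where(grads != 0, alpha, 0.0)
    weights = (alpha * np.maximum(grads, 0)).sum(axis=(1, 2))  # one weight per feature map
    return np.maximum((weights[:, None, None] * activations).sum(axis=0), 0)

rng = np.random.default_rng(0)
A = np.maximum(rng.standard_normal((8, 14, 14)), 0)   # hypothetical post-ReLU feature maps
G = rng.standard_normal((8, 14, 14))                  # hypothetical gradients
heatmap = gradcam_pp(A, G)
print(heatmap.shape)                          # (14, 14)
print(np.all(gradcam_pp(A, -np.abs(G)) == 0)) # True: no positive gradient, empty map
```

For display, such maps are usually rescaled to [0, 1] per image, so absolute intensity comparisons between two models' heatmaps (as in Figure 12) depend on that normalization.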

To complement the qualitative Grad-CAM++ visualizations, we computed the ROADCombined metric as a scalar measure of interpretability fidelity. This metric quantifies how strongly the regions highlighted by saliency maps influence the model’s decisions, with higher values indicating greater alignment between attribution and prediction.

Table 14 reports both class-wise and overall ROAD scores across the 2443-image holdout test set, providing a detailed view of explanation quality under in-domain conditions.

The results highlight important differences in attribution behavior between the two models. MobileNetV3 attains higher mean ROAD scores overall (0.272 vs. 0.204), particularly in the defect classes CR (0.330), PO (0.387), and LP (0.381). These values suggest that its Grad-CAM++ maps concentrate more strongly on regions that influence predictions. However, this comes at some cost in stability: MobileNetV3 also exhibits a slightly larger standard deviation (0.198 vs. 0.192), reflecting greater variability across samples, and its score distribution includes negative values.

In contrast, WeldVGG produces lower mean ROAD values with greater consistency across images. This pattern indicates more conservative attribution, where the highlighted regions may be smaller but are more reliably tied to model decisions. This conservatism is especially evident in the ND class, where WeldVGG produces nearly empty saliency maps and a near-zero ROAD score. Although this initially appears unfavorable, it is in fact desirable for defect-free samples, since no specific region should dominate the explanation. Negative values, by contrast, arise when removing the most relevant pixels harms the prediction less than removing the least relevant ones (or even improves it), implying that the attribution has captured non-causal or counter-evidential content.

Taken together, these findings suggest a trade-off between attribution strength and consistency. MobileNetV3 yields higher ROAD values in defect classes, but may overemphasize or spuriously activate irrelevant regions, which could inflate its scores without improving interpretability. WeldVGG, on the other hand, favors steadier and more calibrated explanations that better reflect the presence or absence of discriminative features.

To assess whether these attribution patterns persist beyond the training domain, we further computed ROADCombined scores on the weld subset of the GDXray database. As described in Section 4.9, models were evaluated under zero-shot transfer and with limited few-shot adaptation. The resulting scores are presented in Table 15. This cross-dataset analysis provides a more stringent test of interpretability, since models are confronted with images acquired under different conditions and annotation protocols.

The GDXray evaluation in Table 15 reveals that both models achieve ROAD scores in a similar range to the in-domain setting, but with greater variability and less stable trends. In the zero-shot case, MobileNetV3 again obtains slightly higher overall scores than WeldVGG, largely due to stronger attribution in the defect classes CR and LP. However, this advantage diminishes under few-shot adaptation, and WeldVGG slightly surpasses MobileNetV3 in the 10-shot setting (0.235 vs. 0.232 overall), suggesting that its attribution patterns adapt at least as effectively to limited supervision.

Across both models, ND-class scores remain close to zero. As in the RIAWELC experiments, this behavior is consistent with the expectation that defect-free images should not trigger concentrated attention. At the same time, the small positive or negative fluctuations highlight the sensitivity of ROADCombined to noise when saliency maps contain little or no structure.

Taken together, these cross-dataset results suggest that while MobileNetV3 tends to achieve higher ROAD scores in defect classes, WeldVGG provides more stable and calibrated explanations when limited adaptation data are available. More importantly, the inconsistent trends across zero-shot and few-shot conditions reinforce the limitations of ROADCombined as a standalone measure of interpretability: higher values do not necessarily correspond to more faithful or trustworthy explanations, particularly when evaluating transferability across domains.

4.11. Ablation Studies

To further examine the contribution of key architectural and training choices, we conducted a set of ablation experiments on the RIAWELC dataset. For consistency with the main evaluation protocol, results are reported on Fold 4, which was identified as the best-performing fold for WeldVGG, as shown in Table 7. Each ablation modifies a single component of the baseline configuration (four convolutional blocks, two fully connected layers of 512 and 256 units, dropout, RGB input at 224 × 224 with normalization), while all other settings are kept constant. The baseline corresponds to the original WeldVGG architecture described in Section 3.4.

The evaluated variants were as follows:

Shallow-CNN, reducing the depth to three convolutional blocks.

No-Dropout, removing dropout layers in the classifier.

Narrow-FC, decreasing the dense layers to 256 and 128 units.

Low-res 192, reducing the input size from 224 × 224 to 192 × 192.

Grayscale-224, switching from RGB triplication to single-channel input.

No-Norm, omitting input normalization.

The results shown in Table 16 highlight that both model depth and dropout regularization have a measurable impact. Reducing the network to three convolutional blocks or removing dropout led to small but consistent decreases in macro-F1. In contrast, narrowing the fully connected layers or lowering the input resolution had a negligible effect, indicating redundancy in dense-layer capacity and robustness to modest input scaling. Switching to grayscale yielded results identical to the baseline, which is expected given that RIAWELC images are inherently grayscale and the RGB triplication in the baseline adds no new information. Finally, removing normalization also produced virtually no change, suggesting that the dataset's pixel distribution is already well-conditioned. Overall, these findings confirm the importance of WeldVGG's depth and regularization, while demonstrating resilience to input modality and resolution variations.
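The Grayscale-224 result can be checked directly. When the three input channels are identical copies of one grayscale image, a first-layer convolution over the RGB input equals a single-channel convolution whose kernel is the sum of the three channel kernels. The network can therefore represent the same function with either input. The NumPy sketch below assumes identical channels (the same normalization applied to each channel) and uses a naive valid-padding cross-correlation; `conv2d_valid` is a hypothetical helper, not part of WeldVGG.

```python
import numpy as np

def conv2d_valid(x, w):
    """Naive multi-channel 2-D cross-correlation with 'valid' padding.
    x: (C, H, W) input, w: (C, kH, kW) kernel -> (H - kH + 1, W - kW + 1) output."""
    _, h, wd = x.shape
    _, kh, kw = w.shape
    out = np.zeros((h - kh + 1, wd - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[:, i:i + kh, j:j + kw] * w)
    return out

rng = np.random.default_rng(0)
gray = rng.random((1, 8, 8))                 # hypothetical single-channel radiograph patch
rgb = np.repeat(gray, 3, axis=0)             # "RGB triplication": three identical channels
w_rgb = rng.standard_normal((3, 3, 3))       # hypothetical first-layer kernel for RGB input
w_gray = w_rgb.sum(axis=0, keepdims=True)    # equivalent single-channel kernel

print(np.allclose(conv2d_valid(rgb, w_rgb), conv2d_valid(gray, w_gray)))  # True
```

If per-channel normalization constants differ (e.g., ImageNet-style means and standard deviations), each channel becomes an affine function of the same grayscale image. The equivalence then still holds, with rescaled kernel weights and an adjusted bias.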