Multi-Agent Deep Reinforcement Learning for Joint Task Offloading and Resource Allocation in IIoT with Dynamic Priorities

Abstract

Highlights

- Developed a cloud–edge–end collaborative framework that jointly optimizes task offloading and resource allocation for IIoT systems with dynamic task priorities.

- Designed a priority-gated attention-enhanced MAPPO algorithm to capture priority-related features and improve decision accuracy under fluctuating workloads.

- Improves system adaptability and efficiency in IIoT environments with fluctuating workloads and heterogeneous QoS demands.

- Enables robust, low-latency, and energy-efficient scheduling for cloud–edge–end collaborative systems.

1. Introduction

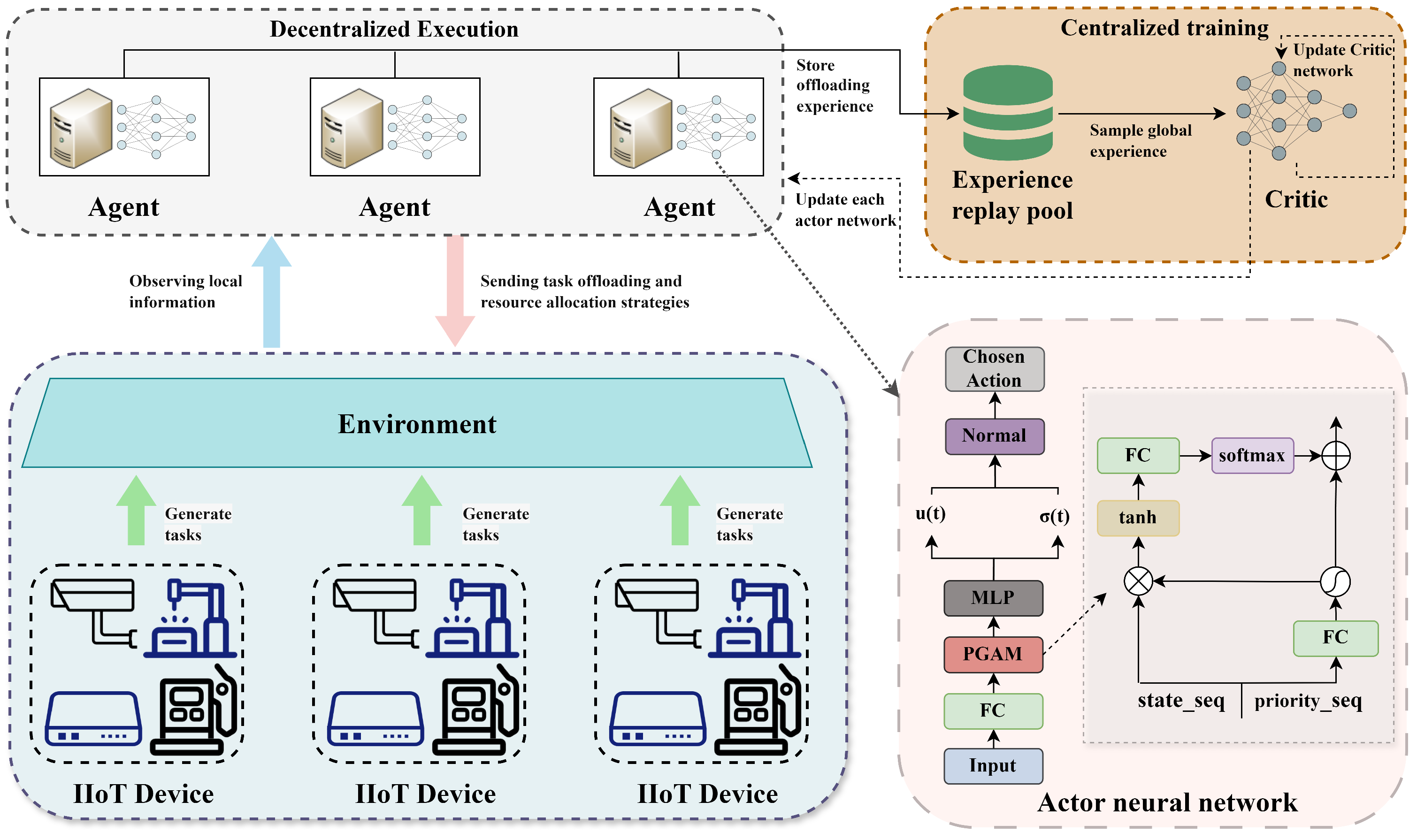

- We construct a cloud–edge–end collaborative framework for joint task offloading and resource allocation (TO-RA) and design a dynamic multi-level feedback queue task-scheduling model. Within this framework, the optimization objective of collaborative TO-RA is to minimize overall system latency and energy cost. The problem is formulated as a Mixed-Integer Nonlinear Programming (MINLP) problem. To solve it, we propose the DPTORA algorithm, which provides efficient solutions for dynamically prioritized industrial tasks.

- In the first stage, we formulate the task offloading subproblem as a Partially Observable Markov Decision Process (POMDP) and solve it with a MAPPO algorithm enhanced with a Priority-Gated Attention Mechanism (PGAM). With PGAM incorporated into the policy network, agents adaptively focus on task priority features, which improves the sensitivity of decision-making and the accuracy of resource allocation under dynamic priority conditions.

- In the second stage, we formulate the resource allocation (RA) subproblem for each edge server as a constrained, weighted single-objective convex optimization problem. Applying the Karush–Kuhn–Tucker (KKT) conditions, we analyze the duality of the objective function. By constructing a Lagrangian function, we decouple the transmission and computation resource constraints and derive closed-form, globally optimal allocation strategies for both bandwidth and computational resources.

- We conduct extensive simulation experiments to validate the convergence and effectiveness of the proposed DPTORA algorithm. The results show that DPTORA outperforms baseline methods and other mainstream MADRL methods in terms of task response time, system energy consumption, and task completion rate.

2. Related Works

2.1. Traditional TO-RA Approaches

2.2. Machine Learning-Based TO-RA Approaches

2.3. Multi-Agent Deep Reinforcement Learning Approaches

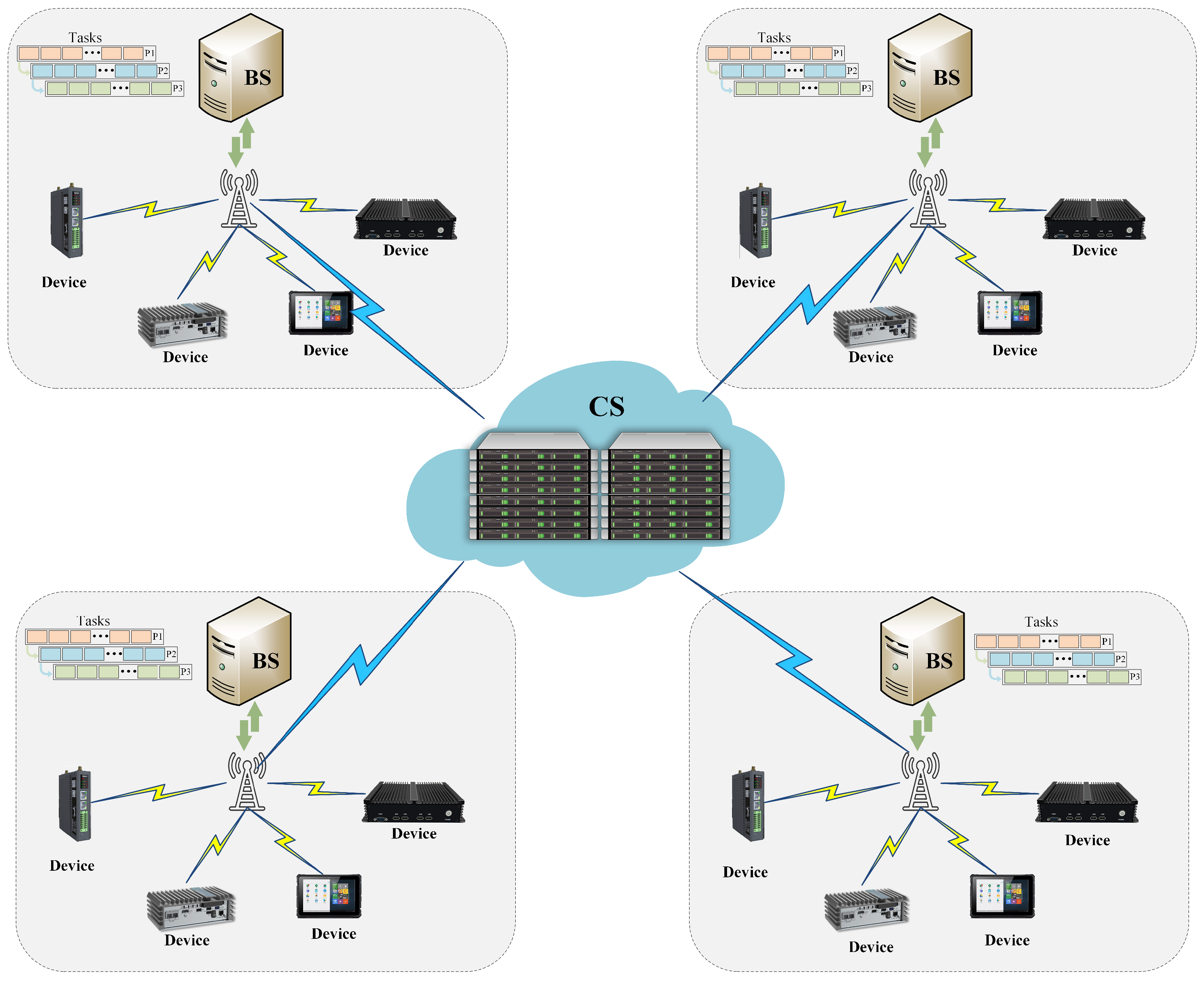

3. System Model

3.1. Network Model

3.2. Task Model

3.3. Communication Model

3.4. Computational Model

3.5. Dynamic Priority Queue Model

3.5.1. Initial Priority Assignment

3.5.2. Dynamic Priority Adjustment

3.5.3. Multi-Level Priority Feedback Queue Scheduling

| Algorithm 1 Multi-level priority feedback queue scheduling |
|---|
| 1: Input: Task set |
| 2: Output: Task queue allocation (high-priority and low-priority queues) at each ES |
| 3: while system is running do |
| 4: for each task in the task set do |
| 5: Compute initial priority |
| 6: Compute waiting time factor |
| 7: Compute load suppression factor |
| 8: Compute urgency factor |
| 9: Calculate dynamic priority |
| 10: end for |
| 11: Calculate dynamic priority threshold using Equation (17) |
| 12: for each task in the task set do |
| 13: if the task's dynamic priority meets the threshold then |
| 14: Assign the task to the high-priority queue |
| 15: else |
| 16: Assign the task to the low-priority queue |
| 17: end if |
| 18: end for |
| 19: Execute tasks in the high-priority queue using offloading and resource allocation policies |
| 20: Offload tasks in the low-priority queue to the CS |
| 21: end while |
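
One scheduling round of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. The `Task` fields, the product used to combine the four factors, the mean-based threshold standing in for Equation (17), and the `>=` comparison are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Task:
    name: str
    initial_priority: float   # static priority assigned on arrival
    waiting_factor: float     # grows as the task waits in the queue
    load_factor: float        # load suppression factor of the edge server
    urgency_factor: float     # grows as the deadline approaches


def dynamic_priority(task: Task) -> float:
    # ASSUMPTION: a plain product of the four factors; the paper's
    # combination rule is not reproduced here.
    return (task.initial_priority * task.waiting_factor
            * task.load_factor * task.urgency_factor)


def split_queues(tasks):
    """Return (high_queue, low_queue) for one scheduling round."""
    if not tasks:
        return [], []
    scored = [(dynamic_priority(t), t) for t in tasks]
    # ASSUMPTION: the threshold is the mean priority, a stand-in for Equation (17).
    threshold = mean(score for score, _ in scored)
    high = [t for score, t in scored if score >= threshold]
    low = [t for score, t in scored if score < threshold]
    # Serve the highest-priority tasks first inside the high-priority queue.
    high.sort(key=dynamic_priority, reverse=True)
    return high, low


tasks = [
    Task("alarm", 3.0, 1.2, 1.0, 2.0),
    Task("telemetry", 1.0, 1.0, 1.0, 1.0),
    Task("vision", 2.0, 1.5, 1.0, 1.0),
]
high, low = split_queues(tasks)
print([t.name for t in high], [t.name for t in low])
```

In this toy round only the `alarm` task clears the threshold, so it stays at the edge server while the other two would be sent to the CS.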

3.6. Problem Formulation

4. Methods

4.1. Stage I: Task Offloading Based on Improved MAPPO

- State: The global state describes the overall status of the IIoT system at time t, covering the computing resources, communication resources, and task queue states of all ESs.

- Observation: The observation space characterizes all the jointly observable information. At the beginning of each time slot t, agent i obtains only its local observation and cannot directly access the full global state. The local observation of agent i at time t has three components: the observed task information (task size, required CPU cycles, and deadline); the current task queue length and task priority features; and the edge server’s available power, computing resources, load level, and connection status with IIoT devices and the cloud.

- Action: The joint action space collects the actions of all agents. Based on its local observation and offloading policy, each agent selects the action that maximizes its expected reward. The action of agent i at time t determines the proportions of the task processed locally and offloaded to the cloud, respectively.

- Reward: The reward is the feedback the environment returns for the agents’ actions. The reward function is designed to minimize total task delay and energy consumption while encouraging the completion of high-priority tasks. It includes a term that evaluates whether a task is completed within its tolerable delay threshold; if a task misses the deadline, a penalty proportional to its dynamic priority is imposed.
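
The reward description above can be made concrete with a small Python sketch. It only illustrates the stated structure (a weighted delay and energy cost, plus a deadline term whose penalty scales with the dynamic priority). The function name, the weights `w_delay` and `w_energy`, the `bonus`, and the `penalty_scale` are hypothetical values chosen for the example, not the paper's settings.

```python
def step_reward(delay, energy, deadline, priority,
                w_delay=0.5, w_energy=0.5, bonus=1.0, penalty_scale=1.0):
    """Reward for one task: negative weighted cost plus a deadline term.

    ASSUMPTION: all weights, the bonus, and the penalty scale are
    illustrative; the paper's reward equation is not reproduced here.
    """
    cost = w_delay * delay + w_energy * energy
    if delay <= deadline:
        deadline_term = bonus                        # finished in time
    else:
        deadline_term = -penalty_scale * priority    # penalty grows with priority
    return -cost + deadline_term


on_time = step_reward(delay=1.0, energy=2.0, deadline=1.5, priority=4.0)
late = step_reward(delay=2.0, energy=2.0, deadline=1.5, priority=4.0)
print(on_time, late)
```

With this shape, missing the deadline of a high-priority task costs more than missing that of a low-priority one, which is the behavior the reward is meant to encourage.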

Improved MAPPO Algorithm

| Algorithm 2 Improved MAPPO for task offloading |
|---|
| 1: Input: Edge computing environment parameters, agent set, local observations, policy network parameters, centralized critic network parameters, experience replay buffer, learning rates |
| 2: Output: Task offloading policy |
| 3: Initialize policy networks for each agent |
| 4: Initialize global critic network |
| 5: Initialize experience buffer |
| 6: for episode = 1 to E do |
| 7: Initialize global state |
| 8: for t = 1 to T do |
| 9: for each agent do |
| 10: Observe local state |
| 11: Calculate context vector via PGAM |
| 12: Sample action |
| 13: Execute the action, receive the reward, and observe the next local observation |
| 14: Compute global state |
| 15: Store the transition in the buffer |
| 16: end for |
| 17: for mini-batch k = 1 to K do |
| 18: Sample mini-batch from the buffer |
| 19: Compute value |
| 20: Compute target |
| 21: Compute advantage |
| 22: Update critic |
| 23: Update actor |
| 24: end for |
| 25: end for |
| 26: end for |
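
Two steps of Algorithm 2 lend themselves to a short sketch: the PGAM context vector (step 11) and the actor update (step 23). The Python below is an illustration under stated assumptions. The gate form (a sigmoid of the task priority applied to softmax attention weights, then renormalized) is a guess at how a priority gate could work, not the paper's PGAM equations. The clipped surrogate is the standard PPO objective, with `eps=0.2` as a commonly used default.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def priority_gated_context(query, task_features, priorities):
    """Attention over queued tasks, with weights rescaled by a priority gate.

    ASSUMPTION: gate = sigmoid(priority), applied after the softmax and then
    renormalized. This is a hypothetical form of PGAM for illustration.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, feat)) / math.sqrt(d)
              for feat in task_features]
    attn = softmax(scores)
    gates = [1.0 / (1.0 + math.exp(-p)) for p in priorities]
    gated = [a * g for a, g in zip(attn, gates)]
    norm = sum(gated)
    weights = [g / norm for g in gated]
    context = [sum(w * feat[j] for w, feat in zip(weights, task_features))
               for j in range(d)]
    return weights, context


def ppo_clip_loss(ratios, advantages, eps=0.2):
    """Standard PPO clipped surrogate, returned as a loss to minimize."""
    terms = []
    for r, adv in zip(ratios, advantages):
        clipped = min(max(r, 1.0 - eps), 1.0 + eps)
        terms.append(min(r * adv, clipped * adv))
    return -sum(terms) / len(terms)


weights, context = priority_gated_context(
    query=[1.0, 0.0],
    task_features=[[1.0, 0.0], [1.0, 0.0]],   # identical features
    priorities=[3.0, -3.0],                   # only the priorities differ
)
print(weights, context)
print(ppo_clip_loss(ratios=[1.5, 0.5], advantages=[1.0, -1.0]))
```

Because the two tasks have identical features, plain attention would weight them equally; the gate is what shifts the weight toward the higher-priority task.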

4.2. Stage II: Resource Allocation Phase

| Algorithm 3 Resource allocation algorithm for edge server |
|---|
| 1: Input: Edge server set M; task proportion coefficients; task demand; computation demand; bandwidth; CPU capacity; convergence threshold |
| 2: Output: Optimal bandwidth and CPU allocation |
| 3: for each edge server m in M do |
| 4: Initialize Lagrange multipliers with arbitrary positive values |
| 5: repeat |
| 6: Update Lagrange multipliers |
| 7: Check convergence conditions |
| 8: if converged then |
| 9: break |
| 10: else |
| 11: Carry the updated multipliers into the next iteration |
| 12: end if |
| 13: until convergence |
| 14: Compute optimal allocation |
| 15: end for |
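
To show how KKT conditions lead to a closed-form allocation, the sketch below solves a simplified stand-in problem: minimize the sum of a_i / x_i subject to the shares x_i summing to at most a fixed capacity. Here a_i plays the role of a weighted demand (for example weight times required CPU cycles) and x_i the CPU share of task i. This objective is an assumption for illustration; the paper's exact objective and constraints are not reproduced. Setting the derivative of the Lagrangian to zero gives x_i = sqrt(a_i / lam), and the tight capacity constraint fixes the multiplier lam, so no iteration is needed in this simplified case.

```python
import math


def kkt_allocation(total, weighted_loads):
    """Minimize sum(a_i / x_i) subject to sum(x_i) <= total, x_i > 0.

    Stationarity of the Lagrangian gives x_i = sqrt(a_i / lam); the capacity
    constraint is tight at the optimum, which determines lam.
    """
    roots = [math.sqrt(a) for a in weighted_loads]
    lam = (sum(roots) / total) ** 2           # optimal Lagrange multiplier
    alloc = [r / math.sqrt(lam) for r in roots]
    return alloc, lam


def total_cost(weighted_loads, alloc):
    return sum(a / x for a, x in zip(weighted_loads, alloc))


# Hypothetical edge server: 60 Gcycles/s shared by three tasks whose
# weighted demands are 1, 4 and 9 (illustrative numbers).
loads = [1.0, 4.0, 9.0]
alloc, lam = kkt_allocation(60.0, loads)
print(alloc, lam)
print(total_cost(loads, alloc), total_cost(loads, [20.0, 20.0, 20.0]))
```

The square-root rule gives heavier tasks a larger share, but less than proportionally, and it yields a lower total cost than an equal split of the same capacity. A bandwidth allocation with the same cost structure would follow the same pattern.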

4.3. Computational Complexity Analysis of DPTORA

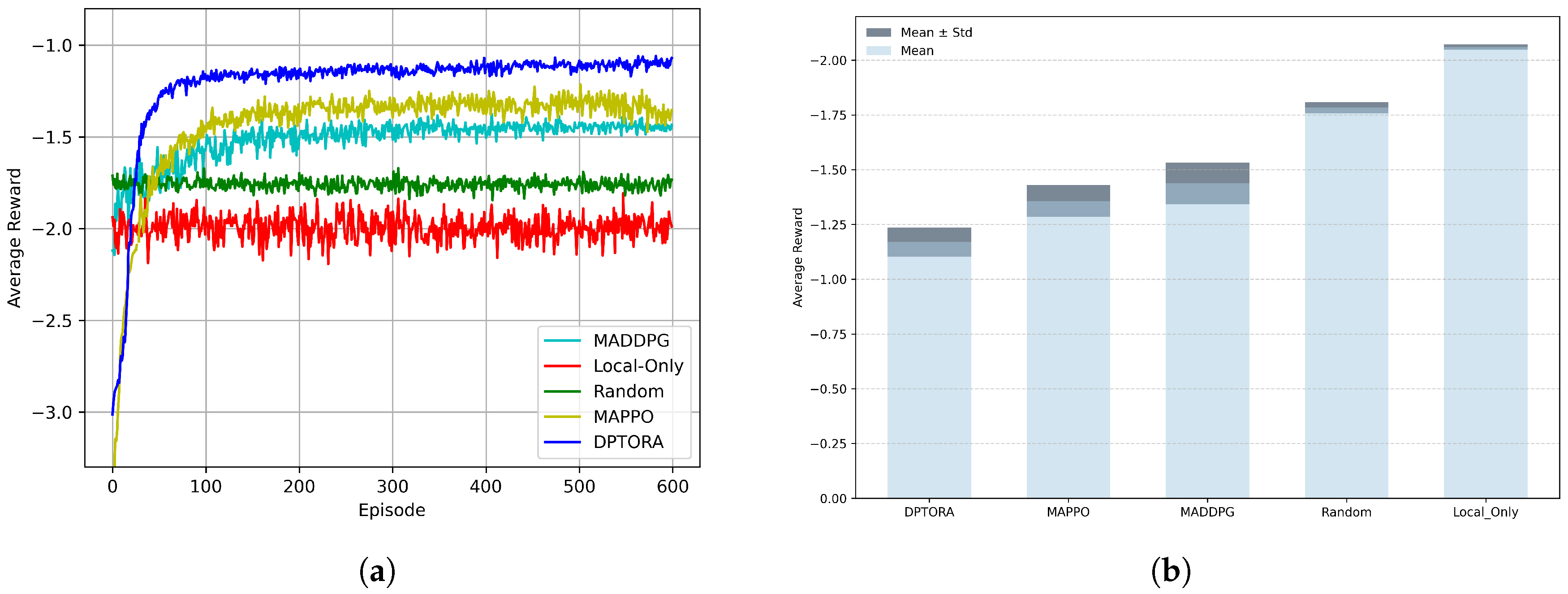

5. Results

- Local-only scheduling baseline algorithm: This fully decentralized baseline executes all computation tasks locally on each IIoT device without any offloading. It serves as a lower bound to reflect performance in the absence of collaborative scheduling.

- Random scheduling baseline algorithm [48]: This baseline randomly assigns each generated task to the local device, an edge server, or the cloud. As a non-intelligent comparator, it highlights the advantages of our method in terms of rational scheduling and performance optimization.

- MADDPG algorithm [30,49]: MADDPG is tailored for cooperative multi-agent tasks and is suitable for environments with coupled agent interactions. In task-offloading settings, MADDPG can learn coordinated policies across heterogeneous devices and resource constraints, thereby improving overall system performance; it thus serves to verify DPTORA’s advantages under complex cooperative scheduling.

- MAPPO algorithm [22,44,50]: MAPPO is an on-policy deep reinforcement learning algorithm for multi-agent scenarios, following a centralized-training–decentralized-execution actor–critic paradigm. It is known for stable learning and convergence in complex environments and can optimize offloading policies under dynamic network conditions and concurrent workloads, making it a strong DRL baseline for task scheduling and resource allocation.
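
The two non-learning baselines are simple enough to state as code. The sketch below is illustrative only: the target names and the uniform choice over the three targets are assumptions, since the text does not specify the sampling distribution.

```python
import random

TARGETS = ("local", "edge", "cloud")


def local_only_policy(task_ids):
    """Every task runs on the device that generated it."""
    return {tid: "local" for tid in task_ids}


def random_policy(task_ids, rng):
    """Each task is sent to a randomly chosen target.

    ASSUMPTION: the choice is uniform over the three targets.
    """
    return {tid: rng.choice(TARGETS) for tid in task_ids}


rng = random.Random(0)          # fixed seed so runs are repeatable
task_ids = ["t1", "t2", "t3", "t4"]
print(local_only_policy(task_ids))
print(random_policy(task_ids, rng))
```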

5.1. Experimental Setup

5.2. Convergence Performance Evaluation

5.2.1. Performance Comparison of Convergence

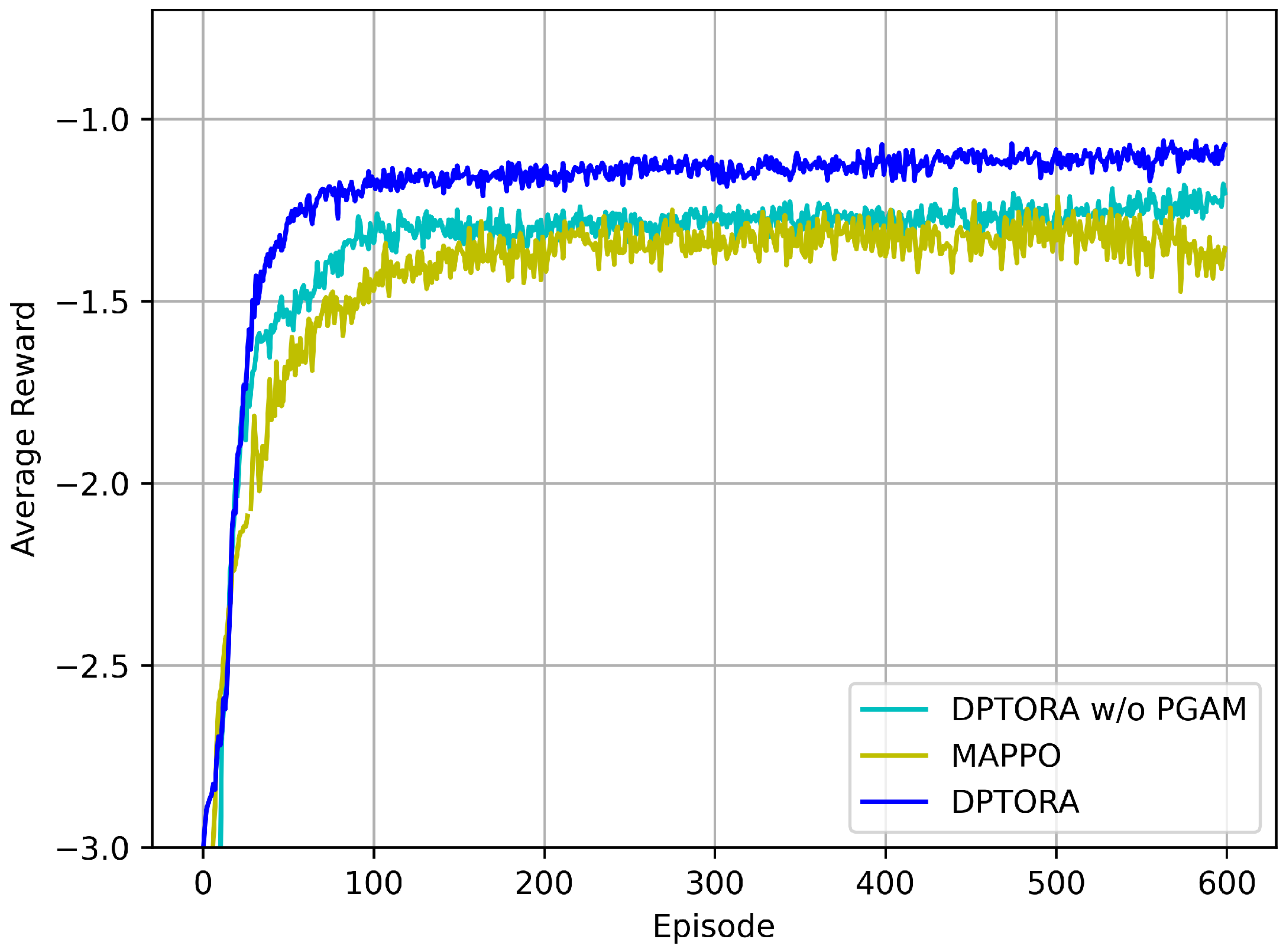

5.2.2. Ablation Experiment

5.3. Scalability and Load Adaptability Evaluation

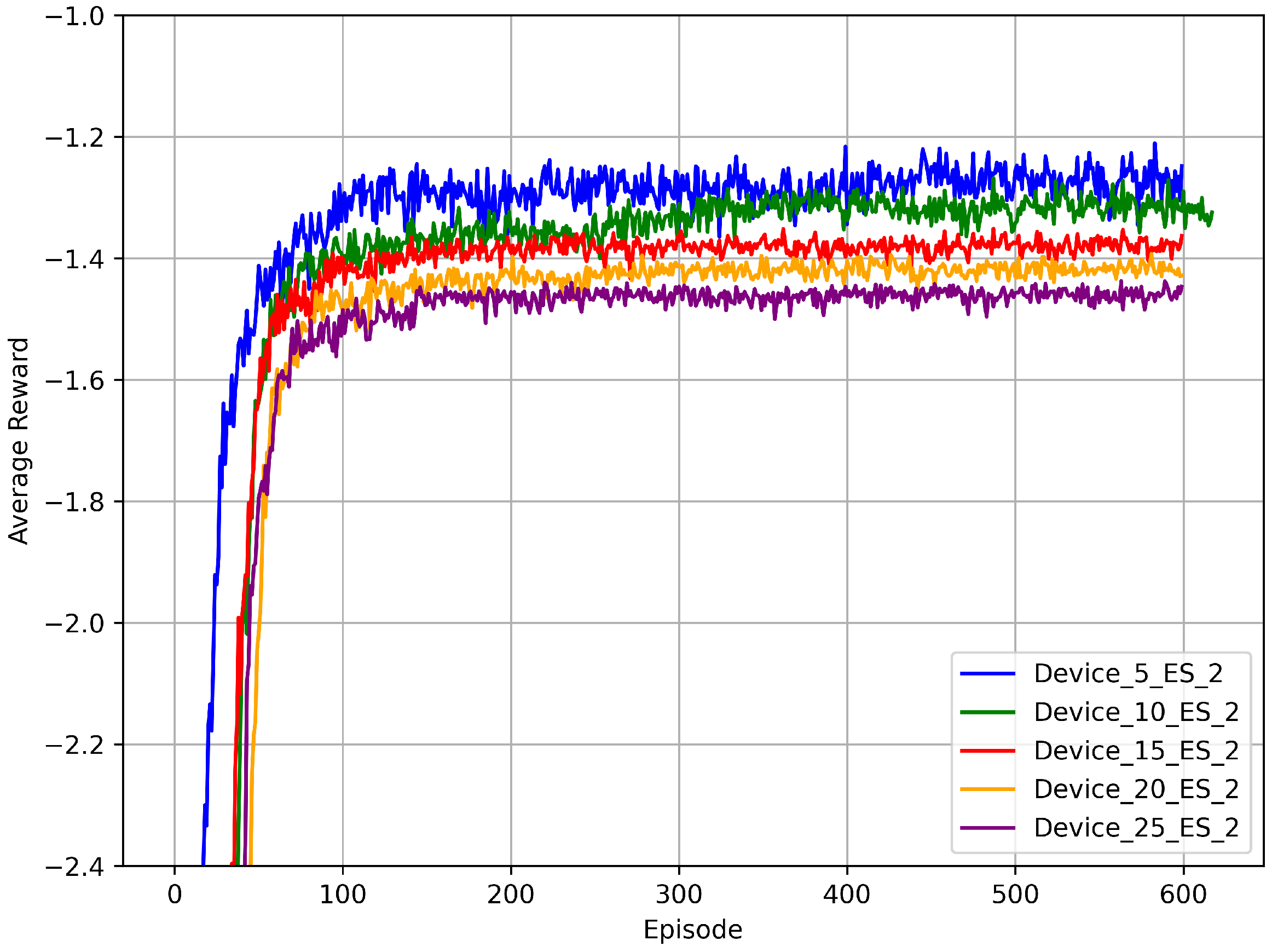

5.3.1. Convergence Analysis Under Different Numbers of Devices

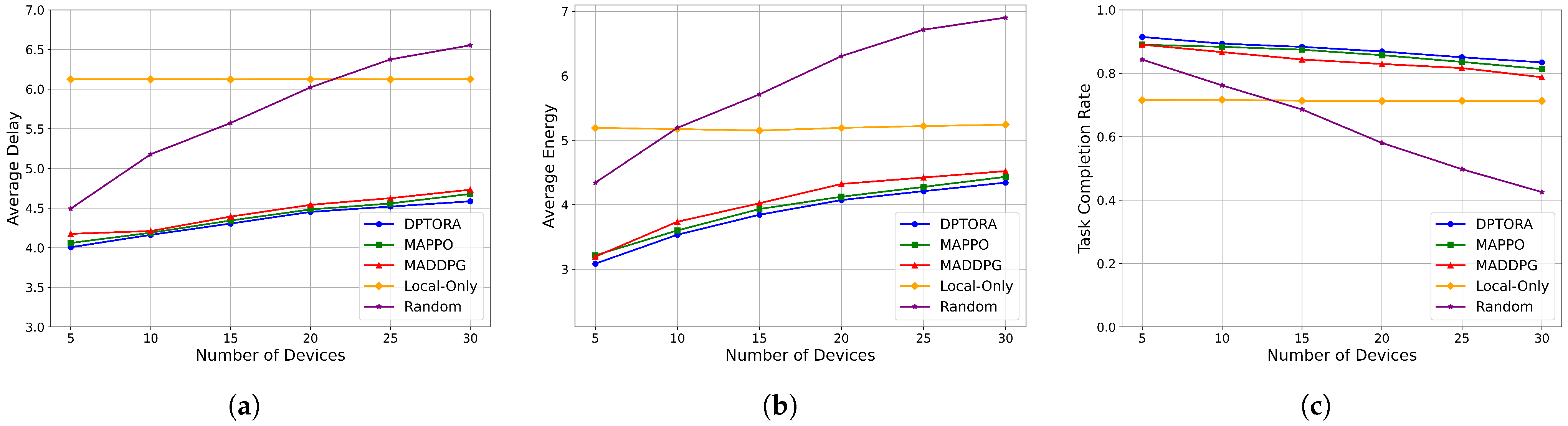

5.3.2. Impact of Device Scale on System Performance

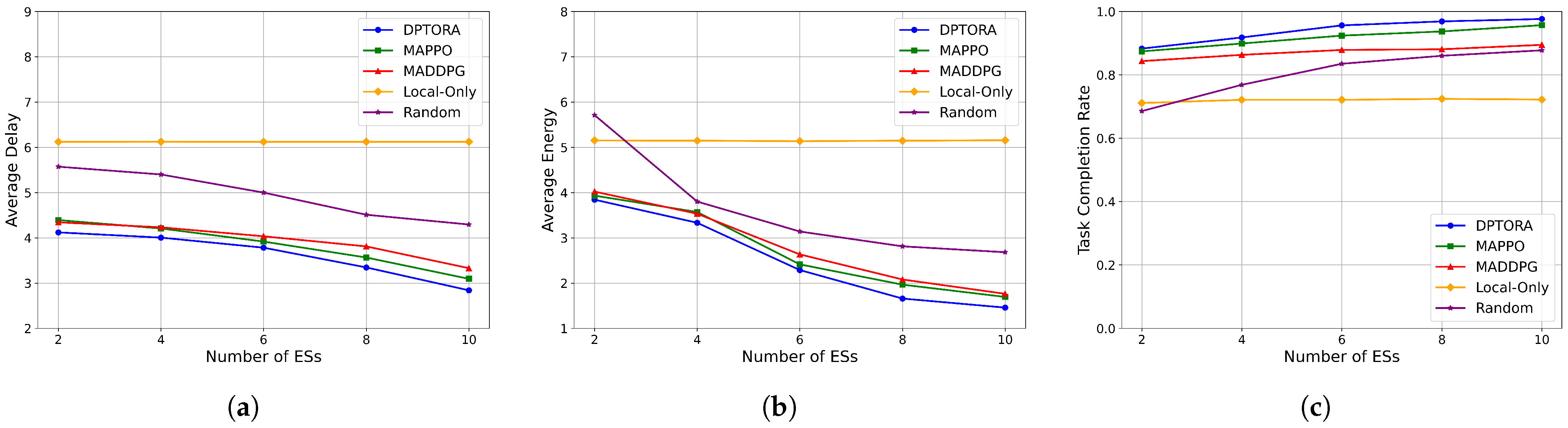

5.3.3. Impact of Edge Server Quantity on System Performance

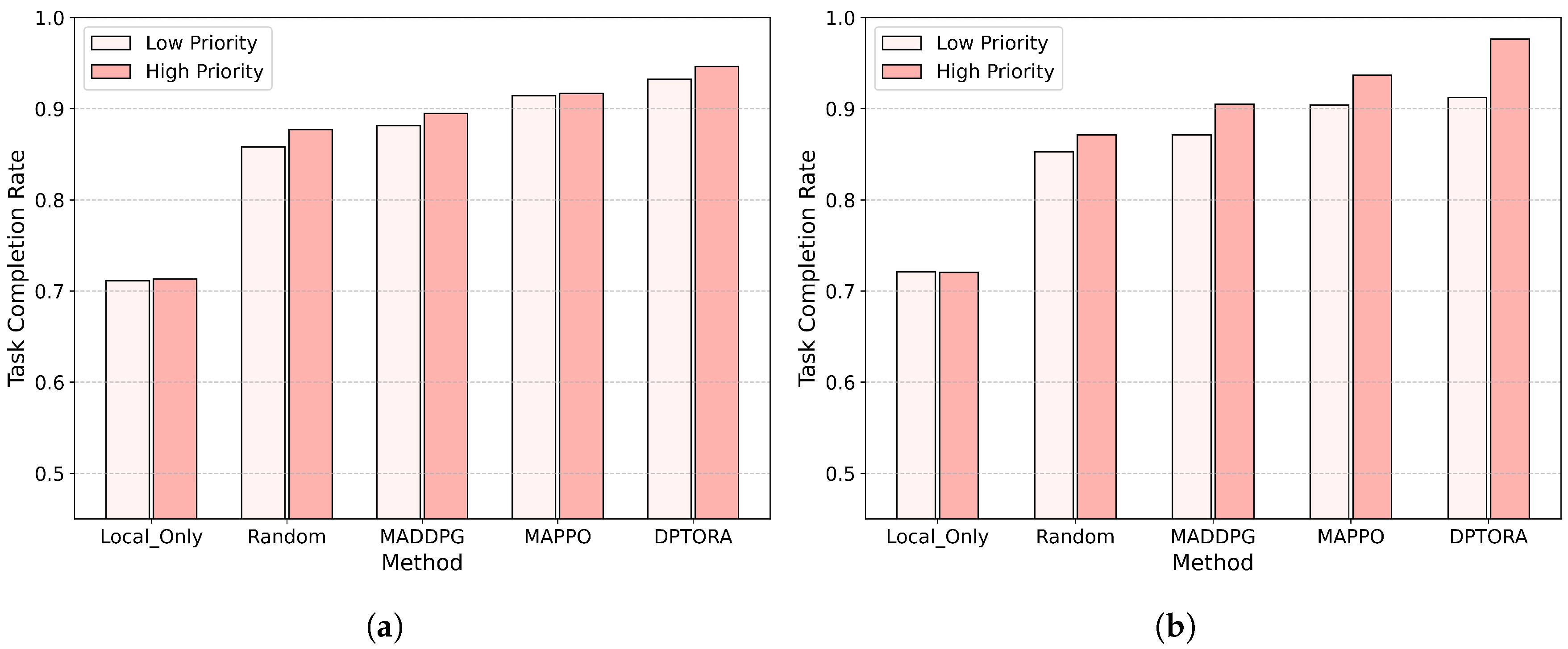

5.4. Performance Comparison in Dynamic Priority Task Scenarios

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Hu, Y.; Jia, Q.; Yao, Y.; Lee, Y.; Lee, M.; Wang, C.; Zhou, X.; Xie, R.; Yu, F.R. Industrial Internet of Things Intelligence Empowering Smart Manufacturing: A Literature Review. IEEE Internet Things J. 2024, 11, 19143–19167.

- [2] Farooq, M.S.; Abdullah, M.; Riaz, S.; Alvi, A.; Rustam, F.; Flores, M.A.L.; Galán, J.C.; Samad, M.A.; Ashraf, I. A Survey on the Role of Industrial IoT in Manufacturing for Implementation of Smart Industry. Sensors 2023, 23, 8958.

- [3] Patsias, V.; Amanatidis, P.; Karampatzakis, D.; Lagkas, T.; Michalakopoulou, K.; Nikitas, A. Task allocation methods and optimization techniques in edge computing: A systematic review of the literature. Future Internet 2023, 15, 254.

- [4] Saini, H.; Singh, G.; Dalal, S.; Moorthi, I.; Aldossary, S.M.; Nuristani, N.; Hashmi, A. A Hybrid Machine Learning Model with Self-Improved Optimization Algorithm for Trust and Privacy Preservation in Cloud Environment. J. Cloud Comput. 2024, 13, 157.

- [5] Qin, W.; Chen, H.; Wang, L.; Xia, Y.; Nascita, A.; Pescapè, A. MCOTM: Mobility-aware computation offloading and task migration for edge computing in industrial IoT. Future Gener. Comput. Syst. 2024, 151, 232–241.

- [6] Chakraborty, C.; Mishra, K.; Majhi, S.K.; Bhuyan, H.K. Intelligent Latency-Aware Tasks Prioritization and Offloading Strategy in Distributed Fog-Cloud of Things. IEEE Trans. Ind. Inform. 2023, 19, 2099–2106.

- [7] Fan, W.; Gao, L.; Su, Y.; Wu, F.; Liu, Y. Joint DNN Partition and Resource Allocation for Task Offloading in Edge–Cloud-Assisted IoT Environments. IEEE Internet Things J. 2023, 10, 10146–10159.

- [8] Liu, F.; Huang, J.; Wang, X. Joint Task Offloading and Resource Allocation for Device-Edge-Cloud Collaboration with Subtask Dependencies. IEEE Trans. Cloud Comput. 2023, 11, 3027–3039.

- [9] Kar, B.; Yahya, W.; Lin, Y.D.; Ali, A. Offloading Using Traditional Optimization and Machine Learning in Federated Cloud–Edge–Fog Systems: A Survey. IEEE Commun. Surv. Tutor. 2023, 25, 1199–1226.

- [10] Yuan, X.; Wang, Y.; Wang, K.; Ye, L.; Shen, F.; Wang, Y.; Yang, C.; Gui, W. A Cloud-Edge Collaborative Framework for Adaptive Quality Prediction Modeling in IIoT. IEEE Sens. J. 2024, 24, 33656–33668.

- [11] Yin, Z.; Xu, F.; Li, Y.; Fan, C.; Zhang, F.; Han, G.; Bi, Y. A Multi-Objective Task Scheduling Strategy for Intelligent Production Line Based on Cloud-Fog Computing. Sensors 2022, 22, 1555.

- [12] Xie, R.; Feng, L.; Tang, Q.; Zhu, H.; Huang, T.; Zhang, R.; Yu, F.R.; Xiong, Z. Priority-Aware Task Scheduling in Computing Power Network-enabled Edge Computing Systems. IEEE Trans. Netw. Sci. Eng. 2025, 12, 3191–3205.

- [13] Murad, S.A.; Muzahid, A.J.M.; Azmi, Z.R.M.; Hoque, M.I.; Kowsher, M. A review on job scheduling technique in cloud computing and priority rule based intelligent framework. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 2309–2331.

- [14] Zhang, Z.; Zhang, F.; Xiong, Z.; Zhang, K.; Chen, D. LsiA3CS: Deep-Reinforcement-Learning-Based Cloud–Edge Collaborative Task Scheduling in Large-Scale IIoT. IEEE Internet Things J. 2024, 11, 23917–23930.

- [15] Xu, J.; Yang, B.; Liu, Y.; Chen, C.; Guan, X. Joint Task Offloading and Resource Allocation for Multihop Industrial Internet of Things. IEEE Internet Things J. 2022, 9, 22022–22033.

- [16] Tam, P.; Kim, S. Graph-Based Learning in Core and Edge Virtualized O-RAN for Handling Real-Time AI Workloads. IEEE Trans. Netw. Sci. Eng. 2025, 12, 302–318.

- [17] Sharif, Z.; Tang Jung, L.; Ayaz, M.; Yahya, M.; Pitafi, S. Priority-based task scheduling and resource allocation in edge computing for health monitoring system. J. King Saud Univ.-Comput. Inf. Sci. 2023, 35, 544–559.

- [18] Wu, G.; Chen, X.; Gao, Z.; Zhang, H.; Yu, S.; Shen, S. Privacy-preserving offloading scheme in multi-access mobile edge computing based on MADRL. J. Parallel Distrib. Comput. 2024, 183, 104775.

- [19] Nguyen, T.T.; Nguyen, N.D.; Nahavandi, S. Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications. IEEE Trans. Cybern. 2020, 50, 3826–3839.

- [20] Bui, K.A.; Yoo, M. Interruption-Aware Computation Offloading in the Industrial Internet of Things. Sensors 2025, 25, 2904.

- [21] Zhang, F.; Han, G.; Liu, L.; Zhang, Y.; Peng, Y.; Li, C. Cooperative Partial Task Offloading and Resource Allocation for IIoT Based on Decentralized Multiagent Deep Reinforcement Learning. IEEE Internet Things J. 2024, 11, 5526–5544.

- [22] Zhu, X.; Luo, Y.; Liu, A.; Bhuiyan, M.Z.A.; Zhang, S. Multiagent Deep Reinforcement Learning for Vehicular Computation Offloading in IoT. IEEE Internet Things J. 2021, 8, 9763–9773.

- [23] Tran, T.X.; Pompili, D. Joint Task Offloading and Resource Allocation for Multi-Server Mobile-Edge Computing Networks. IEEE Trans. Veh. Technol. 2019, 68, 856–868.

- [24] Hong, Z.; Chen, W.; Huang, H.; Guo, S.; Zheng, Z. Multi-Hop Cooperative Computation Offloading for Industrial IoT–Edge–Cloud Computing Environments. IEEE Trans. Parallel Distrib. Syst. 2019, 30, 2759–2774.

- [25] Liu, D.; Ren, F.; Yan, J.; Su, G.; Gu, W.; Kato, S. Scaling Up Multi-Agent Reinforcement Learning: An Extensive Survey on Scalability Issues. IEEE Access 2024, 12, 94610–94631.

- [26] Teng, H.; Li, Z.; Cao, K.; Long, S.; Guo, S.; Liu, A. Game Theoretical Task Offloading for Profit Maximization in Mobile Edge Computing. IEEE Trans. Mob. Comput. 2023, 22, 5313–5329.

- [27] Yang, Z.; Bi, S.; Zhang, Y.J.A. Dynamic Offloading and Trajectory Control for UAV-Enabled Mobile Edge Computing System with Energy Harvesting Devices. IEEE Trans. Wirel. Commun. 2022, 21, 10515–10528.

- [28] Deng, X.; Yin, J.; Guan, P.; Xiong, N.N.; Zhang, L.; Mumtaz, S. Intelligent Delay-Aware Partial Computing Task Offloading for Multiuser Industrial Internet of Things Through Edge Computing. IEEE Internet Things J. 2023, 10, 2954–2966.

- [29] Chai, F.; Zhang, Q.; Yao, H.; Xin, X.; Gao, R.; Guizani, M. Joint Multi-Task Offloading and Resource Allocation for Mobile Edge Computing Systems in Satellite IoT. IEEE Trans. Veh. Technol. 2023, 72, 7783–7795.

- [30] Du, J.; Kong, Z.; Sun, A.; Kang, J.; Niyato, D.; Chu, X.; Yu, F.R. MADDPG-Based Joint Service Placement and Task Offloading in MEC Empowered Air–Ground Integrated Networks. IEEE Internet Things J. 2024, 11, 10600–10615.

- [31] Suzuki, A.; Kobayashi, M.; Oki, E. Multi-Agent Deep Reinforcement Learning for Cooperative Computing Offloading and Route Optimization in Multi Cloud-Edge Networks. IEEE Trans. Netw. Serv. Manag. 2023, 20, 4416–4434.

- [32] Yao, Z.; Xia, S.; Li, Y.; Wu, G. Cooperative Task Offloading and Service Caching for Digital Twin Edge Networks: A Graph Attention Multi-Agent Reinforcement Learning Approach. IEEE J. Sel. Areas Commun. 2023, 41, 3401–3413.

- [33] Xu, C.; Zhang, P.; Yu, H.; Li, Y. D3QN-Based Multi-Priority Computation Offloading for Time-Sensitive and Interference-Limited Industrial Wireless Networks. IEEE Trans. Veh. Technol. 2024, 73, 13682–13693.

- [34] Dai, X.; Chen, X.; Jiao, L.; Wang, Y.; Du, S.; Min, G. Priority-Aware Task Offloading and Resource Allocation in Satellite and HAP Assisted Edge-Cloud Collaborative Networks. In Proceedings of the 2023 15th International Conference on Communication Software and Networks (ICCSN), Shenyang, China, 21–23 July 2023; pp. 166–171.

- [35] Uddin, A.; Sakr, A.H.; Zhang, N. Adaptive Prioritization and Task Offloading in Vehicular Edge Computing Through Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2025, 74, 5038–5052.

- [36] Alshammari, H.H. The internet of things healthcare monitoring system based on MQTT protocol. Alex. Eng. J. 2023, 69, 275–287.

- [37] Yang, R.; He, H.; Xu, Y.; Xin, B.; Wang, Y.; Qu, Y.; Zhang, W. Efficient intrusion detection toward IoT networks using cloud–edge collaboration. Comput. Netw. 2023, 228, 109724.

- [38] Xiong, J.; Guo, P.; Wang, Y.; Meng, X.; Zhang, J.; Qian, L.; Yu, Z. Multi-agent deep reinforcement learning for task offloading in group distributed manufacturing systems. Eng. Appl. Artif. Intell. 2023, 118, 105710.

- [39] Wang, L.; Wang, K.; Pan, C.; Xu, W.; Aslam, N.; Hanzo, L. Multi-Agent Deep Reinforcement Learning-Based Trajectory Planning for Multi-UAV Assisted Mobile Edge Computing. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 73–84.

- [40] Chi, J.; Zhou, X.; Xiao, F.; Lim, Y.; Qiu, T. Task Offloading via Prioritized Experience-Based Double Dueling DQN in Edge-Assisted IIoT. IEEE Trans. Mob. Comput. 2024, 23, 14575–14591.

- [41] Bali, M.S.; Gupta, K.; Gupta, D.; Srivastava, G.; Juneja, S.; Nauman, A. An effective technique to schedule priority aware tasks to offload data on edge and cloud servers. Meas. Sens. 2023, 26, 100670.

- [42] Li, P.; Xiao, Z.; Wang, X.; Huang, K.; Huang, Y.; Gao, H. EPtask: Deep Reinforcement Learning Based Energy-Efficient and Priority-Aware Task Scheduling for Dynamic Vehicular Edge Computing. IEEE Trans. Intell. Veh. 2024, 9, 1830–1846.

- [43] Bhattacharya, S.; Kailas, S.; Badyal, S.; Gil, S.; Bertsekas, D. Multiagent Reinforcement Learning: Rollout and Policy Iteration for POMDP with Application to Multirobot Problems. IEEE Trans. Robot. 2024, 40, 2003–2023.

- [44] Kang, H.; Chang, X.; Mišić, J.; Mišić, V.B.; Fan, J.; Liu, Y. Cooperative UAV Resource Allocation and Task Offloading in Hierarchical Aerial Computing Systems: A MAPPO-Based Approach. IEEE Internet Things J. 2023, 10, 10497–10509.

- [45] Liu, W.; Li, B.; Xie, W.; Dai, Y.; Fei, Z. Energy Efficient Computation Offloading in Aerial Edge Networks with Multi-Agent Cooperation. IEEE Trans. Wirel. Commun. 2023, 22, 5725–5739.

- [46] Yu, C.; Velu, A.; Vinitsky, E.; Gao, J.; Wang, Y.; Bayen, A.; Wu, Y. The Surprising Effectiveness of PPO in Cooperative Multi-Agent Games. In Advances in Neural Information Processing Systems; ACM: New York, NY, USA, 2022; Volume 35.

- [47] Agarwal, D.; Singh, P.; El Sayed, M. The Karush–Kuhn–Tucker (KKT) optimality conditions for fuzzy-valued fractional optimization problems. Math. Comput. Simul. 2023, 205, 861–877.

- [48] Wu, G.; Xu, Z.; Zhang, H.; Shen, S.; Yu, S. Multi-agent DRL for joint completion delay and energy consumption with queuing theory in MEC-based IIoT. J. Parallel Distrib. Comput. 2023, 176, 80–94.

- [49] Rauch, R.; Becvar, Z.; Mach, P.; Gazda, J. Cooperative Multi-Agent Deep Reinforcement Learning for Dynamic Task Execution and Resource Allocation in Vehicular Edge Computing. IEEE Trans. Veh. Technol. 2025, 74, 5741–5756.

- [50] Ling, C.; Peng, K.; Wang, S.; Xu, X.; Leung, V.C.M. A Multi-Agent DRL-Based Computation Offloading and Resource Allocation Method with Attention Mechanism in MEC-Enabled IIoT. IEEE Trans. Serv. Comput. 2024, 17, 3037–3051.

| Work | Year | Target: Energy | Target: Delay | Target: Priority | Approach | Objective |
|---|---|---|---|---|---|---|
| [23] | 2019 | ✓ | ✓ | × | Heuristic Optimization | Minimize system energy and task execution latency |
| [24] | 2019 | ✓ | ✓ | × | Graph-Based Multi-Hop Offloading Algorithm | Minimize overall task latency and edge energy consumption across IoT |
| [25] | 2024 | ✓ | × | × | Game-theoretic model | Optimize task allocation and resource utilization to minimize energy |
| [26] | 2023 | ✓ | × | × | Non-cooperative Game Theory | Maximize profit defined by minimizing energy and transmission-related costs |
| [27] | 2022 | ✓ | ✓ | × | Lyapunov Optimization | Minimize energy and task delay via online UAV trajectory and offloading control |
| [28] | 2023 | ✓ | ✓ | × | Online Reinforcement Learning | Minimize energy and task delay via online UAV trajectory and offloading control |
| [29] | 2023 | ✓ | ✓ | × | Proximal Policy Optimization | Minimize overall cost of multi-task offloading |
| [30] | 2024 | ✓ | ✓ | × | Multi-Agent Deep Deterministic Policy Gradient | Minimize long-term average delay and economic cost under QoS constraints |
| [31] | 2023 | ✓ | ✓ | × | Cooperative Multi-Agent Deep Reinforcement Learning | Optimize offloading efficiency under topology and resource constraints |
| [35] | 2025 | ✓ | × | ✓ | Deep Reinforcement Learning | Improve completion rate for high-priority tasks |
| [32] | 2023 | ✓ | ✓ | × | Graph Attention Multi-Agent Reinforcement Learning | Maximize QoE-based system utility under storage and radio resource constraints |
| [33] | 2024 | × | ✓ | ✓ | Double Dueling Deep Q-Network | Minimize overall task latency |
| [34] | 2023 | × | ✓ | ✓ | Priority-Aware Deep Deterministic Policy Gradient | Maximize average system utility under dynamic QoS-aware task offloading |
| Our work | 2025 | ✓ | ✓ | ✓ | Improved Multi-Agent Proximal Policy Optimization | Jointly optimize task offloading, resource allocation, and priority adaptation to enhance system efficiency |

| Notation | Description |
|---|---|
| N | Number of IIoT devices |
| M | Number of ES |
| | Data size of task |
| | CPU cycles for computing task |
| | Maximum latency of task |
| | Proportion of task computed locally |
| | Proportion of task offloaded to ES |
| | Channel gain between IIoT n and ES m |
| | Transmission power at time t |
| | Bandwidth between device n and ES m |
| | SNR between device n and ES m |
| | Total bandwidth resources of ES m |
| | Total bandwidth resources of CS |
| | CPU frequency of device n |
| | CPU frequency of ES m |
| | CPU frequency of CS |
| | Total energy consumption of device n |
| | Task latency on the cloud |
| | Total task completion delay |

| Notation | Description | Value |
|---|---|---|
| | Data size of task | 150–300 MB |
| | CPU cycles required by task | 20–50 Gcycles |
| | WiFi bandwidth | 30–50 MHz |
| | Fiber optic bandwidth | 200–300 MHz |
| | Transmission power of IIoT device n | 0.5 W |
| | Hardware-related constant for IIoT device n | |
| | CPU frequency of IIoT device n | 10–30 Gcycles/s |
| | CPU frequency of edge server m | 60–80 Gcycles/s |
| | CPU frequency of cloud server | 200 Gcycles/s |
| | Channel gain between device n and server m | – |
| | Noise power | W |
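
As a rough sanity check on the ranges in this table, the pure computation time of a task can be estimated as required cycles divided by CPU frequency. The sketch below applies that ratio to the listed ranges, assuming the whole CPU serves a single task and ignoring transmission and queuing delay. The helper names are illustrative.

```python
def compute_delay(gcycles, gcycles_per_s):
    """Execution time in seconds, assuming the whole CPU serves one task."""
    return gcycles / gcycles_per_s


TASK_GCYCLES = (20.0, 50.0)                 # required CPU cycles per task
CPU_GCYCLES_PER_S = {
    "device": (10.0, 30.0),
    "edge": (60.0, 80.0),
    "cloud": (200.0, 200.0),
}


def delay_range(tier):
    f_min, f_max = CPU_GCYCLES_PER_S[tier]
    best = compute_delay(TASK_GCYCLES[0], f_max)    # lightest task, fastest CPU
    worst = compute_delay(TASK_GCYCLES[1], f_min)   # heaviest task, slowest CPU
    return best, worst


for tier in CPU_GCYCLES_PER_S:
    best, worst = delay_range(tier)
    print(f"{tier}: {best:.2f} s to {worst:.2f} s")
```

Under these assumptions a device needs roughly 0.67 s to 5 s per task, an edge server 0.25 s to 0.83 s, and the cloud 0.1 s to 0.25 s, which is the gap in computing power that offloading trades against transmission cost.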

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, Y.; Zhao, Y.; Hu, Y.; He, X.; Feng, S. Multi-Agent Deep Reinforcement Learning for Joint Task Offloading and Resource Allocation in IIoT with Dynamic Priorities. Sensors 2025, 25, 6160. https://doi.org/10.3390/s25196160