2.1. Navigation-Based Image Segmentation

In image-based environment modeling approaches, cubic environment mapping is commonly used for overall environment visualization. For example, in the design of a reservoir virtual simulation system, Xue Yutong et al. used skybox technology to resample spherical projection panoramas onto the six faces of a cube, achieving visualization of the reservoir environment [9]. However, this method mainly suits the rendering of distant views. In such views, image segmentation imposes only weak constraints on spatial positioning, and segmentation deviations barely affect the overall presentation. In contrast, in near-view environments with complex structures such as coal mine roadways, each plane has a strict spatial correspondence in real space, so segmentation must align strictly with the actual structures. When cubic environment mapping is applied in this setting, the images mapped onto the six faces not only show noticeable parallax errors but may also erroneously split a single plane into multiple segments. Consequently, cubic environment mapping alone cannot meet the modeling demands of complex near-view environments, and more rational image segmentation and mapping methods are needed [10,11,12].

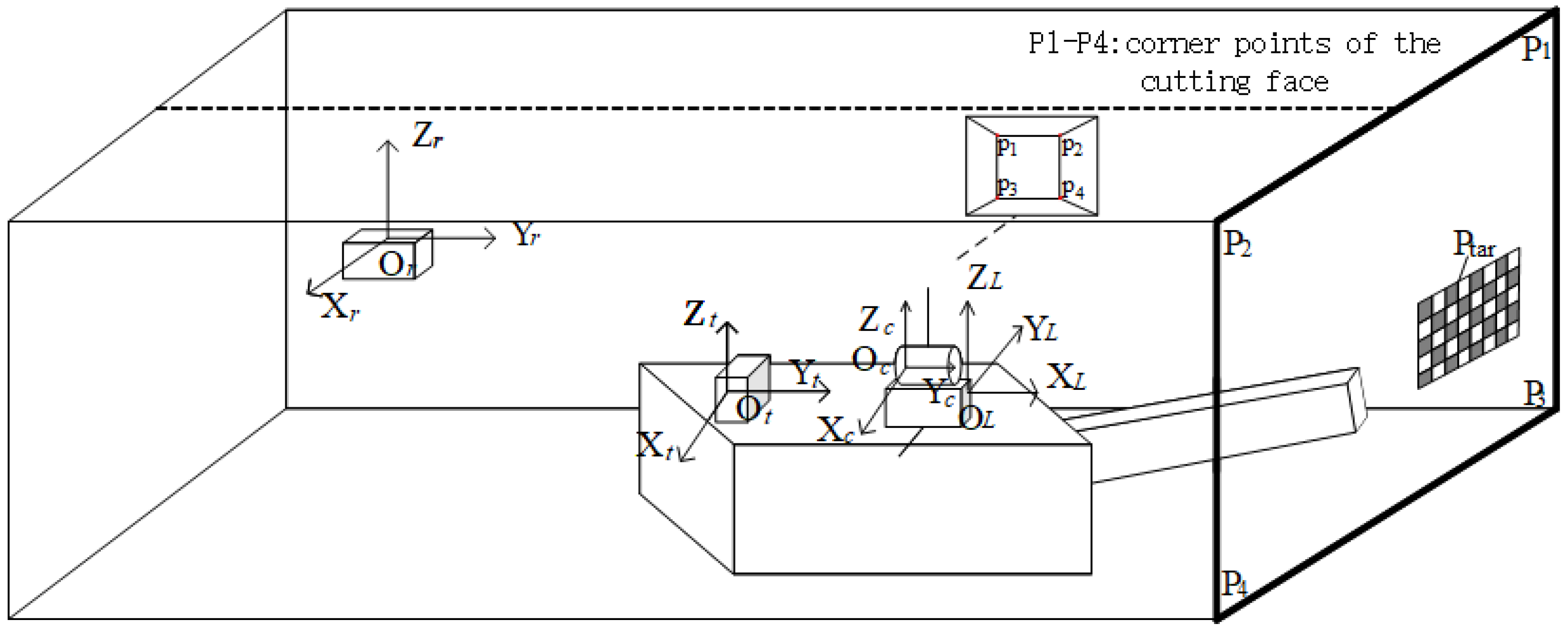

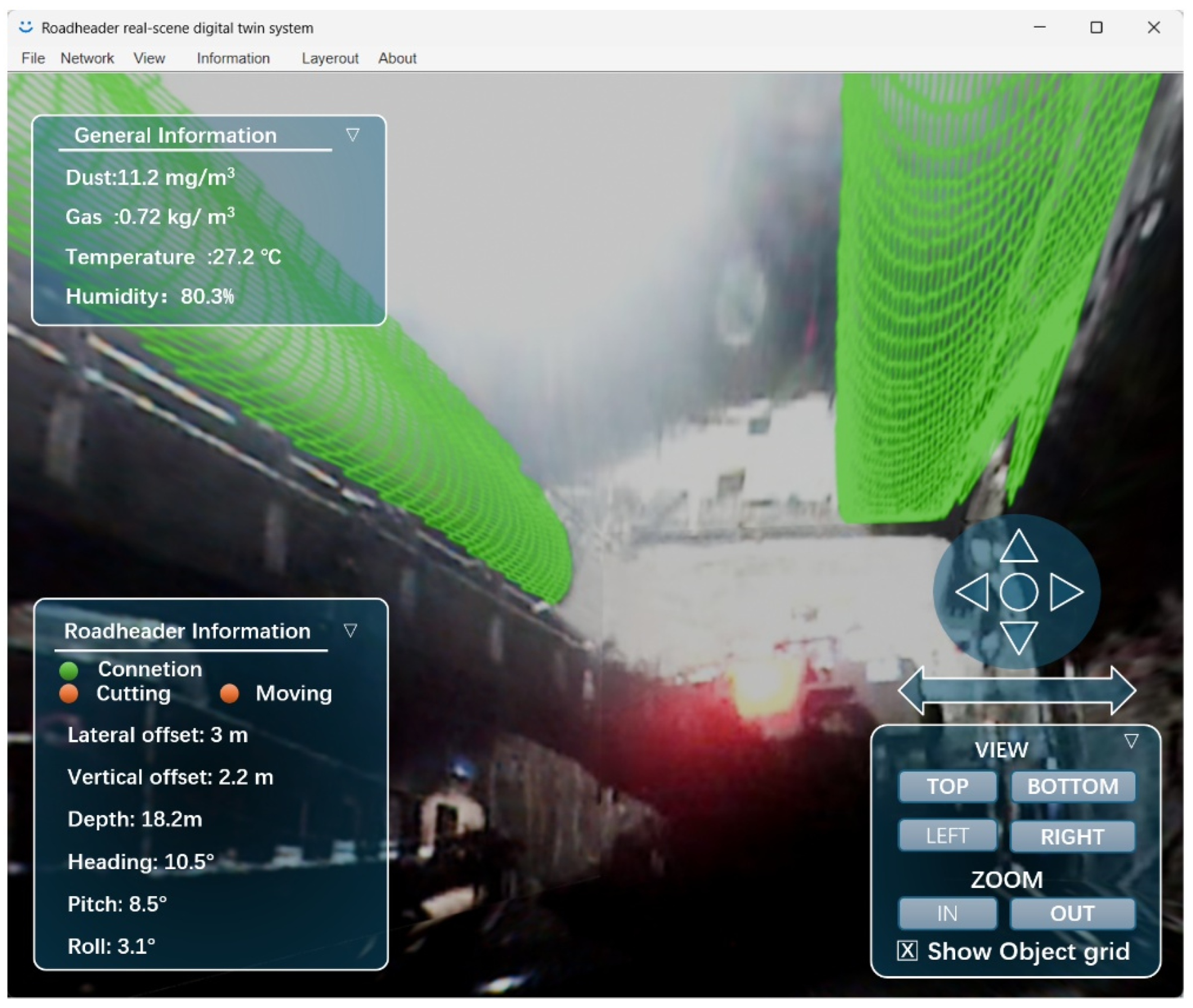

This study incorporates navigation and positioning information from the roadheader to achieve more precise panoramic image segmentation. The positioning system consists of two components: a laser guidance device suspended from the midline of the roadway roof at the rear, and a pose measurement unit mounted on the roadheader body. The entire navigation and positioning device is manufactured by Beijing BlueVision Technology Co., Ltd., Beijing, China, and its specified attitude accuracy is better than 0.15° in heading, 0.02° in pitch, and 0.02° in roll. As illustrated in Figure 1, the origin of the roadway coordinate system $O_r$ is located on the laser guidance device, with its X, Y, and Z axes aligned with the lateral, heading, and vertical directions of the roadway, respectively. This integrated navigation system provides the roadheader’s yaw, pitch, and roll angles and its coordinates in the roadway coordinate system [13].

To obtain the pixel coordinates of the cutting-section corner points in the camera image, the pose between the camera and the navigation and positioning device must first be calibrated. The camera is installed so that its field of view fully covers the cutting section, which facilitates subsequent image segmentation. A calibration target is mounted at the roadway heading face, and the coordinates of each corner point on the target are pre-measured in the roadway coordinate system. Let the coordinates of any corner point in the roadway coordinate system be $P^r$. Using the camera’s intrinsic parameters, the target’s geometric parameters, and the pixel coordinates of the corner point in the image captured by the intermediate camera, its coordinates $P^c$ in the camera coordinate system can be calculated. The two are related by

$$P^r = R_t^r\left(R_c^t P^c + T_c^t\right) + T_t^r \tag{1}$$

where $R_t^r$ and $T_t^r$ are the rotation matrix and translation vector from the pose measurement unit coordinate system $O_t$ to the tunnel coordinate system $O_r$. They can be derived from the heading, roll, and pitch angles and the spatial position coordinates provided by the roadheader navigation and positioning system. $R_c^t$ and $T_c^t$ denote the rotation matrix and translation vector from the intermediate camera coordinate system $O_c$ to the pose measurement unit coordinate system $O_t$; they can be calculated by combining multiple corner coordinates with Equation (1).
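The text does not state how the camera-to-pose-unit transform is solved from the corner correspondences. A minimal sketch, assuming the standard SVD-based (Kabsch) least-squares rigid fit: the roadway-frame corner coordinates are first mapped into the pose-unit frame with the navigation pose (R_tr, T_tr: pose unit to roadway), and the camera-frame coordinates of the same corners are then aligned to them to give R_ct, T_ct (camera to pose unit). The function names and the row-stacked array layout are hypothetical.

```python
import numpy as np

def to_pose_unit_frame(P_r, R_tr, T_tr):
    """Map roadway-frame points (N, 3) into the pose-unit frame O_t.

    Inverts P_r = R_tr @ P_t + T_tr, where (R_tr, T_tr) comes from the navigation system.
    """
    return (P_r - T_tr) @ R_tr  # row-wise R_tr.T @ (p - T_tr)

def fit_rigid_transform(P_c, P_t):
    """Least-squares R_ct, T_ct with P_t ~= R_ct @ P_c + T_ct (Kabsch algorithm)."""
    c_c, c_t = P_c.mean(axis=0), P_t.mean(axis=0)
    S = (P_c - c_c).T @ (P_t - c_t)          # 3x3 cross-covariance of the centred points
    U, _, Vt = np.linalg.svd(S)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    T = c_t - R @ c_c
    return R, T
```

With at least three non-collinear corners, calling `to_pose_unit_frame` and then `fit_rigid_transform(P_c, P_t)` yields R_ct and T_ct; substituting them back into the chained transform should reproduce the roadway coordinates up to measurement noise.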

For the four corners $p_i$ ($i = 1, 2, 3, 4$) of the cutting section, denote their coordinates in the tunnel coordinate system as $P_i^r$, in the pose measurement unit coordinate system as $P_i^t$, and in the camera coordinate system as $P_i^c$. These are obtained by inverting the transformations in Equation (1):

$$P_i^t = \left(R_t^r\right)^{\mathsf{T}}\left(P_i^r - T_t^r\right), \qquad P_i^c = \left(R_c^t\right)^{\mathsf{T}}\left(P_i^t - T_c^t\right) \tag{2}$$

Combined with the camera intrinsic parameters, the corresponding pixel coordinates $p_i = (u_i, v_i)$ ($i = 1, 2, 3, 4$) of the corners in the camera image can then be derived:

$$Z_i^c \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix} = K P_i^c \tag{3}$$

where $K$ is the intrinsic matrix of the camera and $Z_i^c$ is the distance of $P_i^c$ along the camera’s Z-axis. The same approach can be used to verify the pose relationship between the camera and the navigation and positioning device: the 3D coordinates of a checkerboard point are computed with the above equations, the point is projected onto the camera image, and the projected pixel position is compared with the detected one. Matching points with an error greater than 1 pixel are eliminated, and the relationship between the camera and the navigation device is then recalibrated.
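Equations (2) and (3) chain two inverse rigid transforms and a pinhole projection. The sketch below mirrors them with row-stacked NumPy arrays and adds the 1-pixel reprojection check described above. The helper names, and the assumption that the detected checkerboard pixels are already available, are illustrative only.

```python
import numpy as np

def roadway_to_camera(P_r, R_tr, T_tr, R_ct, T_ct):
    """Equation (2): roadway frame -> pose-unit frame -> camera frame, points stored as rows."""
    P_t = (P_r - T_tr) @ R_tr          # R_tr^T (P_r - T_tr)
    return (P_t - T_ct) @ R_ct         # R_ct^T (P_t - T_ct)

def project(K, P_c):
    """Equation (3): pinhole projection of camera-frame points (N, 3) to pixels (N, 2)."""
    uvw = P_c @ K.T                    # each row is K @ P_c = Z_c * [u, v, 1]
    return uvw[:, :2] / uvw[:, 2:3]    # divide by the depth Z_c

def reprojection_inliers(K, P_r, uv_detected, R_tr, T_tr, R_ct, T_ct, max_err=1.0):
    """Flag checkerboard points whose reprojection error is at most max_err pixels."""
    uv = project(K, roadway_to_camera(P_r, R_tr, T_tr, R_ct, T_ct))
    err = np.linalg.norm(uv - uv_detected, axis=1)
    return err <= max_err, err
```

Points flagged `False` correspond to the matches that are discarded before the camera-navigation extrinsics are re-estimated.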

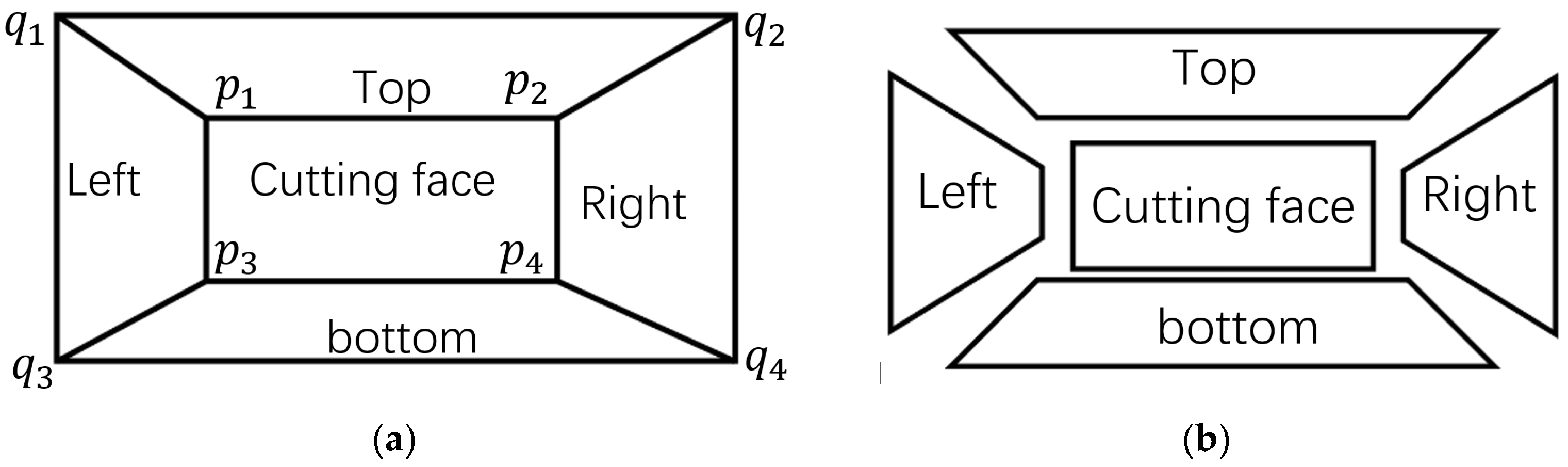

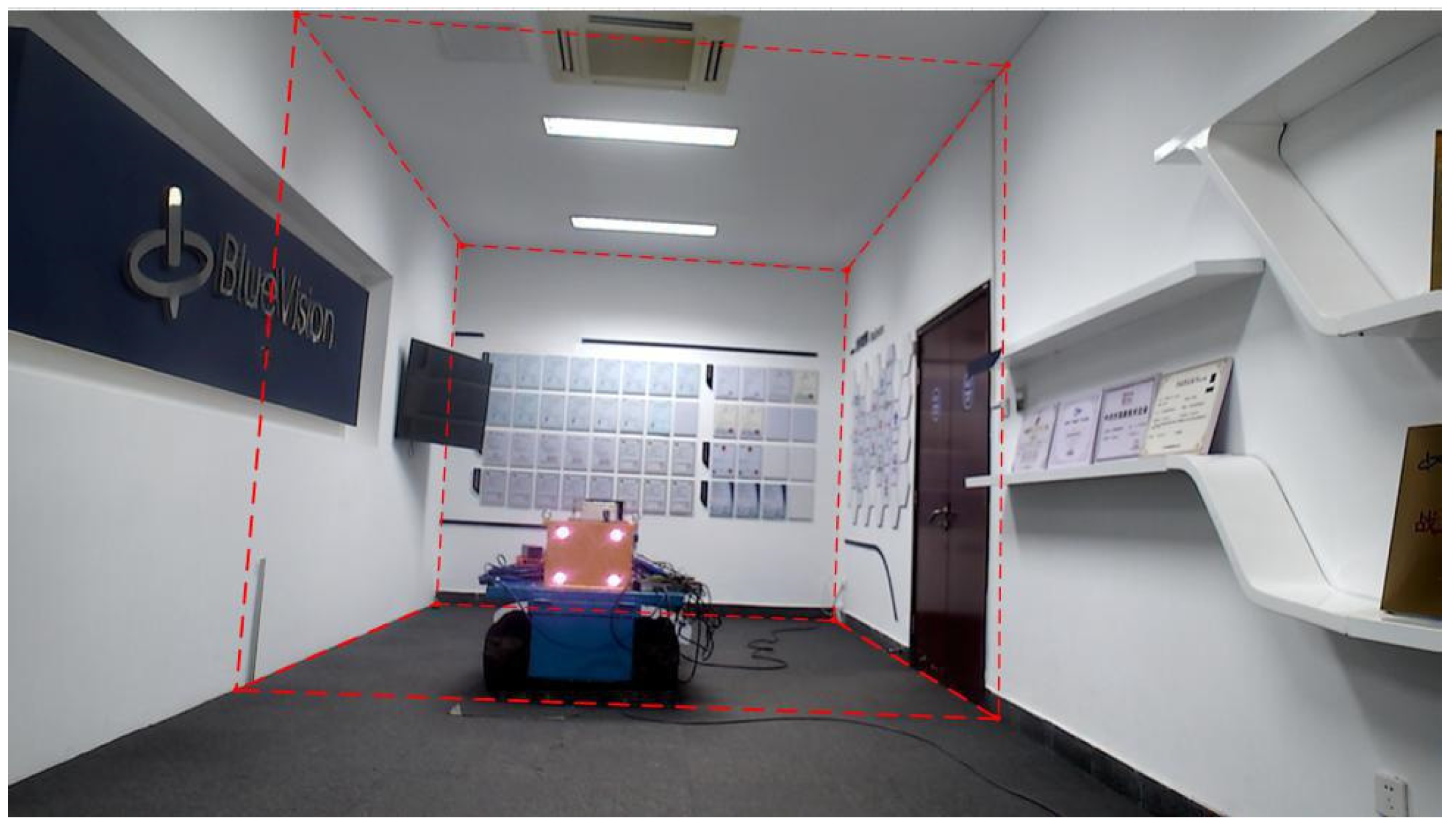

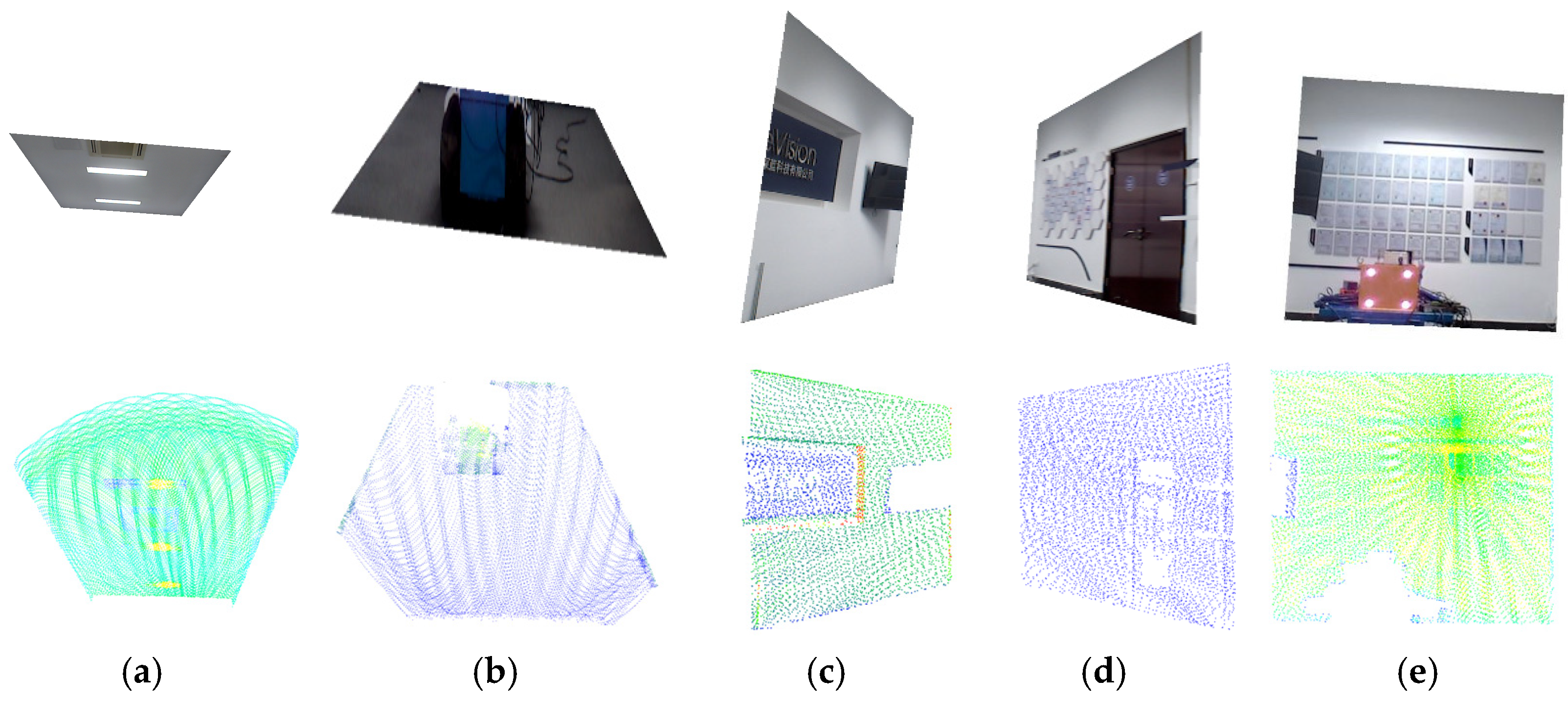

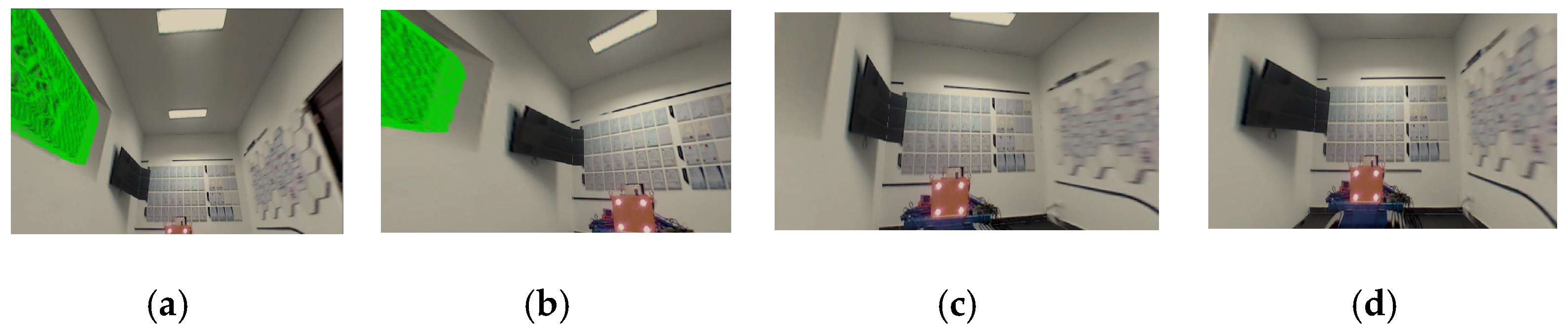

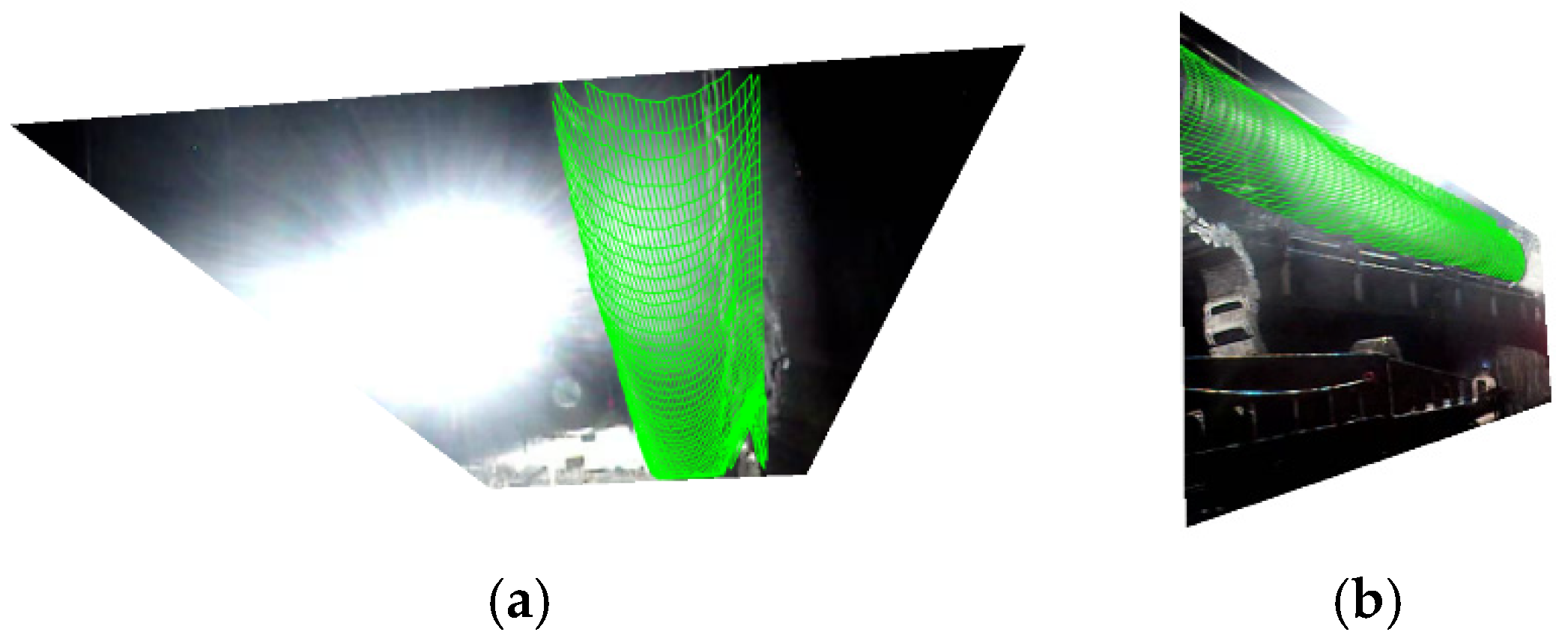

As illustrated in Figure 2, accurate image segmentation requires not only the pixel coordinates $p_i$ ($i = 1, 2, 3, 4$) of the section corners but also the pixel coordinates $q_i$ ($i = 1, 2, 3, 4$) of their counterparts at a common depth. In this study, $p_i$ is defined as the “inner corner” for image segmentation and $q_i$ as the “outer corner”. Connecting each $p_i$ with its corresponding $q_i$ yields the accurate segmentation shown in Figure 2b. The tunnel image is segmented into five regions based on these connecting lines.

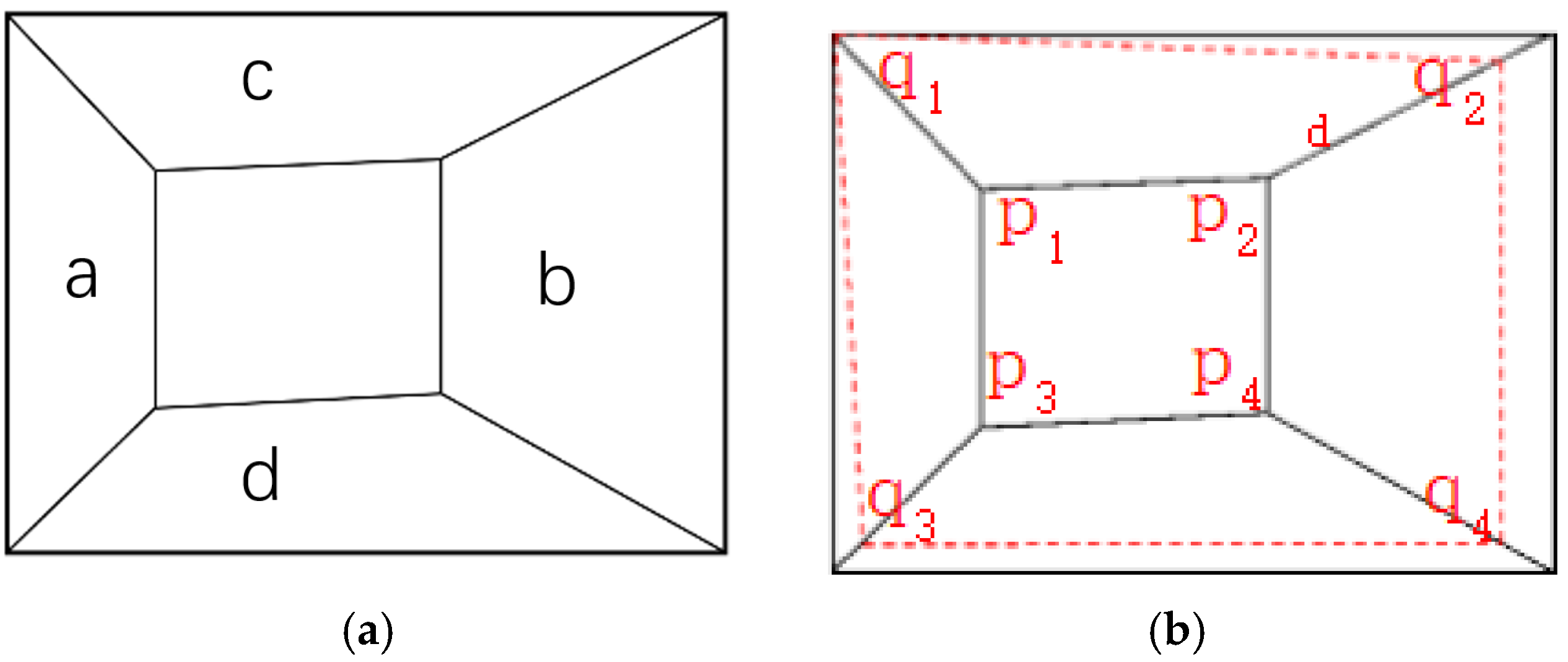

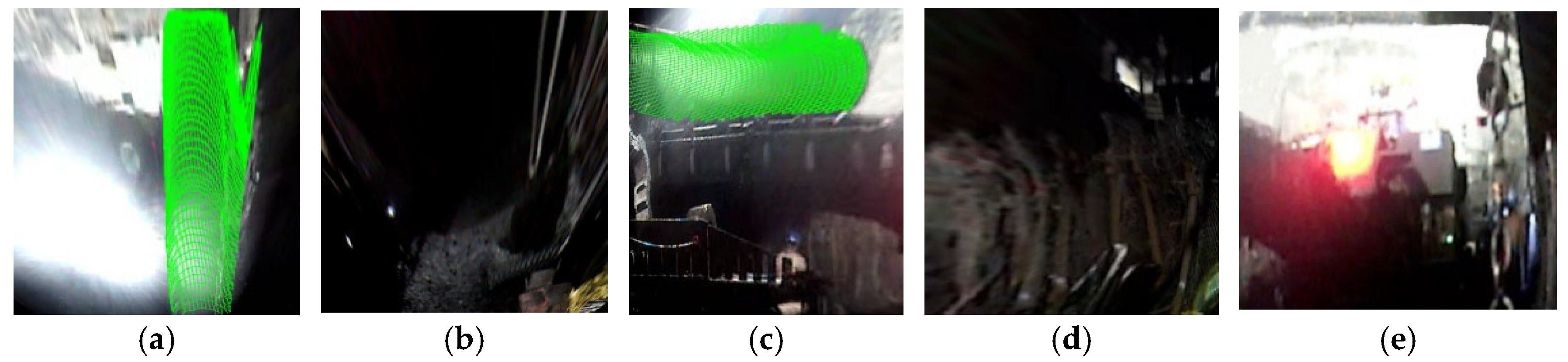
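To make the five-region split concrete, here is a minimal sketch that builds the region polygons from the inner corners p_i and outer corners q_i and assigns a pixel to a region with an even-odd ray-casting test. The corner ordering (top-left, top-right, bottom-right, bottom-left) and the region names are assumptions, not taken from the paper.

```python
import numpy as np

REGION_NAMES = ("cutting_face", "roof", "right_wall", "floor", "left_wall")

def region_polygons(p, q):
    """Five image regions from inner corners p and outer corners q, each of shape (4, 2).

    Assumed (hypothetical) corner order: 0 top-left, 1 top-right, 2 bottom-right, 3 bottom-left.
    """
    return {
        "cutting_face": np.array([p[0], p[1], p[2], p[3]]),
        "roof": np.array([q[0], q[1], p[1], p[0]]),
        "right_wall": np.array([p[1], q[1], q[2], p[2]]),
        "floor": np.array([p[3], p[2], q[2], q[3]]),
        "left_wall": np.array([q[0], p[0], p[3], q[3]]),
    }

def point_in_polygon(pt, poly):
    """Even-odd ray casting test for a single 2D point."""
    x, y = pt
    inside = False
    n = len(poly)
    for k in range(n):
        x1, y1 = poly[k]
        x2, y2 = poly[(k + 1) % n]
        if (y1 > y) != (y2 > y):                  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def label_pixel(pt, polys):
    """Name of the region containing pt, or None if it lies outside the outer quadrilateral."""
    for name in REGION_NAMES:
        if point_in_polygon(pt, polys[name]):
            return name
    return None
```

Pixels that return `None` lie outside the outer quadrilateral and correspond to the image content that is cropped away.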

Because the camera cannot be kept parallel to the tunnel cutting face during image acquisition, depth varies across the image. As shown in Figure 3a, when the camera view tilts to the right, region a has a smaller depth than region b, while the depth of region c increases gradually from left to right relative to region b. To obtain an approximately cuboid tunnel model, the captured images and point clouds must be cropped. The inner corner coordinates $P_i^r = (x_i, y_i, z_i)$ in the tunnel coordinate system are known. Since the outer and inner corners differ only in the depth direction (Y-axis), the outer corner coordinates in the tunnel coordinate system can be expressed as $Q_i^r = (x_i, y_q, z_i)$, where $y_q$ is the common depth of the outer-corner plane. The corresponding outer corner pixel coordinates $q_i$ in the image are then obtained through the same coordinate transformations used for the inner corners. To make full use of the image information, the largest plane $q_1q_2q_3q_4$ parallel to the cutting face $p_1p_2p_3p_4$ can be determined using pose data, navigation information, and the pre-calibrated relationship between the camera-LiDAR system and the tunnel coordinate system.

The tunnel image is segmented into five regions based on the lines connecting the outer and inner corner points.

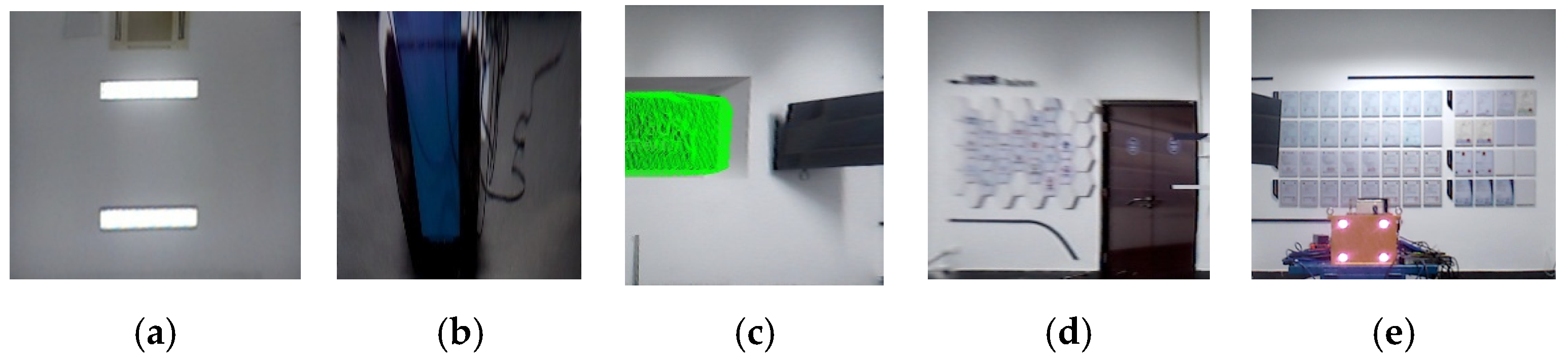

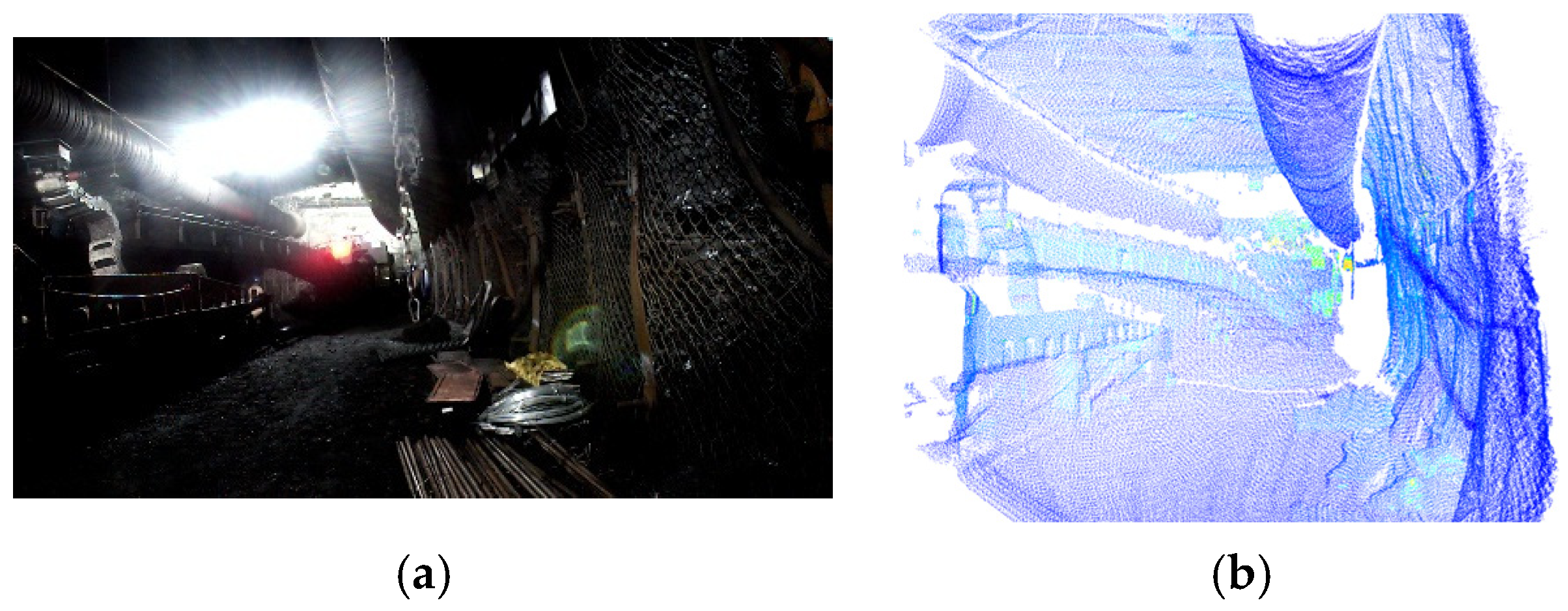
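The outer-corner construction can be sketched as follows. Building the outer corners only replaces the Y (depth) coordinate of the inner corners; the projection reuses the same roadway-to-camera chain and pinhole model as for the inner corners. The plane-selection rule in `choose_outer_plane` (the largest projected quadrilateral that stays inside the image, searched over a list of candidate depths) is a hypothetical interpretation of “the largest plane”, and all function names are illustrative.

```python
import numpy as np

def outer_corners(P_inner_r, y_q):
    """Outer corners share X and Z with the inner corners; only the depth (Y) changes."""
    Q = np.array(P_inner_r, dtype=float).copy()
    Q[:, 1] = y_q
    return Q

def project_roadway_points(P_r, K, R_tr, T_tr, R_ct, T_ct):
    """Roadway frame -> camera frame (as in Eq. 2) -> pixel coordinates (as in Eq. 3)."""
    P_c = ((P_r - T_tr) @ R_tr - T_ct) @ R_ct
    uvw = P_c @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def polygon_area(uv):
    """Shoelace formula for a simple polygon given as (N, 2) vertices."""
    x, y = uv[:, 0], uv[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))

def choose_outer_plane(P_inner_r, candidates, K, poses, width, height):
    """Hypothetical rule: among candidate depths, keep the plane whose projected
    quadrilateral lies fully inside the image and has the largest area."""
    best = None
    for y_q in candidates:
        q = project_roadway_points(outer_corners(P_inner_r, y_q), K, *poses)
        inside = np.all((q[:, 0] >= 0) & (q[:, 0] < width) & (q[:, 1] >= 0) & (q[:, 1] < height))
        if inside and (best is None or polygon_area(q) > best[2]):
            best = (y_q, q, polygon_area(q))
    return best
```

Here `poses` is the tuple `(R_tr, T_tr, R_ct, T_ct)`; the returned tuple holds the chosen depth, the outer-corner pixels q_i, and the projected area.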

2.2. Tunnel Plane Point Cloud Segmentation Based on Image Segmentation

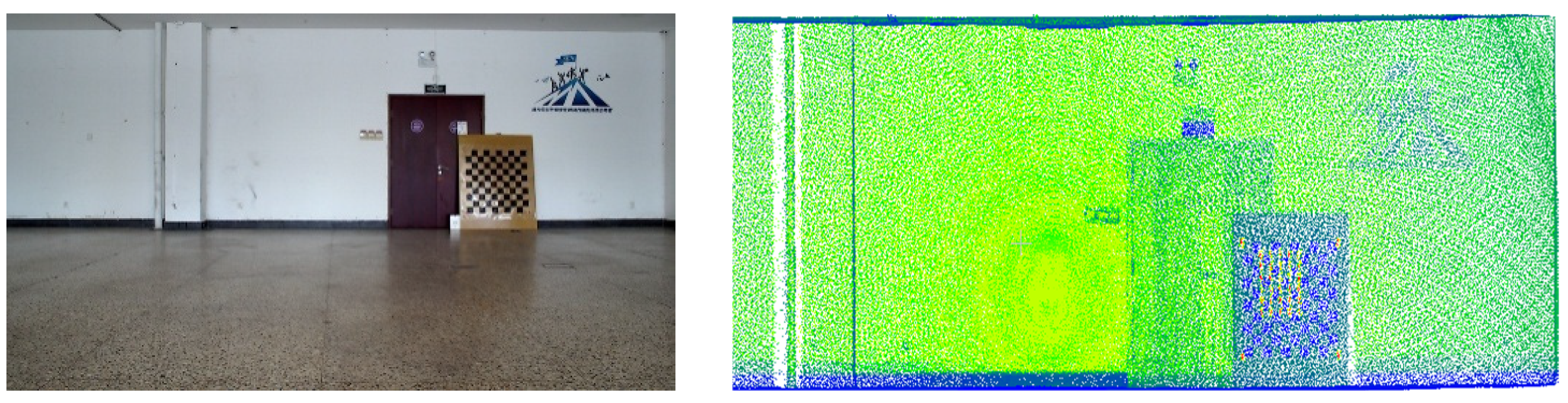

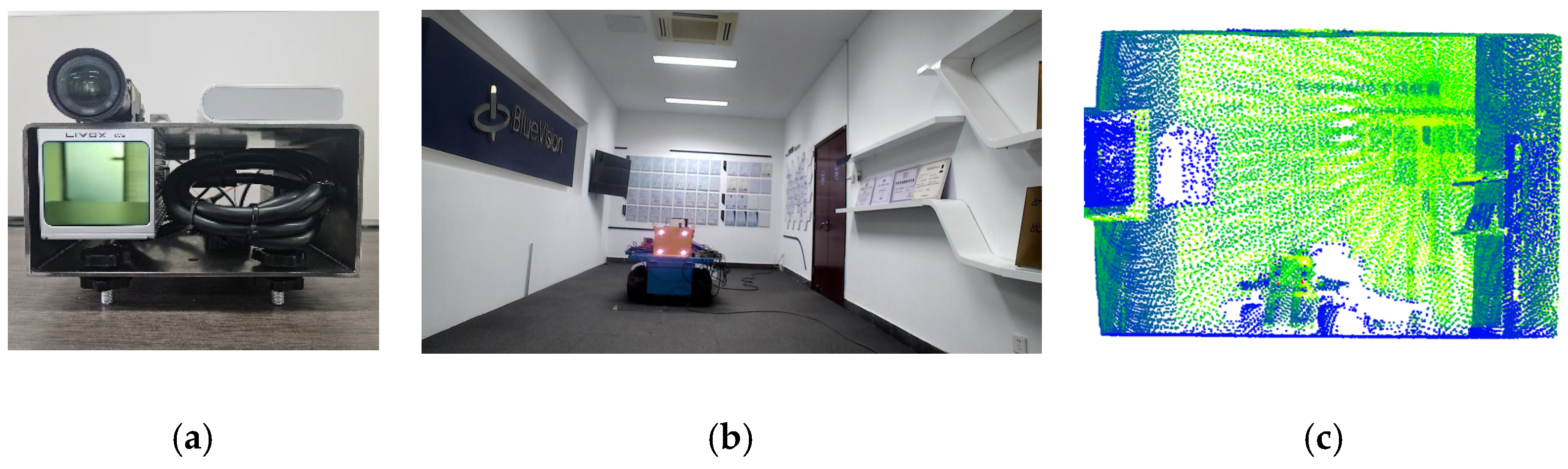

To visualize three-dimensional information in two-dimensional images, we integrated a LiDAR with a camera and calibrated the pair. The experiments used a Livox-Avia LiDAR (range random error < 2 cm, angular random error < 0.05°, manufactured by Livox Technology Co., Ltd., Shenzhen, China) and a MindVision industrial camera (resolution 1920 × 1080, manufactured by MindVision Technology Co., Ltd., Shenzhen, China). The Livox-Avia operates in a non-repetitive scanning mode and continuously outputs point clouds in dual-echo mode at 480,000 points per second. Before LiDAR-camera registration, multiple checkerboard images were captured from different viewpoints, and the camera intrinsic parameters were estimated with Zhang’s calibration algorithm. Multi-view camera images and LiDAR point clouds containing the calibration board were then acquired synchronously, and corner points in the images and echo features in the point clouds were extracted for feature matching. Based on these correspondences, the Levenberg-Marquardt nonlinear optimization algorithm was applied to estimate the six-degree-of-freedom rigid transformation between the two sensors, i.e., the rotation matrix $R$ and translation vector $T$. The maximum number of iterations was set to 300, and optimization terminated once the residual converged below a preset threshold. In this way, the extrinsic parameters and relative pose between the LiDAR and the camera were determined [14,15]; the results are shown in Figure 4.

Using the camera intrinsic matrix $K$, the tunnel LiDAR point clouds acquired together with the tunnel images are projected onto the images to establish a pixel-wise correspondence between each point and its projected position:

$$P^c = R P^l + T \tag{4}$$

$$Z^c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K P^c \tag{5}$$

where $P^l$ denotes the 3D coordinates of a tunnel LiDAR point in the LiDAR coordinate system and $P^c = (X^c, Y^c, Z^c)^{\mathsf T}$ denotes the coordinates of the same point in the camera coordinate system. Equation (4) transforms coordinates from the LiDAR system to the camera system, and Equation (5) projects the 3D points from the camera coordinate system to the 2D pixel coordinates $(u, v)$ in the image.
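Equations (4) and (5) map directly onto a few array operations. A minimal sketch, assuming R and T are the LiDAR-to-camera extrinsics from the calibration above. Keeping only points in front of the camera and inside the image, and returning point indices for the pixel-to-point lookup, are implementation choices rather than details from the text.

```python
import numpy as np

def project_lidar_to_image(P_l, R, T, K, width, height):
    """Equations (4)-(5): LiDAR points (N, 3) -> camera frame -> pixel coordinates.

    Returns integer pixel coordinates of the visible points and their indices in P_l,
    so that each pixel can be traced back to its 3D point.
    """
    P_c = P_l @ R.T + T                        # Eq. (4): P_c = R P_l + T, row-wise
    front = P_c[:, 2] > 0                      # keep only points in front of the camera
    uvw = P_c[front] @ K.T                     # Eq. (5): Z_c [u, v, 1]^T = K P_c
    uv = uvw[:, :2] / uvw[:, 2:3]
    px = np.floor(uv).astype(int)
    in_img = (px[:, 0] >= 0) & (px[:, 0] < width) & (px[:, 1] >= 0) & (px[:, 1] < height)
    idx = np.flatnonzero(front)[in_img]
    return px[in_img], idx
```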

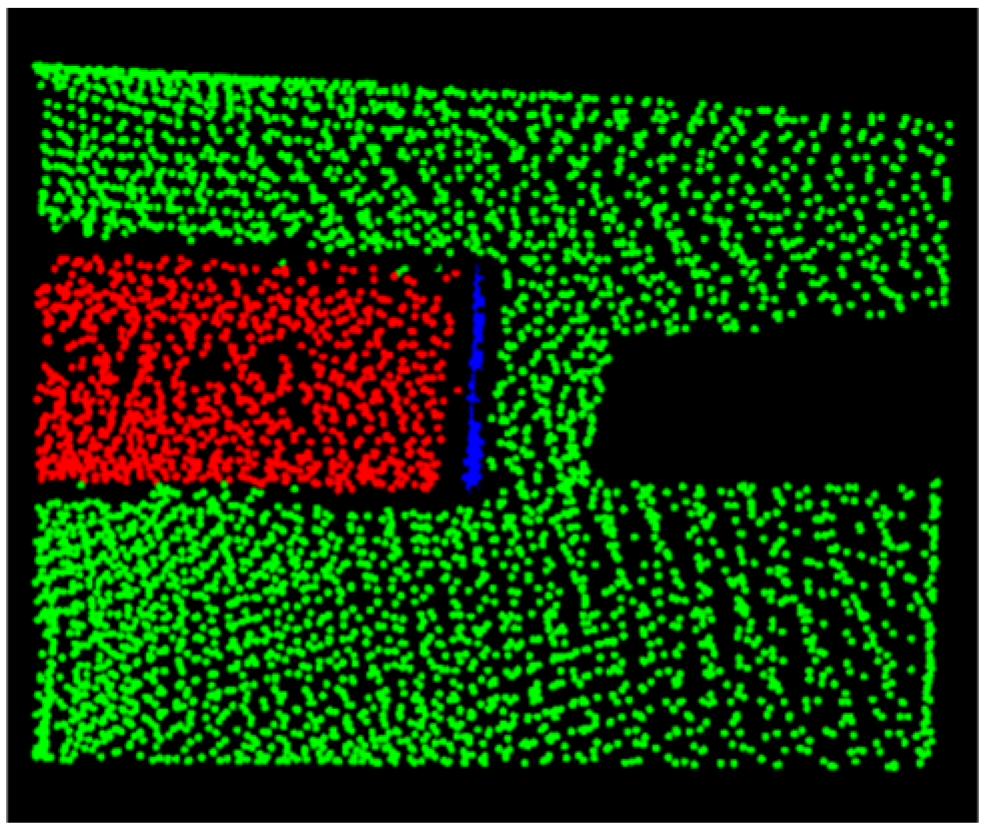

Based on this mapping relationship, the segmented images are used to segment the point cloud data synchronously, as shown in Figure 5. The overall geometric dimensions (depth d, width w, height h) of the segmented point cloud are then extracted to support subsequent 3D model reconstruction.
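Once every visible point has a pixel, synchronous segmentation reduces to reading the region label under that pixel. The sketch assumes a label image holding one integer region id per pixel, and computes d, w, h as axis-aligned extents of roadway-frame points (X lateral, Y heading, Z vertical). The paper does not state how the dimensions are measured, so a more robust alternative such as percentile extents may be preferable on noisy data; the function names are hypothetical.

```python
import numpy as np

def segment_points_by_label(label_img, px, idx, n_points):
    """Give each projected point the region label of its pixel; unprojected points get -1."""
    labels = np.full(n_points, -1, dtype=int)
    labels[idx] = label_img[px[:, 1], px[:, 0]]   # label_img is indexed [row = v, col = u]
    return labels

def tunnel_dimensions(P_r):
    """Hypothetical: overall extents of roadway-frame points (X lateral, Y heading, Z vertical)."""
    extent = P_r.max(axis=0) - P_r.min(axis=0)
    w, d, h = extent                               # width along X, depth along Y, height along Z
    return d, w, h
```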

2.3. Point Cloud Extraction of Objects in Tunnels Based on Region Growing

To accurately extract structural features in tunnel environments, this paper applies a region-growing algorithm to detect planes in the LiDAR point clouds, using the angle between point normal vectors as the constraint for region growth. The key threshold of this constraint is determined by optimizing a point information entropy criterion.

2.3.1. Normal Vector Angle Threshold Calculation and Estimation

In the implementation, a local neighborhood is first constructed for each point in the point cloud. Experiments in this study show that a neighborhood of 50 points balances the preservation of local detail against the suppression of noise, ensuring robust and accurate normal vector and angle calculations; this value is therefore used as the default parameter. A covariance matrix is constructed from the neighborhood point set, and the normal direction of the point is obtained by eigenvalue decomposition [16,17,18,19]. The eigenvector corresponding to the smallest eigenvalue is the normal vector. The eigenvalues are also used to estimate the curvature of the point, with smaller curvature values indicating flatter regions. Region growing is then performed by sequentially selecting the points with the smallest curvature as seed points and using the normal vector angle threshold as a constraint to detect planes in the point cloud.
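A minimal sketch of the PCA normal estimation described above, using the 50-point neighborhood. It uses brute-force neighbor search, which is only practical for small clouds (a k-d tree would normally be used), and the surface-variation curvature λ0/(λ0 + λ1 + λ2) is an assumed formula, since the text only says that curvature is computed from the eigenvalues.

```python
import numpy as np

def estimate_normals(points, k=50):
    """PCA normal and curvature per point from its k nearest neighbors (brute force).

    Curvature uses the common surface-variation estimate lambda_0 / (lambda_0 + lambda_1 + lambda_2),
    which is an assumption here.
    """
    n = len(points)
    k = min(k, n)
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)  # pairwise squared distances
    nbrs = np.argsort(d2, axis=1)[:, :k]                                 # includes the point itself
    normals = np.empty((n, 3))
    curvature = np.empty(n)
    for i in range(n):
        cov = np.cov(points[nbrs[i]].T)            # 3x3 covariance of the neighborhood
        evals, evecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
        normals[i] = evecs[:, 0]                   # eigenvector of the smallest eigenvalue
        curvature[i] = evals[0] / max(evals.sum(), 1e-12)
    return normals, curvature, nbrs
```

The returned neighbor indices can be reused directly as the neighborhoods searched during region growing.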

In tunnel environments, structural features such as ventilation ducts typically exhibit higher curvature than smooth surfaces like walls. During region-growing-based plane detection, the setting of the normal vector angle threshold significantly impacts recognition performance: if the threshold is too large, non-planar regions with high curvature may be misclassified as planes; if it is too small, it may fail to fully capture the entire extent of feature objects. Therefore, selecting an appropriate threshold is crucial for accurately distinguishing smooth areas from complex structural features.

To address this, this paper introduces point information entropy as the criterion for threshold optimization. Information entropy measures the uncertainty or disorder of a system; for point clouds, it reflects the complexity and dispersion of local normal vector distributions. For a discrete probability distribution, the entropy is defined as [20]:

$$H = -\sum_{i} p_i \log p_i \tag{6}$$

where $p_i$ denotes the probability of the $i$-th event. In point cloud processing, lower entropy values correspond to regions whose normal vectors are concentrated in a few directions, typically flat surfaces, whereas higher entropy values occur in areas with significant geometric variation, such as edges, corners, and other feature-rich regions.

To determine the optimal angle threshold, all normal vector angles are sorted in ascending order, and each angle is used in turn as a candidate threshold to divide all point pairs into two categories: pairs with angles less than or equal to the threshold are treated as potential “intra-plane” pairs, and pairs with larger angles as “inter-plane” pairs. The entropy of each subset is then computed separately. If the threshold is too small, the first subset is pure and has low entropy, but the second subset mixes pairs that should belong to the same plane with pairs representing true features, so it is highly disordered and its entropy is high. Conversely, if the threshold is too large, the first subset absorbs noise and feature pairs, which increases its entropy, while the second subset becomes purer but too small to offset this, so the total entropy is again suboptimal.

To balance the segmentation quality of these two types of regions, the weighted total entropy is minimized as the objective function to identify the best threshold:

$$H_{\text{total}} = w_1 H(D_1) + w_2 H(D_2) \tag{7}$$

Here, $D_1$ and $D_2$ are the two point-pair sets divided by the current threshold, and $w_1$ and $w_2$ are their weights, generally taken as the proportion of pairs in each subset relative to the total. This weighted total entropy reflects how well the current threshold distinguishes intra-plane from inter-plane point pairs. The threshold that minimizes $H_{\text{total}}$ is selected as the normal vector angle constraint in the region-growing algorithm, enabling more accurate and robust plane extraction.
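The threshold search in Equations (6) and (7) can be sketched as follows. How the entropy of each subset is evaluated is not specified in the text; this sketch assumes a fixed-width histogram of the angles over their full range (`n_bins` is a hypothetical parameter) and natural logarithms, with the weights equal to the subset proportions.

```python
import numpy as np

def shannon_entropy(values, bin_edges):
    """Equation (6) evaluated on a histogram of the values (the binning is an assumption)."""
    counts, _ = np.histogram(values, bins=bin_edges)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log(p)).sum())

def best_angle_threshold(angles, n_bins=18):
    """Try every sorted angle as a candidate threshold and minimize Equation (7)."""
    a = np.sort(np.asarray(angles, dtype=float))
    if a[0] == a[-1]:
        return float(a[0]), 0.0                    # all angles equal: nothing to split
    edges = np.linspace(a[0], a[-1], n_bins + 1)   # shared bins for both subsets
    best_t, best_h = None, np.inf
    for t in np.unique(a)[:-1]:                    # the largest value would leave D2 empty
        d1, d2 = a[a <= t], a[a > t]
        w1, w2 = len(d1) / len(a), len(d2) / len(a)
        h_total = w1 * shannon_entropy(d1, edges) + w2 * shannon_entropy(d2, edges)
        if h_total < best_h:
            best_t, best_h = float(t), h_total
    return best_t, best_h
```

For example, with 50 small angles (1° to 3°) and 10 large ones (60° and 70°), the minimum falls at 3°, the boundary between the two groups.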

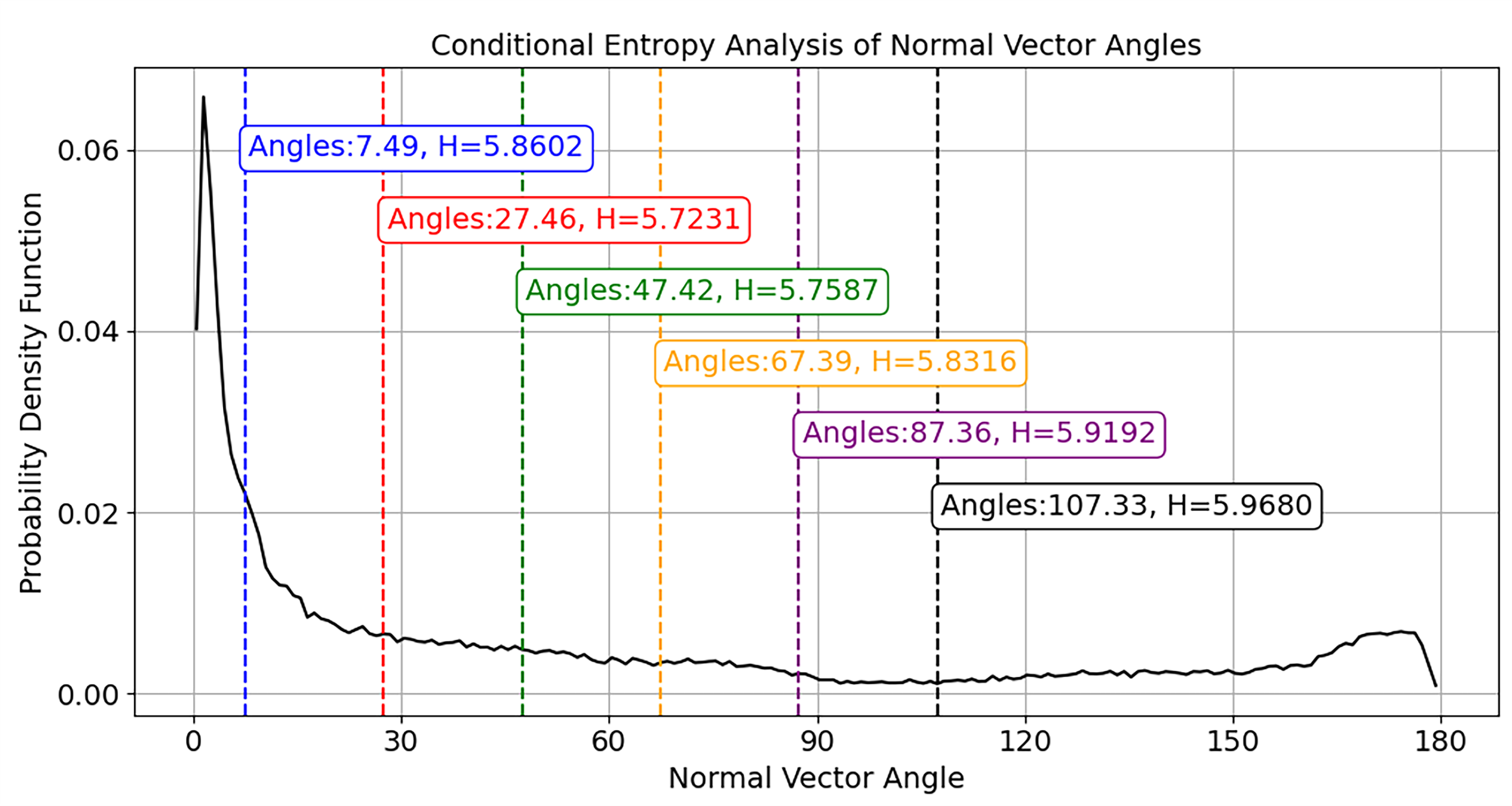

Figure 6 illustrates the optimal normal vector angle threshold determined by conditional entropy analysis of the normal vector angles. The red dashed line marks the threshold with the minimal entropy H; all other thresholds (colored dashed lines) yield higher entropy. Using this optimal angle threshold as the region-growing constraint improves the accuracy and stability of plane segmentation.

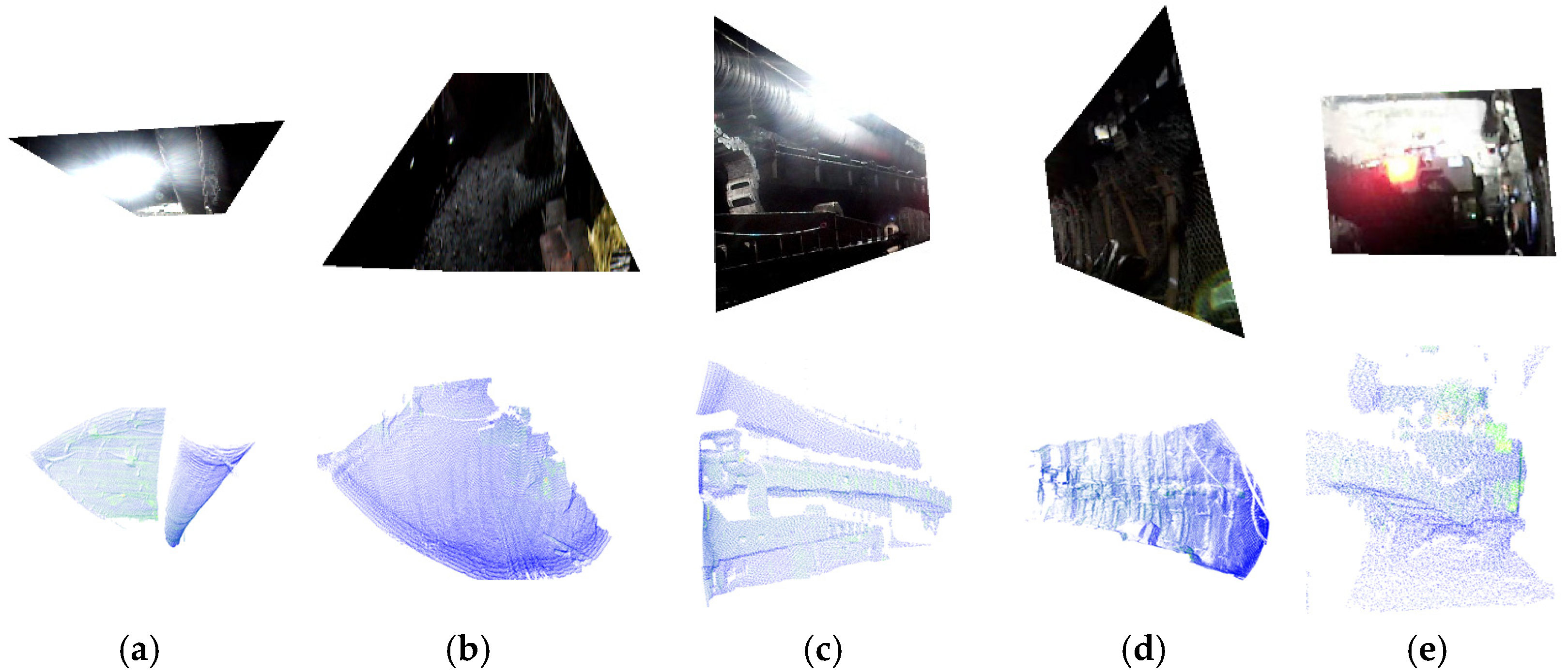

2.3.2. Point Cloud Segmentation and Extraction Based on Normal Vector Angle Threshold

After obtaining the optimal threshold ϕ, points with minimal curvature are selected as seed points for region growing. The angle between the seed point’s normal vector ni and its neighborhood points’ normal vectors nj is calculated. If θij ≤ ϕ, the neighborhood point is incorporated into the current region and updated as a new seed for further growth. This process repeats until all seeds in the current region complete growth, forming a complete smooth region.
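The region-growing step can be sketched as below, taking unit normals, curvatures, and neighbor indices such as those produced by a PCA normal estimate. Normal signs are ignored by comparing |ni · nj| with cos ϕ, which is an assumption (PCA normals have arbitrary orientation); the rest follows the description: lowest-curvature seeds first, θij ≤ ϕ as the acceptance test, and accepted points becoming new seeds. The function name is hypothetical.

```python
import numpy as np
from collections import deque

def region_growing(normals, curvature, nbrs, phi_deg):
    """Grow smooth regions from the lowest-curvature unlabeled points.

    A neighbor j of seed s joins the region when the angle between their unit normals
    is at most phi (normal signs ignored); accepted points become new seeds.
    """
    n = len(normals)
    labels = np.full(n, -1, dtype=int)
    cos_phi = np.cos(np.deg2rad(phi_deg))
    region = 0
    for start in np.argsort(curvature):            # seeds in order of increasing curvature
        if labels[start] != -1:
            continue
        labels[start] = region
        seeds = deque([start])
        while seeds:
            s = seeds.popleft()
            for j in nbrs[s]:
                if labels[j] != -1:
                    continue
                if abs(np.dot(normals[s], normals[j])) >= cos_phi:   # theta_ij <= phi
                    labels[j] = region
                    seeds.append(j)
        region += 1
    return labels
```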

To extract tunnel features, information entropy is used to evaluate each region by quantifying its internal structural complexity. In multiple repeated experiments on point cloud samples collected from tunnel walls, the calculated entropy values were highly concentrated: points with information entropy below 0.3 account for 99.7% of the total data set. Accordingly, H < 0.3 was selected as a statistically grounded threshold for identifying planar regions. Regions with low entropy (H < 0.3) have consistent normal vectors and typically correspond to planes or regular surfaces, whereas regions with high entropy (H ≥ 0.3) have dispersed normal vectors, indicating complex geometry, and are classified as feature objects. This statistically validated method enables accurate and stable extraction of objects from the point cloud.
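The text does not define the per-region entropy precisely. A minimal sketch, assuming it is the Shannon entropy of a histogram of the angles between each normal and the region's mean normal, normalized by log(n_bins) so that it lies in [0, 1]. The 0.3 threshold is only meaningful if the entropy is computed the same way as in the paper; the function names and the 10° binning are hypothetical.

```python
import numpy as np

def region_entropy(region_normals, n_bins=18):
    """Normalized entropy of angles between each unit normal and the region's mean normal."""
    signs = np.where(region_normals @ region_normals[0] < 0, -1.0, 1.0)
    nrm = region_normals * signs[:, None]           # flip normals to a common orientation
    mean = nrm.mean(axis=0)
    mean /= np.linalg.norm(mean)
    ang = np.degrees(np.arccos(np.clip(nrm @ mean, -1.0, 1.0)))
    counts, _ = np.histogram(ang, bins=n_bins, range=(0.0, 180.0))
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log(p)).sum() / np.log(n_bins))

def classify_regions(normals, labels, h_max=0.3):
    """Label each region 'plane' if its entropy is below h_max, otherwise 'feature'."""
    out = {}
    for r in np.unique(labels):
        h = region_entropy(normals[labels == r])
        out[int(r)] = ("plane" if h < h_max else "feature", h)
    return out
```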

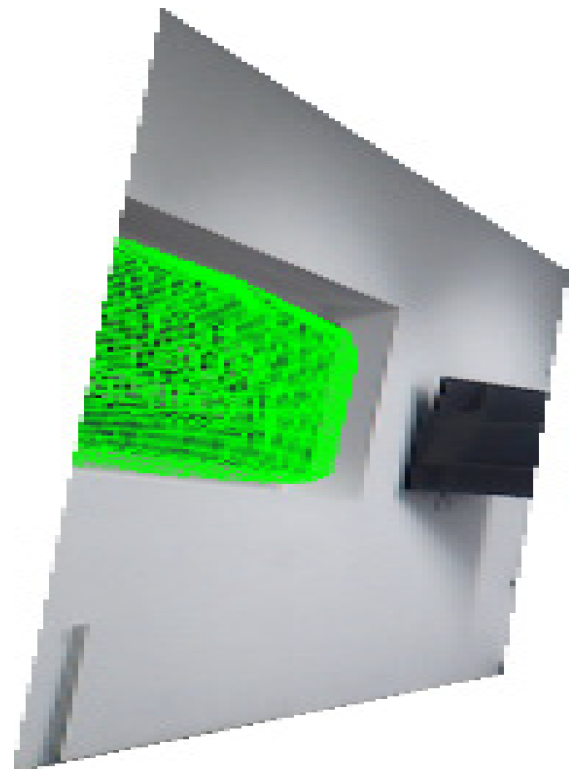

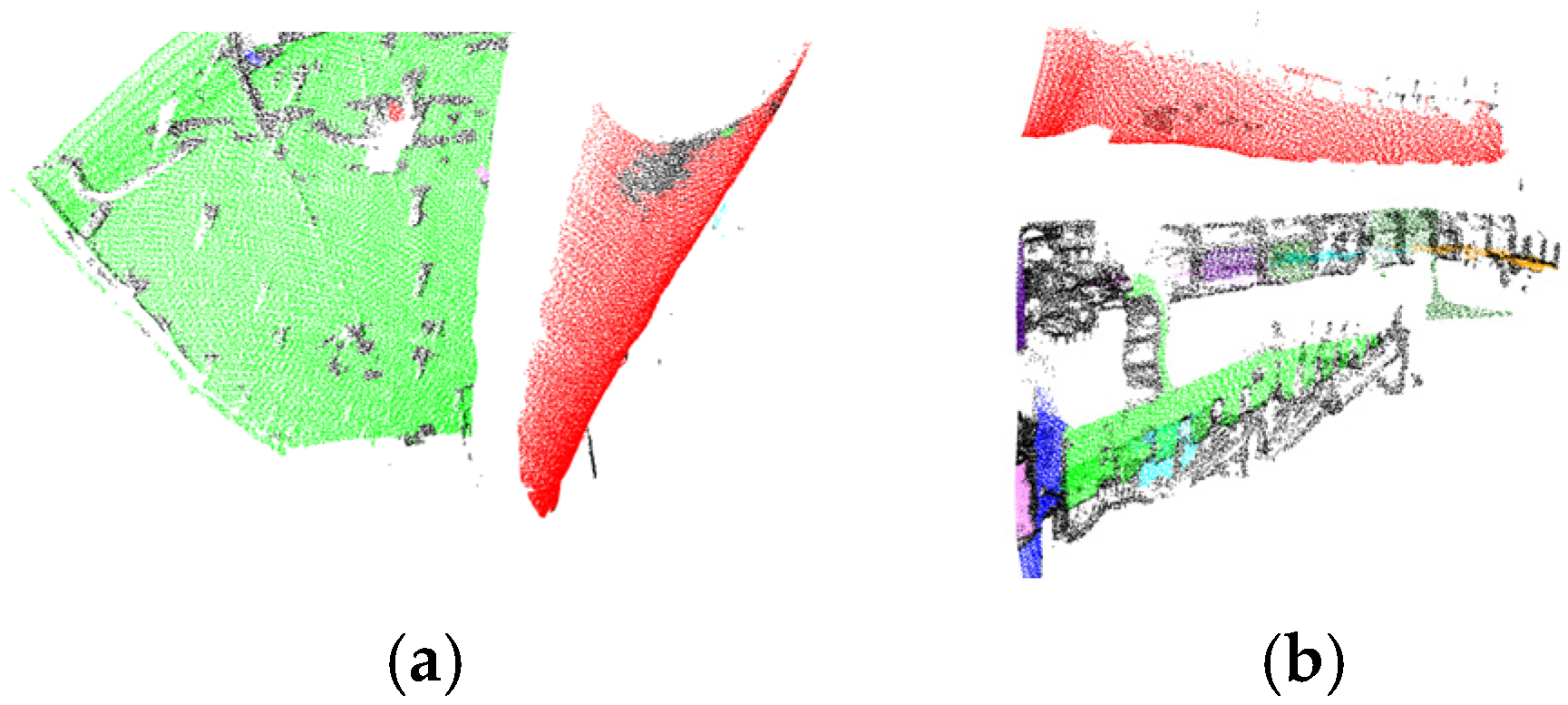

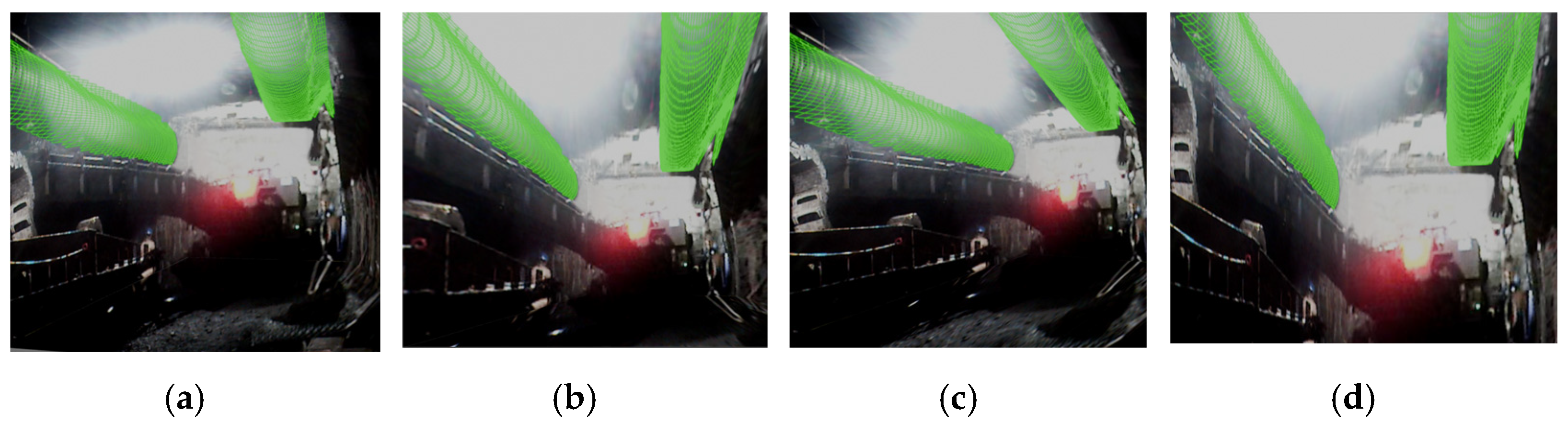

2.4. 3D Reconstruction Based on Point Cloud Projection

After the high-curvature surfaces are identified, these regions are extracted for further processing. To visualize three-dimensional information on 2D images, the extracted object point clouds are first converted into continuous surfaces that represent depth. This study uses a scattered-point interpolation method to construct an interpolation function F(x, y). Taking ground objects as an example, the depth coordinate z is the output of the interpolation function and the planar coordinates (x, y) are its inputs. A regular grid point set (X, Y) is generated over the given (x, y) range, and the corresponding Z values are calculated with the interpolation function. Adjacent grid points are then connected to form a regular surface mesh, converting the discrete point cloud into a continuous surface.
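The paper does not name its scattered-point interpolation method. The sketch below swaps in inverse distance weighting (IDW) as one simple choice to illustrate the F(x, y) -> regular grid -> quad mesh pipeline; `scipy.interpolate.griddata` with linear or cubic interpolation would be a common alternative. The function names and the `power` parameter are illustrative.

```python
import numpy as np

def idw_interpolator(xy, z, power=2.0, eps=1e-12):
    """Return F(X, Y) built by inverse-distance weighting of scattered samples (xy: (N, 2), z: (N,))."""
    def F(X, Y):
        q = np.column_stack([np.ravel(X), np.ravel(Y)])
        d = np.linalg.norm(q[:, None, :] - xy[None, :, :], axis=-1)   # (M, N) distances
        w = 1.0 / np.maximum(d, eps) ** power                          # eps avoids division by zero
        Z = (w @ z) / w.sum(axis=1)
        return Z.reshape(np.shape(X))
    return F

def grid_mesh(F, x_range, y_range, nx, ny):
    """Sample F on a regular grid and connect neighboring nodes into quad faces."""
    X, Y = np.meshgrid(np.linspace(*x_range, nx), np.linspace(*y_range, ny))
    Z = F(X, Y)
    vertices = np.column_stack([X.ravel(), Y.ravel(), Z.ravel()])
    faces = []
    for r in range(ny - 1):
        for c in range(nx - 1):
            v0 = r * nx + c                         # node index in row-major order
            faces.append((v0, v0 + 1, v0 + nx + 1, v0 + nx))
    return vertices, np.array(faces)
```

The resulting vertices and faces form the regular surface mesh whose edges are later projected onto the image.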

Finally, leveraging the pre-calibrated pose relationship between LiDAR and camera, the generated 3D mesh surface is projected onto the segmented 2D image plane. This results in a 2D image with grid lines, effectively fusing 3D surface information with the 2D image.

2.5. 3D Viewpoint Conversion

In actual coal mine tunnel images, the camera’s mounting position and shooting angle cause the perspective to vary between regions. The model, however, requires orthographic planar images for accurate matching and fusion with the 3D model. Inclined side-view images must therefore undergo viewpoint correction and be transformed into orthographic views of the corresponding 3D faces.

The orthographic view sizes for different regions correspond to the geometric dimensions obtained from point cloud segmentation.

- (1) The left and right sidewall orthographic views have dimensions based on the actual tunnel depth d and height h.
- (2) The roof and floor orthographic views use the tunnel width w and depth d.
- (3) The cutting face orthographic view uses the tunnel width w and height h.
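The size rules (1) to (3) amount to a small lookup. A sketch, assuming a hypothetical uniform pixel scale `px_per_m` and an orientation in which the first listed dimension runs horizontally in the output image; the resulting corners could serve as the target points (u′, v′) for the homography. The function names are illustrative.

```python
def ortho_view_size(region, d, w, h, px_per_m=100.0):
    """Output size (width_px, height_px) of a region's orthographic view.

    px_per_m is a hypothetical scale; the text only fixes which physical dimensions are used.
    """
    dims = {
        "left_wall": (d, h), "right_wall": (d, h),   # (1) sidewalls: depth x height
        "roof": (w, d), "floor": (w, d),             # (2) roof and floor: width x depth
        "cutting_face": (w, h),                      # (3) cutting face: width x height
    }
    horiz, vert = dims[region]
    return round(horiz * px_per_m), round(vert * px_per_m)

def target_corners(size):
    """Corners of the target image: top-left, top-right, bottom-right, bottom-left."""
    W, H = size
    return [(0, 0), (W - 1, 0), (W - 1, H - 1), (0, H - 1)]
```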

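Using the pixel coordinates of the source image corners $(u, v)$ and the target orthographic plane corners $(u', v')$, four pairs of corresponding points are established. The homography matrix $H$ is solved in the least-squares sense, with the transformation expressed as:

$$s \begin{bmatrix} u' \\ v' \\ 1 \end{bmatrix} = H \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}, \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix} \tag{8}$$

Here, $s$ is the homogeneous scale factor. Because $H$ is defined only up to scale, the scale ambiguity is resolved by the unit-norm constraint $\lVert h \rVert = 1$ during the solution, after which $H$ is normalized so that $h_{33} = 1$. Eliminating $s$ from Equation (8) yields two linear equations per correspondence:

$$\begin{aligned} h_{11}u_i + h_{12}v_i + h_{13} - u_i'\left(h_{31}u_i + h_{32}v_i + h_{33}\right) &= 0 \\ h_{21}u_i + h_{22}v_i + h_{23} - v_i'\left(h_{31}u_i + h_{32}v_i + h_{33}\right) &= 0 \end{aligned}$$

for $i = 1, 2, 3, 4$. These equations are assembled into matrix form:

$$A_i = \begin{bmatrix} u_i & v_i & 1 & 0 & 0 & 0 & -u_i'u_i & -u_i'v_i & -u_i' \\ 0 & 0 & 0 & u_i & v_i & 1 & -v_i'u_i & -v_i'v_i & -v_i' \end{bmatrix}$$

Defining the parameter vector

$$h = \left[h_{11}, h_{12}, h_{13}, h_{21}, h_{22}, h_{23}, h_{31}, h_{32}, h_{33}\right]^{\mathsf T}$$

and stacking the $A_i$ of all corresponding points vertically into the $8 \times 9$ matrix $A$ yields the homogeneous linear system

$$A h = 0$$

Singular value decomposition is then performed on $A$:

$$A = U \Sigma V^{\mathsf T}$$

The solution $h$ is the right singular vector corresponding to the smallest singular value (the last column of $V$). Reshaping $h$ into a 3 × 3 matrix and dividing by $h_{33}$ yields the homography $H$, which is then applied to perform the viewpoint transformation on the segmented images.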
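A minimal NumPy sketch of the SVD-based (direct linear transform) solution for H described above, including the final division by h33. The function names are illustrative. Exactly four correspondences give an exact solution; with more points the same code returns a least-squares estimate, for which normalizing the coordinates first (Hartley normalization) is usually recommended.

```python
import numpy as np

def homography_dlt(src, dst):
    """Estimate H with s*[u', v', 1]^T = H [u, v, 1]^T from >= 4 correspondences via SVD."""
    rows = []
    for (u, v), (up, vp) in zip(src, dst):
        rows.append([u, v, 1, 0, 0, 0, -up * u, -up * v, -up])
        rows.append([0, 0, 0, u, v, 1, -vp * u, -vp * v, -vp])
    A = np.asarray(rows, dtype=float)
    _, _, Vt = np.linalg.svd(A)
    h = Vt[-1]                        # right singular vector of the smallest singular value
    H = h.reshape(3, 3)
    return H / H[2, 2]                # fix the scale so that h33 = 1

def apply_homography(H, pts):
    """Map (N, 2) pixel coordinates through H and dehomogenize."""
    p = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return p[:, :2] / p[:, 2:3]
```

The estimated H can then be used to warp a segmented region, for example with OpenCV's `cv2.warpPerspective(image, H, (width, height))`.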