1. Introduction

Over the years, sensor array source localization based on direction-of-arrival (DoA) estimation has drawn interest from a variety of engineering fields [1], such as sonar systems [2], emergency-call localization [3], automatic vehicle tracking [4], radar systems [5], and patient monitoring [6]. To obtain DoA information for these applications, the signals impinging on the antenna array are usually processed digitally using a specific direction-finding technique [7]. Furthermore, the performance of Multiple Input Multiple Output (MIMO) systems is improved by adaptive beamforming and efficient direction-finding methods [8], as in smart antenna technology, which places nulls in the direction of interfering sources to suppress interference [9]. Recently, reconfigurable intelligent surfaces (RISs) have emerged as low-power, programmable metasurfaces that shape propagation to enhance coverage, suppress interference, and improve localization/DoA accuracy [10]. Related developments in harmonic coordinated beamforming for automotive radar enhance lane detection and DoA-based sensing [11]. The space-time path [12], which is required for MIMO systems to operate efficiently [13], can be precisely represented using a source localization technique. Improving direction-finding performance in large MIMO systems requires efficient sampling of the received data matrix. The difficult problem of producing a smaller dataset that closely reflects a larger one may therefore be addressed using extreme value theory, which is also very practical [14].

The literature has presented a number of algorithms and approaches for the DoA estimation problem, such as Minimum Variance Distortionless Response (MVDR) [15], Multiple Signal Classification (MUSIC) [16], Minimum Norm (MinNorm) [17], and Estimation of Signal Parameters via the Rotational Invariance Technique (ESPRIT) [18]. In MinNorm, the optimal array weight vector is used to reduce the output norm. Only Uniform Linear Array (ULA) configurations are appropriate for this algorithm. In Root-MUSIC, polynomial root searching replaces spectral searching in order to simplify classical MUSIC [19]. While efficient, this method is sensitive to coherent sources and limited to ULA geometry. The MVDR method is computationally inefficient because it requires the inverse of the Covariance Matrix (CM). In contrast, other techniques require eigenvalue or singular value decomposition of the data matrix to determine eigenvalues and eigenvectors [20]. Eigen/singular value decomposition methods are also costly in terms of processing complexity [21], and it is challenging to distinguish between the noise and signal subspaces, especially under poor channel conditions [22].

Alternatively, efficient linear DoA estimation algorithms avoid the difficulty of CM factorization. The Propagator [23] and Orthogonal Propagator [24] algorithms divide the CM into two smaller matrices. These strategies, suggested as alternatives to the traditional MUSIC algorithm, reduce complexity significantly. In addition, a reconfigurable, fully digital, phase-interferometric FPGA AoA implementation has demonstrated real-time RTLS with tunable phase resolution [25].

However, processing the entire CM is not practical in a large MIMO system. Numerous methods have been presented based on the Propagator concept [24,26]. These methods are computationally efficient and do not need the CM to be built. Nevertheless, they typically require a large array aperture to achieve satisfactory performance.

In Column Subset Selection (CSS), a subset of columns is extracted from a high-dimensional matrix so as to create a column submatrix that is an accurate representation of the original matrix [27]. As a result of this method, the complexity of signal processing is significantly reduced [28]. In recent years, the concept of sampling the CM has been proposed as a method for constructing the projection matrix (PM) from this perspective [29,30]. The influence of the number of columns sampled from the CM on the formulation of the PM has been investigated in [28]. The DoA of incident signals can be accurately estimated by selecting L columns from the CM, where L represents the number of sources. Moreover, it has been demonstrated that increasing the number of sampled columns enhances DoA estimation accuracy and improves the Degree of Freedom (DoF), albeit at the cost of increased computational complexity in PM construction. Nevertheless, the authors are unaware of any optimal criterion in the literature for determining which columns of the CM should be used for PM construction. Typically, traditional methods use the CM's first L columns as the default Classical Sampling (CS) technique [31,32,33].

This methodology is weak because the chosen columns are predetermined (i.e., the first L columns) and fail to sufficiently capture the information in the original CM. To overcome this limitation, Uniform Sampling (US) was proposed in [29], which increases the DoF without increasing the number of extracted columns, thereby improving DoA estimation performance. Compared with the conventional technique, the US distribution yields a higher DoF that depends on C, the number of sampled columns, and U, the ratio between the number of array elements and the number of sources. A more recent approach, Non-Uniform Sampling (NUS), improves DoA estimation accuracy by removing energy-rich columns [30]. The NUS principle uses random column selection from the CM to create a sampling matrix. Applying the NUS approach yields higher energy retention for the signal eigenvalues, and hence a higher number of detected DoAs than the traditional technique.

Apart from sampling strategies, another important challenge in DoA estimation is the presence of signal correlation, particularly in environments with multipath or coherent sources. To mitigate this, the Spatial Smoothing (SS) technique is commonly applied, which divides the array into overlapping subarrays [34]. While effective in decorrelating signals, SS may reduce resolution and increase computational cost. These constraints call for more sophisticated and durable solutions. To address these limitations, Enhanced Spatial Smoothing (ESS) was proposed in [31], enabling more accurate DoA estimation for coherent sources [35]. To further address the limitations of earlier ESS techniques, the ESS-SS variant was proposed, which applies ESS directly to the smoothed covariance matrix to resolve the underutilization of subarray information in previous ESS techniques [36]. This approach lowers noise and preserves subspace structure. However, subarray averaging and multiple covariance reconstructions become computationally expensive. To mitigate this complexity, [37] proposed a real-valued MUSIC method that avoids complex eigen-decomposition and thereby reduces the computational complexity. It keeps accuracy near that of traditional MUSIC and supports arbitrary array configurations. Its resolution may be limited under low SNR or coherent signal conditions, though, because it ignores subspace redundancy and inter-column correlation.

In addition to these traditional methods, machine learning (ML) techniques have recently been investigated for DoA estimation. Examples include CNN-assisted estimators for robust Capon processing [38], multi-task CNN frameworks that jointly address DoA and noise power estimation [39], and DeepMUSIC [40], which uses deep neural networks to improve the classical MUSIC spectrum. Although promising, these techniques usually require large labeled training datasets and may encounter domain mismatch when used under different propagation conditions or array geometries.

DoA estimation is still difficult due to a number of real-world issues, such as low SNR, few snapshots, array size restrictions, and source correlation, even with the success of Standard MUSIC and its variations. Current approaches frequently compromise computational complexity for resolution or exhibit poor generalization across array configurations. Nevertheless, during the subspace reduction process, the inter-column correlation structure inside the noise subspace has not been explicitly taken into consideration by any of the previously described sampling techniques. A high correlation between noise eigenvectors is known to adversely affect the formulation of the PM, which reduces the effectiveness of DoA estimation methods. A correlation-aware subspace refinement approach is used to solve this problem, in which columns from the noise subspace are adaptively chosen according to their pairwise decorrelation efficiency. The goal is to reduce redundancy among the chosen noise eigenvectors in order to improve DoA estimation resolution and accuracy. This is accomplished by calculating the noise subspace’s correlation matrix and assessing each eigenvector’s correlation norm, each of which measures the overall correlation between a particular column and every other column. A lower norm value is preferred for subspace building, since it shows less mutual dependency. The first column, the last column, and the inside column with the lowest correlation norm constitute the selection procedure. In the condensed subspace, these choices ensure low redundancy, edge representation, and structural variety. In addition to being robust and information-rich, the resulting projection matrix is numerically stable, providing enhanced resolution and noise tolerance, particularly in difficult situations like source coherence, low SNR, or limited snapshots.

The following is a summary of this paper’s primary contributions:

RASA, a structured LCCS-based algorithm that consistently chooses minimally correlated vectors to reduce the dimensionality of the noise subspace. To accomplish this, a correlation-aware sampling technique is used, which computes Pearson correlation coefficients from the real part of the noise subspace and evaluates column-wise norms to find low-redundancy directions. The resulting smaller subspace greatly reduces processing cost while maintaining excellent resolution and estimation accuracy.

A generic steering vector formulation is developed to ensure compatibility with arbitrary array geometries, including ULAs, URAs, and their non-uniform counterparts (NULA and NURA). While all main simulations are conducted on the ULA configuration, additional results with NULA and NURA confirm that the RASA retains effectiveness under irregular sensor placements.

To assess the algorithm’s performance under various SNR levels, snapshot counts, and array topologies, a thorough simulation study is carried out.

By using a correlation-aware sampling technique to select and keep minimally correlated columns from the noise subspace, RASA decreases computational cost and improves orthogonality, decorrelation, and estimation accuracy under difficult signal conditions.

The rest of this paper is structured as follows: Section 2 presents the DoA estimation model using arbitrary array geometry, while Section 3 covers PM construction principles, including sampling methodologies for the PM. Section 4 introduces the proposed Reduced Angle Subspace Algorithm (RASA), outlining its technique and theoretical assessment. In Section 5, comparisons of DoA algorithms are shown and analyzed. Section 6 presents the simulation results. In Section 7, we summarize our paper and conclude our findings.

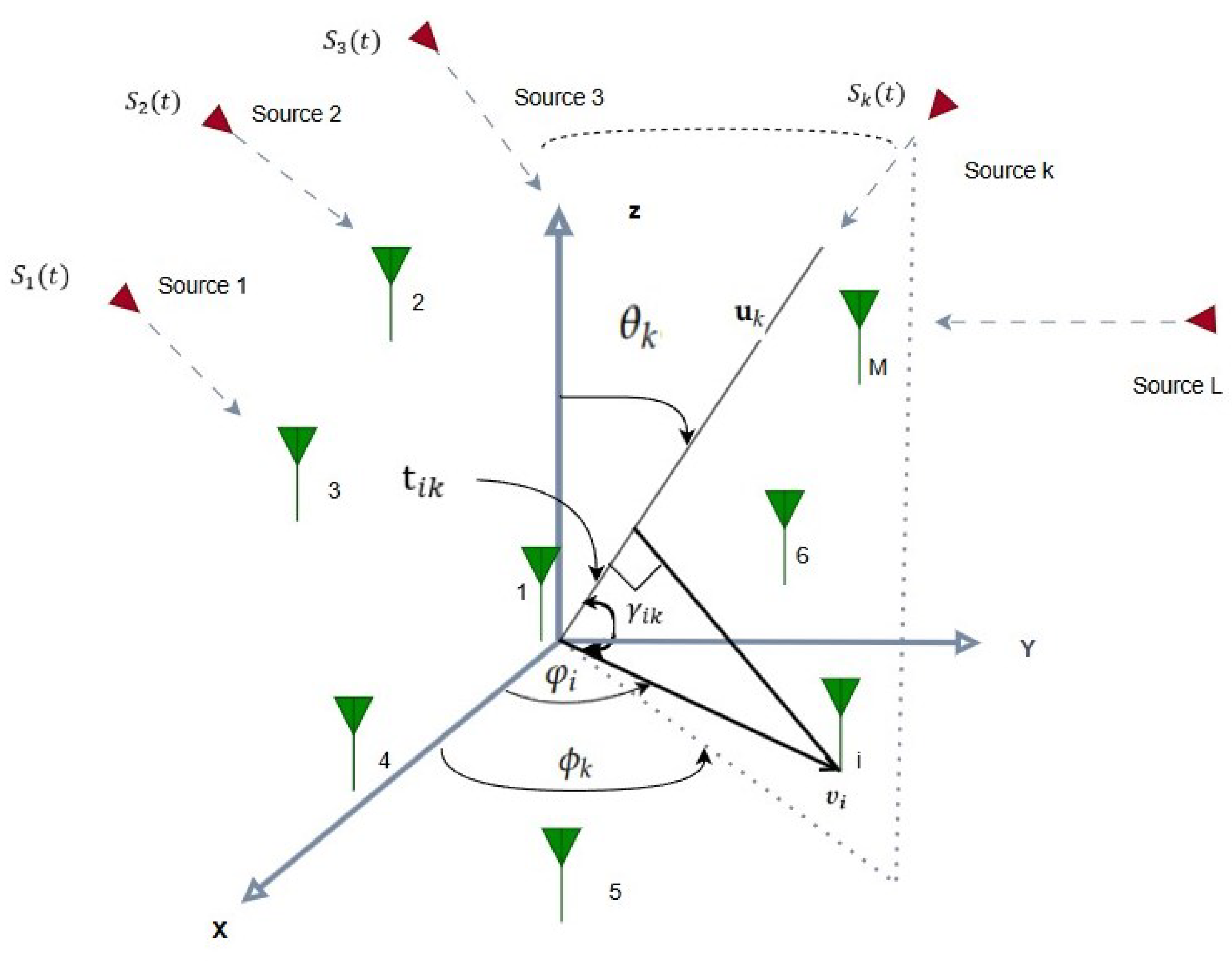

2. An Arbitrary Array-Based DoA Estimation Model

A receiving antenna array composed of $M$ omnidirectional elements collects narrowband signals transmitted by $L$ far-field sources, as illustrated in Figure 1. The signals impinging on the array from random directions are denoted by $s_i(t)$, $i = 1, \ldots, L$, with directions $(\theta_i, \phi_i)$, where $\theta_i$ and $\phi_i$ represent the elevation and azimuth angles of the $i$-th signal, respectively. To simplify the subsequent processing, the array outputs are first down-converted to baseband and then processed digitally to estimate the DoA. The complete received signal vector, comprising the contributions of the incoming sources and additive Gaussian noise, is represented as follows:

$$\mathbf{x}(t) = \sum_{k=1}^{L} \mathbf{a}(\theta_k, \phi_k)\, s_k(t) + \mathbf{n}(t) = \mathbf{A}\,\mathbf{s}(t) + \mathbf{n}(t), \tag{1}$$

where $\mathbf{s}(t)$ is the modulated signal vector, $\mathbf{n}(t)$ represents the additive white Gaussian noise associated with each channel, and each steering vector $\mathbf{a}(\theta_k, \phi_k)$ denotes the spatial response of the array to the $k$-th incident signal arriving from the direction $(\theta_k, \phi_k)$. These vectors collectively constitute the array manifold matrix $\mathbf{A}$, which contains the steering vectors associated with the $L$ incoming sources:

$$\mathbf{A} = \left[\mathbf{a}(\theta_1, \phi_1), \mathbf{a}(\theta_2, \phi_2), \ldots, \mathbf{a}(\theta_L, \phi_L)\right]. \tag{2}$$

To adopt the arbitrary array configuration shown in

Figure 1, we need to derive the steering vector for the

kth plane wave incident on this array. i.e.,

. A unit vector

containing both

and

can be defined according to the established spatial modeling protocols in 3GPP TR 38.901 [

41] as Equation (

3). Here, the unit vectors for Cartesian co-ordinates are described by

,

, and

.

Next, we must establish a new unit vector

as is expressed in (4) in order to compute the distance between the positions of other elements and the reference element (i.e., element 1, see

Figure 1):

Here,

denotes the radial distance from the

z-axis;

represents the azimuthal angle in the

x–

y plane;

indicates the vertical height of the

i-th sensor element; and

,

,

are the unit vectors aligned with the

x,

y, and

z axes, respectively. The angles between the unit vectors for the

i-th sensor with respect to the reference element

and

can then be computed as follows, where the Euclidean norm is shown by

:

The entire set of

, which represents the angular relationships between

M elements and

L sources, is shown in Equation (

6) and is represented by the matrix

.

The difference in distance is used to calculate the relevant time delay associated with each

in the following manner:

Likewise, the entire set of

can be represented as follows using an

matrix:

Finally, the angular phase difference

can be calculated by multiplying

and the propagation constant

as is expressed in Equation (

9), where

is the signal wavelength.

The total

that correlate to

are shown as follows, just like in Equations (

6) and (

8). In this case, the

M sensor elements are represented by the row index

, while the

L arriving sources are represented by the column index

. The angular phase difference between the

i-th sensor and the

k-th source is represented by each entry

:

Both azimuth and elevation angles are embedded in

. Accordingly, the array steering vector corresponding to the

k-th impinging signal can be expressed, following the 3GPP spatial channel modeling framework [

41], as shown in Equation (

11):
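The geometric construction in this section can be illustrated with a short numerical sketch. It is not a reproduction of Equations (3)–(11); it uses the common far-field form in which the phase of each element is the propagation constant $2\pi/\lambda$ multiplied by the path difference to the reference element. The function names, the convention that $\theta$ is measured from the z-axis, and the example geometry are assumptions made for illustration.

```python
import numpy as np

def direction_unit_vector(theta_deg, phi_deg):
    """Unit vector toward a far-field source.

    Assumption: theta is measured from the z-axis and phi in the x-y plane.
    """
    th, ph = np.deg2rad(theta_deg), np.deg2rad(phi_deg)
    return np.array([np.sin(th) * np.cos(ph),
                     np.sin(th) * np.sin(ph),
                     np.cos(th)])

def steering_vector(positions, theta_deg, phi_deg, wavelength):
    """Steering vector for arbitrary element positions.

    positions: (M, 3) Cartesian coordinates; element 0 is the phase reference.
    """
    u = direction_unit_vector(theta_deg, phi_deg)
    rel = positions - positions[0]        # offsets from the reference element
    path_diff = rel @ u                   # path difference along the arrival direction
    beta = 2 * np.pi / wavelength         # propagation constant
    return np.exp(1j * beta * path_diff)  # one unit-modulus phase term per element

# Hypothetical example: 4-element ULA along x with half-wavelength spacing
wavelength = 1.0
positions = np.zeros((4, 3))
positions[:, 0] = 0.5 * wavelength * np.arange(4)
a = steering_vector(positions, theta_deg=90.0, phi_deg=0.0, wavelength=wavelength)
```

Because only the element coordinates enter the computation, the same function covers ULA, URA, and irregular layouts by changing `positions`.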

3. Construction of the Projection Matrix

In order to obtain interpretable data summarization, the CSS problem has been used in numerous real-world applications [42]. The estimation accuracy is considerably influenced by the sampling process employed to extract specific columns from the original matrix. Consequently, an effective sampling strategy is essential for constructing a reduced-dimension matrix to enhance DoA estimation performance. To examine this issue and revisit the most pertinent concepts, consider an antenna array of $M$ elements used to collect incident signals over $N$ snapshots. The resulting data matrix can be written as follows:

$$\mathbf{X} = \left[\mathbf{x}(t_1), \mathbf{x}(t_2), \ldots, \mathbf{x}(t_N)\right] \in \mathbb{C}^{M \times N}.$$

The expected value of the outer product of the received signal vector defines the true CM of the received signal, $\mathbf{R}_{xx}$:

$$\mathbf{R}_{xx} = E\left[\mathbf{x}(t)\,\mathbf{x}^{H}(t)\right] = \mathbf{A}\,\mathbf{R}_{ss}\,\mathbf{A}^{H} + \sigma_n^{2}\,\mathbf{I}_M.$$

Here, $\mathbf{R}_{ss}$ is the source covariance matrix of size $L \times L$, $\mathbf{I}_M$ is the identity matrix of size $M \times M$, and $\sigma_n^{2}$ is the variance of the noise. The expectation operator is denoted by $E[\cdot]$ and the Hermitian (conjugate) transpose by $(\cdot)^{H}$. The signal and noise components are assumed to be independent in this formulation.

Since noise and signals are uncorrelated [42], the right-hand side equality in Equation (14) holds. In practice, the CM is estimated as a sample average over the $N$ snapshots of the $M \times N$ data matrix $\mathbf{X}$:

$$\hat{\mathbf{R}}_{xx} = \frac{1}{N}\,\mathbf{X}\,\mathbf{X}^{H}.$$

Each element $[\hat{\mathbf{R}}_{xx}]_{ij}$ of the estimated covariance matrix records the spatial correlation between the signals received at the $i$-th and $j$-th antenna elements. Array signal processing makes extensive use of this statistical representation to estimate the spatial characteristics of the incoming wavefronts. To tackle the dimensionality issue, numerous structured and randomized sampling techniques have been suggested to create a lower-dimensional projection matrix from $L$ columns selected from the estimated CM. In CS, US, and NUS, this sampling is generally implemented using fixed or randomly distributed indices.
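As a minimal sketch of the sample-average estimate described above, the snippet below forms $(1/N)\,\mathbf{X}\mathbf{X}^{H}$ from a snapshot matrix. The random data stand in for real array snapshots, and the function name is illustrative.

```python
import numpy as np

def sample_covariance(X):
    """Sample covariance (1/N) X X^H of an (M, N) snapshot matrix."""
    N = X.shape[1]
    return (X @ X.conj().T) / N

# Placeholder snapshots: M = 6 sensors, N = 200 snapshots of complex Gaussian data
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 200)) + 1j * rng.standard_normal((6, 200))
R_hat = sample_covariance(X)
```

The result is Hermitian and positive semidefinite by construction, which is what allows the eigen-decomposition used later to return real, non-negative eigenvalues.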

3.1. Sampling Methodologies for Projection Matrix Construction

The three most common sampling methodologies used to construct a PM from a CM are Classical Sampling, Uniform Sampling, and Non-Uniform Sampling. It is important to note that each methodology has its own advantages and challenges, which impact the overall performance of the DoA estimation process.

3.1.1. Classical Sampling Methodology (CSM)

The simplest and most natural method for creating the PM from the CM is the classical sampling method. This method, which was first presented in earlier research [31,32], chooses the first $L$ columns of the sample CM $\hat{\mathbf{R}}_{xx}$ and arranges them into a matrix $\mathbf{Q}_{\mathrm{Class}}$ as follows:

$$\mathbf{Q}_{\mathrm{Class}} = \left[\hat{\mathbf{r}}_1, \hat{\mathbf{r}}_2, \ldots, \hat{\mathbf{r}}_L\right] \in \mathbb{C}^{M \times L},$$

where each $\hat{\mathbf{r}}_i$, for $i = 1, \ldots, L$, corresponds to the $i$-th column of the sample CM $\hat{\mathbf{R}}_{xx}$.

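In this case, the matrix $\mathbf{Q}_{\mathrm{Class}}$ is built so that its columns span approximately the same subspace as the actual signal components. The dominant subspace properties of the sampled matrix are preserved by this design assumption. The PM based on the classical technique is then created by projecting onto the orthogonal complement of the subspace spanned by $\mathbf{Q}_{\mathrm{Class}}$:

$$\mathbf{P}_{\mathrm{Class}} = \mathbf{I}_M - \mathbf{Q}_{\mathrm{Class}}\left(\mathbf{Q}_{\mathrm{Class}}^{H}\,\mathbf{Q}_{\mathrm{Class}}\right)^{-1}\mathbf{Q}_{\mathrm{Class}}^{H}.$$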

In the spatial spectrum, the clear peak locations represent the directions from which the signals arrive, which are obtained using Equation (17).
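The classical sampling procedure can be sketched end to end as follows. This is an illustration under stated assumptions rather than the paper's implementation: it uses a half-wavelength ULA, simulated unit-power sources, the standard orthogonal-complement projector $\mathbf{I} - \mathbf{Q}(\mathbf{Q}^{H}\mathbf{Q})^{-1}\mathbf{Q}^{H}$, and a spectrum of the form $1/(\mathbf{a}^{H}\mathbf{P}\mathbf{a})$. All function names and parameter values are hypothetical.

```python
import numpy as np

def ula_steering(theta_deg, M, spacing=0.5):
    """Steering matrix of an M-element ULA (spacing in wavelengths, assumed 0.5)."""
    theta = np.deg2rad(np.atleast_1d(theta_deg))
    m = np.arange(M)[:, None]
    return np.exp(2j * np.pi * spacing * m * np.sin(theta)[None, :])

def projection_from_columns(R, cols):
    """Projector onto the orthogonal complement of span(R[:, cols])."""
    Q = R[:, cols]
    G = np.linalg.solve(Q.conj().T @ Q, Q.conj().T)   # (Q^H Q)^{-1} Q^H
    return np.eye(R.shape[0]) - Q @ G

def spatial_spectrum(P, grid_deg):
    """Evaluate 1 / (a^H P a) on a grid of candidate angles."""
    A = ula_steering(grid_deg, P.shape[0])
    denom = np.real(np.einsum("mg,mn,ng->g", A.conj(), P, A))
    return 1.0 / np.maximum(denom, 1e-12)

def top_peaks(spec, grid_deg, L):
    """Angles of the L largest local maxima of the spectrum."""
    idx = np.where((spec[1:-1] > spec[:-2]) & (spec[1:-1] >= spec[2:]))[0] + 1
    best = idx[np.argsort(spec[idx])[-L:]]
    return np.sort(grid_deg[best])

# Simulated scenario (assumed values): 8 sensors, 2 sources, 500 snapshots
rng = np.random.default_rng(1)
M, L, N = 8, 2, 500
true_doas = np.array([-20.0, 30.0])
A = ula_steering(true_doas, M)
S = (rng.standard_normal((L, N)) + 1j * rng.standard_normal((L, N))) / np.sqrt(2)
W = 0.03 * (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
X = A @ S + W
R = X @ X.conj().T / N

P = projection_from_columns(R, np.arange(L))   # classical choice: first L columns
grid = np.arange(-90.0, 90.5, 0.5)
est = top_peaks(spatial_spectrum(P, grid), grid, L)
```

Passing a different index array to `projection_from_columns` is enough to try other sampling rules with the same spectrum search.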

As shown in Figure 2, the CM is sampled using the classical method to construct a sampled matrix. For all the methodologies considered, we plot the positions of the selected columns (x-axis) against the normalized column norm (y-axis), which directly represents the correlation levels between incident signals within each column. The main advantage of this approach is that it needs no complex computations or algorithms. However, this method has significant drawbacks. The classical selection technique takes the first L columns from the CM without assessing their structural importance or intercorrelations. This fixed selection diminishes the DoF and leads to inefficient utilization of the array aperture. Furthermore, because inter-column correlation is not considered, the selected columns frequently exhibit significant linear dependence. This redundancy can result in the sampled matrix inadequately representing the true signal subspace, hence significantly impairing the accuracy of DoA estimation [31].

3.1.2. Uniform Sampling Methodology (USM)

USM was developed to overcome some limitations of classical sampling, particularly to improve the DoF. Instead of simply selecting the first L columns, USM spreads the selected columns more evenly across the CM [29]. Their positions are determined by a predetermined formula:

In Figure 3, the sampled matrix generated using the USM is depicted. While USM improves the DoF compared to conventional methods by distributing the selected columns more evenly across the array aperture, it still has notable limitations. Specifically, the locations of the selected columns remain non-adaptive and are predefined by the system, preventing the method from dynamically adjusting to the characteristics of the CM. Additionally, similar to the classical method, USM continues to select columns with high correlation coefficients (CCs), which can impair the effectiveness of the DoA estimation. Despite the increased DoF, these issues, particularly the persistent high correlation between columns, limit the potential improvements in estimation performance.

3.1.3. Non-Uniform Sampling Methodology (NUSM)

NUSM is an advanced approach developed to enhance the performance of DoA estimation by focusing on the total energy of the signal eigenvalues. Unlike classical and uniform sampling methods that rely on predefined or systematic column selection from the CM, NUSM randomly selects columns using principles from random matrix theory and low-rank matrix approximation techniques [30,43]. This random selection process maintains the essential characteristics of the original matrix while effectively reducing its dimensionality, which helps preserve the signal's integrity.

One of the key advantages of NUSM is its ability to improve noise immunity. By randomly distributing the selected columns, the total energy of the signal eigenvalues is increased, leading to a more robust DoA estimation process. This enhancement in signal energy directly contributes to improved performance in noisy environments, making the estimation algorithm more resilient against interference. While NUSM's column locations are dynamic, the issue of picking high-CC columns remains unresolved. This is an important issue for the classical method and USM, as well as NUSM. Once the sampled matrix $\mathbf{Q}_{\mathrm{NUSM}}$ is created, the algorithm follows the conventional technique by replacing $\mathbf{Q}_{\mathrm{Class}}$ with $\mathbf{Q}_{\mathrm{NUSM}}$ and estimating DoAs using Equations (16) and (17).

Figure 4 demonstrates that the $\mathbf{Q}_{\mathrm{NUSM}}$ matrix has L randomly extracted columns from the CM. The NUS methodology selects columns without using any criterion, such as high or low correlation between signals (see Figure 4).

However, despite these improvements, NUSM still struggles with correlation issues. The random nature of column selection does not guarantee that columns with low CCs will be chosen. As a result, some selected columns may still have high correlations, as shown in [43], which can have a negative impact on the accuracy and reliability of DoA estimation. This persistent issue indicates that while NUSM advances beyond classical methods, further refinement is needed to address the correlation problem effectively.
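The three sampling rules differ only in how the column indices are chosen, which the following sketch makes explicit. The evenly spaced rule below is an assumption (rounded `linspace` positions), since the exact USM position formula is not reproduced here; the random rule simply draws indices without replacement. Indices are zero-based and all names are illustrative.

```python
import numpy as np

def classical_indices(M, L):
    """Classical sampling: the first L columns."""
    return np.arange(L)

def uniform_indices(M, L):
    """Evenly spread columns (assumed rule: rounded, equally spaced positions)."""
    return np.round(np.linspace(0, M - 1, L)).astype(int)

def random_indices(M, L, rng):
    """Non-uniform sampling: L distinct columns drawn at random."""
    return np.sort(rng.choice(M, size=L, replace=False))

M, L = 10, 3
rng = np.random.default_rng(0)
cs = classical_indices(M, L)
us = uniform_indices(M, L)
nus = random_indices(M, L, rng)
```

None of these rules inspects the matrix itself, which is the shortcoming that the correlation-aware selection in Section 4 is intended to address.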

4. Reduced Angle Subspace Algorithm (RASA)

According to the descriptions and conceptual representations in Figure 2, Figure 3 and Figure 4 of Section 3, a primary problem in DoA estimation is the heightened computational complexity and the correlation among the columns derived from the sample covariance matrix.

These problems frequently result in a rank-deficient sampled matrix, which restricts the accuracy and stability of the estimation process. RASA is proposed in this paper as a solution to this issue: it chooses the least redundant and most decorrelated columns from the noise subspace. This selection procedure also minimizes computational cost and reduces the dimension of the subspace, which makes the approach appropriate for real-time or resource-constrained contexts. Furthermore, by concentrating on lowering inter-column correlation, RASA improves the robustness of the estimation in the presence of partially coherent signals, which frequently impair the performance of conventional subspace approaches. In general, conventional methods such as CSM, USM, NUSM, Proposed LCCS, Proposed SS, and Real Proposed do not comprehensively address inter-column correlation and numerical conditioning, often leading to degraded performance under coherent or low-SNR conditions [29,30,31,32].

4.1. Correlation-Aware Column Selection in RASA

The RASA method uses a selective column sampling approach to reduce rank deficiency and redundancy in sampled subspace matrices. This approach is founded on correlation-aware selection principles. The fundamental objective is to ensure that each selected column from the noise subspace offers unique information about the directions of incoming signals with minimum correlation. The technique begins by calculating the sample covariance matrix $\hat{\mathbf{R}}_{xx}$ from the received signal matrix $\mathbf{X} \in \mathbb{C}^{M \times N}$, where $M$ denotes the number of sensors and $N$ represents the number of snapshots:

$$\hat{\mathbf{R}}_{xx} = \frac{1}{N}\,\mathbf{X}\,\mathbf{X}^{H}. \tag{19}$$

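The next stage performs eigen-decomposition of the estimated covariance matrix $\hat{\mathbf{R}}_{xx}$ to obtain its eigenvalues and eigenvectors, which are then used to construct the signal and noise subspaces:

$$\hat{\mathbf{R}}_{xx} = \mathbf{E}\,\boldsymbol{\Lambda}\,\mathbf{E}^{H}, \tag{20}$$

where $\boldsymbol{\Lambda} = \mathrm{diag}(\lambda_1, \ldots, \lambda_M)$ is the diagonal matrix of eigenvalues, arranged in descending order, and $\mathbf{E} = [\mathbf{e}_1, \ldots, \mathbf{e}_M]$ is the eigenvector matrix.

From this decomposition, we obtain the noise subspace matrix $\mathbf{E}_n$, comprising the eigenvectors corresponding to the smallest $M - L$ eigenvalues:

$$\mathbf{E}_n = \left[\mathbf{e}_{L+1}, \mathbf{e}_{L+2}, \ldots, \mathbf{e}_M\right] \in \mathbb{C}^{M \times (M-L)}. \tag{21}$$

Here, $\mathbf{e}_{L+1}, \ldots, \mathbf{e}_M$ are the eigenvectors of the covariance matrix associated with the smallest eigenvalues, together constituting the noise subspace matrix $\mathbf{E}_n$.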
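The decomposition step can be sketched with NumPy's Hermitian eigen-solver. `numpy.linalg.eigh` returns eigenvalues in ascending order, so they are reordered to match the descending convention used in the text. The test matrix below is a hypothetical noise-free signal part plus a scaled identity, chosen so that the noise subspace is exactly orthogonal to the signal directions.

```python
import numpy as np

def split_subspaces(R, L):
    """Return eigenvalues (descending), signal subspace, and noise subspace of Hermitian R."""
    w, E = np.linalg.eigh(R)            # ascending eigenvalues, orthonormal eigenvectors
    order = np.argsort(w)[::-1]         # reorder to descending
    w, E = w[order], E[:, order]
    return w, E[:, :L], E[:, L:]        # first L columns: signal; remaining M-L: noise

# Hypothetical covariance: rank-L signal part plus white noise
rng = np.random.default_rng(0)
M, L = 6, 2
A = rng.standard_normal((M, L)) + 1j * rng.standard_normal((M, L))
R = A @ A.conj().T + 0.1 * np.eye(M)
eigvals, Es, En = split_subspaces(R, L)
```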

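To achieve effective decorrelation and preserve orthogonality across subspace components, the correlation matrix $\mathbf{C}$ is calculated using the Pearson correlation coefficient applied to the real-valued part of the noise subspace matrix $\mathbf{E}_n$. This is expressed as:

$$\mathbf{C} = \mathrm{corr}\left(\Re\{\mathbf{E}_n\}\right), \qquad [\mathbf{C}]_{ij} = \frac{\sum_{m}(u_{mi} - \bar{u}_i)(u_{mj} - \bar{u}_j)}{\sqrt{\sum_{m}(u_{mi} - \bar{u}_i)^2}\,\sqrt{\sum_{m}(u_{mj} - \bar{u}_j)^2}}, \tag{22}$$

where $u_{mi}$ is the $(m, i)$ entry of $\Re\{\mathbf{E}_n\}$ and $\bar{u}_i$ is the mean of its $i$-th column.

In Equation (22), only the real component of $\mathbf{E}_n$ is used, as the Pearson correlation coefficient is defined for real-valued variables, and its direct application to complex entries may produce ambiguous outcomes. Restricting the procedure to $\Re\{\mathbf{E}_n\}$ yields bounded and interpretable correlation values, while empirical studies validated that this choice maintains the fundamental inter-column structure without compromising subspace information.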
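A minimal sketch of this step uses `numpy.corrcoef` with `rowvar=False`, so that each column of the real part is treated as one variable. The random matrix is only a stand-in for an actual noise subspace.

```python
import numpy as np

def column_correlation(En):
    """Pearson correlation matrix between the columns of Re{En}."""
    # rowvar=False: columns are variables, rows are observations
    return np.corrcoef(En.real, rowvar=False)

# Placeholder noise subspace: 8 sensors, 5 noise eigenvectors
rng = np.random.default_rng(0)
En = rng.standard_normal((8, 5)) + 1j * rng.standard_normal((8, 5))
C = column_correlation(En)
```

One practical caveat: a column whose real part is constant has zero variance, and `numpy.corrcoef` then returns NaN for the affected entries, so such columns would need separate handling.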

To measure the overall correlation strength of each column

in

, we assess its

-norm, which indicates the total correlation of column

i with the remaining subspace. This is mathematically defined as:

The RASA algorithm uses this metric to support robust subspace selection by identifying and ranking columns with low redundancy. It gives a scalar measure of the correlation density for each column: less redundant and more decorrelated columns have a lower norm.

To refine the selection process further, the column norms are normalized as follows:

$$\tilde{\eta}_i = \frac{\eta_i}{\max_{k}\,\eta_k}. \tag{24}$$

The normalization in Equation (24) rescales $\eta_i$ by dividing it by the maximum of all $\eta_k$ values, where $k$ runs over the set of columns. Here, $\eta_i$ is a scalar metric (e.g., norm or correlation) for the $i$-th column, and $\max_k \eta_k$ is the maximum value of all such metrics. The normalized score $\tilde{\eta}_i$ falls inside the range $[0, 1]$, enabling fair comparison and ranking across all columns.
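The scoring and normalization can be sketched as below. Two choices here are assumptions: the norm order (the Euclidean norm is used by default, exposed as the hypothetical parameter `p`) and the inclusion of the diagonal self-correlation entry in each column norm. The small correlation matrix is a hand-made example.

```python
import numpy as np

def normalized_correlation_norms(C, p=2):
    """Column-wise norms of a correlation matrix, scaled so the largest equals 1."""
    eta = np.linalg.norm(C, ord=p, axis=0)   # one norm per column (assumed order p)
    return eta / eta.max()

# Hand-made example: columns 0 and 1 are correlated, column 2 is uncorrelated
C = np.array([[1.0, 0.5, 0.0],
              [0.5, 1.0, 0.0],
              [0.0, 0.0, 1.0]])
scores = normalized_correlation_norms(C)
```

In this example the third column receives the lowest score, reflecting that it is the least correlated with the others.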

The first and last columns are not taken into account during ranking in order to reduce boundary effects. Therefore, the candidate subset is defined as follows:

$$\mathcal{I} = \{2, 3, \ldots, M - L - 1\}. \tag{25}$$

The index set of candidate columns, $\mathcal{I}$, ranges from the second to the $(M - L - 1)$-th column of the noise subspace, eliminating border columns to avoid edge effects. The normalized norms of this subset are then extracted:

$$\mathcal{N} = \{\tilde{\eta}_i : i \in \mathcal{I}\}, \tag{26}$$

where $\tilde{\eta}_i$ denotes the normalized norm of the $i$-th column. The set $\mathcal{N}$ contains the scalar values of the candidate column indices under evaluation. The reduced set of minimally correlated interior columns is formed by sorting $\mathcal{N}$ in ascending order and selecting the smallest values for inclusion in the final subspace.

The RASA algorithm selects the following subspaces in order to construct a spatially informative and low-redundancy subspace:

The initial and final columns.

The columns from the interior subset that have the smallest norms.

The following subset is extracted:

In the end, the following set of indices was selected:

In order to maintain aperture diversity, prevent degenerate subspaces, and guarantee that no crucial information of

is lost, the first and last columns in Equation (

28) are deterministically included to make up for their previous exclusion during ranking. As a result of these indices, we are able to construct the reduced noise subspace matrix according to Equation (

29).
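The selection rule described above (first column, last column, and the interior columns with the smallest scores) can be sketched as follows. The number of interior columns to keep is exposed as the hypothetical parameter `n_interior`, since its value is not fixed by the surrounding text; indices are zero-based.

```python
import numpy as np

def select_column_indices(scores, n_interior):
    """Indices of the first column, the n_interior lowest-score interior columns, and the last column."""
    K = scores.size
    interior = np.arange(1, K - 1)                           # exclude both border columns
    ranked = interior[np.argsort(scores[interior], kind="stable")]
    chosen = np.sort(ranked[:n_interior])                    # keep original column order
    return np.concatenate(([0], chosen, [K - 1]))

# Hand-made normalized scores for six noise-subspace columns
scores = np.array([0.9, 0.4, 0.8, 0.2, 0.6, 1.0])
idx = select_column_indices(scores, n_interior=2)

# The reduced noise subspace would then be formed as En[:, idx]
```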

This condensed subspace enhances the DoA estimation performance under high noise and signal coherence while preserving the most informative, minimally correlated elements of the noise subspace.

Figure 5 shows that the signals are least associated with unselected columns. The suggested method uses columns with the lowest CCs (represented by column norm) to generate the sampling matrix, as depicted in the figure [44].

Even though the norm-based approach described above selects minimally correlated columns from the noise subspace, a further refinement is necessary to enforce structural decorrelation and guarantee representation uniqueness. The following part addresses this by introducing a structured anti-correlated subspace approximation approach that uses normalized correlation metrics to systematically analyze the pairwise inter-column dependencies.

4.2. Reduced and Structured Anticorrelated Subspace Approximation RASA Methodology

This section introduces a systematic modification to column selection within the noise subspace to retain array aperture and increase subspace decorrelation. By using column norms to identify minimally correlated columns, the suggested method ensures that every chosen component conveys unique signal information with the least amount of redundancy. A more robust and informative subspace representation is made possible by measuring inter-column dependency inside the sampled noise matrix.

This strategy uses CM-based selection, where normalized correlation norms measure the similarity of each column to all others. Lower correlation norms suggest more independent columns, improving DoA resolution in low SNR or coherent signal situations. In practice, the least dependent columns can be found by calculating the CM of the noise subspace matrix and choosing the columns with the smallest normalized correlation norms. The norm of each column represents the correlation between that column and every other column. Lower norm values indicate weaker inter-sensor correlation because the CC is a unitless measure formed from the off-diagonal CM entries. As a result, a column is chosen only when it shows little correlation with the other columns in the matrix.

This procedure ensures that the chosen columns retain subspace uniqueness by reducing the correlation between

and

. Since each column in the sampled matrix represents a different signal direction, RASA satisfies the unique representation property [

37].

The RASA algorithm chooses the remaining columns from the intermediate set according to their decorrelation efficiency, always including the initial and last columns to maintain aperture and edge variety. The expression for the resulting submatrix is expressed in Equation (

30). In this case,

c represents the column index set defined by:

To eliminate boundary bias, represents all intermediate column indices except the first and last. The matrix is created by picking a subset of columns from the noise subspace matrix, where represents the j-th column vector of . The RASA criterion identified as the least correlated columns. The whole set of indices, encompassing the unselected columns denoted by and the selected columns denoted by , is specifically represented by the letter c. These subsets of C have the properties and . Finding the intermediate columns once the first and last are fixed is the primary challenge.

When choosing columns, RASA expressly minimizes the correlation between signals obtained at various sensor elements, in contrast to uniform [

29] or random [

30] selection procedures. In particular, it selects

to minimize

.

It is quantified by utilizing the

-norm of each column of CM to quantify the total signal correlation. In order to select the most decorrelated columns, the algorithm uses the following criteria:

In this case,

is a generic column vector from the noise subspace matrix

. The index

s represents a selected column with minimal

norm, while

u represents an unselected column with maximal norm. The vectors

and

indicate the energy level of the most and least dominant columns in their subsets, and the

of each CM column is indicated by

. The lowest and highest norm values are returned by the min and max functions in turn. Equation (

31) is another way to restate this rule. Here,

returns the relevant indices, and

and

yield the norm set’s smallest

and greatest

values, respectively. The function

returns a row vector

B that contains the

k smallest elements from the array

A. In the context of our method, we define

, where

. Similarly, the

function can be used to retrieve the

k largest elements from the same set

.

The sample matrix

is created using deterministic and decorrelated columns, selected using Equations (

31) and (

32). The first column with the lowest

-norm is picked, followed by the

least correlated columns from the remaining contenders. Finally, the final column in

has the highest

-norm. The selected subspace has maximum angular resolution and the least redundancy with this hierarchical design.
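The selection rule described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the norm is taken to be the l2-norm, the correlation between two columns is taken to be the magnitude of their normalized inner product, all columns are assumed to be nonzero, and the function names `column_correlation_norms` and `select_columns` are hypothetical.

```python
import math


def column_correlation_norms(columns):
    """Return, for each column, the l2-norm of its correlations with all others.

    `columns` is a list of equal-length vectors (real or complex numbers).
    Assumption: correlation = |<ci, cj>| / (||ci|| * ||cj||), off-diagonal only.
    """
    def inner(u, v):
        return sum(a.conjugate() * b for a, b in zip(u, v))

    energy = [math.sqrt(abs(inner(c, c))) for c in columns]
    norms = []
    for i, ci in enumerate(columns):
        total = 0.0
        for j, cj in enumerate(columns):
            if i != j:  # skip the diagonal entry (self-correlation)
                rho = abs(inner(ci, cj)) / (energy[i] * energy[j])
                total += rho ** 2
        norms.append(math.sqrt(total))
    return norms


def select_columns(columns, n_keep):
    """Keep the first and last columns plus the least-correlated intermediates."""
    last = len(columns) - 1
    norms = column_correlation_norms(columns)
    # Rank only the intermediate indices; the boundary columns are always kept.
    middle = sorted(range(1, last), key=lambda j: norms[j])[: n_keep - 2]
    return sorted([0, last] + middle)


# Toy example: column 2 is orthogonal to every other column.
cols = [[1, 0, 0], [1, 1, 0], [0, 0, 1], [1, 0.9, 0], [0, 1, 0]]
print(select_columns(cols, 3))
```

Fixing the boundary indices before ranking mirrors the text: only the intermediate columns compete on correlation norm, so the aperture-defining columns cannot be dropped.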

The proposed RASA sampling method has two primary benefits. First, by purposefully including the first and last columns in the constructed matrix, it maximizes the array aperture. Because of this design, RASA-based DoA estimation can efficiently capture signals arriving from extreme angular directions at the array edge. Second, it reduces the correlation between adjacent columns of the sampled matrix. This feature is crucial for resolving multiple signal directions because it ensures that the chosen subspace columns carry non-redundant spatial information. By using decorrelated and spatially diverse subspace components, RASA improves angular resolution beyond what current subspace sampling techniques achieve.

4.3. Theoretical Evaluation of Subspace Structure Through Adjacent Column Analysis Based on Correlation

The Pearson Product Moment Correlation Coefficient (PPMCC) is used as the starting point of the analysis to quantify the strength of the correlation between adjacent columns. The PPMCC, represented by

, can be used to characterize the relationship between neighboring columns within

Z, such as

and

, and can be calculated using Equation (

33).

The mean values of

and

are represented by

and

, respectively, and are calculated as follows:

In this case,

represents the likelihood of choosing the

n-th column. The symbols

and

represent the mean-adjusted values of

and

, respectively. Therefore, another way to represent

is as follows:

By the Cauchy–Schwarz inequality, the following holds:

Thus, it follows logically that the PPMCC range is

. The value

is a normalized measure of the degree of linear relationship between

and

[

45]. A magnitude of 1 represents perfect correlation, that is, total redundancy, whereas a value of 0 represents no linear correlation, meaning that the two vectors carry non-redundant information. In RASA,

is reduced to as close to zero as possible.
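As a concrete illustration of the coefficient discussed above, the following sketch computes a weighted Pearson correlation between two column vectors. It assumes real-valued entries and, unless weights are supplied, uniform selection probabilities; the function name `ppmcc` and its `probs` argument are hypothetical and are not taken from the paper.

```python
import math


def ppmcc(x, y, probs=None):
    """Weighted Pearson correlation between two real-valued vectors.

    `probs` holds the weight of each entry; uniform weights are assumed
    when it is omitted. Both vectors must have nonzero variance.
    """
    n = len(x)
    if probs is None:
        probs = [1.0 / n] * n
    mean_x = sum(p * a for p, a in zip(probs, x))
    mean_y = sum(p * b for p, b in zip(probs, y))
    # Mean-adjusted products give the covariance and the two variances.
    cov = sum(p * (a - mean_x) * (b - mean_y) for p, a, b in zip(probs, x, y))
    var_x = sum(p * (a - mean_x) ** 2 for p, a in zip(probs, x))
    var_y = sum(p * (b - mean_y) ** 2 for p, b in zip(probs, y))
    return cov / math.sqrt(var_x * var_y)


print(ppmcc([1, 2, 3], [2, 4, 6]))         # fully redundant columns
print(ppmcc([1, 2, 3, 4], [1, -1, -1, 1]))  # linearly uncorrelated columns
```

By the Cauchy–Schwarz inequality the returned magnitude never exceeds 1, so the value can be compared directly across column pairs of different energy.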

To quantify inter-column correlation, the PPMCC was computed using Equations (

33) and (

35).

Table 1 reports the mean absolute adjacent-column correlations for each sampling strategy. Conventional methods (CSM, USM, NUSM) exhibited consistently high correlation values (

–

), indicating strong redundancy. LCCS reduced correlation substantially, while the RASA method achieved the lowest values (≈0.05–0.09), demonstrating superior decorrelation. To validate the proposed strategies, a uniform linear array (ULA) with

sensors was considered under coherent source conditions. The covariance matrix of size

was estimated using

snapshots at an SNR of 0 dB. To ensure statistical robustness,

Monte Carlo trials were conducted, with each trial selecting

columns.

To confirm the statistical robustness of RASA’s decorrelation improvement, paired trial values were subjected to the Wilcoxon signed-rank test [

46]. The difference between a baseline value

and the accompanying RASA value

for

N paired trials can be expressed as

. Equation (

36) provides the definition of the signed-rank statistic.

In this case,

T is the Wilcoxon statistic, and

and

are the sums of ranks of the positive and negative differences. For the signed-rank test, Equation (

36) ranks positive and negative paired differences.

The distribution of

T can be roughly represented by a normal distribution for large sample sizes (

trials). This enables the significance level to be directly calculated using a

z-score. Equation (

37) provides the typical approximation used in this work.
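The signed-rank procedure with its large-sample normal approximation can be sketched as follows. This is a generic textbook version written under assumptions: the statistic is taken as the smaller of the two rank sums, tied absolute differences receive average ranks, zero differences are discarded, no tie or continuity correction is applied to the variance, and at least one nonzero difference is assumed. The exact form of Equations (36) and (37) in the paper may differ, and the function name is hypothetical.

```python
import math


def wilcoxon_signed_rank(baseline, rasa):
    """Return (T, z, two-sided p) for paired samples via the normal approximation."""
    diffs = [b - r for b, r in zip(baseline, rasa) if b != r]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        # Group tied absolute differences and give them the average rank.
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / std
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return t, z, p


# Illustrative values only, not data from the paper.
t, z, p = wilcoxon_signed_rank([0.9, 0.8, 0.85, 0.95, 0.7],
                               [0.1, 0.05, 0.2, 0.3, 0.15])
print(t, z, p)
```

With only a handful of pairs, as in this toy call, the normal approximation is crude; it is intended for the large trial counts described in the text.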

Since RASA is evaluated against many baselines, the

p-values were adjusted using the Holm–Bonferroni procedure [

47]:

where the sorted raw

p-values from

m comparisons are indicated by

. The family-wise error rate is rigorously controlled by Equation (

38), avoiding inflated significance caused by multiple testing. The Wilcoxon signed-rank [

46] test results are summarized in

Table 2 to verify that these reductions are not due to chance. Relative to CSM, USM, and NUSM, RASA lowers redundancy, as indicated by the negative DeltaMedian values, while the positive DeltaMedian values against LCCS confirm a further improvement beyond the partial decorrelation that LCCS already achieves. The large effect sizes (

) and the fact that all

p-values are well below 0.05 show that the improvement is both practically and statistically meaningful. Together,

Table 1 and

Table 2 confirm that RASA generates decorrelated and structurally robust subspaces, which are essential for accurate DoA estimation.
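The Holm–Bonferroni adjustment used for the multiple comparisons above can be sketched as a step-down procedure. This is the standard textbook form, given here as an illustration; the function name `holm_adjust` is hypothetical and the input values are made up for the example.

```python
def holm_adjust(p_values):
    """Return Holm-Bonferroni adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Illustrative raw p-values for three baseline comparisons.
print(holm_adjust([0.01, 0.04, 0.03]))
```

A comparison is declared significant when its adjusted value falls below the chosen family-wise level, for example 0.05.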

As this dual descriptive–inferential evaluation (Table 1 and Table 2) shows, RASA consistently produces decorrelation improvements over the baseline approaches that are both statistically significant and practically meaningful. RASA serves as the reference technique in the Wilcoxon signed-rank analysis, so all differences are measured relative to it; Table 2 therefore has no separate row for RASA, because each baseline is compared directly with it.

4.4. Application of RASA for High-Resolution DoA Estimation

To improve spatial spectrum estimation and DoA performance, the reduced noise-subspace matrix with , formed by selecting the least-correlated columns from the estimated noise subspace , is inserted into the conventional MUSIC framework.

The steering vector for an angle

is given by:

In this case,

d represents the inter-element spacing (typically

),

represents the sensor index vector for a ULA, and

represents the angle search space, where

includes values like

. After that, the pseudo-spectrum of RASA-enhanced MUSIC is calculated as Equation (

40):

Next, the estimated directions of arrival are determined by:

This approach replaces the full MUSIC projector

with the reduced projector

, constructed from the least-correlated columns, offering the following advantages: sharper spectral peaks, enhanced angular resolution, reduced computational complexity due to subspace compression, and improved robustness in the presence of noise and coherent signals. This RASA implementation converts the conventional MUSIC framework into a high-resolution, low-complexity estimator suited to real-world situations involving array imperfections, redundancy, or interference. The algorithm steps are detailed in Algorithm 1.

Algorithm 1: Steps of the RASA Algorithm

Input: Received data at the output of an array composed of M antennas, with N snapshots and L incident signals

Output: Accurate DoA estimates

Step 1: Calculate the sample covariance matrix.

Step 2: Perform eigendecomposition of the sample covariance matrix to obtain the eigenvectors Q and the eigenvalues, and sort them in descending order of eigenvalue.

Step 3: Identify the noise subspace by selecting the eigenvectors corresponding to the smallest eigenvalues.

Step 4: Compute the correlation matrix C.

Step 5: Calculate the norm of each column of C, excluding the first and last columns.

Step 6: Sort the norms in ascending order and select the columns with the smallest norms.

Step 7: Construct the reduced noise subspace matrix by sampling the first column, the last column, and the selected columns from Step 6.

Step 8: Compute the MUSIC spectrum using the reduced noise subspace matrix.
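The final scanning step can be illustrated with a short sketch that evaluates a MUSIC-style pseudo-spectrum from a set of already selected noise-subspace columns. Several things here are assumptions rather than the paper's definitions: the ULA steering vector uses the phase convention exp(+j 2π d m sin θ) with spacing d in wavelengths, the spectrum is the reciprocal of the energy of the steering vector projected onto the selected columns, a small `eps` guards against division by zero, and the function names are hypothetical.

```python
import cmath
import math


def steering_vector(theta_deg, n_sensors, spacing=0.5):
    """ULA steering vector; `spacing` is in wavelengths (sign convention assumed)."""
    phase = 2.0 * math.pi * spacing * math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * phase * m) for m in range(n_sensors)]


def pseudo_spectrum(noise_columns, grid_deg, spacing=0.5, eps=1e-12):
    """Evaluate 1 / sum_k |z_k^H a(theta)|^2 over the angle grid."""
    n_sensors = len(noise_columns[0])
    spectrum = []
    for theta in grid_deg:
        a = steering_vector(theta, n_sensors, spacing)
        leak = sum(
            abs(sum(z.conjugate() * s for z, s in zip(col, a))) ** 2
            for col in noise_columns
        )
        spectrum.append(1.0 / (leak + eps))
    return spectrum


# Toy two-sensor case: one noise vector built to be orthogonal to a(30 degrees).
a0 = steering_vector(30.0, 2)
noise = [[1.0 + 0j, -a0[1]]]
grid = list(range(-90, 91))
spec = pseudo_spectrum(noise, grid)
print(grid[spec.index(max(spec))])
```

Because the loop runs over the selected columns only, the cost per grid point scales with the number of retained columns rather than with the full noise-subspace dimension, which is the source of the scan-time saving discussed later in the paper.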

5. Comparative Analysis of Direction of Arrival Estimation Algorithms

This section provides a comparative analysis of subspace-based DoA estimation algorithms, emphasizing their computational efficiency, robustness to noise, and spatial resolution. The algorithms considered include the Proposed LCCS, Proposed SS, USM, NUSM, CSM [

45,

48], and the RASA algorithm proposed in this paper.

Conventional CSM techniques employ the complete noise subspace or fixed portions of it without taking signal correlation into account, which often results in redundancy, poor decorrelation, and reduced resolution. USM and NUSM attempt to improve on this with uniform and non-uniform (random) sampling, respectively. While these methods increase aperture diversity, they frequently show weak robustness or instability in coherent or low-SNR settings [

29].

In addition, the reduced-complexity MUSIC technique avoids eigendecomposition through a real-valued transformation, making it applicable to all array types and reducing the processing load. The enhanced spatial smoothing method refines the covariance matrix using intra- and inter-subarray correlations to improve DoA estimation accuracy under low-SNR and limited-snapshot conditions. These methods are complementary and address distinct aspects of the DoA estimation trade space.

In order to strike a balance between computational efficiency and estimation performance, numerous sophisticated methodologies have been proposed. The RASA algorithm is one such approach: it combines the first and last columns with the least-correlated intermediate columns to select a reduced yet informative noise subspace. This correlation-aware selection of minimally correlated noise subspace vectors not only improves numerical conditioning but also reduces complexity.

Comparison of Execution Times and Computational Complexity

A comprehensive evaluation of the Standard MUSIC, RASA, Real Proposed, Proposed-LCCS, and Proposed SS algorithms was conducted to assess the computational efficiency of the proposed methods. Although USM and NUSM exhibit low to moderate complexity, they are excluded from the execution time comparison because RASA demonstrates substantially superior resolution performance. Therefore, the focus of this evaluation is to compare RASA with algorithms of comparable computational efficiency, such as the Real Proposed and Proposed SS methods, to provide an accurate assessment of execution times among these approaches. A series of experiments was conducted across five scenarios, varying the number of sensors (

M). The setup was as follows:

snapshots,

,

, and scanning angles

with

resolution. A total of

sources were placed at uniformly distributed directions between

and

, all with

. The number of sensors was varied as

. Execution times for the spectrum evaluation were measured with MATLAB R2024b

tic/toc. The inter-element spacing of the array was fixed at

wavelengths.

Figure 6 displays the execution times of each method, measured on an AMD Ryzen 7 4800H (2.9 GHz) processor.

A comparison of the execution times of five algorithms with different numbers of array sensors is shown in

Figure 6a,b. The measured execution times demonstrate the effectiveness of both the Real Proposed and RASA techniques. The runtime of Standard MUSIC increases steadily as

M increases because it scans the whole

-dimensional noise subspace and performs a full

eigenvalue decomposition (EVD). While RASA uses the same EVD stage, it lowers the scan cost and saves a significant amount of time by reducing the scan subspace to

columns chosen using the

criterion. Real Proposed attains the shortest execution times for most

M values owing to its real-valued formulation and half-field-of-view (FOV) scan, which reduces both the steering vector dimension and the scanning range.

For larger arrays, however, the advantage of RASA's reduced scan dimension becomes increasingly apparent, and its runtime either approaches or somewhat exceeds that of Real Proposed. The Proposed SS shows significantly longer runtimes, especially for large M, owing to the repeated calculation of subarray covariances and numerous EVD operations. The Proposed LCCS also benefits from fixed-column projection, yielding competitive timings, albeit still above those of the two fastest algorithms.

In general, RASA and Real Proposed yield substantial runtime reductions in comparison to conventional methods, with the relative benefit contingent upon array size.

Among the evaluated methods, RASA and the Real Proposed (real-valued) algorithm demonstrate practical advantages in execution time. RASA achieves this through a streamlined subspace selection strategy that avoids expensive operations, while the Real Proposed method benefits from its reduced matrix processing structure. In contrast, Proposed SS involves additional transformations and smoothing operations, resulting in increased computational time. RASA and the Real Proposed method are therefore well suited to real-time or large-scale array processing systems, where both speed and accuracy are critical, because they strike a practical balance between direction-finding precision and execution speed.

As can be seen in

Table 3, the Floating Point Operation (FLOP) count provides a direct evaluation of computing cost by quantifying the theoretical number of FLOPs needed by each algorithm. The parameters are defined as follows: the number of sensors in the array is

M, the number of sources or signals is

L, the number of selected columns used for subspace construction is

J, the size of the augmented matrix in the Proposed SS method is

P, and the smoothing window or overlapping segments is

S. Usually, parameters follow the relationships

,

, and

. Structural selection algorithms that retain boundary columns and pick decorrelated or low-norm intermediate vectors shrink RASA and Proposed LCCS subspaces to

. Besides subspace size, the complexity classification depends on operations such as sorting, projection, and inversion, which determine the algorithm’s processing requirements. Furthermore, the reduced FLOP orders of the Real Proposed technique and RASA demonstrate their suitability for real-time, resource-constrained applications. On the other hand, approaches that include more processing steps, like Standard MUSIC and Proposed SS, result in greater FLOP counts because of subspace manipulation and correlation-aware selection. While RASA and Proposed LCCS can operate on a subspace of dimension

, their computational structures differ considerably. RASA chooses columns using correlation norms and simple sorting in the noise subspace, without matrix inversion and on reduced data. Proposed LCCS chooses columns directly from the complete covariance matrix based on their norms, then builds a projection matrix using a matrix inverse and several matrix multiplications. These additional operations, such as

matrix inversion and

matrix-vector products, increase the computing cost. Overall, the FLOP analysis supports the usefulness of low-complexity methods in scalable DoA estimation and corroborates the empirical timing results.

6. Simulation Results and Performance Analysis of RASA

To demonstrate applicability across arbitrary array geometries, a generic steering vector formulation is adopted, ensuring compatibility with both Uniform Linear Arrays (ULAs) and Uniform Rectangular Arrays (URAs). For illustration, initial results are presented for the URA, which supports two-dimensional scanning in azimuth and elevation due to its uniform element spacing along horizontal and vertical axes. Subsequent analysis focuses on the ULA configuration, which forms the basis of the main simulations. Performance is assessed under varied SNR levels, array sizes, correlated sources, and snapshot counts. The evaluation relies on the Average Root Mean Square Error (ARMSE) in (

42) and the Probability of Successful Detection (PSD) in (

43), providing consistent benchmarking across all considered array geometries. Here,

K is the number of Monte Carlo trials,

is the number of successful detections at the

i-th simulation, and

and

are the true and estimated DoAs at the

k-th Monte Carlo iteration, respectively.
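The two metrics can be sketched as follows. Because the exact expressions of Equations (42) and (43) are not reproduced here, the forms below are assumptions: ARMSE is taken as the per-trial RMSE (after sorting true and estimated angles) averaged over the Monte Carlo trials, and PSD as the fraction of true DoAs that have an estimate within a tolerance `tol_deg`. The function names and the tolerance value are hypothetical.

```python
import math


def armse(true_doas, est_doas):
    """Average over trials of the per-trial RMSE between sorted angle sets (degrees)."""
    total = 0.0
    for truth, est in zip(true_doas, est_doas):
        pairs = zip(sorted(truth), sorted(est))
        total += math.sqrt(sum((t - e) ** 2 for t, e in pairs) / len(truth))
    return total / len(true_doas)


def psd(true_doas, est_doas, tol_deg=1.0):
    """Fraction of true DoAs with at least one estimate within `tol_deg` degrees."""
    hits = 0
    count = 0
    for truth, est in zip(true_doas, est_doas):
        for t in truth:
            count += 1
            if any(abs(t - e) <= tol_deg for e in est):
                hits += 1
    return hits / count


# Two illustrative trials with two sources each (made-up numbers).
truth = [[10.0, 20.0], [30.0, 40.0]]
estimates = [[10.0, 23.0], [30.0, 40.0]]
print(armse(truth, estimates), psd(truth, estimates))
```

Sorting before pairing avoids penalizing an estimator for returning the correct angles in a different order; a different association rule would be needed if the estimator can return fewer angles than sources.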

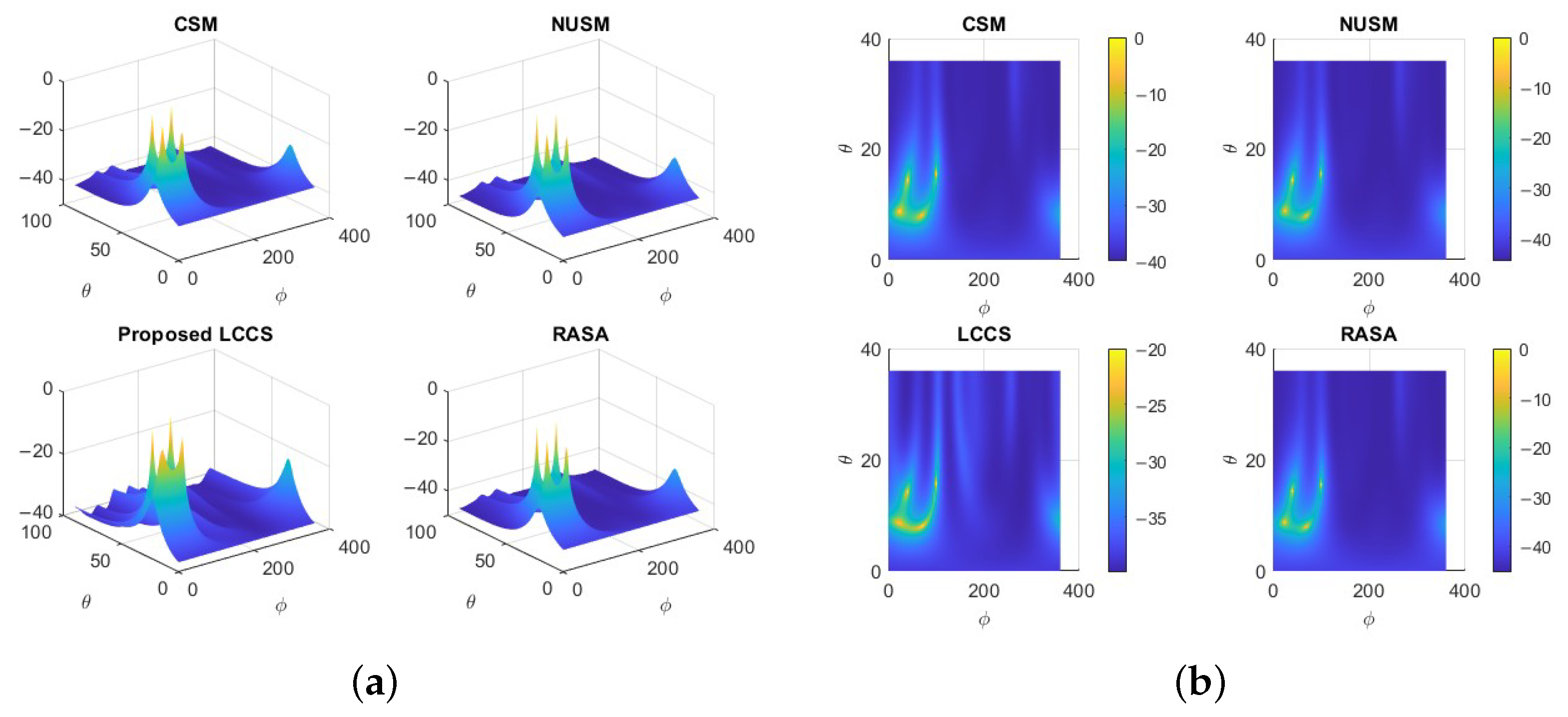

6.1. Two-Dimensional (2D) DoA Estimation

To assess the effectiveness of the proposed DoA method with a 2D array configuration, we apply the URA with M elements positioned in the x–y plane. The goal is to estimate elevation angle and azimuth angle within the ranges and , respectively. For this, we use a rectangular array with twelve equally spaced elements () to capture randomly generated BPSK signals transmitted by far-field sources. The simulation parameters are set as follows: SNR = 10 dB, element spacing , and number of snapshots . In this setup, four closely spaced plane waves () impinge on the array with elevation and azimuth angles given by: and .

In

Figure 7a, all algorithms have identical peak locations, but the 3D RASA spectrum has sharper, more concentrated peaks with lower sidelobe levels than CSM and NUSM. Although the visual differences are modest, these traits improve angular resolution and target separation. In multi-source scenarios, RASA’s noise subspace refinement and decorrelation improve peak sharpness and reduce ambiguity. Viewed from above, the detected DoAs appear as shown in

Figure 7b. Despite the few visual variations, the RASA spectrum shows better resolution than other techniques, with less dispersion and sidelobe interference and a greater concentration around the correct angles. A comparison of 3D and 2D MUSIC-like spectra shows that the RASA yields the most compact mainlobes with reduced sidelobe leakage, making the peaks more clearly distinguishable than CSM, NUSM, and Proposed LCCS.

6.2. Performance on Non-Uniform Arrays

The algorithms’ performance under various sensor geometries was investigated by taking into account both two-dimensional non-uniform rectangular arrays and one-dimensional non-uniform linear arrays.

The simulation used

snapshots with an input SNR of 10 dB to replicate a NULA with

unevenly spaced elements. Azimuths

,

, and

were assigned to three uncorrelated sources at broadside elevation (

).

Figure 8’s azimuth spectra demonstrate that CSM generates broad, poorly resolved peaks, whereas NUSM provides shallow responses that cannot distinguish between the sources. The higher noise level and spurious lobes introduced by the Proposed LCCS suggest that its decorrelation capability is limited for linear apertures. RASA generates sharp, well-localized peaks at the correct azimuths; however, because linear arrays cannot distinguish left from right, the spectra display mirror symmetry, giving rise to five apparent peaks (

,

, and

) rather than three.

A NURA comprising

elements was simulated on a perturbed

grid with

snapshots at an input SNR of 10 dB. Four sources were located at coordinates

,

,

, and

. The pseudo-spectra in

Figure 9 indicate that CSM and NUSM produce blurred ridges with uncertain localization. The Proposed LCCS recognizes certain source regions but generates duplicated and displaced peaks, indicating diminished stability with restricted snapshot support. RASA accurately identifies all four true directions with distinct and separate peaks, validating its efficacy for direction-of-arrival estimation in arbitrary two-dimensional non-uniform arrays.

These tests illustrate that CSM and NUSM serve solely as baseline references and exhibit a lack of robustness when utilized in non-uniform geometries. The Proposed LCCS provides limited enhancement but is still contingent on geometry, faltering in NULA setups and producing unreliable estimates on NURA with a lower number of snapshots. Conversely, the RASA consistently attains precise and stable localization in both one-dimensional and two-dimensional non-uniform arrays, positioning it as the most dependable method for practical direction-of-arrival estimation.

6.3. Resolution Comparison: Numerical Example

To test the resolution capability of these four algorithms, a URA with 16 array elements is used to detect DoAs of seven signal sources of elevation angles () given by and the same azimuth for all sources, .

Figure 10 shows the detection performance of the algorithms for this specific case.

is the power of the signal at that specific angle

. The three sources at negative elevation angles and the three at positive elevation angles are closely spaced. Although it may seem that all approaches identify seven peaks, a closer look reveals significant differences in resolution quality and accuracy in

Figure 10. In particular, only RASA correctly detects all seven true DoAs, including the two clusters of closely spaced sources. In contrast, peak merging in those clusters causes under-detection for CSM and NUSM. The Proposed LCCS shows six peaks, but it does not separate all closely spaced sources, and one of its peaks is probably a false detection caused by spectral noise. The visual presence of several peaks therefore does not by itself guarantee accurate detection: among the tested methods, only RASA resolved all sources with high accuracy and no observed false positives.

As a result, only the RASA algorithm yields seven distinct and well-separated peaks without merging or false detection, even though all algorithms display numerous peaks. The other methods show overlapping or wider peaks, which causes uncertainty in source localization. This illustrates RASA’s improved robustness and higher resolution capability in scenarios with densely spaced sources.

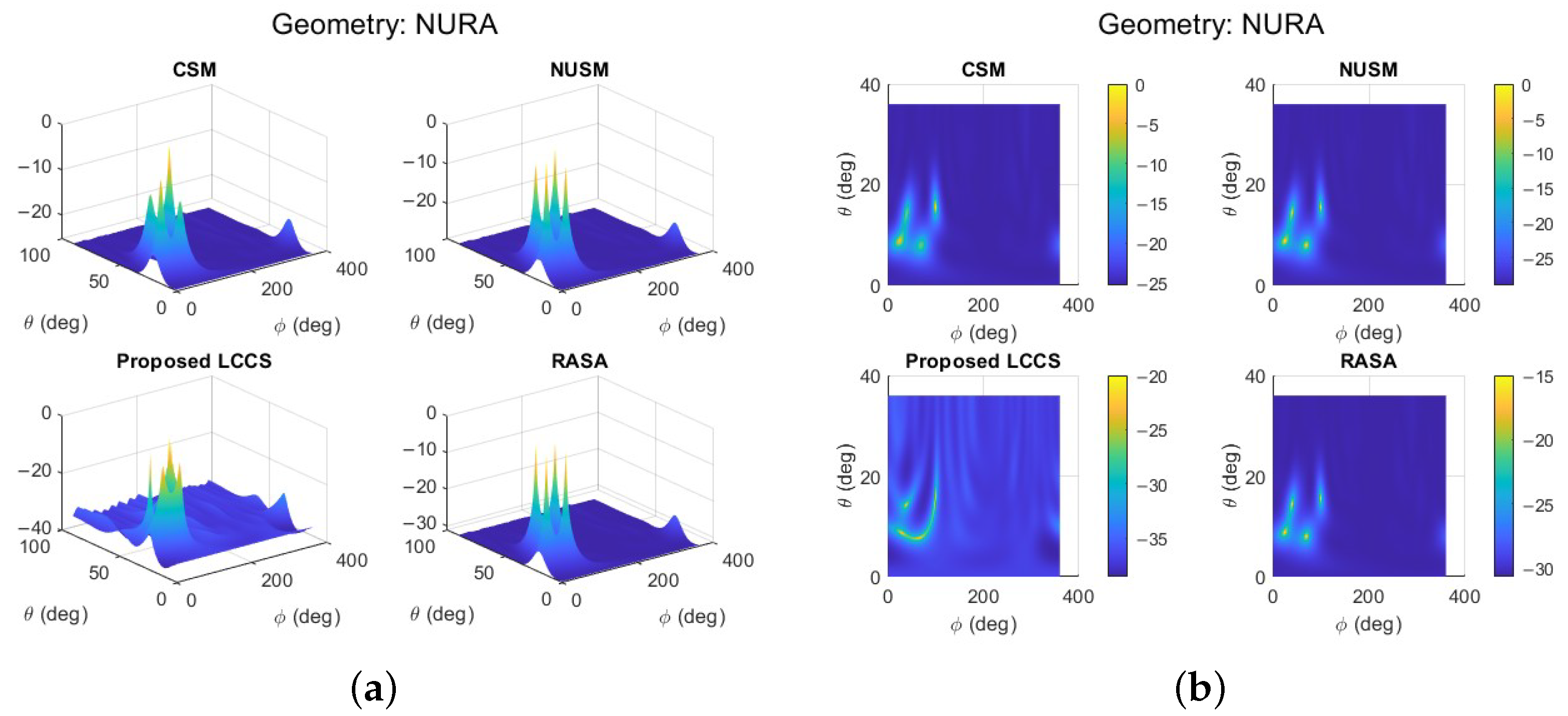

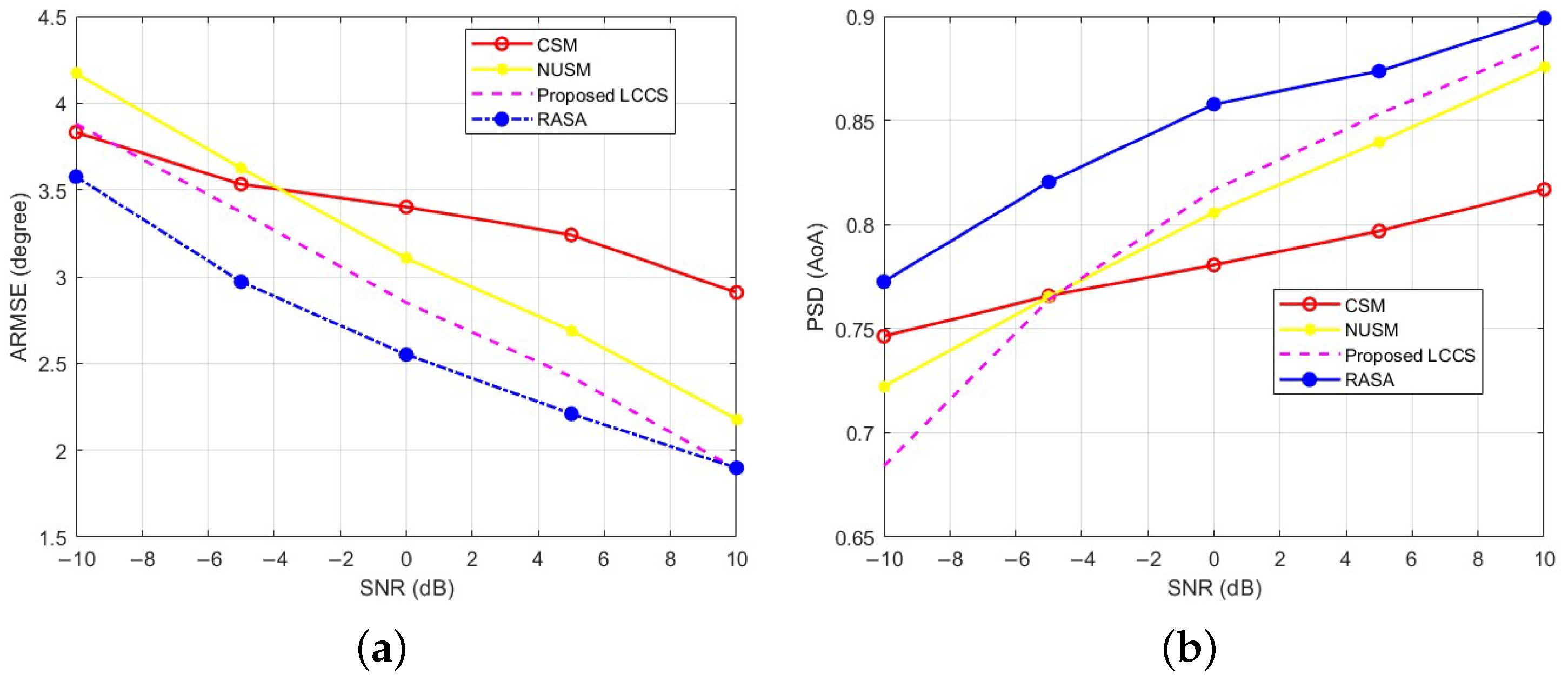

6.4. Comparison Based on Different SNR Levels

This section examines how SNR affects the estimators’ performance. The SNR varies from to 10 dB in 5 dB steps. In order to receive signals from L = 10 sources that are positioned at random throughout the angular sector of , a ULA with sensors is used. To guarantee a consistent and equitable comparison, a total of Monte Carlo trials is carried out for each SNR level. In each trial, a fresh set of ten random DoAs is created and used uniformly across all methods. is the fixed number of data samples in each trial, and all estimators use the sample covariance matrix.

An initial summary of the performance of all tested algorithms, including RASA, Standard MUSIC, Proposed LCCS, NUSM, CSM, Real Proposed, and Proposed SS, is shown in

Figure 11a. RASA and Standard MUSIC have nearly identical ARMSE at all SNRs; the curves overlap completely, rendering the RASA trace visually indistinguishable. These two methods consistently achieve the lowest angular-estimation error. Similarly,

Figure 11b shows that both methods attain the highest PSD and maintain high resolution across the entire SNR range. By contrast, Proposed SS and Real Proposed perform substantially worse, while Proposed LCCS, NUSM, and CSM are intermediate.

Like MUSIC, RASA relies on an identifiable noise subspace. With our setup (

,

,

), SNR significantly below

results in the classical threshold effect: the eigenvalue separation collapses, and all subspace DoA estimators degrade comparably. Consequently,

Figure 11 starts at

, the lowest SNR at which the subspace is still usable in this configuration. In accordance with the reduced subspace column correlations shown in

Table 1, RASA’s selection of the least-correlated noise-subspace columns results in a better-conditioned projector when the subspace is recognizable.

These conclusions lead us to exclude the lower-performing methods (Proposed SS and Real Proposed) from further analysis and to focus on the strongest baselines for a clearer comparison.

Figure 12a compares RASA, Proposed LCCS, NUSM, and CSM; Standard MUSIC is omitted because its performance is indistinguishable from RASA in

Figure 11. RASA achieves the lowest ARMSE across the studied SNRs, Proposed LCCS comes second, and NUSM and CSM show larger estimation errors, especially at low SNR. Likewise,

Figure 12b demonstrates that in this configuration, RASA attains the maximum PSD throughout the sweep; Proposed LCCS comes in second, followed by NUSM and CSM. These observations hold within the operating SNR range, which in our setup is

dB, where the noise subspace is discernible.

The results show RASA performing best on ARMSE and PSD in this setup; the following sections therefore concentrate on the strongest baselines.

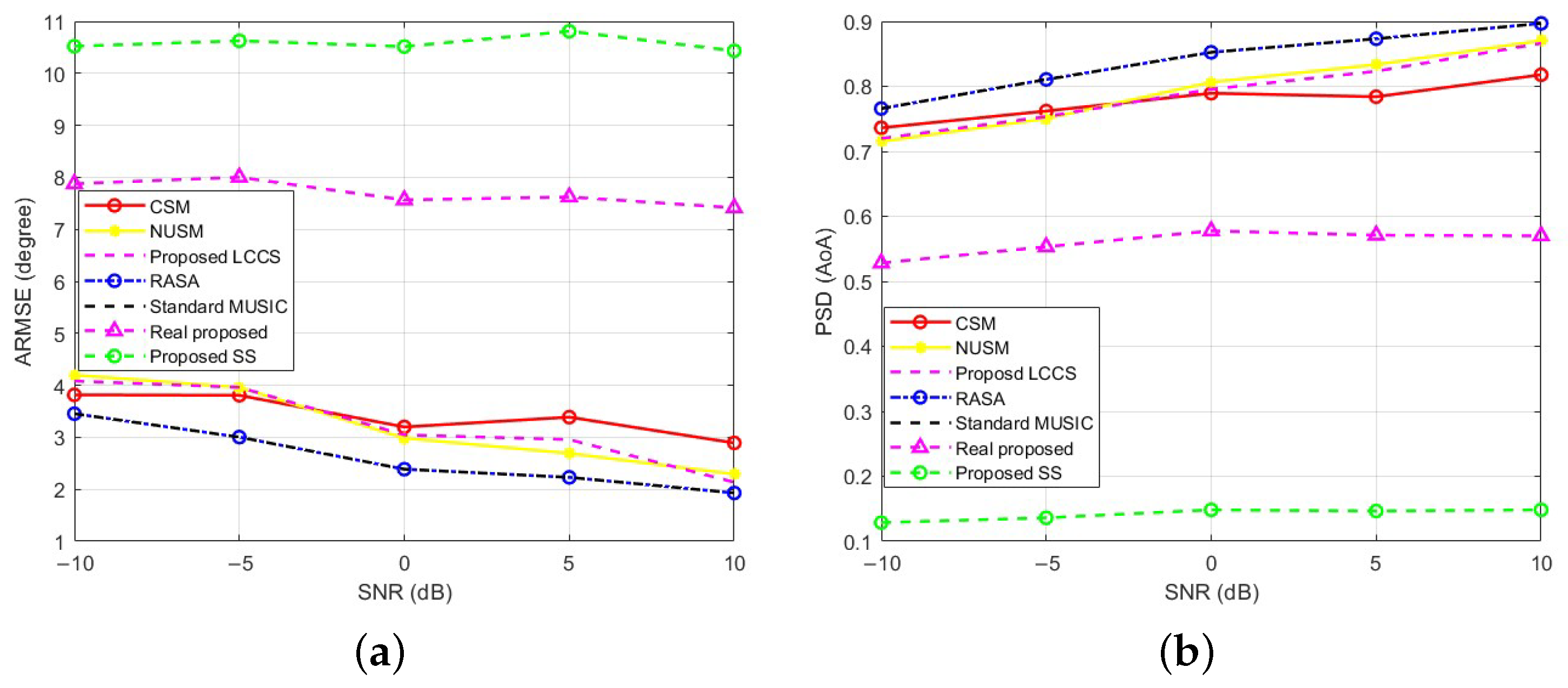

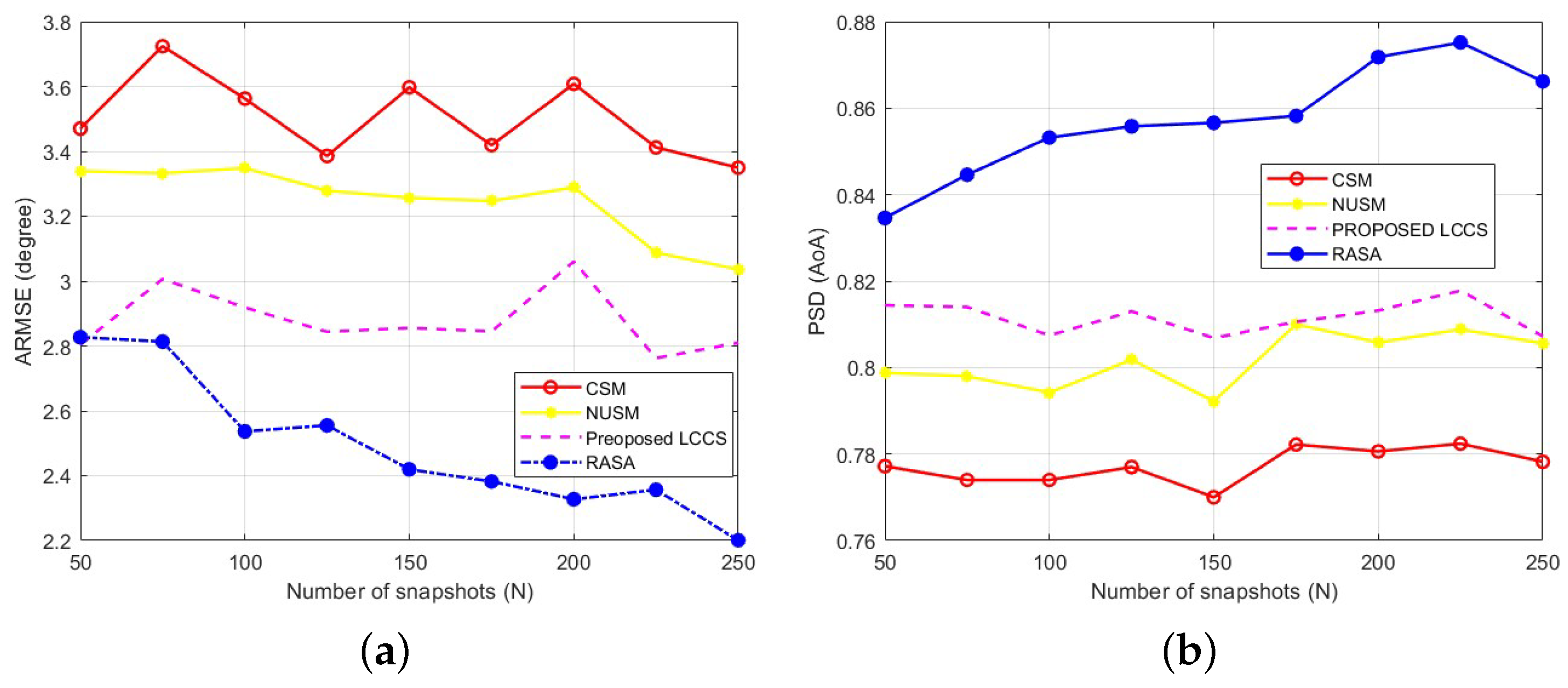

6.5. Comparison Based on Different Numbers of Snapshots

The capability of direction-finding techniques to accurately determine the location of sources with a limited number of snapshots is crucial, as obtaining a large number of snapshots is not always feasible in many real-world applications. In this context, we investigate the impact of varying the number of snapshots on the estimation accuracy of the proposed sampling method, alongside its competing techniques. To do this, we set the SNR to 0 dB and construct the CM using different snapshot values:

. The number of sources and the receiver array properties remain the same as in the scenario presented in

Section 6.4 (

,

). To ensure unbiased results, one thousand Monte Carlo trials are performed. For each value of

N, the ARMSE and PSDs are computed, and the results are presented in

Figure 13a and

Figure 13b, respectively.

The detection capability of the other algorithms (CSM, NUSM, and the Proposed LCCS) stays almost constant as the number of snapshots grows, as

Figure 13 makes evident. On the other hand, the RASA sampling technique consistently beats all benchmarks, obtaining higher detection probability and lower estimation error over the whole range of

N. This demonstrates how RASA can use the correlation structure of the noise subspace columns to choose the most informative columns, resulting in a decorrelated and well-conditioned sampling matrix with a sharper MUSIC spectrum. Note that these methods achieve sub-degree ARMSE and PSD values approaching unity under more moderate settings; the absolute levels reported here (ARMSE

, PSD

) reflect the purposefully strict simulation setup (

,

, short snapshots). The slight oscillations observed in

Figure 13a arise from the random generation of DoAs in each Monte Carlo trial and the finite number of realizations, which introduce small statistical variations even after averaging.

6.6. Comparison Based on Correlated Signals

Correlation between arriving signals leads to an increase in the Sidelobe Level (SLL) in array responses, which negatively affects direction-finding systems. To mitigate this effect, pre-processing techniques such as spatial smoothing are commonly employed. However, this solution introduces higher computational complexity. To maintain low computational costs, it is crucial that the technique can effectively handle correlated sources. This scenario examines the impact of signal correlations on the performance of the evaluated DoA techniques. For this, we assume that the incident signals are correlated due to multipath effects, with ten correlated signals (

) arriving at the same array as in the previous

Section 6.4 and

Section 6.5. Specifically, we assume that one signal is from the primary source, while the other signals are reflections of the first. To model this scenario, we vary the correlation level between the first signal and the others using the following set of correlation coefficient values,

. The SNR and the number of data samples are set to 4 dB and 100, respectively.

Next, two thousand sets (

) of ten DoAs are generated, with each set applied equally across all considered algorithms. For each trial, we compute ARMSE and PSD, then plot the results against the correlation coefficients

, as shown in

Figure 14a and

Figure 14b, respectively. It is observed that the RASA algorithm achieves the highest accuracy compared to the other algorithms when the incident signals are either slightly (

) or highly (

) correlated. This indicates that the proposed sampling approach makes the PM method less sensitive and more robust to correlated signals by eliminating dependency on the steering vector. Furthermore, it is shown that the classical sampling method is the least robust to such signals, followed by the NUSM and Proposed LCCS methods, respectively.

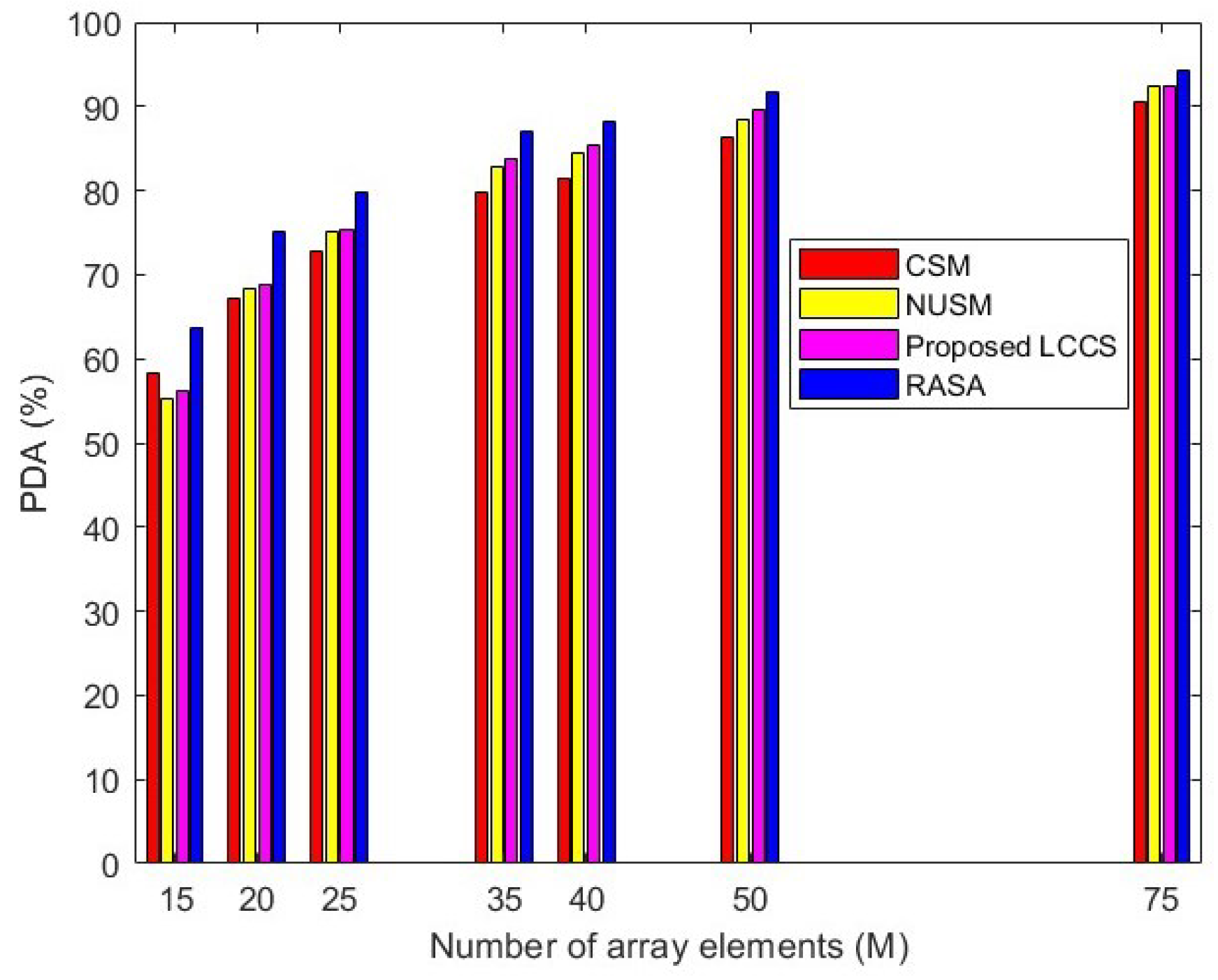

6.7. Comparison Based on Different Array Aperture

The purpose of this scenario is to examine how different values of

M influence the detection capability of the algorithms. Without loss of generality, the total number of equally spaced array elements is varied as:

. A simulation is conducted with the following parameters:

,

dB,

, and

. Therefore, the total number of generated DoAs is 5000. These DoAs are applied equally across all the algorithms. The Percentage of Detected Angles (PDA) is calculated for each sampling method using the formula defined in Equation (

44).

The resulting data is presented in

Figure 15. From the bar plot, it can be observed that a larger array size results in an increase in the aperture of the array, which in turn increases the number of detectable angles for all algorithms. The classical method exhibits comparable performance to the proposed method for a lower number of array elements. The NUSM and LCCS methods show moderate performance, with LCCS detecting more angles than the NUSM method. Notably, the RASA algorithm detects the highest number of angles, and this superior performance remains consistent for array sizes as small as 15 and as large as 75. This indicates that the proposed method outperforms the previous sampling techniques, regardless of whether the physical array size is small or large.

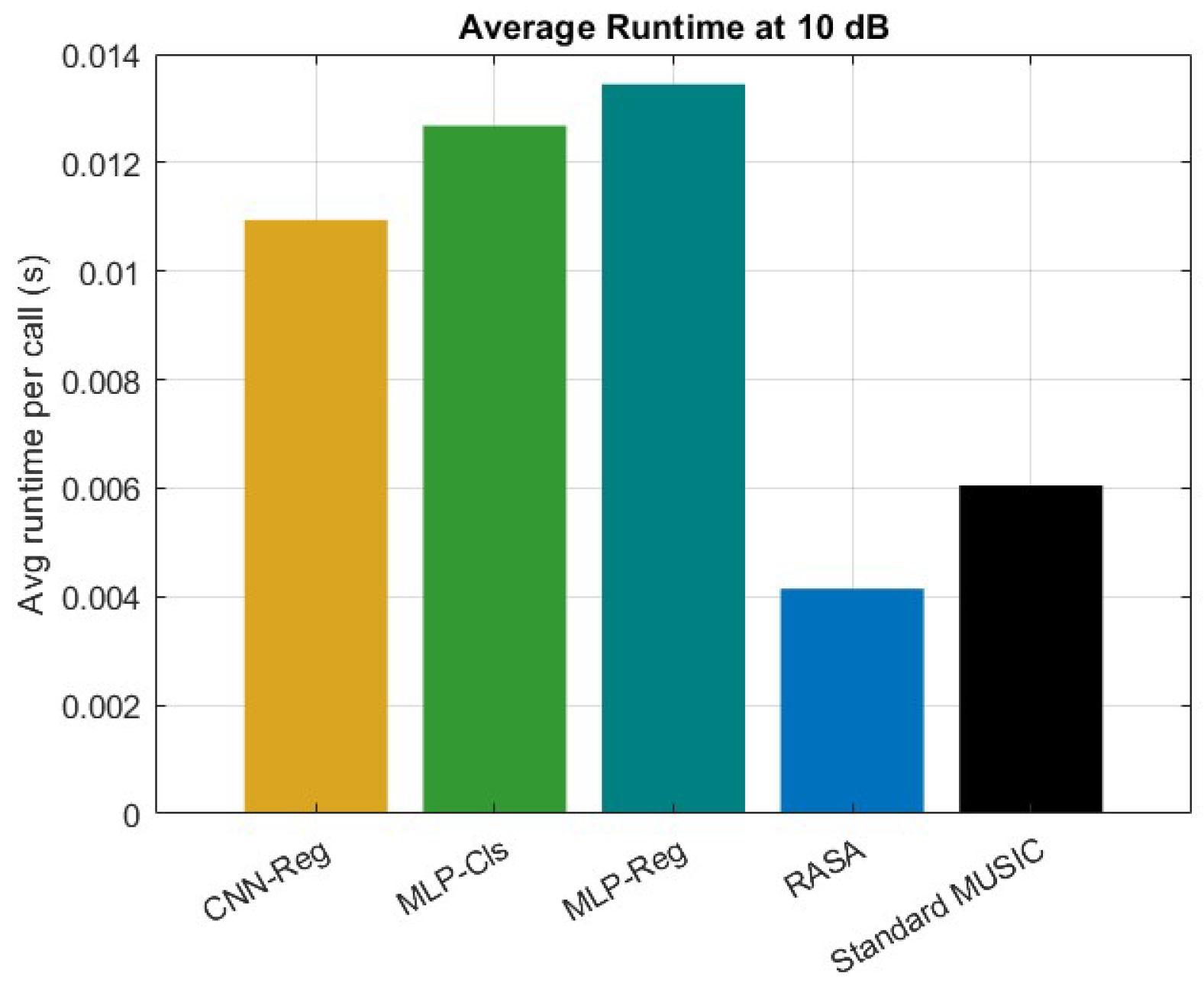

6.8. Comparative Evaluation: Standard MUSIC, RASA, and ML Post-Processors

A uniform linear array (ULA) with

sensors and

sources is examined, featuring inter-element spacing of

and

snapshots. The MUSIC pseudo-spectrum is examined throughout the interval

utilizing a grid of

(181 bins). Uniform diagonal loading is implemented on the covariance matrix

across all methodologies. Test signal-to-noise ratios (SNRs) are

dB, assessed under both uncorrelated and coherent sources, with and without perturbation of element positions. The selected metrics are root-mean-square error (RMSE, degrees), probability of successful detection within

(PSD), and average runtime per invocation. Three spectrum-level post-processors operate on the unchanged normalized MUSIC pseudo-spectrum: (i) a spectrum-to-sorted DoA vector mapping using an MLP regressor; (ii) an MLP multi-label classifier over

angular sectors that yields the top-

K sectors’ centers; and (iii) a streamlined 2D-CNN regressor with two fully connected layers, global average pooling, and

convolutions along the angle axis. All the details about these three algorithms can be found in [

38,

39,

40]. To encourage generalization, models are trained on 4000 synthetic samples with random coherence/perturbation and SNR augmentation

dB. Each ML method’s inference cost is expressed as MUSIC time + model time; neither hybrid nor restricted scanning is employed.

The proposed method has several clear advantages over current ML-based DoA estimation methods. First, it avoids the need for the extensive labeled training datasets generally required by neural-network-based estimators such as attention-driven models, convolutional neural networks (CNNs), and multilayer perceptrons (MLPs). As a result, the technique is less susceptible to domain mismatch and more data-efficient when used with different array topologies or propagation conditions. Second, unlike deep learning models, which frequently function as black boxes, the proposed approach preserves interpretability because the underlying pseudo-spectrum is still generated from subspace principles. Third, as shown by the runtime comparison in

Figure 16, our method shows lower computational cost during inference, whereas ML-based methods require additional matrix pre-processing and neural computations.

However, some limitations should be recognized. Under very low SNR or highly coherent conditions, pure machine learning techniques can occasionally provide better robustness by learning to denoise or regularize the pseudo-spectrum. Moreover, once trained, ML models can produce very fast DoA estimates without requiring spectrum scanning. To address these points, we include comparison simulations in which the proposed approach is compared with MUSIC+ML hybrids (MLP-Reg, MLP-Cls, and CNN-Reg). The findings (

Figure 16 and

Figure 17) demonstrate that although machine learning techniques can marginally lower the RMSE in some low-SNR situations, their effectiveness varies in the absence of substantial training data. In contrast, the proposed approach consistently maintains a good balance between resolution, complexity, and generalization while achieving stable accuracy across the SNR range.

The proposed RASA framework has the following advantages over ML-based DoA methods: it does not require any training data, it ensures interpretability through eigen-structure analysis, and it maintains predictable complexity that scales solely with

M and

N. However, in extremely low-SNR or highly coherent situations, ML post-processors (MLP, CNN) can be useful and can generate continuous DoA estimates without grid scanning. The variations in the RMSE, PSD, and runtime results shown in

Figure 16 and

Figure 17 can be explained by these trade-offs.
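For readers reproducing this comparison, the RMSE and PSD metrics can be computed along the following lines. This is a hedged sketch, not the authors' evaluation code. It assumes that estimates and true angles are paired after sorting, and that a trial counts as a successful detection only if every source is estimated within the tolerance. The function names and the tolerance value are hypothetical.

```python
import numpy as np

def rmse_deg(est, true):
    """RMSE in degrees over all trials and sources (sorted pairing assumed)."""
    est = np.sort(np.asarray(est, dtype=float), axis=-1)
    true = np.sort(np.asarray(true, dtype=float), axis=-1)
    return float(np.sqrt(np.mean((est - true) ** 2)))

def psd(est, true, tol_deg):
    """Fraction of trials in which every source lies within tol_deg.

    Assumption: success requires all sources to be within tolerance.
    """
    est = np.sort(np.asarray(est, dtype=float), axis=-1)
    true = np.sort(np.asarray(true, dtype=float), axis=-1)
    ok = np.all(np.abs(est - true) <= tol_deg, axis=-1)
    return float(np.mean(ok))

# Three hypothetical Monte Carlo trials for two sources at -20 and 30 degrees.
true_deg = np.array([-20.0, 30.0])
trials = np.array([[-20.0, 30.0],
                   [-19.0, 31.0],
                   [-25.0, 30.0]])

rmse_value = rmse_deg(trials, true_deg)         # sqrt(27 / 6)
psd_value = psd(trials, true_deg, tol_deg=2.0)  # 2 of 3 trials succeed
print(rmse_value, psd_value)
```

Sorting before pairing is a simple choice that works when the number of estimates equals the number of sources. Trials with missed or spurious peaks would need an explicit assignment rule.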

7. Conclusions

In this study, a new DoA estimation algorithm, denoted RASA, is proposed and its performance is evaluated through extensive simulations. The simulations demonstrate the method’s consistently better performance across the tested scenarios, including 2D DoA estimation, resolution comparison, varying SNR levels, snapshot counts, correlated signals, and different array apertures. The proposed method, based on MUSIC with QLCCS, consistently outperformed other techniques such as CSM, NUSM, and LCCS in terms of both accuracy and robustness.

In particular, the proposed method excelled in resolving closely spaced DoAs, as demonstrated by its ability to detect up to seven sources with high precision, even when the sources were in close proximity. In comparison, the other methods struggled to resolve multiple closely spaced sources, leading to missed detections or ambiguity in the DoA estimates. Moreover, the proposed method exhibited significantly better performance in terms of Average Root Mean Square Error (ARMSE) and Probability of Successful Detection (PSD) when subjected to varying SNR levels, demonstrating its robustness in noisy environments.

The results also highlighted the method’s resilience in situations involving correlated signals, where it outperformed other algorithms by maintaining high estimation accuracy despite increasing correlation coefficients. Furthermore, the proposed technique demonstrated remarkable performance even with a limited number of snapshots, proving its practical applicability in real-world scenarios where data acquisition may be constrained.

Overall, the proposed RASA method is a reliable and efficient solution for a wide range of practical applications, including communications and radar systems, where accurate direction-finding and source localization are crucial. Its ability to handle signal correlations, limited snapshots, and closely spaced sources sets it apart from existing approaches, and it demonstrated consistently better estimation accuracy and detection probability across a wide range of simulated scenarios.

The next stage of this research will employ real passive radar data, with particular emphasis on challenging coherent-source conditions. This experimental validation will allow us to confirm the robustness of the method under practical factors such as hardware impairments, array calibration, and environmental uncertainties. In parallel, hybrid strategies that integrate ML-based denoising with the proposed subspace framework will also be explored, aiming to further improve robustness in low-SNR and highly coherent scenarios.