Reconstruction Error Guided Instance Segmentation for Infrared Inspection of Power Distribution Equipment

Abstract

1. Introduction

- The first real-world UAV-based infrared dataset for power distribution inspection is presented. This dataset offers the largest data volume, diverse geographic scenarios, and varied instance patterns, while also presenting challenges such as scale imbalance, distribution imbalance, and category imbalance. It establishes a comprehensive performance benchmark and is used to validate the proposed framework.

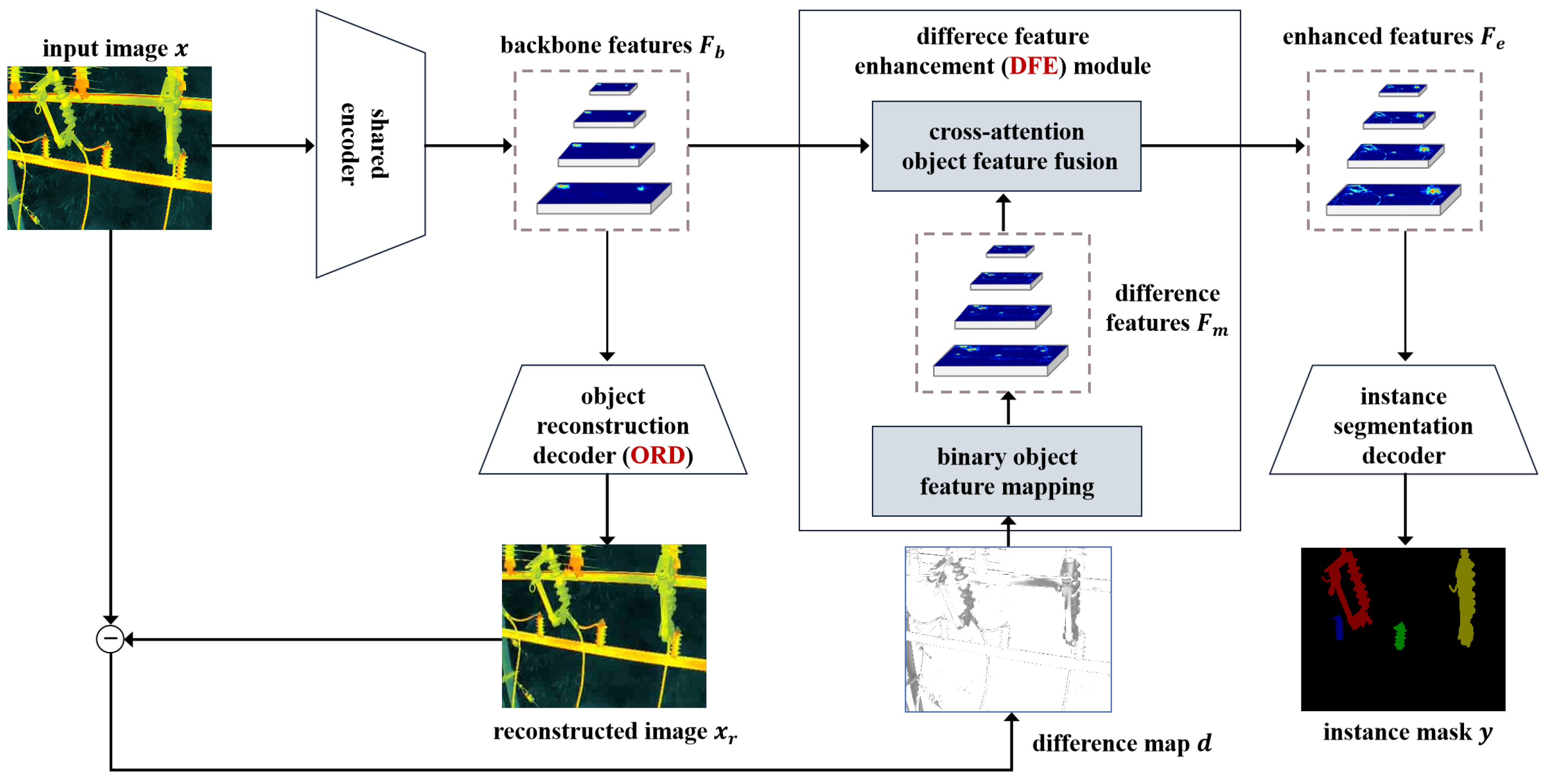

- An object reconstruction mechanism is proposed to effectively mitigate the information loss of objects by reconstructing the object regions. This approach reveals the correlation between reconstruction and degradation, providing effective object features via reconstruction error.

- A difference feature enhancement (DFE) module is proposed to enhance the spatial feature representations of objects, effectively preserving both the content integrity and the edge continuity of objects.

2. Related Work

2.1. General Instance Segmentation

2.2. Instance Segmentation of Power Infrared Scenarios

3. Methodology

3.1. Overall Architecture

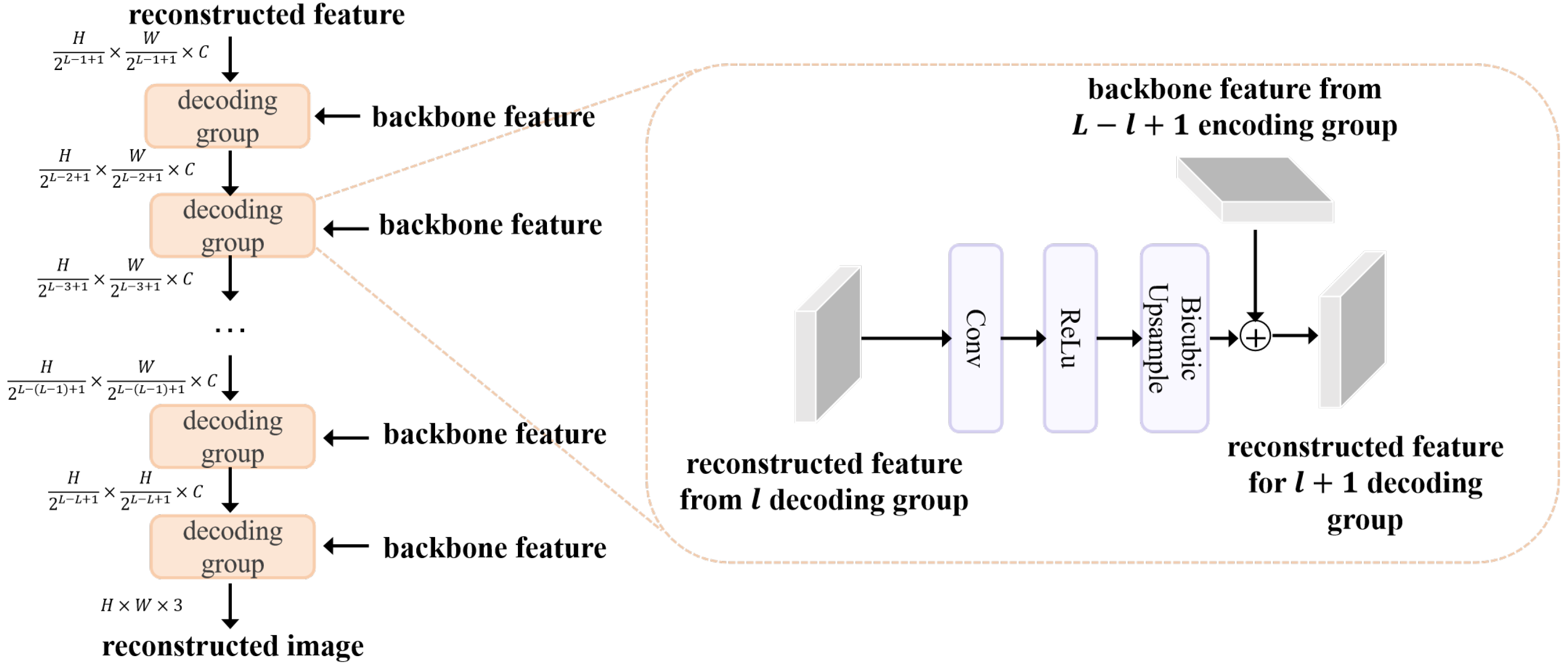

3.2. Object Reconstruction Decoder

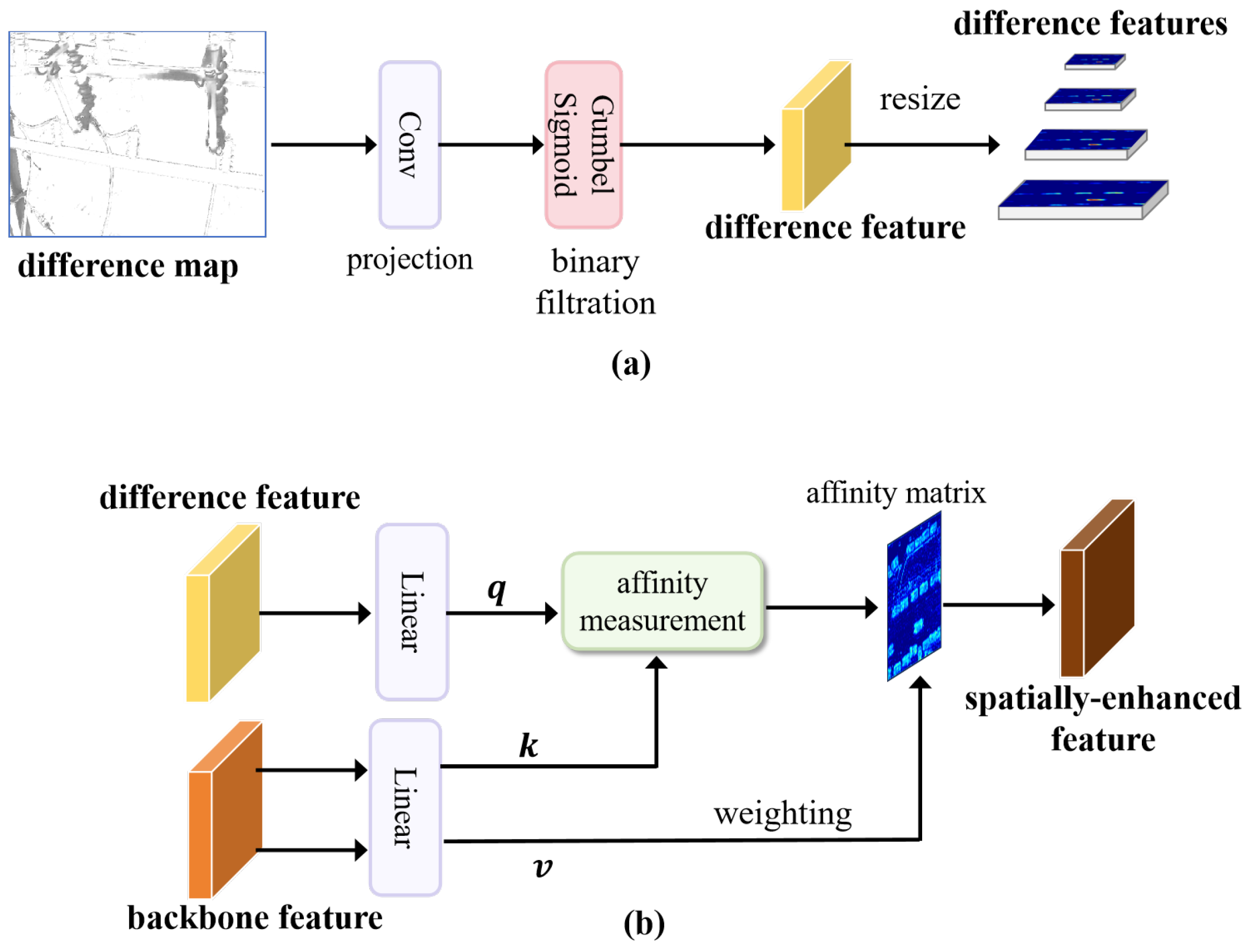

3.3. Difference Feature Enhancement Module

3.4. Objective Function

3.5. Training and Testing Pipelines

Algorithm 1. Training pipeline of the RE-guided instance segmentation framework

Input:

- Input infrared image
- Ground truth instance segmentation mask (category, bounding box, and mask for each power distribution equipment instance)

Output:

- Predicted instance segmentation result (category, bounding box, and mask for each power distribution equipment instance)
- Reconstructed infrared image

Steps:

1. Extract multi-scale backbone features. // Equation (1)
2. Reconstruct the infrared image from the multi-scale backbone features. // Equation (2)
3. Compute the reconstruction error (difference map). // Equation (6)
4. Project and filter the difference map to obtain object features. // Equations (7) and (8)
5. Align object features with backbone features to get multi-scale difference features. // Equation (9)
6. Fuse multi-scale backbone and difference features. // Equations (10) and (11)
7. Predict the instance segmentation mask from the multi-scale fused features. // Equation (4)
8. Compute the object reconstruction loss. // Equation (12)
9. Compute the instance segmentation loss. // Equations (13)–(15)
10. Update network parameters by minimizing the total loss. // Equation (16)

Return: the predicted instance segmentation result and the reconstructed infrared image

Algorithm 2. Testing pipeline of the RE-guided instance segmentation framework

Input:

- Input infrared image

Output:

- Predicted instance segmentation result (category, bounding box, and mask for each power distribution equipment instance)

Steps:

1. Extract multi-scale backbone features. // Equation (1)
2. Reconstruct the infrared image from the multi-scale backbone features. // Equation (2)
3. Compute the reconstruction error (difference map). // Equation (6)
4. Project and filter the difference map to obtain object features. // Equations (7) and (8)
5. Align object features with backbone features to get multi-scale difference features. // Equation (9)
6. Fuse multi-scale backbone and difference features. // Equations (10) and (11)
7. Predict the instance segmentation mask from the multi-scale fused features. // Equation (4)

Return: the predicted instance segmentation result
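
Steps 3 and 4 of both pipelines can be illustrated with a toy example. The equations themselves are not reproduced in this outline, so the sketch below makes two loudly stated assumptions: the difference map is taken as the per-pixel absolute difference between the input and its reconstruction, and the filtering step is replaced by a plain threshold. The names `difference_map`, `filter_map`, and `threshold` are hypothetical and are not the paper's notation.

```python
from typing import List

Image = List[List[float]]  # row-major grayscale image, values in [0, 1]


def difference_map(image: Image, reconstruction: Image) -> Image:
    """Per-pixel absolute reconstruction error (assumed form of the difference map)."""
    if len(image) != len(reconstruction) or any(
        len(a) != len(b) for a, b in zip(image, reconstruction)
    ):
        raise ValueError("image and reconstruction must have the same shape")
    return [
        [abs(p - q) for p, q in zip(row_i, row_r)]
        for row_i, row_r in zip(image, reconstruction)
    ]


def filter_map(diff: Image, threshold: float) -> Image:
    """Zero out errors below `threshold` (hypothetical stand-in for the filtering step)."""
    return [[v if v >= threshold else 0.0 for v in row] for row in diff]


# Toy 3x3 image: one bright "object" pixel that the reconstruction fails to recover.
image = [[0.1, 0.1, 0.1], [0.1, 0.9, 0.1], [0.1, 0.1, 0.1]]
reconstruction = [[0.1, 0.1, 0.1], [0.1, 0.3, 0.1], [0.1, 0.2, 0.1]]

diff = difference_map(image, reconstruction)
filtered = filter_map(diff, threshold=0.3)
```

In this toy case only the poorly reconstructed center pixel survives the threshold, which mirrors the idea that reconstruction error concentrates on object regions. The real framework operates on learned multi-scale features rather than raw pixels.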

4. Dataset Construction

4.1. Image Acquisition

4.2. Equipment Categories and Functional Roles

4.3. Image Annotation and Extension

- Annotation team: Seven professionally trained annotators from a data service company conducted the labeling, having received prior instruction on the specific categories of power distribution equipment.

- Annotation guidelines: A detailed guideline document was prepared, including category definitions, correct/incorrect annotation examples, and rules for handling special cases (e.g., occlusion and truncation).

- Quality supervision: Two senior engineers from China Southern Power Grid supervised the entire process. They continuously reviewed randomly selected subsets from each annotator. Ambiguities were discussed and resolved collectively, with iterative updates to the guidelines.

- Inter-annotator agreement: A randomly selected subset was cross-annotated by different annotators, and the average precision between the two sets of annotations was calculated. A high score quantitatively confirmed annotation consistency.
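
The agreement check in the last item can be sketched as follows. This is a simplified illustration, not the authors' procedure: it uses bounding boxes instead of instance masks, ignores category labels, and reports precision at a single IoU threshold of 0.5 rather than a full average precision. The function names and sample boxes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def agreement_precision(reference: List[Box], candidate: List[Box], thr: float = 0.5) -> float:
    """Fraction of candidate boxes matched to a distinct reference box at IoU >= thr."""
    unused = list(reference)
    matched = 0
    for box in candidate:
        best = max(unused, key=lambda r: iou(r, box), default=None)
        if best is not None and iou(best, box) >= thr:
            unused.remove(best)  # each reference box may be matched only once
            matched += 1
    return matched / len(candidate) if candidate else 0.0


# Annotator A is treated as the reference, annotator B as the candidate.
annotator_a = [(10, 10, 50, 50), (100, 100, 140, 160)]
annotator_b = [(12, 11, 51, 49), (200, 200, 220, 220)]
score = agreement_precision(annotator_a, annotator_b)
```

Here one of the two boxes from annotator B overlaps a reference box closely and the other does not, so the score is 0.5.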

4.4. Comparison with Existing Infrared Datasets

- Largest scale of infrared images and instances: The PDI dataset contains 16,596 infrared images and 126,570 annotated instances, more than any existing infrared dataset in the comparison table. Relative to the next largest, Li et al. [8] (11,415 images and 45,145 instances), it has roughly 1.5× the images and 2.8× the instances. This scale meets the data requirements of modern data-driven methods and supports more accurate instance segmentation of power distribution equipment.

- First UAV-based infrared dataset for power distribution inspection: While most existing infrared datasets focus on transmission lines or substations, PDI is the first to specifically target power distribution inspection using UAVs. Power distribution plays a critical role in delivering electricity to end-users, including households, industrial facilities, and commercial buildings, and is therefore essential for urban power grid reliability. Moreover, PDI includes seven critical categories of power distribution equipment, captured under real-world operating conditions.

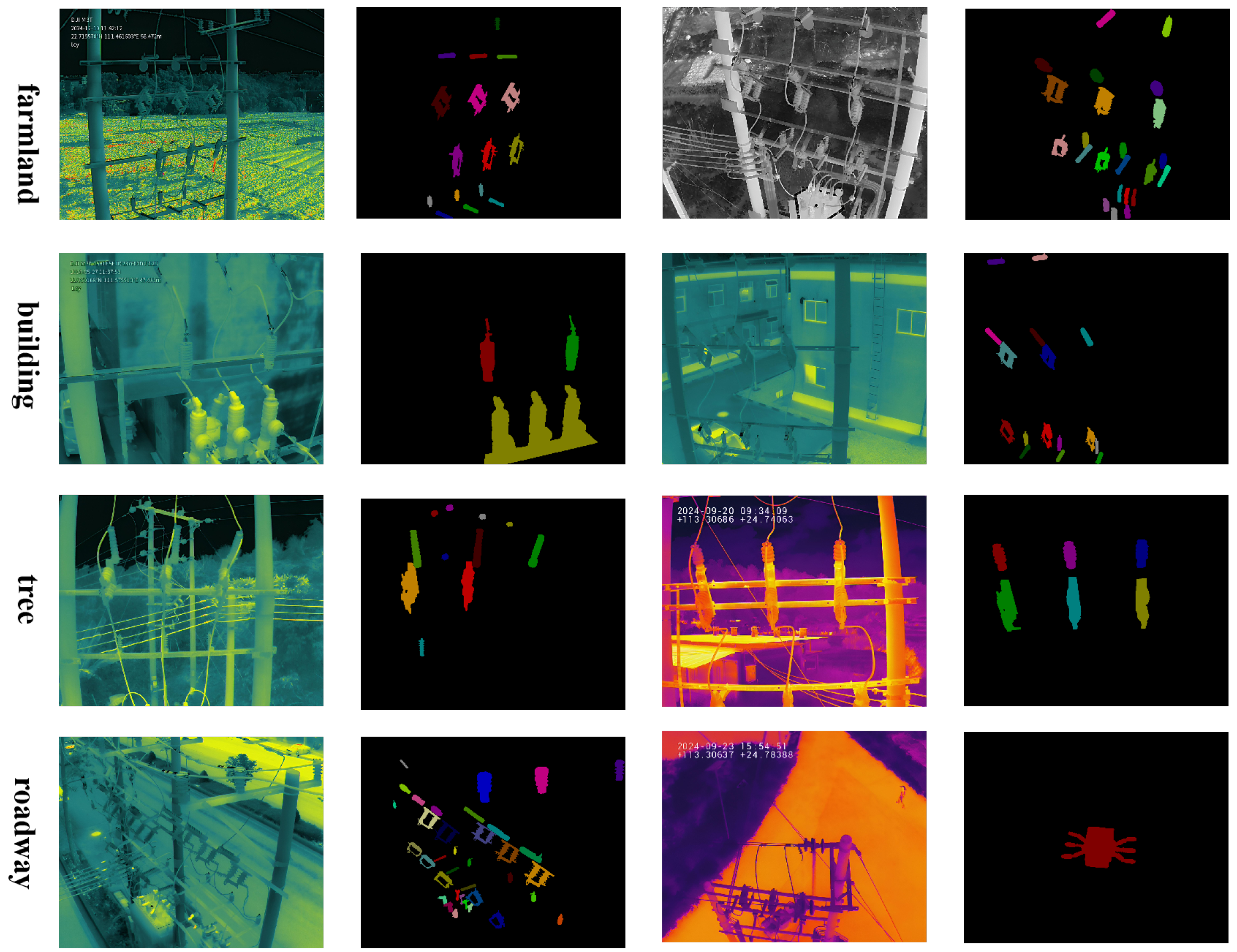

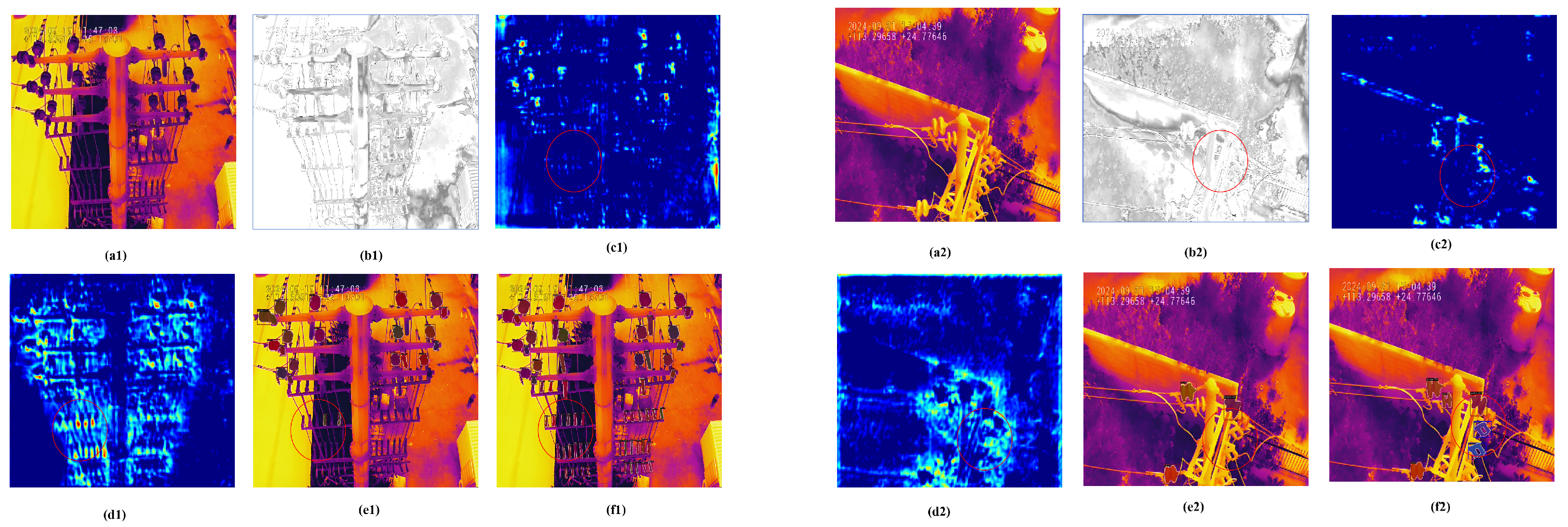

- Rich scenarios and diverse instance patterns: Power distribution equipment exhibits significant variations in appearance. Different viewpoints introduce arbitrary orientations, partial deformations, and occlusions; different flight altitudes lead to drastic scale changes; and different inspection periods result in diverse thermal radiation distributions. In addition, PDI covers hundreds of geographical regions across Guangdong Province (e.g., Yunfu City, Dongguan City), where equipment is embedded in complex backgrounds such as trees, buildings, farmland, and roadways. Representative examples are shown in Figure 5.

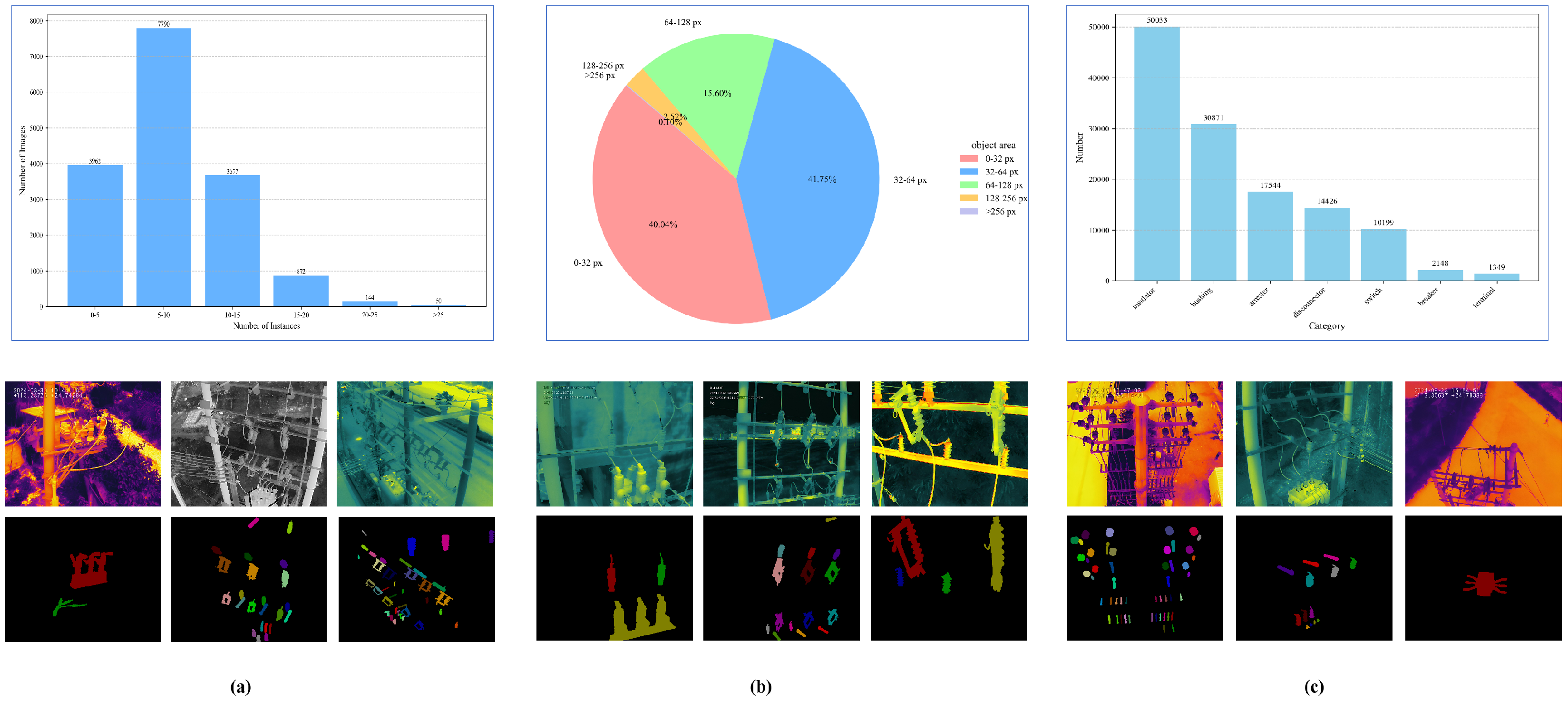

- Distribution imbalance: The number of instances per image varies considerably. Some images contain up to 49 instances, while others include only a single instance. The most common range is 5–9 instances per image, whereas images with more than 30 instances are rare. This irregular distribution is illustrated in Figure 6a.

- Scale imbalance: Significant scale variations exist both across and within categories. Across categories, the largest switch measures 401 × 403 pixels (far exceeding the 96 × 96 reference and covering nearly 50% of the image area), while the smallest bushing is only 3 × 5 pixels (well below the 32 × 32 reference and less than 1% of the image area). Within a single category the spread is just as dramatic: bushings range from 3 × 5 pixels up to 157 × 368 pixels (about 20% of the image area), and switches range from 7 × 15 pixels (less than 1% of the image area) up to 401 × 403 pixels. Across categories, the variation is also evident, ranging from 32 × 32 pixels to 256 × 256 pixels, as shown in Figure 6b.

- Category imbalance: The frequency of categories is highly uneven. The most frequent category, insulator, contains 50,033 instances, while the least frequent, terminal, has only 1349 instances. This results in a long-tailed distribution with a head-to-tail ratio of about 37:1, as illustrated in Figure 6c.
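
The scale and category figures quoted in this list can be checked with a few lines of arithmetic. The sketch below assumes that the 32 × 32 and 96 × 96 references are area thresholds separating small, medium, and large instances, as in the common COCO convention; the source does not state this explicitly. The function names are hypothetical, and only the two category counts given in the text are used.

```python
from typing import Dict


def scale_bucket(width: int, height: int) -> str:
    """Classify an instance by pixel area (assumed thresholds: 32*32 and 96*96)."""
    area = width * height
    if area < 32 * 32:
        return "small"
    if area < 96 * 96:
        return "medium"
    return "large"


def imbalance_ratio(counts: Dict[str, int]) -> float:
    """Ratio between the most and least frequent categories."""
    return max(counts.values()) / min(counts.values())


# Extreme instance sizes quoted in the text (width, height in pixels).
extremes = {
    "largest switch": (401, 403),
    "smallest switch": (7, 15),
    "largest bushing": (157, 368),
    "smallest bushing": (3, 5),
}
buckets = {name: scale_bucket(w, h) for name, (w, h) in extremes.items()}

# Only the head and tail category counts are given in the text.
ratio = imbalance_ratio({"insulator": 50033, "terminal": 1349})
```

Under these assumed thresholds both switches and bushings appear in the small and the large bucket, and the insulator-to-terminal ratio comes out at about 37.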

5. Experiment

5.1. Comparison Methods and Implementation Details

5.2. Evaluation Metrics

5.3. Ablation Studies

5.3.1. Effect of ORD

5.3.2. Effect of DFE Module

5.3.3. Effect of Filtration in DFE Module

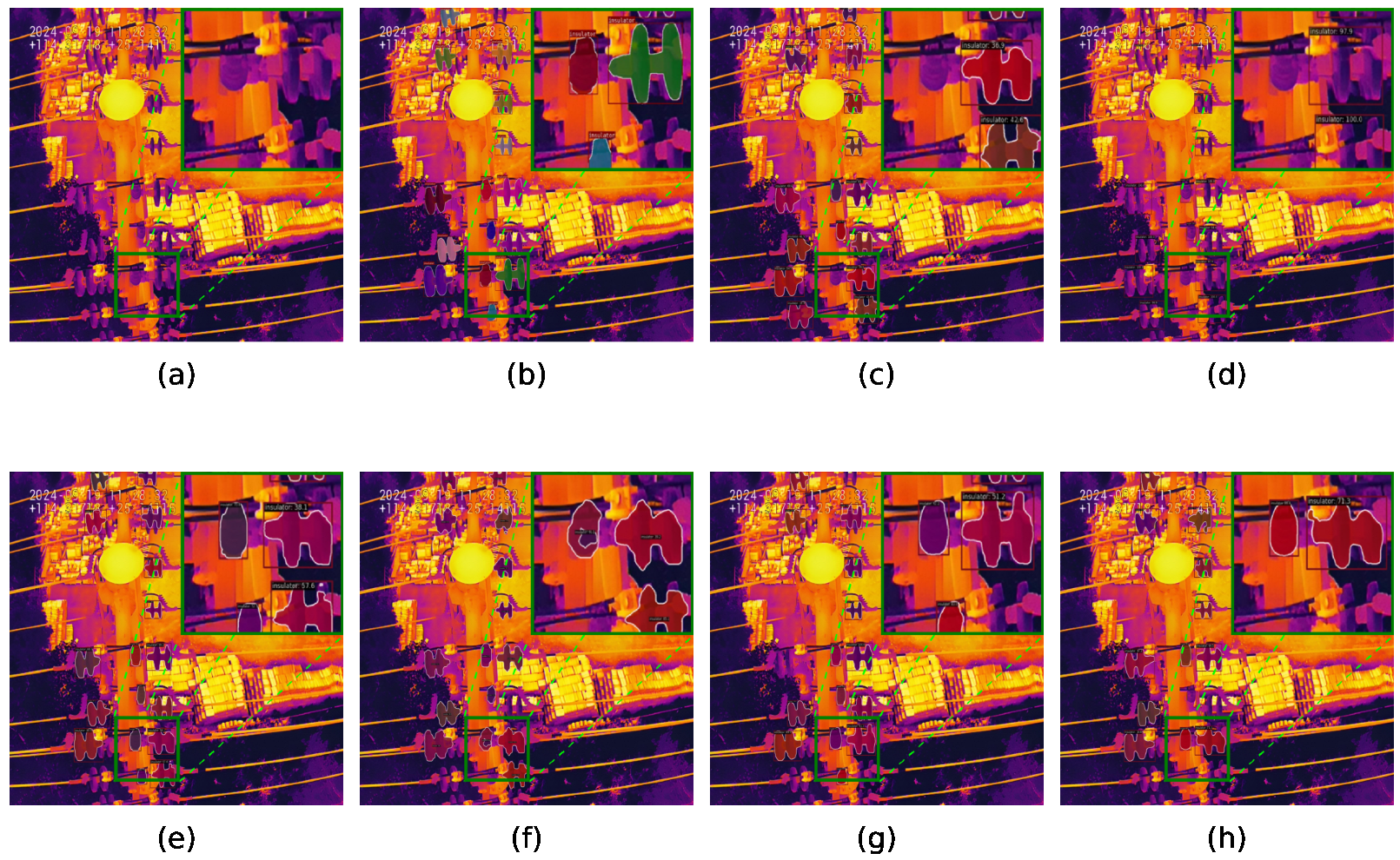

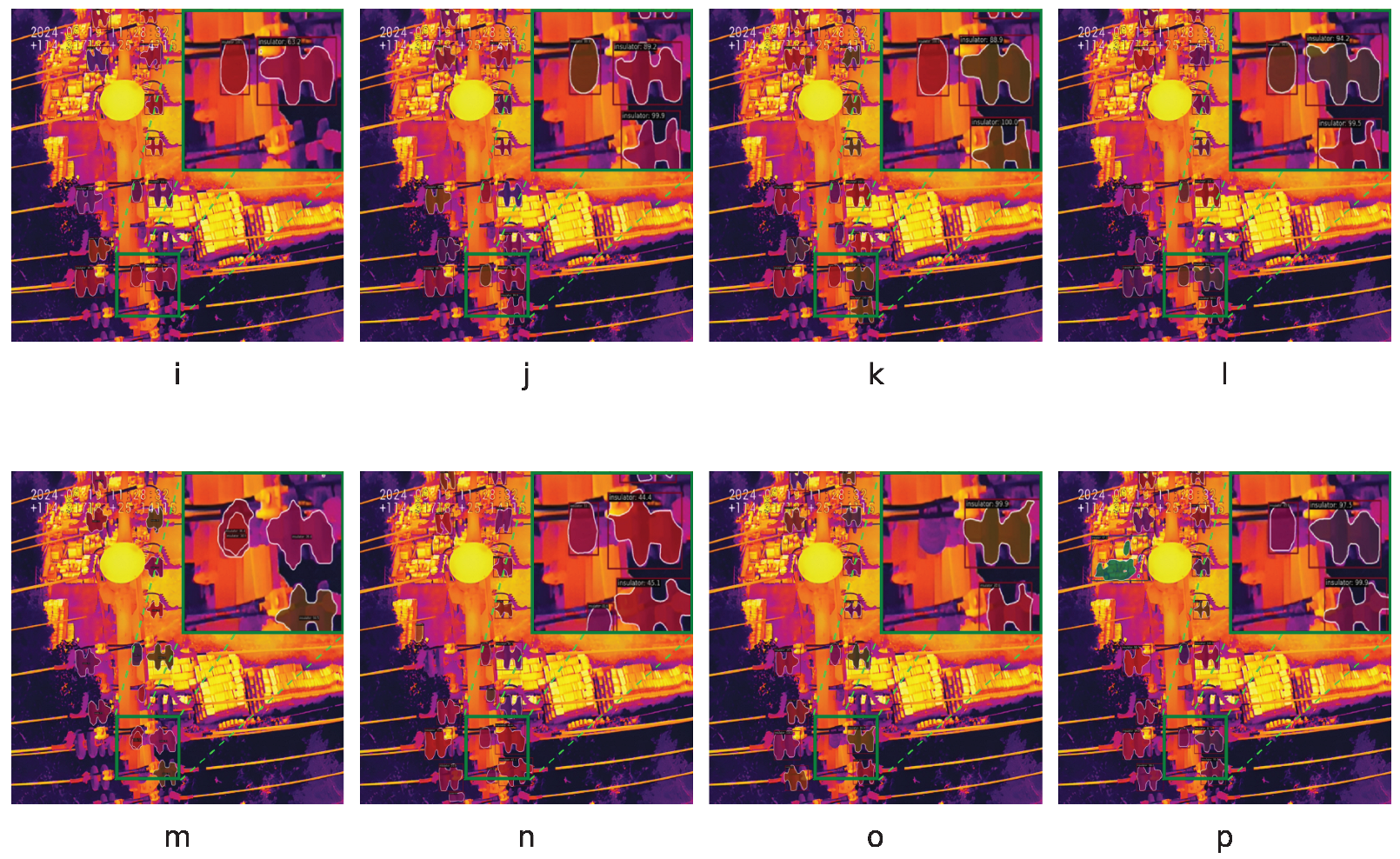

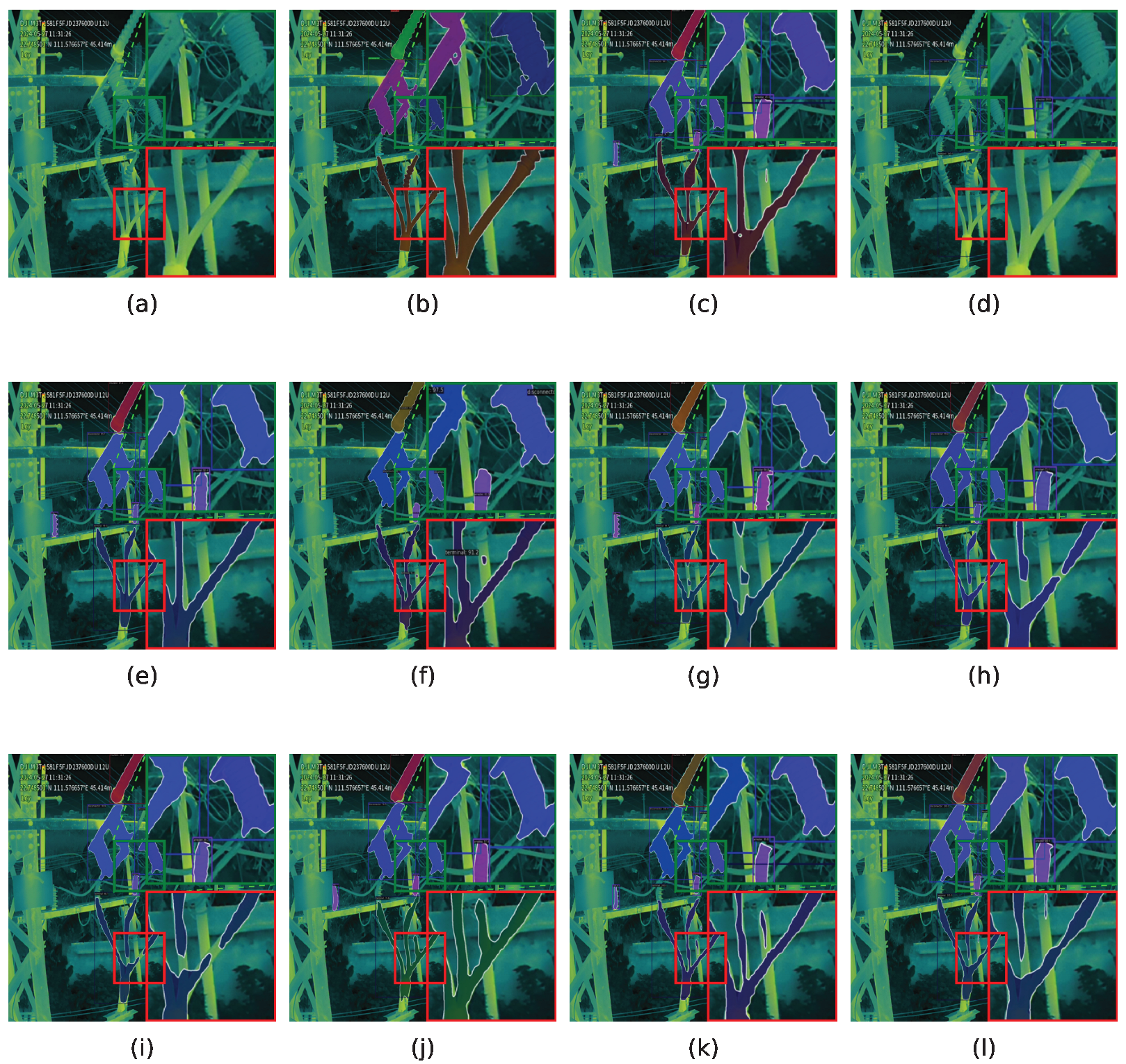

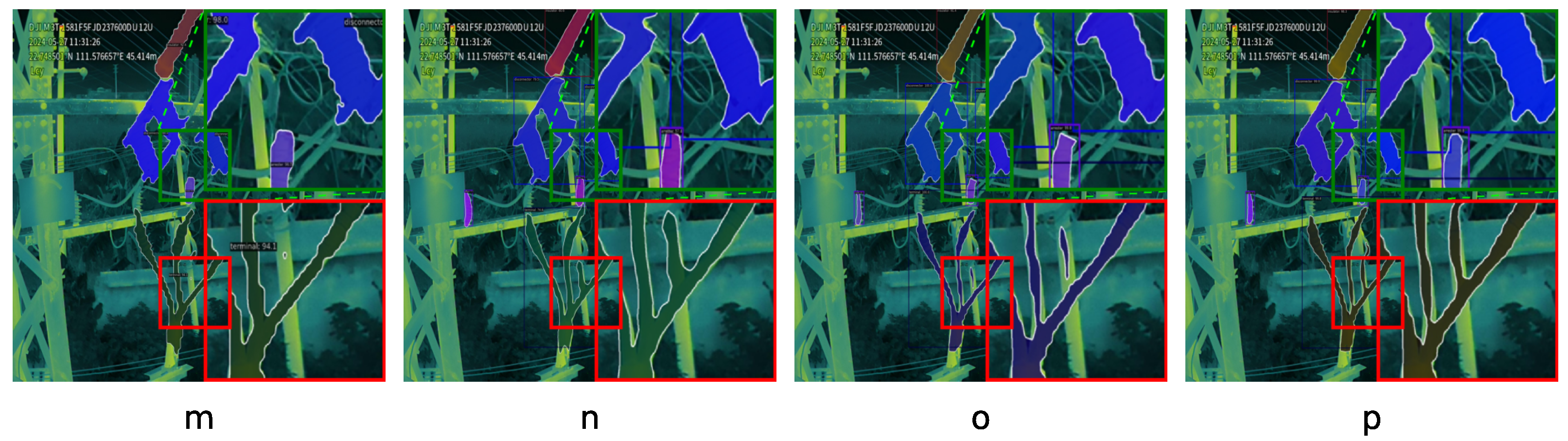

5.4. Qualitative Results

5.5. Quantitative Results

5.6. Extended Experiments

5.6.1. Category Imbalance

5.6.2. Scale Imbalance

5.6.3. Power Transmission Inspection

6. Discussion

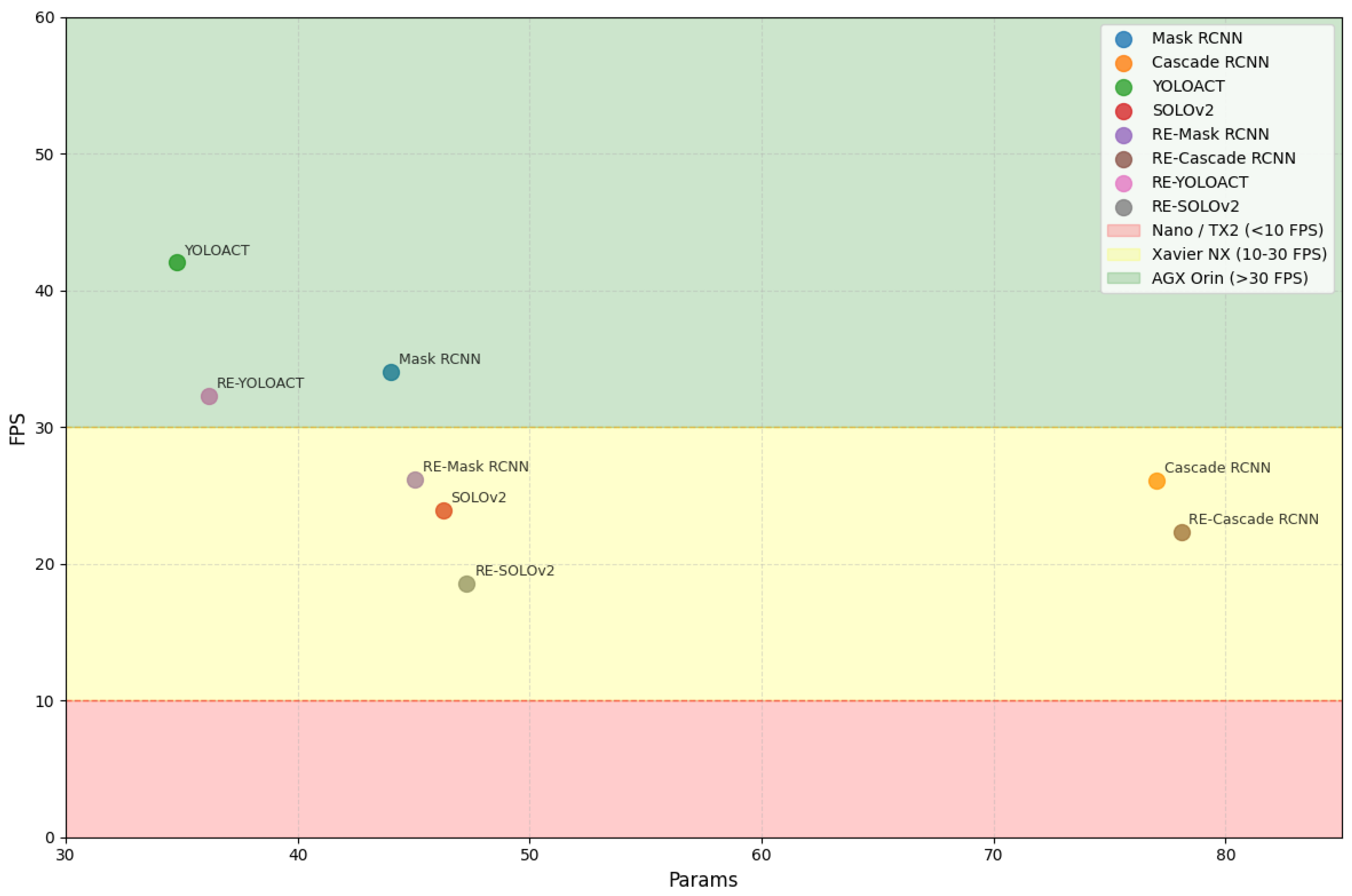

6.1. Framework Complexity Analysis

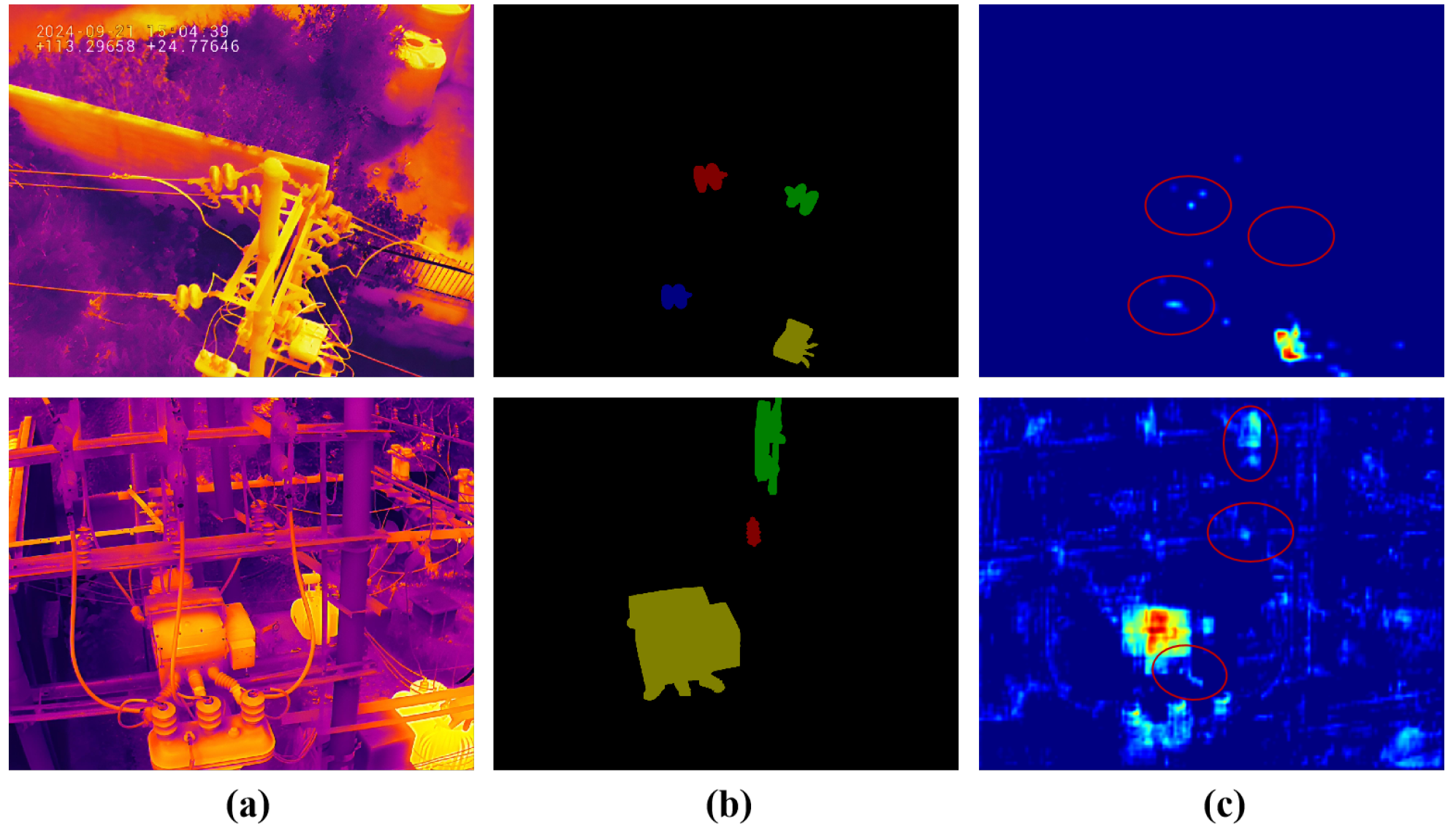

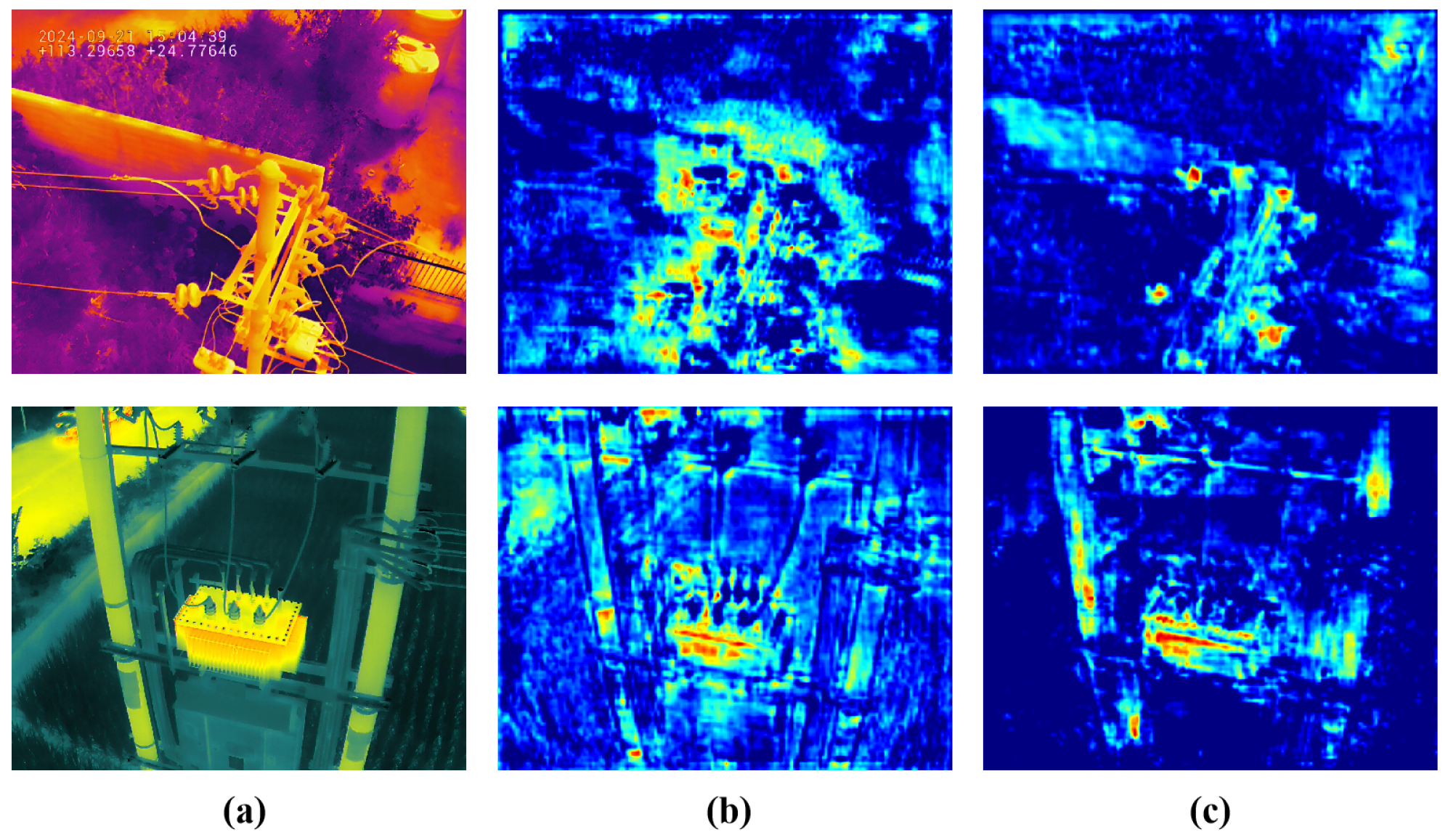

6.2. Visualization of the ORD and DFE Module

6.3. Application Discussion

- High-Accuracy Preventive Maintenance Analytics: Designed for offline processing of UAV-captured images, this scenario prioritizes maximum accuracy over computational cost. Accurate instance segmentation of all power distribution equipment serves as the foundation for subsequent automated temperature diagnosis, supporting reliable preventive maintenance.

- Real-Time Onboard Screening: Although the current framework emphasizes accuracy, its model-agnostic nature allows integration with lightweight base models (e.g., YOLACT [21]). Future work will focus on pruning and quantization for deployment on embedded platforms (e.g., NVIDIA Jetson), enabling UAVs to perform real-time preliminary screening and generate immediate alerts for overheating. As shown in Table 7, the current models are unoptimized, which leaves additional headroom for low-power real-time deployment.

7. Conclusions

- Multi-modal fusion: Infrared images often exhibit low contrast, limiting object discrimination. Fusing complementary information from visible images, which provide rich spatial textures and fine-grained details, can significantly improve detection and localization accuracy, particularly in complex scenarios.

- Semi-supervised and self-supervised learning: Annotating instance-level masks in infrared images is costly, restricting large-scale labeled datasets. Semi-supervised or self-supervised learning can exploit abundant unlabeled data, serving as effective pre-training strategies to learn generalizable and discriminative features while reducing reliance on labeled samples.

- Resource-efficient deployment: UAV-based applications require efficient processing under strict resource constraints. Future work will explore model compression and acceleration techniques, including pruning, quantization, knowledge distillation, and lightweight architectures, to enable real-world deployment while maintaining performance in dynamic aerial environments.

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Yin, Y.; Duan, Y.; Wang, X.; Han, S.; Zhou, C. YOLOv5s-TC: An improved intelligent model for insulator fault detection based on YOLOv5s. Sensors 2025, 25, 4893.

2. Ma, J.; Qian, K.; Zhang, X.; Ma, X. Weakly supervised instance segmentation of electrical equipment based on RGB-T automatic annotation. IEEE Trans. Instrum. Meas. 2020, 69, 9720–9731.

3. Wang, F.; Guo, Y.; Li, C.; Lu, A.; Ding, Z.; Tang, J.; Luo, B. Electrical thermal image semantic segmentation: Large-scale dataset and baseline. IEEE Trans. Instrum. Meas. 2022, 71, 1–13.

4. Xu, C.; Li, Q.; Jiang, X.; Yu, D.; Zhou, Y. Dual-space graph-based interaction network for RGB-thermal semantic segmentation in electric power scene. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1577–1592.

5. Cui, Y.; Lei, T.; Chen, G.; Zhang, Y.; Zhang, G.; Hao, X. Infrared small target detection via modified fast saliency and weighted guided image filtering. Sensors 2025, 25, 4405.

6. Zhao, L.; Ke, C.; Jia, Y.; Xu, C.; Teng, Z. Infrared and visible image fusion via residual interactive transformer and cross-attention fusion. Sensors 2025, 25, 4307.

7. Shi, W.; Lyu, X.; Han, L. SONet: A small object detection network for power line inspection based on YOLOv8. IEEE Trans. Power Deliv. 2024, 39, 2973–2984.

8. Li, D.; Sun, Y.; Zheng, Z.; Zhang, F.; Sun, B.; Yuan, C. A real-world large-scale infrared image dataset and multitask learning framework for power line surveillance. IEEE Trans. Instrum. Meas. 2025, 74, 1–14.

9. Huang, Y.; Zhang, Y.; He, Z.; Deng, Y. Video instance segmentation through hierarchical offset compensation and temporal memory update for UAV aerial images. Sensors 2025, 25, 4274.

10. Du, C.; Liu, P.X.; Song, X.; Zheng, M.; Wang, C. A two-pipeline instance segmentation network via boundary enhancement for scene understanding. IEEE Trans. Instrum. Meas. 2024, 73, 1–13.

11. Yang, L.; Wang, S.; Teng, S. Panoptic image segmentation method based on dynamic instance query. Sensors 2025, 25, 2919.

12. Brar, K.K.; Goyal, B.; Dogra, A.; Mustafa, M.A.; Majumdar, R.; Alkhayyat, A.; Kukreja, V. Image segmentation review: Theoretical background and recent advances. Inf. Fusion 2025, 114, 102608.

13. He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

14. Zhou, J.; Liu, G.; Gu, Y.; Wen, Y.; Chen, S. A box-supervised instance segmentation method for insulator infrared images based on shuffle polarized self-attention. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

15. Wang, L.; Li, D.; Zhu, Y.; Tian, L.; Shan, Y. Dual super-resolution learning for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

16. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

17. Stergiou, A.; Poppe, R.; Kalliatakis, G. Refining activation downsampling with SoftPool. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10357–10366.

18. Gao, X.; Dai, W.; Li, C.; Xiong, H.; Frossard, P. iPool: Information-based pooling in hierarchical graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 5032–5044.

19. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1483–1498.

20. Wang, X.; Zhang, R.; Kong, T.; Li, L.; Shen, C. SOLOv2: Dynamic and fast instance segmentation. Adv. Neural Inf. Process. Syst. 2020, 33, 17721–17732.

21. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time instance segmentation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

22. Zhao, Z.; Feng, S.; Zhai, Y.; Zhao, W.; Li, G. Infrared thermal image instance segmentation method for power substation equipment based on visual feature reasoning. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

23. Li, Y.; Liu, M.; Li, Z.; Jiang, X. CSSAdet: Real-time end-to-end small object detection for power transmission line inspection. IEEE Trans. Power Deliv. 2023, 38, 4432–4442.

24. Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

25. Alexandridis, K.P.; Deng, J.; Nguyen, A.; Luo, S. Long-tailed instance segmentation using Gumbel optimized loss. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 353–369.

26. Liu, D.; Zhang, J.; Qi, Y.; Wu, Y.; Zhang, Y. Tiny object detection in remote sensing images based on object reconstruction and multiple receptive field adaptive feature enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

27. Cui, B.; Han, C.; Yang, M.; Ding, L.; Shuang, F. DINS: A diverse insulator dataset for object detection and instance segmentation. IEEE Trans. Ind. Informat. 2024, 20, 12252–12261.

28. Wang, B.; Dong, M.; Ren, M.; Wu, Z.; Guo, C.; Zhuang, T.; Pischler, O.; Xie, J. Automatic fault diagnosis of infrared insulator images based on image instance segmentation and temperature analysis. IEEE Trans. Instrum. Meas. 2020, 69, 5345–5355.

29. Choi, H.; Yun, J.P.; Kim, B.J.; Jang, H.; Kim, S.W. Attention-based multimodal image feature fusion module for transmission line detection. IEEE Trans. Ind. Informat. 2022, 18, 7686–7695.

30. Zheng, H.; Liu, Y.; Sun, Y.; Li, J.; Shi, Z.; Zhang, C.; Lai, C.S.; Lai, L.L. Arbitrary-oriented detection of insulators in thermal imagery via rotation region network. IEEE Trans. Ind. Informat. 2022, 18, 5242–5252.

31. Ou, J.; Wang, J.; Xue, J.; Wang, J.; Zhou, X.; She, L.; Fan, Y. Infrared image target detection of substation electrical equipment using an improved Faster R-CNN. IEEE Trans. Power Deliv. 2023, 38, 387–396.

32. Li, J.; Xu, Y.; Nie, K.; Cao, B.; Zuo, S.; Zhu, J. PEDNet: A lightweight detection network of power equipment in infrared image based on YOLOv4-tiny. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

33. Zhao, Z.; Feng, S.; Ma, D.; Zhai, Y.; Zhao, W.; Li, B. A weakly supervised instance segmentation approach for insulator thermal images incorporating sparse prior knowledge. IEEE Trans. Power Deliv. 2024, 39, 2693–2703.

34. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299.

35. Vu, T.; Kang, H.; Yoo, C.D. SCNet: Training inference sample consistency for instance segmentation. Proc. of the AAAI Conf. Artif. Intell. 2021, 35, 2701–2709.

36. Qiao, S.; Chen, L.-C.; Yuille, A. DetectoRS: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 10213–10224.

37. Fang, Y.; Yang, S.; Wang, X.; Li, Y.; Fang, C.; Shan, Y.; Feng, B.; Liu, W. Instances as queries. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6910–6919.

38. Lyu, C.; Zhang, W.; Huang, H.; Zhou, Y.; Wang, Y.; Liu, Y.; Zhang, S.; Chen, K. RTMDet: An empirical study of designing real-time object detectors. arXiv 2022, arXiv:2212.07784.

39. Li, W.; Liu, W.; Zhu, J.; Cui, M.; Yu, R.; Hua, X.; Zhang, L. Box2Mask: Box-supervised instance segmentation via level-set evolution. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5157–5173.

40. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

| Dataset | Images | Instances | Categories | Power Industry | Annotation Level | Scenario Level | Type | Year |
|---|---|---|---|---|---|---|---|---|
| Wang et al. [28] | 1200 | - | 1 | substation | instance | multiple | UAV | 2020 |
| Ma et al. [2] | 1626 | - | 4 | distribution | instance | multiple | handheld | 2020 |
| Choi et al. [29] | 420 | - | 1 | transmission | pixel | multiple | UAV | 2022 |
| Wang et al. [3] | 4839 | 14,005 | 17 | substation | pixel | single | handheld | 2022 |
| Zhao et al. [22] | 400 | 563 | 4 | substation | instance | single | UAV | 2022 |
| Zheng et al. [30] | 2760 | 8410 | 1 | substation | object | multiple | handheld | 2022 |
| Zhou et al. [14] | 2540 | - | 1 | transmission | instance | single | handheld | 2023 |
| Ou et al. [31] | 1263 | 245 | 5 | substation | object | single | UAV | 2023 |
| Li et al. [32] | 1861 | - | 5 | substation | object | multiple | UAV | 2023 |
| Xu et al. [4] | 600 | - | 4 | transmission | pixel | multiple | UAV | 2023 |
| Xu et al. [4] | 400 | - | 4 | substation | pixel | multiple | handheld | 2023 |
| Zhao et al. [33] | 874 | 1854 | 1 | substation | instance | multiple | UAV | 2024 |
| Li et al. [8] | 11,415 | 45,145 | 2 | transmission | instance | multiple | UAV | 2025 |
| PDI (ours) | 16,596 | 126,570 | 7 | distribution | instance | various | UAV | 2025 |

| ORD | DFE | F (filtration) | Avg |
|---|---|---|---|
| | | | 53.50/46.80 |
| ✓ | | | 54.10/47.30 |
| ✓ | ✓ | | 53.20/47.00 |
| ✓ | ✓ | ✓ | 55.20/48.00 |

| Method | bushing | insulator | disconnector | arrester | breaker | switch | terminal | Avg |
|---|---|---|---|---|---|---|---|---|
| QueryInst [37] | 47.20/47.80 | 65.00/62.30 | 66.90/59.20 | 48.40/50.20 | 59.30/60.20 | 66.50/53.90 | 33.80/15.60 | 55.30/49.90 |
| RTMDet [38] | 51.00/45.10 | 70.00/60.90 | 68.50/56.30 | 49.60/44.90 | 60.70/57.20 | 68.70/48.10 | 33.60/15.80 | 57.40/46.90 |
| SCNet [35] | 53.90/55.80 | 71.00/68.80 | 69.60/64.30 | 53.80/56.30 | 67.00/67.90 | 69.00/59.90 | 44.30/21.60 | 61.20/56.40 |
| DetectoRS [36] | 47.30/- | 65.00/- | 65.40/- | 46.50/- | 69.20/- | 64.40/- | 45.70/- | 57.60/- |
| Mask2Former [34] | 55.10/57.60 | 72.20/70.50 | 69.80/66.00 | 55.60/58.70 | 70.50/70.30 | 71.20/61.50 | 46.90/21.60 | 63.00/58.00 |
| PowerNet [8] | 44.20/45.10 | 62.70/60.10 | 64.60/55.60 | 45.60/47.80 | 59.70/57.80 | 63.90/49.40 | 37.20/8.40 | 54.00/46.30 |
| Mask RCNN [13] | 44.90/45.50 | 61.50/58.90 | 62.90/55.20 | 43.30/45.80 | 63.90/62.10 | 60.40/48.90 | 37.90/11.50 | 53.50/46.80 |
| RE-Mask RCNN | 45.80/46.80 | 62.60/59.90 | 63.80/56.10 | 44.60/46.90 | 65.00/62.70 | 63.30/51.50 | 41.00/11.70 | 55.20/48.00 |
| Cascade RCNN [19] | 46.40/46.30 | 63.80/59.70 | 65.40/55.10 | 46.10/46.80 | 64.00/61.20 | 64.80/49.10 | 38.80/10.50 | 55.60/46.90 |
| RE-Cascade RCNN | 47.30/46.10 | 66.40/61.70 | 67.30/57.10 | 48.60/48.50 | 64.60/61.30 | 65.50/50.50 | 44.00/8.90 | 57.70/47.70 |
| YOLACT [21] | 42.10/35.00 | 56.50/49.90 | 58.90/49.80 | 40.30/36.20 | 58.90/59.70 | 58.10/42.10 | 37.10/13.60 | 50.30/40.90 |
| RE-YOLACT | 44.10/36.60 | 59.30/52.50 | 61.60/52.30 | 43.60/39.50 | 59.70/61.90 | 60.80/45.00 | 38.30/13.60 | 52.50/43.10 |
| SOLOv2 [20] | -/31.30 | -/51.20 | -/55.90 | -/39.00 | -/68.20 | -/46.20 | -/24.10 | -/45.10 |
| RE-SOLOv2 | -/33.60 | -/54.70 | -/58.40 | -/41.70 | -/68.50 | -/49.00 | -/26.20 | -/47.50 |
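
The gain of each RE-guided variant over its baseline can be read off the Avg column with a small helper. The sketch below is illustrative only: the outline does not say what the two numbers in each cell denote, so the code treats them as an opaque (first, second) pair, with `-` meaning "not reported". The mask-only SOLOv2 rows suggest box and mask metrics respectively, but that reading is an assumption. The helper names are hypothetical.

```python
from typing import Optional, Tuple

Pair = Tuple[Optional[float], Optional[float]]


def parse_cell(cell: str) -> Pair:
    """Split an 'a/b' table cell into two floats; '-' becomes None."""
    first, second = (part.strip() for part in cell.split("/"))

    def to_float(text: str) -> Optional[float]:
        return None if text == "-" else float(text)

    return to_float(first), to_float(second)


def gain(baseline: str, enhanced: str) -> Pair:
    """Element-wise difference enhanced - baseline, rounded to two decimals."""
    diffs = []
    for b, e in zip(parse_cell(baseline), parse_cell(enhanced)):
        diffs.append(None if b is None or e is None else round(e - b, 2))
    return diffs[0], diffs[1]


# Avg column, copied from the table: (baseline, RE-guided variant).
avg_column = {
    "Mask RCNN": ("53.50/46.80", "55.20/48.00"),
    "Cascade RCNN": ("55.60/46.90", "57.70/47.70"),
    "SOLOv2": ("-/45.10", "-/47.50"),
}
gains = {name: gain(base, re_variant) for name, (base, re_variant) in avg_column.items()}
```

For these three rows the RE-guided variant improves both reported numbers wherever both are available.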

| Method | bushing | insulator | disconnector | arrester | breaker | switch | terminal | Avg |
|---|---|---|---|---|---|---|---|---|
| Mask RCNN [13] | 44.90/45.50 | 61.50/58.90 | 62.90/55.20 | 43.30/45.80 | 63.90/62.10 | 60.40/48.90 | 37.90/11.50 | 53.50/46.80 |
| RE-Mask RCNN | 45.80/46.80 | 62.60/59.90 | 63.80/56.10 | 44.60/46.90 | 65.00/62.70 | 63.30/51.50 | 41.00/11.70 | 55.20/48.00 |
| Mask RCNN + CBS | 45.20/45.20 | 61.70/59.10 | 63.20/55.40 | 43.80/46.30 | 64.10/62.30 | 60.90/49.60 | 40.50/14.10 | 54.20/47.40 |
| RE-Mask RCNN + CBS | 46.10/47.20 | 62.80/60.10 | 64.20/56.30 | 45.10/47.10 | 65.40/62.90 | 63.70/52.10 | 43.00/13.50 | 55.80/48.40 |
| Mask RCNN + LRW | 45.00/45.70 | 61.70/59.05 | 63.10/55.40 | 43.60/46.10 | 64.00/62.20 | 60.80/49.40 | 39.80/13.20 | 54.00/47.30 |
| RE-Mask RCNN + LRW | 45.80/46.80 | 62.10/60.10 | 64.00/56.30 | 44.90/47.30 | 65.20/62.70 | 63.60/51.90 | 42.40/12.90 | 55.40/48.30 |

| Method | bushing | insulator | disconnector | arrester | breaker | switch | terminal | Avg |
|---|---|---|---|---|---|---|---|---|
| Mask RCNN [13] | 82.70/82.90 | 85.04/84.03 | 87.00/84.98 | 75.24/74.22 | 92.40/92.08 | 84.42/84.12 | 73.77/77.42 | 82.93/82.82 |
| Cascade RCNN [19] | 85.60/80.90 | 84.70/83.30 | 87.20/- | 79.90/73.00 | 90.80/92.80 | 84.90/84.40 | 72.90/78.90 | 83.70/81.80 |
| YOLACT [21] | 80.20/81.40 | 83.90/83.20 | 87.40/- | 72.20/72.90 | 90.80/91.80 | 86.80/85.40 | 76.50/78.50 | 82.60/82.60 |
| SOLOv2 [20] | 80.20/81.40 | 83.90/83.20 | 87.40/85.10 | 72.20/72.90 | 90.80/91.80 | 86.80/85.40 | 76.50/78.50 | 82.60/82.60 |
| RE-Mask RCNN | 82.90/82.92 | 85.65/84.05 | 86.72/84.95 | 76.55/74.55 | 92.84/92.63 | 87.08/85.32 | 77.05/79.32 | 84.33/82.52 |
| RE-Cascade RCNN | 85.30/80.90 | 89.40/81.60 | 88.60/84.30 | 80.40/72.40 | 92.40/91.80 | 89.70/84.40 | 79.00/76.60 | 86.40/81.70 |
| RE-YOLACT | 81.60/82.60 | 86.10/84.30 | 89.20/85.60 | 76.60/74.40 | 91.90/92.10 | 87.20/85.60 | 75.30/79.20 | 84.00/83.40 |
| RE-SOLOv2 | 81.60/82.60 | 86.10/84.30 | 89.20/85.60 | 76.60/74.40 | 91.90/92.10 | 87.20/85.60 | 75.30/79.20 | 84.00/83.40 |

| Method | CI | SusC | CC | StrC | GI | PGC | TC | Avg |
|---|---|---|---|---|---|---|---|---|
| QueryInst [37] | 65.20/37.90 | 52.50/49.80 | 19.20/24.70 | 48.40/30.80 | 68.60/53.60 | 28.30/35.70 | 70.20/53.80 | 50.40/40.90 |
| RTMDet [38] | 66.00/42.20 | 58.70/50.00 | 20.10/20.30 | 57.90/24.10 | 73.20/57.20 | 30.00/27.40 | 75.80/48.00 | 54.50/38.50 |
| SCNet [35] | 68.60/42.70 | 60.30/59.80 | 23.30/28.00 | 58.40/40.80 | 73.60/60.80 | 34.80/42.70 | 78.20/62.30 | 56.80/48.20 |
| DetectoRS [36] | 61.50/- | 53.80/- | 16.10/- | 49.10/- | 66.00/- | 25.50/- | 72.50/- | 49.20/- |
| Mask2Former [34] | 70.40/43.50 | 61.80/62.20 | 27.40/33.10 | 58.70/40.20 | 74.10/60.30 | 36.60/45.00 | 78.90/62.70 | 58.30/49.60 |
| PowerNet [8] | 63.70/37.30 | 52.00/50.80 | 19.90/23.30 | 50.50/31.20 | 69.00/54.40 | 28.10/35.10 | 72.30/54.50 | 50.80/40.90 |
| Mask RCNN [13] | 61.00/34.80 | 49.90/49.10 | 18.90/22.60 | 49.00/30.20 | 66.50/52.30 | 26.60/33.60 | 70.60/53.50 | 48.90/39.50 |
| RE-Mask RCNN | 63.50/37.00 | 53.00/51.60 | 20.20/24.70 | 52.10/31.70 | 70.10/55.20 | 29.40/36.10 | 73.60/54.90 | 51.70/41.60 |
| Cascade RCNN [19] | 63.20/35.90 | 52.80/49.80 | 16.20/20.30 | 49.90/30.30 | 67.90/52.50 | 25.50/33.00 | 72.20/52.80 | 49.60/39.20 |
| RE-Cascade RCNN | 64.70/36.80 | 53.30/50.90 | 20.20/24.40 | 52.20/30.80 | 70.60/54.70 | 28.90/36.70 | 74.20/54.80 | 52.00/41.30 |
| YOLACT [21] | 48.60/35.20 | 48.40/41.40 | 15.10/16.40 | 41.70/9.80 | 57.60/48.60 | 25.90/22.10 | 65.80/40.40 | 43.30/30.60 |
| RE-YOLACT | 51.10/37.50 | 49.30/42.10 | 17.30/17.40 | 43.70/10.40 | 58.70/49.30 | 25.70/22.50 | 66.40/40.60 | 44.60/31.40 |
| SOLOv2 [20] | -/47.20 | -/44.20 | -/16.60 | -/24.00 | -/59.40 | -/24.10 | -/50.20 | -/38.00 |
| RE-SOLOv2 | -/48.10 | -/46.10 | -/20.10 | -/29.20 | -/61.20 | -/26.70 | -/52.40 | -/40.70 |

| Method | ART/ms | Param/M | Avg | FPS |
|---|---|---|---|---|
| Mask RCNN [13] | 29.40 | 44.00 | 53.50/46.80 | 34.01 |
| RE-Mask RCNN | 38.20 | 45.04 | 55.20/48.00 | 26.18 |
| Cascade RCNN [19] | 38.30 | 77.04 | 55.60/46.90 | 26.11 |
| RE-Cascade RCNN | 44.80 | 78.08 | 57.70/47.70 | 22.32 |
| SOLOv2 [20] | 41.80 | 46.26 | -/45.10 | 23.92 |
| RE-SOLOv2 | 53.80 | 47.29 | -/47.50 | 18.59 |
| YOLACT [21] | 23.80 | 34.77 | 50.30/40.90 | 42.02 |
| RE-YOLACT | 31.00 | 36.16 | 52.50/43.10 | 32.26 |
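
The FPS column of this table is consistent with FPS = 1000 / ART when ART is read as a per-image run time in milliseconds. That reading of the ART abbreviation is an assumption, since the outline does not expand it. The sketch below recomputes FPS for four rows and the latency overhead of the RE-guided variants; the function name is hypothetical.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# ART values in milliseconds, copied from the table.
latency_ms = {
    "Mask RCNN": 29.40,
    "RE-Mask RCNN": 38.20,
    "Cascade RCNN": 38.30,
    "RE-Cascade RCNN": 44.80,
}
fps = {name: round(fps_from_latency_ms(ms), 2) for name, ms in latency_ms.items()}

# Extra latency added by the reconstruction-error guidance, per base model.
overhead_ms = {
    base: round(latency_ms["RE-" + base] - latency_ms[base], 1)
    for base in ("Mask RCNN", "Cascade RCNN")
}
```

For these rows the recomputed FPS values match the table, and the added latency is 8.8 ms for Mask RCNN and 6.5 ms for Cascade RCNN.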


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Luo, J.; Sun, Y.; Zhang, J.; Sun, B. Reconstruction Error Guided Instance Segmentation for Infrared Inspection of Power Distribution Equipment. Sensors 2025, 25, 6007. https://doi.org/10.3390/s25196007