Distributed Prescribed Performance Formation Tracking for Unknown Euler–Lagrange Systems Under Input Saturation

Abstract

1. Introduction

- We propose a new robust, approximation-free distributed PPC strategy, where each agent leverages only local distances from its neighbors to achieve formation tracking around a leader trajectory.

- A distributed virtual velocity reference modification mechanism is developed that lets each agent adjust its virtual velocity reference dynamically in response to input saturation, preserving internal stability and feasibility.

- Analytical lower bounds for the input saturation thresholds are derived, ensuring feasibility of the proposed control law within prescribed performance constraints.

- A 3D simulation scenario for a group of unmanned underwater vehicles is provided to demonstrate the effectiveness of the distributed formation tracking control design.

2. Preliminaries

Graph Theory
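To illustrate how an agent might build a formation error from neighbor information alone, the following minimal sketch assumes a weighted adjacency matrix, a pinning gain that marks which agents receive the leader position, and constant desired offsets from the leader. The function name `neighborhood_error` and all numerical values are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def neighborhood_error(
    i: int,
    positions: Sequence[Sequence[float]],
    leader: Sequence[float],
    adjacency: Sequence[Sequence[float]],
    pinning: Sequence[float],
    offsets: Sequence[Sequence[float]],
) -> List[float]:
    """Hypothetical helper: formation error of agent i from neighbor data only."""
    # Offset-compensated position of agent i (equals the leader position in formation).
    xi = [p - c for p, c in zip(positions[i], offsets[i])]
    err = [0.0] * len(xi)
    for j, a_ij in enumerate(adjacency[i]):
        if j == i or a_ij == 0.0:
            continue  # agent j is not a neighbor of agent i
        xj = [p - c for p, c in zip(positions[j], offsets[j])]
        err = [e + a_ij * (a - b) for e, a, b in zip(err, xi, xj)]
    if pinning[i] > 0.0:
        # Only pinned agents have access to the leader trajectory.
        err = [e + pinning[i] * (a - l) for e, a, l in zip(err, xi, leader)]
    return err


# Assumed example: path graph 0 - 1 - 2, with agent 0 pinned to the leader.
adjacency = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
pinning = [1.0, 0.0, 0.0]
offsets = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0)]
leader = (5.0, 5.0, -2.0)
# Agents 0 and 2 sit on their formation slots; agent 1 is displaced by 0.5 along x.
positions = [(6.0, 5.0, -2.0), (5.5, 6.0, -2.0), (4.0, 5.0, -2.0)]

errors = [
    neighborhood_error(i, positions, leader, adjacency, pinning, offsets)
    for i in range(3)
]
```

In this assumed setup every error vanishes exactly when each agent sits at its offset from the leader, so a single displaced agent shows up in its own error and in the errors of its neighbors.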

3. Problem Formulation

3.1. System Dynamics and Standing Assumptions

3.2. Problem Definition

1. Design a distributed mechanism that appropriately modifies the local virtual velocity error of each agent whenever its input becomes saturated.

2. Design a distributed control protocol that ensures that the modified formation tracking error adheres to the prescribed specifications.

3. The modification and control mechanism should be continuous and of low computational effort, i.e., no approximation structures, such as neural networks or fuzzy approximators, should be used.

4. Provide conditions for the saturation level of each agent that make the prescribed performance specifications feasible.
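The two ingredients of these objectives, a bounded input and a prescribed performance envelope, can be made concrete with a short sketch. It assumes a symmetric saturation level and the exponentially decaying performance function commonly used in the prescribed performance control literature; the paper's exact functions are not reproduced in this text, and the names `saturate`, `performance_bound` and `within_funnel` are hypothetical.

```python
import math


def saturate(u: float, u_max: float) -> float:
    """Clip a commanded input to the symmetric range [-u_max, u_max]."""
    return max(-u_max, min(u_max, u))


def performance_bound(t: float, rho0: float, rho_inf: float, decay: float) -> float:
    """Assumed exponential envelope: starts at rho0 and decays to rho_inf."""
    return (rho0 - rho_inf) * math.exp(-decay * t) + rho_inf


def within_funnel(error: float, t: float, rho0: float, rho_inf: float, decay: float) -> bool:
    """True if the error lies strictly inside the envelope at time t."""
    return abs(error) < performance_bound(t, rho0, rho_inf, decay)


# Illustrative values only: an error of 0.5 is admissible early on but not
# later, once the envelope has shrunk toward its steady-state width of 0.1.
early_ok = within_funnel(0.5, 0.0, rho0=2.0, rho_inf=0.1, decay=1.0)
late_ok = within_funnel(0.5, 5.0, rho0=2.0, rho_inf=0.1, decay=1.0)
```

The sketch also hints at why a feasibility condition on the saturation level is needed: the envelope keeps shrinking regardless of how much control authority is left after clipping.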

4. Results

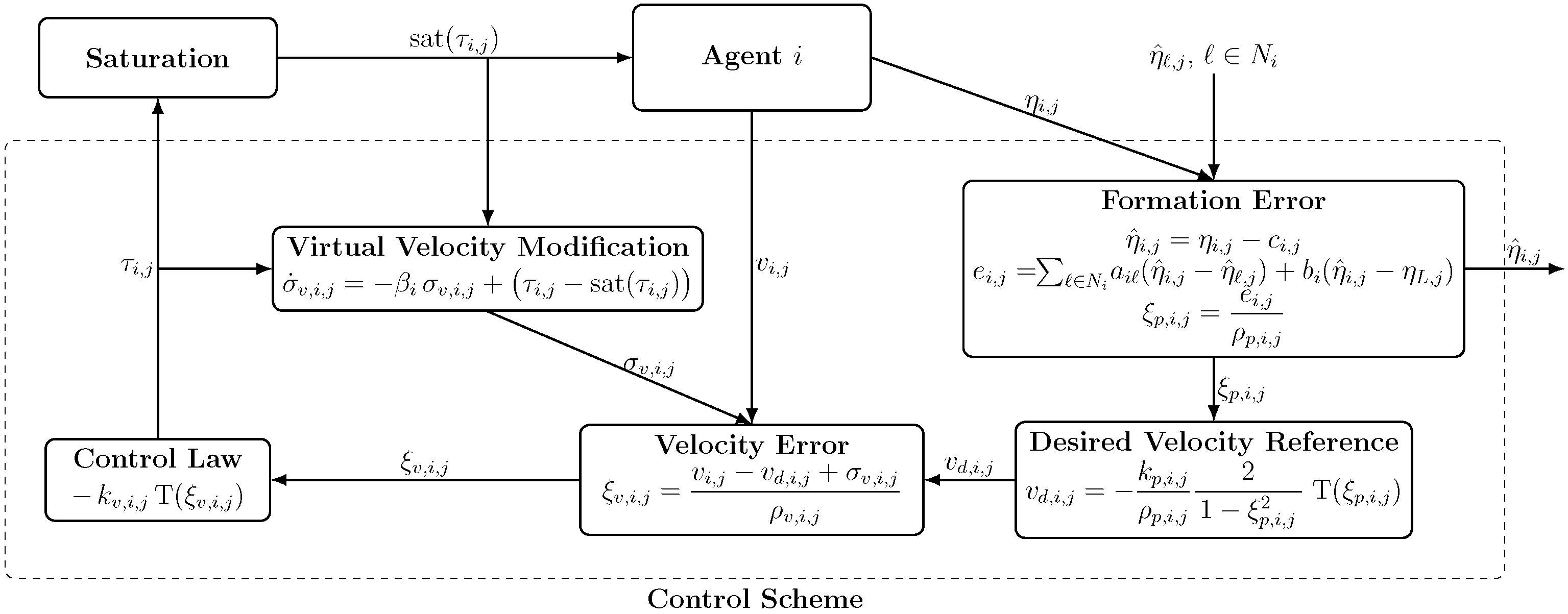

4.1. Distributed Virtual Velocity Reference Modification Mechanism

4.2. Control Design

- Step 1: Define the normalized formation error and virtual velocity:

- Step 2: Define the normalized velocity error and the control law:
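The expressions for the two steps are not reproduced in this text. As a rough illustration of the general two-step, approximation-free structure, the sketch below applies it to a scalar unit-mass double integrator, which is an assumption made here for simplicity. It omits input saturation and the reference modification mechanism, and the gains, performance parameters and function names are hypothetical.

```python
import math


def rho(t: float, rho0: float, rho_inf: float, decay: float) -> float:
    """Assumed exponentially decaying performance function."""
    return (rho0 - rho_inf) * math.exp(-decay * t) + rho_inf


def transform(xi: float) -> float:
    """Map a normalized error in (-1, 1) onto the real line; fails outside it."""
    return math.log((1.0 + xi) / (1.0 - xi))


def ppc_control(e: float, v: float, t: float, k1: float = 1.0, k2: float = 10.0) -> float:
    # Step 1: normalized position error and virtual velocity reference.
    xi1 = e / rho(t, 2.0, 0.1, 1.0)
    v_ref = -k1 * transform(xi1)
    # Step 2: normalized velocity error and control input.
    xi2 = (v - v_ref) / rho(t, 5.0, 1.0, 1.0)
    return -k2 * transform(xi2)


def simulate(e0: float = 1.0, v0: float = 0.0, dt: float = 1e-3, t_end: float = 5.0):
    """Forward-Euler simulation of e' = v, v' = u under the sketch controller."""
    e, v, history = e0, v0, []
    for step in range(int(round(t_end / dt))):
        t = step * dt
        history.append((t, e, rho(t, 2.0, 0.1, 1.0)))
        u = ppc_control(e, v, t)
        e, v = e + dt * v, v + dt * u
    return history


history = simulate()
```

Each entry of `history` holds the time, the position error and the performance bound at that instant, so the error can be checked against its envelope sample by sample. No model knowledge beyond the measured states enters `ppc_control`, which is the sense in which such schemes are called approximation-free.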

4.3. Stability Analysis

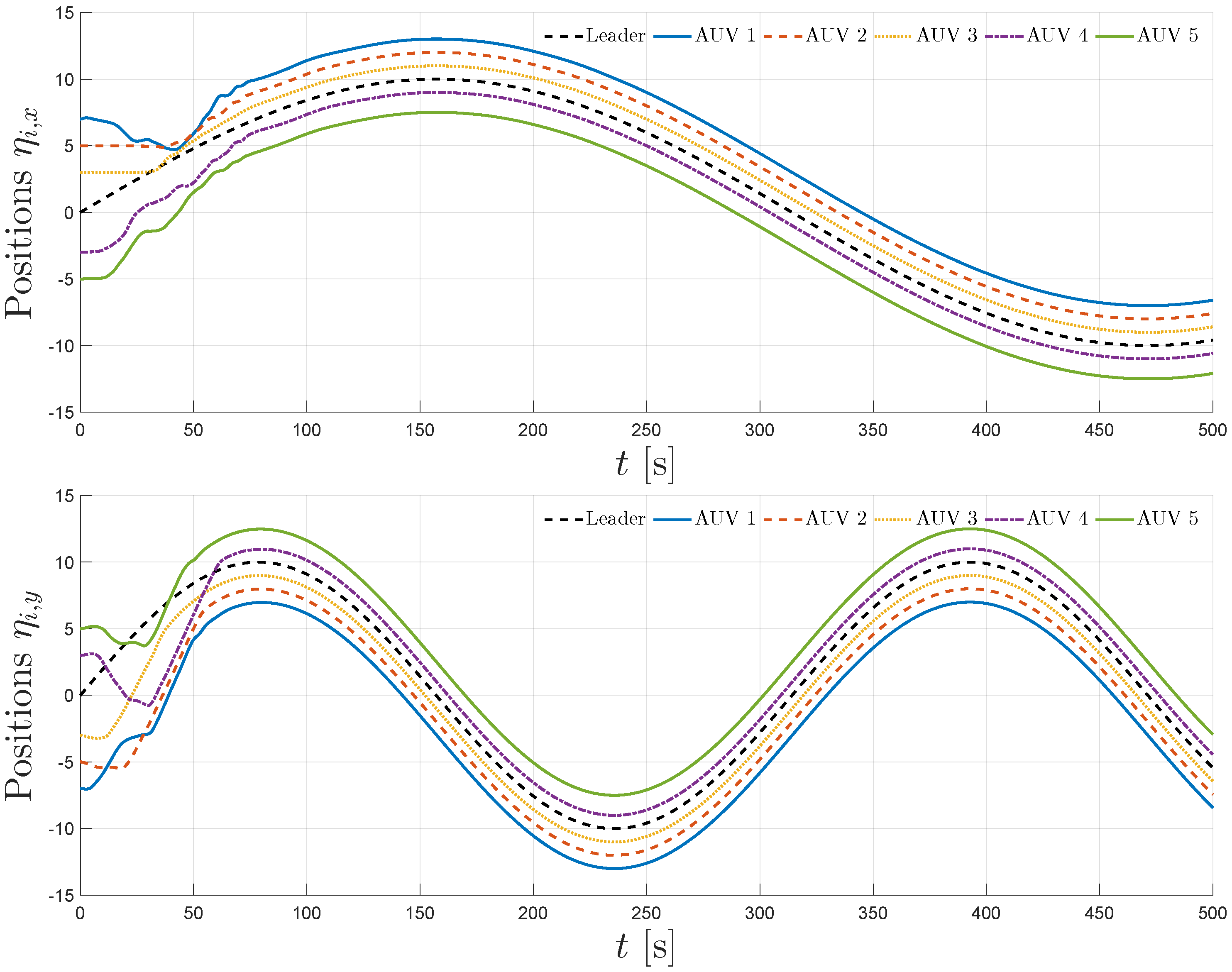

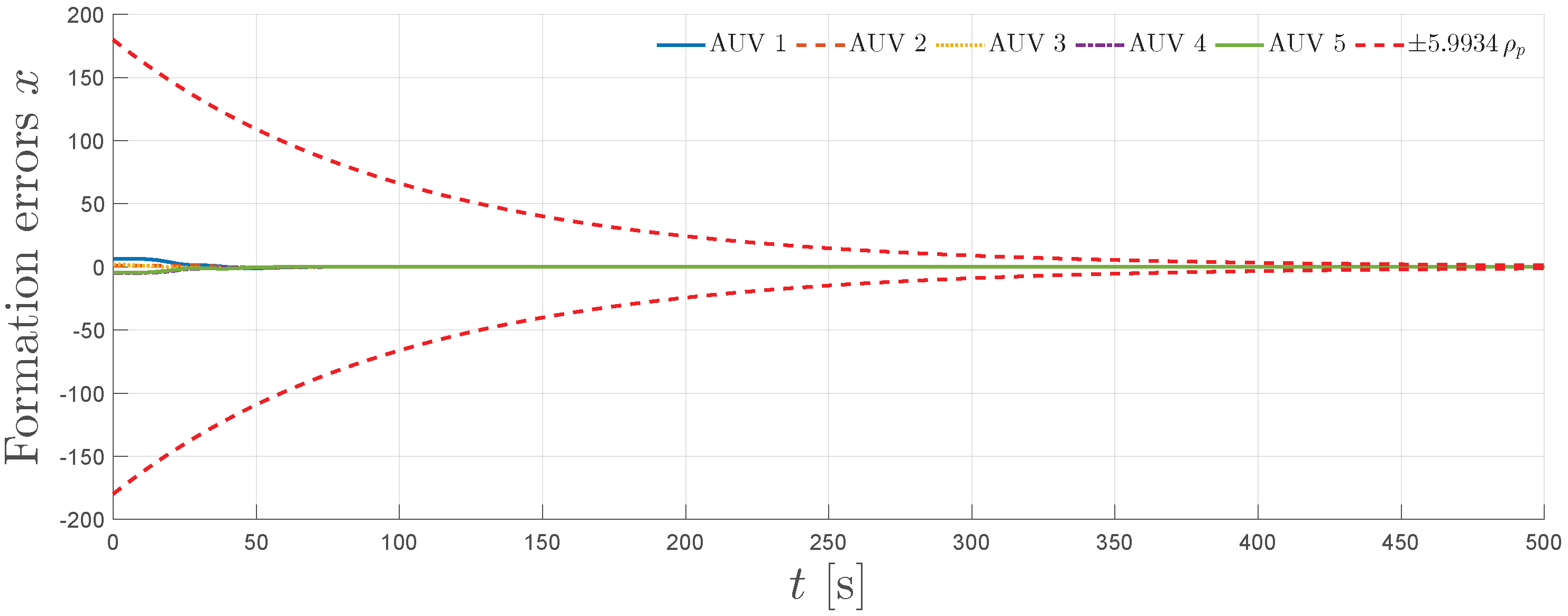

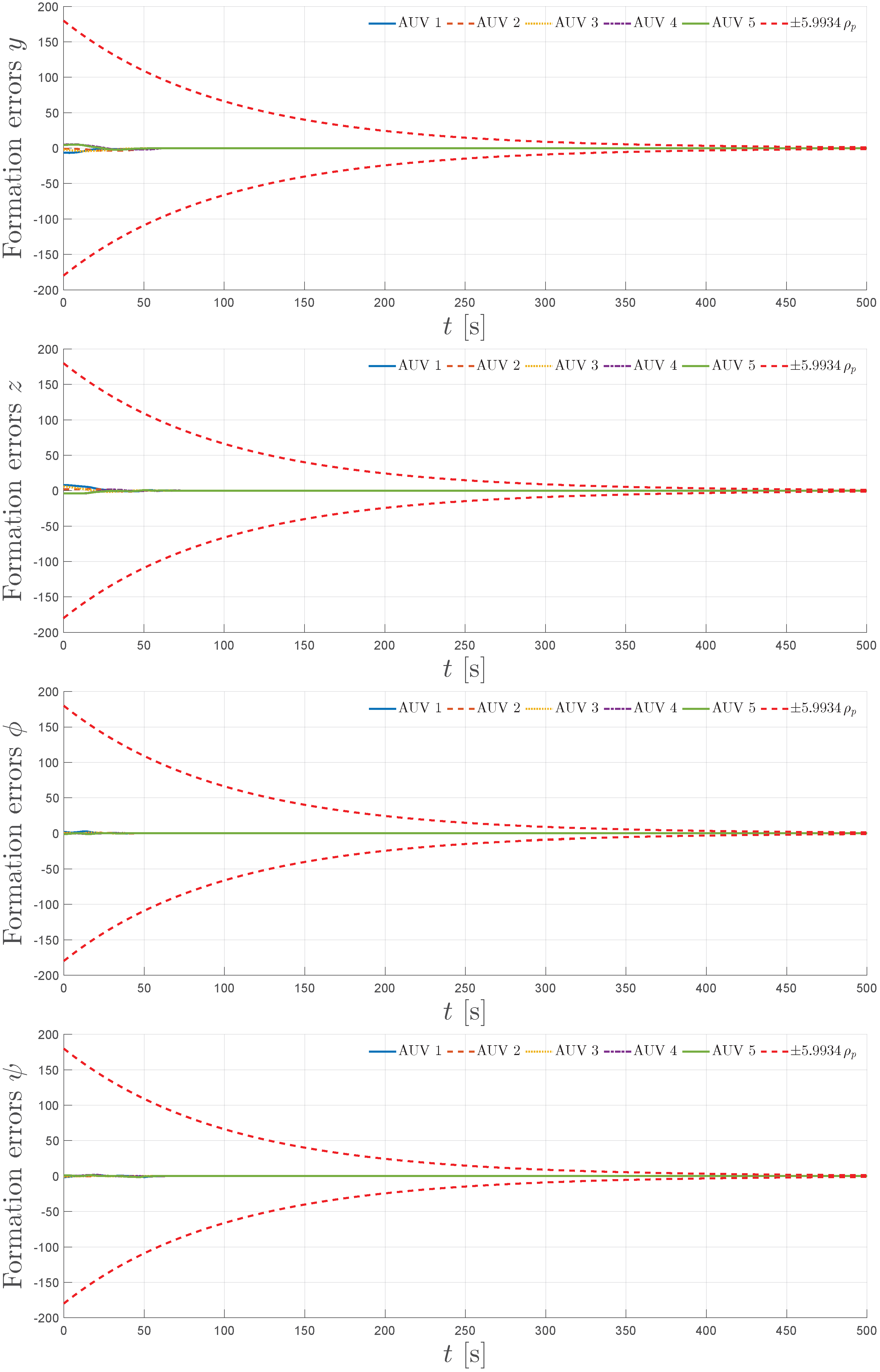

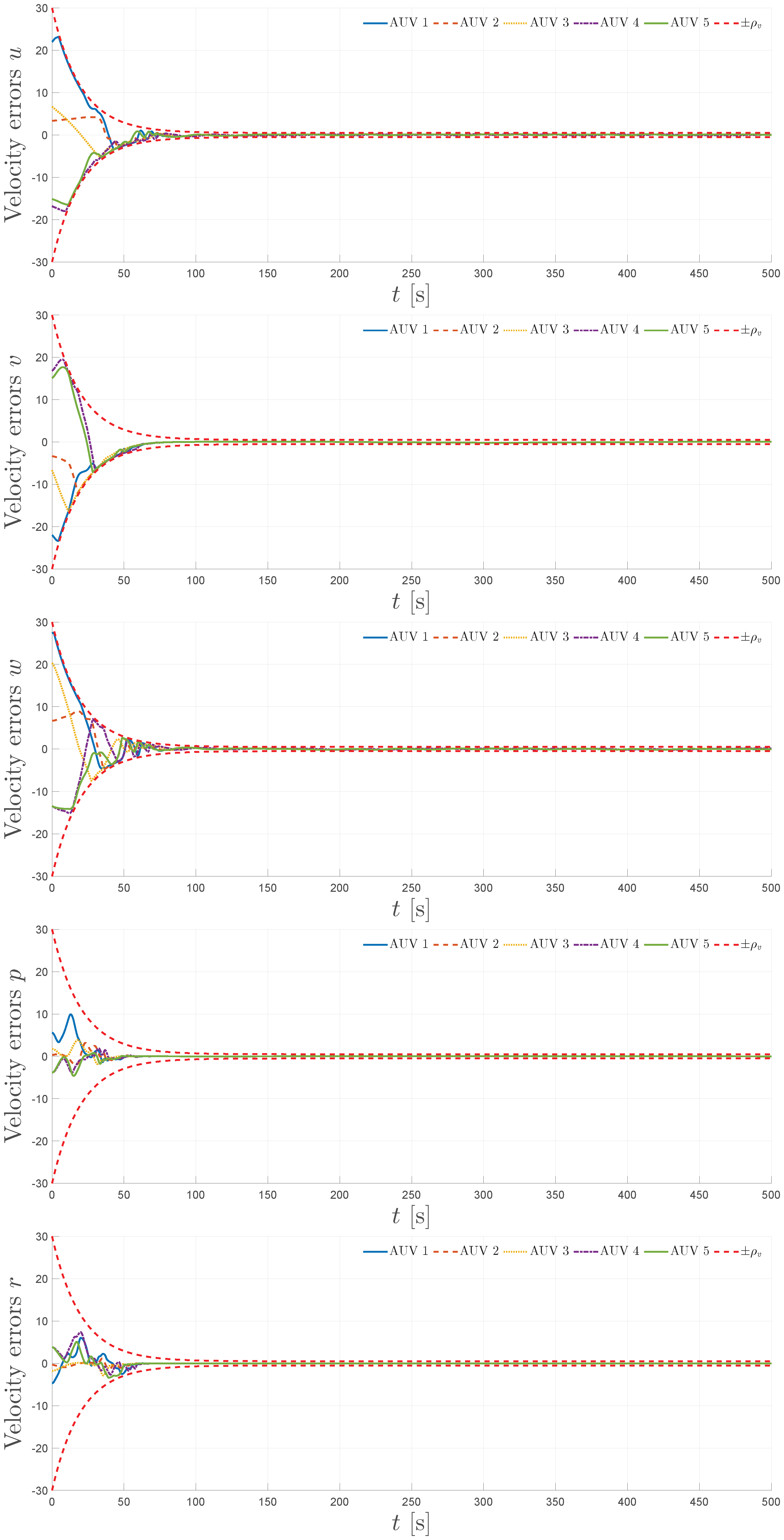

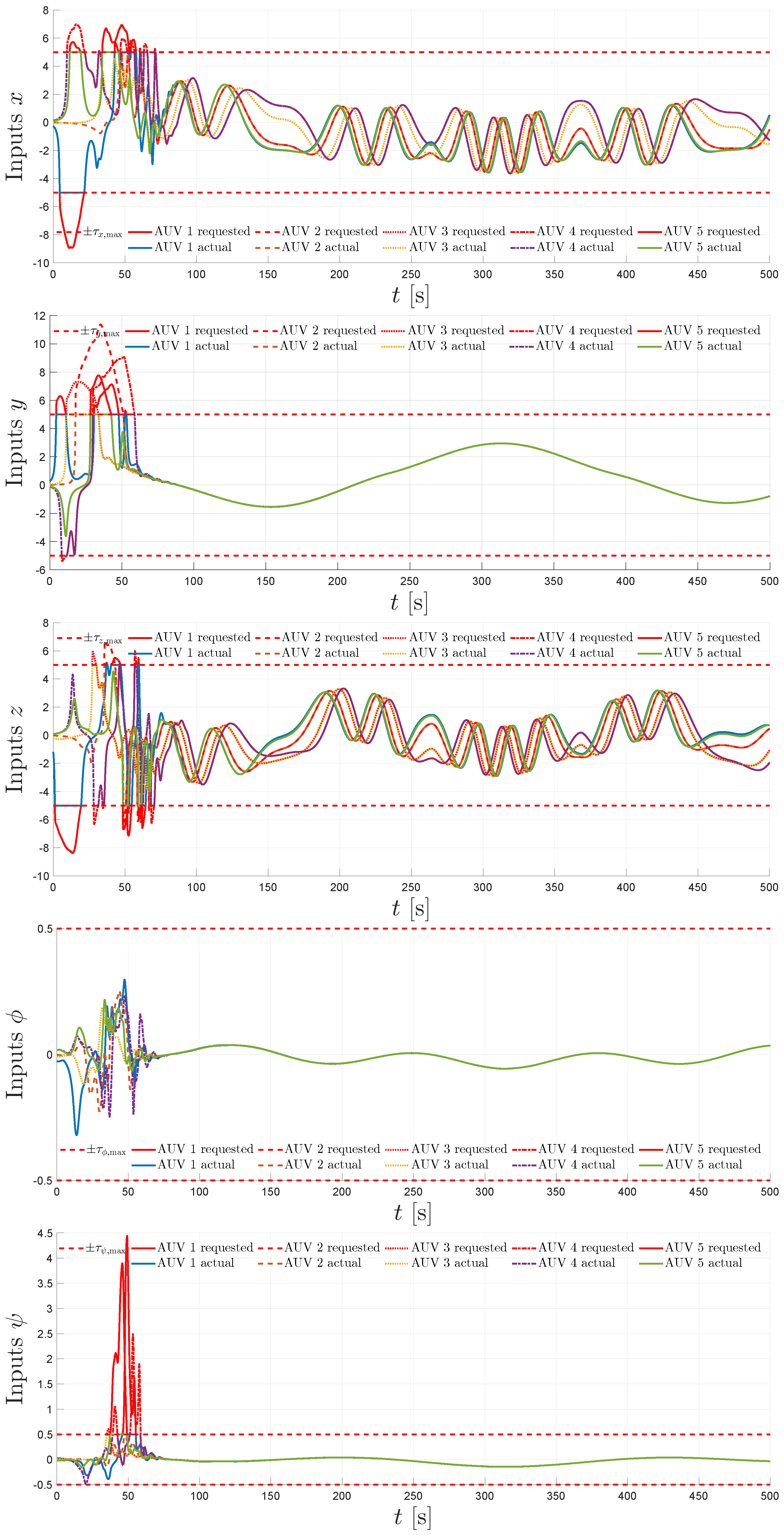

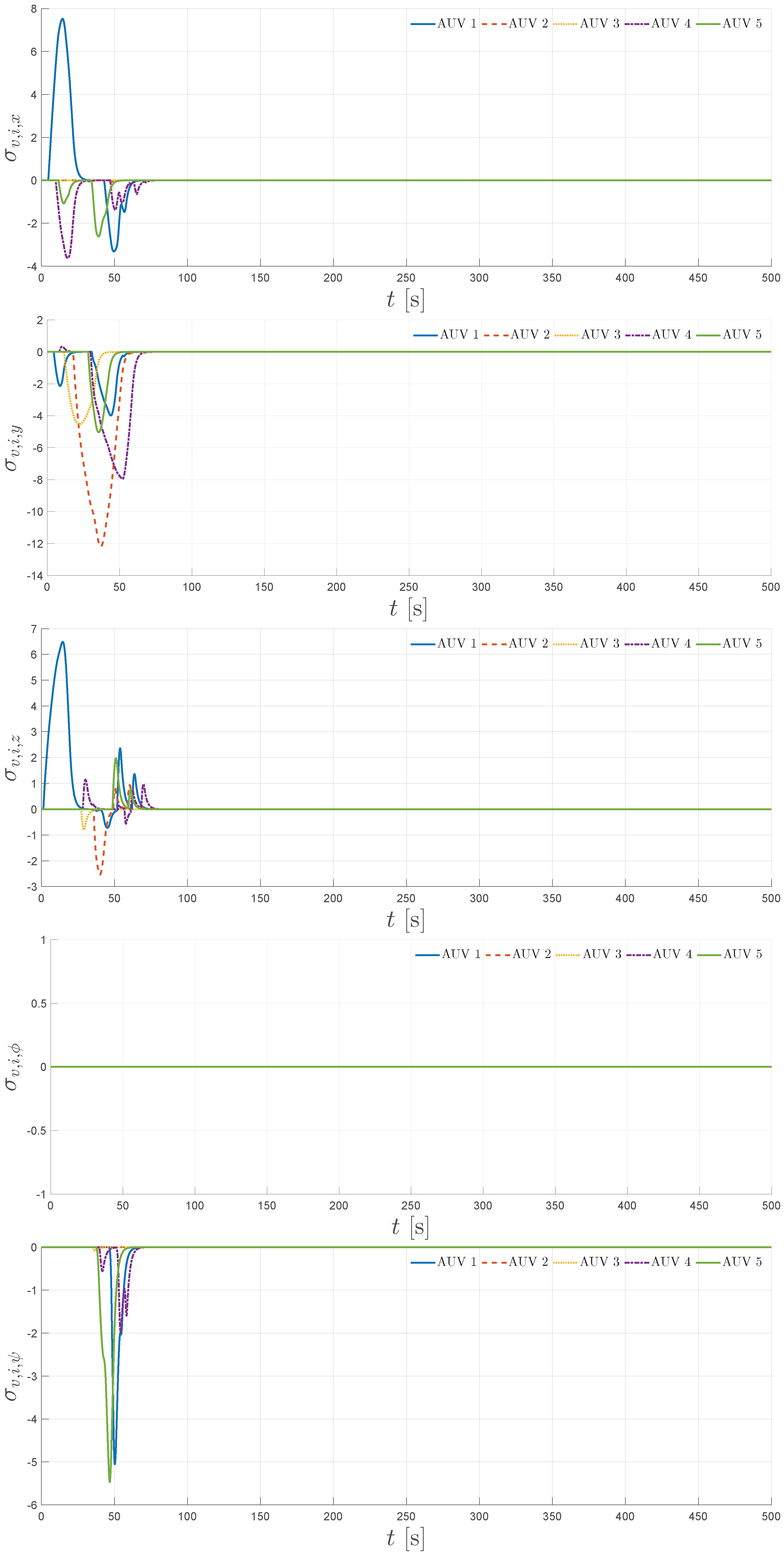

5. Simulation

5.1. Simulation Scenario

- The prescribed performance functions were selected for all agents as

- with performance parameters

- Control gains were chosen as

- The virtual velocity reference modification gains were selected as for all .

- The control inputs were constrained by saturation limits

5.2. Simulation Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gkesoulis, A.K.; Christopoulou, A.; Bechlioulis, C.P.; Karras, G.C. Distributed Prescribed Performance Formation Tracking for Unknown Euler–Lagrange Systems Under Input Saturation. Sensors 2025, 25, 6002. https://doi.org/10.3390/s25196002