Abstract

Automated recognition and analysis of parking signs can greatly enhance the safety and efficiency of both autonomous vehicles and drivers seeking navigational assistance. Our study focused on identifying parking constraints from the parking signs. It offers the following novel contributions: (1) A comparative performance analysis of AWS Rekognition and Azure Custom Vision (CV), two leading services for image recognition and analysis. (2) The first AI-based approach to recognising parking signs typical for Melbourne, Australia, and extracting parking constraint information from them. We utilised 1225 images of the parking signs to evaluate the AI capabilities for analysing these constraints. Both platforms were assessed based on several criteria, including their accuracy in recognising elements of parking signs, sub-signs, and the completeness of the signs. Our experiments demonstrated that both platforms performed effectively and are close to being ready for live application on parking sign analysis. AWS Rekognition demonstrated better results for recognition of parking sign elements and sub-signs (F1 scores of 0.991 and 1.000). It also performed better in the criterion “No text missed”, providing the result of 0.94. Azure CV performed better in the recognition of arrows (F1 score of 0.941). Both approaches demonstrated a similar level of performance for other criteria.

1. Introduction

Reading and analysing parking signs manually can be a challenging task, especially while driving. Simple signs with only one or two elements are relatively easy to interpret. However, many parking signs are quite complex. They often present different restrictions for various time periods and may also show different rules for the areas on the right and left of the sign pole. This complexity is particularly problematic in large cities, where parking signs can easily confuse drivers. One potential solution to this issue is to implement automated analysis of parking signs using AI-based image recognition and analysis technology. In this context, vehicles can be viewed as mobile sensors equipped to detect and relay information about their surroundings, including critical signage that informs driving decisions.

There are a number of prior works on traffic and parking sign recognition; see, for example, [1,2,3,4,5]. This demonstrates the importance of this research direction as well as a practical need to obtain a working solution, which might be a valuable addition for autonomous driving, as well as provide much-needed decision support for drivers preferring to drive manually. Some of the approaches focused on Traffic Sign Recognition (TSR), while other approaches target Parking Sign Recognition (PSR).

In both cases, the approaches aim to cover both the detection of a sign and the recognition of the information presented on the sign. TSR methods [6] typically address the signs that provide traffic-related information, warnings, or instructions to drivers. PSR methods [7] concentrate on the signs that present parking instructions and regulations. While both TSR and PSR require very high accuracy, the environmental and driving constraints for them are generally different. The timing constraints for TSR methods are stricter and require faster responses from the system. For instance, when a car is travelling at 100 km/h and approaches a speed limit sign indicating “60 km/h”, the information about the speed reduction must be processed rapidly to ensure the car can slow down in time. The parking signs should typically be detected at a slower speed when the car is slowing down to look for a potential parking spot. On the other hand, the parking signs contain many elements presenting complex parking constraints.

Our study focuses on AI-based extraction of the parking constraints, i.e., on the PSR methods. The PSR research area is less explored than TSR, but it is currently expanding to provide practical support for drivers. AWS Rekognition and Azure Custom Vision are two leading services for image recognition and analysis. In our previous studies, we analysed the applicability of computer vision technologies, including AWS Rekognition and Google Cloud Vision approaches, for semi-automated meter-reading of non-smart meters [8], where these approaches demonstrated solid results. Therefore, it makes sense to analyse the current capacity of such services for parking sign analysis. Parking signs vary significantly from one country to another. Moreover, in some countries, parking signs differ between states and territories, or even between local councils. An overview of the datasets widely used in the TSR field has been introduced in [9]. It covers datasets of German traffic signs [10], traffic signs from Belgium and the Netherlands [11], and traffic signs from China. However, to the best of our knowledge, there is no equivalent database for parking signs, especially for Australia. Therefore, our study is the first to use AI-based approaches for the recognition of parking signs typical in Melbourne, Australia.

Contributions: In our study, we present a comparative analysis of the performance of AWS Rekognition and Azure Custom Vision in identifying parking constraints from parking signs. Our aim was to answer the following research question: (RQ) How can parking signs be analysed using AWS Rekognition and Azure Custom Vision?

We utilised 1225 images of the parking signs (Melbourne, Australia) to evaluate the AI capabilities for analysing these constraints. Both platforms were evaluated based on their accuracy in recognising parking sign elements and sub-signs, as well as the completeness of the signs. We also assessed their ability to accurately recognise text elements and maintain the correct order of the text. Additionally, we examined how efficiently they handled time-related text, such as “AM” and “PM” notation. AWS Rekognition and Azure Computer Vision showed promising results for parking sign analysis. Each approach had its strengths and weaknesses. For instance, AWS Rekognition performed significantly better in the recognition of parking sign elements and sub-signs. However, it required an additional algorithmic solution to enhance performance when recognising arrows.

The rest of the paper is organised as follows. Section 2 discusses the related work, both on TSR and PSR methods. Section 3 introduces methodological aspects of our work. Section 4 and Section 5 present the results of our experiments and the corresponding discussion. In Section 6, we analyse the limitations and threats to validity of our study. Finally, Section 7 summarises the paper.

2. Related Work

2.1. Traffic Sign Recognition

In contrast to the PSR research area, many TSR approaches have been introduced over the last decade. For example, TSR algorithms for intelligent vehicles were proposed in [1,2,3,4,5]. A system for the recognition of traffic signs and traffic lights has been presented in [12]. Another system presented in [13] was trained and evaluated based on the Swedish and German traffic sign datasets [14,15] with the focus on detection of the signs. Some TSR approaches were limited to a particular type of traffic sign. For example, the method proposed in [16] focused on the prohibitory and danger signs, i.e., the signs with a red rim. The method presented in [17] focused on the recognition of warning signs such as “pedestrian crossing” and “children”. A system to detect and recognise text from traffic road signs in various weather conditions was introduced in [18]. An approach for TSR with deep learning has been presented in [19]. The authors aimed to capture and classify traffic signs, focusing on the detection of faded signs and signs obstructed by vegetation.

As this area is rapidly growing, there are also a number of literature reviews (secondary studies) aiming to systematise the research conducted in this field, see Table 1 for an overview. For example, a Systematic Literature Review (SLR) on vision-based autonomous vehicle systems was presented in [20]. Another SLR [6] aimed to address computational methods for TSR in autonomous driving.

Table 1.

Literature reviews on TSR-related studies.

Table 2 presents an overview of primary TSR studies, which present either conceptual contributions or corresponding datasets.

Table 2.

Related primary studies on TSR methods.

It is also important to mention that the acceptance of autonomous vehicles might vary in different countries, influenced by cultural aspects [51]. Therefore, the above-mentioned TSR approaches might also be applied in driver-support systems.

2.2. Parking Sign Recognition

An approach for parking sign recognition has been presented as an early prototype in [52]. This approach demonstrated a high accuracy of 96–98%, but the approach was limited to a few particular types of signs, focusing on “no parking” symbol detection and arrow recognition, while the signs with arrows were cropped to allow easier recognition. In our work, we aim to go further and to analyse the AI capacity to identify corresponding elements on the signs that are not cropped and, moreover, might be partially covered by trees or other visual obstacles.

A study focusing on parking sign detection has been presented in [53]. The authors applied a deep learning approach to detect and classify parking signs, without aiming to extract the exact parking constraints from the signs. In contrast to that work, we aim to identify parking constraints from the signs to make sure that a vehicle can be parked in the corresponding slot for the required number of minutes/hours.

An impact of the image resolution on the parking sign recognition and interpretation was investigated in [54]. The study was based on a parking sign dataset collected in Stockholm (Sweden). This work aimed to identify resolution thresholds that maintain acceptable accuracy. Our approach does not cover this aspect.

A study presented in [55] aimed to apply computer vision approaches for automated recognition and localisation of parking signs. The objective of this study was to explore the feasibility of creating a digital map of parking signs. While the research indicated that this direction shows promise, no practical solution has been implemented yet. Given the rapid advancements in AI capabilities, it may be worthwhile to pursue this area further. However, our approach goes in a slightly different direction: we did not aim to create a map of parking signs. Instead, we explore practical solutions for automated recognition of parking signs. For this reason, we investigated the current capacity of AWS Rekognition and Azure Custom Vision for real-time analysis of the parking signs.

3. Methods

In this section, we present the background terminology, the methodological aspects of the experiments we conducted, and the methods used for the analysis of the obtained results. We compared the performance of AWS and Azure models on the identification of parking constraints, focusing on the following criteria:

- C1: Recognition of parking sign elements (such as clearway, bus zone, no parking, etc.);

- C2: Recognition of arrows (denoting constraints applicable to the right of, left of, or both sides of the parking sign pole) and their directions;

- C3: Recognition of sub-signs that are typically used to specify different constraints applicable for different time periods;

- C4: Recognition of (in)completeness of parking signs, i.e., checking whether a parking sign is complete (whether the whole sign is presented on the image);

- C5: Correct recognition of the text presented on the sign.

As the core performance metrics, we apply precision, recall, and F1 score.

Precision measures the accuracy of positive predictions, i.e., the proportion of correctly identified positives out of all predicted positives:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where $TP$ denotes the number of correctly predicted labels (“True Positives”), and $FP$ denotes the number of incorrect predictions (“False Positives”).

Recall measures the model’s ability to identify all actual positives. It shows the proportion of correctly detected positives out of all actual positives:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where $FN$ denotes the number of actual labels the model failed to detect (“False Negatives”).

The F1 score aims to cover both precision and recall, balancing them as their harmonic mean, $F1 = 2 \cdot \mathrm{Precision} \cdot \mathrm{Recall} / (\mathrm{Precision} + \mathrm{Recall})$. When we need to auto-identify the content of the parking sign, we need both high precision and high recall. Therefore, the F1 score is the most important metric for our experiments.
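To make the three metrics concrete, the following minimal sketch computes them from raw counts. The function names and the example counts are illustrative assumptions and are not taken from our experiments.

```python
def precision(tp: int, fp: int) -> float:
    """Proportion of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Proportion of actual positives that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts, for illustration only.
tp, fp, fn = 45, 3, 5
p = precision(tp, fp)  # 45 / 48
r = recall(tp, fn)     # 45 / 50
print(round(f1_score(p, r), 3))  # 0.918
```

Because the F1 score is a harmonic mean, a low recall pulls it down sharply even when precision is perfect: a precision of 1.000 combined with a recall of 0.354 gives an F1 score of approximately 0.523.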

In the rest of the section, we present the settings of the experiments we conducted to compare the performance of AWS and Azure models.

3.1. Criterion C1: Symbol Recognition—Recognition of Relevant Parking Sign Elements

The Symbol Detection model is an AI model within Azure Custom Vision. It is trained to identify symbols on parking signs, see Figure 1. Since we used bounding boxes in the AWS Rekognition Custom Label project, we selected “Object Detection” as the Project Type in this instance. A “bounding box” refers to a rectangular box used in computer vision to define the area of interest in an image. The bounding box is defined by the following parameters:

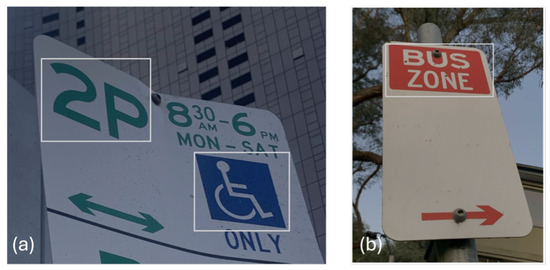

Figure 1.

Examples of parking sign symbols highlighted in white: (a) a “Park for 2 Hours” and “Disabled” symbol, and (b) a “Bus Zone” symbol.

- Coordinates: The top-left corner (often represented as x, y coordinates) and the width and height of the box.

- Width and Height: The dimensions of the rectangle that encloses the object.

For example, in the image presented in Figure 1b, the bounding box (the white rectangle) highlights the “bus zone” area.

This choice enables us to detect specific objects within an image, mirroring the functionality of bounding boxes in the AWS Rekognition project. We opted for the General domain, as it offers the best balance of cost and suitability for our project’s requirements. Table 3 presents a summary of testing data. The slight difference in the size of the training datasets between AWS and Azure arose from Azure’s requirement of “at least 15 training images for each label”. To meet this requirement, we slightly modified some of the existing training images for labels with fewer than 15 samples. Specifically, we cropped these images slightly (by 0.1 cm) to effectively increase the number of training samples. Consequently, the training dataset for Azure Custom Vision contained slightly more images than the AWS Rekognition Custom Labels dataset. Since the augmentation involved reusing existing images with minor modifications, it should not impact the model’s results.

Table 3.

Symbol detection: dataset sizes.

To create the dataset for this experiment, we followed two methods: (1) The authors captured photos of the parking signs using smartphone cameras in high-efficiency mode and a standard resolution of 24 MP. In the case of a complex sign, we cropped the image to include only one sub-section of a parking sign. (2) We also utilised digital parking signs provided by Manningham City Council [56]. As the digital images obtained from [56] are generally clearer than images obtained by photos (no obstruction by vegetation, perfect light conditions and frontal view, etc.), we aimed to have no more than 30% of the images of this kind in our training and testing data set for this experiment.

To ensure consistency between the AWS and Azure models, the same threshold level for each label used in AWS was applied when testing the Azure models. A threshold refers to the minimum confidence score required for the model to assign a specific label to a prediction. For example, if the threshold is set to 80%, the model must be at least 80% confident in its prediction for the label to be accepted. Table 4 summarises the information on the labels, the corresponding number of images used for training and testing, and the threshold used for testing each label. In AWS models, the “assumed threshold” for each label is automatically calculated by AWS Rekognition Custom Labels. This threshold refers to the confidence percentage for each predicted label. The assumed threshold cannot be manually set; it is calculated based on the best F1 score achieved on the test dataset during model training.

Table 4.

Image counts and threshold values per label. (*) denotes that the augmentation technique was applied to these labels in Azure Custom Vision model to meet Azure’s requirement of having “at least 15 images” per label.

To automate the process of uploading images, drawing bounding boxes around parking symbols, and testing over 1000 images, three Python 3.9 scripts were used:

- aws_to_azure_bbox_converter.py: This script was used to convert a training manifest file from AWS (training_aws_annotations.json) into a format compatible with Azure (training_azure_annotations.json). The AWS manifest, exported from an AWS model, contains detailed information about the training images, including image names, paths to AWS S3 storage, bounding box coordinates, and labels for each bounding box.

- azure_custom_vision_uploader.py: This script utilised the Azure-compatible training manifest to interact with the Azure Custom Vision API. It automated the process of uploading images and adding bounding boxes with corresponding labels to each image.

- test-bulk.py: This script was used to run tests on the images and automatically draw bounding boxes around the parking symbols. It simplified the process of quickly reviewing the results and evaluating the performance of the model.
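The conversion step performed by the first script can be sketched as follows. This is not the original script: it is a minimal illustration that assumes the AWS manifest follows the SageMaker Ground Truth style object-detection layout (one JSON object per line, pixel coordinates, a class map in a metadata attribute) and that the target format stores bounding boxes normalised to the range 0 to 1. The attribute name `bounding-box`, the function name, and the sample record are assumptions.

```python
import json


def aws_line_to_azure_regions(manifest_line: str, attr: str = "bounding-box") -> list[dict]:
    """Convert one manifest line (pixel boxes) into normalised regions."""
    record = json.loads(manifest_line)
    size = record[attr]["image_size"][0]                 # image width/height in pixels
    class_map = record[f"{attr}-metadata"]["class-map"]  # class id -> label name
    regions = []
    for ann in record[attr]["annotations"]:
        regions.append({
            "tag": class_map[str(ann["class_id"])],
            "left": ann["left"] / size["width"],
            "top": ann["top"] / size["height"],
            "width": ann["width"] / size["width"],
            "height": ann["height"] / size["height"],
        })
    return regions


# Hypothetical manifest line, for illustration only.
sample = json.dumps({
    "source-ref": "s3://example-bucket/sign_001.jpg",
    "bounding-box": {
        "image_size": [{"width": 4000, "height": 3000, "depth": 3}],
        "annotations": [
            {"class_id": 0, "left": 1000, "top": 600, "width": 2000, "height": 1500}
        ],
    },
    "bounding-box-metadata": {"class-map": {"0": "bus zone"}},
})
print(aws_line_to_azure_regions(sample))
```

Normalising by the image size keeps the annotations valid if an image is later resized, which is one reason to perform the conversion once, offline, rather than during upload.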

To train the model, we utilised the “Advanced Training” option within the constraints of the free tier. We limited the training duration to a maximum of 1 h to optimise model accuracy, while ensuring that we stayed within the usage limits of the free tier.

3.2. Criterion C2: Recognition of Arrows

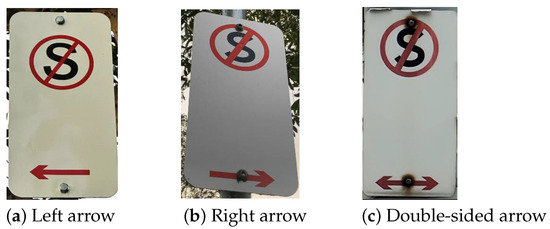

This test aims to compare the performance of image object classification models in AWS and Azure to detect the direction of arrows in parking signs. As shown in Figure 2 below, parking signs in Melbourne can contain right, left and double-sided arrows. To analyse the model’s capacity to detect arrows correctly, the following approaches can be used:

- (1) A model can be trained in AWS or Azure by selecting the arrow with a bounding box and labelling it as “left”, “right”, or “both-sides”; see Figure 3a. The trained model can then be called using new images, and the model will detect the desired object (the arrow) and predict a label (left, right or both-sides).

- (2) Another approach would be to use separate labelling, see Figure 3b, where two bounding boxes are used: one around the entire arrow, and another around the arrow head. Then, based on the relative positions of the bounding boxes, the arrow direction can be calculated using an algorithmic solution.
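The algorithmic solution mentioned in the second approach could look like the sketch below. It is one possible rule, not the method evaluated in this paper: it assumes that each detected arrow comes with one or more arrow-head boxes and decides the direction by comparing the horizontal centre of each head with the centre of the whole arrow. The `Box` type and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box: top-left corner plus width and height."""
    left: float
    top: float
    width: float
    height: float

    @property
    def center_x(self) -> float:
        return self.left + self.width / 2


def arrow_direction(arrow: Box, heads: list[Box]) -> str:
    """Infer the direction from where the head boxes sit inside the arrow box."""
    has_left = any(h.center_x < arrow.center_x for h in heads)
    has_right = any(h.center_x > arrow.center_x for h in heads)
    if has_left and has_right:
        return "both-sides"
    if has_left:
        return "left"
    if has_right:
        return "right"
    return "unknown"  # no head detected, or head exactly centred


# Hypothetical normalised coordinates, for illustration only.
arrow = Box(left=0.10, top=0.80, width=0.60, height=0.10)
left_head = Box(left=0.10, top=0.78, width=0.10, height=0.14)
right_head = Box(left=0.60, top=0.78, width=0.10, height=0.14)
print(arrow_direction(arrow, [left_head]))
print(arrow_direction(arrow, [left_head, right_head]))
```

Such a rule only needs the detector to distinguish two object classes (arrow and arrow head), leaving the left/right decision to geometry.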

Figure 2.

The range of arrow types found on parking signs in Melbourne.

Figure 3.

Different approaches used to determine the arrow direction.

In our experiments to compare the AWS vs. Azure performance, we apply the first approach. This allows us to directly compare the ability of the model to detect arrows, rather than comparing the workaround method that relies on algorithmic calculations. As the dataset, we applied the same 64 training and 48 testing images that were used for the experiments presented in Section 3.1. The dataset covered all three arrow types—left, right and both sides, see Table 5. To create the dataset, the photos of the parking signs were taken by the authors using smartphone cameras in high-efficiency mode and a standard resolution of 24 MP. To analyse cases with a single arrow on a sign, the photos were cropped correspondingly, as can be seen in an example in Figure 3.

Table 5.

Arrow recognition: sizes of datasets used for training and testing.

All resources utilised in this project are within the free tier. Since we used bounding boxes in the AWS Rekognition Custom Label experiments, we selected “Object Detection” as the Project Type in this instance. This choice enables us to detect specific objects within an image, mirroring the functionality of bounding boxes in the AWS Rekognition project. We opted for the General domain, as it offers the best balance of cost and suitability for our project’s requirements. As explained in Section 6, AWS allows us to provide a testing dataset, which it uses to calculate performance scores based on automatically calculated thresholds, which were 0.633 for both-side arrows, 0.521 for left arrows, and 0.540 for right arrows. To compare the performance with Azure, we have used the same testing dataset, manually called the Azure model API setting the threshold ourselves, checking the predicted labels and manually calculating the precision, recall and F1 scores. In order to have similar settings in Azure, we ran the Azure model using the AWS-calculated thresholds for each label.
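The manual evaluation procedure for the Azure model can be illustrated with a short sketch: predictions are filtered with the AWS-calculated per-label thresholds, and the accepted labels are compared with the ground truth to count true positives, false positives, and false negatives. The threshold values are those reported above; the shape of the prediction records and the sample data are assumptions made for illustration.

```python
# AWS-calculated thresholds per label, as reported in the text.
THRESHOLDS = {"both-sides": 0.633, "left": 0.521, "right": 0.540}


def accepted_labels(predictions: list[dict], thresholds: dict = THRESHOLDS) -> set:
    """Keep only labels whose confidence reaches the per-label threshold."""
    return {
        p["label"] for p in predictions
        if p["confidence"] >= thresholds[p["label"]]
    }


def count_outcomes(samples: list[tuple], thresholds: dict = THRESHOLDS) -> tuple:
    """Return (TP, FP, FN) over (ground-truth labels, predictions) pairs."""
    tp = fp = fn = 0
    for truth, predictions in samples:
        predicted = accepted_labels(predictions, thresholds)
        tp += len(predicted & truth)
        fp += len(predicted - truth)
        fn += len(truth - predicted)
    return tp, fp, fn


# Hypothetical predictions, for illustration only.
samples = [
    ({"left"}, [{"label": "left", "confidence": 0.70}]),
    ({"right"}, [{"label": "right", "confidence": 0.50}]),  # below 0.540
    ({"both-sides"}, [{"label": "left", "confidence": 0.60},
                      {"label": "both-sides", "confidence": 0.90}]),
]
print(count_outcomes(samples))  # (2, 1, 1)
```

The resulting counts can then be turned into precision, recall, and F1 scores per label or overall.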

3.3. Criterion C3: Recognition of Sub-Signs

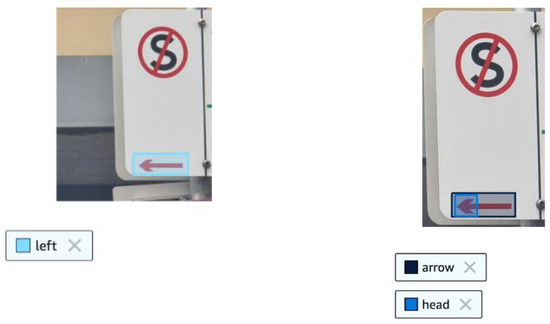

We aimed to detect the presence of sub-signs within a parking sign. We use the label “sign” to denote the presence of a sub-sign. A simple sign would consist of a single sub-sign, as presented in Figure 4a. A complex parking sign would have multiple sub-signs. For example, the sign presented in Figure 4b has three sub-signs.

Figure 4.

Examples of a sub-sign detection: (a) a simple parking sign (consisting of a single “sub-sign”), (b) a complex parking sign with multiple “sub-signs”.

In our experiments, we used a dataset of 178 images (145 images were used for training and 33 images were used for testing). To create the dataset for this experiment, the authors captured photos of the parking signs using smartphone cameras in high-efficiency mode and a standard resolution of 24 MP. Since we used bounding boxes in the AWS Rekognition Custom Label project, we selected “Object Detection” as the Project Type in this instance. This choice enables us to detect specific objects within an image, mirroring the functionality of bounding boxes in the AWS Rekognition project.

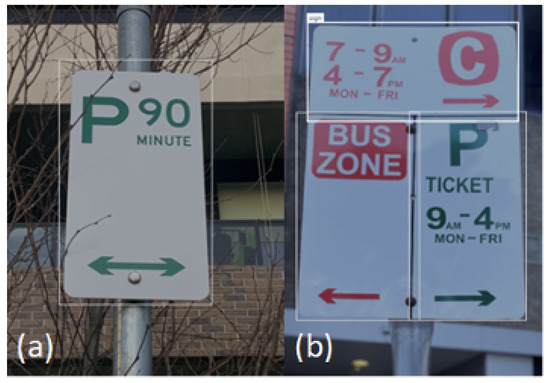

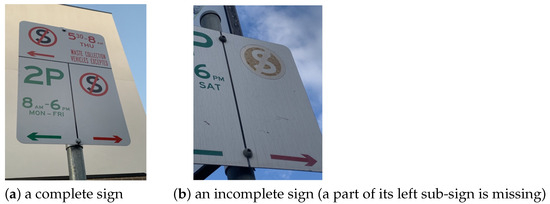

3.4. Criterion C4: Recognition of (In)Completeness of Parking Signs

Another important parameter to check is whether a model can correctly identify the completeness of the sign, i.e., whether some parts of the sign are missing on the photo; see Figure 5. The Azure model uses the image classification approach, which is different from the previous models where a bounding box was used. Image classification applies one or more labels to the whole image instead of parts of an image, as per object detection. This is equivalent to image-level labels in AWS Rekognition Custom Labels. Thus, we applied two labels here, “complete” and “incomplete”. An image is considered “complete” when it contains the entire parking sign (all four edges and corners are visible). Correspondingly, an image is considered “incomplete” when part of the parking sign is cut out of the image (not all four edges and corners are visible in the image).

Figure 5.

Examples of complete and incomplete photos of the parking signs.

As the dataset, we used 441 images (352 images were used for training and 89 images were used for testing). Of the training images, 173 presented incomplete signs and 179 presented complete signs, see Table 6. To create the dataset for this experiment, the photos of the parking signs were taken by the authors using smartphone cameras in high-efficiency mode and a standard resolution of 24 MP. The images of incomplete signs were created by two methods: (1) an original photo contained only a part of the sign, and (2) the original photo was cropped to include only a part of a parking sign.

Table 6.

Completeness identification: sizes of datasets used for training and testing.

3.5. Criterion C5: Text Recognition

Another important parameter to analyse is the capability of AWS Rekognition and Azure Computer Vision to accurately capture the text presented on the parking signs. Both platforms offer a managed service to extract text from images. In our experiments, we did not train any models for this criterion. The dataset used for this experiment included 51 images. To create the dataset, the photos of the parking signs were taken by the authors using smartphone cameras in high-efficiency mode and a standard resolution of 24 MP. We cropped each image to include only one sub-section of a parking sign. We focused on the following sub-criteria: extra text detection, missing text, and order of the text. In what follows, we discuss them in more detail.

(C5.1) No extra text detected. This criterion is considered passed if the system detects only the relevant text in the parking sign without including any irrelevant text. If irrelevant text or incorrect time markers are detected, they could be passed on to the LLM or further analysis. This can alter or distort the time information on the parking sign, leading to incorrect interpretations and potentially to a miscalculated parking verdict.

(C5.2) Correct order of the text. This criterion is considered passed for parking signs if the time frames, including AM/PM markers, are detected exactly as they appear on the parking sign. If the text order is incorrect (e.g., detecting “7 PM to 4 AM” instead of “7 AM to 4 PM”), it can completely change the meaning of the parking sign. This incorrect interpretation will affect the parking verdict calculation and result in a wrong parking decision.

(C5.3) No text missed. This criterion is considered passed if no essential text is missing from the sign, such as time markers or day ranges. If important elements like AM/PM, specific days (e.g., Mon-Sat), or time frames are missing, it can lead to incomplete or incorrect parking verdicts. The lack of crucial markers results in losing essential information needed to accurately calculate the parking restrictions.
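The three sub-criteria can be expressed as simple checks over token lists. The sketch below is an illustrative formalisation, not the procedure used in our experiments, where the outputs were reviewed against the signs. It assumes that the expected and detected texts are already split into tokens, and the function and key names are hypothetical.

```python
from collections import Counter


def _keep(tokens: list[str], budget: Counter) -> list[str]:
    """Keep tokens, in order, up to the multiplicity allowed by budget."""
    remaining = Counter(budget)
    kept = []
    for token in tokens:
        if remaining[token] > 0:
            kept.append(token)
            remaining[token] -= 1
    return kept


def check_text(expected: list[str], detected: list[str]) -> dict[str, bool]:
    """Evaluate C5.1 (no extra), C5.2 (order), and C5.3 (nothing missed)."""
    exp, det = Counter(expected), Counter(detected)
    common = exp & det  # tokens present in both, with multiplicity
    return {
        "no_extra_text": not (det - exp),
        "correct_order": _keep(expected, common) == _keep(detected, common),
        "no_text_missed": not (exp - det),
    }


# Swapped markers: everything is detected, but in the wrong order.
print(check_text(["7", "AM", "10", "PM"], ["7", "AM", "PM", "10"]))
# Missing markers: the order of the remaining tokens is still correct.
print(check_text(["7", "AM", "4", "PM"], ["7", "4"]))
```

Comparing only the tokens shared by both lists keeps the order check independent of the other two criteria, so a single OCR output can fail one criterion without automatically failing the others.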

Experiments with AWS Rekognition: The OCR process was driven by the DetectText API, which scans images and extracts any text it finds. The parking sign images were stored in an S3 bucket, and an AWS Lambda function was set up to automatically handle the text extraction. Every time a new image was uploaded to the S3 bucket, the Lambda function would automatically trigger the DetectText API, which would then extract the text from the image. This setup allows for a fully automated OCR process, meaning no manual effort is needed once it is configured. The DetectText API provides a structured response with the extracted text, which can then be used for further analysis. To compare the results, we used this method to validate the setup, ensuring that the OCR process was extracting text accurately.
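A Lambda function of this kind could be sketched as follows. This is a minimal illustration rather than the deployed function: the handler and helper names are assumptions, and error handling and result storage are omitted. The `boto3` import is placed inside the handler so that the parsing helper can be exercised without AWS credentials.

```python
from urllib.parse import unquote_plus


def extract_lines(response: dict, min_confidence: float = 0.0) -> list[str]:
    """Return the LINE-level text items from a DetectText response."""
    return [
        d["DetectedText"]
        for d in response.get("TextDetections", [])
        if d.get("Type") == "LINE" and d.get("Confidence", 0.0) >= min_confidence
    ]


def lambda_handler(event, context):
    """Triggered by an S3 upload; runs DetectText on each new image."""
    import boto3  # available in the AWS Lambda Python runtime

    rekognition = boto3.client("rekognition")
    results = {}
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        response = rekognition.detect_text(
            Image={"S3Object": {"Bucket": bucket, "Name": key}}
        )
        results[key] = extract_lines(response)
    return results


# Hypothetical response fragment, for illustration only.
sample_response = {
    "TextDetections": [
        {"DetectedText": "7 AM - 4 PM", "Type": "LINE", "Confidence": 98.1},
        {"DetectedText": "7", "Type": "WORD", "Confidence": 99.0},
        {"DetectedText": "MON - FRI", "Type": "LINE", "Confidence": 97.4},
    ]
}
print(extract_lines(sample_response))
```

Keeping only line-level detections avoids reporting each word twice, since the response lists both lines and the individual words they contain.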

Experiments with Azure: Vision Studio, an interactive tool within Azure, was used for testing the OCR functionality. This tool provides a user-friendly interface for uploading images and processing them to extract text. Alternatively, to perform OCR using Azure’s Computer Vision API, a cURL command can be used to send an image for text extraction. After sending the request, the API returns a JSON response containing the extracted text and bounding box coordinates around the text. To make sure the results were consistent and reliable, we cross-validated the OCR outputs from both Vision Studio and the API method (cURL). Since both methods use the same underlying API, we expected the results to align. This helped confirm that the OCR process was working correctly and that the extracted text was accurate, regardless of the method used.

4. Results

In this section, we present the results of our experiments on the detection of parking symbols, arrows, and sub-signs, as well as on the completeness checks and text recognition.

4.1. Criterion C1: Symbol Recognition

The Azure Custom Vision model achieved an F1-score of 0.848, while the AWS Rekognition Custom Labels model showed a significantly higher F1-score of 0.991; see Table 7. Based on these results, we can conclude that, under the same training technique, training images, and testing threshold, the AWS Rekognition model outperforms Azure Custom Vision in detecting parking symbols.

Table 7.

Comparison of the performance of the AWS and Azure models used to detect parking symbols.

4.2. Criterion C2: Recognition of Arrows

As shown in Table 8, the Azure model significantly outperforms the AWS-equivalent model (F1 score of 0.9 compared to 0.4). Table 9 presents a more detailed comparison, where an arrow type is also taken into account. This allows us to conclude that the core difference in the performance of the models is in the ability to detect left and right arrows. Both AWS and Azure models have good performance in detecting both-sided arrows, with very similar values of F1 scores (0.944 and 0.950, respectively). However, the performance for the left and right arrows in AWS is much lower.

Table 8.

Comparison of the performance of the AWS and Azure models used to detect arrow direction. As a threshold, the AWS-calculated thresholds have been applied.

Table 9.

Detailed comparison of the performance of the AWS and Azure models used to detect arrow direction, broken down by custom label.

4.3. Criterion C3: Recognition of Sub-Signs

Table 10 presents a summary of the performance comparison for the sub-sign detection. Overall, the AWS model identified sub-signs with a significantly higher accuracy. It has perfect precision, recall and thus an F1-Score of 1.000 with a very high confidence level of 94%. Thus, each sub-sign in the testing images was correctly identified with no false positives (where part of the image that is not a sub-sign is flagged with a “sign” label) or false negatives (where a valid sub-sign was not flagged with a “sign” label). For the same confidence level of 94%, Azure’s model is robust against false positives with 100% precision but performs poorly at preventing false negatives with just 35.4% recall. This lowers the F1-Score to 52.3%.

Table 10.

Comparison of the performance of the AWS and Azure models used to identify sub-signs with the confidence level threshold of 0.94.

4.4. Criterion C4: Recognition of (In)Completeness of Parking Sign Images

Both models performed at a similar level of accuracy. The AWS model achieved an F1 score of 0.933, while the Azure model achieved a higher F1 score of 0.976, see Table 11. AWS incorrectly labelled six parking signs as “incomplete”, whereas Azure incorrectly labelled four parking signs as “incomplete”. Neither the AWS nor the Azure model labelled any incomplete parking sign as “complete”.

Table 11.

Comparison of the performance of the AWS and Azure models used to detect (in)completeness of parking sign images.

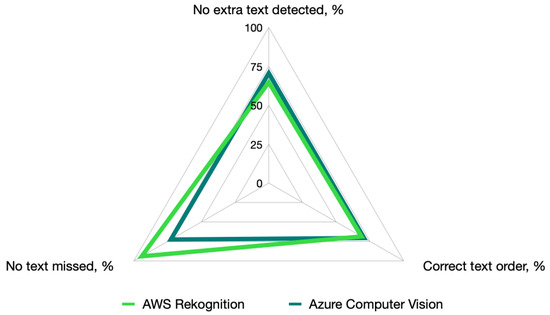

4.5. Criterion C5: Text Recognition

Figure 6 presents a summary of the performance comparison in the following three dimensions of text recognition.

Figure 6.

Comparison of the performance of the AWS and Azure models in text recognition on the parking signs.

Criterion C5.1: No extra text detected. AWS Rekognition detected extra text on 18 parking sign images, which included redundant time markers (such as “PM”) and irrelevant symbols. For example, AWS incorrectly identified an extra “S” in “BUS ZONE” and also detected symbols like the no-standing symbol as the letter “S”. Azure Computer Vision detected extra text on 15 parking sign images, particularly redundant “AM” or “PM” markers. However, Azure performed better than AWS in avoiding irrelevant symbols, making it slightly more accurate in this area.

Criterion C5.2: Correct order of the text. AWS Rekognition model: Incorrect text order was observed in 16 parking sign images. For example, in time-related text like “7 AM–10 PM”, AWS sometimes swapped the markers, resulting in “7 AM PM 10”. Azure Computer Vision: Incorrect text order was observed in 15 images. Similar to AWS, the time markers (“AM” and “PM”) were sometimes out of place.

Criterion C5.3: No text missing. AWS Rekognition missed text in three images of parking signs, specifically time markers such as “PM”. For example, for the time “10 PM”, AWS detected only “10” and failed to detect the “PM”. Azure Computer Vision missed text in 14 images of parking signs, also typically the time markers. For example, for the time range “7 AM–4 PM”, Azure detected only “7 to 4” without “AM” or “PM”, losing key contextual information.
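The three text criteria can be illustrated with a small token-level check. This is a minimal sketch under our own assumptions, not the evaluation procedure used in the experiments: the function `check_text` and the idea of comparing whitespace-separated tokens against a hand-written expected transcription are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def check_text(expected: List[str], detected: List[str]) -> Dict[str, bool]:
    """Compare detected tokens with the expected ones for C5.1, C5.2 and C5.3."""
    exp, det = Counter(expected), Counter(detected)
    extra = det - exp      # tokens detected more often than expected (C5.1)
    missing = exp - det    # tokens expected but not detected (C5.3)
    common = exp & det     # tokens present on both sides

    def keep_common(tokens: List[str]) -> List[str]:
        # Keep only the shared tokens, preserving their original order.
        budget = Counter(common)
        kept = []
        for token in tokens:
            if budget[token] > 0:
                budget[token] -= 1
                kept.append(token)
        return kept

    return {
        "no_extra_text": not extra,
        "correct_order": keep_common(expected) == keep_common(detected),
        "no_text_missing": not missing,
    }


# Examples modelled on the error types described above.
swapped = check_text(["7", "AM", "10", "PM"], ["7", "AM", "PM", "10"])
dropped = check_text(["10", "PM"], ["10"])
extra_s = check_text(["BUS", "ZONE"], ["BUS", "S", "ZONE"])
```

In this sketch, the swapped markers fail only the order check, the dropped “PM” fails only the missing-text check, and the spurious “S” fails only the extra-text check.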

5. Discussion

Our work is the first to employ AI-based approaches for (1) recognising parking signs that are typical for Melbourne, Australia, and (2) extracting parking constraint information from them. Our analysis was therefore based on a newly proposed dataset that has not been used in any prior studies. The dataset includes photos of parking signs taken in different weather and light conditions as well as from different distances; see Figure 7. We aimed to cover not only different types of parking constraints but also signs of different complexity. Using a new dataset also means that we cannot directly compare the results of our study with those of related works. Generally, there are many approaches to traffic sign detection and recognition, but the number of approaches focusing on parking sign recognition (PSR) is very limited. One reason for this might be the significant variation in parking regulations and parking sign formats across countries and cities. Table 12 presents a summary comparison of the proposed approach with the related PSR studies.

Figure 7.

Examples of photos of parking signs made in different conditions.

Table 12.

Comparison of the proposed approach to the related PSR studies.

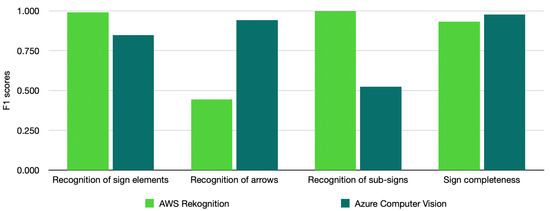

Our study is also the first to compare the performance of AWS Rekognition and Azure Computer Vision models in the context of parking sign recognition. To summarise the results of our experiments, Figure 8 presents an overview of the performance comparison of the AWS and Azure models based on their F1 scores.

Figure 8.

Overview of the performance comparison of the AWS Rekognition and Azure Computer Vision models.

AWS Rekognition significantly outperforms Azure Computer Vision in the following criteria, which makes it a more promising option for further analysis and enhancements:

- C1: Recognition of parking sign elements;
- C3: Recognition of sub-signs;
- C5.3: Correct recognition of the text. No text missed.

Azure Computer Vision performs significantly better in criterion C2 (Recognition of arrows) if an approach with direct labelling is used. However, this advantage can be compensated for by labelling arrows and arrowheads separately and then applying an algorithmic solution to determine the type of arrow (left, right, or double-sided).
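One way such an algorithmic step could look is sketched below. It is a hypothetical illustration rather than the algorithm used in the experiments: we assume that the model returns one bounding box per arrow and one per arrowhead, each as a `(left, top, width, height)` tuple in normalised image coordinates, and the function name `arrow_type` is our own.

```python
from typing import List, Tuple

# Assumed box format: (left, top, width, height), normalised to [0, 1].
Box = Tuple[float, float, float, float]


def arrow_type(arrow: Box, heads: List[Box]) -> str:
    """Classify an arrow as 'left', 'right' or 'double' from its arrowheads."""
    left, top, width, height = arrow
    mid_x = left + width / 2
    has_left = has_right = False
    for h_left, h_top, h_width, h_height in heads:
        cx = h_left + h_width / 2
        cy = h_top + h_height / 2
        # Ignore arrowheads whose centre lies outside this arrow's box.
        if not (left <= cx <= left + width and top <= cy <= top + height):
            continue
        if cx < mid_x:
            has_left = True
        else:
            has_right = True
    if has_left and has_right:
        return "double"
    if has_left:
        return "left"
    if has_right:
        return "right"
    return "unknown"  # no arrowhead detected inside the arrow


arrow = (0.2, 0.8, 0.6, 0.1)
left_head = (0.2, 0.8, 0.1, 0.1)
right_head = (0.7, 0.8, 0.1, 0.1)
```

Under these assumptions, the arrow type follows from which half of the arrow box contains an arrowhead, so the models only need to detect the two simpler shapes reliably.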

AWS Rekognition and Azure Computer Vision have a similar level of performance, with Azure Computer Vision providing slightly better results, in the following criteria:

- C4: Recognition of (in)completeness of parking signs;
- C5.1: Correct recognition of the text. No extra text detected;
- C5.2: Correct recognition of the text. Correct order of the text.

Neither AWS Rekognition nor Azure Computer Vision achieved an F1 score of 1.000 for all criteria, which means they cannot currently be applied directly to parking sign analysis. However, further training of the models on a larger dataset might provide better results. Moreover, algorithmic fine-tuning may also help to deal with C2 and C5.

6. Limitations and Threats to Validity

There are several limitations and threats to the validity of our experiments. This section summarises them together with the respective mitigation strategies.

External threats to validity refer to the transferability of the obtained results. The first threat is the limited scope of the dataset used for our experiments, which comprised 1225 images of parking signs. To make the dataset representative, we aimed to cover the typical types of signs we observe in Melbourne. We used photos taken from different distances and angles, in different light conditions, and with different degrees of shadow and obstruction. However, our dataset certainly does not cover all possible variants of parking signs. It might be useful to expand it with images taken under a broader range of weather conditions, such as heavy fog or thunderstorms.

Both AWS and Azure models are cloud-hosted, which means they depend on a stable Internet connection. As our study is especially relevant for parking in large cities, we consider this assumption reasonable. In remote areas, where the connection might be unstable or unavailable, the parking signs are typically much simpler, containing only a single subsection. The benefit of using an automated PSR system would be limited in these conditions.

Internal threats to validity refer to potential or unexpected factors that might influence the outcome of the study. Our research method in Section 3 involves manual steps to calculate the performance statistics (precision, recall and F1 score). While these statistics are automatically calculated for the AWS models, we had to calculate them manually for the results produced by the Azure models. After the calculations were done by an author working on the corresponding set of experiments, the results were reviewed by another author to avoid typos or miscalculations.

Also, in AWS models, the “assumed threshold” for each label is automatically calculated by AWS Rekognition Custom Labels. This threshold refers to the confidence percentage for each predicted label. The assumed threshold cannot be set manually, as it is calculated based on the best F1 score achieved on the test dataset during model training. To obtain a comparable evaluation for Azure, we called the Azure model API using the same test images used for AWS and manually calculated the F1 score. When calling the model API, we manually set the confidence threshold to be the same as the AWS threshold for each label. However, it should be noted that these thresholds could potentially favour the AWS model, as they are calculated based on the best F1 score achieved for the AWS model during testing.
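The manual calculation described above can be sketched as follows. This is an illustration under simplifying assumptions, not the exact procedure used in the experiments: predictions are treated at image level (a label counts as predicted if any prediction for that image reaches the threshold), bounding-box matching is ignored, and the function `evaluate_label` and the sample data are hypothetical.

```python
from typing import List, Set, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def evaluate_label(
    label: str,
    threshold: float,
    predictions: List[List[Prediction]],
    ground_truth: List[Set[str]],
) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) for one label at a fixed threshold."""
    tp = fp = fn = 0
    for preds, truth in zip(predictions, ground_truth):
        # Predictions below the threshold are discarded.
        predicted = any(l == label and c >= threshold for l, c in preds)
        actual = label in truth
        if predicted and actual:
            tp += 1
        elif predicted:
            fp += 1
        elif actual:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical model output for four test images.
sample_predictions = [[("sign", 0.99)], [("sign", 0.90)], [("sign", 0.95)], []]
sample_truth = [{"sign"}, {"sign"}, set(), set()]
result = evaluate_label("sign", 0.94, sample_predictions, sample_truth)
```

In the sample data, the second image shows how a fixed high threshold turns a correct but less confident prediction into a false negative, which is the effect that can disadvantage a model whose confidence scores are calibrated differently.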

7. Conclusions

In this paper, we presented the key findings of a research project designed to address the research question: (RQ1) How can parking signs be analysed using AWS Rekognition and Azure Custom Vision?

Our study offers the following novel contributions: (1) This is the first comparison of the performance of AWS Rekognition and Azure Computer Vision models specifically for parking sign recognition. (2) This is the first AI-based approach to recognising parking signs typical for Melbourne, Australia, and extracting parking constraint information from them.

We utilised 1225 images of parking signs (taken under different weather and light conditions, among other variations) to evaluate the capabilities of AWS Rekognition and Azure Custom Vision models based on the following criteria:

- C1: Recognition of parking sign elements;
- C2: Recognition of arrows;
- C3: Recognition of sub-signs;
- C4: Recognition of (in)completeness of parking signs;
- C5: Correct recognition of the text presented on the sign.

AWS Rekognition demonstrated better results within the C1 and C3 criteria (F1 scores of 0.991 and 1.000 by AWS vs. 0.848 and 0.523 by Azure, respectively), as well as in one sub-criterion of C5 (C5.3, “No text missed”, 0.94 by the AWS model vs. 0.73 by the Azure model). While Azure Computer Vision performed better in C2 (F1 score of only 0.444 by the AWS model vs. 0.941 by the Azure model), this advantage could be offset by utilising an algorithmic approach to achieve an F1 score of 1.000. For the other criteria, both approaches demonstrated a similar level of performance: for C4, F1 scores were 0.933 by the AWS model and 0.976 by the Azure model. For C5.1 and C5.2, we obtained 0.65 and 0.69 by the AWS model and 0.711 and 0.71 by the Azure model, respectively. While neither of them provided F1 score values of 1.000 for all criteria, this study demonstrated that the current capacity of both AWS Rekognition and Azure Custom Vision is close to being ready for live application to parking sign analysis. We consider the results demonstrated by the AWS Rekognition models more promising for further refinement towards 100% accuracy in the automated analysis of parking signs.

Future Work: As a promising direction for future work, we consider further training of the models on a larger dataset to obtain improved results. Additionally, fine-tuning the algorithms may help address the challenges associated with criteria C2 and C5. It would also make sense to analyse whether there is a correlation between the type of parking sign and the performance of the AI models, and to adjust the training datasets accordingly.

Another promising research direction would be to investigate the capacity of deep learning approaches that can be used in offline settings. Automated PSR systems would provide the most benefit in large cities, where parking signs are complex, but the Internet connection allows utilising AWS and Azure services. Nevertheless, an offline solution might provide additional benefits towards fully automated driving.

Author Contributions

Conceptualization, M.S.; methodology, M.S.; AWS/Azure experiments, A.S., C.A., F.L., T.H.T., P.P. and M.S.; validation and formal analysis, A.S., C.A., F.L., T.H.T., P.P. and M.S.; investigation, A.S., C.A., F.L., T.H.T., P.P. and M.S.; resources, M.S.; data curation, A.S., C.A., F.L., T.H.T., P.P. and M.S.; writing—original draft preparation, A.S., C.A., F.L., T.H.T., P.P. and M.S.; writing—review and editing, M.S.; visualization, A.S. and M.S.; supervision, M.S.; project administration, M.S.; funding acquisition, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Shine Solutions Group Pty Ltd. (grant number PRJ00002626).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to thank Shine Solutions Group Pty Ltd. for sponsoring this project, and especially Branislav Minic and Adrian Zielonka for sharing their industry-based expertise and advice.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| AI | Artificial Intelligence |
| AWS | Amazon Web Services |
| DL | Deep Learning |
| ML | Machine Learning |
| PSR | Parking Sign Recognition |
| SLR | Systematic Literature Review |
| TSR | Traffic Sign Recognition |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).